Abstract

The need to develop more effective feedback has become a growing concern in training. Feedback should provide meaningful information that helps learners improve their performance. At the same time, it should not increase learners’ mental workload, even as it maximizes the benefits learners gain from it during training. Recently, Kim [1] developed the metacognitive monitoring feedback method and tested it in a computer-based training environment. The results showed that metacognitive monitoring feedback significantly improved participants’ performance over a two-day training session. However, that study did not investigate the impact of metacognitive monitoring feedback on participants’ mental workload and situational awareness. In this study, we addressed this gap and found a negative relationship between situational awareness and workload when trainees observed the metacognitive monitoring feedback.


1 Introduction

Developing advanced training methods that consider not only situational awareness but also mental workload is a growing concern in computer-based training environments. According to Norman [2], learners’ situational awareness can be improved when trainees observe feedback that contains valuable information related to the task they are learning during a training session. Many studies have sought to develop training methods that improve trainees’ situational awareness without increasing their workload. Among them, the concept of metacognitive monitoring feedback was recently developed by Kim [1]. This feedback produced a significant performance improvement on a visual identification task in a computer-based training environment [1]. It was delivered as post-test feedback and highlighted the central role of trainees’ learning in a human-in-the-loop simulation. However, the previous study did not test how metacognitive monitoring feedback affects trainees’ mental workload. The current study therefore investigated the effect of metacognitive monitoring feedback on mental workload. It is also important to understand how metacognitive monitoring feedback influences the relationship between mental workload and situational awareness, so we examined this relationship as well. Endsley’s situation awareness model [3] served as the basis for measuring trainees’ situational awareness, and retrospective confidence judgments (RCJ) and the NASA Task Load Index (TLX) were used to assess confidence levels and mental workload, respectively.

SA level-based metacognitive monitoring feedback was designed for the experimental group. These participants were shown the feedback screens after they had answered all situation awareness (SA) probes. The feedback showed each participant the percentage of correct responses at each SA level, together with their retrospective confidence rating for each level, reported separately. Participants assigned to the control group did not receive metacognitive monitoring feedback after answering all SA questions.

The primary research questions were as follows: Does SA level-based metacognitive monitoring feedback influence learner workload? If so, is there any correlation between situational awareness and workload? The following hypotheses were tested in the human-in-the-loop simulation environment.

- Hypothesis #1: The metacognitive monitoring feedback significantly influences learner workload.
- Hypothesis #2: The metacognitive monitoring feedback significantly influences the correlation between situational awareness and workload.

2 Method

2.1 Computer-Based Training Environment

To test both hypotheses, a time windows-based human-in-the-loop simulation was used as the training tool. In this simulation framework, every event generated by the simulator was based on the concept of time windows developed by Thiruvengada and Rothrock [4]. During the training, participants learned how to defend their battleship against hostile aircraft. Their main task was to identify unknown aircraft and take appropriate actions. To defend the ship, they had to learn the Rules of Engagement (RoE), and to identify unknown aircraft, they needed to understand the meaning of the cues related to aircraft identification. Figure 1 shows the simulation interface, and Table 1 details the Rules of Engagement.
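The time-window concept can be illustrated with a minimal Python sketch. It assumes a simplified reading of Thiruvengada and Rothrock [4] in which each time window is an interval during which a specific action on a given track is expected, and a window counts as met when a matching action falls inside that interval. The class and field names (`TimeWindow`, `Action`, `expected_action`) and the action labels are hypothetical and are not taken from the simulator used in this study.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TimeWindow:
    """Hypothetical time window: an interval in which one action on one track is expected."""
    track: str            # track number, e.g. "TN6026"
    expected_action: str  # hypothetical action label, e.g. "identify"
    start: float          # window opening time, seconds from scenario start
    end: float            # window closing time, seconds from scenario start


@dataclass
class Action:
    """A trainee action logged by the (hypothetical) simulator."""
    track: str
    action: str
    time: float


def score_windows(windows: List[TimeWindow], actions: List[Action]) -> List[bool]:
    """Return one flag per window: True if a matching action occurred within [start, end]."""
    return [
        any(
            a.track == w.track
            and a.action == w.expected_action
            and w.start <= a.time <= w.end
            for a in actions
        )
        for w in windows
    ]


# Illustrative data only; not taken from the scenarios used in the study.
windows = [
    TimeWindow("TN6026", "identify", start=30.0, end=90.0),
    TimeWindow("TN6023", "engage", start=120.0, end=180.0),
]
actions = [
    Action("TN6026", "identify", time=45.0),  # inside its window
    Action("TN6023", "engage", time=200.0),   # too late for its window
]
hits = score_windows(windows, actions)
print(hits, f"{sum(hits)}/{len(hits)} windows met")
```

Representing each window as an explicit interval keeps the scoring rule (right track, right action, right time) in one place, which is the property a time windows-based simulator relies on when tying events to later SA questions.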

2.2 Procedure

The experiment consisted of two sessions: a practice session and a training session. Before the experiment, the participants were asked about their previous experience with radar monitoring tasks and video games. The participants then completed a 60-min practice session, during which they learned task-specific skills, such as how to apply the rules of engagement, how to identify unknown aircraft, and how to engage target aircraft. After that, they received an instructor’s feedback on their performance. They also completed several practice runs during the session; each practice scenario took 5 min to complete. Figure 2 shows the detailed procedure for the practice session.

The participants then underwent a training session. Figure 3 shows the detailed procedure of the training session.

Each participant performed multiple scenarios. Table 2 shows one of the simulation scenarios used in this study. This scenario contained twenty-four aircraft and forty-six time windows in total: four friendly aircraft, six known aircraft (track numbers, TN, beginning with 40**), and fourteen unknown aircraft (TN beginning with 60**). All events occurred in specific time sequences based on the time windows and were tied to the situation awareness questionnaires.

While the participants were performing one of the training scenarios, the simulation froze automatically at a random time between 10 and 15 min. After the freeze, the participants were shown the SA probe and RCJ probe screens.
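The freeze schedule can be sketched as follows, assuming the random freeze time is drawn uniformly between 10 and 15 min of simulation time; the text does not state the distribution, and the function names `schedule_freeze` and `should_freeze` are hypothetical.

```python
import random


def schedule_freeze(rng: random.Random, earliest_min: float = 10.0,
                    latest_min: float = 15.0) -> float:
    """Return a freeze time in seconds, drawn uniformly between earliest_min and latest_min minutes."""
    if earliest_min > latest_min:
        raise ValueError("earliest_min must not exceed latest_min")
    return rng.uniform(earliest_min, latest_min) * 60.0


def should_freeze(sim_clock_s: float, freeze_at_s: float) -> bool:
    """True once the simulation clock reaches the scheduled freeze time."""
    return sim_clock_s >= freeze_at_s


rng = random.Random(2018)  # fixed seed so a run is reproducible
freeze_at = schedule_freeze(rng)
print(f"freeze scheduled at {freeze_at:.1f} s ({freeze_at / 60:.2f} min)")
```

Drawing the time once per scenario, rather than freezing at a fixed moment, keeps participants from anticipating when the SA probes will appear.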

The following are examples of situation awareness questionnaires used in the experiment:

- Level 1 SA: Perception of the Elements in the Environment
  - Question: What was the location of TN6026?
    Choice: Within 50 NM, 40 NM, 30 NM, or 20 NM
- Level 2 SA: Comprehension of the Current Situation
  - Question: What was the primary identification of TN6023?
    Choice: Friendly or Hostile
- Level 3 SA: Projection of Future Status
  - Question: TN6027 is following “Flight Air Route” and moving to “Genialistan.”
    Choice: True or False

The following are the RCJ probes, one for each SA level:

- Level 1: “How well do you think you have detected the objects in your airspace?”
- Level 2: “How well do you think you are aware of the current overall situation of your airspace?”
- Level 3: “How well do you think you are aware of where the overall situation of your airspace is heading?”

After answering all questions, the participants in the experimental group received the SA level-based metacognitive monitoring feedback; the participants in the control group did not receive any feedback. Figure 4 shows an example of the SA level-based metacognitive monitoring feedback, which consists of three main components: (1) a screenshot of the frozen moment with the answers to the SA questions; (2) the participant’s SA responses, the correct SA answers, and the SA questions; and (3) visual graphs of both RCJ and SA performance.

The feedback screens showed each participant in the experimental group how they had answered all SA probes, alongside images of the radar monitor at the frozen moment, together with their results for each level of SA probes and their RCJ score for each level. The feedback screen was displayed for 1 min to every participant to minimize bias due to uneven exposure.
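Component (3) of the feedback, the visual comparison of RCJ and SA performance by level, can be approximated in text form. The sketch below is a hypothetical rendering, not the interface shown in Figure 4; it assumes SA performance per level is a percentage correct and RCJ is on the 1 to 100 scale described in Sect. 2.3, and the names `bar` and `render_feedback` are illustrative.

```python
from typing import Dict


def bar(value: float, width: int) -> str:
    """Scale a 0-100 value to a bar of '#' characters, padded to a fixed width."""
    filled = round(max(0.0, min(100.0, value)) / 100.0 * width)
    return "#" * filled + " " * (width - filled)


def render_feedback(sa_pct: Dict[int, float], rcj: Dict[int, float], width: int = 20) -> str:
    """Render per-level SA accuracy (%) and RCJ (1-100) as paired text bars."""
    if sa_pct.keys() != rcj.keys():
        raise ValueError("SA and RCJ must cover the same SA levels")
    rows = []
    for level in sorted(sa_pct):
        rows.append(f"Level {level} SA  {sa_pct[level]:5.1f} |{bar(sa_pct[level], width)}|")
        rows.append(f"        RCJ {rcj[level]:5.1f} |{bar(rcj[level], width)}|")
    return "\n".join(rows)


# Hypothetical scores for one participant (not study data).
print(render_feedback({1: 75.0, 2: 50.0, 3: 100.0}, {1: 80.0, 2: 65.0, 3: 40.0}))
```

Placing the confidence bar directly under the accuracy bar for the same level is what lets a trainee see, level by level, whether their confidence matched their actual SA performance.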

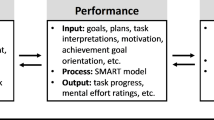

2.3 Performance Metrics

Retrospective Confidence Judgments (RCJ).

Retrospective confidence judgments (RCJ) are a metacognitive monitoring metric commonly used in research on metacognition [6]. An RCJ is a self-rating of a participant’s confidence in a response, made before the participant knows whether that response is correct. RCJ offers a way to understand the metacognitive monitoring processes associated with metamemory retrieval [7]. We collected RCJ scores (scale: 1 to 100) after the participants answered the SA probes during the testing sessions.

Situational Awareness Global Assessment Technique (SAGAT).

SAGAT is one of the best-known measures of situational awareness [8]. It is designed for computer-based simulations of dynamic systems (e.g., a driving simulator, flight simulator, or process monitoring task). In this study, SAGAT was used to measure participants’ situational awareness under the given conditions. SA probes for each SA level were presented to the participants after the simulation clock had passed 10 min from the beginning of the scenario. The accuracy of each participant’s situational awareness (SA accuracy) was calculated as the percentage of SA probes answered correctly:

$$\text{SA Accuracy} = \frac{\text{number of correct SA responses}}{\text{total number of SA probes}} \times 100$$
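Assuming SA accuracy is the percentage of SA probes answered correctly, computed overall and for each SA level (as the level-based feedback requires), the calculation can be sketched as follows. The tuple layout and the example answers are hypothetical; the example probes only mirror the question formats listed in Sect. 2.2.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (SA level, participant's answer, correct answer); this layout is a hypothetical assumption.
Response = Tuple[int, str, str]


def sa_accuracy(responses: List[Response]) -> Tuple[float, Dict[int, float]]:
    """Return overall and per-level SA accuracy as percentages of probes answered correctly."""
    if not responses:
        raise ValueError("no SA responses to score")
    correct: Dict[int, int] = defaultdict(int)
    total: Dict[int, int] = defaultdict(int)
    for level, answer, key in responses:
        total[level] += 1
        correct[level] += int(answer == key)
    overall = 100.0 * sum(correct.values()) / len(responses)
    per_level = {lvl: 100.0 * correct[lvl] / total[lvl] for lvl in sorted(total)}
    return overall, per_level


# Hypothetical answers in the style of the Level 1-3 probes (not study data).
responses = [
    (1, "30 NM", "30 NM"),
    (1, "40 NM", "20 NM"),
    (2, "Hostile", "Hostile"),
    (3, "True", "False"),
]
overall, per_level = sa_accuracy(responses)
print(f"overall {overall:.1f}%", per_level)
```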

NASA-Task Load Index (TLX).

NASA-TLX is one of the best-known subjective workload measurement techniques. It consists of six subscales: mental demand, physical demand, temporal demand, performance, effort, and frustration. This multidimensional subjective workload rating technique is commonly used to assess operators’ workload in aviation tasks [9] and flight simulators [10]. A NASA-TLX score close to 100 represents a high workload, and a score close to 0 indicates that the operator experienced a low workload while performing the task.
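The text does not state whether the raw (unweighted) or the weighted form of NASA-TLX was used, so the sketch below shows both under the standard scoring conventions: each subscale is rated from 0 to 100, the raw score is the mean of the six ratings, and the weighted score uses tallies from the 15 pairwise comparisons of subscales, which sum to 15. The dictionary keys and function names are illustrative assumptions.

```python
from typing import Dict

SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def _check(ratings: Dict[str, float]) -> None:
    """Require all six subscales, each rated on a 0-100 scale."""
    missing = set(SUBSCALES) - set(ratings)
    if missing:
        raise ValueError(f"missing subscales: {sorted(missing)}")
    if any(not 0 <= ratings[s] <= 100 for s in SUBSCALES):
        raise ValueError("ratings must lie in [0, 100]")


def raw_tlx(ratings: Dict[str, float]) -> float:
    """Raw TLX: unweighted mean of the six subscale ratings."""
    _check(ratings)
    return sum(ratings[s] for s in SUBSCALES) / len(SUBSCALES)


def weighted_tlx(ratings: Dict[str, float], tallies: Dict[str, int]) -> float:
    """Weighted TLX: each rating times its pairwise-comparison tally, divided by 15."""
    _check(ratings)
    per_scale_ok = all(0 <= tallies[s] <= 5 for s in SUBSCALES)
    if not per_scale_ok or sum(tallies[s] for s in SUBSCALES) != 15:
        raise ValueError("tallies must be 0-5 per subscale and sum to 15")
    return sum(ratings[s] * tallies[s] for s in SUBSCALES) / 15.0


# Hypothetical ratings and tallies for one participant (not study data).
ratings = {"mental": 70, "physical": 10, "temporal": 60,
           "performance": 40, "effort": 65, "frustration": 35}
tallies = {"mental": 5, "physical": 0, "temporal": 4,
           "performance": 2, "effort": 3, "frustration": 1}
print(f"raw {raw_tlx(ratings):.1f}, weighted {weighted_tlx(ratings, tallies):.1f}")
```

Both forms stay on the 0 to 100 range, so either supports the interpretation given above (near 100 means high workload, near 0 means low workload).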

3 Results

3.1 Analysis of Variance

We compared participants’ RCJ scores, SA accuracy, and NASA-TLX scores between the groups. There was no significant difference in RCJ between the groups (F(2,90) = 1.05, p = 0.357), nor in NASA-TLX (F(2,90) = 0.16, p = 0.849). However, SA accuracy differed significantly between the groups (F(2,90) = 7.95, p < 0.001): the experimental group’s SA accuracy was significantly higher than that of the control group.
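The reported F statistics have 2 numerator degrees of freedom, and for that case the upper-tail probability of the F distribution has the closed form p = (1 + 2F/df2)^(-df2/2). The sketch below computes a one-way between-subjects ANOVA F statistic from raw scores and uses the closed form to recompute approximate p-values from the F(2,90) values reported in this section. The function names are illustrative, and the closed form does not apply when the numerator degrees of freedom differ from 2.

```python
from typing import Sequence, Tuple


def one_way_anova(groups: Sequence[Sequence[float]]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way between-subjects ANOVA."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    if k < 2 or n <= k:
        raise ValueError("need at least two groups and more observations than groups")
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


def f_pvalue_df1_2(f: float, df2: int) -> float:
    """Upper-tail p-value P(F > f) for F(2, df2); the closed form holds only for df1 = 2."""
    return (1.0 + 2.0 * f / df2) ** (-df2 / 2.0)


# Recompute p from the reported F(2, 90) values.
for label, f_value in [("RCJ", 1.05), ("NASA-TLX", 0.16), ("SA accuracy", 7.95)]:
    print(f"{label}: F(2, 90) = {f_value:.2f}, p ~ {f_pvalue_df1_2(f_value, 90):.4f}")
```

Because the reported F values are rounded to two decimals, the recomputed p-values agree with the reported ones only approximately.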

3.2 Correlation Matrix

Table 3 shows the correlations among RCJ, SA accuracy, and NASA-TLX for both groups. The experimental group showed significant pairwise correlations among RCJ, SA accuracy, and NASA-TLX, whereas the control group showed a significant correlation only between SA accuracy and RCJ; in the control group, neither RCJ nor SA accuracy was correlated with NASA-TLX.
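The text does not name the correlation coefficient used for Table 3; the sketch below assumes Pearson’s r. It computes pairwise r for RCJ, SA accuracy, and NASA-TLX within one group, together with the t statistic t = r * sqrt((n - 2) / (1 - r^2)) used to test r against zero with n - 2 degrees of freedom. The function names and data are hypothetical.

```python
import math
from itertools import combinations
from typing import Dict, Sequence, Tuple


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two equal-length samples."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two samples of equal length n >= 3")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r: float, n: int) -> float:
    """t for testing r = 0 with n - 2 degrees of freedom; infinite when |r| = 1."""
    if abs(r) >= 1.0:
        return math.copysign(math.inf, r)
    return r * math.sqrt((n - 2) / (1.0 - r * r))


def correlation_table(data: Dict[str, Sequence[float]]) -> Dict[Tuple[str, str], Tuple[float, float]]:
    """Map each pair of measures to (r, t) within one group."""
    n = len(next(iter(data.values())))
    table = {}
    for a, b in combinations(data, 2):
        r = pearson_r(data[a], data[b])
        table[(a, b)] = (r, t_statistic(r, n))
    return table


# Hypothetical scores for five participants in one group (not study data).
group = {
    "RCJ": [55, 62, 70, 78, 85],
    "SA": [40, 55, 50, 70, 75],
    "TLX": [72, 60, 65, 48, 45],
}
for (a, b), (r, t) in correlation_table(group).items():
    print(f"{a} vs {b}: r = {r:+.3f}, t({len(group[a]) - 2}) = {t:+.2f}")
```

Running the table separately for each group mirrors the structure of Table 3, where the same three pairs are compared between the experimental and control groups.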

4 Discussion

The present study examined the effects of SA level-based metacognitive monitoring feedback on situational awareness and mental workload in a computer-based training environment. SA accuracy, mental workload, and subjective ratings of retrospective confidence were collected through the human-in-the-loop simulation.

- Hypothesis #1: The metacognitive monitoring feedback significantly influences learner workload.

No. There was no evidence to support the hypothesis that SA level-based metacognitive monitoring feedback significantly affects trainees’ mental workload: the NASA-TLX scores did not differ significantly between the groups. This suggests that the metacognitive monitoring feedback did not increase the learners’ workload during training. Further analysis of the NASA-TLX data between groups is needed to understand this result.

- Hypothesis #2: The SA-based metacognitive monitoring feedback significantly influences the correlation between situational awareness and workload.

Yes. We found a negative correlation between SA accuracy and NASA-TLX in the experimental group: participants with better situational awareness reported lower mental workload during training, while those with poorer situational awareness reported higher mental workload. This pattern might be explained by the metacognitive framework developed by Nelson and Narens [11]. According to this framework, human cognition has two levels: (1) the meta-level and (2) the object-level. Here, the object-level is defined as cognitive activity arising from the human senses (e.g., vision, hearing, taste, smell, or touch), and the meta-level is defined as a mental model of a particular task, related to meta-knowledge derived from the object-level. Many studies in metacognition have shown that students learn new concepts and skills through the interplay of these two levels, and that communication between them is one of the critical factors in stimulating students’ learning. During the experiment, the SA-based metacognitive monitoring feedback provided up-to-date meta-knowledge of the radar monitoring task and helped the trainees update their mental models for identifying unknown aircraft. In other words, the feedback let participants readily observe modifications of their meta-knowledge at the object-level. In contrast, the control group did not receive the metacognitive monitoring feedback and therefore could not update their mental models of the identification task as efficiently as the experimental group.

In this study, we investigated the impact of metacognitive monitoring feedback on mental workload and situational awareness in a computer-based training environment. These initial findings provide a better understanding of the metacognitive monitoring process and its relation to workload in computer-based training environments.

The present study has several limitations. First, the experiment was not designed to examine the underlying workload mechanism between the object-level and the meta-level. Future research should therefore investigate learners’ cognitive-affective states together with their workload levels using biosensors (e.g., electroencephalography, eye tracking, and electrocardiography). Second, the findings of this study are limited to visual identification tasks, so the effect of metacognitive monitoring feedback should be investigated in other domains.

References

1. Kim, J.H.: The effect of metacognitive monitoring feedback on performance in a computer-based training simulation. Appl. Ergon. 67, 193–202 (2018)

2. Norman, D.A.: The ‘problem’ with automation: inappropriate feedback and interaction, not ‘over-automation’. Philos. Trans. R. Soc. Lond. Ser. B Biol. Sci. 327(1241), 585–593 (1990)

3. Endsley, M.R.: Toward a theory of situation awareness in dynamic systems. Hum. Factors: J. Hum. Factors Ergon. Soc. 37(1), 32–64 (1995)

4. Thiruvengada, H., Rothrock, L.: Time windows-based team performance measures: a framework to measure team performance in dynamic environments. Cogn. Technol. Work 9(2), 99–108 (2007)

5. Kim, J.H.: Developing a Metacognitive Training Framework in Complex Dynamic Systems Using a Self-regulated Fuzzy Index (2013)

6. Dunlosky, J., Metcalfe, J.: Metacognition. Sage Publications, Thousand Oaks (2008)

7. Dougherty, M.R., et al.: Using the past to predict the future. Mem. Cogn. 33(6), 1096–1115 (2005)

8. Endsley, M.R.: Situation Awareness Global Assessment Technique (SAGAT). IEEE (1988)

9. Nygren, T.E.: Psychometric properties of subjective workload measurement techniques: implications for their use in the assessment of perceived mental workload. Hum. Factors: J. Hum. Factors Ergon. Soc. 33(1), 17–33 (1991)

10. Hancock, P., Williams, G., Manning, C.: Influence of task demand characteristics on workload and performance. Int. J. Aviat. Psychol. 5(1), 63–86 (1995)

11. Nelson, T.O., Narens, L.: Metamemory: a theoretical framework and new findings. Psychol. Learn. Motiv. 26, 125–141 (1990)


Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Kim, J.H. (2018). The Impact of Metacognitive Monitoring Feedback on Mental Workload and Situational Awareness. In: Harris, D. (ed.) Engineering Psychology and Cognitive Ergonomics. EPCE 2018. Lecture Notes in Computer Science, vol. 10906. Springer, Cham. https://doi.org/10.1007/978-3-319-91122-9_3


DOI: https://doi.org/10.1007/978-3-319-91122-9_3


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-91121-2

Online ISBN: 978-3-319-91122-9

eBook Packages: Computer Science, Computer Science (R0)