Abstract

Because some existing algorithms lack robustness and perform unsatisfactorily in terms of color natural index, color colorfulness index and brightness, a novel bionic algorithm is proposed in this paper. In the proposed algorithm, human visual contrast sensitivity is introduced to set the threshold for calculating the external lightness, and a potential function in Markov random fields is built to calculate the external lightness. Color constancy is quantified as adjusting pixel values along the direction perpendicular to the gray line, so that the scene reflectance can be restored while changing only the color saturation. A function for adjusting the image background brightness is proposed by simulating the photosensitive adaptability of human vision, and the enhancement result, suited to human photopic vision, is then restored under the constraint of the adjusted background brightness. Experimental results show that the proposed algorithm achieves a better color natural index and color colorfulness index than some existing algorithms, and that the brightness of its enhancement results is better suited to human photopic vision.

1 Introduction

The human visual system is remarkably good at perceiving and processing a scene's color, brightness, size, detail and other information; therefore, bionic (bio-inspired) image processing methods have been actively explored in this field. Several notable bio-inspired image enhancement methods have been proposed in recent years. Academician Wang of CAS proposed a bio-inspired algorithm that enhances color images captured under low or non-uniform lighting by modeling the global and local adaptation of the human visual system [1]. Lu of the Beijing Institute of Technology proposed a method based on retinal brightness adaptation and the lateral-inhibition competition mechanism of ganglion cells [2]. Liu of CAS also proposed an image enhancement method that simulates the human visual system: based on the adjacency relations of image regions, a gray consolidation strategy represents the image with as few gray levels as possible, and a gray mapping derived from the Just Noticeable Difference (JND) curve then maximizes perception by the human eye [3].

In this paper, we propose a novel self-adaptive defog method based on bionics. In the proposed algorithm, the Retinex model is adopted to describe a haze-degraded image [4]. White balance is first performed on the degraded image; the human visual brightness adaptation mechanism is then introduced to estimate the background brightness; next, under the constraint of color constancy, image contrast is enhanced based on human visual contrast sensitivity; finally, so that the enhancement result better suits human vision, the brightness of the restored image is adjusted under the guidance of the brightness of the original image.

2 Image Enhancement Based on HVS

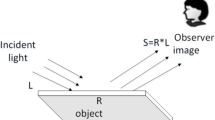

The Retinex model is adopted here to describe a degraded image; its expression is

$$ I = T \cdot L , $$(1)

where I is the observed image, \( T \) is the reflectance image, and L is the external illumination. Under hazy conditions, unlike under unbalanced illumination, L is not spatially smooth over the whole image, because it is strongly affected by scene depth; L is therefore only locally smooth. To obtain the Retinex solution \( T \) and make the enhancement result visually pleasing, the following steps are proposed. First, white balance is applied to I, and the background brightness of the white-balanced I is calculated. Second, the external illumination L is calculated and removed from the white-balanced I under the constraint of color constancy, which improves the image contrast and restores the scene's true color. Finally, the brightness of the reflectance image is adjusted to a dynamic range suited to the human visual system. The detailed process is shown in Fig. 1.

2.1 White Balance and the Calculation of Background Brightness

White balance is used to correct the color of the external illumination and to normalize the original image. The key step of white balance is to acquire the color of the external illumination. White balance can be described by Eq. (2).

In Eq. (2), \( L_{\infty} = (L_{\infty,r}, L_{\infty,g}, L_{\infty,b}) \) denotes the color of the external illumination. Since the external illumination is generally estimated from the sky region of an image, its color can be calculated under the following constraints:

- No. 1: the sky region lies at the top of the image, i.e., \( i \le 2\% H \), where H is the total number of rows of I, \( i \) is the row index of a pixel, and the origin is at the top-left corner.

- No. 2: the sky region is brighter than almost all other pixels, i.e., \( L_{\infty} \ge 99\% \max(I) \).
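The two constraints translate directly into a small estimator. The Python sketch below assumes an RGB image stored as floats in [0, 1], takes a pixel's brightness to be its HSV value max(r, g, b), and averages the pixels that satisfy both constraints to obtain \( L_{\infty} \). Eq. (2) is not reproduced in the text, so `white_balance` assumes the common per-channel normalization \( I^{K}_{c} = I_{c} / L_{\infty,c} \); both function names are hypothetical.

```python
import numpy as np

def estimate_sky_illumination(img, top_frac=0.02, bright_frac=0.99):
    """Estimate L_inf from the sky region (constraints No. 1 and No. 2).

    img: float array of shape (H, W, 3) with values in [0, 1].
    Assumptions: pixel brightness is the HSV value max(r, g, b), and
    L_inf is the mean color of the pixels meeting both constraints.
    """
    h = img.shape[0]
    rows = int(top_frac * h) + 1                     # No. 1: row index i <= 2% H (0-based)
    top = img[:rows].reshape(-1, 3)
    brightness = top.max(axis=1)
    mask = brightness >= bright_frac * img.max()     # No. 2: brightness >= 99% max(I)
    if not mask.any():                               # fall back to the brightest top pixel(s)
        mask = brightness == brightness.max()
    return top[mask].mean(axis=0)

def white_balance(img, l_inf, eps=1e-6):
    """Assumed form of Eq. (2): divide each channel by the illuminant color."""
    return np.clip(img / np.maximum(l_inf, eps), 0.0, 1.0)
```

With this normalization the sky pixels map to (1, 1, 1), so the illuminant color is removed while the darker scene pixels are scaled channel by channel.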

Studies of the brightness adaptation mechanism of human vision show that the perceived brightness of a central pixel is always influenced by the surrounding pixels [5]; therefore, the background brightness can be calculated by Eq. (3),

where \( N_{0}(x) \) is the local patch centered at x, excluding x itself (the patch size is \( 5 \times 5 \) in this paper); \( \varphi(y) \) is a Gaussian weighting coefficient; and \( I_{V}^{K}(y) \) is the value (lightness) component of the white-balanced image in HSV space.
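A minimal sketch of the background-brightness computation follows. It assumes Gaussian weights with σ = 1 that are normalized to sum to 1 over \( N_{0}(x) \) (the text gives neither σ nor the normalization) and replicates edge pixels at the border. The input is the HSV value channel of the white-balanced image, which for an RGB array is `img.max(axis=2)`; the function name is hypothetical.

```python
import numpy as np

def background_brightness(v, size=5, sigma=1.0):
    """Sketch of Eq. (3): Gaussian-weighted mean over N0(x), the 5x5 patch minus x.

    v: 2-D array, the HSV value channel of the white-balanced image.
    Assumptions: sigma = 1.0, weights normalized to sum to 1, edge-replicated borders.
    """
    r = size // 2
    ax = np.arange(-r, r + 1)
    yy, xx = np.meshgrid(ax, ax, indexing="ij")
    phi = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    phi[r, r] = 0.0                      # exclude the center pixel x itself
    phi /= phi.sum()
    padded = np.pad(v, r, mode="edge")
    h, w = v.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(size):               # accumulate each shifted copy of the image
        for dx in range(size):
            out += phi[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out
```

Excluding the center keeps the estimate a true "surround" value, so a pixel that differs strongly from its neighbors does not pull its own background toward itself.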

2.2 Estimate the External Illumination

Transforming \( I^{K} \) to the logarithmic domain, we get

$$ I^{w} = \ln I^{K} = \tau + l , $$(4)

where \( \tau = \ln T \) and \( l = \ln L \).

Since \( I^{K} \le L \), we have \( I^{w} \le l \); and since \( 0 \le I^{K}, L \le 1 \), both \( I^{w} \le 0 \) and \( l \le 0 \), so \( |I^{w}| \ge |l| \). Let \( {}^{\sim}I^{w} \), \( {}^{\sim}\tau \) and \( {}^{\sim}l \) denote the absolute values of \( I^{w} \), \( \tau \) and \( l \), respectively. Under hazy conditions, the external illumination directly degrades image contrast and color saturation. Based on the contrast sensitivity mechanism of human vision, we designed the following steps to calculate the external illumination:

When the pixels in window \( \Omega \) centered at pixel x satisfy \( \frac{|{}^{\sim}I_{x}^{w} - {}^{\sim}l_{o}|}{{}^{\sim}l_{o}} \ge N_{\Omega} \), the external illumination at pixel x is set to 0, i.e., \( {}^{\sim}l = 0 \), where \( {}^{\sim}l_{o} \) is the average background brightness of the window and \( N_{\Omega} \) is the corresponding just noticeable difference.

When the pixels in window \( \Omega \) satisfy \( \frac{|{}^{\sim}I_{x}^{w} - {}^{\sim}l_{o}|}{{}^{\sim}l_{o}} < N_{\Omega} \), the external illumination at pixel x is calculated by Eq. (5),

In the functional \( F({}^{\sim}l) \), the data term \( |\nabla ({}^{\sim}I_{v}^{w} - {}^{\sim}l)| \) drives the reflectance image toward maximal contrast, where \( {}^{\sim}I_{v}^{w} \) is the lightness component of image \( I^{w} \) in HSV space. The second (penalty) term \( (1 - |{}^{\sim}l_{x} - {}^{\sim}l_{y}|) \) is the smoothness term, which imposes spatial smoothness on the external illumination; \( \eta \) is a non-negative parameter that controls the strength of the smoothness term.

The above function is a potential function of Markov random fields (MRFs). The framework is implemented in the following steps:

- ① Compute the data term \( |\nabla ({}^{\sim}I_{v}^{w} - {}^{\sim}l)| \) from \( {}^{\sim}I_{v}^{w} \);

- ② Compute the smoothness term \( (1 - |{}^{\sim}l_{x} - {}^{\sim}l_{y}|) \);

- ③ Perform inference to obtain the external illumination \( {}^{\sim}l \).
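Eq. (5) itself is not reproduced in the text, so the following Python sketch only evaluates an assumed form of \( F({}^{\sim}l) \) on a given label field: the data term is taken as the sum of anisotropic forward-difference magnitudes of \( {}^{\sim}I_{v}^{w} - {}^{\sim}l \), and the smoothness term is summed over 4-connected neighbor pairs and weighted by \( \eta \). Because the data term is described as forcing maximal contrast and \( (1 - |{}^{\sim}l_{x} - {}^{\sim}l_{y}|) \) is largest when neighbors agree, we read F as an objective to be maximized; that reading, and the name `energy`, are assumptions.

```python
import numpy as np

def energy(i_v_abs, l_abs, eta):
    """Evaluate an assumed form of F(~l) for a 2-D label field l_abs.

    i_v_abs: 2-D array of ~I_v^w; l_abs: 2-D array of ~l (same shape).
    Assumptions: |grad| is the anisotropic L1 forward difference, neighbors are
    4-connected, and larger values of F are better (F is maximized).
    """
    u = i_v_abs - l_abs                                   # ~I_v^w - ~l
    data = np.abs(np.diff(u, axis=0)).sum() + np.abs(np.diff(u, axis=1)).sum()
    smooth = (1.0 - np.abs(np.diff(l_abs, axis=0))).sum() + \
             (1.0 - np.abs(np.diff(l_abs, axis=1))).sum()
    return data + eta * smooth
```

Evaluating such a function before and after inference is a simple way to check that a solver actually improves the chosen objective.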

The data cost is calculated as in the following pseudocode:

- ① for \( {}^{\sim}l = 0 \) to \( \omega \cdot \min_{c \in \{r,g,b\}} ({}^{\sim}I_{c}^{w}) \): compute \( |\nabla ({}^{\sim}I_{v}^{w} - {}^{\sim}l)| \);

- ② return \( |\nabla ({}^{\sim}I_{v}^{w} - {}^{\sim}l)| \) for all pixels in window \( \Omega \); for each pixel, \( |\nabla ({}^{\sim}I_{v}^{w} - {}^{\sim}l)| \) is a vector with \( \omega \cdot \min_{c \in \{r,g,b\}} ({}^{\sim}I_{c}^{w}) \) dimensions.

where \( \omega \) is introduced to keep a very small amount of haze for distant objects so that the image looks natural; it is fixed to 0.95 here [6].

Once both the data cost and the smoothness cost are available, we have a complete graph in terms of Markov random fields. In step ③, inference in the MRF, whose number of labels equals \( \omega \cdot \min_{c \in \{r,g,b\}} ({}^{\sim}I_{c}^{w}) \), is performed with multi-label graph cuts [7] or belief propagation.
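For experimentation without a graph-cut library, the sketch below swaps in a simpler solver, iterated conditional modes (ICM), which is not the method named above. Assumptions, all labeled in the code: the label set at pixel x is `n_labels` evenly spaced values in \( [0, \omega \cdot \min_{c} {}^{\sim}I_{c}^{w}(x)] \); the local objective is the maximized form of F described in the text, with u = \( {}^{\sim}I_{v}^{w} - {}^{\sim}l \); and pixels that pass the JND test are supplied as a mask and kept at \( {}^{\sim}l = 0 \). The function name and parameters are hypothetical.

```python
import numpy as np

def icm_illumination(i_c_abs, eta=0.1, omega=0.95, n_labels=16, n_iter=5, fixed_zero=None):
    """Approximate inference of ~l by ICM (a stand-in for graph cuts / BP).

    i_c_abs:    (H, W, 3) array of ~I^w_c = -ln(I^K_c), c in {r, g, b}.
    fixed_zero: optional (H, W) bool mask of pixels where the JND test sets ~l = 0.
    """
    # V = max_c I_c and -ln is decreasing, so ~I_v^w = min_c ~I_c^w.
    i_v_abs = i_c_abs.min(axis=2)
    h, w = i_v_abs.shape
    upper = omega * i_v_abs                                   # label bound omega * min_c ~I_c^w(x)
    labels = upper[..., None] * np.linspace(0.0, 1.0, n_labels)  # (H, W, K) candidate labels
    if fixed_zero is None:
        fixed_zero = np.zeros((h, w), dtype=bool)
    l = np.zeros((h, w))
    for _ in range(n_iter):
        for y in range(h):
            for x in range(w):
                if fixed_zero[y, x]:
                    continue                                  # JND rule: keep ~l = 0 here
                cand = labels[y, x]
                score = np.zeros(n_labels)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w:
                        u_n = i_v_abs[ny, nx] - l[ny, nx]
                        score += np.abs((i_v_abs[y, x] - cand) - u_n)    # contrast (data) term
                        score += eta * (1.0 - np.abs(cand - l[ny, nx]))  # smoothness term
                l[y, x] = cand[np.argmax(score)]              # greedy local maximization
    return l
```

ICM only reaches a local optimum and depends on the visiting order, which is why multi-label graph cuts or belief propagation are preferable for real images; the sketch is mainly useful for checking the label bounds and the JND override.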

2.3 Calculate the Reflectance Image

In Fig. 2, OA is the gray line, on which the three channels of a pixel have equal intensity; it runs from the black point O(0,0,0) to the white point A(255,255,255). PH is the perpendicular from point P to OA, and the shorter |PH| is, the lower the color saturation of pixel P [8]. In an image, color constancy means that the order of the pixel values in the three channels is preserved while the pixel moves along the direction of HP. In addition, Eq. (6), the expression for color saturation, shows that small differences among the three channels lead to low color saturation. In conclusion, removing haze and recovering the scene reflectance under the constraint of color constancy amounts to moving pixel P along the direction of HP, driving the value of one channel toward 0, increasing the differences among the three channels, and thereby raising the color saturation.

where S in Eq. (6) represents the color saturation of the image. Suppose that the coordinates of pixels P and H are \( (P_{r}, P_{g}, P_{b}) \) and \( (h_{r}, h_{g}, h_{b}) \), respectively. Since \( {\text{PH}} \bot {\text{OA}} \) and every point on OA satisfies \( h_{r} = h_{g} = h_{b} \), we have \( h_{r} = h_{g} = h_{b} = \frac{P_{r} + P_{g} + P_{b}}{3} \), so \( \overrightarrow{\text{PH}} = \left( \frac{-2P_{r} + P_{g} + P_{b}}{3}, \frac{P_{r} - 2P_{g} + P_{b}}{3}, \frac{P_{r} + P_{g} - 2P_{b}}{3} \right) \) and \( \overrightarrow{\text{HP}} = -\overrightarrow{\text{PH}} \). After dehazing, pixel P moves to \( {\text{P}}^{\prime} \), whose coordinates are \( (P_{r} - q_{r} \cdot {}^{\sim}l, P_{g} - q_{g} \cdot {}^{\sim}l, P_{b} - q_{b} \cdot {}^{\sim}l) \), so \( \overrightarrow{\text{PP}^{\prime}} = -{}^{\sim}l \cdot (q_{r}, q_{g}, q_{b}) \), in which \( q_{r}, q_{g}, q_{b} \) are the step-length weights of the R, G and B channels, respectively. Because \( \overrightarrow{\text{PP}^{\prime}} \) and \( \overrightarrow{\text{PH}} \) are parallel to each other,

$$ \frac{q_{r}}{-2P_{r} + P_{g} + P_{b}} = \frac{q_{g}}{P_{r} - 2P_{g} + P_{b}} = \frac{q_{b}}{P_{r} + P_{g} - 2P_{b}} . $$(7)

In conclusion, the steps for recovering the scene reflectance are as follows:

- Step 1: set the step weight of the minimal channel of \( {}^{\sim}I^{w} \) to 1. For example, if the R channel is the minimal one, then \( q_{r} = 1 \), and the step weights of the other two channels are

$$ \begin{aligned} q_{g} &= \frac{P_{r} - 2P_{g} + P_{b}}{-2P_{r} + P_{g} + P_{b}}, \\ q_{b} &= \frac{P_{r} + P_{g} - 2P_{b}}{-2P_{r} + P_{g} + P_{b}}. \end{aligned} $$(8)

- Step 2: subtract the external illumination from the three channels with different weights,

$$ \begin{aligned} {}^{\sim}\tau_{r} &= {}^{\sim}I_{r}^{w} - {}^{\sim}l, \\ {}^{\sim}\tau_{g} &= {}^{\sim}I_{g}^{w} - q_{g} \cdot {}^{\sim}l, \\ {}^{\sim}\tau_{b} &= {}^{\sim}I_{b}^{w} - q_{b} \cdot {}^{\sim}l. \end{aligned} $$(9)

From the assumption of Step 1, \( {}^{\sim}I_{r}^{w} \le {}^{\sim}I_{g}^{w} \) and \( {}^{\sim}I_{r}^{w} \le {}^{\sim}I_{b}^{w} \), so the denominator \( -2{}^{\sim}I_{r}^{w} + {}^{\sim}I_{g}^{w} + {}^{\sim}I_{b}^{w} \) is non-negative and \( q_{g} - 1 = \frac{3({}^{\sim}I_{r}^{w} - {}^{\sim}I_{g}^{w})}{-2{}^{\sim}I_{r}^{w} + {}^{\sim}I_{g}^{w} + {}^{\sim}I_{b}^{w}} \le 0 \); hence \( q_{g} \le 1 \), and likewise \( q_{b} \le 1 \), while \( q_{r} = 1 \). Thus the channel with the minimum value takes the maximum step weight. Moreover, from \( q_{g} - q_{b} = \frac{-3({}^{\sim}I_{g}^{w} - {}^{\sim}I_{b}^{w})}{-2{}^{\sim}I_{r}^{w} + {}^{\sim}I_{g}^{w} + {}^{\sim}I_{b}^{w}} \) we know that if \( {}^{\sim}I_{g}^{w} \le {}^{\sim}I_{b}^{w} \) then \( q_{g} \ge q_{b} \), and if \( {}^{\sim}I_{g}^{w} \ge {}^{\sim}I_{b}^{w} \) then \( q_{g} \le q_{b} \); therefore the channel with the maximum value takes the minimum step weight. In conclusion, Eq. (9) ensures that the channel with the largest value subtracts the smallest amount of external illumination, while the channel with the smallest value subtracts the largest amount, so the relative order of the three color channels is unchanged and the color saturation is enhanced.

- Step 3: apply the exponential transform to \( \tau \) (where \( \tau = -{}^{\sim}\tau \)) to obtain the final dehazed scene reflectance,

$$ T = \text{e}^{\tau } . $$(10)
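Steps 1 to 3 vectorize naturally over the whole image. The NumPy sketch below generalizes Eq. (8) to whichever channel of \( {}^{\sim}I^{w} \) is minimal at each pixel (the text spells out the case of R) by dividing the components of \( 3\overrightarrow{\text{PH}} \) by that channel's component. For gray pixels all components are 0 and the weights are undefined (0/0); using q = (1, 1, 1) there is our assumption, not part of the text. The function name is hypothetical.

```python
import numpy as np

def recover_reflectance(i_w_abs, l_abs, eps=1e-12):
    """Steps 1-3: color-constancy-preserving removal of ~l, then T = exp(tau).

    i_w_abs: (H, W, 3) array of ~I^w = -ln(I^K); l_abs: (H, W) array of ~l.
    Assumption: gray pixels (all channels equal) use q = (1, 1, 1).
    """
    p = i_w_abs
    s = p.sum(axis=2, keepdims=True)
    perp = s - 3.0 * p                      # 3 * PH: (-2P_r+P_g+P_b, P_r-2P_g+P_b, P_r+P_g-2P_b)
    kmin = p.argmin(axis=2)[..., None]      # index of the minimal channel (Step 1: its q = 1)
    denom = np.take_along_axis(perp, kmin, axis=2)
    gray = denom <= eps                     # PH = 0: pixel lies on the gray line
    q = np.where(gray, 1.0, perp / np.where(gray, 1.0, denom))   # Eq. (8), generalized
    tau_abs = p - q * l_abs[..., None]      # Eq. (9)
    return np.exp(-tau_abs)                 # Eq. (10) with tau = -~tau
```

As a worked example, for \( {}^{\sim}I^{w} = (0.2, 0.5, 0.8) \) and \( {}^{\sim}l = 0.1 \), Eq. (8) gives \( q = (1, 0, -1) \), so \( {}^{\sim}\tau = (0.1, 0.5, 0.9) \): the channel order is preserved, the spread between channels grows from 0.6 to 0.8, and the displacement \( (-0.1, 0, 0.1) \) is parallel to \( \overrightarrow{\text{HP}} = (-0.3, 0, 0.3) \).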

2.4 Brightness Emendation

Regions with dense haze become dark after the external illumination is removed, so brightness compensation of the scene reflectance is necessary. According to the Just Noticeable Difference (JND) curve of human vision, the brightness at which human vision best perceives details is about 125 (on a 0 to 255 scale), so we shift the brightness of the reflectance image toward this value. The brightness correction function is given by Eq. (11),

where \( \mu \) is the average brightness after correction, set to \( 125/255 \) here, and k is a non-negative parameter that determines the dynamic range of brightness, set to 0.2 here. The curve of this function is shown in Fig. 3(b); it effectively raises the brightness of dark areas and restrains the brightness of bright areas.

The final enhancement result is obtained by Eq. (12),

where \( L_{T,\text{O}} \) is the background brightness of \( T \), calculated by Eq. (3).

3 Experimental Results

To demonstrate the effectiveness of our method, we chose several classical images from representative papers [6, 9] for testing and compared our results with two other well-known, widely cited algorithms: K. He's dark channel prior [6] and Tarel's fast visibility restoration [10]. Fig. 4 lists the key variables of the visibility enhancement process: the external illumination \( L \), the scene reflectance before brightness emendation \( T \), and the scene reflectance after brightness emendation \( T_{adjust} \). Figs. 5, 6 and 7 show comparative experimental results on further test images.

Comparing the original images with the enhancement results in Fig. 5 confirms that the proposed algorithm effectively improves fog-degraded images and recovers the true colors of the scenes. Figs. 6 and 7 show that the results of the proposed approach have higher contrast and more vivid colors. Although the saturation is somewhat excessive, the image luminance is well balanced and more image detail is revealed. At the same time, objects far from the camera become visible in our results, whereas K. He's results appear dim even though that algorithm reduces the fog effectively, and Tarel's results still contain residual fog.

To evaluate the results in Figs. 6 and 7 objectively, we adopt the color natural index (CNI) [11], the color colorfulness index (CCI) [11] and the gradient-based definition [4, 9]. The gradient-based definition is given by Eq. (18). Table 1 shows these objective evaluation results.

The CNI can be calculated by:

where \( S_{av\_skin} \), \( S_{av\_grass} \) and \( S_{av\_sky} \) represent the mean saturation of skin, grass and sky pixels, respectively, and \( n_{skin} \), \( n_{grass} \) and \( n_{sky} \) are the corresponding numbers of pixels.

The definition of CCI is:

where \( S_{av} \) is the mean image saturation and \( \sigma \) is its standard deviation.
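For reference, a sketch of CCI that assumes the two statistics are combined additively as \( CCI = S_{av} + \sigma \) and that saturation is the HSV saturation (max - min)/max; the text displays neither the formula nor the saturation definition, so both are assumptions, as is the function name.

```python
import numpy as np

def color_colorfulness_index(rgb):
    """CCI sketch, assuming CCI = S_av + sigma with HSV saturation.

    rgb: float array (H, W, 3) in [0, 1]; black pixels get saturation 0.
    """
    mx = rgb.max(axis=2)
    mn = rgb.min(axis=2)
    safe_mx = np.where(mx > 0, mx, 1.0)          # avoid division by zero for black pixels
    sat = np.where(mx > 0, (mx - mn) / safe_mx, 0.0)
    return sat.mean() + sat.std()                # S_av + sigma
```

A uniformly gray image scores 0, and the index grows both with the average saturation and with how much the saturation varies across the image.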

The image definition is computed by Eq. (18), in which M and N are the total numbers of rows and columns, respectively, and \( I_{\text{H}} \) and \( I_{\text{V}} \) are the gradients in the horizontal and vertical directions, respectively. Table 1 indicates that our algorithm is clearly superior in color naturalness and colorfulness.
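Eq. (18) is not displayed in the text. A widely used average-gradient form is \( \frac{1}{MN} \sum \sqrt{(I_{\text{H}}^{2} + I_{\text{V}}^{2})/2} \); the sketch below implements that assumed form on a grayscale array, using forward differences cropped to a common shape. The function name is hypothetical.

```python
import numpy as np

def gradient_definition(gray):
    """Average-gradient definition under the assumed form of Eq. (18)."""
    g = np.asarray(gray, dtype=float)
    i_h = np.diff(g, axis=1)[:-1, :]    # horizontal gradient, cropped to (M-1, N-1)
    i_v = np.diff(g, axis=0)[:, :-1]    # vertical gradient, cropped to (M-1, N-1)
    m, n = g.shape
    return np.sqrt((i_h**2 + i_v**2) / 2.0).sum() / (m * n)
```

Normalizing by M·N rather than (M - 1)(N - 1) is also an assumption; it only rescales the score and does not change the ranking of methods evaluated on images of the same size.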

Further statistical experiments are reported in Figs. 8, 9 and 10, using 66 images with uniform fog taken from Tarel's dataset: www.lcpc.fr/english/products/image-databases/article/frida-foggy-road-image-database.

4 Concluding Remarks and Discussion

In this paper, we propose a novel defog method based on bionics, inspired by the nature of image contrast improvement, and the proposed algorithm is shown to perform well in true-color restoration, contrast improvement and brightness emendation. Qualitative and quantitative results demonstrate that the proposed method achieves a better color natural index and color colorfulness index than some existing algorithms and is more robust and effective. However, scenes near the camera become over-enhanced, and scenes far from the camera exhibit considerable noise after visibility enhancement. In future work, we intend to address these limitations of our method.

References

1. Wang, S.-J., Ding, X.-H., Liao, Y.-H., Guo, D.-H.: A novel bio-inspired algorithm for color image enhancement. Acta Electron. Sin. 36(10), 1970–1973 (2008)

2. Lu, L.-L., Gao, K., Shao, X.-G., Ni, G.-Q.: An adaptive high dynamic range color image enhancement algorithm based on human vision property. Trans. Beijing Inst. Technol. 32(4), 415–419 (2012)

3. Liu, X., Wu, J., Hao, Y.-M., Zhu, F.: Image enhancement method for human vision. J. Comput. Eng. 38(2), 254–256 (2012)

4. Li, Q.-H., Bi, D.-Y., Ma, S.-P., He, L.-Y.: Non-uniform lightness image repeated exposure directed by visual mechanism. J. Acta Autom. Sin. 39(9), 1458–1466 (2013)

5. Tan, R.T.: Visibility in bad weather from a single image. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 1–8, June 2008

6. He, K., Sun, J., Tang, X.: Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 33(12), 1–13 (2011)

7. Szeliski, R., Zabih, R., Scharstein, D., Veksler, O., Kolmogorov, V., Agarwala, A., Tappen, M., Rother, C.: A comparative study of energy minimization methods for Markov random fields. In: Leonardis, A., Bischof, H., Pinz, A. (eds.) ECCV 2006. LNCS, vol. 3952, pp. 16–29. Springer, Heidelberg (2006). https://doi.org/10.1007/11744047_2

8. Gan, J.-H., He, T.-L.: Pixel-level single image dehazing algorithm in color space. Appl. Res. Comput. 22(9), 3591–3593 (2012)

9. Lan, X., Zhang, L.-P., Shen, H.-F., Yuan, Q.-Q., Li, H.-F.: Single image haze removal considering sensor blur and noise. EURASIP J. Adv. Sig. Process. (2013). http://asp.eurasipjournals.com/content/2013/1/86

10. Tarel, J.-P., Hautiere, N.: Fast visibility restoration from a single color or gray level image. In: Proceedings of IEEE International Conference on Computer Vision (ICCV), pp. 2201–2208, September 2009

11. Huang, K.-Q., Wang, Q., Wu, Z.-Y.: Natural color image enhancement and evaluation algorithm based on human visual system. In: Computer Vision and Image Understanding, pp. 52–63 (2006). http://www.elsevier.com/locate/cviu

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Ma, Sp., Li, QH., Bi, DY., Dong, YY. (2017). A Novel Self-adaptive Defog Method Based on Bionic. In: Zhao, Y., Kong, X., Taubman, D. (eds) Image and Graphics. ICIG 2017. Lecture Notes in Computer Science(), vol 10667. Springer, Cham. https://doi.org/10.1007/978-3-319-71589-6_25

DOI: https://doi.org/10.1007/978-3-319-71589-6_25

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-71588-9

Online ISBN: 978-3-319-71589-6

eBook Packages: Computer Science, Computer Science (R0)