Abstract

The choice of feature set, or feature combination, strongly affects the performance of machine-vision-based recognition systems for cotton foreign fibers. To find good feature sets, this paper presents three metaheuristic-based feature selection approaches for cotton foreign fiber recognition, based on particle swarm optimization, ant colony optimization and the genetic algorithm, respectively. A k-nearest neighbor classifier and a support vector machine classifier, combined with k-fold cross validation, are used to evaluate the quality of feature subsets and to identify the cotton foreign fibers. The results show that the metaheuristic-based feature selection methods can efficiently find optimal feature sets consisting of only a few features, which is highly significant for improving the performance of recognition systems for cotton foreign fibers.


1 Introduction

Cotton foreign fibers, such as ropes, wrappers and plastic films, are closely related to the quality of the final cotton textile products [1]. In recent years, machine-vision-based recognition systems have been widely used to assess cotton quality [2, 3], and classification accuracy is a key measure of their performance. Finding an optimal feature set is an efficient way to improve these systems, because a compact, discriminative feature set improves both the accuracy and the speed of recognition.

Feature selection (FS) is a main approach to finding an optimal feature set by removing irrelevant or redundant features, and it is widely used in machine learning and data mining [4]. Since finding the optimal feature set is an NP-hard problem, researchers have turned to searching for near-optimal feature sets and have proposed many algorithms [5, 6].

Metaheuristic algorithms have recently attracted considerable attention; representative examples are particle swarm optimization (PSO), ant colony optimization (ACO) and the genetic algorithm (GA) [5, 7, 8]. A metaheuristic algorithm is first given a measure of the quality of a feature set, and then iteratively improves candidate solutions with respect to that measure until a good feature set is obtained. Metaheuristic algorithms make few assumptions about the optimal feature set and can search very large spaces, which makes them well suited to the FS problem.

In this paper, three metaheuristic FS algorithms are presented to find the optimal feature combination for cotton foreign fibers: GA for FS (GAFS), ACO for FS (ACOFS) and PSO for FS (PSOFS). Two classifiers, the k-nearest neighbor (KNN) [9] and the support vector machine (SVM) [10], are used to evaluate the quality of subsets and to identify cotton foreign fibers. The aim of our work is to apply these algorithms to cotton foreign fiber data sets and to obtain new results on this challenging problem. A comparative analysis of the algorithms illustrates their strong search ability and shows that the feature sets they obtain can efficiently improve the performance of recognition systems for cotton foreign fibers.

The remainder of this paper is organized as follows. The application context and the proposed FS methods are presented in Sect. 2. The results and discussion are described in Sect. 3. Section 4 presents the conclusions.

2 Materials and Methods

2.1 Application Background

Cotton foreign fibers usually fall into six groups: feathers, hemp rope, plastic film, cloth, hair and polypropylene twine. Cotton foreign fibers degrade the quality of cotton textile products [1], and grading with machine-vision-based recognition systems is the main approach to this problem [2]. These systems generally involve three key steps: image segmentation, feature extraction and classification of the foreign fibers.

For real-time operation, a feature set that contains few features yet yields high accuracy is important, because it reduces detection time and improves classification accuracy. FS is therefore important for online recognition systems of cotton foreign fibers. In our work, we apply three metaheuristic algorithms to this problem to obtain optimal feature sets for cotton foreign fibers.

2.2 Data Preparation

First, foreign fiber images are acquired with our test platform, and 1200 representative images containing foreign fibers are selected to build the dataset. Each image is 4000 pixels wide and 500 pixels high. Several examples are shown in Fig. 1. The images are divided into six groups according to the category of foreign fiber, with 200 images per group.

These images are then segmented into small objects, each containing only a cotton foreign fiber. In total, 2808 objects are obtained: 204 hair, 408 black plastic film, 432 cloth, 492 rope, 528 polypropylene twine and 744 feather objects. Features are then extracted from these objects.

Color, shape and texture features can be extracted from cotton foreign fibers. Because accurate classification is difficult with only one or two kinds of features [2], we extract all kinds of features, including color, shape and texture features, and then find a good feature combination with FS approaches.

In our experiment, a total of 80 features are extracted from foreign fiber objects, and the number of color, texture and shape features is 28, 42 and 10, respectively. These extracted features are used to build the 80-dimensional feature vector.

After the data are generated, the features are normalized to reduce the impact of their differing units and scales.
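The paper does not say which normalization is used. As a minimal sketch, assuming per-feature min-max scaling to [0, 1] (an assumption, as is the function name `min_max_normalize`), each column of the 80-dimensional feature matrix can be rescaled independently:

```python
def min_max_normalize(rows):
    """Scale each feature (column) of a list-of-lists to [0, 1] (assumed min-max scheme)."""
    n_features = len(rows[0])
    mins = [min(r[j] for r in rows) for j in range(n_features)]
    maxs = [max(r[j] for r in rows) for j in range(n_features)]
    out = []
    for r in rows:
        scaled = []
        for j, value in enumerate(r):
            span = maxs[j] - mins[j]
            # A constant feature carries no information; map it to 0.0 instead of dividing by zero.
            scaled.append((value - mins[j]) / span if span > 0 else 0.0)
        out.append(scaled)
    return out


data = [[10.0, 0.5, 3.0], [20.0, 1.5, 3.0], [15.0, 1.0, 3.0]]
print(min_max_normalize(data))  # [[0.0, 0.0, 0.0], [1.0, 1.0, 0.0], [0.5, 0.5, 0.0]]
```

Min-max scaling keeps every feature on the same range, so no single feature dominates the Euclidean distances used by KNN; z-score standardization would be an equally plausible choice.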

2.3 Metaheuristic Algorithms for Feature Selection

Fitness Function.

The fitness function is central to metaheuristic algorithms because it evaluates the quality of each subset. For online classification, the selected feature set should be small and yield high accuracy. The fitness evaluation therefore combines the classifier accuracy with the length of the feature subset, as defined in Eq. (1).

In Eq. (1), X denotes the subset, J(X) is the classification accuracy of subset X, |X| is the number of features in X, and υ and ψ adjust the relative importance of accuracy and size. In this study, two classifiers, KNN and SVM, are used to evaluate the quality of a subset.
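The exact form of Eq. (1) is not reproduced in this text, so the following is only a sketch under stated assumptions: a hypothetical weighted sum υ·J(X) + ψ·(1 − |X|/N), where N is the total number of features, with J(X) taken as the 10-fold cross-validated accuracy of a Euclidean KNN with k = 3. The weights υ = 0.9 and ψ = 0.1, the value of k and the helper names `knn_predict`, `cv_accuracy` and `fitness` are all illustrative assumptions, not the authors' settings.

```python
import math
import random
from collections import Counter


def knn_predict(train_x, train_y, query, k=3):
    # Majority vote among the k training samples closest in Euclidean distance.
    nearest = sorted((math.dist(x, query), label) for x, label in zip(train_x, train_y))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def cv_accuracy(x, y, mask, k=3, folds=10, seed=0):
    # J(X): k-fold cross-validated KNN accuracy using only features with mask[j] == 1.
    cols = [j for j, bit in enumerate(mask) if bit]
    if not cols:
        return 0.0
    proj = [[row[j] for j in cols] for row in x]
    order = list(range(len(x)))
    random.Random(seed).shuffle(order)
    correct = 0
    for f in range(folds):
        test = order[f::folds]  # every folds-th sample of the shuffled order is held out
        held_out = set(test)
        train = [i for i in order if i not in held_out]
        train_x = [proj[i] for i in train]
        train_y = [y[i] for i in train]
        for i in test:
            correct += knn_predict(train_x, train_y, proj[i], k) == y[i]
    return correct / len(x)


def fitness(x, y, mask, upsilon=0.9, psi=0.1):
    # Hypothetical form of Eq. (1): reward accuracy J(X) and reward a small subset |X|.
    size_term = 1.0 - sum(mask) / len(mask)
    return upsilon * cv_accuracy(x, y, mask) + psi * size_term


# Toy data: feature 0 separates the two classes, feature 1 is random noise.
rng = random.Random(1)
x = [[0.02 * i, rng.random()] for i in range(10)] + \
    [[0.82 + 0.02 * i, rng.random()] for i in range(10)]
y = [0] * 10 + [1] * 10
print(fitness(x, y, [1, 0]), fitness(x, y, [1, 1]))
```

On this toy data the subset containing only the informative feature scores higher than the full set, because it reaches the same (perfect) accuracy with half the features.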

Particle Swarm Optimization for Feature Selection.

PSO is a population-based metaheuristic proposed by Kennedy and Eberhart [11]. It explores the search space by moving particles with velocities, and each particle is updated based on its own best past position and the current best particle. PSO balances exploration and exploitation well and is an efficient optimization algorithm [12, 13].

Suppose particle i is denoted by \( \vec{X}_{i} = (x_{i,1}, x_{i,2}, \cdots, x_{i,d}) \) with velocity \( \vec{V}_{i} = (v_{i,1}, v_{i,2}, \cdots, v_{i,d}) \). \( \vec{P}_{i} = (p_{i,1}, p_{i,2}, \cdots, p_{i,d}) \) denotes the best past position of particle i, and \( \vec{P}_{g} = (p_{g,1}, p_{g,2}, \cdots, p_{g,d}) \) denotes the position of the best particle.

Each bit of a particle takes one of two states, zero or one, and changes according to a probability. To map the velocity from continuous space to probability space, the algorithm uses the sigmoid function:

\[ S(v_{i,j}) = \frac{1}{1 + e^{-v_{i,j}}} \qquad (2) \]

The velocity is updated according to Eq. (3), in which w denotes the inertia weight, updated at iteration t according to Eq. (4). In these equations, \( w_{\max} \) and \( w_{\min} \) are the maximum and minimum values of the inertia weight, \( t_{\max} \) is the maximum number of iterations, \( c_1 \) and \( c_2 \) are the acceleration coefficients, \( r_1 \) and \( r_2 \) are random numbers in [0, 1], \( x_{i,j} \), \( p_{i,j} \) and \( p_{g,j} \) take the value zero or one, and \( v_{\max} \) is the maximum velocity.
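Eqs. (3) and (4) are not reproduced in this text. A minimal sketch, assuming the common inertia-weight form \( v_{i,j} \leftarrow w v_{i,j} + c_1 r_1 (p_{i,j} - x_{i,j}) + c_2 r_2 (p_{g,j} - x_{i,j}) \), clamped to \( [-v_{\max}, v_{\max}] \), with a linearly decreasing \( w(t) = w_{\max} - (w_{\max} - w_{\min})\, t / t_{\max} \). The schedule, the default values (0.9, 0.4, 2.0, 6.0) and the function names are assumptions, not the values in Table 1.

```python
import random


def inertia_weight(t, t_max, w_max=0.9, w_min=0.4):
    # Assumed linearly decreasing schedule: w_max at t = 0, w_min at t = t_max.
    return w_max - (w_max - w_min) * t / t_max


def update_velocity(v, x, p_best, g_best, w, c1=2.0, c2=2.0, v_max=6.0, rng=random):
    """One velocity update for a binary particle (x, p_best, g_best hold 0/1 bits)."""
    new_v = []
    for vj, xj, pj, gj in zip(v, x, p_best, g_best):
        r1, r2 = rng.random(), rng.random()
        vj = w * vj + c1 * r1 * (pj - xj) + c2 * r2 * (gj - xj)
        # Clamp to [-v_max, v_max] so the later sigmoid mapping does not saturate.
        new_v.append(max(-v_max, min(v_max, vj)))
    return new_v


rng = random.Random(0)
w = inertia_weight(t=10, t_max=100)
v = update_velocity([0.0, 0.0, 0.0], [0, 1, 0], [1, 1, 0], [1, 0, 0], w, rng=rng)
print(w, v)
```

A large w early in the run favors exploration; shrinking it over the iterations shifts the swarm toward exploitation around the best subsets found.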

The new particle position is updated by Eq. (5), where rnd denotes a random number drawn uniformly from [0, 1].
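Eq. (5) is not reproduced here. A sketch assuming the standard binary PSO rule of Kennedy and Eberhart [13]: bit j of particle i becomes 1 if rnd < S(v_{i,j}) and 0 otherwise, with the sigmoid S(v) = 1/(1 + e^{-v}). The function names are hypothetical.

```python
import math
import random


def sigmoid(v):
    # Numerically stable logistic function S(v) = 1 / (1 + e^(-v)).
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    z = math.exp(v)
    return z / (1.0 + z)


def update_position(v, rng=random):
    # Bit j is set to 1 with probability S(v_j); rnd is uniform on [0, 1).
    return [1 if rng.random() < sigmoid(vj) else 0 for vj in v]


print(update_position([6.0, 0.0, -6.0], random.Random(0)))
```

Unlike continuous PSO, the velocity here is not added to the position; it only sets the probability that each feature is selected in the next iteration.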

Ant Colony Optimization for Feature Selection.

ACO is inspired by the way ants find the shortest path between a food source and their nest [14]. In ACO, the problem is modelled as a graph on which the ants search for a minimum-cost path; good paths emerge from the global cooperation among the ants. In each iteration, many ants construct their solutions using heuristic information and pheromone trails [15].

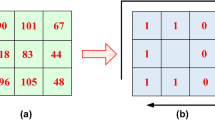

In the ACOFS, every candidate solution is mapped into an ant represented by a binary vector where the bit one or zero respectively means that the corresponding feature is selected or not.

Heuristic Information:

Heuristic information generally represents the attractiveness of each feature. Without heuristic information, the algorithm may behave greedily and fail to find better solutions [5]. In this study, the heuristic information of each feature is measured by its information gain [16].
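Information gain is IG(Y; F) = H(Y) − H(Y | F), the reduction in class-label entropy once feature F is known. Because the foreign fiber features are continuous, the sketch below assumes equal-width discretization into a fixed number of bins; the paper does not state how (or whether) features are discretized, and the bin count and function names are illustrative.

```python
import math
from collections import Counter


def entropy(labels):
    # Shannon entropy (bits) of a list of class labels.
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(values, labels, bins=10):
    """IG of one continuous feature w.r.t. the labels, using assumed equal-width bins."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins or 1.0  # a constant feature falls into a single bin
    binned = [min(int((v - lo) / width), bins - 1) for v in values]
    n = len(labels)
    conditional = 0.0
    for b, count in Counter(binned).items():
        subset = [lab for lab, bb in zip(labels, binned) if bb == b]
        conditional += (count / n) * entropy(subset)
    return entropy(labels) - conditional


labels = [0, 0, 1, 1]
print(information_gain([0.0, 0.1, 0.9, 1.0], labels, bins=2))  # informative feature
print(information_gain([0.0, 1.0, 0.0, 1.0], labels, bins=2))  # uninformative feature
```

The resulting values can serve directly as the heuristic desirability \( \eta_i \) of each feature.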

Feature Selection:

In each iteration, ant k decides whether feature i is selected according to its transition probability \( p_i \). In the expression for \( p_i \), \( J^{k} \) is the feasible feature set, \( \eta_{i} \) is the heuristic desirability of feature i and \( \tau_{i} \) is its pheromone value. The parameters α and β adjust the relative importance of the heuristic information and the pheromone.
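The equation for \( p_i \) is not reproduced in this text. A sketch assuming the classic ACO form \( p_i = \tau_i^{\alpha} \eta_i^{\beta} / \sum_{u \in J^k} \tau_u^{\alpha} \eta_u^{\beta} \), followed by a roulette-wheel draw over the feasible features. The function names and the default α = β = 1 are hypothetical.

```python
import random


def transition_probabilities(tau, eta, feasible, alpha=1.0, beta=1.0):
    # Assumed classic ACO rule: weight each feasible feature by tau^alpha * eta^beta.
    weights = {i: (tau[i] ** alpha) * (eta[i] ** beta) for i in feasible}
    total = sum(weights.values())
    return {i: w / total for i, w in weights.items()}


def choose_feature(probs, rng=random):
    # Roulette-wheel draw: feature i is returned with probability probs[i].
    r = rng.random()
    cumulative = 0.0
    for i, p in probs.items():
        cumulative += p
        if r < cumulative:
            return i
    return list(probs)[-1]  # guard against floating-point round-off


tau = [1.0, 1.0, 1.0]      # pheromone per feature
eta = [1.0, 2.0, 1.0]      # heuristic desirability, e.g. information gain
probs = transition_probabilities(tau, eta, feasible=[0, 1, 2])
print(probs, choose_feature(probs, random.Random(3)))
```

Restricting the sum to \( J^k \) means that features an ant has already selected (or excluded) no longer compete for probability mass.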

Pheromone update:

After all ants have constructed their feature sets, the algorithm applies pheromone evaporation, and each ant k deposits a quantity of pheromone \( \Delta \tau_{i}^{k} (t) \) computed by Eq. (7), where \( s^{k} (t) \) denotes the feature set found by ant k at iteration t and \( \gamma (x) \) denotes the fitness function.

The pheromone of every feature is then updated according to Eq. (8), where \( \rho \in (0,1) \) denotes the pheromone decay coefficient, which helps avoid stagnation, m denotes the number of ants and g denotes the best ant.
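Eqs. (7) and (8) are not reproduced in this text, so the following is a loosely hedged sketch of one common variant consistent with the symbols above: evaporation \( \tau_i \leftarrow (1-\rho)\tau_i \), a deposit \( \Delta \tau_i^k = \gamma(s^k) \) for every feature in ant k's subset, and an extra deposit by the best ant g. The deposit rule, the default ρ and the function name are assumptions, not the authors' equations.

```python
def update_pheromone(tau, ant_subsets, ant_fitness, rho=0.2):
    """tau: pheromone per feature; ant_subsets: one set of feature indices per ant."""
    best = max(range(len(ant_subsets)), key=ant_fitness.__getitem__)  # best ant g
    new_tau = [(1.0 - rho) * t for t in tau]  # evaporation on every feature
    for subset, fit in zip(ant_subsets, ant_fitness):
        for i in subset:
            new_tau[i] += fit  # assumed deposit: the ant's fitness gamma(s^k)
    for i in ant_subsets[best]:
        new_tau[i] += ant_fitness[best]  # assumed extra reinforcement by the best ant
    return new_tau


tau = update_pheromone([1.0, 1.0, 1.0], [{0}, {0, 1}], [0.5, 0.8], rho=0.5)
print(tau)
```

Features chosen by many good ants accumulate pheromone, while unused features decay geometrically toward zero.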

Genetic Algorithm for Feature Selection.

GA, proposed by Holland, is a metaheuristic based on the ideas of genetics and natural selection, and it has been used to solve FS tasks [7]. In evolution, each species has to change its chromosome combination to adapt to a complex and changing environment in order to survive. In GA, a chromosome represents a potential solution to the problem and is evaluated by the fitness function, and a new generation with better survival ability is produced by crossover and mutation. A GA usually comprises encoding, selection, crossover and mutation.

Encoding:

In GAFS, a chromosome represents a candidate feature set and consists of genes, each of which corresponds to one feature and is encoded as a binary digit. If a gene is ‘1’, the corresponding feature is selected; otherwise the gene is ‘0’. Bit i of the chromosome corresponds to feature i, and each chromosome is initialized randomly.
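The binary encoding can be sketched as follows; the function names are hypothetical, and each gene is assumed to start as 0 or 1 with equal probability (the paper only says "randomly").

```python
import random


def random_chromosome(n_features, rng=random):
    # Gene i is 1 (feature i selected) or 0 (not selected), chosen uniformly at random.
    return [rng.randint(0, 1) for _ in range(n_features)]


def decode(chromosome):
    # Bit i corresponds to feature i; return the indices of the selected features.
    return [i for i, gene in enumerate(chromosome) if gene == 1]


chromosome = random_chromosome(80, random.Random(0))  # 80 features, as in Sect. 2.2
print(decode([0, 1, 1, 0, 1]))  # [1, 2, 4]
```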

Selection:

Selection chooses chromosomes from the current population for the new generation. A certain number of chromosomes are selected probabilistically, where the probability of selecting chromosome i is given by Eq. (9), in which S(i) denotes the fitness value of chromosome i and m is the number of chromosomes in the population.
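Eq. (9) is not reproduced in this text. Given the definitions of S(i) and m, a sketch assuming fitness-proportionate (roulette-wheel) selection, \( p_i = S(i) / \sum_{j=1}^{m} S(j) \), which requires non-negative fitness values. The function names are hypothetical; `random.choices` draws with replacement.

```python
import random


def selection_probabilities(fitness):
    # Assumed fitness-proportionate rule: p_i = S(i) / sum_j S(j).
    total = sum(fitness)
    return [s / total for s in fitness]


def roulette_select(population, fitness, n, rng=random):
    # Draw n chromosomes with replacement, weighted by their selection probabilities.
    return rng.choices(population, weights=selection_probabilities(fitness), k=n)


print(selection_probabilities([1.0, 3.0]))  # [0.25, 0.75]
print(roulette_select(["a", "b", "c"], [0.2, 0.5, 0.3], 4, random.Random(0)))
```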

Crossover:

The crossover operator is used to exchange genes between two chromosomes. The representative crossover operators are multi-point crossover, double-point crossover and single-point crossover [7]. In this study, double-point crossover is performed. After some chromosomes have been selected into the next generation’s population, additional chromosomes are generated by using a crossover operation. Crossover takes two parent individuals, which are chosen from the current generation by using the probability function given by Eq. (9), and creates two offspring by recombining portions of both parents.
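Double-point crossover can be sketched as follows: two distinct cut points split both parents into three segments, and the children swap the middle segment. The function name is hypothetical, and chromosomes are assumed to have at least three genes.

```python
import random


def two_point_crossover(parent_a, parent_b, rng=random):
    n = len(parent_a)
    # Two distinct cut points with 1 <= c1 < c2 <= n - 1.
    c1, c2 = sorted(rng.sample(range(1, n), 2))
    child_a = parent_a[:c1] + parent_b[c1:c2] + parent_a[c2:]
    child_b = parent_b[:c1] + parent_a[c1:c2] + parent_b[c2:]
    return child_a, child_b


a, b = two_point_crossover([0] * 8, [1] * 8, random.Random(0))
print(a, b)
```

Because each child keeps both ends of one parent, a block of adjacent features (for example, several texture features extracted together) tends to be inherited as a unit.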

Mutation:

The mutation operator maintains the variety of the chromosomes: it introduces local variations into the chromosomes and keeps the population diverse, which helps the search find good solutions. The number of chromosomes to be mutated depends on the mutation rate. For each mutating chromosome, a gene is selected at random and its value is flipped from ‘1’ to ‘0’ or from ‘0’ to ‘1’.
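A sketch of this mutation scheme, assuming the number of mutated chromosomes is round(mutation rate × population size) and that each mutated chromosome has exactly one randomly chosen gene flipped. The rounding rule and the function name are assumptions.

```python
import random


def mutate(population, mutation_rate, rng=random):
    """Flip one random gene in round(mutation_rate * len(population)) distinct chromosomes."""
    pop = [chrom[:] for chrom in population]  # copy so the caller's population is unchanged
    n_mutants = round(mutation_rate * len(pop))
    for idx in rng.sample(range(len(pop)), n_mutants):
        gene = rng.randrange(len(pop[idx]))
        pop[idx][gene] = 1 - pop[idx][gene]  # '1' -> '0' or '0' -> '1'
    return pop


original = [[0] * 10 for _ in range(20)]
mutated = mutate(original, 0.1, random.Random(0))
print(sum(map(sum, mutated)))  # 2 chromosomes mutated, one bit each
```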

3 Results and Discussion

In our experiments, the computer configuration is as follows: 2.66 GHz CPU, 4.0 GB of main memory and Windows 7. All algorithms are implemented and run in MATLAB. Two classifiers, KNN and SVM, are used to evaluate the quality of solutions, and 10-fold cross validation is used to evaluate the methods reliably.

The parameters of PSOFS, ACOFS and GAFS are set as listed in Table 1; these values were chosen empirically.

Table 2 compares the performance of the three algorithms. With the KNN classifier, ACOFS gives the best result: its selected subset has both the smallest size and the highest classification accuracy. With SVM, PSOFS obtains the subset with the highest accuracy, whereas the subset obtained by ACOFS contains the fewest features.

Figure 2 shows the accuracy of the subsets obtained by the three algorithms and of the original feature set, with KNN and SVM, respectively. For both classifiers, all the optimal subsets obtained by the three metaheuristic-based algorithms achieve much higher accuracy than the original set without feature selection.

Tables 3, 4, 5 and 6 give detailed classification results with the KNN classifier for the subsets obtained by the three metaheuristic algorithms and for the original set. As these tables show, the subsets selected by the three metaheuristic algorithms efficiently improve the classification accuracy for cloth and hair, by at least 8 % and 15 %, respectively.

Figure 3 shows the fitness curves of the three metaheuristic-based algorithms with KNN and SVM in a single run; according to our preliminary experiments, these curves are representative. As Fig. 3 shows, for both KNN and SVM, ACOFS is more efficient and converges faster than PSOFS and GAFS. On average, PSOFS and GAFS need about 70 iterations to find the optimal subset, whereas ACOFS needs fewer than 40.

4 Conclusions

A key issue in machine-vision-based recognition systems for cotton foreign fibers is finding the optimal feature set. In this study, three metaheuristic FS methods, ACOFS, PSOFS and GAFS, have been proposed to address this problem. Two classifiers, KNN and SVM, are used to evaluate solution quality and to perform classification. The experimental results show that the presented methods are highly effective at finding a reduced set of features with high accuracy on the cotton foreign fiber dataset. The selected feature set is of great significance for machine-vision-based recognition systems for cotton foreign fibers. In future work, we will focus on improving the classifiers to raise the recognition rate of recognition systems for cotton foreign fibers.

References

[1] Yang, W., Li, D., Zhu, L., Kang, Y., Li, F.: A new approach for image processing in foreign fiber detection. Comput. Electron. Agric. 68(1), 68–77 (2009)

[2] Li, D., Yang, W., Wang, S.: Classification of foreign fibers in cotton lint using machine vision and multi-class support vector machine. Comput. Electron. Agric. 74(2), 274–279 (2010)

[3] Yang, W., Lu, S., Wang, S., Li, D.: Fast recognition of foreign fibers in cotton lint using machine vision. Math. Comput. Model. 54(3), 877–882 (2011)

[4] Lin, J.Y., Ke, H.R., Chien, B.C., Yang, W.P.: Classifier design with feature selection and feature extraction using layered genetic programming. Expert Syst. Appl. 34(2), 1384–1393 (2008)

[5] Bolón-Canedo, V., Sánchez-Maroño, N., Alonso-Betanzos, A.: A review of feature selection methods on synthetic data. Knowl. Inf. Syst. 34(3), 483–519 (2013)

[6] Sun, Z., Bebis, G., Miller, R.: Object detection using feature subset selection. Pattern Recogn. 37(11), 2165–2176 (2004)

[7] Pedergnana, M., Marpu, P.R., Dalla Mura, M., Benediktsson, J.A., Bruzzone, L.: A novel technique for optimal feature selection in attribute profiles based on genetic algorithms. IEEE Trans. Geosci. Remote Sens. 51(6), 3514–3528 (2013)

[8] Chen, H.L., Yang, B., Wang, G., Liu, J., Xu, X., Wang, S.J., Liu, D.Y.: A novel bankruptcy prediction model based on an adaptive fuzzy k-nearest neighbor method. Knowl.-Based Syst. 24(8), 1348–1359 (2011)

[9] Cover, T., Hart, P.: Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 13(1), 21–27 (1967)

[10] Zhang, X.: Introduction to statistical learning theory and support vector machines. Acta Automatica Sinica 26(1), 32–42 (2000)

[11] Eberhart, R.C., Kennedy, J.: A new optimizer using particle swarm theory. In: Proceedings of the Sixth International Symposium on Micro Machine and Human Science, vol. 1, pp. 39–43 (1995)

[12] Chen, H.L., Yang, B., Wang, G., Wang, S.J., Liu, J., Liu, D.Y.: Support vector machine based diagnostic system for breast cancer using swarm intelligence. J. Med. Syst. 36(4), 2505–2519 (2012)

[13] Kennedy, J., Eberhart, R.C.: A discrete binary version of the particle swarm algorithm. In: IEEE International Conference on Systems, Man, and Cybernetics 1997, vol. 5, pp. 4104–4108 (1997)

[14] Dorigo, M., Maniezzo, V., Colorni, A.: Ant system: optimization by a colony of cooperating agents. IEEE Trans. Syst. Man Cybern. B 26(1), 29–41 (1996)

[15] Zhao, X., Li, D., Yang, B., Ma, C., Zhu, Y., Chen, H.: Feature selection based on improved ant colony optimization for online detection of foreign fiber in cotton. Appl. Soft Comput. 24, 585–596 (2014)

[16] Forsati, R., Moayedikia, A., Jensen, R., Shamsfard, M., Meybodi, M.R.: Enriched ant colony optimization and its application in feature selection. Neurocomputing 142, 354–371 (2014)

Acknowledgments

This study was funded by the National Natural Science Foundation of China (61402195, 61471133 and 61571444), the Guangdong Natural Science Foundation (2016A030310072), the Guangdong Science and Technology Plan Project (2015A070709015 and 2015A020209171), the Science and Technology Plan Project of Wenzhou, China (G20140048) and the Shenzhen strategic emerging industry development funds (JCYJ20140418100633634).


Copyright information

© 2016 IFIP International Federation for Information Processing

About this paper

Cite this paper

Zhao, X. et al. (2016). Comparative Study on Metaheuristic-Based Feature Selection for Cotton Foreign Fibers Recognition. In: Li, D., Li, Z. (eds) Computer and Computing Technologies in Agriculture IX. CCTA 2015. IFIP Advances in Information and Communication Technology, vol 478. Springer, Cham. https://doi.org/10.1007/978-3-319-48357-3_2


DOI: https://doi.org/10.1007/978-3-319-48357-3_2


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-48356-6

Online ISBN: 978-3-319-48357-3

eBook Packages: Computer Science, Computer Science (R0)