Abstract

In this paper we discuss various navigational aids for people who have a visual impairment. Navigational technologies are classified according to the mode of accommodation and the type of sensor utilized to collect environmental information. Notable examples of navigational aids are discussed, along with the advantages and disadvantages of each. Operational and design considerations for navigational aids are suggested. We conclude with a discussion of how multimodal interaction benefits people who use technology as an accommodation and can benefit everyone.

1 Introduction

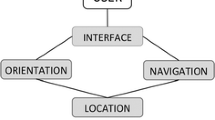

Technologies that utilize multimodal interaction have the ability to benefit everyone. For example, commercial GPS devices, such as the Garmin Drive 60LM, use both visual and auditory alerts to assist drivers in navigating safely [1]. Automobile manufacturers have recognized the complex nature of operating a vehicle and have begun to incorporate multimodal systems into other aspects of vehicles (e.g., safety features) [2]. While the benefits and disadvantages of these new technologies have yet to be thoroughly assessed, many people would recognize the visual system’s important role in mobility and orientation, whether driving or walking. For example, if a person is engaged in wayfinding (i.e., both orienting and navigating), she must determine her current location, find a suitable route, utilize cues or landmarks to plan the next step, and detect arrival at the intended location [3]. Each step in the wayfinding process can be cognitively demanding; however, for sighted people the process is performed automatically and is less effortful than navigating without sight. In order to successfully navigate, people with a visual impairment must learn to interpret nonvisual sensory signals to assist with each of the four steps in a wayfinding task. Navigating by non-visual means is typically less accurate than utilizing visual cues because tactile, olfactory, and audible landmarks can be temporary, confusing, and slower to interpret [4]. Thus, wayfinding places greater cognitive and attentional demands on people with a visual impairment than on their sighted counterparts and results in less precise outcomes [5].

Multimodal technologies are a possible solution to the problem of navigating with a visual impairment. Indeed, multiple resource theory (MRT) continues to have an impact on technological design [6]. While MRT was intended as a theory of simultaneous, multitask performance, attention remains a unified mental construct that cannot be ignored when designing technological interfaces [7]. The application of multimodal interfaces to the design of navigational technologies for those with a visual impairment offers numerous advantages. First, the technology can substitute a non-visual sensory modality for an impaired visual system. Navigational aids that allow for sensory substitution are not necessarily multimodal (see Sect. 3 for a discussion of specific navigational aids); however, multimodal interfaces offer a second benefit: the ability to translate visual cues into the sensory modality that can best represent the information needed to successfully orient and travel. For example, an optimal route of travel can be indicated by vibrotactile stimulation, and information about a location (e.g., a store’s hours of operation) can be conveyed audibly. While Morse code could be used to convey textual information through vibrotactile means, this would require someone to know another language in order to accurately interpret the stimuli. Similarly, determining a correct path of travel could be accomplished by listening to auditory information; however, this can result in imprecise navigation due to the inexactness of spatial language (e.g., “take a slight right in 300 feet”) [8]. Sensory substitution devices are also less invasive and more affordable than sensory replacement devices (e.g., cochlear implants).

2 Classifying Multimodal Functionality in Navigational Technologies for People with a Visual Impairment

2.1 Obstacle Detection vs Route Guidance

Tools for navigating with a visual impairment can be classified according to the primary goal of the technology: obstacle detection or route guidance. Mobility aids, such as a white cane or a dog guide, are primarily used for obstacle detection. Route guidance is accomplished by using navigational aids (sometimes called electronic travel aids or ETAs). Mobility aids typically have a limited effective range of a few meters, whereas ETAs are intended to provide more detailed information about the environment and have a longer range [9]. Differences in the effective range of navigational technologies should be taken into consideration when designing travel aids. For example, vibrotactile feedback is appropriate for obstacle detection, whereas auditory instructions can provide different information about objects and locations farther away. The way information is received and understood by the human brain also plays a part. Vision, audition, and taction can be perceived spatiotemporally [7]. However, there are differences in how sensory input is understood and acted upon [10]. Tactile feedback may take less time to understand but audition may provide more readily understandable information. Individual differences in sensory integration and processing are further defined in populations with one or more disabilities as a result of the level of impairment [11]. Designers of navigational technologies must take these differences into account when deciding on the appropriate modes of sensory substitution and information presentation.

2.2 Camera, GPS, and Sonar Technologies

Navigational aids can also be defined by the type of sensors used to receive environmental information. Commonly used sensors include cameras, GPS, infrared sensors (IR), lasers, radio frequency identification (RFID), and sonic sensors (sonar). Any navigational aid using these types of sensors provides auditory or tactile feedback to the user, and sometimes both. Some devices provide multimodal feedback to the user without being designed to do so (e.g., white canes). There are advantages and disadvantages to each type of technology. Navigational devices that employ cameras, lasers, or sonar allow people with a visual impairment to sense objects up to several meters away and do not require the environment to be retrofitted. However, such devices do not work well in crowds and have trouble with moving objects. Sonar devices providing a high degree of resolution about the environment tend to have a steeper learning curve, thus requiring more time and effort to learn. Sonar can interfere with a person’s hearing, a sensory modality heavily relied upon by someone with impaired or no vision. Infrared and RFID are effective in both indoor and outdoor environments; however, expensive retrofitting of the environment is required. Additionally, IR and RFID do not require line of sight in order to work but are limited in range. A more commonly used navigational aid, GPS, is highly customizable and allows pedestrians with a visual impairment to preview their routes before traveling. However, GPS does not work indoors and the degree of accuracy provided by GPS systems is not detailed enough for people with a visual impairment. The accuracy of GPS systems is further degraded by dense foliage, tall buildings, and cloud cover. Each technology has advantages and disadvantages and people with a visual impairment often compensate for the weaknesses in their devices by carrying multiple tools on each journey [12].

3 Discussion of Selected Navigational Technologies

The following passage discusses notable examples of navigational technologies for people with a visual impairment. This review is not a comprehensive list; instead, it is meant to emphasize important aspects of user interaction with navigational aids. For a thorough discussion of navigating with a visual impairment and navigation aids for people with a visual impairment, see Blasch and Welsh’s book on orientation and mobility [13]. Technologies that magnify objects (e.g., screen readers, monocular devices) are not included in this paper.

3.1 White Cane

The white cane is a symbol of having a visual impairment; indeed, all 50 of the United States have “White Cane” laws, which protect pedestrians who have a visual impairment [14]. While a cane may not be considered to be an advanced technological device, contemporary white canes can be found in many variants according to user preference. Different cane tips provide varying degrees of tactile information and produce distinct sounds when coming into contact with objects. Materials such as aluminum and graphite are used in construction to keep the cane strong while reducing weight. The white cane was intended to be used as an extension of touch; people receive tactile feedback when the cane encounters objects in the environment; however, the cane also produces sounds when interacting with objects. The maximum effective range of the white cane is thus extended when experienced users learn to detect large objects nearby (or the absence of those objects, such as a passage between buildings) using echolocation. The remaining technologies discussed here are not intended to replace the white cane; rather, they are complementary devices.

3.2 Ultra Cane

The Ultra Cane, developed by Sound Foresight Technology Ltd, utilizes ultrasonic technology to detect potential hazards at a range of two to four meters in front of the user [16]. The cane can sense objects on the ground or in front of the user’s head and torso, thus detecting obstacles that would normally be missed by a traditional white cane. Feedback about obstacle location is delivered in the form of vibrotactile stimulation in the handle of the cane. Ground level obstacles are signaled with activation of the rear vibromotor; head level obstacles are indicated by activation of the forward vibromotor. Although the Ultra Cane can detect hazards not ordinarily detected by a traditional white cane, the Ultra Cane is bulkier, heavier, and more expensive. As with any ultrasonic technology, line of sight is required for reliable obstacle detection. The Ultra Cane’s vibrotactile feedback may increase the user’s cognitive workload as he spends time and effort discerning which signals are from the device’s vibromotors and which are from contact with the environment.
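The feedback mapping described above can be sketched in a few lines of Python. This is an illustrative sketch only and not the Ultra Cane's firmware: the `Obstacle` type, the zone labels, and the 4 m cutoff (taken from the upper end of the range quoted above) are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed cutoff: the upper end of the two-to-four-meter range quoted in the text.
MAX_RANGE_M = 4.0


@dataclass
class Obstacle:
    zone: str          # "ground" or "head" (hypothetical labels)
    distance_m: float  # distance from the cane to the obstacle


def select_vibromotor(obstacle: Obstacle) -> Optional[str]:
    """Return the handle motor to activate, or None if the obstacle is out of range."""
    if obstacle.distance_m > MAX_RANGE_M:
        return None
    if obstacle.zone == "ground":
        return "rear"      # ground-level obstacles use the rear vibromotor
    if obstacle.zone == "head":
        return "forward"   # head-level obstacles use the forward vibromotor
    raise ValueError(f"unknown zone: {obstacle.zone!r}")
```

Keeping the two hazard types on physically separate motors is what lets the user tell them apart by touch alone, although, as noted above, the signals must still be distinguished from vibrations caused by contact with the environment.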

3.3 Sonic Pathfinder

The Sonic Pathfinder, developed by Perceptual Alternatives, is a head-mounted device that warns the user via musical notes of obstacles in the path of travel [17]. As users approach an obstacle, notes produced by the Pathfinder descend the musical scale indicating proximity to an object. Orientation of the object to the user is indicated by location of the note (i.e., left and/or right ear detects the signal). A distinctive feature of the Pathfinder is that the effective range is determined by the user’s walking speed, which allows for a greater degree of control. The distinctive appearance of the device is also a disadvantage. People who have a visual impairment do not want public perception to be focused on their disability [18]. The Pathfinder’s use of musical notes to convey information to the user means that the person’s hearing is at least partially blocked. For people who have little or no vision, the sense of hearing becomes even more important, especially when navigating.
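The two ideas in this description, a range that scales with walking speed and a pitch that falls as the obstacle gets closer, can be illustrated with a short Python sketch. It is not the Sonic Pathfinder's actual algorithm: the eight-note scale, the two-second look-ahead, the ten-degree center tolerance, and all function names are assumptions chosen for the example.

```python
from typing import Optional, Tuple

# Assumed ascending scale; index 0 (lowest note) signals the nearest obstacles.
SCALE = ["C", "D", "E", "F", "G", "A", "B", "C'"]


def effective_range_m(walking_speed_mps: float, lookahead_s: float = 2.0) -> float:
    """Range grows with walking speed (hypothetical 2 s look-ahead)."""
    return walking_speed_mps * lookahead_s


def note_for_obstacle(distance_m: float, walking_speed_mps: float) -> Optional[str]:
    """Pick a note that descends the scale as the obstacle gets closer."""
    rng = effective_range_m(walking_speed_mps)
    if rng <= 0 or distance_m >= rng:
        return None  # nothing within the current effective range
    fraction = max(distance_m, 0.0) / rng  # 0.0 (touching) up to just under 1.0
    return SCALE[int(fraction * len(SCALE))]


def ears_for_bearing(bearing_deg: float, center_tolerance_deg: float = 10.0) -> Tuple[str, ...]:
    """Negative bearings are to the left, positive to the right, near zero is both."""
    if abs(bearing_deg) <= center_tolerance_deg:
        return ("left", "right")
    return ("left",) if bearing_deg < 0 else ("right",)
```

Under these assumptions, a user walking at 1.5 m/s has a 3 m effective range, and an obstacle halfway into that range maps to the middle of the scale.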

3.4 Nurion Laser Cane

Similar to the Ultra Cane, the Nurion Laser Cane detects obstacles in front of the user; however, instead of ultrasonic transducers, the Laser Cane utilizes diode lasers [19]. Information about the location of obstacles is delivered to the user via auditory and vibrotactile feedback. As with ultrasonic technology, line of sight is required to use the Laser Cane.

3.5 BrainPort V100

The BrainPort V100, developed by Wicab, employs a tactile display and camera to convey information about objects to users [20]. The camera is mounted on a pair of sunglasses and connects to the tactile display, which sits on the user’s tongue. The tactile “picture” communicated to the user has a maximum resolution of 400 by 400 pixels, which allows the person to discern objects in the environment. The BrainPort has not yet seen widespread adoption; however, the tongue display draws on decades of research with tactile displays for the abdomen and back [21–23]. Users may not want to travel in public with a cord hanging out of the mouth; however, the potential benefits offered by the system may warrant using the device while traversing problematic routes (e.g., near construction sites).

3.6 Talking Signs

Talking Signs, developed by the San Francisco based Smith-Kettlewell Eye Research Institute, is an audible signage system composed of a handheld IR receiver and strategically placed IR transmitters. The user waves the device around until a signal is located and, once a signal is detected, the handheld receiver decodes the audio message conveyed by the transmitter. Audio messages can describe aspects of the environment (e.g., “ticket kiosk”) and can guide users to a location by homing in on the IR signal, which is directional in nature. Talking Signs can work in both indoor and outdoor environments, and the system is an orientation device since both wayfinding information and landmark identification are provided.

3.7 CINVESTAV

A navigational aid in the form of smart glasses was developed at the Center for Research and Advanced Studies (CINVESTAV). The system combines GPS, ultrasound, cameras, and artificial intelligence (AI) to assist people with a visual impairment in navigating [25]. Obstacle detection is provided by cameras and ultrasound technology while the GPS offers navigational capabilities. The AI can interpret text and colors, recognize locations, and relay the information through synthetic speech. The CINVESTAV glasses are not yet commercially available and behavioral studies are needed to confirm the usability and durability of the system.

3.8 GPS Units

GPS technology has greatly improved the navigational abilities of many drivers and pedestrians; however, many of the commercially available units are not accessible to people who must navigate with a visual impairment [15]. This is reflected in the high cost of GPS units developed specifically for people with impaired or no vision (usually several hundred to several thousand dollars). The BrailleNote GPS, developed by Sendero, can provide detailed verbal information about street names, locations, and points of interest. The system can be customized according to user preference and the software is accessible. The hardware is expensive, however, and software updates must be purchased in the future in order to maintain accurate guidance. Most importantly, without additional hardware a blind pedestrian will not be able to obtain precise location information from GPS technology alone. For example, although someone who is legally blind may be able to tell that the sidewalk is four feet away, a pedestrian who has no light perception (i.e., is completely blind) could find herself lost in the middle of an intersection. Additionally, GPS does not work in interior environments and accuracy is degraded when the pedestrian is between tall buildings.

3.9 Smartphone Apps

Many smartphone navigation apps for people with a visual impairment give auditory directions; however, there are several apps that convey information via haptic or multimodal means. Blindsquare is similar to GPS apps intended for use by sighted individuals [27]. Using a smartphone’s onboard GPS technology, the application determines a user’s current location and incorporates information from other apps (e.g., Foursquare) about the surrounding environment. Blindsquare allows a user to mark and save locations for easy referencing. Most notably, the app allows the user to customize the filters so that only relevant information is presented. Although not a navigation app, TapTapSee can assist people with a visual impairment in identifying landmarks and signage [28]. Object recognition and identification can be used to help a traveler know his current location, the first step in a wayfinding task. Smartphone technology has allowed for multiple functions to be incorporated into a handheld device, such that innovative apps and services can be obtained. Using the app Be My Eyes, a person with a visual impairment can use her phone to call someone for help and establish a live video connection with someone who can assist her with anything from finding items in a grocery store to knowing the expiration date on a jar of mayonnaise [29]. ARIANNA, an app for independent indoor navigation, allows a user to use his cellphone to detect colored lines on the floor of a building, such as those found in hospitals [30]. The user inputs his desired destination in the app and scans the environment with his phone until the correct colored line is detected. While following the path, his phone vibrates as long as his phone camera can detect the appropriate colored line in the middle of the screen. A disadvantage of this app is that it will only work if the colored lines are already in place, not hidden by objects in the way, and not too worn to be useful. ARIANNA’s developers have suggested using infrared lines that can be detected by the IR sensors already in contemporary smartphones. Future smartphones may include more hardware, which could allow for the development of apps that will further assist in navigation, particularly for those with a visual impairment.
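The line-following feedback rule described for ARIANNA can be sketched as a simple decision function. This is a hedged illustration, not the app's implementation: it assumes some upstream detector has already located the horizontal position of the target-colored line in the camera frame, and the function name, parameters, and the 20% center band are hypothetical.

```python
from typing import Optional


def should_vibrate(line_x_px: Optional[float],
                   frame_width_px: int,
                   center_fraction: float = 0.2) -> bool:
    """Vibrate only while the detected line sits in the central band of the frame.

    line_x_px is the horizontal position of the detected line, or None when
    the line is not visible (hidden, worn, or out of view).
    center_fraction is the assumed width of the central band as a share of
    the frame width.
    """
    if line_x_px is None:
        return False
    center = frame_width_px / 2
    half_band = frame_width_px * center_fraction / 2
    return abs(line_x_px - center) <= half_band
```

The absence of vibration is itself the cue in this scheme: when the phone drifts off the line, or the line is occluded, feedback stops and the user scans until it resumes.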

4 Future Development of Navigational Technologies for Those with a Visual Impairment

The rapid development of navigational technologies (e.g., GPS units) and smartphones, coupled with advances in imaging technology and processing power, heralds an optimistic future for travelers, sighted or not. Unfortunately, our understanding of the human perceptual and cognitive systems does not advance as quickly. There is a need for behavioral research in every aspect of wayfinding: sensory perception, integration, and translation; the cognitive and attentional demands of orienting and navigating; and the usability of the technologies used. The need for research in these areas is highlighted by the issues faced by people with a visual impairment.

4.1 Vibrotactile Feedback for Navigation

Vibrotactile feedback has been demonstrated to be effective at helping people to navigate, even under stressful conditions [31, 32]. Prior research has demonstrated that feedback from vibrotactile stimuli can result in faster orienting than spatial language with no loss of accuracy, even when participants were placed in total darkness [33]. The optimal form of a vibrotactile navigational aid may be a wearable unit, which allows for directional information to be mapped onto the body. Route guidance can be arranged spatially on the user’s body while information about proximity is coded in another tactile dimension (e.g., amplitude) [34]. There is a need for more research investigating optimal locations on the body for receiving vibrotactile feedback for navigational purposes, especially with regard to the needs of people with a visual impairment (see Sect. 4.4 for a discussion) [35]. There is evidence to indicate that a multimodal navigational system can result in fewer direction and distance errors compared to unimodal interfaces, even if mental workload (e.g., demand on attentional resources) is increased [36].
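The coding scheme described above, direction mapped spatially onto the body and proximity carried by amplitude, can be sketched for a waist-worn belt. The sketch is illustrative only and is not taken from the cited studies: the eight evenly spaced tactors, the 50 m maximum distance, the 0.2 amplitude floor, and the choice to make closer targets feel stronger are all assumptions.

```python
def tactor_for_bearing(relative_bearing_deg: float, n_tactors: int = 8) -> int:
    """Index of the belt tactor nearest the target direction.

    Tactor 0 is assumed to sit at the navel, with indices increasing
    clockwise around the waist. Bearings are relative to the user's heading.
    """
    sector = 360.0 / n_tactors
    shifted = (relative_bearing_deg % 360.0) + sector / 2
    return int(shifted // sector) % n_tactors


def amplitude_for_distance(distance_m: float,
                           max_distance_m: float = 50.0,
                           min_amplitude: float = 0.2) -> float:
    """Normalized drive amplitude in [min_amplitude, 1.0]; closer is stronger (assumed)."""
    clamped = min(max(distance_m, 0.0), max_distance_m)
    return min_amplitude + (1.0 - min_amplitude) * (1.0 - clamped / max_distance_m)
```

For example, a waypoint directly to the user's right (a relative bearing of 90 degrees) activates tactor 2 of 8, and the amplitude rises toward 1.0 as the waypoint is approached.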

4.2 Types and Levels of Automation to Assist the User

The proliferation and continued use of navigational technologies suggests that such systems are beneficial. The ways in which automation can assist a person who is utilizing navigational systems are described in a seminal work by Parasuraman, Sheridan, and Wickens [37]. Returning to our earlier example of a person using a GPS unit to navigate, we see how automating the technology can benefit (or harm) her. When activated, a GPS unit automatically keeps track of the user’s position; the system is engaged in information acquisition (receiving positional information from satellites) and performing at a high level of automation (level 10). Information acquisition by technology is analogous to sensory perception in humans. Our user decides to input a destination (more information is acquired, but at a much lower level of automation: level 1) and the system computes an optimal path of travel (information analysis, similar to working memory in humans, occurs at level 7 of automation). The traveler approves of a route suggested by the system and the GPS unit provides route guidance. The above example is open to interpretation. For example, some GPS units may offer one suggestion for path of travel, others may offer several. By automating component processes of wayfinding (e.g., keeping track of position and heading), the mental workload of the traveler is reduced. Combining automation with assistive navigational aids can result in improved performance, reduced mental workload, and/or greater feelings of safety and satisfaction. More research is needed to determine how the different types and levels of automation can affect navigating with a visual impairment. Further, there is a need for research investigating how to optimally substitute sensory modalities, translate sensory information, and represent information in a multimodal way [36]. There is also a need for research investigating how information can be input into navigational systems via multimodal interaction.
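The GPS example above can be written down as a small data structure, which makes the "type" and "level" of each automated function explicit. This is a minimal sketch under stated assumptions: the class and function names are hypothetical, the three entries use the levels given in the text, and the names of the last two stages come from the cited model [37] rather than from the example itself.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Iterable


class Stage(Enum):
    """The four function types in the model cited as [37]."""
    INFORMATION_ACQUISITION = "information acquisition"
    INFORMATION_ANALYSIS = "information analysis"
    DECISION_SELECTION = "decision and action selection"
    ACTION_IMPLEMENTATION = "action implementation"


@dataclass(frozen=True)
class AutomatedFunction:
    description: str
    stage: Stage
    level: int  # 1 = lowest level of automation, 10 = highest

    def __post_init__(self) -> None:
        if not 1 <= self.level <= 10:
            raise ValueError("level must be between 1 and 10")


# The GPS example from the text, encoded with the levels stated there.
GPS_EXAMPLE = [
    AutomatedFunction("track position from satellite signals", Stage.INFORMATION_ACQUISITION, 10),
    AutomatedFunction("accept a destination entered by the user", Stage.INFORMATION_ACQUISITION, 1),
    AutomatedFunction("compute an optimal path of travel", Stage.INFORMATION_ANALYSIS, 7),
]


def highest_level_by_stage(functions: Iterable[AutomatedFunction]) -> Dict[Stage, int]:
    """Map each stage that appears to the highest automation level used in it."""
    result: Dict[Stage, int] = {}
    for function in functions:
        result[function.stage] = max(result.get(function.stage, 0), function.level)
    return result
```

Laying a device out this way shows at a glance which stages are left to the traveler, which is one way to compare candidate designs before behavioral testing.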

4.3 Further Design Considerations

Considerations for the design of assistive navigational aids must take into account the population for which the device is being developed. An audible GPS unit would not benefit someone who is deaf and a visual display would not be advantageous, or usable, for someone who is completely blind. Despite a wide range of disabilities and functioning sensory systems, there are several design characteristics that have been identified as being beneficial for everyone [3]. First, a navigation system should be able to identify changes in the environment and notify the user. Changes in the environment can be incorporated via software updates or camera-based hardware coupled with an AI. A second consideration for device design is the need to reduce the number of devices people with a visual impairment have to carry to accommodate their impairment. One method for ameliorating the problem is to incorporate new technologies into existing devices that people with a visual impairment are already carrying (e.g., smartphones). Furthermore, navigational aids, or any assistive device, should not increase the social stigma of having a disability by drawing unwanted attention [38]. Devices should be customizable; as a user’s needs change (e.g., deteriorating vision) the device can continue to be used as an aid without having to be replaced and without the user having to learn a new device. Finally, incorporating multimodal interaction into assistive devices, particularly navigational aids, is a critical step toward ensuring that these devices remain useful, usable, and enjoyable.

4.4 Accommodating for Aging, Multiple Impairments, Concomitant Medical Conditions

Many of the studies involving sensory substitution and multimodal interfaces involve young adults with no reported physical or mental disabilities [31–34]. For the purposes of this discussion, we have focused on visual impairments and presumed that limited or no vision was the only difference between people with a visual impairment and people without a disability. However, a visual impairment is oftentimes the result of a concomitant medical condition (e.g., diabetic retinopathy, stroke) that can further impair someone’s ability to navigate, be mobile, and/or use technological aids. The vast majority of people with a visual impairment are not born with a disability; indeed, the opposite is true. Vision loss is mostly seen in adults older than 50 years of age. Aging can result in decrements in cognitive and physical ability. As such, hearing loss often occurs with vision loss and it is not uncommon for a visual impairment to develop alongside a tactile impairment. In no other population is the necessity of having multimodal technologies more apparent.

4.5 Customization to Account for Individual Differences

As mentioned in Sect. 4.3, navigational technologies should be customizable. This is not just to account for individual differences in sensory modalities, but also user preferences. Assistive technologies are abandoned for numerous reasons, even if a benefit is still provided through use of the technology [39–41]. Technological devices that allow for a desirable degree of individual customization, including the ability to operate the device via multimodal means, are likely to be more useful and desirable to users [42].

4.6 Advances in Multimodal Technology Can Benefit Us All

We have focused on how sensory substitution and multimodal interaction have been employed in navigational aids for people with a visual impairment; however, technologies and environments that are more accessible for those with a disability are accessible for everyone. Multimodal interaction allows for a greater degree of interaction, freedom of choice, and user preference when interacting with a system. More research is needed in every aspect of multimodal interfaces; however, there is a particular need for such research with regard to individuals with a physical or cognitive impairment.

References

1. Garmin. http://www.garmin.com/en-US

2. Consumer Reports. http://www.consumerreports.org/cro/magazine/2015/04/cars-that-can-save-your-life/index.htm

3. Quinones, P.A., Greene, T., Yang, R., Newman, M.: Supporting visually impaired navigation: a needs-finding study. In: CHI 2011 Extended Abstracts on Human Factors in Computing Systems, pp. 1645–1650. ACM (2011)

4. Thinus-Blanc, C., Gaunet, F.: Representation of space in blind persons: vision as a spatial sense? Psychol. Bull. 121(1), 20 (1997)

5. Rieser, J.J., Guth, D.A., Hill, E.W.: Mental processes mediating independent travel: implications for orientation and mobility. Vis. Impair. Blindness 76, 213–218 (1982)

6. Sarter, N.: Multiple-resource theory as a basis for multimodal interface design: success stories, qualifications, and research needs. In: Attention: From Theory to Practice, pp. 187–195 (2006)

7. Hancock, P.A., Oron-Gilad, T., Szalma, J.L.: Elaborations of the multiple-resource theory of attention. In: Kramer, A.F., Wiegmann, D.A.K. (eds.) Attention: From Theory to Practice, pp. 45–56 (2006)

8. Frank, A.U., Mark, D.M.: Language issues for geographical information systems (1991)

9. Brabyn, J.: A Review of Mobility Aids and Means of Assessment. Martinus Nijhoff, Boston (1985)

10. Kunimi, M., Kojima, H.: The effects of processing speed and memory span on working memory. GeroPsych J. Gerontopsychology Geriatr. Psychiatry 27(3), 109 (2014)

11. Mangione, C.M., Phillips, R.S., Seddon, J.M., Lawrence, M.G., Cook, E.F., Dailey, R., Goldman, L.: Development of the ‘Activities of Daily Vision Scale’: a measure of visual functional status. Med. Care, 1111–1126 (1992)

12. Kane, S.K., Jayant, C., Wobbrock, J.O., Ladner, R.E.: Freedom to roam: a study of mobile device adoption and accessibility for people with visual and motor disabilities. In: Proceedings of the 11th International ACM SIGACCESS Conference on Computers and Accessibility, pp. 115–122. ACM (2009)

13. Blasch, B.B., Welsh, R.L., Wiener, W.R.: Foundations of Orientation and Mobility, 2nd edn. AFB Press, New York (1997)

14. Blasch, B., Stuckey, K.: Accessibility and mobility of persons who are visually impaired: a historical analysis. J. Vis. Impairment Blindness (JVIB) 89(5) (1995)

15. Giudice, N.A., Legge, G.E.: Blind navigation and the role of technology. Eng. Handb. Smart Technol. Aging Disabil. Independence, 479–500 (2008)

16. Sound Foresight. http://www.soundforesight.co.uk/

17. Perceptual Alternatives. http://www.sonicpathfinder.org/

18. Williams, M.A., Hurst, A., Kane, S.K.: Pray before you step out: describing personal and situational blind navigation behaviors. In: Proceedings of the 15th International ACM SIGACCESS Conference on Computers and Accessibility, p. 28. ACM, October 2013

19. Nurion-Raycal. http://www.nurion.net/LC.html

20. Wicab. http://www.wicab.com/

21. Bach-y-Rita, P.: Brain Mechanisms in Sensory Substitution. Academic Press, New York (1972)

22. Bach-y-Rita, P., Tyler, M.E., Kaczmarek, K.A.: Seeing with the brain. Int. J. Hum. Comput. Interact. 15(2), 285–295 (2003)

23. Novich, S.D., Eagleman, D.M.: A vibrotactile sensory substitution device for the deaf and profoundly hearing impaired. In: Haptics Symposium (HAPTICS), p. 1. IEEE, February 2014

24. Crandall, W., Brabyn, J., Bentzen, B.L., Myers, L.: Remote infrared signage evaluation for transit stations and intersections. J. Rehab. Res. Devel. 36(4), 341–355 (1999)

25. CINVESTAV. http://www.cinvestav.mx/

26. Sendero. http://www.senderogroup.com/

27. Blindsquare. http://blindsquare.com/

28. TapTapSee. http://www.taptapseeapp.com/

29. Be My Eyes. http://www.bemyeyes.org/

30. Gallo, P., Tinnirello, I., Giarré, L., Garlisi, D., Croce, D., Fagiolini, A.: ARIANNA: pAth recognition for indoor assisted navigation with augmented perception (2013). arXiv preprint arXiv:1312.3724

31. Merlo, J.L., Terrence, P.I., Stafford, S., Gilson, R., Hancock, P.A., Redden, E.S., White, T.L.: Communicating through the use of vibrotactile displays for dismounted and mounted soldiers (2006)

32. Merlo, J.L., Stafford, S., Gilson, R., Hancock, P.A.: The effects of physiological stress on tactile communication. In: Proceedings of the Human Factors and Ergonomics Society Annual Meeting, vol. 50, no. 16, pp. 1562–1566. Sage Publications, October 2006

33. Faugloire, E., Lejeune, L.: Evaluation of heading performance with vibrotactile guidance: the benefits of information–movement coupling compared with spatial language. J. Exp. Psychol. Appl. 20(4), 397 (2014)

34. van Erp, J.B., van Veen, H.A., Jansen, C., Dobbins, T.: Waypoint navigation with a vibrotactile waist belt. ACM Trans. Appl. Percept. (TAP) 2(2), 106–117 (2005)

35. Machida, T., Dim, N.K., Ren, X.: Suitable body parts for vibration feedback in walking navigation systems. In: Proceedings of the Third International Symposium of Chinese CHI on ZZZ, pp. 32–36. ACM, April 2015

36. Fujimoto, E., Turk, M.: Non-visual navigation using combined audio music and haptic cues. In: Proceedings of the 16th International Conference on Multimodal Interaction, pp. 411–418. ACM, November 2014

37. Parasuraman, R., Sheridan, T.B., Wickens, C.D.: A model for types and levels of human interaction with automation. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 30(3), 286–297 (2000)

38. Shinohara, K., Wobbrock, J.O.: In the shadow of misperception: assistive technology use and social interactions. In: CHI 2011 - SIGCHI Conference on Human Factors in Computing Systems, pp. 705–714 (2011)

39. Riemer-Reiss, M.L., Wacker, R.R.: Factors associated with assistive technology discontinuance among individuals with disabilities. J. Rehabil. 66(3), 44 (2000)

40. Jutai, J., Day, H.: Psychosocial impact of assistive devices scale (PIADS). Technol. Disabil. 14(3), 107–111 (2002)

41. Federici, S., Borsci, S.: The use and non-use of assistive technology in Italy: preliminary data. In: 11th AAATE Conference: Everyday Technology for Independence and Care (2011)

42. Oviatt, S.: Multimodal interfaces. Hum. Comput. Interact. Handb. Fundam. Evolving Technol. Emerg. Appl. 14, 286–304 (2003)

Acknowledgements

We extend our gratitude to Brian Michaels of Florida Blind Services and the staff of Lighthouse Central Florida for their assistance with understanding the wayfinding issues faced by people who have a visual impairment. Our thanks go to Jerry Aubert (UCF College of Medicine), Michael Judith (Innovative Space Technologies, LLC), David Metcalf (UCF Institute for Simulation and Training), and the Mixed Emerging Technology Integration Lab at the Institute for Simulation and Training. We are grateful for their support.

Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Schwartz, M., Benkert, D. (2016). Navigating with a Visual Impairment: Problems, Tools and Possible Solutions. In: Schmorrow, D., Fidopiastis, C. (eds) Foundations of Augmented Cognition: Neuroergonomics and Operational Neuroscience. AC 2016. Lecture Notes in Computer Science, vol 9744. Springer, Cham. https://doi.org/10.1007/978-3-319-39952-2_36

DOI: https://doi.org/10.1007/978-3-319-39952-2_36

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-39951-5

Online ISBN: 978-3-319-39952-2

eBook Packages: Computer Science, Computer Science (R0)