Abstract

Teaching material on scientific computing has traditionally been very focused on the mathematics and the applications, while details on how the computer is programmed to solve the problems have received little attention. Many end up writing as simple programs as possible, without being aware of much useful computer science technology that would increase the fun, efficiency, and reliability of their scientific computing activities.

This chapter demonstrates a series of good practices and tools from modern computer science, using the simple mathematical problem \(u^{\prime}=-au\), \(u(0)=I\), such that we minimize the mathematical details and can go more in depth with implementations. The goal is to increase the technological quality of computer programming and make it match the more well-established quality of the mathematics of scientific computing.

The conventions and techniques outlined here will save you a lot of time when you incrementally extend software over time from simpler to more complicated problems. In particular, you will benefit from many good habits:

- new code is added in a modular fashion to a library (modules),
- programs are run through convenient user interfaces,
- it takes one quick command to let all your code undergo heavy testing,
- tedious manual work with running programs is automated,
- your scientific investigations are reproducible,
- scientific reports with top quality typesetting are produced both for paper and electronic devices.

5.1 Implementations with Functions and Modules

All previous examples in this book have implemented numerical algorithms as Python functions. This is a good style that readers are expected to adopt. However, this author has experienced that many students and engineers are inclined to make ‘‘flat’’ programs, i.e., a sequence of statements without any use of functions, just to get the problem solved as quickly as possible. Since this programming style is so widespread, especially among people with MATLAB experience, we shall look at the weaknesses of flat programs and show how they can be refactored into more reusable programs based on functions.

5.1.1 Mathematical Problem and Solution Technique

We address the differential equation problem

\[ u^{\prime}(t) = -au(t),\quad t\in(0,T], \tag{Ch5.E1} \]

\[ u(0) = I, \tag{Ch5.E2} \]

where \(a\), \(I\), and \(T\) are prescribed parameters, and \(u(t)\) is the unknown function to be estimated. This mathematical model is relevant for physical phenomena featuring exponential decay in time, e.g., vertical pressure variation in the atmosphere, cooling of an object, and radioactive decay.

As we learned in Sect. 1.1.2, the time domain is discretized with points \(0=t_{0}<t_{1}<\cdots<t_{N_{t}}=T\), here with a constant spacing \(\Delta t\) between the mesh points: \(\Delta t=t_{n}-t_{n-1}\), \(n=1,\ldots,N_{t}\). Let \(u^{n}\) be the numerical approximation to the exact solution at \(t_{n}\). A family of popular numerical methods is provided by the θ scheme,

\[ u^{n+1} = \frac{1-(1-\theta)a\Delta t}{1+\theta a\Delta t}\,u^{n}, \tag{Ch5.E3} \]

for \(n=0,1,\ldots,N_{t}-1\). This formula produces the Forward Euler scheme when \(\theta=0\), the Backward Euler scheme when \(\theta=1\), and the Crank–Nicolson scheme when \(\theta=1/2\).

5.1.2 A First, Quick Implementation

Solving (Ch5.E3) in a program is very straightforward: just make a loop over n and evaluate the formula. The \(u(t_{n})\) values for discrete n can be stored in an array. This makes it easy to also plot the solution. It would be natural to also add the exact solution curve \(u(t)=Ie^{-at}\) to the plot.

Many have programming habits that would lead them to write a simple program like this:
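The listing itself is not reproduced in this text. A flat program of the kind described, using the names that the critique refers to (y, A, and N), could look like the sketch below. The parameter values and the printed error norm are illustrative assumptions, and the plotting statements are left out to keep the sketch short.

```python
# Hypothetical reconstruction of a "flat" program. It is deliberately left
# without further comments, since that is one of the flaws under discussion.
from numpy import zeros, linspace, exp, sqrt, sum

A = 1
a = 2
T = 4
dt = 0.2
N = int(round(T/dt))
y = zeros(N+1)
t = linspace(0, T, N+1)
theta = 1
y[0] = A
for n in range(0, N):
    y[n+1] = (1 - (1-theta)*a*dt)/(1 + theta*a*dt)*y[n]

y_e = A*exp(-a*t)
error = y_e - y
E = sqrt(dt*sum(error**2))
print('Norm of the error: %.3E' % E)
```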

This program is easy to read, and as long as it is correct, many will claim that it has sufficient quality. Nevertheless, the program suffers from two serious flaws:

1. The notation in the program does not correspond exactly to the notation in the mathematical problem: the solution is called y and corresponds to \(u\) in the mathematical description, the variable A corresponds to the mathematical parameter \(I\), and N in the program is called \(N_{t}\) in the mathematics.
2. There are no comments in the program.

These kinds of flaws quickly become crucial if present in code for complicated mathematical problems and code that is meant to be extended to other problems.

We also note that the program is flat in the sense that it does not contain functions. Usually, this is a bad habit, but let us first correct the two mentioned flaws.

5.1.3 A More Decent Program

A code of better quality arises from fixing the notation and adding comments:
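As a minimal sketch of what such an improved program could look like (not the original listing), the version below uses u, I, and Nt to match the mathematics and adds comments on the ideas. The parameter values are arbitrary, and plotting is again omitted.

```python
import numpy as np

I = 1          # initial condition u(0)
a = 2          # decay rate
T = 4          # end of the time interval
dt = 0.2       # time step
Nt = int(round(T/dt))            # number of time intervals
u = np.zeros(Nt+1)               # u[n] approximates u(t_n)
t = np.linspace(0, T, Nt+1)      # mesh points t_0, ..., t_Nt

theta = 1      # 1: Backward Euler, 0: Forward Euler, 0.5: Crank-Nicolson
u[0] = I
for n in range(0, Nt):
    # theta scheme: advance the solution one time step
    u[n+1] = (1 - (1-theta)*a*dt)/(1 + theta*a*dt)*u[n]

# Compare with the exact solution at the mesh points
u_e = I*np.exp(-a*t)
E = np.sqrt(dt*np.sum((u_e - u)**2))   # discrete L2 norm of the error
print('Norm of the error: %.3E' % E)
```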

Comments in a program

There is obviously not just one way to comment a program, and opinions may differ as to what code should be commented. The guiding principle is, however, that comments should make the program easy to understand for the human eye. Do not comment obvious constructions, but focus on ideas ("what happens in the next statements?") and on explaining code that can be hard to follow.

Refactoring into functions

At first sight, our updated program seems like a good starting point for playing around with the mathematical problem: we can just change parameters and rerun. Although such edit-and-rerun sessions are good for initial exploration, one will soon extend the experiments and start developing the code further. Say we want to compare \(\theta=0,1,0.5\) in the same plot. This extension requires changes all over the code and quickly leads to errors. To do something serious with this program, we have to break it into smaller pieces, make sure each piece is well tested, and ensure that the program is sufficiently general to be reused in new contexts without changes. The next natural step is therefore to isolate the numerical computations and the visualization in separate Python functions. Such a rewrite of code, which does not essentially change the functionality but only improves the quality of the code, is known as refactoring. After quickly putting together and testing a program, the next step is to refactor it so it becomes better prepared for extensions.

Program file vs IDE vs notebook

There are basically three different ways of working with Python code:

1. One writes the code in a file, using a text editor (such as Emacs or Vim) and runs it in a terminal window.
2. One applies an Integrated Development Environment (the simplest is IDLE, which comes with standard Python) containing a graphical user interface with an editor and an element where Python code can be run.
3. One applies the Jupyter Notebook (previously known as IPython Notebook), which offers an interactive environment for Python code where plots are automatically inserted after the code, see Fig. 5.1.

It appears that methods 1 and 2 are quite equivalent, but the notebook encourages more experimental code and therefore also flat programs. Consequently, notebook users will normally need to think more about refactoring code and increasing the use of functions after initial experimentation.

5.1.4 Prefixing Imported Functions by the Module Name

Import statements of the form from module import * import all functions and variables in module.py into the current file. This is often referred to as "import star", and many find this convenient, but it is not considered good programming style in Python. For example, after from numpy import * and from matplotlib.pyplot import *, we get mathematical functions like sin and exp as well as MATLAB-style functions like linspace and plot, which can be called by these well-known names. Unfortunately, it sometimes becomes confusing to know where a particular function comes from, i.e., what modules you need to import. Is a desired function from numpy or matplotlib.pyplot? Or is it our own function? These questions are easy to answer if functions in modules are prefixed by the module name. Doing an additional from math import * is particularly harmful: now sin, cos, and other mathematical functions are imported and their names hide those previously imported from numpy. That is, sin is now a sine function that accepts a float argument, not an array.

Doing the import such that module functions must have a prefix is generally recommended: after import numpy and import matplotlib.pyplot, functions are called as numpy.linspace and matplotlib.pyplot.plot.

The modules numpy and matplotlib.pyplot are frequently used, and since their full names are quite tedious to write, two standard abbreviations have evolved in the Python scientific computing community: import numpy as np and import matplotlib.pyplot as plt.

The downside of prefixing functions by the module name is that mathematical expressions like \(e^{-at}\sin(2\pi t)\) get cluttered with module names, as in np.exp(-a*t)*np.sin(2*np.pi*t).

Such an expression looks like exp(-a*t)*sin(2*pi*t) in most other programming languages. Similarly, np.linspace and plt.plot look less familiar to people who are used to MATLAB and who have not adopted Python's prefix style. Whether to do from module import * or import module depends on personal taste and the problem at hand. In these writings we use from module import * in more basic, shorter programs where similarity with MATLAB could be an advantage. However, in reusable modules we prefix calls to module functions by the module name, or do explicit import of the needed functions, as in from numpy import exp, sum, sqrt.

Prefixing module functions or not?

It can be advantageous to do a combination: mathematical functions in formulas are imported without prefix, while module functions in general are called with a prefix. For the numpy package we can do import numpy as np together with from numpy import exp, sum, sqrt,

such that mathematical expressions can apply exp, sum, and sqrt and hence look as close to the mathematical formulas as possible (without a disturbing prefix). Other calls to numpy functions are done with the prefix, as in np.linspace.
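The import styles discussed in this section can be compared in a small sketch. The array t and the value of a are arbitrary illustrations; the last part shows why a sine function from the math module must not hide the one from numpy.

```python
import math
import numpy as np
from numpy import exp, sin, pi   # selected functions without prefix

a = 2.0
t = np.linspace(0, 1, 5)         # general numpy calls keep the np prefix

# Close to the mathematical formula e^{-at} sin(2 pi t); works on arrays
f = exp(-a*t)*sin(2*pi*t)

# The fully prefixed version gives the same result but is more cluttered
f_prefixed = np.exp(-a*t)*np.sin(2*np.pi*t)

# math.sin accepts a single number only. If "from math import *" had hidden
# numpy's sin, the array expression above would fail like this:
try:
    math.sin(t)
except TypeError as e:
    print('math.sin cannot handle arrays:', e)
```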

5.1.5 Implementing the Numerical Algorithm in a Function

The solution formula (Ch5.E3) is completely general and should be available as a Python function solver with all input data as function arguments and all output data returned to the calling code. With this solver function we can solve all types of problems (Ch5.E1)–(Ch5.E2) by an easy-to-read one-line statement:

Refactoring the numerical method in the previous flat program in terms of a solver function and prefixing calls to module functions by the module name leads to this code:
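The listing is not reproduced in this text. A sketch of such a solver function, built directly on (Ch5.E3), might read as follows. The return value (u, t) and the sample arguments in the call are assumptions of this sketch.

```python
import numpy as np

def solver(I, a, T, dt, theta):
    """Solve u'=-a*u, u(0)=I, for t in (0,T] with steps of dt."""
    dt = float(dt)               # avoid integer division (Python 2)
    Nt = int(round(T/dt))        # number of time intervals
    T = Nt*dt                    # adjust T to fit time step dt
    u = np.zeros(Nt+1)           # array of u[n] values
    t = np.linspace(0, T, Nt+1)  # time mesh

    u[0] = I                     # initial condition
    for n in range(0, Nt):       # n = 0, 1, ..., Nt-1
        u[n+1] = (1 - (1-theta)*a*dt)/(1 + theta*a*dt)*u[n]
    return u, t

# One-line solution of a particular problem (Crank-Nicolson here)
u, t = solver(I=1, a=2, T=4, dt=0.2, theta=0.5)
```

All input enters through the arguments and all output leaves through the return statement, so the function can be called from any program without editing its body.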

Tip: Always use a doc string to document a function!

Python has a convention for documenting the purpose and usage of a function in a doc string: simply place the documentation in a one- or multi-line triple-quoted string right after the function header.

Be careful with unintended integer division!

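Note that in the solver function we explicitly convert dt to a float object. In Python 2, the updating formula for u[n+1] may otherwise evaluate to zero because of integer division when theta, a, and dt are integers! In Python 3, the / operator always performs float division, so the conversion is harmless but no longer required.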

5.1.6 Do not Have Several Versions of a Code

One of the most serious flaws in computational work is to have several slightly different implementations of the same computational algorithms lying around in various program files. This is very likely to happen, because busy scientists often want to test a slight variation of a code to see what happens. A quick copy-and-edit does the task, but such quick hacks tend to survive. When a real correction is needed in the implementation, it is difficult to ensure that the correction is done in all relevant files. In fact, this is a general problem in programming, which has led to an important principle.

The DRY principle: Don’t repeat yourself!

When implementing a particular functionality in a computer program, make sure this functionality and its variations are implemented in just one piece of code. That is, if you need to revise the implementation, there should be one and only one place to edit. It follows that you should never duplicate code (don’t repeat yourself!), and code snippets that are similar should be factored into one piece (function) and parameterized (by function arguments).

The DRY principle means that our solver function should not be copied to a new file if we need some modifications. Instead, we should try to extend solver such that the new and old needs are met by a single function. Sometimes this process requires a new refactoring, but having a numerical method in one and only one place is a great advantage.

5.1.7 Making a Module

As soon as you start making Python functions in a program, you should make sure the program file fulfills the requirement of a module. This means that you can import and reuse your functions in other programs too. For example, if our solver function resides in a module file decay.py, another program may reuse the function either by from decay import solver,

or by the slightly different import statement import decay, combined with a subsequent prefix of the function name by the name of the module, as in decay.solver.

The requirements for a program file to also qualify for a module are simple:

1. The filename without .py must be a valid Python variable name.
2. The main program must be executed (through statements or a function call) in the test block.

The test block is normally placed at the end of a module file and starts with the test if __name__ == '__main__':

When the module file is executed as a stand-alone program, the if test is true and the indented statements are run. If the module file is imported, however, __name__ equals the module name and the test block is not executed.

To demonstrate the difference, consider the trivial module file hello.py with one function and a call to this function as main program:

Without the test block, the code reads

With this latter version of the file, any attempt to import hello will, at the same time, execute the call hello() and hence write ‘‘Hello, World!’’ to the screen. Such output is not desired when importing a module! To make import and execution of code independent for another program that wants to use the function hello, the module hello must be written with a test block. Furthermore, running the file itself as python hello.py will make the block active and lead to the desired printing.
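The hello.py listings are not reproduced in this text. A sketch of the version with a test block is given below; the exact function body is an assumption. Removing the if line and unindenting the call gives the version without a test block.

```python
# hello.py (hypothetical file contents)

def hello():
    """Write a greeting to the screen."""
    print('Hello, World!')

if __name__ == '__main__':
    # Executed for "python hello.py", but skipped for "import hello",
    # since __name__ then equals 'hello'
    hello()
```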

All coming functions are placed in a module!

The many functions to be explained in the following text are put in one module file decay.py.

What more than the solver function is needed in our decay module to do everything we did in the previous, flat program? We need import statements for numpy and matplotlib as well as another function for producing the plot. It can also be convenient to put the exact solution in a Python function. Our module decay.py then looks like this:

We could consider doing from numpy import exp, sqrt, sum to make the mathematical expressions with these functions closer to the mathematical formulas, but here we employed the prefix since the formulas are so simple and easy to read.

This module file does exactly the same as the previous, flat program, but now it becomes much easier to extend the code with more functions that produce other plots, other experiments, etc. Even more important, though, is that the numerical algorithm is coded and tested once and for all in the solver function, and any need to solve the mathematical problem is a matter of one function call.

5.1.8 Example on Extending the Module Code

Let us specifically demonstrate one extension of the flat program in Sect. 5.1.2 that would require substantial editing of the flat code (Sect. 5.1.3), while in a structured module (Sect. 5.1.7), we can simply add a new function without affecting the existing code.

Our example that illustrates the extension is to make a comparison between the numerical solutions for various schemes (θ values) and the exact solution:

Wait a minute!

Look at the flat program in Sect. 5.1.2, and try to imagine which edits are required to solve this new problem.

With the solver function at hand, we can simply create a function with a loop over theta values and add the necessary plot statements:
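A sketch of such a function follows. It is not the original listing: the default parameter values, the output filename, and the helper u_exact are assumptions, and a compact copy of solver is included only so that the sketch runs on its own.

```python
import numpy as np

def solver(I, a, T, dt, theta):
    """Compact copy of the theta scheme solver (see Sect. 5.1.5)."""
    dt = float(dt)
    Nt = int(round(T/dt))
    u = np.zeros(Nt+1)
    t = np.linspace(0, Nt*dt, Nt+1)
    u[0] = I
    for n in range(Nt):
        u[n+1] = (1 - (1-theta)*a*dt)/(1 + theta*a*dt)*u[n]
    return u, t

def u_exact(t, I, a):
    """Exact solution u(t) = I*exp(-a*t)."""
    return I*np.exp(-a*t)

def experiment_compare_schemes(I=1, a=2, T=4, dt=0.4, filename='compare.png'):
    """Plot numerical solutions for three theta values and the exact one."""
    # Imported here so the numerical functions work without matplotlib
    import matplotlib.pyplot as plt
    legends = []
    for theta in [0, 1, 0.5]:
        u, t = solver(I, a, T, dt, theta)
        plt.plot(t, u, '--o')
        legends.append('theta=%g' % theta)
    t_e = np.linspace(0, T, 1001)        # fine mesh for the exact solution
    plt.plot(t_e, u_exact(t_e, I, a), 'b-')
    legends.append('exact')
    plt.legend(legends, loc='upper right')
    plt.xlabel('t')
    plt.ylabel('u')
    plt.savefig(filename)
```

The existing solver is reused unchanged; the only new code is the loop over theta values and the plot statements.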

A call to this experiment_compare_schemes function must be placed in the test block, or you can run the program from IPython instead:

We do not present how the flat program from Sect. 5.1.3 must be refactored to produce the desired plots, but simply state that the danger of introducing bugs is significantly larger than when just writing an additional function in the decay module.

5.1.9 Documenting Functions and Modules

We have already emphasized the importance of documenting functions with a doc string (see Sect. 5.1.5). Now it is time to show how doc strings should be structured in order to take advantage of the documentation utilities in the numpy module. The idea is to follow a convention that in itself makes a good pure text doc string in the terminal window and at the same time can be translated to beautiful HTML manuals for the web.

The conventions for numpy style doc strings are well documented, so here we just present a basic example that the reader can adopt. Input arguments to a function are listed under the heading Parameters, while returned values are listed under Returns. It is a good idea to also add an Examples section on the usage of the function. More complicated software may have additional sections, see pydoc numpy.load for an example. The markup language available for doc strings is Sphinx-extended reStructuredText. The example below shows typical constructs: 1) how inline mathematics is written with the :math: directive, 2) how arguments to the functions are referred to using single backticks (inline monospace font for code applies double backticks), and 3) how arguments and return values are listed with types and explanation.
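The example listing is not reproduced in this text. The following sketch shows a numpy-style doc string with the three constructs mentioned above, applied to the solver function; the wording of the descriptions and the doctest values are assumptions of this sketch.

```python
import numpy as np

def solver(I, a, T, dt, theta):
    r"""
    Solve :math:`u'=-au` with :math:`u(0)=I` for :math:`t \in (0,T]`
    with steps of `dt` and the method implied by `theta`.

    Parameters
    ----------
    I : float
        Initial condition.
    a : float
        Parameter in the differential equation.
    T : float
        End time of the simulation.
    dt : float
        Time step.
    theta : float
        Scheme parameter: ``theta=0`` gives Forward Euler, ``theta=1``
        Backward Euler, and ``theta=0.5`` Crank-Nicolson.

    Returns
    -------
    u : numpy.ndarray
        Solution values at the mesh points.
    t : numpy.ndarray
        Mesh points.

    Examples
    --------
    >>> u, t = solver(I=1, a=1, T=1, dt=0.5, theta=1)
    >>> len(u)
    3
    """
    dt = float(dt)
    Nt = int(round(T/dt))
    u = np.zeros(Nt+1)
    t = np.linspace(0, Nt*dt, Nt+1)
    u[0] = I
    for n in range(Nt):
        u[n+1] = (1 - (1-theta)*a*dt)/(1 + theta*a*dt)*u[n]
    return u, t
```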

If you follow such doc string conventions in your software, you can easily produce nice manuals that meet the standard expected within the Python scientific computing community.

Sphinx requires quite a number of manual steps to prepare a manual, so it is recommended to use a premade script to automate the steps. (By default, the script generates documentation for all *.py files in the current directory. You need to do a pip install of sphinx and numpydoc to make the script work.) Figure 5.2 provides an example of what the above doc strings look like when Sphinx has transformed them to HTML.

5.1.10 Logging Intermediate Results

Sometimes one may wish that a simulation program could write out intermediate results for inspection. This could be accomplished by a (global) verbose variable and code like

The professional way to report intermediate results and problems is, however, to use a logger. This is an object that writes messages to a log file. The messages are classified by level, such as debug, info, and warning.

Introductory example

Here is a simple example of defining a logger, using Python's logging module:
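The listing is not reproduced in this text. A sketch consistent with the description (log file myprog.log, level info, one message at each of the five levels) could be as follows. The message texts and the date format are assumptions, and force=True (Python 3.8 and later) is added only so that the configuration takes effect even if logging was configured earlier in the same session.

```python
import logging

logging.basicConfig(
    filename='myprog.log',            # log file
    filemode='w',                     # overwrite any existing file
    level=logging.INFO,               # threshold: info and more severe
    format='%(asctime)s - %(levelname)s - %(message)s',
    datefmt='%Y.%m.%d %I:%M:%S %p',   # time.strftime rules
    force=True)

logging.info('Here is some general info.')
logging.warning('Here is a warning.')
logging.debug('Here is some debugging info.')   # below the threshold
logging.critical('Dividing by zero!')
logging.error('Encountered an error.')
```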

Running this program gives the following output in the log file myprog.log:

The logger has different levels of messages, ordered by decreasing severity as critical, error, warning, info, and debug. The level argument to logging.basicConfig sets the threshold and thereby determines what the logger will print to the file: all messages at the specified level and at more severe levels are printed. For example, in the above example we set the level to be info, and therefore the critical, error, warning, and info messages were printed, but not the debug message. Setting level to debug (logging.DEBUG) prints all messages, while level critical prints only the critical messages.

The filemode argument is set to w such that any existing log file is overwritten (the default is a, which means append new messages to an existing log file, but this is seldom what you want in mathematical computations).

The messages are preceded by the date and time and the level of the message. This output is governed by the format argument: asctime is the date and time, levelname is the name of the message level, and message is the message itself. Setting format='%(message)s' ensures that just the message and nothing more is printed on each line. The datefmt string specifies the formatting of the date and time, using the rules of the time.strftime function.

Using a logger in our solver function

Let us have a logger write out intermediate results and some debugging information in the solver function. Such messages are useful for monitoring the simulation and for debugging it, respectively.

The application code that calls solver_with_logging needs to configure the logger. The decay module offers a default configure function:

If the user of a library does not configure a logger or call this configure function, the library should prevent error messages by declaring a default logger that does nothing:
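The three listings referred to above are not reproduced in this text. The sketch below combines the ideas: a solver that logs at the info and debug levels, a configure function, and a do-nothing default handler. It uses a named logger 'decay' and the function name configure_basic_logger, both of which are assumptions of this sketch rather than the module's confirmed code.

```python
import logging
import numpy as np

# Library default: a handler that does nothing, so no output or complaints
# appear if the application never configures logging
logger = logging.getLogger('decay')
logger.addHandler(logging.NullHandler())

def solver_with_logging(I, a, T, dt, theta):
    """Solve u'=-a*u, u(0)=I, for t in (0,T] with steps of dt."""
    dt = float(dt)
    Nt = int(round(T/dt))
    T = Nt*dt
    u = np.zeros(Nt+1)
    t = np.linspace(0, T, Nt+1)
    logger.debug('solver: dt=%g, Nt=%d, T=%g', dt, Nt, T)

    u[0] = I
    for n in range(0, Nt):
        numerator = 1 - (1-theta)*a*dt
        denominator = 1 + theta*a*dt
        u[n+1] = numerator/denominator*u[n]
        logger.info('u[%d]=%g', n+1, u[n+1])          # monitoring
        logger.debug('%g/%g', numerator, denominator)  # debugging
    return u, t

def configure_basic_logger(filename='decay.log', level=logging.INFO):
    """Send messages from the 'decay' logger to a file (overwritten)."""
    handler = logging.FileHandler(filename, mode='w')
    handler.setFormatter(logging.Formatter(
        '%(asctime)s - %(levelname)s - %(message)s',
        datefmt='%Y.%m.%d %I:%M:%S %p'))
    logger.addHandler(handler)
    logger.setLevel(level)
```

An application would call configure_basic_logger() once before calling solver_with_logging; passing level=logging.DEBUG also records the numerator and denominator.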

We can run the new solver function with logging in a shell:

During this execution, each logging message is appended to the log file. Suppose we add some pause (time.sleep(2)) at each time level such that the execution takes some time. In another terminal window we can then monitor the evolution of decay.log and the simulation by the tail -f Unix command:

Especially in simulations where each time step demands considerable CPU time (minutes, hours), it can be handy to monitor such a log file to see the evolution of the simulation.

If we want to look more closely into the numerator and denominator of the formula for \(u^{n+1}\), we can change the logging level to level=logging.DEBUG and get output in decay.log like

Logging can be much more sophisticated than shown above. One can, e.g., output critical messages to the screen and all messages to a file. This requires more code as demonstrated in the Logging Cookbook.

5.2 User Interfaces

It is good programming practice to let programs read input from some user interface, rather than requiring users to edit parameter values in the source code. With effective user interfaces it becomes easier and safer to apply the code for scientific investigations and in particular to automate large-scale investigations by other programs (see Sect. 5.6).

Reading input data can be done in many ways. We have to decide on the functionality of the user interface, i.e., how we want to operate the program when providing input. Thereafter, we use appropriate tools to implement the particular user interface. There are four basic types of user interface, listed here according to implementational complexity, from lowest to highest:

1. Questions and answers in the terminal window
2. Command-line arguments
3. Reading data from files
4. Graphical user interfaces (GUIs)

Personal preferences of user interfaces differ substantially, and it is difficult to present recommendations or pros and cons. Alternatives 2 and 4 are most popular and will be addressed next. The goal is to make it easy for the user to set physical and numerical parameters in our decay.py program. However, we use a little toy program, called prog.py, as an introductory example:

The essential content is that prog.py has two input parameters: delta and p. A user interface will replace the first two assignments to delta and p.

5.2.1 Command-Line Arguments

The command-line arguments are all the words that appear on the command line after the program name. Running a program prog.py as python prog.py arg1 arg2 means that there are two command-line arguments (separated by white space): arg1 and arg2. Python stores all command-line arguments in a special list sys.argv. (The name argv stems from the C language and stands for "argument vector". In C there is also an integer variable called argc holding the number of arguments, or "argument count". A lot of programming languages have adopted the variable name argv for the command-line arguments.) Here is an example of a program what_is_sys_argv.py that can show us what the command-line arguments are

A sample run goes like

We make two observations:

- sys.argv[0] is the name of the program, and the sublist sys.argv[1:] contains all the command-line arguments.
- Each command-line argument is available as a string. A conversion to float is necessary if we want to compute with the numbers 5.0 and 104.

There are, in principle, two ways of programming with command-line arguments in Python:

- Positional arguments: Decide upon a sequence of parameters on the command line and read their values directly from the sys.argv[1:] list.
- Option-value pairs: Use --option value on the command line to replace the default value of an input parameter option by value (and utilize the argparse.ArgumentParser tool for implementation).

Suppose we want to run some program prog.py with specification of two parameters p and delta on the command line. With positional command-line arguments we write python prog.py 2 0.5

and must know that the first argument 2 represents p and the next 0.5 is the value of delta. With option-value pairs we can run python prog.py --delta 0.5 --p 2

Now, both p and delta are supposed to have default values in the program, so we need to specify only the parameter that is to be changed from its default value, e.g., python prog.py --p 2

How do we extend the prog.py code for positional arguments and option-value pairs? Positional arguments require very simple code:

If the user forgets to supply two command-line arguments, Python will raise an IndexError exception and produce a long error message. To avoid that, we should use a try-except construction:

Using sys.exit(1) aborts the program. The value 1 (actually any value different from 0) notifies the operating system that the program failed.
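A sketch of the try-except construction for prog.py is shown below. Wrapping the reading in a function that takes a sys.argv-style list, and the usage message, are assumptions made so the sketch can be run without a command line.

```python
import sys

def read_p_and_delta(argv):
    """Return (p, delta) read from a sys.argv-style list of strings."""
    try:
        p = float(argv[1])        # strings must be converted to numbers
        delta = float(argv[2])
    except IndexError:
        print('Usage: %s p delta' % argv[0])
        sys.exit(1)               # nonzero exit status signals failure
    return p, delta

# In a script one would call read_p_and_delta(sys.argv)
p, delta = read_p_and_delta(['prog.py', '2', '0.5'])
```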

Command-line arguments are strings!

Note that all elements in sys.argv are string objects. If the values are used in mathematical computations, we need to explicitly convert the strings to numbers.

Option-value pairs require more programming and are actually better explained in a more comprehensive example below. Minimal code for our prog.py program reads

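With type=float given to add_argument, the args.delta and args.p variables are of type float. Without a type argument, values read from the command line are strings, while a float default value that is not overridden keeps its float type.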
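The minimal listing is not reproduced in this text. A sketch with argparse might look as follows; the default values are arbitrary assumptions, and an explicit list is passed to parse_args only so that the sketch runs without a real command line.

```python
import argparse

parser = argparse.ArgumentParser()
parser.add_argument('--p', type=float, default=1.0)
parser.add_argument('--delta', type=float, default=0.1)

# parse_args() without arguments reads sys.argv[1:]
args = parser.parse_args(['--p', '2'])
p = args.p            # 2.0, converted from the string '2' by type=float
delta = args.delta    # default value, since --delta was not given
```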

Our next task is to use these basic code constructs to equip our decay.py module with command-line interfaces.

5.2.2 Positional Command-Line Arguments

For our decay.py module file, we want to include functionality such that we can read I, a, T, θ, and a range of \(\Delta t\) values from the command line. A plot is then to be made, comparing the different numerical solutions for different \(\Delta t\) values against the exact solution. The technical details of getting the command-line information into the program are covered in the next two sections.

The simplest way of reading the input parameters is to decide on their sequence on the command line and just index the sys.argv list accordingly. Say the sequence of input data for some functionality in decay.py is I, a, T, θ followed by an arbitrary number of \(\Delta t\) values. This code extracts these positional command-line arguments:

Note that we may use a try-except construction instead of the if test.

A run like

results in

and consequently the assignments I=1.0, a=0.5, T=4.0, theta=0.5, and dt_values = [1.5, 0.75, 0.1].
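The extraction code is not reproduced in this text. A sketch that yields exactly these assignments is given below; the function name, the usage message, and the program name in the sample list are assumptions.

```python
import sys

def read_command_line_positional(argv):
    """Extract I, a, T, theta, and dt values from a sys.argv-style list."""
    if len(argv) < 6:
        print('Usage: %s I a T theta dt1 dt2 dt3 ...' % argv[0])
        sys.exit(1)               # abort: too few arguments
    I = float(argv[1])
    a = float(argv[2])
    T = float(argv[3])
    theta = float(argv[4])
    # All remaining arguments are time step values
    dt_values = [float(arg) for arg in argv[5:]]
    return I, a, T, theta, dt_values

argv = ['decay.py', '1', '0.5', '4', '0.5', '1.5', '0.75', '0.1']
I, a, T, theta, dt_values = read_command_line_positional(argv)
```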

Instead of specifying the θ value, we could be a bit more sophisticated and let the user write the name of the scheme: BE for Backward Euler, FE for Forward Euler, and CN for Crank–Nicolson. Then we must map this string to the proper θ value, an operation elegantly done by a dictionary:

Now we can do

and get theta=0.5 in the code.
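The mapping from scheme name to θ value can be sketched with a dictionary as follows. The names scheme2theta and theta_from_scheme, and the error handling for unknown names, are assumptions of this sketch.

```python
# Scheme names and the corresponding theta values
scheme2theta = {'BE': 1, 'CN': 0.5, 'FE': 0}

def theta_from_scheme(scheme):
    """Translate a scheme name (BE, FE, or CN) to its theta value."""
    if scheme not in scheme2theta:
        raise ValueError('scheme must be one of %s, not "%s"'
                         % (', '.join(sorted(scheme2theta)), scheme))
    return scheme2theta[scheme]

theta = theta_from_scheme('CN')
```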

5.2.3 Option-Value Pairs on the Command Line

Now we want to specify option-value pairs on the command line, using --I for I (\(I\)), --a for a (\(a\)), --T for T (\(T\)), --scheme for the scheme name (BE, FE, CN), and --dt for the sequence of dt (\(\Delta t\)) values. Each parameter must have a sensible default value so that we specify the option on the command line only when the default value is not suitable. Here is a typical run:

Observe the major advantage over positional command-line arguments: the input is much easier to read and much easier to write. With positional arguments it is easy to mess up the sequence of the input parameters and quite challenging to detect errors too, unless there are just a couple of arguments.

Python’s ArgumentParser tool in the argparse module makes it easy to create a professional command-line interface to any program. The documentation of ArgumentParser demonstrates its versatile applications, so we shall here just list an example containing the most basic features. It always pays off to use ArgumentParser rather than trying to manually inspect and interpret option-value pairs in sys.argv!

The use of ArgumentParser typically involves three steps:

A function for setting up all the options is handy:

Each command-line option is defined through the parser.add_argument method. Alternative options, like the short --I and the more descriptive version --initial_condition, can be defined. Other arguments are type for the Python object type, a default value, and a help string, which gets printed if the command-line argument -h or --help is included. The metavar argument specifies the value associated with the option when the help string is printed. For example, the option for I has this help output:

The structure of this output is

Finally, the --dt option demonstrates how to allow for more than one value (separated by blanks) through the nargs='+' keyword argument. After the command line is parsed, we get an object where the values of the options are stored as attributes. The attribute name is specified by the dest keyword argument, which for the --dt option is dt_values. Without the dest argument, the value of an option --opt is stored as the attribute opt.

The code below demonstrates how to read the command line and extract the values for each option:

As seen, the values of the command-line options are available as attributes in args: args.opt holds the value of option --opt, unless we used the dest argument (as for --dt) for specifying the attribute name. The args.opt attribute has the object type specified by type (str by default).
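The listings for this section are not reproduced in this text. The sketch below follows the three steps of defining options, parsing the command line, and extracting values. The function names, default values, help texts, and the long option names other than --initial_condition are assumptions of this sketch.

```python
import argparse

def define_command_line_options():
    """Step 1: define options with types, defaults, and help texts."""
    parser = argparse.ArgumentParser()
    parser.add_argument('--I', '--initial_condition', type=float,
                        default=1.0, help='initial condition, u(0)',
                        metavar='I')
    parser.add_argument('--a', type=float, default=1.0,
                        help='coefficient in ODE', metavar='a')
    parser.add_argument('--T', '--stop_time', type=float, default=1.0,
                        help='end time of simulation', metavar='T')
    parser.add_argument('--scheme', type=str, default='CN',
                        help='FE, BE, or CN')
    parser.add_argument('--dt', '--time_step_values', type=float,
                        default=[1.0], help='time step values',
                        metavar='dt', nargs='+', dest='dt_values')
    return parser

def read_command_line_argparse(argv=None):
    """Steps 2 and 3: parse the command line and extract the values."""
    parser = define_command_line_options()
    args = parser.parse_args(argv)   # argv=None means sys.argv[1:]
    scheme2theta = {'BE': 1, 'CN': 0.5, 'FE': 0}
    theta = scheme2theta[args.scheme]
    return args.I, args.a, args.T, theta, args.dt_values

I, a, T, theta, dt_values = read_command_line_argparse(
    ['--I', '2.5', '--scheme', 'BE', '--dt', '1', '0.5', '0.25'])
```

Options that are not given, here --a and --T, keep their default values.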

The making of the plot is not dependent on whether we read data from the command line as positional arguments or option-value pairs:

5.2.4 Creating a Graphical Web User Interface

The Python package Parampool can be used to automatically generate a web-based graphical user interface (GUI) for our simulation program. Although the programming technique dramatically simplifies the efforts to create a GUI, the forthcoming material on equipping our decay module with a GUI is quite technical and of significantly less importance than knowing how to make a command-line interface.

Making a compute function

The first step is to identify a function that performs the computations and that takes the necessary input variables as arguments. This is called the compute function in Parampool terminology. The purpose of this function is to take values of I, a, T together with a sequence of \(\Delta t\) values and a sequence of θ values, and plot the numerical solution against the exact solution for each pair of \((\theta,\Delta t)\). The plots can be arranged as a table with the columns being scheme type (θ value) and the rows reflecting the discretization parameter (\(\Delta t\) value). Figure 5.3 displays what the graphical web interface may look like after results are computed (there are \(3\times 3\) plots in the GUI, but only \(2\times 2\) are visible in the figure).

To tell Parampool what type of input data we have, we assign default values of the right type to all arguments in the compute function, here called main_GUI:

The compute function must return the HTML code we want for displaying the result in a web page. Here we want to show a table of plots. Assume for now that the HTML code for one plot and the value of the norm of the error can be computed by some other function compute4web. The main_GUI function can then loop over \(\Delta t\) and θ values and put each plot in an HTML table. Appropriate code goes like
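The structure of such a compute function can be sketched as follows. The function compute4web_stub is a hypothetical stand-in that returns a placeholder image tag and a zero error, so the sketch runs without Parampool or a plotting library. The default values are also assumptions.

```python
def compute4web_stub(I, a, T, dt, theta):
    """Hypothetical stand-in for compute4web: return (html_text, E)."""
    html_text = '<img alt="theta=%g, dt=%g">' % (theta, dt)
    E = 0.0  # placeholder for the norm of the error
    return html_text, E

# Default values of the right type tell the GUI generator what kind of
# input each argument is (lists are used as defaults for that reason).
def main_GUI(I=1.0, a=0.2, T=4.0,
             dt_values=[1.25, 0.75, 0.5, 0.1],
             theta_values=[0, 0.5, 1]):
    # One table row per dt value, one column per theta value
    html_text = '<table>\n'
    for dt in dt_values:
        html_text += '<tr>\n'
        for theta in theta_values:
            html, E = compute4web_stub(I, a, T, dt, theta)
            html_text += '<td>\n%s\n<p>E=%.3E</p></td>\n' % (html, E)
        html_text += '</tr>\n'
    html_text += '</table>\n'
    return html_text
```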

Making one plot is done in compute4web. The statements should be straightforward from earlier examples, but there is one new feature: we use a tool in Parampool to embed the PNG code for a plot file directly in an HTML image tag. The details are hidden from the programmer, who can just rely on relevant HTML code in the string html_text. The function looks like

Generating the user interface

The web GUI is automatically generated by the following code, placed in the file decay_GUI_generate.py .

Running the decay_GUI_generate.py program results in three new files whose names are specified in the call to generate:

1. decay_GUI_model.py defines HTML widgets to be used to set input data in the web interface,

2. templates/decay_GUI_views.py defines the layout of the web page,

3. decay_GUI_controller.py runs the web application.

We only need to run the last program, and there is no need to look into these files.

Running the web application

The web GUI is started by

Open a web browser at the location 127.0.0.1:5000. Input fields for I, a, T, dt_values, and theta_values are presented. Figure 5.3 shows a part of the resulting page if we run with the default values for the input parameters. With the techniques demonstrated here, one can easily create a tailored web GUI for a particular type of application and use it to interactively explore physical and numerical effects.

5.3 Tests for Verifying Implementations

Any module with functions should have a set of tests that can check the correctness of the implementations. There exist well-established procedures and corresponding tools for automating the execution of such tests. These tools allow large test sets to be run with a one-line command, making it easy to check that the software still works (as far as the tests can tell!). Here we shall illustrate two important software testing techniques: doctest and unit testing. The first one is Python specific, while unit testing is the dominant test technique in the software industry today.

5.3.1 Doctests

A doc string, the first string after the function header, is used to document the purpose of functions and their arguments (see Sect. 5.1.5). Very often it is instructive to include an example in the doc string on how to use the function. Interactive examples in the Python shell are most illustrative as we can see the output resulting from the statements and expressions. For example, in the solver function, we can include an example on calling this function and printing the computed u and t arrays:

When such interactive demonstrations are inserted in doc strings, Python’s doctest module can be used to automate running all commands in interactive sessions and compare new output with the output appearing in the doc string. All we have to do in the current example is to run the module file decay.py with

This command imports the doctest module, which runs all doctests found in the file and reports discrepancies between expected and computed output. Alternatively, the test block in a module may run all doctests by
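A minimal sketch of a doc string with an interactive session, together with a test block that runs all doctests in the file, could look as follows. The solver here is a pure-Python stand-in that returns lists rather than arrays, and the print call uses Python 3 syntax; both are assumptions.

```python
def solver(I, a, T, dt, theta):
    """
    Solve u'=-a*u, u(0)=I, for t in (0,T] with steps of dt.
    Pure-Python sketch returning the lists u and t.

    >>> u, t = solver(I=0.8, a=1.2, T=1.5, dt=0.5, theta=0.5)
    >>> for t_i, u_i in zip(t, u):
    ...     print('t=%.1f, u=%.14f' % (t_i, u_i))
    t=0.0, u=0.80000000000000
    t=0.5, u=0.43076923076923
    t=1.0, u=0.23195266272189
    t=1.5, u=0.12489758761948
    """
    Nt = int(round(T/dt))                 # number of time intervals
    t = [n*dt for n in range(Nt + 1)]     # mesh points in time
    u = [float(I)]
    for n in range(Nt):
        u.append((1 - (1 - theta)*a*dt)/(1 + theta*dt*a)*u[n])
    return u, t

if __name__ == '__main__':
    import doctest
    doctest.testmod()   # silent if all doctests pass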

Doctests can also be embedded in nose/pytest unit tests as explained in the next section.

Doctests prevent command-line arguments!

No additional command-line argument is allowed when running doctests. If your program relies on command-line input, make sure the doctests can be run without such input on the command line.

However, you can simulate command-line input by filling sys.argv with values, e.g.,
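A small sketch of this trick follows. The helper name set_command_line and the option values are hypothetical.

```python
import sys

def set_command_line(line):
    """Replace the arguments in sys.argv by the words in line."""
    # sys.argv[0] (the program name) is kept; the rest is overwritten
    sys.argv[1:] = line.split()

set_command_line('--I 1.0 --a 5')
```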

The execution command above will report any problem if a test fails. As an illustration, let us alter the u value at t=1.5 in the output of the doctest by replacing the last digit 8 by 7. This edit triggers a report:

Pay attention to the number of digits in doctest results!

Note that in the output of t and u we write u with 14 digits. Writing all 16 digits is not a good idea: if the tests are run on different hardware, round-off errors might be different, and the doctest module detects that the numbers are not precisely the same and reports failures. In the present application, where \(0<u(t)\leq 0.8\), we expect round-off errors to be of size \(10^{-16}\), so comparing 15 digits would probably be reliable, but we compare 14 to be on the safe side. On the other hand, comparing a small number of digits may hide software errors.

Doctests are highly encouraged as they do two things: 1) demonstrate how a function is used and 2) test that the function works.

5.3.2 Unit Tests and Test Functions

The unit testing technique consists of identifying smaller units of code and writing one or more tests for each unit. One unit can typically be a function. Each test should, ideally, not depend on the outcome of other tests. The recommended practice is actually to design and write the unit tests first and then implement the functions!

In scientific computing it is not always obvious how to best perform unit testing. The units are naturally larger than in non-scientific software. Very often the solution procedure of a mathematical problem identifies a unit, such as our solver function.

Two Python test frameworks: nose and pytest

Python offers two very easy-to-use software frameworks for implementing unit tests: nose and pytest. These work (almost) in the same way, but our recommendation is to go for pytest.

Test function requirements

For a test to qualify as a test function in nose or pytest, three rules must be followed:

1. The function name must start with test_.

2. Function arguments are not allowed.

3. An AssertionError exception must be raised if the test fails.

A specific example might be illustrative before proceeding. We have the following function that we want to test:

The corresponding test function could, in principle, have been written as

The last two lines, however, are never written like this in test functions. Instead, Python’s assert statement is used: assert success, msg, where success is a boolean variable, which is False if the test fails, and msg is an optional message string that is printed when the test fails. A better version of the test function is therefore
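The three pieces discussed above can be sketched as follows, assuming the function under test is a simple double(x) and that the names test_double_explicit and test_double are chosen here for illustration.

```python
def double(x):
    return 2*x

def test_double_explicit():
    """Raise AssertionError by hand (works, but is not idiomatic)."""
    x = 4
    expected = 8
    computed = double(x)
    if expected != computed:
        raise AssertionError

def test_double():
    """Same test expressed with the assert statement."""
    x = 4
    expected = 8
    computed = double(x)
    success = computed == expected
    msg = 'computed %s, expected %s' % (computed, expected)
    assert success, msg
```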

Comparison of real numbers

Because of finite-precision arithmetic on a computer, which gives rise to round-off errors, the == operator is not suitable for checking whether two real numbers are equal. Obviously, this principle also applies to tests in test functions. We must therefore replace a == b by a comparison based on a tolerance tol: abs(a-b) < tol. The next example illustrates the problem and its solution.

Here is a slightly different function that we want to test:

We write a test function where the expected result is computed as \(\frac{1}{3}x\) rather than \(x/3\):

This test_third function executes silently, i.e., no failure, until x becomes 0.15. Then round-off errors make the == comparison False. In fact, seven of the x values above face this problem. The solution is to compare expected and computed with a small tolerance:
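A sketch of the tolerance-based version is shown below. It assumes 21 equally spaced x values in [0,1] (consistent with the mention of x = 0.15) and a tolerance of 1E-15; both choices are assumptions.

```python
def third(x):
    return x/3.0

def test_third():
    tol = 1E-15
    for i in range(21):
        x = i/20.0                    # 0, 0.05, 0.1, ..., 1
        expected = (1/3.0)*x          # deliberately not written as x/3
        computed = third(x)
        # Compare with a tolerance instead of expected == computed
        success = abs(expected - computed) < tol
        assert success, 'x=%g: difference %g' % (x, expected - computed)
```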

Always compare real numbers with a tolerance!

Real numbers should never be compared with the == operator, but always with the absolute value of the difference and a tolerance. So, replace a == b, if a and/or b is float, by

The suitable size of tol depends on the size of a and b (see Problem 5.5). Unless a and b are around unity in size, one should use a relative difference:
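The two comparisons can be sketched as small helper functions. The names equal_abs and equal_rel and the default tolerance are hypothetical; math.isclose from the standard library (Python 3.5 and later) offers a ready-made alternative.

```python
import math

def equal_abs(a, b, tol=1E-14):
    """Absolute difference; suitable when a and b are around unity."""
    return abs(a - b) < tol

def equal_rel(a, b, tol=1E-14):
    """Relative difference, scaled by the size of a (requires a != 0)."""
    return abs((a - b)/a) < tol

a = 0.1 + 0.2
b = 0.3
print(a == b, equal_abs(a, b), equal_rel(a, b))
print(math.isclose(a, b, rel_tol=1E-14))
```

For large numbers the absolute test becomes too strict, since a round-off error of relative size \(10^{-16}\) can exceed a fixed tol, while the relative test scales with the size of a.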

Special assert functions from nose

Test frameworks often contain more tailored assert functions that can be called instead of using the assert statement. For example, comparing two objects within a tolerance, as in the present case, can be done by the assert_almost_equal from the nose framework:

Whether to use the plain assert statement with a comparison based on a tolerance or to use the ready-made function assert_almost_equal depends on the programmer’s preference. The examples used in the documentation of the pytest framework stick to the plain assert statement.

Locating test functions

Test functions can reside in a module together with the functions they are supposed to verify, or the test functions can be collected in separate files having names starting with test. Actually, nose and pytest can recursively run all test functions in all test*.py files in the current directory, as well as in all subdirectories!

The decay.py module file features test functions in the module, but we could equally well have made a subdirectory tests and put the test functions in tests/test_decay.py .

Running tests

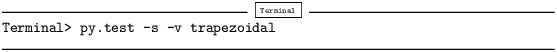

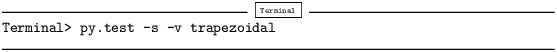

To run all test functions in the file decay.py do

The -s option ensures that output from the test functions is printed in the terminal window, while -v prints the outcome of each individual test function.

Alternatively, if the test functions are located in some separate test*.py files, we can just write

to recursively run all test functions in the current directory tree. The corresponding

command does the same, but requires subdirectory names to start with test or end with _test or _tests (which is a good habit anyway). An example of a tests directory with a test*.py file is found in src/softeng/tests .

Embedding doctests in a test function

Doctests can also be executed from nose/pytest unit tests. Here is an example of a file test_decay_doctest.py where we in the test block run all the doctests in the imported module decay, but we also include a local test function that does the same:

Running this file as a program from the command line triggers the doctest.testmod call in the test block, while applying py.test or nosetests to the file triggers an import of the file and execution of the test function test_decay_module_with_doctest.

Installing nose and pytest

With pip available, it is trivial to install nose and/or pytest: sudo pip install nose and sudo pip install pytest.

5.3.3 Test Function for the Solver

Finding good test problems for verifying the implementation of numerical methods is a topic on its own. The challenge is that we very seldom know what the numerical errors are. For the present model problem (Ch5.E1)–(Ch5.E2) solved by (Ch5.E3) one can, fortunately, derive a formula for the numerical approximation:

\[ u^{n}=I\left(\frac{1-(1-\theta)a\Delta t}{1+\theta a\Delta t}\right)^{n}. \]

Then we know that the implementation should produce numbers that agree with this formula to machine precision. The formula for \(u^{n}\) is known as an exact discrete solution of the problem and can be coded as

A test function can evaluate this solution on a time mesh and check that the u values produced by the solver function do not deviate by more than a small tolerance:

Among important things to consider when constructing test functions is testing the effect of wrong input to the function being tested. In our solver function, for example, integer values of a, \(\Delta t\), and θ may cause unintended integer division. We should therefore add a test to make sure our solver function does not fall into this potential trap:
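The exact discrete solution and the two test functions described above can be sketched as follows. The solver is a pure-Python stand-in (lists instead of arrays), the amplification factor is written as A in the code, and the parameter values and the tolerance 1E-14 are assumptions. In Python 3 the / operator always performs true division, so the integer-division trap is mainly a Python 2 concern, but the test still documents the intended behavior.

```python
def solver(I, a, T, dt, theta):
    """Theta-rule for u'=-a*u, u(0)=I (pure-Python sketch)."""
    dt = float(dt)                    # guard against integer division
    Nt = int(round(T/dt))
    u = [float(I)]
    for n in range(Nt):
        u.append((1 - (1 - theta)*a*dt)/(1 + theta*dt*a)*u[n])
    t = [n*dt for n in range(Nt + 1)]
    return u, t

def u_discrete_exact(n, I, a, theta, dt):
    """Return the exact discrete solution I*A**n of the scheme."""
    dt = float(dt)
    A = (1 - (1 - theta)*a*dt)/(1 + theta*dt*a)
    return I*A**n

def max_deviation(I, a, theta, dt, Nt):
    """Largest difference between solver output and I*A**n."""
    u, t = solver(I=I, a=a, T=Nt*dt, dt=dt, theta=theta)
    u_de = [u_discrete_exact(n, I, a, theta, dt) for n in range(Nt + 1)]
    return max(abs(x - y) for x, y in zip(u_de, u))

def test_u_discrete_exact():
    """Check that solver reproduces the exact discrete solution."""
    diff = max_deviation(I=0.1, a=2, theta=0.8, dt=0.8, Nt=10)
    assert diff < 1E-14

def test_potential_integer_division():
    """All-integer input must not trigger integer division."""
    diff = max_deviation(I=1, a=1, theta=1, dt=2, Nt=4)
    assert diff < 1E-14
```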

In more complicated problems where there is no exact solution of the numerical problem solved by the software, one must use the method of manufactured solutions, compute convergence rates for a series of \(\Delta t\) values, and check that the rates converge to the expected ones (from theory). This type of testing is fully explained in Sect. 3.1.6.

5.3.4 Test Function for Reading Positional Command-Line Arguments

The function read_command_line_positional extracts numbers from the command line. To test it, we must decide on a set of values for the input data, fill sys.argv accordingly, and check that we get the expected values:

Note that sys.argv[0] is always the program name and that we have to copy that string from the original sys.argv array to the new one we construct in the test function. (Actually, this test function destroys the original sys.argv that Python fetched from the command line.)

Any numerical code writer should always be skeptical of the exact equality operator == in test functions, since round-off errors often come into play. Here, however, we set some real values, convert them to strings, and convert them back to real numbers (of the same precision). This string-number conversion does not involve any finite-precision arithmetic, so we can safely use == in tests. Note also that the last element in expected and computed is the list dt_values, and == works for comparing two lists as well.
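The test described in this section could be sketched as follows. The layout of the positional arguments (I, a, T, θ, then the \(\Delta t\) values) and the numbers are assumptions. Unlike the version discussed above, this sketch restores the original sys.argv afterwards.

```python
import sys

def read_command_line_positional():
    """Assumed layout: program I a T theta dt1 [dt2 ...]."""
    if len(sys.argv) < 6:
        print('Usage: %s I a T theta dt1 dt2 ...' % sys.argv[0])
        sys.exit(1)
    I = float(sys.argv[1])
    a = float(sys.argv[2])
    T = float(sys.argv[3])
    theta = float(sys.argv[4])
    dt_values = [float(arg) for arg in sys.argv[5:]]
    return I, a, T, theta, dt_values

def test_read_command_line_positional():
    I = 1.6; a = 1.8; T = 2.2; theta = 0.5
    dt_values = [0.1, 0.2, 0.05]
    expected = [I, a, T, theta, dt_values]
    original_argv = sys.argv          # reference kept for restoring
    try:
        # sys.argv[0] is the program name and must be carried over
        sys.argv = [original_argv[0]] + \
                   [str(item) for item in [I, a, T, theta] + dt_values]
        computed = read_command_line_positional()
    finally:
        sys.argv = original_argv
    for expected_arg, computed_arg in zip(expected, computed):
        assert expected_arg == computed_arg
```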

5.3.5 Test Function for Reading Option-Value Pairs

The function read_command_line_argparse can be verified with a test function that has the same setup as test_read_command_line_positional above. However, the construction of the command line is a bit more complicated. We find it convenient to construct the line as a string and then split the line into words to get the desired list sys.argv:

Recall that the Python function zip enables iteration over several lists, tuples, or arrays at the same time.
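A sketch of such a test is given below, assuming the options --I, --a, --T, and --dt (stored as dt_values) and a fixed program name decay.py as the first word of the constructed line. The parser is repeated here only to keep the example self-contained.

```python
import argparse
import sys

def read_command_line_argparse():
    parser = argparse.ArgumentParser()
    parser.add_argument('--I', type=float, default=1.0)
    parser.add_argument('--a', type=float, default=1.0)
    parser.add_argument('--T', type=float, default=1.0)
    parser.add_argument('--dt', type=float, default=[1.0], nargs='+',
                        dest='dt_values')
    args = parser.parse_args()        # reads sys.argv[1:]
    return args.I, args.a, args.T, args.dt_values

def test_read_command_line_argparse():
    I = 1.6; a = 1.8; T = 2.2
    dt_values = [0.1, 0.2, 0.05]
    expected = [I, a, T, dt_values]
    # Construct the command line as one string, then split into words
    cml = 'decay.py --a %s --I %s --T %s --dt ' % (a, I, T)
    cml += ' '.join([str(dt) for dt in dt_values])
    original_argv = sys.argv
    try:
        sys.argv = cml.split()
        computed = read_command_line_argparse()
    finally:
        sys.argv = original_argv      # restore the real command line
    # zip pairs up corresponding elements of the two sequences
    for expected_arg, computed_arg in zip(expected, computed):
        assert expected_arg == computed_arg
```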

Let silent test functions speak up during development!

When you develop test functions in a module, it is common to use IPython for interactive experimentation:

Note that a working test function is completely silent! Many find it psychologically annoying to convince themselves that a completely silent function is doing the right things. It can therefore, during development of a test function, be convenient to insert print statements in the function to monitor that the function body is indeed executed. For example, one can print the expected and computed values in the terminal window:

After performing this edit, we want to run the test again, but in IPython the module must first be reloaded (reimported):

Now we clearly see the objects that are compared.

5.3.6 Classical Class-Based Unit Testing

The test functions written for the nose and pytest frameworks are very straightforward and to the point, with no framework-required boilerplate code. We just write the statements we need to get the computations and comparisons done, before applying the required assert.

The classical way of implementing unit tests (which derives from the JUnit object-oriented tool in Java) leads to much more comprehensive implementations with a lot of boilerplate code. Python comes with a built-in module unittest for doing this type of classical unit tests. Although nose or pytest are much more convenient to use than unittest, class-based unit testing in the style of unittest has a very strong position in computer science and is so widespread in the software industry that even computational scientists should have an idea how such unit test code is written. A short demo of unittest is therefore included next. (Readers who are not familiar with object-oriented programming in Python may find the text hard to understand, but one can safely jump to the next section.)

Suppose we have a function double(x) in a module file mymod.py:

Unit testing with the aid of the unittest module consists of writing a file test_mymod.py for testing the functions in mymod.py. The individual tests must be methods with names starting with test_ in a class derived from class TestCase in unittest. With one test method for the function double, the test_mymod.py file becomes
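A self-contained sketch of such a test class is shown here. To make it runnable as one file, double is defined directly instead of being imported from mymod.py, and the class name TestMyCode and the helper run_tests are hypothetical.

```python
import unittest

def double(x):            # in the text this function lives in mymod.py
    return 2*x

class TestMyCode(unittest.TestCase):
    def test_double(self):
        x = 4
        expected = 2*x
        computed = double(x)
        self.assertEqual(expected, computed)

def run_tests():
    """Run the test class programmatically and return the result."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestMyCode)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

In a separate file test_mymod.py one would instead write import mymod, call mymod.double(x), and typically end the file with a test block that calls unittest.main().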

The test is run by executing the test file test_mymod.py as a standard Python program. Since Python 2.7, unittest also offers test discovery (python -m unittest discover) for locating and running test files in a directory tree.

We could use the basic assert statement as we did with nose and pytest functions, but those who write code based on unittest almost exclusively use the wide range of built-in assert functions such as assertEqual, assertNotEqual, assertAlmostEqual, to mention some of them.

Translation of the test functions from the previous sections to unittest means making a new file test_decay.py with a test class TestDecay where the stand-alone functions for nose/pytest now become methods in this class.

5.4 Sharing the Software with Other Users

As soon as you have some working software that you intend to share with others, you should package your software in a standard way such that users can easily download your software, install it, improve it, and ask you to approve their improvements in new versions of the software. During recent years, the software development community has established quite firm tools and rules for how all this is done. The following subsections cover three steps in sharing software:

1. Organizing the software for public distribution.

2. Uploading the software to a cloud service (here GitHub).

3. Downloading and installing the software.

5.4.1 Organizing the Software Directory Tree

We start with organizing our software as a directory tree. Our software consists of one module file, decay.py, and possibly some unit tests in a separate file located in a directory tests.

The decay.py file can be used as a module or as a program. For distribution to other users who install the program decay.py in system directories, we need to insert the line #!/usr/bin/env python at the top of the file.

This line makes it possible to write just the filename and get the file executed by the python program (or more precisely, the first python program found in the directories in the PATH environment variable).

Distributing just a module file

Let us start out with the minimum solution alternative: distributing just the decay.py file. Then the software is just one file and all we need is a directory with this file. This directory will also contain an installation script setup.py and a README file telling what the software is about, the author’s email address, a URL for downloading the software, and other useful information.

The setup.py file can be as short as

The py_modules argument specifies a list of modules to be installed, while scripts specifies stand-alone programs. Our decay.py can be used either as a module or as an executable program, so we want users to have both possibilities.

Distributing a package

If the software consists of more files than one or two modules, one should make a Python package out of it. In our case we make a package decay containing one module, also called decay.

To make a package decay, create a directory decay and an empty file in it with name __init__.py. A setup.py script must now specify the directory name of the package and also an executable program (scripts=) in case we want to run decay.py as a stand-alone application:

We have also added some author and download information. The reader is referred to the Distutils documentation for more information on how to write setup.py scripts.

Remark about the executable file

The executable program, decay.py, is in the above installation script taken to be the complete module file decay.py. It would normally be preferred to instead write a very short script essentially importing decay and running the test block in decay.py. In this way, we distribute a module and a very short file, say decay-main.py, as an executable program:

In this package example, we move the unit tests out of the decay.py module to a separate file, test_decay.py, and place this file in a directory tests. Then the nosetests and py.test programs will automatically find and execute the tests.

The complete directory structure reads

5.4.2 Publishing the Software at GitHub

The leading site today for publishing open source software projects is GitHub at http://github.com, provided you want your software to be open to the world. With a paid GitHub account, you can have private projects too.

Sign up for a GitHub account if you do not already have one. Go to your account settings and provide an SSH key (typically the file ~/.ssh/id_rsa.pub) such that you can communicate with GitHub without being prompted for your password. All communication between your computer and GitHub goes via the version control system Git. This may at first sight look tedious, but this is the way professionals work with software today. With Git you have full control of the history of your files, i.e., "who did what when". The technology makes Git superior to simpler alternatives like Dropbox and Google Drive, especially when you collaborate with others. There is a reason why Git has gained the position it has, and there is no reason why you should not adopt this tool.

To create a new project, click on New repository on the main page and fill out a project name. Click on the check button Initialize this repository with a README, and click on Create repository. The next step is to clone (copy) the GitHub repo (short for repository) to your own computer(s) and fill it with files. The typical clone command is

where username is your GitHub username and projname is the name of the repo (project). The result of git clone is a directory projname. Go to this directory and add files. As soon as the repo directory is populated with files, run

The above git commands look cryptic, but these commands plus 2–3 more are the essence of what you need in your daily work with files in small or big software projects. I strongly encourage you to learn more about version control systems and project hosting sites [6].

Your project files are now stored in the cloud at https://github.com/username/projname. Anyone can get the software by the listed git clone command you used above, or by clicking on the links for zip and tar files.

Every time you update the project files, you need to register the update at GitHub by

The files at GitHub are now synchronized with your local ones. Similarly, every time you start working on files in this project, make sure you have the latest version: git pull origin master.

You are recommended to read a quick intro that gets you up and running with this style of professional work. And you should put all your writings and programming projects in repositories in the cloud!

5.4.3 Downloading and Installing the Software

Users of your software go to the Git repo at github.com and clone the repository. One can use either SSH or HTTP for communication. Most users will use the latter, typically

The result is a directory projname with the files in the repo.

Installing just a module file

The software package is in the case above a directory decay with three files

To install the decay.py file, a user just runs setup.py:

This command will install the software in system directories, so the user needs to run the command as root on Unix systems (therefore the command starts with sudo). The user can now import the module by import decay and run the program by

A user can easily install the software on her personal account if a system-wide installation is not desirable. We refer to the installation documentation for the many arguments that can be given to setup.py. Note that if the software is installed on a personal account, the PATH and PYTHONPATH environment variables must contain the relevant directories.

Our setup.py file specifies a module decay to be installed as well as a program decay.py. Modules are typically installed in some lib directory on the computer system, e.g., /usr/local/lib/python2.7/dist-packages, while executable programs go to /usr/local/bin.

Installing a package

When the software is organized as a Python package, the installation is done by running setup.py exactly as explained above, but the use of a module decay in a package decay requires the following syntax:

That is, the call goes like packagename.modulename.functionname.

Package import in __init__.py

One can ease the use of packages by providing a somewhat simpler import like

This is accomplished by putting an import statement in the __init__.py file, which is always run when doing the package import import decay or from decay import. The __init__.py file must now contain

Obviously, it is the package developer who decides on such an __init__.py file or if it should just be empty.

5.5 Classes for Problem and Solution Method

The numerical solution procedure was compactly and conveniently implemented in a Python function solver in Sect. 5.1.1. In more complicated problems it might be beneficial to use classes instead of functions only. Here we shall describe a class-based software design well suited for scientific problems where there is a mathematical model of some physical phenomenon, and some numerical methods to solve the equations involved in the model.

We introduce a class Problem to hold the definition of the physical problem, and a class Solver to hold the data and methods needed to numerically solve the problem. The forthcoming text will explain the inner workings of these classes and how they represent an alternative to the solver and experiment_* functions in the decay module.

Explaining the details of class programming in Python is considered far beyond the scope of this text. Readers who are unfamiliar with Python class programming should first consult one of the many electronic Python tutorials or textbooks to come up to speed with concepts and syntax of Python classes before reading on. The author has a gentle introduction to class programming for scientific applications in [8], see Chapters 7 and 9 and Appendix E. Other useful resources are

- The Python Tutorial: http://docs.python.org/2/tutorial/classes.html

- Wiki book on Python Programming: http://en.wikibooks.org/wiki/Python_Programming/Classes

- tutorialspoint.com: http://www.tutorialspoint.com/python/python_classes_objects.htm

5.5.1 The Problem Class

The purpose of the problem class is to store all information about the mathematical model. This usually means the physical parameters and formulas in the problem. Looking at our model problem (Ch5.E1)–(Ch5.E2), the physical data cover I, a, and T. Since we have an analytical solution of the ODE problem, we may add this solution in terms of a Python function (or method) to the problem class as well. A possible problem class is therefore

We could in the u_exact method have written self.I*exp(-self.a*t), but using local variables I and a allows the nicer formula I*exp(-a*t), which looks much closer to the mathematical expression \(Ie^{-at}\). This is not an important issue with the current compact formula, but is beneficial in more complicated problems with longer formulas to obtain the closest possible relationship between code and mathematics. The coding style in this book is to strip off the self prefix when the code expresses mathematical formulas.

The class data can be set either as arguments in the constructor or at any time later, e.g.,

(Some programmers prefer set and get functions for setting and getting data in classes, often implemented via properties in Python, but this author considers that overkill when there are just a few data items in a class.)
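A minimal sketch of such a problem class and of setting its data is shown below. It uses math.exp, so u_exact accepts scalar t only (an assumption made to avoid depending on an array library), and the default values are illustrative.

```python
from math import exp

class Problem(object):
    def __init__(self, I=1, a=1, T=10):
        self.I, self.a, self.T = float(I), float(a), T

    def u_exact(self, t):
        # Local names let the formula read like the mathematics
        I, a = self.I, self.a
        return I*exp(-a*t)

problem = Problem(T=5)    # set T through the constructor
problem.T = 8             # or change it at any time later
problem.a = 0.5
```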

It would be convenient if class Problem could also initialize the data from the command line. To this end, we add a method for defining a set of command-line options and a method that sets the local attributes equal to what was found on the command line. The default values associated with the command-line options are taken as the values provided to the constructor. Class Problem now becomes

Observe that if the user already has an ArgumentParser object it can be supplied, but if she does not have any, class Problem makes one. Python’s None object is used to indicate that a variable is not initialized with a proper value.
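The extended class might be sketched as follows. The method names define_command_line_options and init_from_command_line, the long option aliases, and the help strings are assumptions; the text only fixes the overall design.

```python
import argparse

class Problem(object):
    def __init__(self, I=1, a=1, T=10):
        self.I, self.a, self.T = float(I), float(a), T

    def define_command_line_options(self, parser=None):
        """Return updated (parser) or new ArgumentParser object."""
        if parser is None:            # None signals "no parser supplied"
            parser = argparse.ArgumentParser()
        # Values given to the constructor serve as option defaults
        parser.add_argument('--I', '--initial_condition', type=float,
                            default=self.I, metavar='I',
                            help='initial condition, u(0)')
        parser.add_argument('--a', type=float, default=self.a,
                            metavar='a', help='coefficient in ODE')
        parser.add_argument('--T', '--stop_time', type=float,
                            default=self.T, metavar='T',
                            help='end time of simulation')
        return parser

    def init_from_command_line(self, args):
        """Load attributes from the parsed command line."""
        self.I, self.a, self.T = args.I, args.a, args.T
```

A typical use is parser = problem.define_command_line_options(), followed by args = parser.parse_args() and problem.init_from_command_line(args).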

5.5.2 The Solver Class

The solver class stores parameters related to the numerical solution method and provides a function solve for solving the problem. For convenience, a problem object is given to the constructor in a solver object such that the object gets access to the physical data. In the present example, the numerical solution method involves the parameters \(\Delta t\) and θ, which then constitute the data part of the solver class. We include, as in the problem class, functionality for reading \(\Delta t\) and θ from the command line:

Note that we see no need to repeat the body of the previously developed and tested solver function. We just call that function from the solve method. In this way, class Solver is merely a class wrapper of the stand-alone solver function. With a single object of class Solver we have all the physical and numerical data bundled together with the numerical solution method.

Combining the objects

Eventually we need to show how the classes Problem and Solver play together. We read parameters from the command line and make a plot with the numerical and exact solution:
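A compact sketch of the solver class and of how the two classes cooperate is given below. The stand-alone solver is a pure-Python stand-in, and the plot is replaced by a maximum-error computation so the example runs without a plotting library. The names experiment_classes and error, and the optional argv argument, are assumptions.

```python
import argparse
from math import exp

def solver(I, a, T, dt, theta):
    """Theta-rule for u'=-a*u, u(0)=I (pure-Python sketch)."""
    Nt = int(round(T/float(dt)))
    u = [float(I)]
    for n in range(Nt):
        u.append((1 - (1 - theta)*a*dt)/(1 + theta*dt*a)*u[n])
    return u, [n*dt for n in range(Nt + 1)]

class Problem(object):
    def __init__(self, I=1, a=1, T=10):
        self.I, self.a, self.T = float(I), float(a), float(T)

    def define_command_line_options(self, parser=None):
        if parser is None:
            parser = argparse.ArgumentParser()
        for name in 'I', 'a', 'T':
            parser.add_argument('--' + name, type=float,
                                default=getattr(self, name))
        return parser

    def init_from_command_line(self, args):
        self.I, self.a, self.T = args.I, args.a, args.T

    def u_exact(self, t):
        I, a = self.I, self.a
        return I*exp(-a*t)

class Solver(object):
    def __init__(self, problem, dt=0.1, theta=0.5):
        self.problem = problem        # gives access to the physical data
        self.dt, self.theta = float(dt), theta

    def define_command_line_options(self, parser):
        parser.add_argument('--dt', type=float, default=self.dt)
        parser.add_argument('--theta', type=float, default=self.theta)
        return parser

    def init_from_command_line(self, args):
        self.dt, self.theta = args.dt, args.theta

    def solve(self):
        # Wrap the tested stand-alone function instead of repeating it
        p = self.problem
        self.u, self.t = solver(p.I, p.a, p.T, self.dt, self.theta)

    def error(self):
        """Return the maximum deviation from u_exact on the mesh."""
        return max(abs(self.problem.u_exact(t) - u)
                   for t, u in zip(self.t, self.u))

def experiment_classes(argv=None):
    problem = Problem()
    solver_obj = Solver(problem)
    # Both objects add their options to the same parser
    parser = problem.define_command_line_options()
    parser = solver_obj.define_command_line_options(parser)
    args = parser.parse_args(argv)
    problem.init_from_command_line(args)
    solver_obj.init_from_command_line(args)
    solver_obj.solve()
    return solver_obj
```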

5.5.3 Improving the Problem and Solver Classes

The previous Problem and Solver classes containing parameters soon accumulate a lot of repetitive code when the number of parameters increases. Much of this code can be parameterized and be made more compact. For this purpose, we decide to collect all parameters in a dictionary, self.prm, with two associated dictionaries self.type and self.help for holding associated object types and help strings. The reason is that processing dictionaries is easier than processing a set of individual attributes. For the specific ODE example we deal with, the three dictionaries in the problem class are typically

Provided a problem or solver class defines these three dictionaries in the constructor, we can create a super class Parameters with general code for defining command-line options and reading them as well as methods for setting and getting each parameter. A Problem or Solver for a particular mathematical problem can then inherit most of the needed functionality and code from the Parameters class. For example,

By inheritance, these classes can automatically do a lot more when it comes to reading and assigning parameter values:

A generic class for parameters

A simplified version of the parameter class looks as follows:
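One possible sketch of the parameter class, together with a problem class that inherits from it, reads as follows. The dictionary names prm, type, and help come from the text; the method names, default values, and help strings are assumptions.

```python
import argparse

class Parameters(object):
    """Generic handling of parameters stored in self.prm."""
    def set(self, **parameters):
        for name in parameters:
            self.prm[name] = parameters[name]

    def get(self, name):
        return self.prm[name]

    def define_command_line_options(self, parser=None):
        if parser is None:
            parser = argparse.ArgumentParser()
        # One option per registered parameter; current value is default
        for name in self.prm:
            tp = self.type.get(name, str)
            help_str = self.help.get(name, None)
            parser.add_argument('--' + name, default=self.get(name),
                                metavar=name, type=tp, help=help_str)
        return parser

    def init_from_command_line(self, args):
        for name in self.prm:
            self.prm[name] = getattr(args, name)

class Problem(Parameters):
    """Physical parameters for the problem u'=-a*u, u(0)=I."""
    def __init__(self):
        self.prm = dict(I=1, a=1, T=10)
        self.type = dict(I=float, a=float, T=float)
        self.help = dict(I='initial condition, u(0)',
                         a='coefficient in ODE',
                         T='end time of simulation')

problem = Problem()
problem.set(I=2)                      # set a parameter in the program
parser = problem.define_command_line_options()
args = parser.parse_args(['--a', '0.5'])
problem.init_from_command_line(args)  # command line overrides defaults
```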

The file decay_oo.py contains a slightly more advanced version of class Parameters where the functions for getting and setting parameters contain tests for valid parameter names, and raise exceptions with informative messages if any name is not registered.

We have already sketched the Problem and Solver classes that build on inheritance from Parameters. We have also shown how they are used. The only remaining code is to make the plot, but this code is identical to previous versions when the numerical solution is available in an object u and the exact one in u_e.

The advantage with the Parameters class is that it scales to problems with a large number of physical and numerical parameters: as long as the parameters are defined once via a dictionary, the compact code in class Parameters can handle any collection of parameters of any size. More advanced tools for storing large collections of parameters in hierarchical structures are provided by the Parampool package.

5.6 Automating Scientific Experiments

Empirical scientific investigations based on running computer programs require careful design of the experiments and accurate reporting of results. Although there is a strong tradition of doing such investigations manually, the extreme requirements for scientific accuracy make a program much better suited to conduct the experiments. We shall in this section outline how we can write such programs, often called scripts, for running other programs and archiving the results.

Scientific investigation

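The purpose of the investigations is to explore the quality of numerical solutions to an ordinary differential equation. More specifically, we solve the initial-value problem

\[ u^{\prime}(t)=-au(t),\quad u(0)=I,\quad t\in(0,T], \]

by the θ-rule:

\[ u^{n+1}=\frac{1-(1-\theta)a\Delta t}{1+\theta a\Delta t}\,u^{n},\quad u^{0}=I. \]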

This scheme corresponds to well-known methods: \(\theta=0\) gives the Forward Euler (FE) scheme, \(\theta=1\) gives the Backward Euler (BE) scheme, and \(\theta=\frac{1}{2}\) gives the Crank–Nicolson (CN) or midpoint/centered scheme.

For chosen constants I, a, and T, we run the three schemes for various values of \(\Delta t\), and present the following results in a report:

1. visual comparison of the numerical and exact solution in a plot for each \(\Delta t\) and \(\theta=0,1,\frac{1}{2}\),

2. a table and a plot of the norm of the numerical error versus \(\Delta t\) for \(\theta=0,1,\frac{1}{2}\).

The report will also document the mathematical details of the problem under investigation.

5.6.1 Available Software

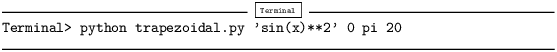

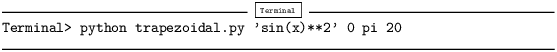

Appropriate software for implementing (Ch5.E5) is available in a program model.py, which is run as

The command-line input corresponds to setting I = 1.5, a = 0.25, T = 6, and running three values of \(\Delta t\): 1.25, 0.75, and 0.5.

The results of running this model.py command are text in the terminal window and a set of plot files. The plot files have names M_D.E, where M denotes the method (FE, BE, CN for \(\theta=0,1,\frac{1}{2}\), respectively), D the time step length (here 1.25, 0.75, or 0.5), and E is the plot file extension png or pdf. The text output in the terminal window looks like

The first column is the θ value, the next is the \(\Delta t\) value, and the final column is the numerical error E (the norm of the discrete error function on the mesh).

5.6.2 The Results We Want to Produce

The results we need for our investigations are slightly different from what model.py produces directly:

1. We need to collect all the plots for one numerical method (FE, BE, CN) in a single plot. For example, if 4 \(\Delta t\) values are run, the summarizing figure for the BE method has \(2\times 2\) subplots, with the subplot corresponding to the largest \(\Delta t\) in the upper left corner and the smallest in the bottom right corner.

2. We need to create a table containing \(\Delta t\) values in the first column and the numerical error E for \(\theta=0,0.5,1\) in the next three columns. This table should be available as a standard CSV file.

3. We need to plot the numerical error E versus \(\Delta t\) in a log-log plot.

Consequently, we must write a script that can run model.py as described and produce the results 1–3 above. This requires combining multiple plot files into one file and interpreting the output from model.py as data for plotting and file storage.

If the script’s name is exper1.py, we run it with the desired \(\Delta t\) values as positional command-line arguments:

This run will then generate eight plot files: FE.png and FE.pdf summarizing the plots with the FE method, BE.png and BE.pdf with the BE method, CN.png and CN.pdf with the CN method, and error.png and error.pdf with the log-log plot of the numerical error versus \(\Delta t\). In addition, the table with numerical errors is written to a file error.csv.

Reproducible and replicable science

A script that automates running our computer experiments will ensure that the experiments can easily be rerun by anyone in the future, either to confirm the same results or redo the experiments with other input data. Also, whatever we did to produce the results is documented in every detail in the script.

A project where anyone can easily repeat the experiments with the same data is referred to as being replicable, and replicability should be a fundamental requirement in scientific computing work. Of more scientific interest is reproducibility, which means that we can also run alternative experiments to arrive at the same conclusions. This requires more than an automating script.

5.6.3 Combining Plot Files

The script for running experiments needs to combine multiple image files into one. The montage and convert programs in the ImageMagick software suite can be used to combine image files. However, these programs are best suited for PNG files. For vector plots in PDF format one needs other tools to preserve the quality: pdftk, pdfnup, and pdfcrop.

Suppose you have four files f1.png, f2.png, f3.png, and f4.png and want to combine them into a \(2\times 2\) table of subplots in a new file f.png, see Fig. 5.4 for an example.

The appropriate ImageMagick commands are

The first command mounts the four files in one, the next convert command removes unnecessary surrounding white space, and the final convert command makes the white background transparent.
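Since the automating script is written in Python, the three commands described above can be sketched as strings built by a small helper. The function name `png_montage_commands` is hypothetical, and the particular options (`-background white`, `-geometry 100%`, `-tile 2x`, `-trim`, `-transparent white`) are one plausible choice that should be checked against the ImageMagick documentation for your installed version.

```python
def png_montage_commands(png_files, combined, columns=2):
    """Return ImageMagick commands combining png_files into the file combined."""
    files = " ".join(png_files)
    return [
        # Mount all files in a grid with the given number of columns
        f"montage -background white -geometry 100% -tile {columns}x "
        f"{files} {combined}",
        # Remove unnecessary surrounding white space
        f"convert -trim {combined} {combined}",
        # Make the white background transparent
        f"convert {combined} -transparent white {combined}",
    ]


cmds = png_montage_commands(["f1.png", "f2.png", "f3.png", "f4.png"], "f.png")
for cmd in cmds:
    print(cmd)
```

Keeping the commands as plain strings makes it easy to print them for inspection before executing them.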

High-quality montage of PDF files f1.pdf, f2.pdf, f3.pdf, and f4.pdf into f.pdf goes like
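A corresponding sketch for the PDF case is shown here, again as command strings built in Python. The helper name and the temporary filenames are hypothetical, and the sequence (concatenate with pdftk, arrange pages in a grid with pdfnup, crop with pdfcrop) is an assumption about how the three tools are meant to be combined; availability and options of pdfnup in particular vary between installations.

```python
def pdf_montage_commands(pdf_files, combined, layout="2x2"):
    """Return commands combining single-page PDF files into one cropped page."""
    files = " ".join(pdf_files)
    joined = "tmp_joined.pdf"   # hypothetical temporary filenames
    grid = "tmp_grid.pdf"
    return [
        # Concatenate the input files into one multi-page document
        f"pdftk {files} cat output {joined}",
        # Place the pages in a grid on a single page
        f"pdfnup --nup {layout} --outfile {grid} {joined}",
        # Remove surrounding white space
        f"pdfcrop {grid} {combined}",
    ]


cmds = pdf_montage_commands(["f1.pdf", "f2.pdf", "f3.pdf", "f4.pdf"], "f.pdf")
for cmd in cmds:
    print(cmd)
```

The temporary files can be deleted with os.remove once the last command has finished.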

5.6.4 Running a Program from Python

The script for automating experiments needs to run the model.py program with appropriate command-line options. Python has several tools for executing an arbitrary command in the operating system. Let cmd be a string containing the desired command. In the present case study, cmd could be 'python model.py --I 1 --dt 0.5 0.2'. The following code executes cmd and loads the text output into a string output:

Unsuccessful execution usually makes it meaningless to continue the program, and therefore we abort the program with sys.exit(1). Any argument different from 0 signifies to the computer’s operating system that our program stopped with a failure.
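A minimal sketch of this pattern, using Popen from the standard subprocess module, is given below. The function name `run_command` is an assumption for illustration, and the command that is actually run is a stand-in (the Python interpreter evaluating a small expression) so that the sketch works without model.py being present.

```python
import sys
from subprocess import PIPE, STDOUT, Popen


def run_command(cmd):
    """Run the string cmd in a shell and return its text output."""
    # stderr is merged into stdout so that error messages are captured too
    p = Popen(cmd, shell=True, stdout=PIPE, stderr=STDOUT, text=True)
    output, _ = p.communicate()
    if p.returncode != 0:
        # Unsuccessful execution: report and abort with a nonzero exit code
        print("Command failed:", cmd)
        print(output)
        sys.exit(1)
    return output


# Stand-in command; in the case study cmd would start with 'python model.py'
output = run_command(f'"{sys.executable}" -c "print(6*7)"')
print(output)
```

Merging stderr into stdout is a design choice: when the command fails, the printed output then contains the error message from the failing program.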

Programming tip: use _ for dummy variable

Sometimes we need to unpack tuples or lists in separate variables, but we are not interested in all the variables. One example is

but error is of no interest in the example above. One can then use underscore _ as variable name for the dummy (uninteresting) variable(s):

Here is another example where we iterate over a list of three-tuples, but the interest is limited to the second element in each three-tuple:
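The tip can be illustrated with a small self-contained sketch. The function `compute` and the list `triples` are hypothetical stand-ins invented for this example.

```python
def compute():
    """Hypothetical function returning a result and an uninteresting status."""
    return 3.14, "no errors"


# Both values are unpacked, although status is never used afterwards
value, status = compute()

# The underscore signals that the second value is of no interest
value, _ = compute()

# Iterate over three-tuples, keeping only the second element of each
triples = [(1, "a", 0.1), (2, "b", 0.2), (3, "c", 0.3)]
middle = [second for _, second, _ in triples]
print(middle)  # → ['a', 'b', 'c']
```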

We need to interpret the contents of the string output and store the data in an appropriate data structure for further processing. Since the content is basically a table and will be transformed to a spreadsheet format, we let the columns in the table be represented by lists in the program, and we collect these columns in a dictionary whose keys are natural column names: dt and the three values of θ. The following code translates the output of cmd (output) to such a dictionary of lists (errors):
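One way to do this translation is sketched below. The sample text in `output` is hypothetical: the numbers are made up for illustration, and the exact layout of each line (θ value, \(\Delta t\) value followed by a colon, then the error) is an assumption about what model.py prints.

```python
# Hypothetical sample of text output; numbers and layout are assumptions
output = """\
0.0   1.25:    5.0E-01
0.0   0.75:    2.0E-01
0.5   1.25:    4.0E-02
0.5   0.75:    1.0E-02
1.0   1.25:    3.0E-01
1.0   0.75:    1.5E-01
"""

dt_values = [1.25, 0.75]
# One list per column: the dt values and the errors for each theta value
errors = {"dt": dt_values, 0: [], 0.5: [], 1: []}
for line in output.splitlines():
    words = line.split()
    # Only lines that start with a theta value carry an error measure
    if len(words) == 3 and words[0] in ("0.0", "0.5", "1.0"):
        theta = float(words[0])
        E = float(words[2])
        errors[theta].append(E)

print(errors)
```

The keys 0 and 1 can be looked up with the floats 0.0 and 1.0 because Python treats numerically equal integers and floats as the same dictionary key.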

5.6.5 The Automating Script

We now have all the core elements in place to write the complete script where we run model.py for a set of \(\Delta t\) values (given as positional command-line arguments), make the error plot, write the CSV file, and combine plot files as described above. The complete code is listed below, followed by some explaining comments.
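One building block of such a script, writing the error table to a CSV file, might be sketched as follows. The function name `write_error_table`, the column headings, and the numbers are assumptions for illustration; the dictionary layout (key dt plus the three θ values) follows the description above. An in-memory buffer is used here so the sketch runs without creating files.

```python
import csv
import io


def write_error_table(errors, stream):
    """Write dt and the errors for theta = 0, 0.5, 1 as CSV rows."""
    writer = csv.writer(stream, lineterminator="\n")
    writer.writerow(["dt", "FE", "CN", "BE"])
    for row in zip(errors["dt"], errors[0], errors[0.5], errors[1]):
        writer.writerow(row)


# Illustrative numbers only, not results from model.py
errors = {"dt": [1.25, 0.75], 0: [0.5, 0.2], 0.5: [0.04, 0.01], 1: [0.3, 0.15]}

# For a real file, use open("error.csv", "w", newline="") instead
buffer = io.StringIO()
write_error_table(errors, buffer)
print(buffer.getvalue())
```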

We may comment upon many useful constructs in this script:

- [float(arg) for arg in sys.argv[1:]] builds a list of real numbers from all the command-line arguments.

- ['%s_%s.png' % (method, dt) for dt in dt_values] builds a list of filenames from a list of numbers (dt_values).

- All montage, convert, pdftk, pdfnup, and pdfcrop commands for creating composite figures are stored in a list and later executed in a loop.

- glob('*_*.png') returns a list of the names of all files in the current directory where the filename matches the Unix wildcard notation *_*.png (meaning any text, underscore, any text, and then .png).

- os.remove(filename) removes the file with name filename.

- failure = os.system(cmd) runs an operating system command with simpler syntax than what is required by subprocess (but the output of cmd cannot be captured).
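The constructs in this list can be tried out in isolation with the sketch below. The list `argv` is a stand-in for sys.argv, the files are empty dummies created in a temporary directory, and the command passed to os.system is a harmless placeholder; none of this is taken from exper1.py itself.

```python
import os
import sys
import tempfile
from glob import glob

# Stand-in for sys.argv so the sketch runs without command-line input
argv = ["exper1.py", "0.5", "0.25", "0.1"]
dt_values = [float(arg) for arg in argv[1:]]

# Filenames of the individual plots for one method
method = "BE"
png_files = ["%s_%s.png" % (method, dt) for dt in dt_values]
print(png_files)

with tempfile.TemporaryDirectory() as tmpdir:
    # Create empty dummy files: three individual plots and one composite
    for name in png_files + ["BE.png"]:
        open(os.path.join(tmpdir, name), "w").close()
    # Remove the individual plots, which all contain an underscore
    for filename in glob(os.path.join(tmpdir, "*_*.png")):
        os.remove(filename)
    remaining = sorted(os.listdir(tmpdir))
print(remaining)

# os.system returns 0 on success; the output of the command is not captured
failure = os.system(f'"{sys.executable}" -c "pass"')
print(failure)
```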

5.6.6 Making a Report

The results of running computer experiments are best documented in a short report containing the problem to be solved, key code segments, and the plots from a series of experiments. At least the part of the report containing the plots should be automatically generated by the script that performs the set of experiments, because in the script we know exactly which input data were used to generate a specific plot, thereby ensuring that each figure is connected to the right data. Take a look at a sample report to see what we have in mind.

Word, OpenOffice, GoogleDocs

Microsoft Word, its open source counterparts OpenOffice and LibreOffice, along with GoogleDocs and similar online services are the dominating tools for writing reports today. Nevertheless, scientific reports often need mathematical equations and nicely typeset computer code in monospace font. The support for mathematics and computer code in the mentioned tools is in this author's view not on par with the technologies based on markup languages that are addressed below. Also, with markup languages one has a readable, pure text file as source for the report, and changes in this text can easily be tracked by version control systems like Git. The result is a very strong tool for monitoring "who did what when" with the files, resulting in increased reliability of the writing process. For collaborative writing, the merge functionality in Git leads to safer simultaneous editing than what is offered even by collaborative tools like GoogleDocs.

HTML with MathJax

HTML is the markup language used for web pages. Nicely typeset computer code is straightforward in HTML, and high-quality mathematical typesetting is available using an extension to HTML called MathJax, which allows formulas and equations to be typeset with LaTeX syntax and nicely rendered in web browsers, see Fig. 5.5. A relatively small subset of LaTeX environments for mathematics is supported, but the syntax for formulas is quite rich. Inline formulas look like \( u'=-au \) while equations are surrounded by $$ signs. Inside such signs, one can use \[ u'=-au \] for unnumbered equations, or \begin{equation} and \end{equation} for numbered equations, or \begin{align} and \end{align} for multiple numbered aligned equations. You need to be familiar with mathematical typesetting in LaTeX to write MathJax code.

The file exper1_mathjax.py calls a script exper1.py to perform the numerical experiments and then runs Python statements for creating an HTML file with the source code for the scientific report.
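To give an impression of what such Python statements can look like, here is a minimal sketch that assembles an HTML page with MathJax formulas as a string. It is not the content of exper1_mathjax.py: the function name `html_report`, the page layout, and the CDN address used to load MathJax are assumptions, and the image file BE.png is only referenced, not created.

```python
def html_report(title, body):
    """Return a minimal HTML page that loads MathJax (CDN URL is an assumption)."""
    return f"""<!DOCTYPE html>
<html>
<head>
<meta charset="utf-8">
<title>{title}</title>
<script src="https://cdn.jsdelivr.net/npm/mathjax@3/es5/tex-mml-chtml.js"></script>
</head>
<body>
<h1>{title}</h1>
{body}
</body>
</html>
"""


# Raw string so that the LaTeX backslashes are kept as they are
body = r"""<p>We solve \( u'=-au \), \( u(0)=I \), by the \( \theta \)-rule.</p>
\[ u^{n+1} = \frac{1-(1-\theta)a\Delta t}{1+\theta a\Delta t}u^n \]
<p><img src="BE.png" width="600"></p>"""

html = html_report("Numerical experiments", body)
print(html)
```

Writing the string to a file, for example with open("report.html", "w"), gives a page that can be opened in a browser.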

LaTeX

The de facto language for mathematical typesetting and scientific report writing is LaTeX. A number of very sophisticated packages have been added to the language over a period of three decades, allowing very fine-tuned layout and typesetting. For output in the PDF format, see Fig. 5.6 for an example, LaTeX is the definite choice when it comes to typesetting quality. The LaTeX language used to write the reports typically has a lot of commands involving backslashes and braces, and many claim that LaTeX syntax is not particularly readable. For output on the web via HTML code (i.e., not only showing the PDF in the browser window), LaTeX struggles to deliver high-quality typesetting. Other tools, especially Sphinx, give better results and can also produce nice-looking PDFs. The file exper1_latex.py shows how to generate the LaTeX source from a program.
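As a small illustration of generating LaTeX source from a program, the sketch below turns a dictionary of errors into a tabular environment. It is not taken from exper1_latex.py; the function name `latex_error_table`, the table layout, and the numbers are assumptions made for this example.

```python
def latex_error_table(errors):
    """Return LaTeX source for a table of errors versus dt."""
    lines = [
        r"\begin{tabular}{llll}",
        r"\hline",
        r"$\Delta t$ & $\theta=0$ & $\theta=0.5$ & $\theta=1$ \\",
        r"\hline",
    ]
    rows = zip(errors["dt"], errors[0], errors[0.5], errors[1])
    for dt, e_fe, e_cn, e_be in rows:
        # One table row per dt value, errors in scientific notation
        lines.append(r"%g & %.2E & %.2E & %.2E \\" % (dt, e_fe, e_cn, e_be))
    lines += [r"\hline", r"\end{tabular}"]
    return "\n".join(lines)


# Illustrative numbers only, not results from model.py
errors = {"dt": [1.25, 0.75], 0: [0.5, 0.2], 0.5: [0.04, 0.01], 1: [0.3, 0.15]}
table = latex_error_table(errors)
print(table)
```

Raw strings are used throughout so that the many backslashes in the LaTeX code need no escaping.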

Sphinx

Sphinx is a typesetting language with similarities to HTML and LaTeX, but with much less tagging. It has recently become very popular for software documentation and mathematical reports. Sphinx can utilize LaTeX for mathematical formulas and equations. Unfortunately, the subset of LaTeX mathematics supported is smaller than in full MathJax (in particular, numbering of multiple equations in an align type environment is not supported). The Sphinx syntax is an extension of the reStructuredText language. An attractive feature of Sphinx is its rich support for fancy layout of web pages. In particular, Sphinx can easily be combined with various layout themes that give a certain look and feel to the web site and that offer table of contents, navigation, and search facilities, see Fig. 5.7.

Markdown

A recent, very popular format for easy writing of web pages is Markdown. Text is written very much like one would do in email, using spacing and special characters to naturally format the code instead of heavily tagging the text as in LaTeX and HTML. With the tool Pandoc one can go from Markdown to a variety of formats. HTML is a common output format, but LaTeX, epub, XML, OpenOffice/LibreOffice, MediaWiki, and Microsoft Word are some other possibilities. A Markdown version of our scientific report demo is available as an IPython/Jupyter notebook (described next).

IPython/Jupyter notebooks