Abstract

Our two ears do not function as fixed and independent sound receptors; their functioning is coupled and dynamically adjusted via the contralateral medial olivocochlear efferent reflex (MOCR). The MOCR possibly facilitates speech recognition in noisy environments. Such a role, however, is yet to be demonstrated because selective deactivation of the reflex during natural acoustic listening has not been possible for human subjects up until now. Here, we propose that this and other roles of the MOCR may be elucidated using the unique stimulus controls provided by cochlear implants (CIs). Pairs of sound processors were constructed to mimic or not mimic the effects of the contralateral MOCR with CIs. For the non-mimicking condition (STD strategy), the two processors in a pair functioned independently of each other. When configured to mimic the effects of the MOCR (MOC strategy), however, the two processors communicated with each other and the amount of compression in a given frequency channel of each processor in the pair decreased with increases in the output energy from the contralateral processor. The analysis of output signals from the STD and MOC strategies suggests that in natural binaural listening, the MOCR possibly causes a small reduction of audibility but enhances frequency-specific inter-aural level differences and the segregation of spatially non-overlapping sound sources. The proposed MOC strategy could improve the performance of CI and hearing-aid users.

Keywords

- Olivocochlear reflex

- Speech intelligibility

- Spatial release from masking

- Unmasking

- Dynamic compression

- Auditory implants

- Hearing aids

- Speech encoding

- Sound processor

1 Introduction

The central nervous system can control sound coding in the cochlea via medial olivocochlear (MOC) efferents (Guinan 2006). MOC efferents act upon outer hair cells, inhibiting the mechanical gain in the cochlea for low- and moderate-intensity sounds (Cooper and Guinan 2006), and may be activated involuntarily (i.e., in a reflexive manner) by ipsilateral and/or contralateral sounds (Guinan 2006). This suggests that auditory sensitivity and dynamic range could vary dynamically and adaptively during natural, binaural listening, depending on the state of activation of MOC efferents.

The roles of the MOC reflex (MOCR) in hearing remain controversial (Guinan 2006, 2010). The MOCR may be essential for normal development of cochlear active mechanical processes (Walsh et al. 1998), and/or to minimize deleterious effects of noise exposure on cochlear function (Maison et al. 2013). A more widely accepted role is that the MOCR possibly facilitates understanding speech in noisy environments. This idea is based on the fact that MOC efferent activation restores the dynamic range of auditory nerve fibre responses in noisy backgrounds to values observed in quiet (Fig. 5 in Guinan 2006), something that probably improves the neural coding of speech embedded in noise (Brown et al. 2010; Chintanpalli et al. 2012; Clark et al. 2012). The evidence in support of this unmasking role of the MOCR during natural listening is, however, still indirect (Kim et al. 2006). A direct demonstration would require being able to selectively deactivate the MOCR while keeping afferent auditory nerve fibres functional. To our knowledge, such an experiment has not been possible for human subjects up until now. Here, we argue that the experiment can be done in an approximate manner using cochlear implants (CIs).

Cochlear implants can enable useful hearing for severely to profoundly deaf persons by bypassing the cochlea via direct electrical stimulation of the auditory nerve. The sound processor in a CI (Fig. 1) typically includes an instantaneous back-end compressor in each frequency channel of processing to map the wide dynamic range of sounds in the acoustic environment into the relatively narrow dynamic range of electrically evoked hearing (Wilson et al. 1991). The compressor in a CI serves the same function as the cochlear mechanical compression in a healthy inner ear (Robles and Ruggero 2001). In the fitting of modern CIs, the amount and endpoints of the compression are adjusted for each frequency channel and its associated intra-cochlear electrode(s). Once set, the compression is fixed. Cochlear implant users thus lack MOCR effects. However, these effects could be reinstated, and the role(s) of the MOCR in hearing thereby assessed, by using dynamic rather than fixed compression functions. For example, contralateral MOCR effects could be reinstated by making compression in a given frequency channel vary dynamically depending upon an appropriate control signal from a contralateral processor (Fig. 1). Ipsilateral MOCR effects could be reinstated similarly, except that the control signal would originate from the ipsilateral processor. Using this approach, the CI offers the possibility to mimic (with dynamic compression) or not mimic (with fixed compression) the effects of the MOCR. Such activation or deactivation of MOCR effects is not possible for subjects who have normal hearing.

Fig. 1 Envisaged way of incorporating MOC efferent control into a standard pulsatile CI sound processor. The elements of the standard sound processor are depicted in black (Wilson et al. 1991) and the MOC efferent control in green. See the main text for details

Our long-term goal is to mimic MOCR effects with CIs. This would be useful not only to explore the roles of the MOCR in acoustic hearing but also to restore the potential benefits of the MOCR to CI users. The present study focuses on (1) describing a bilateral CI sound processing strategy inspired by the contralateral MOCR, and (2) demonstrating, using CI processor output-signal simulations, some possible benefits of the contralateral MOCR in acoustic hearing.

2 Methods

2.1 A Bilateral CI Sound Processor Inspired by the Contralateral MOCR

To assess the potential effects of the contralateral MOCR in hearing using CIs, pairs of sound processors (one per ear) were implemented where the amount of compression in a given frequency channel of each processor in the pair was either constant (STD strategy) or varied dynamically in time in a manner inspired by the contralateral MOCR (MOC strategy).

The two processors in each pair were as shown in Fig. 1. They included a high-pass pre-emphasis filter (first-order Butterworth filter with a 3-dB cut-off frequency of 1.2 kHz); a bank of twelve sixth-order Butterworth band-pass filters whose 3-dB cut-off frequencies followed a modified logarithmic distribution between 100 and 8500 Hz; envelope extraction via full-wave rectification (Rect.) and low-pass filtering (LPF) (fourth-order Butterworth low-pass filter with a 3-dB cut-off frequency of 400 Hz); and a back-end compression function.
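The sketch below shows how this front end could be written with SciPy's `butter` and `sosfilt`, assuming a 20-kHz sampling rate. It is not the authors' implementation, and the names `band_edges` and `front_end` are hypothetical. The paper's "modified logarithmic" band-edge distribution is not specified, so plain geometric spacing between 100 and 8500 Hz stands in for it. Calling SciPy's `butter` with order 3 and `btype="bandpass"` yields a sixth-order band-pass filter.

```python
import numpy as np
from scipy.signal import butter, sosfilt

FS = 20_000      # sampling frequency used in the paper (Hz)
N_CHANNELS = 12

def band_edges(n=N_CHANNELS, f_lo=100.0, f_hi=8500.0):
    """HYPOTHETICAL band edges: plain log spacing replaces the unspecified
    'modified logarithmic' distribution."""
    edges = np.geomspace(f_lo, f_hi, n + 1)
    return list(zip(edges[:-1], edges[1:]))

def front_end(x, fs=FS):
    """Return an (n_channels, n_samples) array of channel envelopes."""
    pre = butter(1, 1200.0, btype="highpass", fs=fs, output="sos")  # pre-emphasis
    lpf = butter(4, 400.0, btype="lowpass", fs=fs, output="sos")    # envelope smoother
    x = sosfilt(pre, np.asarray(x, dtype=float))
    envelopes = []
    for lo, hi in band_edges():
        # Order 3 in butter() gives a sixth-order band-pass filter.
        bpf = butter(3, [lo, hi], btype="bandpass", fs=fs, output="sos")
        band = sosfilt(bpf, x)
        # Full-wave rectification followed by low-pass filtering.
        envelopes.append(sosfilt(lpf, np.abs(band)))
    return np.array(envelopes)
```

With this hypothetical spacing, a 1-kHz tone falls in the seventh band (index 6), which spans roughly 920 to 1330 Hz. With the authors' band edges it was channel #6.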

The back-end compression function was as follows (Boyd 2006):

$$y = \frac{\log(1 + c\,x)}{\log(1 + c)} \qquad (1)$$

where x and y are the input and output amplitudes to/from the compressor, respectively, both assumed to lie within the interval [0, 1], and c is a parameter that determines the amount of compression. In the STD strategy, c was constant (c = c_max) and identical at the two ears. In the MOC strategy, by contrast, c varied dynamically in time within the range [c_min, c_max], where c_max and c_min produce the most and least compression, respectively. For the natural MOCR, the more intense the contralateral stimulus, the greater the amount of efferent inhibition and the more linear the cochlear mechanical response (Hood et al. 1996). However, it seems reasonable that the amount of MOCR inhibition depends on the magnitude of the cochlear output rather than on the level of the acoustic stimulus. Inspired by this, we assumed that the instantaneous value of c for any given frequency channel in an MOC processor was inversely related to the output energy, E, from the corresponding channel in the contralateral MOC processor. In other words, the greater the output energy from a given frequency channel in the left-ear processor, the less the compression in the corresponding frequency channel of the right-ear processor, and vice versa (contralateral ‘on-frequency’ inhibition). We assumed a relationship between c and E such that, in the absence of contralateral energy, the two MOC processors in the pair behaved as STD processors (i.e., c = c_max for E = 0).
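Below is a minimal sketch of the compressor and of one possible c(E) rule. It assumes the logarithmic map y = log(1 + c x)/log(1 + c) (Boyd 2006), with c_max = 1000 and c_min = 1 as in the evaluations. The text only requires c to fall as contralateral energy E rises, with c = c_max at E = 0. The log-linear interpolation in `c_from_energy` is therefore a hypothetical choice, not the authors' mapping.

```python
import math

C_MAX = 1000.0  # most compression (value used in the paper's evaluations)
C_MIN = 1.0     # least compression

def compress(x: float, c: float) -> float:
    """Back-end map y = log(1 + c*x) / log(1 + c), with x and y in [0, 1]."""
    return math.log1p(c * x) / math.log1p(c)

def c_from_energy(e: float, c_max: float = C_MAX, c_min: float = C_MIN) -> float:
    """HYPOTHETICAL inverse mapping from contralateral output energy E to c:
    c = c_max at E = 0, falling log-linearly to c_min at E >= 1."""
    e = min(max(e, 0.0), 1.0)
    return c_max * (c_min / c_max) ** e
```

For a weak input, `compress(0.01, 1000.0)` is about 0.35, whereas `compress(0.01, 1.0)` is about 0.014. A large c boosts low-level inputs strongly, while c = 1 leaves the map nearly linear. Any monotonically decreasing c(E) with c(0) = c_max would satisfy the constraints stated above.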

Inspired by the exponential time courses of activation and deactivation of the contralateral MOCR (Backus and Guinan 2006), the instantaneous output energy from the contralateral processor, E, was calculated as the root-mean-square (RMS) output amplitude integrated over a preceding exponentially decaying time window with two time constants.
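The sketch below shows one way to compute a running RMS over an exponentially decaying window with two time constants, using the 4-ms and 29-ms values given in the evaluation section and a 20-kHz sampling rate. The text does not say how the two time constants are combined. Here the window is assumed to be the equal-weight sum of two one-pole exponential windows applied to the squared output. The class name is hypothetical.

```python
import math

class TwoTimeConstantRMS:
    """Running RMS of a processor output over a decaying exponential window.

    ASSUMPTION: the window is the equal-weight sum of two one-pole exponential
    windows (fast and slow time constants); the paper does not specify this.
    """

    def __init__(self, fs=20_000.0, tau_fast=0.004, tau_slow=0.029):
        # One-pole smoothing coefficients: a = exp(-1 / (tau * fs)).
        self.a_fast = math.exp(-1.0 / (tau_fast * fs))
        self.a_slow = math.exp(-1.0 / (tau_slow * fs))
        self.p_fast = 0.0  # running mean square, fast window
        self.p_slow = 0.0  # running mean square, slow window

    def update(self, y: float) -> float:
        """Feed one output sample and return the current energy estimate E."""
        y2 = y * y
        self.p_fast = self.a_fast * self.p_fast + (1.0 - self.a_fast) * y2
        self.p_slow = self.a_slow * self.p_slow + (1.0 - self.a_slow) * y2
        return math.sqrt(0.5 * (self.p_fast + self.p_slow))
```

At 20 kHz the fast coefficient is exp(-1/80), about 0.9876. For a steady output the estimate settles on the output amplitude itself, so E stays within [0, 1] when y does.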

2.2 Evaluation

The compressed output envelopes from the STD and MOC strategies were compared for different signals in different types and amounts of noise or interferers (see below). Stimulus levels were expressed as RMS amplitudes in full-scale decibels (dBFS), i.e., dB re the RMS amplitude of a 1-kHz sinusoid with a peak amplitude of 1 (i.e., dB re 0.71).
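The level convention above can be written as a short helper: dBFS = 20 log10(RMS / (1/√2)). The function names are illustrative. `scale_to_dbfs` shows how a stimulus could be set to a target level such as −20 dBFS.

```python
import math

REF_RMS = 1.0 / math.sqrt(2.0)  # RMS of a sinusoid with peak amplitude 1 (~0.71)

def rms(x):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(v * v for v in x) / len(x))

def dbfs(x):
    """Level in dB re the RMS amplitude of a full-scale sinusoid."""
    return 20.0 * math.log10(rms(x) / REF_RMS)

def scale_to_dbfs(x, level_dbfs):
    """Return a copy of x scaled so that its level equals level_dbfs."""
    gain = REF_RMS * 10.0 ** (level_dbfs / 20.0) / rms(x)
    return [gain * v for v in x]
```

A full-scale 1-kHz sinusoid therefore measures 0 dBFS. A −20 dBFS signal has an RMS amplitude of about 0.071.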

The effects of contralateral inhibition of compression in the MOC strategy were expected to depend on the relative stimulus levels and frequency spectra at each ear. For this reason, and to assess performance in binaural, free-field scenarios, all stimuli were filtered through KEMAR head-related transfer functions (HRTFs) (Gardner and Martin 1995) before being processed through the MOC or STD strategies. HRTFs were applied as 512-point finite impulse response filters. STD and MOC performance was compared assuming sound sources at eye level (0° elevation).
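Applying an HRTF as an FIR filter amounts to convolving the mono stimulus with a left and a right head-related impulse response, as in the minimal sketch below. `toy_hrirs` is a hypothetical placeholder (a unit impulse at the near ear, a delayed and halved impulse at the far ear), not KEMAR data. Real measured 512-tap responses would be substituted, assumed to already match the processor's sampling rate.

```python
import numpy as np

N_TAPS = 512  # FIR length used in the paper

def render_binaural(x, hrir_left, hrir_right):
    """Filter a mono signal through a left/right pair of FIR impulse responses,
    truncating each output to the input length."""
    x = np.asarray(x, dtype=float)
    left = np.convolve(x, hrir_left)[: x.size]
    right = np.convolve(x, hrir_right)[: x.size]
    return left, right

def toy_hrirs(itd_samples=13, far_gain=0.5):
    """HYPOTHETICAL placeholder HRIRs for a source on the left (not KEMAR data)."""
    h_left = np.zeros(N_TAPS)
    h_right = np.zeros(N_TAPS)
    h_left[0] = 1.0                    # near ear: unfiltered
    h_right[itd_samples] = far_gain    # far ear: delayed and attenuated
    return h_left, h_right
```

With these placeholders, a source on the left produces an acoustic ILD of about 6 dB. Measured HRTFs would instead produce frequency-dependent ILDs, which is what the MOC strategy acts upon channel by channel.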

For the present evaluations, c_max was set to 1000, a value typically used in clinical CI sound processors, and c_min to 1. The energy integration time constants were 4 and 29 ms. These parameter values were selected ad hoc to facilitate visualising the effects of contralateral inhibition of compression in the MOC strategy and need not match natural MOCR characteristics or improve CI-user performance (see the Discussion).

All processors were implemented digitally in the time domain in MATLAB™ and evaluated using a sampling frequency of 20 kHz.

3 Results

3.1 The MOC Processor Enhances Within-channel Inter-aural Level Differences

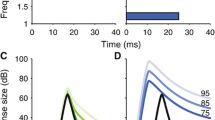

The compressed envelopes at the output from the STD and MOC processors were first compared for a 1-kHz pure tone presented binaurally from a sound source in front of the left ear. The tone had a duration of 50 ms (including 10-ms cosine-squared onset and offset ramps) and its level was −20 dBFS. Figure 2a shows the output envelopes for channel #6. Because the stimulus was binaural and filtered through appropriate HRTFs, there was an acoustic inter-aural level difference (ILD), hence the greater amplitude for the left (blue traces) than for the right ear (red traces). Strikingly, however, the inter-aural output difference (IOD, black arrows in Fig. 2a) was greater for the MOC than for the STD strategy. This is because the gain (or compression) in each of the two MOC processors was inhibited by an amount that increased with the output energy from the corresponding frequency channel in the contralateral processor. Because the stimulus level was higher at the left ear, the output amplitude was also higher for the left- than for the right-ear MOC processor. Hence, the left-ear MOC processor inhibited the right-ear MOC processor more than the other way around.
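The coupling described above can be reproduced qualitatively with the toy simulation below. It models one frequency channel in a pair of processors, fed with steady, hypothetical channel envelopes of 0.1 (left) and 0.05 (right), i.e. a 6-dB acoustic ILD for a source on the left. Several pieces are assumptions rather than the authors' implementation: the maplaw form y = log(1 + c x)/log(1 + c), the log-linear c(E) rule, and the equal weighting of the 4-ms and 29-ms energy windows. In MOC mode, each side's c is set by the other side's running output energy. In STD mode, c stays at c_max.

```python
import math

FS = 20_000.0
C_MAX, C_MIN = 1000.0, 1.0
TAU_FAST, TAU_SLOW = 0.004, 0.029

def compress(x, c):
    # Assumed back-end map: y = log(1 + c x) / log(1 + c)
    return math.log1p(c * x) / math.log1p(c)

def c_from_energy(e):
    # HYPOTHETICAL: c = C_MAX at E = 0, falling log-linearly to C_MIN at E = 1
    e = min(max(e, 0.0), 1.0)
    return C_MAX * (C_MIN / C_MAX) ** e

class Energy:
    """Running RMS with two exponential windows (equal weights are an assumption)."""

    def __init__(self):
        self.a = [math.exp(-1.0 / (tau * FS)) for tau in (TAU_FAST, TAU_SLOW)]
        self.p = [0.0, 0.0]
        self.value = 0.0

    def update(self, y):
        self.p = [a * p + (1.0 - a) * y * y for a, p in zip(self.a, self.p)]
        self.value = math.sqrt(sum(self.p) / 2.0)

def simulate(env_left, env_right, duration=0.5, moc=True):
    """Run one channel pair on steady envelopes; return final (y_left, y_right)."""
    e_left, e_right = Energy(), Energy()
    y_left = y_right = 0.0
    for _ in range(int(duration * FS)):
        # Contralateral control: each side's c depends on the other side's output.
        c_left = c_from_energy(e_right.value) if moc else C_MAX
        c_right = c_from_energy(e_left.value) if moc else C_MAX
        y_left = compress(env_left, c_left)
        y_right = compress(env_right, c_right)
        e_left.update(y_left)
        e_right.update(y_right)
    return y_left, y_right

def iod_db(y_left, y_right):
    """Inter-aural output difference in dB."""
    return 20.0 * math.log10(y_left / y_right)
```

Usage: compare `iod_db(*simulate(0.1, 0.05, moc=False))` with `iod_db(*simulate(0.1, 0.05, moc=True))`. Under these assumptions the MOC IOD comes out several dB larger than the STD IOD, which is about 1.4 dB. The left-ear output is also slightly lower under MOC, matching the pattern marked by the black and green arrows in Fig. 2a.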

Fig. 2 Compressed output envelopes (a) and compression parameter (b) for frequency channel #6 of the STD and MOC processors in response to a 1-kHz pure tone presented binaurally from a sound source in front of the left ear. Red and blue traces depict signals at the left (L) and right (R) ears, respectively. The thick horizontal line near the abscissa depicts the time period when the stimulus was on. Note the overlap between the dashed red and blue traces in the bottom panel

Of course, the gains of the left-ear MOC processor were also inhibited by the right-ear processor to some extent. As a result, the output amplitude in the left-ear processor was slightly lower for the MOC than for the STD strategy (green arrow in Fig. 2a). The amount of this inhibition was, however, smaller than for the right-ear MOC processor because (1) the stimulus level was lower at the right ear, and (2) the gain in the right-ear processor was further inhibited by the higher left-ear processor output. In other words, the asymmetry in the amount of contralateral inhibition of gain (or compression) was enhanced because it depended on the processors’ output rather than on their input amplitudes.

Figure 2b shows that the compression parameter, c, in channel #6 of the MOC processors varied dynamically. Hence, the IOD was relatively small at stimulus onset and increased gradually until it reached a plateau. The figure also illustrates the time course of this effect. Note that at time = 0 s, compression was identical for the right-ear and left-ear MOC processors, and equal to the compression in the STD processors (c = 1000). Then, as the output amplitude from the left-ear processor increased, compression in the right-ear processor decreased (i.e., Eq. (1) became more linear). This decreased the output amplitude from the right-ear processor even further, which in turn reduced the inhibitory effect of the right-ear processor on the left-ear processor.

Unlike the MOC processor, whose output amplitudes and compression varied dynamically depending on the contralateral output energy, compression in the two STD processors was fixed (i.e., c was constant, as depicted by the dashed lines in Fig. 2b). As a result, IOD_STD was a compressed version of the acoustic ILD and smaller than IOD_MOC. In summary, the MOC processor enhanced the within-channel ILDs and presumably conveyed a better-lateralized signal than the STD processor.
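As a rough worked example of why IOD_STD is a compressed version of the acoustic ILD, assume the fixed map y = ln(1 + c x)/ln(1 + c) with c = 1000. Take hypothetical steady channel envelopes x_L = 0.1 and x_R = 0.05, i.e. a 6-dB acoustic ILD. The values are illustrative and are not taken from Fig. 2.

$$y_L = \frac{\ln 101}{\ln 1001} \approx 0.668, \qquad y_R = \frac{\ln 51}{\ln 1001} \approx 0.569$$

$$\mathrm{IOD}_{\mathrm{STD}} = 20\log_{10}\frac{y_L}{y_R} \approx 1.4\ \mathrm{dB} \;<\; \mathrm{ILD} = 20\log_{10}\frac{0.1}{0.05} \approx 6.0\ \mathrm{dB}$$

The fixed compressive map shrinks a 6-dB input difference to about 1.4 dB. The MOC strategy counteracts this because the weaker ear's map is linearized more than the stronger ear's.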

3.2 The MOC Processor Enhances the Spatial Segregation of Simultaneous Sounds

Spatial location is a powerful cue for auditory streaming (Bregman 2001). This section shows that the MOC processor can enhance the spatial segregation of speech sounds in a multi-talker situation.

Figure 3 shows example ‘electrodograms’ (i.e., graphical representations of processors’ output amplitudes as a function of time and frequency channel number) for the STD and MOC strategies. The stimulus was two disyllabic Spanish words uttered simultaneously in the free field by speakers located on either side of the head: the word ‘diga’ was uttered on the left side, while the word ‘sastre’ was uttered on the right side of the head. In this example, the two words were actually uttered by the same female speaker and were presented at the same level (−20 dBFS). Figure 3a and b show approximate spectrograms of the two words, computed as the output from the processors’ filter banks (BPF in Fig. 1). Note that up to the filter-bank stage, processing was identical and linear for the STD and MOC strategies.

Fig. 3 A comparison of left- and right-ear ‘electrodograms’ for the STD and MOC strategies. The stimulus mimicked a free-field multi-talker condition where a speaker in front of the left ear uttered the Spanish word ‘diga’ while a second speaker in front of the right ear uttered the word ‘sastre’. (a, b) Output from the processors’ linear filter banks (BPF in Fig. 1) for each separate word. (c, d) Electrodograms for the left- and right-ear STD processors. (e, f) Electrodograms for the left- and right-ear MOC processors. Colour depicts output amplitudes in dBFS

The overlap between the words’ most significant features was smaller for the MOC (Fig. 3e–f) than for the STD strategy (Fig. 3c–d). For the STD strategy, significant features of both words were present in the outputs of both the left- and right-ear processors. By contrast, one can almost ‘see’ that the word diga, which was uttered on the left side of the head, was encoded mostly in the output of the left MOC processor. Likewise, the word sastre, which was uttered on the right side of the head, was encoded mostly in the right MOC processor output, with little interference between the two words at either ear. This suggests that the MOC processor may enhance the lateralization of speech, and possibly spatial segregation, in situations with multiple spatially non-overlapping speakers. Although not shown, something similar occurred for speech presented in competition with noise. Together, these observations suggest that the MOC processor may (1) enhance speech intelligibility in noise or in ‘cocktail party’ situations, and (2) reduce listening effort.

4 Discussion and Conclusions

Our main motivation was to gain insight into the roles of the MOCR in acoustic hearing by using the unique stimulus controls provided by CIs. As a first step, we have proposed a bilateral CI sound coding strategy (the MOC strategy) inspired by the contralateral MOCR. Compared to using functionally independent sound processors on each ear, our strategy can enhance ILDs on a within-channel basis (Fig. 2a) and the segregation of spatially distant sound sources (Fig. 3). An extended analysis (Lopez-Poveda 2015) revealed that it can also enhance within-channel amplitude modulations (a natural consequence of using less compression overall). It can also improve the speech-to-noise ratio in the speech ear for spatially non-overlapping speech and noise sources. The proposed MOC strategy might thus improve the intelligibility of speech in noise and the spatial segregation of concurrent sound sources, while reducing listening effort. These effects and their possible benefits have been inferred from analyses of MOC-processor output envelopes for a limited number of spatial conditions. Although these results are promising, further research is necessary to confirm that they hold for other spatial conditions and for behavioural hearing tasks.

The proposed MOC strategy is inspired by the contralateral MOCR but is not an accurate model of it. So far, we have disregarded that contralateral MOCR inhibition is probably stronger for apical than for basal cochlear regions (Lilaonitkul and Guinan 2009a; Aguilar et al. 2013) and that there is a half-octave shift in frequency between the inhibited cochlear region and the spectrum of contralateral MOCR elicitor sound (Lilaonitkul and Guinan 2009b). In addition, the present MOC-strategy parameters were selected ad hoc to facilitate visualization of the reported effects rather than to realistically account for the time course (Backus and Guinan 2006) or the magnitude (Cooper and Guinan 2006) of contralateral MOCR gain inhibition. Our next steps include assessing the potential benefits of more accurate MOC-strategy implementations and parameters.

Despite these inaccuracies, it is tempting to conjecture that the natural contralateral MOCR can produce effects and benefits for acoustic hearing similar to those reported here for the MOC processor. Indeed, the results in Fig. 3 are consistent with the previously conjectured role of the contralateral MOCR as a ‘cocktail party’ processor (Kim et al. 2006). The present analysis shows that this role manifests during ‘free-field’ listening and results from a combination of head-shadow frequency-specific ILDs with frequency-specific contralateral MOCR inhibition of cochlear gain. This and the other possible roles of the MOCR described here would complement its more widely accepted role of improving the neural encoding of speech in noisy environments by restoring the effective dynamic range of auditory nerve fibre responses (Guinan 2006). The latter effect is neural and cannot show up in the MOC-processor output signals used in the present analyses.

The potential benefits attributed here to the contralateral MOCR are unavailable to current CI users. This might explain part of their poor performance in understanding speech in challenging environments. The present results suggest that the MOC processor, or a version of it, might improve CI performance. The MOC strategy may also be adapted for use with bilateral hearing aids.

References

Aguilar E, Eustaquio-Martin A, Lopez-Poveda EA (2013) Contralateral efferent reflex effects on threshold and suprathreshold psychoacoustical tuning curves at low and high frequencies. J Assoc Res Otolaryngol 14(3):341–357

Backus BC, Guinan JJ (2006) Time-course of the human medial olivocochlear reflex. J Acoust Soc Am 119(5):2889–2904

Boyd PJ (2006) Effects of programming threshold and maplaw settings on acoustic thresholds and speech discrimination with the MED-EL COMBI 40+ cochlear implant. Ear Hear 27(6):608–618

Bregman AS (2001) Auditory scene analysis: the perceptual organization of sound, 2nd MIT Press edn. MIT Press, Cambridge, MA

Brown GJ, Ferry RT, Meddis R (2010) A computer model of auditory efferent suppression: implications for the recognition of speech in noise. J Acoust Soc Am 127(2):943–954

Chintanpalli A, Jennings SG, Heinz MG, Strickland EA (2012) Modeling the anti-masking effects of the olivocochlear reflex in auditory nerve responses to tones in sustained noise. J Assoc Res Otolaryngol 13(2):219–235

Clark NR, Brown GJ, Jurgens T, Meddis R (2012) A frequency-selective feedback model of auditory efferent suppression and its implications for the recognition of speech in noise. J Acoust Soc Am 132(3):1535–1541

Cooper NP, Guinan JJ (2006) Efferent-mediated control of basilar membrane motion. J Physiol 576(Pt 1):49–54

Gardner WG, Martin KD (1995) HRTF measurements of a KEMAR. J Acoust Soc Am 97(6):3907–3908

Guinan JJ (2006) Olivocochlear efferents: anatomy, physiology, function, and the measurement of efferent effects in humans. Ear Hear 27(6):589–607

Guinan JJ (2010) Cochlear efferent innervation and function. Curr Opin Otolaryngol Head Neck Surg 18(5):447–453

Hood LJ, Berlin CI, Hurley A, Cecola RP, Bell B (1996) Contralateral suppression of transient-evoked otoacoustic emissions in humans: intensity effects. Hear Res 101(1–2):113–118

Kim SH, Frisina RD, Frisina DR (2006) Effects of age on speech understanding in normal hearing listeners: relationship between the auditory efferent system and speech intelligibility in noise. Speech Commun 48(7):855–862

Lilaonitkul W, Guinan JJ (2009a) Human medial olivocochlear reflex: effects as functions of contralateral, ipsilateral, and bilateral elicitor bandwidths. J Assoc Res Otolaryngol 10(3):459–470

Lilaonitkul W, Guinan JJ (2009b) Reflex control of the human inner ear: a half-octave offset in medial efferent feedback that is consistent with an efferent role in the control of masking. J Neurophysiol 101(3):1394–1406

Lopez-Poveda EA (2015) Sound enhancement for cochlear implants. Patent WO2015169649 A1

Maison SF, Usubuchi H, Liberman MC (2013) Efferent feedback minimizes cochlear neuropathy from moderate noise exposure. J Neurosci 33(13):5542–5552

Robles L, Ruggero MA (2001) Mechanics of the mammalian cochlea. Physiol Rev 81(3):1305–1352

Walsh EJ, McGee J, McFadden SL, Liberman MC (1998) Long-term effects of sectioning the olivocochlear bundle in neonatal cats. J Neurosci 18(10):3859–3869

Wilson BS, Finley CC, Lawson DT, Wolford RD, Eddington DK, Rabinowitz WM (1991) Better speech recognition with cochlear implants. Nature 352(6332):236–238

Acknowledgments

We thank Enzo L. Aguilar, Peter T. Johannesen, Peter Nopp, Patricia Pérez-González, and Peter Schleich for comments. This work was supported by the Spanish Ministry of Economy and Competitiveness (grants BFU2009-07909 and BFU2012-39544-C02-01) to EAL-P and by MED-EL GmbH.

Rights and permissions

Open Access This chapter is distributed under the terms of the Creative Commons Attribution-Noncommercial 2.5 License (http://creativecommons.org/licenses/by-nc/2.5/) which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

The images or other third party material in this chapter are included in the work's Creative Commons license, unless indicated otherwise in the credit line; if such material is not included in the work's Creative Commons license and the respective action is not permitted by statutory regulation, users will need to obtain permission from the license holder to duplicate, adapt or reproduce the material.

Copyright information

© 2016 The Author(s)

About this paper

Cite this paper

Lopez-Poveda, E.A., Eustaquio-Martín, A., Stohl, J.S., Wolford, R.D., Schatzer, R., Wilson, B.S. (2016). Roles of the Contralateral Efferent Reflex in Hearing Demonstrated with Cochlear Implants. In: van Dijk, P., Başkent, D., Gaudrain, E., de Kleine, E., Wagner, A., Lanting, C. (eds) Physiology, Psychoacoustics and Cognition in Normal and Impaired Hearing. Advances in Experimental Medicine and Biology, vol 894. Springer, Cham. https://doi.org/10.1007/978-3-319-25474-6_12

DOI: https://doi.org/10.1007/978-3-319-25474-6_12

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-25472-2

Online ISBN: 978-3-319-25474-6

eBook Packages: Biomedical and Life Sciences, Biomedical and Life Sciences (R0)