Abstract

Blood vessel segmentation of retinal images plays an important role in the diagnosis of eye diseases. In this paper, we propose an automatic unsupervised blood vessel segmentation method for retinal images. Firstly, a multi-dimensional feature vector is constructed from the green channel intensity and the vessel-enhanced intensity feature obtained by morphological operations. Secondly, a self-organizing map (SOM), which is an unsupervised neural network, is exploited for pixel clustering. Finally, we classify each neuron in the output layer of the SOM as a vessel neuron or a non-vessel neuron with Otsu’s method, and obtain the final segmentation result. Our proposed method is validated on the publicly available DRIVE database and compared with state-of-the-art algorithms.

1 Introduction

Several pathologies affecting the retinal vascular structures due to diabetic retinopathy can be found in retinal images. Blood vessel segmentation from retinal images plays a crucial role in diagnosing complications due to hypertension, diabetes, arteriosclerosis, cardiovascular disease and stroke [1]. An automatic and accurate blood vessel segmentation system could provide several useful features for the diagnosis of various retinal diseases, and reduce the doctors’ workload. However, retinal images have low contrast, and the image acquisition process introduces large variability [2], both of which degrade automatic blood vessel segmentation results.

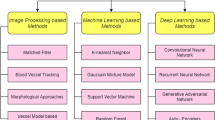

Many studies on retinal vessel segmentation have been reported, including rule-based methods [3], model-based methods [4–7], matched filtering [8–10], and supervised methods [2, 11–14].

In this paper, we propose an automatic unsupervised segmentation method that partitions the pixels of a retinal image into two classes: vessel and non-vessel. To improve the segmentation results, we construct a multi-dimensional feature vector from the green channel intensity and the vessel-enhanced intensity feature obtained by morphological operations. Then, a self-organizing map (SOM), an unsupervised neural network, is exploited as the classifier for pixel clustering. Finally, we classify each neuron in the output layer of the SOM as a vessel neuron or a non-vessel neuron with Otsu’s method, and obtain the final segmentation result.

The rest of this paper is organized as follows. Section 2 presents our proposed vessel segmentation method for retinal images. In Sect. 3, experimental results are presented, followed by the conclusion in Sect. 4.

2 Our Proposed Retinal Vessel Segmentation Method

In this section, a detailed description of our proposed segmentation method is presented. Firstly, a multi-dimensional feature vector is extracted for each pixel. Then, an algorithm based on a neural network is proposed for automatic blood vessel segmentation.

2.1 Feature Extraction

Retinal images often show strong lighting variations, poor contrast and noise [2]. In this paper, we expand each pixel of the retinal image into a multi-dimensional feature vector, characterizing the image data beyond simple pixel intensities.

The Green Channel Intensity Feature.

In the original RGB retinal images, the green channel shows the best vessel-background contrast, while the red and blue channels show low contrast and are noisy [2, 11]. We therefore select the green channel from the RGB retinal image, and the green channel intensity of each pixel is taken as the intensity feature. Figure 1(a) is an original RGB retinal image from the DRIVE database, and its green channel image is shown in Fig. 1(b).

Fig. 1. Illustration of the feature extraction process. (a) Original RGB retinal image. (b) The green channel of the original image. (c) Shade-corrected image. (d) Vessel enhanced image. (e) The segmentation result with our proposed method. (f) The manual segmentation result by the first specialist (Color figure online).

Vessel Enhanced Intensity Feature.

Retinal images often contain background intensity variation because of non-uniform illumination, which degrades the segmentation results. In the present work, the shade-correction method described in [15] is used to remove the background lighting variations. The shade-corrected image of Fig. 1(b) is presented in Fig. 1(c).

Even after background homogenization, the contrast between the blood vessels and the background is generally poor in retinal images. Vessel enhancement is performed by computing the complementary image of the homogenized image and subsequently applying the morphological top-hat transformation with a disc of eight pixels in radius. Figure 1(d) is the vessel-enhanced image of Fig. 1(c).
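The enhancement step can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the complement is taken as the image maximum minus the image (the text does not say how the complement is computed), the top-hat is the white top-hat (image minus its morphological opening), and the function names `disc_offsets`, `white_top_hat` and `enhance_vessels` are hypothetical.

```python
import numpy as np

def disc_offsets(radius):
    """Return the (dy, dx) offsets that make up a disc-shaped structuring element."""
    r = int(radius)
    return [(dy, dx)
            for dy in range(-r, r + 1)
            for dx in range(-r, r + 1)
            if dy * dy + dx * dx <= r * r]

def _morph(img, offsets, reduce_fn, pad_value):
    """Grey-scale erosion (np.minimum, +inf) or dilation (np.maximum, -inf)."""
    r = max(max(abs(dy), abs(dx)) for dy, dx in offsets)
    padded = np.pad(img, r, mode="constant", constant_values=pad_value)
    h, w = img.shape
    out = np.full(img.shape, pad_value, dtype=float)
    for dy, dx in offsets:
        # Shifted view of the image for this offset of the structuring element
        window = padded[r + dy:r + dy + h, r + dx:r + dx + w]
        out = reduce_fn(out, window)
    return out

def white_top_hat(img, radius=8):
    """Image minus its opening: keeps bright structures thinner than the disc."""
    img = img.astype(float)
    offsets = disc_offsets(radius)
    eroded = _morph(img, offsets, np.minimum, np.inf)
    opened = _morph(eroded, offsets, np.maximum, -np.inf)
    return img - opened

def enhance_vessels(homogenized, radius=8):
    """Complement the image so vessels become bright, then apply the top-hat."""
    homogenized = homogenized.astype(float)
    complement = homogenized.max() - homogenized  # assumption: max-based complement
    return white_top_hat(complement, radius)
```

On a flat background crossed by a dark line two pixels wide, `enhance_vessels` returns zero on the background and the line's contrast on the line, because the opening with an eight-pixel disc removes every structure narrower than the disc.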

To generate features that are robust to lighting variation, we combine the vessel-enhanced intensity feature with the green channel intensity to form the feature vector of each pixel.
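A minimal sketch of how the two features might be stacked into per-pixel vectors is given below. The function name `build_feature_vectors`, the optional field-of-view mask, and the rescaling of each feature to [0, 1] are assumptions made for illustration; the text does not state whether the features are normalized.

```python
import numpy as np

def build_feature_vectors(green, enhanced, fov_mask=None):
    """Return an (n_pixels, 2) array: [green intensity, vessel-enhanced intensity]."""
    green = np.asarray(green, dtype=float)
    enhanced = np.asarray(enhanced, dtype=float)
    if fov_mask is None:
        fov_mask = np.ones(green.shape, dtype=bool)
    # Boolean indexing flattens the selected pixels in row-major order
    feats = np.stack([green[fov_mask], enhanced[fov_mask]], axis=1)
    # Assumption: rescale each feature to [0, 1] so neither dominates the distance
    lo = feats.min(axis=0)
    span = feats.max(axis=0) - lo
    span[span == 0] = 1.0  # avoid division by zero for constant features
    return (feats - lo) / span
```

Each row of the result corresponds to one pixel, so the array can be passed directly to a clustering routine.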

2.2 Segmentation System

Self-organizing Map.

At present, neural-network-based methods are often used in retinal image segmentation [11]. As an unsupervised clustering method, Kohonen’s self-organizing map (SOM) [16] is a two-layer feedforward competitive learning neural network that can discover the topological structure hidden in the data and display it in a one- or two-dimensional space. Therefore, we exploit the SOM for blood vessel segmentation.

The SOM consists of an input layer and a single output layer of M neurons, which usually form a two-dimensional array. In the output layer, each neuron i has a d-dimensional weight vector \( w_{i} = [w_{i1}, \ldots, w_{id}] \). At each training step t, the input vector \( x_{p}(t) \) of a pixel p in the retinal image I is randomly chosen. The distance \( d_{x_{p},i}(t) \) between \( x_{p}(t) \) and the weight vector of each neuron i in the output layer is computed. The winning neuron c is the neuron whose weight vector is closest to \( x_{p}(t) \): \( c = \arg\min_{i} d_{x_{p},i}(t), \; i \in \{1, \ldots, M\} \).

The set of neighboring neurons of the winning neuron c is denoted as \( N_{c} \); its radius around the winning neuron decreases with time. \( N_{t}(c,i) \) is the neighborhood kernel function around the winning neuron c at time t. It is a non-increasing function of time t and of the distance of neuron i from the winning neuron c in the 2-D output layer. The kernel function can be taken as a Gaussian function

\( N_{t}(c,i) = \exp\left( -\frac{\| r_{i} - r_{c} \|^{2}}{2 N_{c}^{2}(t)} \right), \)

where \( r_{i} \) is the coordinate of neuron i on the output layer and \( N_{c}(t) \) is the kernel width. The weight-updating rule in the sequential SOM algorithm can be written as \( w_{i}(t+1) = w_{i}(t) + \alpha(t) N_{t}(c,i) (x_{p}(t) - w_{i}(t)) \quad \forall i \in N_{c}, \; p \in I \). The parameter \( \alpha(t) \) is the learning rate of the algorithm. Generally, the learning rate \( \alpha(t) \) and the kernel width \( N_{c}(t) \) are monotonically decreasing functions of time [16].
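The sequential training procedure described above can be sketched as follows. This is an illustrative implementation, not the authors' code: the Euclidean distance, the linear decay schedules for the learning rate and kernel width, the initialization from random samples, and all names and default values (`train_som`, `assign_to_neurons`, `alpha0`, `sigma0`, `n_steps`) are assumptions. For simplicity the update is applied to every neuron with its Gaussian weight, rather than only to neurons inside a hard neighborhood set.

```python
import numpy as np

def train_som(X, rows=4, cols=4, n_steps=5000, alpha0=0.5, sigma0=2.0, seed=0):
    """Train a rows x cols SOM on the feature vectors X of shape (n, d)."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    # Grid coordinates r_i of the output neurons
    grid = np.array([(r, c) for r in range(rows) for c in range(cols)], dtype=float)
    # Assumption: initialize the weights from randomly drawn input vectors
    W = X[rng.integers(0, n, size=rows * cols)].copy()
    for t in range(n_steps):
        frac = t / n_steps
        alpha = alpha0 * (1.0 - frac)                # decreasing learning rate
        sigma = sigma0 * (1.0 - frac) + 0.5 * frac   # kernel width shrinks to 0.5
        x = X[rng.integers(0, n)]                    # randomly chosen pixel vector
        c = np.argmin(np.sum((W - x) ** 2, axis=1))  # winning neuron
        # Gaussian neighborhood kernel around the winner on the 2-D grid
        h = np.exp(-np.sum((grid - grid[c]) ** 2, axis=1) / (2.0 * sigma ** 2))
        W += alpha * h[:, None] * (x - W)            # weight update
    return W

def assign_to_neurons(X, W):
    """Return, for every input vector, the index of its closest neuron."""
    X = np.asarray(X, dtype=float)
    d2 = ((X[:, None, :] - W[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)
```

Because every update moves a weight vector part of the way towards an input vector, the trained weights stay inside the range spanned by the data. After training, `assign_to_neurons` gives the cluster index of each pixel.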

The SOM possesses some very useful properties. First, Kohonen [17] has argued that the density of the weight vectors assigned to an input region approximates the density of the inputs occupying this region. Second, the weight vectors tend to be ordered according to their mutual similarity.

In our work, we exploit the self-organizing map [16] to cluster the pixels in the retinal image. The vessels of the retinal image belong to its detail information. To preserve the thin and small vessels in the segmentation result, we set the size of the output layer to 4 × 4. So, there are multiple neurons in the output layer (vessel neurons or non-vessel neurons) after SOM clustering.

Labeling the Output Neurons’ Class.

After clustering with the SOM algorithm, there are multiple output neurons, including vessel neurons and non-vessel neurons. We use Otsu’s method to estimate the class of each neuron.

Otsu’s method is used to automatically perform clustering-based image thresholding [18]. The algorithm assumes that the image contains two classes of pixels following a bi-modal histogram (foreground pixels and background pixels), and then calculates the optimum threshold separating the two classes so that their combined spread (intra-class variance) is minimal [19].
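The thresholding step can be illustrated with a small NumPy version of Otsu's method, which maximizes the between-class variance (equivalent to minimizing the intra-class variance). How the threshold is applied to the neurons is not spelled out in the text; the helper `label_neurons` below assumes, purely for illustration, that the threshold is computed over the vessel-enhanced component of each neuron's weight vector. Both function names and the `enhanced_index` parameter are hypothetical.

```python
import numpy as np

def otsu_threshold(values, n_bins=256):
    """Return the threshold that maximizes the between-class variance."""
    values = np.asarray(values, dtype=float).ravel()
    hist, edges = np.histogram(values, bins=n_bins)
    centers = 0.5 * (edges[:-1] + edges[1:])
    p = hist / hist.sum()
    w0 = np.cumsum(p)                  # probability of the lower class
    w1 = 1.0 - w0                      # probability of the upper class
    mu_cum = np.cumsum(p * centers)    # cumulative mean
    mu_total = mu_cum[-1]
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu_total * w0 - mu_cum) ** 2 / (w0 * w1)
    sigma_b[~np.isfinite(sigma_b)] = 0.0   # splits that leave one class empty
    k = int(np.argmax(sigma_b))
    return edges[k + 1]                # upper edge of the last lower-class bin

def label_neurons(W, enhanced_index=1):
    """Assumption: a neuron is a vessel neuron if its enhanced-intensity
    weight exceeds the Otsu threshold over all neurons."""
    scores = np.asarray(W, dtype=float)[:, enhanced_index]
    return scores > otsu_threshold(scores)
```

A pixel would then inherit the label of the neuron it is assigned to, which turns the clustering into a binary vessel map.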

Postprocessing.

Finally, visual inspection shows that some small isolated regions are misclassified as blood vessels. If a connected vessel region contains no more than 30 pixels, it is reclassified as non-vessel. The segmentation result of our proposed method is shown in Fig. 1(e).
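The postprocessing rule can be sketched with a breadth-first connected-component search. The use of 8-connectivity and the function name `remove_small_regions` are assumptions; the text only gives the 30-pixel limit.

```python
from collections import deque

import numpy as np

def remove_small_regions(mask, max_size=30):
    """Reclassify connected vessel regions of at most max_size pixels as non-vessel."""
    mask = np.asarray(mask, dtype=bool)
    h, w = mask.shape
    visited = np.zeros_like(mask)
    cleaned = mask.copy()
    # Assumption: 8-connectivity (horizontal, vertical and diagonal neighbours)
    neighbours = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                  if (dy, dx) != (0, 0)]
    for y0, x0 in zip(*np.nonzero(mask)):
        if visited[y0, x0]:
            continue
        # Breadth-first search collects one connected region
        visited[y0, x0] = True
        queue = deque([(y0, x0)])
        region = []
        while queue:
            y, x = queue.popleft()
            region.append((y, x))
            for dy, dx in neighbours:
                ny, nx = y + dy, x + dx
                if (0 <= ny < h and 0 <= nx < w
                        and mask[ny, nx] and not visited[ny, nx]):
                    visited[ny, nx] = True
                    queue.append((ny, nx))
        if len(region) <= max_size:
            for y, x in region:
                cleaned[y, x] = False
    return cleaned
```

Regions larger than the limit are left untouched, so long vessel segments survive while isolated specks are removed.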

3 Experimental Results

3.1 Database and Similarity Indices

The DRIVE database [13] is used in our experiments. This dataset is a public retinal image database, and is widely used by other researchers to test their blood vessel segmentation methods. Moreover, the DRIVE database provides two sets of manual segmentations made by two different observers for performance validation. In our experiments, performance is computed with the segmentation of the first observer as ground truth.

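To quantify the overlap between the segmentation results and the ground truth for vessel pixels and non-vessel pixels, accuracy (Acc) is adopted in our experiments. The accuracy of our segmentation method is defined as \( Acc = (TP + TN)/(TP + TN + FP + FN) \), where TP, TN, FP and FN are the numbers of true positive, true negative, false positive and false negative pixels, with vessel taken as the positive class.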

For visual inspection, Fig. 2 depicts the blood vessel segmentation results on different retinal images from the DRIVE database. Figure 2(a), (d) and (g) are original retinal images with different illumination conditions, and their segmentation results using our proposed method are shown in Fig. 2(b), (e) and (h) respectively. The manual segmentation results by the first specialist are presented in Fig. 2(c), (f) and (i) for visual comparison. It is evident that our method is robust to the low contrast and large variability in the retinal images, and obtains accurate segmentation results.

In addition, we give a quantitative validation of our method on the DRIVE database with the available gold standard images. Since the dark background outside the field-of-view (FOV) of each image is provided, accuracy (Acc) values are computed for each image considering FOV pixels only. The results are listed in Table 1, and the last row of the table shows the average Acc value for 20 images in the database.
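The FOV-restricted accuracy can be computed as in the sketch below, which assumes the usual definition of accuracy as the fraction of correctly classified pixels and treats all three inputs as boolean arrays of the same shape. The function name `accuracy_in_fov` is hypothetical.

```python
import numpy as np

def accuracy_in_fov(pred, truth, fov):
    """Fraction of FOV pixels whose predicted label matches the ground truth."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    fov = np.asarray(fov, dtype=bool)
    tp = np.sum(pred & truth & fov)     # vessel predicted, vessel in ground truth
    tn = np.sum(~pred & ~truth & fov)   # non-vessel predicted, non-vessel in truth
    fp = np.sum(pred & ~truth & fov)
    fn = np.sum(~pred & truth & fov)
    return (tp + tn) / (tp + tn + fp + fn)
```

Pixels outside the FOV mask do not enter any of the four counts, so the large dark border of a fundus image cannot inflate the score.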

3.2 Comparing the Performance of Our Algorithm with Other Methods

In order to compare our approach with other retinal vessel segmentation algorithms, the average Acc value is used as the measure of method performance. We compare our method with the following published methods: Martinez-Perez et al. [3], Jiang and Mojon [4], Chaudhuri et al. [8], Cinsdikici and Aydin [10], and Niemeijer et al. [12]. The comparison results are summarized in Table 2, which indicates that our proposed method outperforms most of the other methods.

4 Conclusions

This study proposes a retinal vessel segmentation method based on a neural network algorithm. To overcome the problem of low contrast and large variability in retinal images, we construct the feature vector from the green channel intensity and the vessel-enhanced intensity feature. Then, we cluster the pixels in the retinal image with the SOM algorithm. Finally, we label each neuron in the output layer of the SOM as a vessel neuron or a non-vessel neuron with Otsu’s method, and obtain the final segmentation results.

Our method is validated on the DRIVE database with the available gold standard images. From the visual inspection and quantitative validation of our method in the experiments, it is evident that our method is robust to the low contrast and large variability in the retinal images, and obtains accurate segmentation results. In addition, we compare our method with state-of-the-art methods, and the experimental results indicate that our method outperforms most of the other methods.

References

1. Kanski, J.J.: Clinical Ophthalmology: A systematic approach. Butterworth-Heinemann, London (1989)

2. Roychowdhury, S., Koozekanani, D.D., Parhi, K.K.: Blood vessel segmentation of fundus images by major vessel extraction and sub-image classification. IEEE J. Biomed. Health Inform. 99 (2014). doi:10.1109/JBHI.2014.2335617

3. Martinez-Perez, M.E., Hughes, A.D., Thom, S.A., Bharath, A.A., Parker, K.H.: Segmentation of blood vessels from red-free and fluorescein retinal images. Med. Image Anal. 11, 47–61 (2007)

4. Jiang, X., Mojon, D.: Adaptive local thresholding by verification-based multithreshold probing with application to vessel detection in retinal images. IEEE Trans. Pattern Anal. Mach. Intell. 25(1), 131–137 (2003)

5. Vermeer, K.A., Vos, F.M., Lemij, H.G., Vossepoel, A.M.: A model based method for retinal blood vessel detection. Comput. Biol. Med. 34, 209–219 (2004)

6. Lam, B., Yan, H.: A novel vessel segmentation algorithm for pathological retina images based on the divergence of vector fields. IEEE Trans. Med. Imaging 27(2), 237–246 (2008)

7. Al-Diri, B., Hunter, A., Steel, D.: An active contour model for segmenting and measuring retinal vessels. IEEE Trans. Med. Imaging 28, 1488–1497 (2009)

8. Chaudhuri, S., Chatterjee, S., Katz, N., Nelson, M., Goldbaum, M.: Detection of blood vessels in retinal images using two-dimensional matched filters. IEEE Trans. Med. Imaging 8(3), 263–269 (1989)

9. Odstrcilik, J., Kolar, R., Budai, A., et al.: Retinal vessel segmentation by improved matched filtering: evaluation on a new high-resolution fundus image database. IET Image Proc. 7, 373–383 (2013)

10. Cinsdikici, M.G., Aydin, D.: Detection of blood vessels in ophthalmoscope images using MF/ant (matched filter/ant colony) algorithm. Comput. Methods Programs Biomed. 96, 85–95 (2009)

11. Marin, D., Aquino, A., Gegundez-Arias, M.E., Bravo, J.M.: A new supervised method for blood vessel segmentation in retinal images by using gray-level and moment invariants-based features. IEEE Trans. Med. Imaging 30, 146–158 (2011)

12. Niemeijer, M., Staal, J., Ginneken, B.V., Loog, M., Abramoff, M.D.: Comparative study of retinal vessel segmentation methods on a new publicly available database. SPIE Med. Imag. 5370, 648–656 (2004)

13. Staal, J., Abramoff, M.D., Niemeijer, M., Viergever, M.A., Ginneken, B.: Ridge-based vessel segmentation in color images of the retina. IEEE Trans. Med. Imaging 23, 501–509 (2004)

14. Kande, G.B., Savithri, T.S., Subbaiah, P.V.: Segmentation of vessels in fundus images using spatially weighted fuzzy C-means clustering algorithm. Int. J. Comput. Sci. Netw. Secur. 7, 102–109 (2007)

15. Niemeijer, M., van Ginneken, B., Staal, J.J., Suttorp-Schulten, M.S.A., Abramoff, M.D.: Automatic detection of red lesions in digital color fundus photographs. IEEE Trans. Med. Imaging 24, 584–592 (2005)

16. Kohonen, T.: The self-organizing maps. Proc. IEEE 78, 1464–1480 (1990)

17. Kohonen, T.: Self-organizing Maps. Springer, New York (1995)

18. Sezgin, M., Sankur, B.: Survey over image thresholding techniques and quantitative performance evaluation. J. Electron. Imaging 13, 146–165 (2004)

19. Otsu, N.: A threshold selection method from gray-level histograms. IEEE Trans. Sys. Man. Cyber. 9, 62–66 (1979)

Acknowledgements

This project is supported in part by the Shenzhen Science and Technology Plan Project (JCYJ20120615101059717), and the Project of Shenzhen Institute of Information Technology (SYS201004).


© 2015 Springer International Publishing Switzerland

Cite this paper

Zhang, J., Cui, Y., Jiang, W., Wang, L. (2015). Blood Vessel Segmentation of Retinal Images Based on Neural Network. In: Zhang, YJ. (eds) Image and Graphics. ICIG 2015. Lecture Notes in Computer Science(), vol 9218. Springer, Cham. https://doi.org/10.1007/978-3-319-21963-9_2

DOI: https://doi.org/10.1007/978-3-319-21963-9_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-21962-2

Online ISBN: 978-3-319-21963-9

eBook Packages: Computer Science (R0)