Abstract

Graph partitioning, or community detection, is an important tool for investigating the structures embedded in real data. The spectral method is a major algorithm for graph partitioning and is also analytically tractable. In order to analyze the performance of the spectral method, we consider a regular graph of two loosely connected clusters, each of which consists of a random graph, i.e., a random graph with a planted partition. Since we focus on the bisection of regular random graphs, whether the unnormalized Laplacian, the normalized Laplacian, or the modularity matrix is used does not make a difference. Using the replica method, which is often used in the field of spin-glass theory, we estimate the so-called detectability threshold; that is, the threshold above which the partition obtained by the method is completely uncorrelated with the planted partition.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

Considerable attention has been paid to the graph clustering or community detection problem and a number of formulations and algorithms have been proposed in the literature [1–5]. Although the meaning of a module in each detection method may not be equivalent, we naturally wish to know in what manner the methods perform typically and the point at which a method fails to detect a certain structure in principle [6–8]. Otherwise, we need to test all the existing methods, and this clearly requires a huge cost and is also redundant. Although most studies of the expected performance were experimental, using benchmark testing [9–11], it is expected that theoretical analysis will give us a deeper insight.

As frequently done in benchmarks, we consider random graphs having a planted block structure. The most common model is the so-called stochastic block model (or the planted partition model) [12]. Although many variants of the stochastic block model have been proposed in the literature [13–16], in the simplest case, the vertices within the same module are connected with a high probability p in , while the vertices in different modules are connected with a low probability p out . When the difference between the probabilities is sufficiently large, \(p_{in} \gg p_{out}\), the graph has a strong block structure and the spectral method detects almost or exactly the same partition as the planted partition. As we increase the probability between the modules p out , the partition obtained by the spectral method tends to very different from the planted one, and finally, they are completely uncorrelated. The point of the transition is called the detectability threshold [17–20]. Since we know that the graph is generated by the stochastic block model, the ultimate limit of this threshold is given by Bayesian inference and it is known that, in the case of the two-block model,

where c in = p in N, c out = p out N and \(\overline{c}\) is the average degree. N is the total number of vertices in the graph. Equation (12.1) indicates that, even when the vertices are more densely connected within a module than between modules, unless the difference is sufficiently large, it is statistically impossible to infer the embedded structure.

It was predicted by Nadakuditi and Newman in [20] that the spectral method with modularity also has the same detectability threshold as Eq. (12.1). However, it was numerically shown in [21] that this applies only to the case where the graph is not sparse. Despite its significance, a precise estimate of the detectability threshold of the spectral method in the sparse case seems to remain missing.

In this article, we derive an estimate of the detectability threshold of the spectral method of the two-block regular random graph. It should be noted that the simplest stochastic block model, which we explained above, has Poisson degree distribution, while we impose a constraint such that the degree does not fluctuate. Therefore, our results do not directly provide an answer to the missing part of the problem. They do, however, provide a fruitful insight into the performance of the spectral method. Moreover, in the present situation, we do not face the second difficulty of the spectral method: the localization of the eigenvectors. Although the localization of eigenvectors is another important factor in the detectability problem, it is outside the scope of this article.

This article is organized as follows. In Sect. 12.2, we briefly introduce spectral partitioning of two-block regular random graphs and mention that the eigenvector corresponding to the second-smallest eigenvalue contains the information of the modules. In Sect. 12.3, we show the average behavior of the second-smallest eigenvalue and the corresponding eigenvector as a function of the parameters in the model. Finally, Sect. 12.4 is devoted to the conclusion.

2 Spectral Partitioning of Regular Random Graphs With Two-Block Structure

The model parameters in the two-block regular random graph are the total number of vertices N, the degree of each vertex c, and the fraction of the edges between modules \(\gamma = l_{\mathrm{int}}/N\). The graph is constructed as follows. We first set module indices on the vertices, each of which has c half edges, or stubs, and randomly connect the vertices in different modules with l int edges. We connect the rest of the edges at random within the same module. We repeat the process so that every edge is connected to a pair of vertices. This random graph is sparse when c = O(1), because the number of edges is of the same order as the number of vertices N. We calculate the degree of correlation between the partition obtained by the spectral method and the planted partition as γ varies.

The choices of the matrix that can be used in the spectral method is wide. The popular matrices are the unnormalized Laplacian L, the normalized Laplacian \(\mathcal{L}\), and the modularity matrix B. For the bisection of regular random graphs, however, all the partitions they yield have shown to be the same [22]. Thus, we analyze the unnormalized Laplacian L, since it is the simplest. The basic procedure of the spectral bisection with the unnormalized Laplacian L is quite simple. We solve for the eigenvector corresponding to the second-smallest eigenvalue of L and classify each vertex according to the sign of the corresponding component of the eigenvector; the vertices with the same sign belong to the same module. Therefore, our goal is to calculate the behavior of the sign of the eigenvector as a function of γ.

3 Detectability Threshold

We use the so-called replica method, which is often used in the field of spin-glass theory in statistical physics. The basic methodology here is parallel to that in [23]. Although the final goal is to solve for the eigenvector corresponding to the second-smallest eigenvalue or the statistics of its components, let us consider estimating the second-smallest eigenvalue, averaged over the realization of the random graphs. We denote by \([\ldots ]_{L}\) the random average over the unnormalized Laplacians of the possible graphs. For this purpose, we introduce the following “Hamiltonian” \(H(\boldsymbol{x}\vert L)\), “partition function” Z(β | L), and “free energy density” f(β | L):

where \(\boldsymbol{x}\) is an N-dimensional vector, \(\boldsymbol{1}\) is a vector in which each element equals one, and T represents the transpose. The delta function \(\delta (\vert \boldsymbol{x}\vert ^{2} - N)\) in (12.3) is to impose the norm constraint. It should be noted that the eigenvector corresponding to the smallest eigenvalue is proportional to \(\boldsymbol{1}\) and this choice is excluded by the constraint \(\delta (\boldsymbol{1}^{\mathrm{T}}\boldsymbol{x})\). In the limit of \(\beta \rightarrow \infty\), in conjunction with the operation of \(\delta (\boldsymbol{1}^{\mathrm{T}}\boldsymbol{x})\), the contribution in the integral of the “partition function” Z(β | L) is dominated by the vector that minimizes the value of the “Hamiltonian” \(H(\boldsymbol{x}\vert L)\), under the constraint of being orthogonal to the eigenvector \(\boldsymbol{1}\) of the smallest eigenvalue. Therefore, the “partition function” is dominated by the eigenvector of the second-smallest eigenvalue and the “free energy density” f(β | L) extracts it, i.e.,

The quantity we need is \(\left [\lambda _{2}\right ]_{L}\), the second-smallest eigenvalue averaged over the unnormalized Laplacians. However, because the average of the logarithm of the “partition function” is difficult to calculate, we recast \(\left [\lambda _{2}\right ]_{L}\) as

The assessment of [Z n(β | L)] L is also difficult for a general real number n. However, when n takes positive integer values, [Z n(β | L)] L can be evaluated as follows. For a positive integer n, [Z n(β | L)] L is expressed as

This means that [Z n(β | L)] L has a meaning of a partition function for a system of n-replicated variables \(\boldsymbol{x}_{1},\boldsymbol{x}_{2},\ldots,\boldsymbol{x}_{n}\) that is subject to no quenched randomness. In addition, the assumption of the graph generation guarantees that the effective Hamiltonian \(\mathcal{H}_{\mathrm{eff}}(\beta,\boldsymbol{x}_{1},\boldsymbol{x}_{2},\ldots,\boldsymbol{x}_{n})\) is of the mean field type. These indicate that \(N^{-1}\ln [Z^{n}(\beta \vert L)]_{L}\) for n = 1, 2, … can be evaluated exactly by the saddle point method with respect to certain macroscopic variables (order parameters) as \(N \rightarrow \infty\). After some calculations, we indeed reach an expression with the saddle point evaluation as

where \(\mathcal{N}_{G}\) is the total number of graph configurations and

In the above equations, four functions \(Q_{r}(\mu _{1}^{(r)},\ldots,\mu _{n}^{(r)})\) and \(\hat{Q}_{r}(\mu _{1}^{(r)},\ldots,\mu _{n}^{(r)})\) (r = 1, 2) play the roles of order parameters.

Unfortunately, this expression cannot be employed directly for the computation of (6) as \(Q_{r}(\mu _{1}^{(r)},\ldots,\mu _{n}^{(r)})\) and \(\hat{Q}_{r}(\mu _{1}^{(r)},\ldots,\mu _{n}^{(r)})\) are defined only for n = 1, 2, …. To overcome this inconvenience, we introduce the following assumption at the dominant saddle point.

In the current system, this restricts the functional forms of \(Q_{r}(\mu _{1}^{(r)},\ldots,\mu _{n}^{(r)})\) and \(\hat{Q}_{r}(\mu _{1}^{(r)},\ldots,\mu _{n}^{(r)})\) as

which yields an expression of \(N^{-1}\ln [Z^{n}(\beta \vert L)]_{L}\) that can be extended for n of a real number. We then substitute that expression into (12.6), which finally provides

where we set

The above procedure is often termed the replica method. Although its mathematical validity of the replica method has not yet been proved, we see that our assessment based on the simplest permutation symmetry for the replica indices offers a fairly accurate prediction for the experimental results below.

Due to the space limitation, we hereafter show only the results, omitting all the details of the calculation (see [24] for complete calculation including detailed derivation of (12.11)). In the limit of large size \(N \rightarrow \infty\), the saddle-point analysis of (12.11) yields the solution

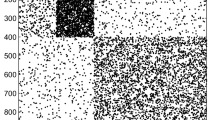

where \(\varGamma = 1 -\gamma /(cp_{1}p_{2})\); we set the size of each module as N 1 = p 1 N and N 2 = p 2 N. The region of constant eigenvalue in (12.14) indicates that the second-smallest eigenvalue is in the spectral band, i.e., the information of the modules is lost there and an undetectable region exists. Therefore, the boundary of (12.14) is the critical point where the phase transition occurs. The plot of the second-smallest eigenvalue \(\left [\lambda _{2}\right ]_{L}\) is shown in Fig. 12.1. Although the dots represent the results of the numerical experiment of a single realization, the results agree with (12.14) quite well.

Second-smallest eigenvalue as a function of γ. The solid line represents the estimate of the average over the realization of the graphs \(\left [\lambda _{2}\right ]_{L}\) and the dots represent the results of the numerical experiment of a single realization with N = 1000 and c = 4. The module sizes are set to be equal, \(p_{1} = p_{2} = 0.5\).

In terms of γ, the boundary of Eq. (12.14) can be recast as

where

Since cp 1 p 2 is the value of γ in a uniform (i.e., one-block) regular random graph, the factor f(c) represents the low value of the threshold as compared to that in the uniform random case.

The distribution of the components of the corresponding eigenvector can also be obtained through this calculation. Although it cannot be written analytically, we can solve for it by iterating a set of integral equations that result from the saddle-point evaluation of the right hand side of (12.6). As shown in Fig. 12.2, the results of our analysis agree with the corresponding numerical experiment excellently. In Fig. 12.2, the dots represent the average over 100 realizations of the random graphs. The ratio of misclassified vertices are shown in Fig. 12.3. It increases polynomially with respect to γ and saturates at the detectability threshold.

Distributions of the elements in the eigenvector corresponding to the second-smallest eigenvector. Each plot shows the distribution of elements in each module, i.e., the distribution on the left corresponds to the module that is supposed to have negative sign elements and the distribution on the right corresponds to the module that is supposed to have positive sign elements, respectively. The dots represent the average results of the numerical experiments, taken over 100 samples. The ratio of the modules are set to be p 1 = 0. 6 and p 2 = 0. 4

It should be note that, even when the number of vertices is infinity, the fraction of misclassified vertices remains finite. The misclassification of the vertices occurs because the planted partition is not the optimum in the sense of the spectral bisection. The spectral method with the unnormalized Laplacian L constitute the continuous relaxation of the discrete minimization problem of the so-called RatioCut. The RatioCut is lower for a partition with a sparse cut, while it penalizes for unbalanced partitions in the sense of the number of the vertices within a module; there may always exist a better cut in the sense of the RatioCut than the planted partition in the graph when γ is large.

Finally, let us compare our estimate with results of studies in the literature. In the following, we focus on the case of equal size modules, i.e., \(p_{1} = p_{2} = 0.5\). Let the total degree within a module be K in and let the total degree from one module to the others be K out. Since we have \(K = cN = 2(K_{\mathrm{in}} + K_{\mathrm{out}})\) and K out = γ N, Eq. (12.15) reads

In addition, in the limit \(N \rightarrow \infty\), we have

Therefore, (12.17) can be recast as

This condition converges to the ultimate detectability threshold (12.1) in the dense limit \(c \rightarrow \infty\). There exists, however, a huge gap between (12.1) and (12.20) when the degree c is small; considering the fact that the upper bound of the parameter c in − c out is 2c, this gap is not negligible at all. Thus, the implication of our results is that we cannot expect the spectral threshold to detect modules all the way down to the ultimate detectability threshold, even in regular random graphs, where the localization of the eigenvectors is absent.

4 Conclusion

In summary, we derived an estimate of the detectability threshold (12.20) of the spectral method of the two-block regular random graphs. The threshold we obtained agrees with the results of the numerical experiment excellently and is expected to be asymptotically exact in the limit \(N \rightarrow \infty\). Our results indicate that the spectral method cannot detect modules all the way down to the ultimate detectability threshold (12.1), even when the degree is fixed to a constant. Since the threshold (12.20) converges to (12.1) as the degree c increases, this gap becomes negligible in the case where the degree is sufficiently large and this supports the results obtained by Nadakuditi and Newman [20].

A method for achieving the ultimate detectability threshold with the spectral method has already been proposed by Krzakala et al. [25]. They proposed using a matrix called the non-backtracking matrix, which avoids the elements of eigenvectors to be localized at a few vertices. A question about this formalism is: to what extent is the gap in the detectability in fact closed by the non-backtracking matrix as compared to the Laplacians? Our estimate gives a clue to the answer to this question. In order to gain further insight, we need to analyze the case of graphs with degree fluctuation. In that case, the methods using the unnormalized Laplacian and the normalized Laplacian will no longer be equivalent. Moreover, it is important to verify the effect of the localization of eigenvectors on the detectability. These problems remain as future work.

References

Fortunato S (2010) Phys Rep 486:75

Luxburg U (2007) Stat Comput 17(4):395

Newman MEJ (2006) Phys Rev E 74(3):036104

Rosvall M, Bergstrom C (2008) Proc Natl Acad Sci USA 105(4):1118

Palla G, Derényi I, Farkas I, Vicsek T (2005) Nature 435(7043):814

Fortunato S, Barthélemy M (2007) Proc Natl Acad Sci USA 104(1):36

Good BH, de Montjoye YA, Clauset A (2010) Phys Rev E 81(4):046106

Kawamoto T, Rosvall M (2015) Phys Rev E 91:012809

Leskovec J, Lang KJ, Anirban D, Mahoney MW (2009) Internet Math 6(1):29

Lancichinetti A, Fortunato S (2009) Phys Rev E 80(5), 056117

Aldecoa R, Marín I (2013) Sci Rep 3:2216

Holland PW, Laskey KB, Leinhardt S (1983) Soc Networks 5(2):109

Airoldi EM, Blei DM, Fienberg SE, Xing EP (2008) J Mach Learn Res 9:1981

Karrer B, Newman MEJ (2011) Phys Rev E 83(1):016107

Ball B, Karrer B, Newman MEJ (2011) Phys Rev E 84(3):036103

Peixoto TP (2012) Phys Rev E 85(5):056122

Reichardt J, Leone M (2008) Phys Rev Lett 101(7):078701

Decelle A, Krzakala F, Moore C, Zdeborová L (2011) Phys Rev Lett 107(6):065701

Decelle A, Krzakala F, Moore C, Zdeborová L (2011) Phys Rev E 84(6):066106

Nadakuditi RR, Newman MEJ (2012) Phys Rev Lett 108(18):188701

Zhang P, Krzakala F, Reichardt J, Zdeborová L (2012) J Stat Mech 2012(12):P12021

Newman MEJ (2013) Phys Rev E 88(4):042822

Kabashima Y, Takahashi H (2012) J Phys A 45(32):325001

Kawamoto T, Kabashima Y (2015) Limitations in the spectral method for graph partitioning: Detectability threshold and localization of eigenvectors. Phys Rev E 91(062):803 doi:10.1103/PhysRevE.91.062803

Krzakala F, Moore C, Mossel E, Neeman J, Sly A, Zdeborová L, Zhang P (2013) Proc Natl Acad Sci USA 110(52):20935

Acknowledgements

This work was supported by JSPS KAKENHI Nos. 26011023 (TK), 25120013 (YK), and the JSPS Core-to-Core Program “Non-equilibrium dynamics of soft matter and information.”

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Open Access This book is distributed under the terms of the Creative Commons Attribution Non-commercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Copyright information

© 2015 The Author(s)

About this paper

Cite this paper

Kawamoto, T., Kabashima, Y. (2015). Detectability Threshold of the Spectral Method for Graph Partitioning. In: Takayasu, H., Ito, N., Noda, I., Takayasu, M. (eds) Proceedings of the International Conference on Social Modeling and Simulation, plus Econophysics Colloquium 2014. Springer Proceedings in Complexity. Springer, Cham. https://doi.org/10.1007/978-3-319-20591-5_12

Download citation

DOI: https://doi.org/10.1007/978-3-319-20591-5_12

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-20590-8

Online ISBN: 978-3-319-20591-5

eBook Packages: Physics and AstronomyPhysics and Astronomy (R0)