Abstract

We suggest practical and simple methods for Monte Carlo estimation of the (small) probabilities of large losses using importance sampling. We argue that a simple optimal choice of importance sampling distribution is a member of the generalized extreme value distribution and, unlike the common alternatives such as Esscher transform, this family achieves bounded relative error in the tail. Examples of simulating rare event probabilities and conditional tail expectations are given and very large efficiency gains are achieved.


1 Introduction

Suppose \(\mathbf{Y}=(Y_{1},Y_{2}, \ldots ,Y_{m})\) is a vector of independent random variables, each with cumulative distribution function (cdf) \(F\) and probability density function (pdf) \(f\) with respect to Lebesgue measure. Suppose we wish to estimate the probability of a large loss, \(p_{t} =P(L(\mathbf {Y})>t)\), where \(L(\mathbf {Y})\) is the loss determined by the realization \(\mathbf {Y}\) (usually assumed to be monotonic in its components) and \(t\) is some predetermined threshold. There are many different loss functions \(L(\mathbf {Y})\) used in rare event simulation, including barrier hitting probabilities of sums or averages of independent random variables, or of processes such as an Ornstein–Uhlenbeck or Feller process. The methods discussed here are designed for problems in which a small number of continuous factors are the primary contributors to large losses. We wish to use importance sampling (IS) (see [3], Sect. 4.6 or [12], p. 183): generate \(n\) independent replications \(\mathbf{Y}_{1}, \ldots ,\mathbf{Y}_{n}\) from an alternative distribution, say one with pdf \(f_\mathrm{IS}(\mathbf {y})\), and then estimate \(p_{t}\) by the sample average of the values \(I(L(\mathbf{Y}_{i})>t)f(\mathbf {Y}_{i})/f_\mathrm{IS}(\mathbf {Y}_{i}),\) where \(I(*)\) denotes the indicator function. We use

$$ \hat{p}_{t}=\frac{1}{n}\sum _{i=1}^{n}I(L(\mathbf{Y}_{i})>t)\frac{f(\mathbf{Y}_{i})}{f_\mathrm{IS}(\mathbf{Y}_{i})},\quad \mathbf{Y}_{i}\sim f_\mathrm{IS}. \qquad (1) $$

If we denote by \(E_{\text {IS}}\) the expected value under the IS distribution \(f_{\text {IS}},\) and by \(E\) the expected value under the original distribution \(f,\) then

$$ E_{\text {IS}}\left[ I(L(\mathbf{Y})>t)\frac{f(\mathbf{Y})}{f_{\text {IS}}(\mathbf{Y})}\right] =\int I(L(\mathbf{y})>t)\frac{f(\mathbf{y})}{f_{\text {IS}}(\mathbf{y})}f_{\text {IS}}(\mathbf{y})\,\mathrm{d}\mathbf{y}=E\left[ I(L(\mathbf{Y})>t)\right] =p_{t}, $$

confirming that this is an unbiased estimator. There is a great deal of literature on such problems when the event of interest is “rare”, i.e., when \(p_{t}\) is very small, and many different approaches depending on the underlying loss function and distribution. We do not attempt a review of the literature in the limited space available. Excellent reviews of the methods and applications are given in Chap. 6 of [1] and Chap. 10 of [9]. Highly efficient methods have been developed for tail estimation in very simple problems, such as when the loss function consists of a sum of independent identically distributed increments. In this paper, we will provide practical tools for simulation of such problems in many examples of common interest. For rare events, the variance or standard error is less suitable as a performance measure than a version scaled by the mean because in estimating very small probabilities such as \(0.0001,\) it is not the absolute size of the error that matters but its size relative to the true value.

Definition 1

The relative error (RE) of the importance sample estimator is the ratio of the estimator’s standard deviation to its mean.

Simulation is made more difficult for rare events because crude Monte Carlo fails. As a simple illustration, suppose we wish to estimate a very small probability \(p_{t}.\) To this end, we generate \(n\) values of \(L(\mathbf {Y} _{i})\) and estimate this probability with \(\hat{p}_{t}=X/n\) where \(X\) is the number of times that \(L(\mathbf {Y}_{i})>t\) and \(X\) has a Binomial\((n,p_{t})\) distribution. In this case, the relative error is

$$ {\mathrm{RE}}=\frac{\sqrt{np_{t}(1-p_{t})}}{np_{t}}=n^{-1/2}\sqrt{\frac{1-p_{t}}{p_{t}}}. $$

For rare events, \(p_{t}\) is small and the relative error is very large. If we wish a normal-based 95 % confidence interval for \(p_{t}\) of the form \((\hat{p} _{t}-0.1\hat{p}_{t},\hat{p}_{t}+0.1\hat{p}_{t}),\) for example, we are essentially stipulating a certain relative error \(({\mathrm{RE}}=0.1/1.96\simeq 0.05102)\) whatever the value of \(p_{t}.\) In order to achieve a reasonable bound on the relative error, we would need sample sizes of the order of \(p_{t} ^{-1}\), i.e., larger and larger sample sizes for rarer events.
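The growth of the required sample size can be made concrete with a few lines of arithmetic. The sketch below is illustrative only: the function names `crude_relative_error` and `crude_sample_size` are ours, and the formula used is the binomial relative error \(n^{-1/2}\sqrt{(1-p_{t})/p_{t}}\) of the crude estimator \(X/n\).

```python
import math


def crude_relative_error(p_t: float, n: int) -> float:
    """Relative error of the crude estimator X/n with X ~ Binomial(n, p_t)."""
    return math.sqrt((1.0 - p_t) / (n * p_t))


def crude_sample_size(p_t: float, target_re: float) -> int:
    """Smallest n for which the crude relative error is at most target_re."""
    return math.ceil((1.0 - p_t) / (p_t * target_re ** 2))


if __name__ == "__main__":
    target = 0.1 / 1.96  # 10 % half-width at the 95 % level, about 0.05102
    for p in (1e-2, 1e-4, 1e-6):
        # The required n grows like 1 / p_t as the event becomes rarer.
        print(p, crude_sample_size(p, target))
```

For \(p_{t}=10^{-4}\) this gives a crude sample size of roughly 3.8 million, which illustrates why crude Monte Carlo is impractical for rarer events.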

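Returning to the estimator (1), if we take its variance, we obtain

$$ \mathrm{var}(\hat{p}_{t})=\frac{1}{n}\left\{ E_{\text {IS}}\left[ I(L(\mathbf{Y})>t)\frac{f^{2}(\mathbf{Y})}{f_{\text {IS}}^{2}(\mathbf{Y})}\right] -p_{t}^{2}\right\} =\frac{1}{n}\left\{ E\left[ I(L(\mathbf{Y})>t)\frac{f(\mathbf{Y})}{f_{\text {IS}}(\mathbf{Y})}\right] -p_{t}^{2}\right\} , $$

so that the relative error is

$$ {\mathrm{RE}}=n^{-1/2}\sqrt{\frac{E\left[ I(L(\mathbf{Y})>t)f(\mathbf{Y})/f_{\text {IS}}(\mathbf{Y})\right] }{p_{t}^{2}}-1}. $$

The relative error decreases linearly in \(n^{-1/2}\) but it is the factor

$$ \frac{E\left[ I(L(\mathbf{Y})>t)f(\mathbf{Y})/f_{\text {IS}}(\mathbf{Y})\right] }{p_{t}^{2}}, $$

highly sensitive to \(t\) when \(p_{t}\) is small, that determines whether an IS distribution is good or bad for a given problem. There is a large literature regarding the use of importance sampling for such problems, much recommending the use of the exponential tilt or Esscher transform. The suggestion is to adopt an IS distribution of the form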

and then tune the parameter \(\theta \) so that the IS estimator is as efficient as possible (see, for example [1, 7, 15]). Chapter 10 of [9] provides a detailed discussion of methods and applications as well as a discussion of the boundedness of relative error. McLeish [11] demonstrates that the IS distribution (2) is suboptimal and, unlike the alternatives explored there, does not typically achieve bounded relative error. We argue for the use of the generalized extreme value (GEV) family of distributions for such problems. A loose paraphrase of the theme of the current paper is “all you really need is GEV” (see Note 1). Indeed in Appendix A, we prove a result (Proposition 1) which shows that, under some conditions, there is always an importance sampling estimator whose relative error is bounded in the tail, obtained by generating the distance along one principal axis from an extreme value distribution while leaving the other coordinates unchanged in distribution. We now consider some one-dimensional problems.

2 The One-Dimensional Case

Consider estimating \(P\left( L(Y)>t\right) \) where the value of \(t\) is large, the random variable \(Y\) is one-dimensional and \(L(y)\) is monotonically increasing. We would like to use an importance sample distribution for which, by adjusting the values of the parameters, we can have small relative error for any large \(t\). We seek a parametric family \(\{f_{\theta };\theta \in \varTheta \}\) of importance sample estimators which have bounded relative error as follows:

Definition 2

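Suppose \(\mathcal{H}\) is the class of non-negative integrable functions \(\{I(L(Y)>t);\ t>T\}\). We say a parametric family \(\{f_{\theta };\theta \in \varTheta \}\) has bounded relative error for the class \(\mathcal{H}\) if

$$ \sup _{t>T}\,\inf _{\theta \in \varTheta }{\mathrm{RE}}_{\theta }(t)<\infty , $$

where \({\mathrm{RE}}_{\theta }(t)\) denotes the relative error of the IS estimator of \(P(L(Y)>t)\) based on a single observation from \(f_{\theta }\).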

A parametric family has bounded relative error for estimating functions in a class \(\mathcal{H}\) if, for each \(t>T,\) there exists a parameter value \(\theta \) which provides bounded relative error. Indeed, a bound on the relative error of approximately \(0.738n^{-1/2}\) can be achieved by importance sampling if we know the tail behavior of the distribution. There are very few circumstances under which the exponential tilt, i.e., the family of continuous densities of the form

$$ f_{\theta }(y)=\text {constant}\times e^{\theta y}f(y), $$

provides bounded relative error. The literature recommending the exponential tilt usually rests on demonstrating logarithmic efficiency (see [1], p. 159 or Sect. 10.1 of [9]), a substantially weaker condition that does not guarantee a bound on the relative error. Although we may design a simulation optimally for a specific criterion such as achieving small relative error in the estimation of \(P(L(Y)>t),\) we are more often interested in the nature of the whole tail beyond \(t\). For example, we may be interested in \(E\left[ \left( L(Y)-t\right) I(L(Y)>t)\right] =\int _{t}^{\infty }P(L(Y)>s)\mathrm{{d}}{}s\) and this would require that a single simulation be efficient for estimating all of the probabilities \(P(L(Y)>s),s>t.\) The property of bounded relative error provides some assurance that the family used adapts to the whole tail, rather than a single quantile.

For simplicity, we assume for the present that \(Y\) is univariate, has a continuous distribution, and \(L(Y)\) is a strictly increasing function of \(Y.\) Then

$$ p_{t}=P(L(Y)>t)=P(Y>L^{-1}(t))=1-F(L^{-1}(t)), $$

and we can achieve bounded relative error if we use an importance sample distribution drawn from the family

$$ f_{\theta }(y)=\text {constant}\times e^{\theta T(y)}f(y), \qquad (3) $$

where \(T(y)\) behaves, for large values of \(y\), roughly like a linear function of \(\bar{F}(y)=1-F(y).\) If \(T(y)\sim -\bar{F}(y)\) as \(y\rightarrow \infty ,\) the optimal parameter \(\theta \) is \(\theta _{t}=\frac{k_{2}}{p_{t}}\simeq \frac{1.5936}{p_{t}}\) (see Note 2) and the limit of the relative error of the IS estimator is \(\simeq 0.738n^{-1/2}\) (see Appendix A). The simplest and most tractable family of distributions with appropriate tail behavior is the GEV distribution associated with the density \(f(y)\).

We now provide an intuitive argument in favor of the use of the GEV family of IS distributions. For a more rigorous justification, see Appendix A.

The choice \(T(y)=-\bar{F}(y)\) provides asymptotic bounded relative error [11]. Consider a family of cumulative distribution functions

$$ F_{\theta }(y)=\frac{e^{-\theta \bar{F}(y)}-e^{-\theta }}{1-e^{-\theta }}. $$

The corresponding probability density function \(F_{\theta }^{\prime }(y)=\theta e^{-\theta \bar{F}(y)}f(y)/(1-e^{-\theta })\) is of the form (3). As \(\theta \rightarrow \infty \) and \(y\rightarrow \infty \) in such a way that \(\theta \bar{F}(y)\) converges to a nonzero constant, then

$$ \left( F(y)\right) ^{\theta }=\left( 1-\bar{F}(y)\right) ^{\theta }\sim e^{-\theta \bar{F}(y)} $$

so that

$$ F_{\theta }(y)\sim \left( F(y)\right) ^{\theta }. $$

Therefore, \(F_{\theta }(y)\) is asymptotically equivalent to the distribution of the maximum of \(\theta \) observations from the original target distribution \(F.\) This, suitably normalized, converges to a member of the GEV family of distributions. We also show in [11] that the optimal parameter is asymptotic to \(\theta ={k_{2}}/{p_{t}}\) as \(p_{t}\rightarrow 0\). Consequently,

$$ P_{\theta _{t}}(L(Y)\le t)\simeq e^{-\theta _{t}p_{t}}=e^{-k_{2}}\simeq 0.203. $$

Thus, when we use the corresponding extreme value importance sample distribution, about 20.3 % of the observations will fall below \(t\) and the other 79.7 % will fall above, and this can be used to identify one of the parameters of the IS distribution. Of course, only the observations greater than \(t\) are directly relevant to estimating quantities like \(P(L>t)\). This leads to the option of conditioning on the event \(L>t\) and using the generalized Pareto family (see Appendix B).
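The limiting relative error and the 20.3 % figure can be checked by simulation. The sketch below is illustrative and rests on an assumption: it uses the family \(F_{\theta }(y)=(e^{-\theta \bar{F}(y)}-e^{-\theta })/(1-e^{-\theta })\), whose density is proportional to \(e^{-\theta \bar{F}(y)}f(y)\), i.e., the form with \(T(y)=-\bar{F}(y)\). Because the indicator and the weight depend on \(Y\) only through \(V=\bar{F}(Y)\), the code samples \(V\) directly and never needs \(F\) itself. The function name `tail_family_is` is ours.

```python
import math
import random

K2 = 1.5936  # positive root of exp(-k) + k/2 = 1 (see Note 2)


def tail_family_is(p_t: float, n: int, seed: int = 1):
    """Estimate p_t = P(Y > t) by sampling V = 1 - F(Y) under F_theta.

    Returns (estimate, relative error times sqrt(n), fraction of draws below t).
    """
    rng = random.Random(seed)
    theta = K2 / p_t
    e_mt = math.exp(-theta)            # underflows harmlessly to 0.0 for large theta
    total = total_sq = 0.0
    below = 0
    for _ in range(n):
        u = 1.0 - rng.random()         # uniform on (0, 1], avoids log(0)
        x = e_mt + u * (1.0 - e_mt)    # x = exp(-theta * V) when F_theta(Y) = u
        v = -math.log(x) / theta       # V = 1 - F(Y)
        if v < p_t:                    # equivalent to Y > t
            w = (1.0 - e_mt) / (theta * x)   # likelihood ratio f / f_theta
            total += w
            total_sq += w * w
        else:
            below += 1
    mean = total / n
    sd = math.sqrt(total_sq / n - mean * mean)
    return mean, sd / mean, below / n


if __name__ == "__main__":
    print(tail_family_is(1e-4, 200_000))
```

Under these assumptions the second returned value should be close to 0.738 and the third close to 0.203, whatever the underlying continuous distribution \(F\).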

The three distinct classes of extreme value distributions and some of their basic properties are outlined in Appendix B. All have simple closed forms for their pdf, cdf, and inverse cdf and can be easily and efficiently generated. In addition to a shape parameter \(\xi ,\) they have location and scale parameters \(d,c\) so the cdf is \(H_{\xi }(\frac{x-d}{c})\), where \(H_{\xi }(x)\) is the cdf for unit scale and 0 location parameter. We say that a given continuous cdf \(F\) falls in the maximum domain of attraction of an extreme value cdf \(H_{\xi }(x)\) if there exist sequences \(c_{n}>0,d_{n}\) such that

$$ F^{n}(c_{n}x+d_{n})\rightarrow H_{\xi }(x)\quad \text {as } n\rightarrow \infty . $$

We will choose an extreme value distribution with parameters that approximate the distribution of the maximum of \(\theta _{t}={k_{2}}/{p_{t}}\) random variables from the original density \(f(y)\). Further properties of the extreme value distributions and details on the choice of parameters are given in Appendix B. Proposition 1 in Appendix A shows that if \(F\) is in the domain of attraction of \(H_{0},\) then \(H_{0}\) provides a family of IS distributions with bounded relative error. It is also unique in the sense that any other such IS distribution has tails essentially equivalent to those of \(H_{0}\). A similar result can be proved for \(\xi \ne 0.\) The superiority of the extreme value distributions for importance sampling stems from the bound on relative error, but equally from their ease of simulation, the simple closed form for the pdf and cdf, and the maximum stability property, which says that the distribution of the maximum of i.i.d. random variables drawn from this distribution is a member of the same family.

We get a better sense of the extent of the variance reduction using IS if we compare sample sizes required to achieve a certain relative error. If we use crude random sampling in order to estimate \(p_{t}\) using a sample size \(n_\mathrm{cr},\) the relative error of the crude estimator is

$$ {\mathrm{RE}}(\text {crude})=n_\mathrm{cr}^{-1/2}\sqrt{\frac{1-p_{t}}{p_{t}}}\simeq (n_\mathrm{cr}p_{t})^{-1/2}, $$

whereas if we use a GEV importance sample of size \(n_{\text {IS}},\) the relative error is \({\mathrm{RE}}(IS)\simeq 0.738n_{\text {IS}}^{-1/2}\). Equating these, the ratio of the sample sizes required for a fixed relative error is \(\frac{n_\mathrm{cr}}{n_{\text {IS}}}\simeq \frac{1.84}{p_{t}}\) for \(p_{t}\) small. Indeed, if \(p_{t}=10^{-4},\) an importance sample estimator based on a sample size \(5\times 10^{6}\) is quite feasible on a small laptop computer, but is roughly equivalent to a crude Monte Carlo estimator of sample size \(9.2\times 10^{10},\) possible only on the largest computers.

3 Examples

3.1 Example 1: Simulation Estimators of Quantiles and TailVar for the Normal Distribution

Rarely when we wish to simulate an expected value in the region of the space \([L(Y)>t]\) is this the only quantity of interest. More commonly, we are interested in various functions sensitive to the tail of the distribution. This argues for using an IS estimator with bounded relative error rather than the more common practice of simply conditioning on the region of interest. For a simple example, suppose \(Y\) follows a \(N(0,1)\) distribution and we wish to estimate a property of the tail defined by \(Y>t,\) where \(t\) is large. Suppose we simulate from the conditional distribution given \(Y>t,\) that is from the pdf

$$ \frac{\phi (y)}{1-\varPhi (t)},\quad y>t, \qquad (6) $$

where \(\phi \) and \(\varPhi \) are the standard normal pdf and cdf respectively. If we wish also to estimate \(P(Y>t+s|Y>t)\sim e^{-st-\frac{s^{2}}{2}}\) for \(s>0\) fixed, sampling from this pdf is highly inefficient, since for \(n\) simulations from pdf (6), the RE for estimating \(P(Y>t+s|Y>t)\) is approximately \(n^{-1/2}\sqrt{e^{st+\frac{s^{2}}{2}}-1}\) and this grows extremely rapidly in both \(t\) and \(s.\) We would need a sample size of around \(n=10^{4}e^{st+\frac{s^{2}}{2}}\) (or about 60 trillion if \(s=3\) and \(t=6\)) from the IS density (6) to achieve a RE of 1 %.
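The sample size quoted above follows directly from the relative error formula. As a quick arithmetic check (the function name `conditional_sampling_size` is ours, and the asymptotic form \(e^{-st-s^{2}/2}\) of the conditional probability is taken from the text):

```python
import math


def conditional_sampling_size(s: float, t: float, target_re: float = 0.01) -> float:
    """Approximate n needed when sampling from the conditional pdf given Y > t.

    Uses RE ~ n**-0.5 * sqrt(1/q - 1) with q = exp(-s*t - s*s/2), the
    asymptotic value of P(Y > t + s | Y > t) for a standard normal Y.
    """
    q = math.exp(-s * t - 0.5 * s * s)
    return (1.0 / q - 1.0) / target_re ** 2


if __name__ == "__main__":
    print(f"{conditional_sampling_size(3.0, 6.0):.3g}")  # about 6e13
```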

Crude Monte Carlo fails here but use of IS with the usual standard exponential tilt or Esscher transform with \(T(y)=y\), though very much better, still fails to deliver bounded relative error. In [11], it is shown that the relative error is \(\sim \) \(\left( \frac{\pi }{2}\right) ^{1/4}\sqrt{t/n}\rightarrow \infty \) as \(p_{t} \rightarrow 0.\) While the IS distribution obtained by the exponential tilt is a very large improvement over crude Monte Carlo and logarithmically efficient, it still results in an unbounded relative error as \(p_{t}\rightarrow 0\).

The Normal distribution is in the maximum domain of attraction of the Gumbel distribution (\(\xi =0\)) so our arguments suggest that we should use as IS distribution

$$ H_{0}\left( \frac{y-d}{c}\right) =\exp \left( -e^{-(y-d)/c}\right) \qquad (7) $$

with parameters \(c,d\) selected to match the distribution function \(\left( \varPhi (y)\right) ^{k_{2}/p_{t}}\) (see Appendix A). Using this Gumbel distribution as an IS distribution permits a very substantial increase in efficiency.

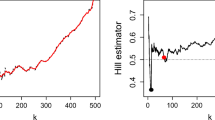

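To show how effective this is as an IS distribution, we simulate from the Gumbel distribution with cdf (7). The weights attached to a given IS simulated value of \(Y\) are the ratio of the two pdfs, the standard normal and the Gumbel, or

$$ w(Y)=\frac{\phi (Y)}{\frac{1}{c}h_{0}(\frac{Y-d}{c})}=c\,\phi (Y)\exp \left( \frac{Y-d}{c}+e^{-(Y-d)/c}\right) . \qquad (8) $$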

For example with \(t=4.7534,\) \(p_{t}=10^{-6}\) and Gumbel parameters \(c=0.20\) and \(d=4.85\), the relative error in \(10^{6}\) simulations was \(0.729n^{-1/2} .\) We can compare this with the exponential tilt, equivalent to using the normal\((t,1)\) distribution as an IS distribution, whose relative error is \(2.32n^{-1/2}\), or with crude Monte Carlo, with relative error around \(10^{3}n^{-1/2}.\)
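The comparison above can be reproduced approximately with a short simulation. The sketch below is illustrative, not the authors' code: the helper names are ours, the Gumbel values are drawn by inverting the cdf \(\exp (-e^{-(y-d)/c})\), and the weights are computed on the log scale for numerical stability.

```python
import math
import random

HALF_LOG_2PI = 0.5 * math.log(2.0 * math.pi)


def gumbel_draw(rng, c, d):
    """Inverse-cdf draw from the Gumbel cdf exp(-exp(-(y - d) / c))."""
    u = rng.random()
    while u == 0.0:                      # avoid log(0)
        u = rng.random()
    return d - c * math.log(-math.log(u))


def gumbel_log_weight(y, c, d):
    """log of phi(y) / [(1/c) h_0((y - d)/c)] for a N(0,1) target."""
    z = (y - d) / c
    return (-0.5 * y * y - HALF_LOG_2PI) + math.log(c) + z + math.exp(-z)


def tilt_log_weight(y, t):
    """log of phi(y) / phi(y - t), the weight for the N(t, 1) proposal."""
    return -t * y + 0.5 * t * t


def is_tail_estimate(draw, log_weight, t, n, seed=1):
    """Return (estimate of P(Y > t), relative error times sqrt(n))."""
    rng = random.Random(seed)
    total = total_sq = 0.0
    for _ in range(n):
        y = draw(rng)
        if y > t:
            w = math.exp(log_weight(y))
            total += w
            total_sq += w * w
    mean = total / n
    return mean, math.sqrt(total_sq / n - mean * mean) / mean


if __name__ == "__main__":
    t, c, d, n = 4.7534, 0.20, 4.85, 200_000
    print("Gumbel IS:", is_tail_estimate(lambda r: gumbel_draw(r, c, d),
                                         lambda y: gumbel_log_weight(y, c, d), t, n))
    print("N(t,1) IS:", is_tail_estimate(lambda r: r.gauss(t, 1.0),
                                         lambda y: tilt_log_weight(y, t), t, n))
```

Both estimators target \(p_{t}=10^{-6}\); the second value returned in each case is the coefficient multiplying \(n^{-1/2}\) in the relative error.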

Suppose that our interest is in estimating the conditional tail expectation or TVaR\(_{\alpha }\) based on simulations. The TVaR\(_{\alpha }\) is defined as \(E(Y|Y>t)=\frac{E[YI(Y>t)]}{P(Y>t)}\), where \(t=\mathrm{VaR}_{\alpha }\). We designed the GEV parameters for simulating the numerator, \(E[YI(Y>t)]\). If we are interested in estimating TVaR\(_{0.0001}\) by simulation, with \(t=\mathrm{VaR}_{0.0001}=3.719,\) the true value is

$$ E(Y|Y>t)=\frac{\phi (t)}{1-\varPhi (t)}\simeq 3.96. $$

We will generate random variables \(Y_{i}\) using the Gumbel (\(0.282,3.228\) ) distribution and then attach weights (8) to these observations. The estimate of TVaR\(_{\alpha }\) is then the average of the values \(w(Y_{i})\times Y_{i}\) averaged only over those values that are greater than \(t\).

The Gumbel distribution is supported on the whole real line, while the region of interest is only that portion of the space greater than \(t\), so one might generate \(Y_{i}\) from the Gumbel distribution conditional on the event \(Y_{i}>t\) rather than unconditionally. The probability \(P(Y_{i}>t)\) where \(Y_{i}\) is distributed according to the Gumbel(\(c_{\theta },d_{\theta })\) distribution is \(1-\exp (-e^{-\left( t-d_{\theta }\right) /c_{\theta }})\) and this is typically around 0.80, indicating that about 20 % of the time the Gumbel random variables fall in the “irrelevant” portion of the sample space \(Y<t.\) Since it is easy to generate from the conditional Gumbel distribution \(Y|Y>t\), this was also done for a further improvement in efficiency. This conditional distribution converges to the generalized Pareto family of distributions (see Theorem 2 of Appendix B). In this case, since \(\xi =0,\) \(P(Y-u\le z|Y>u)\rightarrow 1-e^{-z}\) as \(u\rightarrow \infty \). Therefore, in order to approximately generate from the conditional distribution of the tail of the Gumbel, we generate the excess from an exponential distribution.

Table 1 provides a summary of the results of these simulations. Several simulation methods for estimating TVaR\(_{\alpha }\) = \(E(Y|Y>t)=\frac{E[YI(Y>t)]}{p_{t}}\) with \(p_{t}=P(Y>t)\) as well as estimates of \(p_{t}\) are compared. Since TVaR is a ratio, we consider estimates of the denominator and numerator, i.e., \(p_{t}\) and \(E[YI(Y>t)]\) separately. The underlying distribution of \(Y\) is normal in all cases. The methods investigated are:

1. Crude Simulation (Crude): Generate independently \(Y_{i},\) \(i=1, \ldots ,n\) from the original (normal) distribution. Estimate \(p_{t}\) using \(\frac{1}{n}\sum _{i=1}^{n}I(Y_{i}>t)\) and estimate \(E\left[ YI(Y>t)\right] \) using \(\frac{1}{n}\sum _{i=1}^{n}Y_{i}I(Y_{i}>t).\)

2. Exponential Tilt or Shifted Normal IS (SN): Generate independently \(Y_{i},\) \(i=1, \ldots ,n\) from the \(N(t,1)\) distribution. Estimate \(p_{t}\) using \(\frac{1}{n}\sum _{i=1}^{n}w_{i}I(Y_{i}>t)\) and estimate \(E\left[ YI(Y>t)\right] \) using \(\frac{1}{n}\sum _{i=1}^{n}w_{i} Y_{i}I(Y_{i}>t)\) where \(w_{i}\) are the weights, obtained as the likelihood ratio

   $$ w_{i}=w(Y_{i})=\frac{\phi (Y_{i})}{\phi (Y_{i}-t)}. $$

   Since the exponential tilt, applied to a Normal distribution, results in another Normal distribution with a shifted mean and the same variance, this is an application of the exponential tilt.

3. Extreme Value IS (EVIS): Generate independently \(Y_{i}\), \(i=1, \ldots ,n\) from the Gumbel\((c,d)\) distribution. Estimate \(p_{t}\) using \(\frac{1}{n}\sum _{i=1}^{n}w_{i}I(Y_{i}>t)\) and estimate \(E\left[ YI(Y>t)\right] \) using \(\frac{1}{n}\sum _{i=1}^{n}w_{i}Y_{i}I(Y_{i}>t)\) where \(w_{i}\) are the weights, obtained as the likelihood ratio

   $$ w_{i}=w(Y_{i})=\frac{\phi (Y_{i})}{\frac{1}{c}h_{0}(\frac{Y_{i}-d}{c})} $$

   where \(\frac{1}{c}h_{0}(\frac{Y_{i}-d}{c})\) is the corresponding Gumbel pdf.

4. Conditional Extreme Value IS (Cond EVIS): Generate independently \(Y_{i},\) \(i=1, \ldots ,n\) from the Gumbel\((c,d)\) distribution conditioned on \(Y>t\). Estimate \(p_{t}\) using \(\frac{1}{n}\sum _{i=1} ^{n}w_{i}I(Y_{i}>t)=\frac{1}{n}\sum _{i=1}^{n}w_{i}\) and estimate \(E\left[ YI(Y>t)\right] \) using \(\frac{1}{n}\sum _{i=1}^{n}w_{i}Y_{i}I(Y_{i} >t)=\frac{1}{n}\sum _{i=1}^{n}w_{i}Y_{i}\) where \(w_{i}\) are the weights, obtained as the likelihood ratio

   $$ w_{i}=w(Y_{i})=\frac{\phi (Y_{i})}{g(Y_{i})} $$

   and where

   $$ g(s)=\frac{\frac{1}{c}h_{0}(\frac{s-d}{c})}{1-H_{0}(\frac{t-d}{c})}, \quad \text {for}~ s>t $$

   is the corresponding conditional Gumbel pdf.

Conditional Normal IS Since we are interested in the tail behavior of the random variable \(Y\) given \(Y>t\) it would be natural to simulate from the conditional distribution \(Y|Y>t.\) Unfortunately, this is an infeasible method because it requires advance knowledge of \(p_{s}=P(Y>s)\) for all \(s\ge t\).
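As an illustration of method 4, the following sketch estimates \(p_{t}\) and TVaR for a standard normal \(Y\) by sampling from the Gumbel\((c,d)\) distribution conditioned on \(Y>t\). It is a minimal sketch, not the authors' code: the function names are ours, the conditional draw uses the inverse of the Gumbel cdf restricted to \((H_{0}(\frac{t-d}{c}),1)\), and the TVaR estimate is formed as the ratio of the two weighted averages.

```python
import math
import random


def phi(y: float) -> float:
    """Standard normal pdf."""
    return math.exp(-0.5 * y * y) / math.sqrt(2.0 * math.pi)


def cond_evis(t: float, c: float, d: float, n: int, seed: int = 1):
    """Conditional extreme value IS for the tail Y > t of a N(0,1) variable.

    Returns (p_hat, tvar_hat, relative error of p_hat times sqrt(n)).
    """
    rng = random.Random(seed)
    h_t = math.exp(-math.exp(-(t - d) / c))      # Gumbel cdf at t
    sum_w = sum_w2 = sum_wy = 0.0
    for _ in range(n):
        v = h_t + rng.random() * (1.0 - h_t)
        while not h_t < v < 1.0:                 # guard the end points
            v = h_t + rng.random() * (1.0 - h_t)
        y = d - c * math.log(-math.log(v))       # conditional Gumbel draw, y > t
        z = (y - d) / c
        g = math.exp(-z - math.exp(-z)) / (c * (1.0 - h_t))  # conditional pdf g(y)
        w = phi(y) / g
        sum_w += w
        sum_w2 += w * w
        sum_wy += w * y
    p_hat = sum_w / n
    re_root_n = math.sqrt(sum_w2 / n - p_hat * p_hat) / p_hat
    return p_hat, sum_wy / sum_w, re_root_n


if __name__ == "__main__":
    print(cond_evis(3.719, 0.243, 3.84, 100_000))
```

With \(t=3.719\), \(c=0.243\) and \(d=3.84\), the estimate of \(p_{t}\) should be close to \(10^{-4}\) and the TVaR estimate close to \(\phi (t)/(1-\varPhi (t))\).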

We indicate in Table 1 the relative error of these various methods in the case \(p_{t}=0.0001,t=3.719.\) The corresponding parameters of the Gumbel distribution that we used were \(c=0.243,\) \(\ d=3.84\) but the results are quite robust to the values of these parameters. Notice that the efficiency gain of the conditional extreme value simulation, as measured by the ratio of variances, is around \(\left( \frac{100}{0.47}\right) ^{2}\simeq 45,270\) relative to a crude Monte Carlo and around \(\left( \frac{2.05}{0.47}\right) ^{2}\simeq 19\) relative to the exponential tilt.

3.2 Example 2: Simulating a Portfolio Credit Risk Model

We provide a simulation of a credit risk model using importance sampling. The model, once the industry standard, is the normal copula model for portfolio credit risk, introduced in Morgan’s CreditMetrics system (see Note 3 and [5]). Under this model, the \(k\)th firm defaults with probability \(p_{k}(Z),\) and this probability depends on \(m\) unobserved factors that comprise the vector \(Z.\) Losses on a portfolio then take the form \(L=\sum _{k=1}^{\nu }c_{k}Y_{k}\) where \(Y_{k}\), the default indicator, is a Bernoulli random variable with \(P(Y_{k}=1)=p_{k}(Z)\) (denoted \(Y_{k}\sim \text {Bern}(p_{k}(Z))\)), \(p_{k}\) are functions of common factors \(Z,\) and \(c_{k}\) is the portfolio exposure to the \(k\)th default. Suppose we wish to estimate \(P(L>t).\)

3.2.1 One-Factor Case

In the simplest one-factor case, \(p_{k}\) are functions \(p_{k}(Z)=\varPhi \left( \frac{a_{k}Z~+~\varPhi ^{-1}(\rho _{k})}{\sqrt{1-a_{k}^{2}}}\right) \) of a common standard normal \(N(0,1)\) random variable \(Z\), the scalars \(a_{k}\) are the factor loadings or weights and \(\rho _{k}\) represents the marginal probability of default (it is easy to see that \(E[p_{k}(Z)]=\rho _{k}).\)
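The identity \(E[p_{k}(Z)]=\rho _{k}\) can be confirmed numerically. The sketch below integrates \(p_{k}(z)\phi (z)\) with the trapezoid rule, using `statistics.NormalDist` from the Python standard library; the function names `default_prob` and `marginal_default_prob` and the integration settings are our own choices.

```python
import math
from statistics import NormalDist

STD = NormalDist()  # standard normal N(0, 1)


def default_prob(z: float, a: float, rho: float) -> float:
    """Conditional default probability p_k(z) in the one-factor model."""
    return STD.cdf((a * z + STD.inv_cdf(rho)) / math.sqrt(1.0 - a * a))


def marginal_default_prob(a: float, rho: float,
                          half_width: float = 10.0, steps: int = 4000) -> float:
    """Trapezoid-rule approximation of E[p_k(Z)] for Z ~ N(0, 1)."""
    h = 2.0 * half_width / steps
    total = 0.0
    for i in range(steps + 1):
        z = -half_width + i * h
        end_weight = 0.5 if i in (0, steps) else 1.0
        total += end_weight * default_prob(z, a, rho) * STD.pdf(z)
    return total * h


if __name__ == "__main__":
    print(marginal_default_prob(0.3, 0.01), marginal_default_prob(0.9, 0.02))
```

The identity holds because \(p_{k}(Z)=P(\sqrt{1-a_{k}^{2}}\,\varepsilon -a_{k}Z\le \varPhi ^{-1}(\rho _{k})\,|\,Z)\) for an independent standard normal \(\varepsilon \), and \(\sqrt{1-a_{k}^{2}}\,\varepsilon -a_{k}Z\) is itself standard normal.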

If we wish to estimate a probability \(P(L>t)\) which is small, there are two parallel opportunities for importance sampling, both investigated by [4].

For example, for each given \(Z,\) we might replace the Bernoulli distribution of \(Y_{k}\) with a distribution having higher probabilities of default, i.e., replace \(p_{k}(Z)\) by \(q_{k}\) where \(q_{k}\ge p_{k}(Z).\) The choice of \(q_{k}\) is motivated by an exponential tilt as is argued in [4]. Conditional on the factor \(Z,\) the tilted Bernoulli random variables \(Y_{k}\) are such that \(E(\sum _{k=1}^{\nu }c_{k}Y_{k}|Z)=L.\) We do not require the use of importance sampling in this second stage of the simulation, so having used an IS distribution for \(Z,\) we generate Bernoulli(\(p_{k}(Z)\)) random variables \(Y_{k}\). There are two similar alternatives in the first stage: generate \(Z\) from the Gumbel distribution, or generate \(\tilde{L}=\sum _{k=1}^{\nu }c_{k}p_{k}(Z)\), a proxy for the loss, from the Gumbel distribution. These two alternatives give similar results since the Gumbel is the extreme value distribution corresponding to both \(Z\) and \(\tilde{L}\), and \(\tilde{L}\) is a nondecreasing function of \(Z\). In Table 2, we give the results corresponding to the second of these alternatives, simulating \(\tilde{L}=\sum _{k=1}^{\nu }c_{k}p_{k}(Z)\) and then solving for the factor \(Z.\) Unlike [4], where a shifted normal IS distribution for \(Z\) is used, we use the Gumbel distribution for \(\tilde{L}\), motivated by the arguments of Sect. 2. Extreme value importance sampling provides a very substantial variance reduction over crude simulation of course, but also over importance sampling using the exponential tilt. We determine appropriate parameters for the Gumbel extreme value distribution by quantile matching and then draw \(Z\) from a Gumbel(\(c,d)\) distribution. We use the parameters taken from the numerical example in [4], i.e., \(\nu =1{,}000\) obligors, exposures \(c_{k}\) of 1, 4, 9, 16, and 25 with 200 at each level of exposure, and marginal default probabilities \(\rho _{k}=0.01(1+\sin (16\pi \frac{k}{\nu }))\) so that they range from 0 to 2 %. The factor loadings \(a_{k}\) were generated as uniform random variables on the interval (0,1). In summary, the main difference with [4] is our use of the Gumbel distribution for simulating \(\tilde{L}\) rather than the shifted normal and the lack of a tilt for \(Y_{k}.\)

The resulting relative errors estimated from \(30{,}000\) simulations are shown in Table 2, and evidently there is a significant variance reduction achieved by the choice of the Gumbel distribution. For example, when the threshold \(t\) was chosen to be 2,000, there was a decrease in the variance by a factor of approximately \(\left( \frac{2.33}{0.69}\right) ^{2}\) or about \(11\) and a much more substantial decrease over crude by a factor of around \(\left( \frac{15.34}{0.69}\right) ^{2}\) or about \(494\).
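A rough sketch of a one-factor simulation of this kind is given below. It is not the authors' implementation and makes several assumptions that should be read as ours: it uses the first alternative described above (drawing \(Z\) itself from a Gumbel\((c,d)\) distribution), and it chooses \(c\) and \(d\) by a simple local matching at the point \(z_{t}\) solving \(\sum _{k}c_{k}p_{k}(z_{t})=t\), so that the Gumbel cdf at \(z_{t}\) equals \(e^{-k_{2}}\) and the scale equals the reciprocal of the normal hazard rate at \(z_{t}\). The paper's own parameter choice (Appendix B) may differ. All function names are hypothetical.

```python
import math
import random
from statistics import NormalDist

STD = NormalDist()
K2 = 1.5936  # constant from Sect. 2


def build_portfolio(nu=1000, seed=0):
    """Tuples (exposure, a_k / s_k, inv_cdf(rho_k) / s_k), s_k = sqrt(1 - a_k^2)."""
    rng = random.Random(seed)
    port = []
    for k in range(1, nu + 1):
        c_k = math.ceil(5 * k / nu) ** 2                 # 1, 4, 9, 16, 25
        rho_k = 0.01 * (1.0 + math.sin(16.0 * math.pi * k / nu))
        a_k = rng.random()                               # loading, U(0, 1)
        s_k = math.sqrt(1.0 - a_k * a_k)
        port.append((c_k, a_k / s_k, STD.inv_cdf(rho_k) / s_k))
    return port


def proxy_loss(port, z):
    """Proxy loss: sum of c_k * p_k(z), nondecreasing in z."""
    return sum(c * STD.cdf(b * z + h) for c, b, h in port)


def solve_factor(port, t, lo=-10.0, hi=10.0):
    """Bisection for z_t with proxy_loss(port, z_t) = t."""
    if not proxy_loss(port, lo) < t < proxy_loss(port, hi):
        raise ValueError("threshold t is not bracketed")
    for _ in range(80):
        mid = 0.5 * (lo + hi)
        if proxy_loss(port, mid) < t:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def gumbel_is(port, t, n, seed=1):
    """IS estimate of P(L > t) and its standard error, with Z ~ Gumbel(c, d)."""
    rng = random.Random(seed)
    z_t = solve_factor(port, t)
    c = (1.0 - STD.cdf(z_t)) / STD.pdf(z_t)   # assumed scale: 1 / normal hazard at z_t
    d = z_t + c * math.log(K2)                # assumed location: Gumbel cdf(z_t) = exp(-K2)
    total = total_sq = 0.0
    for _ in range(n):
        u = rng.random()
        while u == 0.0:                       # avoid log(0)
            u = rng.random()
        z = d - c * math.log(-math.log(u))    # Gumbel draw for the factor
        # Second stage: untilted Bernoulli defaults given the factor.
        loss = sum(ck for ck, b, h in port if rng.random() < STD.cdf(b * z + h))
        if loss > t:
            x = (z - d) / c
            log_w = (-0.5 * z * z - 0.5 * math.log(2.0 * math.pi)
                     + math.log(c) + x + math.exp(-x))   # log of phi(z) / Gumbel pdf
            w = math.exp(log_w)
            total += w
            total_sq += w * w
    mean = total / n
    var = max(total_sq / n - mean * mean, 0.0)
    return mean, math.sqrt(var / n)


if __name__ == "__main__":
    print(gumbel_is(build_portfolio(), 2000.0, 2000))
```

The estimator is unbiased for any positive \(c\) and any \(d\), since the weight is the exact likelihood ratio for \(Z\); the choice of parameters only affects the variance.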

3.2.2 Multifactor Case

In the multifactor case, the event that an obligor \(k\) fails is determined by a Bernoulli random variable \(Y_{k}\sim \text {Bern}(p_{k})\). The loss function \(L=\sum _{k=1}^{\nu }c_{k}Y_{k}\) is then a linear function of \(Y_{k}\) and corresponding exposures \(c_{k}\). We wish to estimate the probability of a large loss: \(P(L>t)\). The values \(p_{k}\) are functions

$$ p_{k}(\mathbf{{Z}})=\varPhi \left( \frac{\mathbf{{a}}_{k}\mathbf{{Z}}+\varPhi ^{-1}(\rho _{k})}{\sqrt{1-\mathbf{{a}}_{k}\mathbf{{a}}_{k}^{T}}}\right) $$

of a number of factors \(\mathbf{{Z}}^{T}=(Z_{1}, \ldots ,Z_{m})\) where the individual factors \(Z_{i},i=1, \ldots ,m\) are independent standard normal random variables. Here \(\rho _{k}\) is the marginal probability that obligor \(k\) fails, i.e., \(P(Y_{k}=1)=E[p_{k}(\mathbf{{Z}})]=\rho _{k}\) since \(\mathbf{{a}}_{k}\mathbf{{Z}}\) is \(N(0,\mathbf{{a}}_{k}\mathbf{{a}}_{k}^{T})\) (see [4], p. 1644) and the row vectors \(\mathbf{{a}}_{k}\) are factor loadings which relate the factors to the specific obligors.

Simulation Model. We begin with a brief description of the model simulated in [4, p. 1650], which is the basis of our comparison. We assume \(\nu =1{,}000\) obligors, the marginal probabilities of default \(\rho _{k}=0.01(1+\sin (16\pi \frac{k}{\nu }))\), and the exposures \(c_{k}=[\frac{5k}{\nu }]^{2},k=1, \ldots ,\nu \). The components of the factor loading vector \(\mathbf {a}_{k}\) were generated as independent \(\text {U}(0,\frac{1}{\sqrt{m}})\), where \(m\) is the number of factors. The simulation described in [4] is a two-stage Monte Carlo IS method. The first stage simulates the latent factors in \(\mathbf {Z}\) by IS, where the importance distributions are independent univariate Normal distributions with means obtained by equating modes and with variances unchanged and equal to 1. Specifically they choose the normal IS distribution having the same mode as

$$ P(L>t|\mathbf{{Z}}=\mathbf{{z}})\,e^{-\mathbf{{z}}^{T}\mathbf{{z}}/2} $$

because this is approximately proportional to \(P(\mathbf{{Z}}=\mathbf{{z}}|L>t)\), the ideal IS distribution. In other words, the IS distribution for \(Z_{i}\) is \(N(\mu _{i},1),i=1, \ldots ,m\) where the vector of values of \(\mu _{i}\) is given by (see [4], Eq. (20))

with (see [4], p. 1648)

Conditional on the values of the latent factors \(\mathbf {Z}\), the second stage of the algorithm in [4] is to twist the Bernoulli random variables \(Y_{k}\) using modified Bernoulli distributions, i.e., with a suitable change in the values of the probabilities \(P(Y_{k}=1),k=1, \ldots ,\nu \). Our comparisons below are with this two-stage form of the IS algorithm.

Our simulation for this portfolio credit risk problem is a one-stage IS simulation algorithm. If there are \(m\) factors in the portfolio credit risk model, we simulate \(m-1\) of them, \({\tilde{Z}}_{i}\), from univariate normal \(N(\mu _{i},1),i=1, \ldots ,m-1\) distributions with a different mean, as in [4], but then we simulate an approximation to the total loss, \({\tilde{L}}\), from a Gumbel distribution, and finally set \({\tilde{Z}}_{m}\) equal to the value implied by \({\tilde{Z}}_{1}, \ldots ,{\tilde{Z}}_{m-1}\) and \({\tilde{L}}\). This requires solving an equation

$$ \sum _{k=1}^{\nu }c_{k}p_{k}({\tilde{Z}}_{1}, \ldots ,{\tilde{Z}}_{m-1},{\tilde{Z}}_{m})={\tilde{L}} \qquad (12) $$

for \({\tilde{Z}}_{m}\). The parameters \(\mathbf{{\mu }}=(\mu _{1},\mu _{2} , \ldots ,\mu _{m-1})\) are obtained from the crude simulation. Having solved (12), we attach weight to this IS point (\({\tilde{Z}}_{1}, \ldots ,{\tilde{Z}}_{m}\)) equal to

and

We choose the parameters \(\mu _{i},i=1, \ldots ,m-1\) for the above IS distributions using estimates of the quantity

based on the preliminary simulation, with the parameters \(c,d\) of the Gumbel obtained from (24).

We summarize our algorithm for the portfolio credit risk problem as follows:

1. Conduct a crude MC simulation and estimate the parameter \(\mathbf {\mu }\) in (14).

2. Estimate parameters \(c\) and \(d\) of the Gumbel distribution (24) where \(E(\tilde{L})\) is estimated by \(\mathrm{average}(L|L>t)\).

3. Repeat (a)–(d) for independent simulations \(j=1, \ldots ,n\), where \(n\) is the sample size of the simulation.

   (a) Generate \({\tilde{L}}\) from the Gumbel(\(c,d\)) distribution.

   (b) Generate \({\tilde{Z}_{i}},i=1, \ldots ,m-1\) from the univariate normal \(N(\mu _{i},1)\) distributions.

   (c) Solve \({\tilde{L}}({\tilde{Z}}_{1}, \ldots ,{\tilde{Z}}_{m-1} ,{\tilde{Z}}_{m})=t\) for \({\tilde{Z}}_{m}\) and calculate (13).

   (d) Simulate a loss \(L_{j}=\sum _{k=1}^{\nu }c_{k}Y_{k}\) where \(Y_{k}\sim \text {Bern}(p_{k}({\tilde{\mathbf {Z}}}))\) with \(p_{k}({\tilde{\mathbf {Z}}} )=\varPhi \Big (\frac{\mathbf {a}_{k}{\tilde{\mathbf {Z}}}+\varPhi ^{-1}(\rho _{k})}{\sqrt{1-\mathbf {a}_{k}\mathbf {a}_{k}^{T}}}\Big )\).

4. Estimate \(p_{t}\) using a weighted average

   $$ \frac{1}{n}\sum _{j=1}^{n}\omega _{j}I(L_{j}>t)\text {. } $$

5. Estimate the variance of this estimator using \(n^{-1}\) times the sample variance of the values \(\omega _{j}I(L_{j}>t),j=1, \ldots ,n\).

Simulation Results. The results in Table 3 were obtained by using crude Monte Carlo, importance sampling using the GEV distribution as the IS distribution, and the IS approach proposed in [4]. In the crude simulations, the sample size is 50,000, while in the latter two methods, the sample size is 10,000.

Notice that for a modest number of factors there is a very large reduction in variance over the crude (for example the ratio of relative error corresponding to 2 factors, \(t=2{,}500\) corresponds to an efficiency gain or variance ratio of nearly 2,400) and a significant improvement over the Glasserman and Li [4] simulation with a variance ratio of approximately 4. This improvement erodes as the number of factors increases, and in fact the method of Glasserman and Li has smaller variance in this case when \(m=10.\) In general, ratios of multivariate densities of large dimension tend to be quite “noisy”; although the weights have expected value \(1,\) they often have large variance. A subsequent paper will deal with the large dimensional case.

4 Conclusion

The family of extreme value distributions is ideally suited to rare event simulation. These distributions are very tractable and have tails which provide bounded relative error regardless of how rare the event is. Examples of simulating values of risk measures demonstrate a very substantial improvement over crude Monte Carlo and a smaller improvement over competitors such as the exponential tilt. This advantage is considerable for relatively low-dimensional problems, but there may be little or no advantage over an exponential tilt when the dimensionality of the problem increases.

Notes

1. For importance sampling estimates of rare events, at least, with apologies to the Beatles.

2. Here \(k_{2}\simeq 1.5936\) is the unique positive solution to the equation \(e^{-k}+\frac{k}{2}=1\).

3. Very popular prior to 2008!

4. \(k_{2}\simeq 1.5936\) and \(c\simeq 0.738\) are the unique positive solutions to the equations \(e^{k}=\frac{1}{1-\frac{k}{2}}=1+k^{2}(1+c^{2})\).

References

Asmussen, S., Glynn, P.W.: Stochastic Simulation: Algorithms and Analysis. Springer, New York (2007)

De Haan, L., Resnick, S.I.: Local limit theorems for sample extremes. Ann. Probab. 10, 396–413 (1982)

Glasserman, P.: Monte Carlo Methods in Financial Engineering. Springer, New York (2004)

Glasserman, P., Li, J.: Importance sampling for portfolio credit risk. Manag. Sci. 51(11), 1643–1656 (2005)

Gupton, G., Finger, C.C., Bhatia, M.: CreditMetrics, Technical Document of the Morgan Guaranty Trust Company http://www.defaultrisk.com/pp_model_20.htm (1997)

Hall, P.: On the rate of convergence of normal extremes. J. Appl. Probab. 16, 433–439 (1979)

Homem-de-Mello, T., Rubinstein, R.Y.: Rare event probability estimation using cross-entropy. In: Proceedings of the 2002 Winter Simulation Conference, pp. 310–319 (2002)

Kroese, D., Rubinstein, R.Y.: Simulation and the Monte Carlo Method, 2nd edn. Wiley, New York (2008)

Kroese, D., Taimre, T., Botev, Z.I.: Handbook of Monte Carlo Methods. Wiley, New York (2011)

Kotz, S., Nadarajah, S.: Extreme Value Distributions: Theory and Applications. Imperial College Press, London (2000)

McLeish, D.L.: Bounded relative error importance sampling and rare event simulation. ASTIN Bull. 40, 377–398 (2010)

McLeish, D.L.: Monte Carlo Simulation and Finance. Wiley, New York (2005)

McNeil, A.J., Frey, R., Embrechts, P.: Quantitative Risk Management. Princeton University Press, Princeton (2005)

Pickands, J.: Sample sequences of maxima. Ann. Math. Stat. 38, 1570–1574 (1967)

Ridder, A., Rubinstein, R.: Minimum cross entropy method for rare event simulation. Simulation 83, 769–784 (2007)

Appendices

Appendix A: Assumptions and Results

We suppose without loss of generality that the argument to the loss function is a multivariate normal MVN\((0,I_{m})\) random vector \(\mathbf {Z},\) since any (possibly dependent) random vector \(\mathbf {Y}\) can be generated from such a \(\mathbf {Z}.\) We begin by assuming that “large” values of \(L(\mathbf {Z})\) are determined by the distance of \(\mathbf {Z}=(Z_{1} ,Z_{2}, \ldots ,Z_{m})\) from the origin in a specific direction, i.e.,

Assumption 1

There exists a direction vector \(\mathbf {v}\in \mathfrak {R}^{m}\) such that, for all fixed vectors \(\mathbf {w}\in \mathfrak {R}^{m},\)

where \(Z_{0}\) is \(N(0,1).\)

We propose an importance sampling distribution generated as follows:

where \(Y\) has the extreme value distribution \(H_{0}(\frac{y-d}{c}).\) If we replace the distribution of \(Y\) by the standard normal, it is easy to see that (16) gives \(\mathbf{{Z}} \sim \mathrm{MVN}(\mathbf{{0}}, \mathbf{I}_{m})\) so the IS weight function in this case is simply the ratio of the two univariate distributions for \(Y.\)

Assumption 2

Suppose that for any fixed \(\mathbf {w}\in \mathfrak {R}^{m},\) there exists \(y_{0}\) such that \(L(y\mathbf {v}+\mathbf {w})\) is an increasing function of \(y\) for \(y>y_{0}.\)

Proposition 1

Under Assumptions 1 and 2, there is a sequence of importance sampling distributions of the form (16) which provides bounded relative error asymptotic to \(cn^{-1/2}\) as \(p_{t}\rightarrow 0,\) where \(c\simeq 0.738\).

In order to prove this result, we will use the following lemma, a special case of Corollary 1 of [11]:

Lemma 1

Suppose the random variable \(Y\) has a continuous distribution with cdf \(F_{Y}\). Suppose that \(T(y)\) is nondecreasing and that, for some real number \(a,\) we have \(a+T(y)\sim -\overline{F}_{Y}(y)\) as \(y\rightarrow y_{F}^{-}\) with \(y_{F}=\sup \{y;F_{Y}(y)<1\}\le \infty .\) Then the IS estimator for sample size \(n\) obtained from density (3) with \(\theta =\theta _{t}=\frac{k_{2}}{p_{t}} \) has bounded RE asymptotic to \(cn^{-1/2}\) as \(p_{t}\rightarrow 0,\) where \(c=\frac{1}{k_{2}}\sqrt{e^{k_{2}} -1-k_{2}^{2}}\simeq 0.738\) (see Note 4).
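The two constants in Lemma 1 can be reproduced numerically. The sketch below solves \(e^{-k}+\frac{k}{2}=1\) for its positive root by bisection and then evaluates \(c=\frac{1}{k_{2}}\sqrt{e^{k_{2}}-1-k_{2}^{2}}\); the function names and the bracketing interval \([1,2]\) are choices made here for illustration.

```python
import math


def solve_k2(lo=1.0, hi=2.0, tol=1e-12):
    """Bisection for the positive root of exp(-k) + k/2 = 1 on [lo, hi]."""

    def g(k):
        return math.exp(-k) + 0.5 * k - 1.0

    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(lo) * g(mid) <= 0.0:  # root lies in [lo, mid]
            hi = mid
        else:  # root lies in [mid, hi]
            lo = mid
    return 0.5 * (lo + hi)


def relative_error_constant(k2):
    """c = sqrt(exp(k2) - 1 - k2^2) / k2, the limiting RE constant."""
    return math.sqrt(math.exp(k2) - 1.0 - k2 * k2) / k2


if __name__ == "__main__":
    k2 = solve_k2()
    print(k2, relative_error_constant(k2), math.exp(-k2))
```

The last printed value is \(e^{-k_{2}}\), the quantile level at which the threshold \(t\) is placed when the parameters of the IS distribution are chosen.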

Proof of Proposition 1

The condition (15) allows us to solve an asymptotically equivalent univariate problem, i.e., to estimate \(P(L_{1}(Y)>t)\) where \(L_{1}(Y)=L(Y\mathbf {v}),\) \(Y\sim N(0,1).\) Clearly, the normal distribution for \(Y\) satisfies \(F_{Y}\in \mathrm{MDA}(H_{0}(x)),\) so that there exist sequences \(c_{n},d_{n}\) such that \(F_{Y}^{n}(d_{n}+c_{n}x)\rightarrow H_{0}(x)\) as \(n\rightarrow \infty \) for the GEV \(H_{0}.\) Lemma 1 shows that the importance sampling distribution

provides bounded relative error for the estimation of \(p_{t}\) as \(t\rightarrow \infty \) and \(p_{t}\rightarrow 0.\) Note that the probability density function of the maximum of \(n=\frac{k_{2}}{p_{t}}+1\) random variables drawn from the distribution \(F_{Y}(y)\) is given by

Furthermore, by the local limit or density version of convergence to the extreme value distributions (see Theorem 2(b) of [2], or [14]), with \(y=d_{n}+c_{n}x\) and \(x=(y-d_{n})/c_{n}\),

which implies, combining (17) and (18), that

Therefore, the extreme value distribution provides a bounded relative error importance sampling distribution, equivalent to (17).
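The convergence \(F_{Y}^{n}(d_{n}+c_{n}x)\rightarrow H_{0}(x)\) used in the proof can be checked numerically for the standard normal. The sketch below assumes one common choice of norming constants, \(d_{n}=\Phi ^{-1}(1-1/n)\) and \(c_{n}=1/(n\phi (d_{n}))\); the paper does not state which constants it uses, so these are an assumption.

```python
import math
from statistics import NormalDist


def max_cdf_vs_gumbel(n, xs):
    """Compare the cdf of the max of n N(0,1) draws with the Gumbel H_0.

    Returns rows (x, F^n(d_n + c_n x), H_0(x)).
    """
    nd = NormalDist()
    d_n = nd.inv_cdf(1.0 - 1.0 / n)  # assumed location constant
    c_n = 1.0 / (n * nd.pdf(d_n))  # assumed scale constant
    rows = []
    for x in xs:
        y = d_n + c_n * x
        tail = nd.cdf(-y)  # 1 - Phi(y), computed by symmetry
        exact = math.exp(n * math.log1p(-tail))  # Phi(y)^n
        gumbel = math.exp(-math.exp(-x))  # H_0(x)
        rows.append((x, exact, gumbel))
    return rows


if __name__ == "__main__":
    for row in max_cdf_vs_gumbel(10**6, [-1.0, 0.0, 1.0, 3.0]):
        print(row)
```

Convergence for normal extremes is known to be slow (see [6]), so the two columns agree only approximately even for large \(n\).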

Appendix B: Maximum Domain of Attraction and Properties of the Generalized Extreme Value Distributions

Maximum domain of attraction

If there are sequences of real constants \(c_{n}\) and \(d_{n},\) \(n=1,2, \ldots \) where \(c_{n}>0\) for all \(n,\) such that

for some nondegenerate cdf \(H(x),\) then we say that \(F\) is in the maximum domain of attraction (MDA) of the cdf \(H\) and write \(F\in \text {MDA}(H).\) The Fisher–Tippett theorem (see Theorem 7.3 of [13]) characterizes the possible limiting distributions \(H\) as members of the generalized extreme value (GEV) family. A distribution is a member of this family if its cdf has the form \(H_{\xi }(\frac{x-d}{c})\) where \(c>0\) and

Theorem 1

(Fisher–Tippett, Gnedenko) If \(F\in \mathrm{MDA}(H)\) for some nondegenerate cdf \(H,\) then \(H\) must take the form (21).

The properties of the GEV distributions listed in Table 4 are obtained from routine calculations and properties in [13] or [10].

Choosing the parameters \(c\) and \(d\)

The GEV has cdf \(H_{\xi }(\frac{y-d}{c})\) and probability density function \(c^{-1}h_{\xi }(\frac{y-d}{c}).\) Other properties can be easily found in the above table. We wish to choose an extreme value distribution with parameters corresponding to the maximum of a sample of \(\theta _{t}={k_{2}}/{p_{t}}\) random variables from the original density \(f(y).\) In other words, we wish to find values of \(d_{\theta _{t}}\) and \(c_{\theta _{t}}\) so that

and this leads to matching \(t\) with the quantile corresponding to \(e^{-k_{2} }\simeq 0.203.\) In other words, one parameter is determined by the equation

Another parameter can be determined using the crude simulation and the values of \(Y\) for which \(L(Y)>t.\) We can match another quantile or summary, for example the median, the mode, or the sample mean, which estimates \(E[Y \mid L(Y)>t].\) In the case of standard normally distributed inputs and the Gumbel distribution, matching the conditional expected value \(E(L \mid L>t)\) and (23) results approximately in:

Here \(E(\tilde{L})=\mathrm{average}(L \mid L>t)\) is based on a preliminary crude simulation of values of \(L\) under the original distribution. Of course, one could also use maximum likelihood estimation to determine appropriate parameters for the IS distribution (see [10]), but the specific choice of estimator seemed to have little impact on the quality of the importance sampling, provided that the estimated GEV density was sufficiently dispersed.
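One way to read the quantile-matching idea above, for the Gumbel case \(\xi =0\), is to solve two quantile equations for \(c\) and \(d\): place \(t\) at the \(e^{-k_{2}}\) quantile and place a second value \(y_{q}\) (for instance a median taken from a pilot run) at level \(q\). This is a sketch of that reading only; it is not the moment-matching formula referred to in the text, and the function names and sample inputs are hypothetical.

```python
import math

K2 = 1.5936  # constant k_2 quoted in the Notes


def gumbel_cdf(y, d, c):
    """Gumbel cdf H_0((y - d) / c) = exp(-exp(-(y - d) / c))."""
    return math.exp(-math.exp(-(y - d) / c))


def gumbel_from_quantiles(t, y_q, q, k2=K2):
    """Return (d, c) with H_0((t-d)/c) = exp(-k2) and H_0((y_q-d)/c) = q.

    Requires y_q > t and exp(-k2) < q < 1 so that the scale c is positive.
    """
    if not (y_q > t and math.exp(-k2) < q < 1.0):
        raise ValueError("need y_q > t and exp(-k2) < q < 1")
    # (t - d)/c = -ln(k2) and (y_q - d)/c = -ln(-ln q); subtract to get c
    c = (y_q - t) / (math.log(k2) - math.log(-math.log(q)))
    d = t + c * math.log(k2)
    return d, c


if __name__ == "__main__":
    d, c = gumbel_from_quantiles(t=4.0, y_q=4.3, q=0.5)
    print(d, c, gumbel_cdf(4.0, d, c), gumbel_cdf(4.3, d, c))
```

The first equation alone gives \(d=t+c\ln k_{2}\), so any other rule for choosing \(c\) can be combined with it in the same way.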

As an alternative to simulating \({\tilde{L}}\) from the GEV, we may simulate instead from \({\tilde{L} \mid \tilde{L}>t,}\) resulting in the generalized Pareto distribution. For a given cdf \(F,\) the conditional excess distribution is

Then the conditional excess distribution can be approximated by the so-called generalized Pareto distribution for large values of \(u\) (see [13], Theorem 7.20):

Theorem 2

(Pickands, Balkema, de Haan) \(F\in \mathrm{MDA}(H_{\xi })\) for some \(\xi \) if and only if

for some positive measurable function \(\beta (u),\) where \(G_{\xi ,\beta }\) is the cdf of the generalized Pareto (GP) distribution:
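For illustration, the sketch below codes the GP cdf in its standard parametrization, \(G_{\xi ,\beta }(x)=1-(1+\xi x/\beta )^{-1/\xi }\) for \(\xi \ne 0\) and \(1-e^{-x/\beta }\) for \(\xi =0\), together with its inverse for inverse-transform sampling. It also compares the exact conditional excess cdf of a standard normal with the \(\xi =0\) case, using the rough scale \(\beta (u)=1/u\), which is an assumption made here and not a value taken from the paper.

```python
import math
from statistics import NormalDist


def gpd_cdf(x, xi, beta):
    """Generalized Pareto cdf in the standard parametrization."""
    if x <= 0.0:
        return 0.0
    if xi == 0.0:
        return 1.0 - math.exp(-x / beta)
    base = 1.0 + xi * x / beta
    if base <= 0.0:  # past the upper endpoint -beta/xi when xi < 0
        return 1.0
    return 1.0 - base ** (-1.0 / xi)


def gpd_quantile(u, xi, beta):
    """Inverse of gpd_cdf for u in (0, 1)."""
    if xi == 0.0:
        return -beta * math.log1p(-u)
    return beta * ((1.0 - u) ** (-xi) - 1.0) / xi


def normal_excess_cdf(u, x):
    """Exact P(Y - u <= x | Y > u) for Y ~ N(0,1), with x >= 0."""
    nd = NormalDist()
    return 1.0 - nd.cdf(-(u + x)) / nd.cdf(-u)


if __name__ == "__main__":
    u = 4.0
    for x in (0.1, 0.25, 0.5):
        print(x, normal_excess_cdf(u, x), gpd_cdf(x, 0.0, 1.0 / u))
```

Passing uniform draws on \((0,1)\) through `gpd_quantile` gives GP variates, which is one way to simulate directly from the conditional tail.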

Rights and permissions

Open Access This chapter is distributed under the terms of the Creative Commons Attribution Noncommercial License, which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Copyright information

© 2015 The Author(s)

About this paper

Cite this paper

McLeish, D.L., Men, Z. (2015). Extreme Value Importance Sampling for Rare Event Risk Measurement. In: Glau, K., Scherer, M., Zagst, R. (eds) Innovations in Quantitative Risk Management. Springer Proceedings in Mathematics & Statistics, vol 99. Springer, Cham. https://doi.org/10.1007/978-3-319-09114-3_18

DOI: https://doi.org/10.1007/978-3-319-09114-3_18

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-09113-6

Online ISBN: 978-3-319-09114-3

eBook Packages: Mathematics and Statistics (R0)