Abstract

The sigma-matrix four-vector is defined as \((\bar {\sigma }^{\mu })^{\dot {\alpha } \alpha } := ({\mathbb{1} },-\boldsymbol {\sigma })^{\top }\), where \(\boldsymbol {\sigma } = (\hat \sigma _1,\hat \sigma _2, \hat \sigma _3)\) is the list of the Pauli matrices \(\hat \sigma _i\).


The sigma-matrix four-vector is defined as \((\bar {\sigma }^{\mu })^{\dot {\alpha } \alpha } := ({\mathbb{1} },-\boldsymbol {\sigma })^{\top }\), where \(\boldsymbol {\sigma } = (\hat \sigma _1,\hat \sigma _2, \hat \sigma _3)\) is the list of the Pauli matrices \(\hat \sigma _i\). We rewrite \((\sigma ^{\mu })_{\alpha \dot \alpha } = - \epsilon _{\dot \alpha \dot \beta } (\bar {\sigma }^{\mu })^{\dot \beta \beta } \epsilon _{\beta \alpha }\) in matrix notation, as

Substituting the explicit expressions for \(\bar {\sigma }^{\mu }\) gives \(\sigma ^0 = {\mathbb{1} }\) and \(\sigma ^i = \hat \sigma _i\), hence \(\sigma ^{\mu } = ({\mathbb{1} }, \boldsymbol {\sigma })^{\top }\). Multiplying the latter by the metric tensor proves the second identity,

To prove the third identity, \(\mathrm {Tr}\left (\sigma ^{\mu } \bar {\sigma }^{\nu }\right ) = 2 \eta ^{\mu \nu }\), we consider it for fixed values of \(\mu \) and \(\nu \). The facts that the Pauli matrices have vanishing trace and obey the anti-commutation relation \(\{\hat \sigma _i,\hat \sigma _j \} = 2 \delta _{ij}\) imply that

for \(i,j=1,2,3\). Putting these together gives the third identity.
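The first and third identities can be cross-checked numerically. The following is a minimal sketch, assuming the metric signature \((+,-,-,-)\) (which is what the trace identity implies) and the convention \(\epsilon = {\mathrm{i}} \hat\sigma_2\) for both Levi-Civita symbols; the variable names are ours, and the overall sign of \(\epsilon\) drops out because it enters twice.

```python
import numpy as np

# Pauli matrices and the 2x2 identity.
s1 = np.array([[0, 1], [1, 0]], dtype=complex)
s2 = np.array([[0, -1j], [1j, 0]], dtype=complex)
s3 = np.array([[1, 0], [0, -1]], dtype=complex)
one = np.eye(2, dtype=complex)

sigma_bar = [one, -s1, -s2, -s3]         # sigma_bar^mu = (1, -sigma)
sigma = [one, s1, s2, s3]                # claimed result: sigma^mu = (1, sigma)
eta = np.diag([1.0, -1.0, -1.0, -1.0])   # assumed signature (+,-,-,-)

# Assumed convention for both Levi-Civita symbols (epsilon = i sigma_2).
eps = np.array([[0, 1], [-1, 0]], dtype=complex)

# Matrix form of the index relation: sigma^mu = -eps (sigma_bar^mu)^T eps.
sigma_from_bar = [-eps @ sb.T @ eps for sb in sigma_bar]
first_identity_ok = all(np.allclose(a, b) for a, b in zip(sigma_from_bar, sigma))

# Third identity: Tr(sigma^mu sigma_bar^nu) = 2 eta^{mu nu}.
traces = np.array([[np.trace(sigma[m] @ sigma_bar[n]) for n in range(4)]
                   for m in range(4)])
third_identity_ok = np.allclose(traces, 2 * eta)

print(first_identity_ok, third_identity_ok)
```

The transpose appears because the index relation contracts \(\bar\sigma^{\mu}\) with the dotted index first, whereas \(\sigma^{\mu}\) carries the undotted index first.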

The Pauli matrices and the identity matrix form a basis of all \(2\times 2\) matrices. Any \(2\times 2\) matrix \(M_{\alpha \dot \alpha }\) can thus be expressed as

Contracting both sides with \((\bar \sigma ^{\nu })^{\dot \alpha \beta }\) and taking the trace with the help of the third identity, we can express the coefficients of the expansion in terms of M as \(m^{\mu } = \mathrm {Tr}\left (M \bar {\sigma }^{\mu }\right )/2\). Substituting this into the expansion of \(M_{\alpha \dot \alpha }\) gives

Since this holds for any matrix M, it follows that

Contracting both sides with suitable Levi-Civita symbols gives the fourth identity.
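As a hedged numerical illustration of this argument, the sketch below expands a random complex \(2\times 2\) matrix in the basis \(\sigma^{\mu}\) using \(m^{\mu} = \mathrm{Tr}(M \bar\sigma^{\mu})/2\), and checks the resulting completeness relation in the form \((\sigma_{\mu})_{\alpha\dot\alpha} (\bar\sigma^{\mu})^{\dot\beta\beta} = 2\, \delta_{\alpha}^{\ \beta} \delta_{\dot\alpha}^{\ \dot\beta}\). This explicit form is our own rewriting of the step "since this holds for any matrix M"; the signature \((+,-,-,-)\) and all variable names are assumptions.

```python
import numpy as np

one = np.eye(2, dtype=complex)
pauli = [np.array([[0, 1], [1, 0]], dtype=complex),
         np.array([[0, -1j], [1j, 0]], dtype=complex),
         np.array([[1, 0], [0, -1]], dtype=complex)]
sigma = np.array([one] + pauli)                    # indices [mu, alpha, alpha_dot]
sigma_bar = np.array([one] + [-s for s in pauli])  # indices [mu, alpha_dot, alpha]
eta_diag = np.array([1.0, -1.0, -1.0, -1.0])       # assumed signature (+,-,-,-)

# A generic complex 2x2 matrix M_{alpha alpha_dot}.
rng = np.random.default_rng(seed=1)
M = rng.normal(size=(2, 2)) + 1j * rng.normal(size=(2, 2))

# Coefficients m^mu = Tr(M sigma_bar^mu) / 2, then lower the index.
m_upper = np.array([np.trace(M @ sb) / 2 for sb in sigma_bar])
m_lower = eta_diag * m_upper

# Rebuild M = m_mu sigma^mu.
M_rebuilt = np.einsum('m,mab->ab', m_lower, sigma)

# Completeness: sum_mu eta_{mu mu} sigma^mu[a, b] sigma_bar^mu[c, d]
# should equal 2 delta_{a d} delta_{b c}.
completeness = np.einsum('m,mab,mcd->abcd', eta_diag, sigma, sigma_bar)
expected = 2 * np.einsum('ad,bc->abcd', one, one)

print(np.allclose(M, M_rebuilt), np.allclose(completeness, expected))
```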

Exercise 1.2: Massless Dirac Equation and Weyl Spinors

(a)

Any Dirac spinor \(\xi \) can be decomposed as \(\xi = \xi _+ + \xi _-\), where \(\xi _{\pm }\) satisfy the helicity relations

$$\displaystyle \begin{aligned} P_{\pm} \xi_{\pm} = \xi_{\pm} \,, \qquad \quad P_{\pm} \xi_{\mp} = 0 \,, \end{aligned} $$(5.7) with \(P_{\pm } = ({\mathbb{1} } \pm \gamma ^{5})/2\). Using the Dirac representation of the \(\gamma \) matrices in Eq. (1.24) we have that

$$\displaystyle \begin{aligned} P_{\pm} = \frac{1}{2} \begin{pmatrix} {\mathbb{1}}_2 & \pm {\mathbb{1}}_2 \\ \pm {\mathbb{1}}_2 & {\mathbb{1}}_2 \end{pmatrix} \,. \end{aligned} $$(5.8) The helicity relations then constrain the form of \(\xi _{\pm }\) to have only two independent components:

$$\displaystyle \begin{aligned} \xi_{+} = \left(\xi^0, \xi^1, \xi^0, \xi^1 \right)^{\top} \,, \qquad \xi_{-} = \left(\xi^0, \xi^1, -\xi^0, -\xi^1 \right)^{\top} \,. \end{aligned} $$(5.9) Indeed, \(u_+\) and \(v_-\) (\(u_-\) and \(v_+\)) have the form of \(\xi _+\) (\(\xi _-\)). We now focus on \(\xi _+\). We change variables from \(k^{\mu }\) to \(k^{\pm }\) and \(\mathrm {e}^{{\mathrm {i}} \phi }\), which have the benefit of implementing \(k^2=0\). Then we have that

$$\displaystyle \begin{aligned} \gamma^{\mu} k_{\mu} = \begin{pmatrix} \frac{k^++k^-}{2} & 0 & \frac{k^--k^+}{2} & - \mathrm{e}^{-{\mathrm{i}} \phi} \sqrt{k^+ k^-} \\ 0 & \frac{k^++k^-}{2} & - \mathrm{e}^{{\mathrm{i}} \phi} \sqrt{k^+ k^-} & \frac{k^+-k^-}{2} \\ \frac{k^+-k^-}{2} & \mathrm{e}^{-{\mathrm{i}} \phi} \sqrt{k^+ k^-} & - \frac{k^++k^-}{2} & 0 \\ \mathrm{e}^{{\mathrm{i}} \phi} \sqrt{k^+ k^-} & \frac{k^--k^+}{2} & 0 & -\frac{k^++k^-}{2} \\ \end{pmatrix} \,. \end{aligned} $$(5.10) Plugging the generic form of \(\xi _{+}\) into the Dirac equation \(\gamma ^{\mu } k_{\mu } \xi _+ = 0\) gives one independent equation, which fixes \(\xi ^1\) in terms of \(\xi ^0\),

$$\displaystyle \begin{aligned} \xi^1 = \mathrm{e}^{{\mathrm{i}} \phi} \sqrt{\frac{k^-}{k^+}} \, \xi^0 \,. \end{aligned} $$(5.11)Since the equation is homogeneous, the overall normalisation is arbitrary. Choosing \(\xi ^0 = \sqrt {k^+}/\sqrt {2}\) gives the expressions for \(\xi _+=u_+=v_-\) given above.


(b)

For any Dirac spinor \(\xi \) we have

$$\displaystyle \begin{aligned} \bar{\xi} P_{\pm} = \xi^{\dagger} \gamma^0 P_{\pm} = \xi^{\dagger} P_{\mp} \gamma^0 = \left(\gamma^0 P_{\mp} \xi \right)^{\dagger} \,, \end{aligned} $$(5.12) where we used that \((\gamma ^5)^{\dagger } = \gamma ^5\), \((\gamma ^0)^{\dagger } = \gamma ^0\) and \(\{\gamma ^5,\gamma ^0\} = 0\). From this it follows that

$$\displaystyle \begin{aligned} \bar{u}_{\pm} P_{\pm} = 0 \,, \qquad \bar{u}_{\pm} P_{\mp} = \bar{u}_{\pm} \,, \qquad \bar{v}_{\pm} P_{\pm} = \bar{v}_{\pm} \,, \qquad \bar{v}_{\pm} P_{\mp} = 0 \,. \end{aligned} $$(5.13)
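Parts (a) and (b) can be checked numerically for a sample light-like momentum. The sketch below is only illustrative: it assumes the Dirac representation that reproduces Eq. (5.10), namely \(\gamma^0 = \mathrm{diag}({\mathbb{1}}_2, -{\mathbb{1}}_2)\), off-diagonal \(\gamma^i\) with blocks \(\pm\hat\sigma_i\), and off-diagonal \(\gamma^5\), together with \(k^{\pm} = k^0 \pm k^3\) and \(\mathrm{e}^{\pm{\mathrm{i}}\phi}\sqrt{k^+k^-} = k^1 \pm {\mathrm{i}} k^2\). The sample momentum and the variable names are ours.

```python
import numpy as np

I2, Z2 = np.eye(2, dtype=complex), np.zeros((2, 2), dtype=complex)
pauli = [np.array([[0, 1], [1, 0]], dtype=complex),
         np.array([[0, -1j], [1j, 0]], dtype=complex),
         np.array([[1, 0], [0, -1]], dtype=complex)]

# Dirac representation (assumed to match Eq. (1.24) of the text).
g0 = np.block([[I2, Z2], [Z2, -I2]])
gi = [np.block([[Z2, s], [-s, Z2]]) for s in pauli]
g5 = np.block([[Z2, I2], [I2, Z2]])
P_plus = (np.eye(4) + g5) / 2
P_minus = (np.eye(4) - g5) / 2

# Sample light-like momentum and light-cone variables.
kvec = np.array([0.3, -1.1, 0.7])
k0 = np.linalg.norm(kvec)
k_plus, k_minus = k0 + kvec[2], k0 - kvec[2]
phase = (kvec[0] + 1j * kvec[1]) / np.sqrt(k_plus * k_minus)  # e^{i phi}

# gamma^mu k_mu with signature (+,-,-,-).
kslash = k0 * g0 - sum(kvec[i] * gi[i] for i in range(3))

# Solution (5.11) with the normalisation xi^0 = sqrt(k^+)/sqrt(2).
xi0 = np.sqrt(k_plus) / np.sqrt(2)
xi1 = phase * np.sqrt(k_minus / k_plus) * xi0
u_plus = np.array([xi0, xi1, xi0, xi1])
u_bar = u_plus.conj() @ g0

dirac_ok = np.allclose(kslash @ u_plus, 0)
helicity_ok = np.allclose(P_plus @ u_plus, u_plus) and np.allclose(P_minus @ u_plus, 0)
conjugate_ok = np.allclose(u_bar @ P_plus, 0) and np.allclose(u_bar @ P_minus, u_bar)
print(dirac_ok, helicity_ok, conjugate_ok)
```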

(c)

Through matrix multiplication we obtain the explicit expression of U,

$$\displaystyle \begin{aligned} U = \frac{1}{\sqrt{2}} \begin{pmatrix} {\mathbb{1}}_{2} & -{\mathbb{1}}_{2} \\ {\mathbb{1}}_{2} & {\mathbb{1}}_{2} \\ \end{pmatrix} \,, \end{aligned} $$(5.14)which is indeed a unitary matrix. The Dirac matrices in the chiral basis then are

$$\displaystyle \begin{aligned} \gamma^{0}_{\text{ch}}=U \gamma^{0} U^{\dagger} = \left ( \begin{array}{ll}0 & {{\mathbb{1}}}_{2\times 2}\\ {{\mathbb{1}}}_{2\times 2} & 0\end{array}\right )\,, \qquad \gamma^{i}_{\text{ch}}= U \gamma^{i} U^{\dagger} = \left ( \begin{array}{ll}0 & \boldsymbol{\sigma}^{i}\\ -\boldsymbol{\sigma}^{i} & 0\end{array}\right )\, , \end{aligned} $$(5.15)with \(i=1,2,3\). Putting these together gives

$$\displaystyle \begin{aligned} {} \gamma^{\mu}_{\text{ch}} = \begin{pmatrix} 0 & \sigma^{\mu} \\ \overline{\sigma}^{\mu} & 0 \\ \end{pmatrix} \,. \end{aligned} $$(5.16)Similarly, we obtain the expression of \(\gamma ^5\), which in this basis is diagonal,

$$\displaystyle \begin{aligned} {} \gamma^5_{\text{ch}}=U \gamma^{5} U^{\dagger} = \begin{pmatrix}- {\mathbb{1}}_{2} & 0 \\ 0 & {\mathbb{1}}_{2} \end{pmatrix} \,. \end{aligned} $$(5.17)Finally, the solutions to the Dirac equation in the chiral basis are given by

$$\displaystyle \begin{aligned} U u_+ = \left( 0, 0, \sqrt{k^+}, \mathrm{e}^{{\mathrm{i}} \phi} \sqrt{k^-} \right)^{\top} \,, \qquad U u_- = \left( \sqrt{k^-} \mathrm{e}^{-{\mathrm{i}} \phi}, -\sqrt{k^+}, 0, 0 \right)^{\top}\,, \end{aligned} $$(5.18)and similarly for \(v_{\pm }\).
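The change of basis can be verified by direct matrix multiplication. The sketch below assumes the same Dirac-representation matrices as inferred from Eqs. (5.8) and (5.10), and checks unitarity of U together with Eqs. (5.15) to (5.17); the variable names are ours.

```python
import numpy as np

I2, Z2 = np.eye(2, dtype=complex), np.zeros((2, 2), dtype=complex)
pauli = [np.array([[0, 1], [1, 0]], dtype=complex),
         np.array([[0, -1j], [1j, 0]], dtype=complex),
         np.array([[1, 0], [0, -1]], dtype=complex)]
sigma = [I2] + pauli                  # sigma^mu = (1, sigma)
sigma_bar = [I2] + [-s for s in pauli]  # sigma_bar^mu = (1, -sigma)

# Dirac representation (assumed form of Eq. (1.24)).
gamma_dirac = [np.block([[I2, Z2], [Z2, -I2]])] + \
              [np.block([[Z2, s], [-s, Z2]]) for s in pauli]
g5_dirac = np.block([[Z2, I2], [I2, Z2]])

# Change-of-basis matrix of Eq. (5.14).
U = np.block([[I2, -I2], [I2, I2]]) / np.sqrt(2)
unitary_ok = np.allclose(U @ U.conj().T, np.eye(4))

# Chiral-basis matrices, Eq. (5.16), and gamma^5, Eq. (5.17).
gamma_chiral = [U @ g @ U.conj().T for g in gamma_dirac]
expected = [np.block([[Z2, sigma[m]], [sigma_bar[m], Z2]]) for m in range(4)]
gamma_ok = all(np.allclose(a, b) for a, b in zip(gamma_chiral, expected))

g5_chiral = U @ g5_dirac @ U.conj().T
g5_ok = np.allclose(g5_chiral, np.diag([-1, -1, 1, 1]))

print(unitary_ok, gamma_ok, g5_ok)
```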


(d)

The product of four Dirac matrices in the chiral representation (5.16) is given by

$$\displaystyle \begin{aligned} \gamma^{\mu} \gamma^{\nu} \gamma^{\rho} \gamma^{\tau} = \begin{pmatrix} \sigma^{\mu} \bar{\sigma}^{\nu} \sigma^{\rho} \bar{\sigma}^{\tau} & 0 \\ 0 & \bar{\sigma}^{\mu} \sigma^{\nu} \bar{\sigma}^{\rho} \sigma^{\tau} \\ \end{pmatrix} \,. \end{aligned} $$(5.19)Multiplying to the right by

$$\displaystyle \begin{aligned} \frac{1}{2} \left( {\mathbb{1}} - \gamma_5 \right) = \begin{pmatrix} {\mathbb{1}} & 0 \\ 0 & 0 \\ \end{pmatrix} \end{aligned} $$(5.20)selects the top left entry,

$$\displaystyle \begin{aligned} \frac{1}{2} \gamma^{\mu} \gamma^{\nu} \gamma^{\rho} \gamma^{\tau} \left( {\mathbb{1}} - \gamma_5 \right) = \begin{pmatrix} \sigma^{\mu} \bar{\sigma}^{\nu} \sigma^{\rho} \bar{\sigma}^{\tau} & 0 \\ 0 & 0 \\ \end{pmatrix} \,. \end{aligned} $$(5.21) Taking the trace of both sides of this equation finally gives Eq. (1.29). Note that this result does not depend on the representation of the Dirac matrices, as the unitary matrices relating different representations drop out of the trace. Using \({\mathbb{1} }+\gamma _5\) instead gives a relation for \({\mathrm {Tr}}\left (\bar {\sigma }^{\mu } \sigma ^{\nu } \bar {\sigma }^{\rho } \sigma ^{\tau }\right )\).
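The trace relation and its independence of the representation can be tested by brute force over all \(4^4\) index assignments. This is a sketch under the assumption that Eq. (1.29) reads \(\mathrm{Tr}(\sigma^{\mu}\bar\sigma^{\nu}\sigma^{\rho}\bar\sigma^{\tau}) = \tfrac{1}{2}\mathrm{Tr}[\gamma^{\mu}\gamma^{\nu}\gamma^{\rho}\gamma^{\tau}({\mathbb{1}}-\gamma_5)]\), as implied by Eq. (5.21); the Dirac-representation matrices are the ones inferred from Eqs. (5.8) and (5.10).

```python
import itertools
import numpy as np

I2, Z2 = np.eye(2, dtype=complex), np.zeros((2, 2), dtype=complex)
I4 = np.eye(4, dtype=complex)
pauli = [np.array([[0, 1], [1, 0]], dtype=complex),
         np.array([[0, -1j], [1j, 0]], dtype=complex),
         np.array([[1, 0], [0, -1]], dtype=complex)]
sigma = [I2] + pauli
sigma_bar = [I2] + [-s for s in pauli]

# Chiral representation, Eqs. (5.16) and (5.17).
gamma_ch = [np.block([[Z2, sigma[m]], [sigma_bar[m], Z2]]) for m in range(4)]
g5_ch = np.diag([-1, -1, 1, 1]).astype(complex)

# Dirac representation (assumed form of Eq. (1.24)).
gamma_d = [np.block([[I2, Z2], [Z2, -I2]])] + \
          [np.block([[Z2, s], [-s, Z2]]) for s in pauli]
g5_d = np.block([[Z2, I2], [I2, Z2]])

def half_projected_trace(gammas, g5, m, n, r, t):
    """(1/2) Tr[gamma^m gamma^n gamma^r gamma^t (1 - gamma_5)]."""
    return 0.5 * np.trace(gammas[m] @ gammas[n] @ gammas[r] @ gammas[t] @ (I4 - g5))

max_deviation = 0.0
for m, n, r, t in itertools.product(range(4), repeat=4):
    lhs = np.trace(sigma[m] @ sigma_bar[n] @ sigma[r] @ sigma_bar[t])
    for gammas, g5 in ((gamma_ch, g5_ch), (gamma_d, g5_d)):
        rhs = half_projected_trace(gammas, g5, m, n, r, t)
        max_deviation = max(max_deviation, abs(lhs - rhs))

print(max_deviation < 1e-12)
```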


(a)

The Jacobi identity for the generators (1.49) can be proven directly by expanding all commutators. We recall that the commutator is bilinear. The first term on the left-hand side gives

$$\displaystyle \begin{aligned} \left[T^{a}, [ T^{b}, T^{c}]\right] = T^a T^b T^c - T^a T^c T^b - T^b T^c T^a + T^c T^b T^a \,. \end{aligned} $$(5.22)Summing both sides of this equation over the cyclic permutations of the indices (\(\{a,b,c\}\), \(\{b,c,a\}\), \(\{c,a,b\}\)) gives Eq. (1.49).


(b)

We substitute the commutation relations (1.46) into the Jacobi identity for the generators (1.49). The first term gives

$$\displaystyle \begin{aligned} \left[T^{a}, [ T^{b}, T^{c}]\right] = - 2 f^{bce} f^{aeg} T^{g} \,. \end{aligned} $$(5.23)By summing over the cyclic permutations of the indices and removing the overall constant factor we obtain

$$\displaystyle \begin{aligned} {} \left(f^{abe} f^{ceg} + f^{bce} f^{aeg} + f^{cae} f^{beg} \right) T^{g} = 0 \,. \end{aligned} $$(5.24)We recall that repeated indices are summed over. Since the generators \(T^{g}\) are linearly independent, their coefficients in Eq. (5.24) have to vanish separately. This gives the Jacobi identity (1.50).


(c)

Any \(N_c \times N_c\) complex matrix M can be decomposed into the identity \({\mathbb{1} }_{N_c}\) and the \(su(N_c)\) generators \(T^a\) (with \(a=1,\ldots , N_c^2-1\)),

$$\displaystyle \begin{aligned} M = m_0 \, {\mathbb{1}}_{N_c} + m_a \, T^a \,. \end{aligned} $$(5.25) As usual, the repeated indices are summed over. The coefficients of the expansion can be obtained by multiplying both sides by \({\mathbb{1} }_{N_c}\) and \(T^a\), and taking the trace. Using the tracelessness of \(T^a\) and \({\mathrm {Tr}}(T^a T^b) = \delta ^{ab}\) we obtain that \(m_0 = {\mathrm {Tr}}(M)/N_c\) and \(m_a = {\mathrm {Tr}} (M T^a)\). We then rewrite the expansion as

$$\displaystyle \begin{aligned} \left[ \left(T^a\right)_{i_1}^{\ j_1} \left(T^a\right)_{i_2}^{\ j_2} - \delta_{i_1}^{\ j_2} \delta_{i_2}^{\ j_1} + \frac{1}{N_c} \delta_{i_1}^{\ j_1} \delta_{i_2}^{\ j_2} \right] M_{j_2}^{\ i_2} = 0\,. \end{aligned} $$(5.26)Since this equation holds for any complex matrix M, it follows that the coefficient of \(M_{j_2}^{\ i_2}\) vanishes. This yields the desired relation.
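The Jacobi identity (5.24) and the completeness relation can both be checked numerically for a concrete choice of generators. The sketch below builds one possible Hermitian, traceless basis with \({\mathrm{Tr}}(T^a T^b) = \delta^{ab}\) (a generalised Gell-Mann basis; the function `su_n_generators` is a hypothetical helper, not something defined in the text) and extracts the structure constants from \([T^a, T^b] = {\mathrm{i}}\sqrt{2} f^{abc} T^c\).

```python
import numpy as np

def su_n_generators(n):
    """Hermitian, traceless n x n matrices with Tr(T^a T^b) = delta^{ab}."""
    gens = []
    for i in range(n):
        for j in range(i + 1, n):
            sym = np.zeros((n, n), dtype=complex)
            sym[i, j] = sym[j, i] = 1 / np.sqrt(2)
            asym = np.zeros((n, n), dtype=complex)
            asym[i, j], asym[j, i] = -1j / np.sqrt(2), 1j / np.sqrt(2)
            gens += [sym, asym]
    for k in range(1, n):
        diag = np.zeros((n, n), dtype=complex)
        diag[np.arange(k), np.arange(k)] = 1
        diag[k, k] = -k
        gens.append(diag / np.sqrt(k * (k + 1)))
    return np.array(gens)

n_c = 3
T = su_n_generators(n_c)          # T[a, i, j] = (T^a)_i^j
delta = np.eye(n_c)

# Normalisation Tr(T^a T^b) = delta^{ab}.
norm_ok = np.allclose(np.einsum('aij,bji->ab', T, T), np.eye(n_c**2 - 1))

# Completeness: (T^a)_i^j (T^a)_k^l = delta_i^l delta_k^j - delta_i^j delta_k^l / N_c.
lhs = np.einsum('aij,akl->ijkl', T, T)
rhs = (np.einsum('il,kj->ijkl', delta, delta)
       - np.einsum('ij,kl->ijkl', delta, delta) / n_c)
completeness_ok = np.allclose(lhs, rhs)

# Structure constants from f^{abc} = -i/sqrt(2) Tr([T^a, T^b] T^c).
comm = np.einsum('aij,bjk->abik', T, T) - np.einsum('bij,ajk->abik', T, T)
f = (-1j / np.sqrt(2)) * np.einsum('abik,cki->abc', comm, T)

# Jacobi identity (5.24): sum over cyclic permutations of (a, b, c).
jacobi = (np.einsum('abe,ceg->abcg', f, f)
          + np.einsum('bce,aeg->abcg', f, f)
          + np.einsum('cae,beg->abcg', f, f))
jacobi_ok = np.allclose(jacobi, 0)

print(norm_ok, completeness_ok, jacobi_ok)
```

Any other orthonormal basis of traceless Hermitian matrices would serve equally well, since both relations are basis independent.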


(a)

The commutator of \(T^a T^a\) with the generators \(T^b\) is given by

$$\displaystyle \begin{aligned} \begin{aligned} \left[ T^a T^a, T^b \right] & = T^a \left[ T^a, T^b \right] + \left[ T^a, T^b \right] T^a \\ & = {\mathrm{i}} \sqrt{2} f^{abc} \left( T^{a} T^{c} + T^{c} T^{a} \right) \,, \end{aligned} \end{aligned} $$(5.27)which vanishes because of the anti-symmetry of \(f^{abc}\). In the first line we used that \([A B,C] = A [B,C] + [A,C] B\), which can be proven by expanding all commutators, and in the second line we applied the commutation relations (1.46).


(b)

The Casimir invariant of the fundamental representation follows directly from the completeness relation (1.51),

$$\displaystyle \begin{aligned} \begin{aligned} \left(T^a_F \right)_{i}^{\ k} \left(T^a_F \right)_{k}^{\ j} & = \delta_{i}^{\ j} \delta_{k}^{\ k} - \frac{1}{N_c} \delta_{i}^{\ k} \delta_{k}^{\ j} \\ & = \frac{N_c^2-1}{N_c} \left( {\mathbb{1}}_{N_c} \right)_{ij} \,, \end{aligned} \end{aligned} $$(5.28)from which we read off that \(C_F = (N_c^2-1)/N_c\). For the adjoint representation, we use Eq. (1.56) to express the generators in terms of structure constants,

$$\displaystyle \begin{aligned} \left(T^a_A T^a_A \right)^{bc} = 2 f^{bak} f^{cak} \,. \end{aligned} $$(5.29)We express one of the structure constants in terms of generators through Eq. (1.48),

$$\displaystyle \begin{aligned} \left(T^a_A T^a_A \right)^{bc} = - {\mathrm{i}} 2 \sqrt{2} {\mathrm{Tr}}\left(T^b_F T^a_F T^k_F \right) f^{cak} \,, \end{aligned} $$(5.30)where we also used the anti-symmetry of \(f^{cak}\) to remove the commutator from the trace. Next, we move \(f^{cak}\) into the trace and rewrite \( f^{cak} T^k_F \) in terms of a commutator,

$$\displaystyle \begin{aligned} \left(T^a_A T^a_A\right)^{bc} = 2 {\mathrm{Tr}} \left( T^b_F T^a_F [T^a_F, T^c_F] \right) \,. \end{aligned} $$(5.31)Applying the completeness relation (1.51) finally gives

$$\displaystyle \begin{aligned} \left(T^a_A T^a_A\right)^{bc} = 2 \, N_c \left( {\mathbb{1}}\right)^{bc} \,, \end{aligned} $$(5.32)from which we see that \(C_A = 2 \, N_c\).

Note that in many QCD contexts it is customary to normalise the generators so that \(\mathrm {Tr}(T^a T^b) = \delta ^{ab}/2\), as opposed to \(\mathrm {Tr}(T^a T^b) = \delta ^{ab}\) as we do here. This different normalisation results in \(C_A = N_c\) and \(C_F = (N_c^2-1)/(2 N_c)\).
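Both Casimir values, and the effect of the alternative normalisation, can be confirmed numerically for small \(N_c\). The sketch below is illustrative only: `su_n_generators` is a hypothetical helper building a generalised Gell-Mann basis with \({\mathrm{Tr}}(T^a T^b) = \delta^{ab}\), and the adjoint Casimir is evaluated directly from the expression \(2 f^{bak} f^{cak}\) of Eq. (5.29).

```python
import numpy as np

def su_n_generators(n):
    """Hermitian, traceless n x n matrices with Tr(T^a T^b) = delta^{ab}."""
    gens = []
    for i in range(n):
        for j in range(i + 1, n):
            sym = np.zeros((n, n), dtype=complex)
            sym[i, j] = sym[j, i] = 1 / np.sqrt(2)
            asym = np.zeros((n, n), dtype=complex)
            asym[i, j], asym[j, i] = -1j / np.sqrt(2), 1j / np.sqrt(2)
            gens += [sym, asym]
    for k in range(1, n):
        diag = np.zeros((n, n), dtype=complex)
        diag[np.arange(k), np.arange(k)] = 1
        diag[k, k] = -k
        gens.append(diag / np.sqrt(k * (k + 1)))
    return np.array(gens)

casimirs = {}
for n_c in (2, 3, 4):
    T = su_n_generators(n_c)

    # Fundamental representation: T^a T^a = C_F * identity.
    fund = np.einsum('aij,ajk->ik', T, T)
    assert np.allclose(fund, fund[0, 0] * np.eye(n_c))

    # Structure constants from [T^a, T^b] = i sqrt(2) f^{abc} T^c.
    comm = np.einsum('aij,bjk->abik', T, T) - np.einsum('bij,ajk->abik', T, T)
    f = ((-1j / np.sqrt(2)) * np.einsum('abik,cki->abc', comm, T)).real

    # Adjoint representation, Eq. (5.29): (T_A^a T_A^a)^{bc} = 2 f^{bak} f^{cak}.
    adj = 2 * np.einsum('bak,cak->bc', f, f)
    assert np.allclose(adj, adj[0, 0] * np.eye(n_c**2 - 1))

    casimirs[n_c] = (fund[0, 0].real, adj[0, 0])

for n_c, (c_f, c_a) in casimirs.items():
    # Rescaling T -> T / sqrt(2) gives Tr(T^a T^b) = delta^{ab} / 2 and halves both values.
    print(n_c, c_f, c_a, c_f / 2, c_a / 2)
```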

The identities (a) and (b) follow straightforwardly from the definition of the bra-ket notation and from the expression of \(\gamma ^{\mu }\) in terms of Pauli matrices,

Setting \(j=i\) in the previous identities and using that \((\sigma ^{\mu })_{\beta \dot {\beta }} = \epsilon _{\beta \alpha } \epsilon _{\dot {\beta }\dot {\alpha }} (\bar {\sigma }^{\mu })^{\dot {\alpha }\alpha }\) gives the relation (c),

We obtain the relation (d) by substituting the identities \(\tilde {\lambda }_i^{\dot {\alpha }} \lambda _i^{\alpha } = p_i^{\mu } (\bar {\sigma }_{\mu })^{\dot {\alpha }\alpha }\) and \(\text{tr}\left (\sigma ^{\mu } \bar {\sigma }^{\nu }\right ) = 2 \eta ^{\mu \nu }\) into (b) with \(j=i\),

In order to prove the Schouten identity, we recall that a spinor \(\lambda _i\) is a two-dimensional object. We can therefore expand \(\lambda _3\) in a basis constructed from \(\lambda _1\) and \(\lambda _2\),

Contracting both sides of this equation by \(\lambda _1\) and \(\lambda _2\) gives a linear system of equations for the coefficients \(c_1\) and \(c_2\),

Substituting the solution of this system into Eq. (5.37) and rearranging the terms gives the Schouten identity. Finally, the identity (a) and \((\bar {\sigma }^{\mu })^{\dot {\alpha } \beta } (\bar {\sigma }_{\mu })^{\dot {\beta } \alpha } = 2 \epsilon ^{\dot {\alpha }\dot {\beta }} \epsilon ^{\beta \alpha }\) give the Fierz rearrangement,


(a)

The Lorentz generators in the scalar representation are obtained by setting to zero the x-independent representation matrices \(S^{\mu \nu }\) in Eq. (1.10):

$$\displaystyle \begin{aligned} M^{\mu\nu} = {\mathrm{i}}\, \left (x^{\mu}\,\frac{\partial}{\partial x_{\nu}} - x^{\nu}\,\frac{\partial}{\partial x_{\mu}} \right ) \, . \end{aligned} $$(5.40) We act with \(M^{\mu \nu }\) on a generic function \(f(x)\), which we express in terms of its Fourier transform as \(f(x) = \int {\mathrm {d}}^4 p \, \mathrm {e}^{{\mathrm {i}} p\cdot x} \tilde {f}(p)\). By integrating by parts and using \(x^{\mu } \, \mathrm {e}^{{\mathrm {i}} p\cdot x} = - {\mathrm {i}} \frac {\partial }{\partial p_{\mu }} \mathrm {e}^{{\mathrm {i}} p\cdot x}\) we obtain

$$\displaystyle \begin{aligned} M^{\mu \nu} f(x) = \int {\mathrm{d}}^4 p \, \mathrm{e}^{{\mathrm{i}} p\cdot x} \tilde{M}^{\mu \nu} \tilde{f}(p) \,, \end{aligned} $$(5.41)where

$$\displaystyle \begin{aligned} \tilde{M}^{\mu \nu} = {\mathrm{i}} \left( p^{\mu} \frac{\partial}{\partial p_{\nu}} - p^{\nu} \frac{\partial}{\partial p_{\mu}} \right) \end{aligned} $$(5.42)is the momentum-space realisation of the Lorentz generators. Indeed, one can verify that this form of the generators satisfies the commutation relations of the Poincaré algebra in Eqs. (1.8) and (1.9).


(b)

We begin with \(m_{\alpha \beta }\). It is instructive to spell out the indices of \(S^{\mu \nu }_{\mathrm {L}}\),

$$\displaystyle \begin{aligned} \left(S^{\mu\nu}_{\mathrm{L}}\right)_{\alpha\beta} = \frac{{\mathrm{i}}}{4} \epsilon_{\beta\gamma} \left[ \left(\sigma^{\mu}\right)_{\alpha\dot\alpha} \left(\bar{\sigma}^{\nu}\right)^{\dot\alpha\gamma} - \left(\sigma^{\nu}\right)_{\alpha\dot\alpha} \left(\bar{\sigma}^{\mu}\right)^{\dot\alpha\gamma} \right] \,. \end{aligned} $$(5.43)Contracting it with \(\tilde {M}_{\mu \nu }\) and doing a little spinor algebra gives

$$\displaystyle \begin{aligned} {} m_{\alpha\beta} = \frac{1}{2} \lambda_{\beta} \left(\sigma^{\mu}\right)_{\alpha\dot\alpha} \tilde{\lambda}^{\dot\alpha} \frac{\partial}{\partial p^{\mu}} + \frac{1}{2} \lambda_{\alpha} \tilde{\lambda}_{\dot \alpha} \left(\bar{\sigma}^{\mu}\right)^{\dot\alpha\gamma} \epsilon_{\gamma\beta} \frac{\partial}{\partial p^{\mu}} \,. \end{aligned} $$(5.44)We now need to express the derivatives with respect to \(p^{\mu }\) in terms of derivatives with respect to \(\lambda ^{\alpha }\) and \(\tilde {\lambda }^{\dot \alpha }\). For this purpose, we use the identity \(p^{\mu } = \tilde {\lambda }^{\dot \alpha } \lambda ^{\alpha } \left (\sigma ^{\mu }\right )_{\alpha \dot \alpha }/2\) (see Exercise 1.5), which allows us to use the chain rule,

$$\displaystyle \begin{aligned} {} \frac{\partial}{\partial \lambda^{\alpha}} = \frac{\partial p^{\mu}}{\partial \lambda^{\alpha}} \frac{\partial}{\partial p^{\mu}} = \frac{1}{2} \left(\sigma^{\mu}\right)_{\alpha\dot\alpha} \tilde{\lambda}^{\dot\alpha} \frac{\partial}{\partial p^{\mu}} \,. \end{aligned} $$(5.45)This takes care of the first term on the RHS of Eq. (5.44). For the second term, we do the same but with the equivalent identity \(p^{\mu } = \lambda _{\alpha } \tilde {\lambda }_{\dot \alpha } \left (\bar {\sigma }^{\mu }\right )^{\dot \alpha \alpha }/2\). Using that \(\frac {\partial \lambda _{\beta }}{\partial \lambda ^{\alpha }} = \epsilon _{\beta \alpha }\) we obtain

$$\displaystyle \begin{aligned} {} \frac{\partial}{\partial \lambda^{\alpha}} = \frac{1}{2} \tilde{\lambda}_{\dot\beta} \left(\bar{\sigma}^{\mu}\right)^{\dot\beta \beta} \epsilon_{\beta \alpha} \frac{\partial}{\partial p^{\mu}} \,. \end{aligned} $$(5.46)Substituting Eqs. (5.45) and (5.46) into Eq. (5.44) finally gives the desired expression of \(m_{\alpha \beta }\). The computation of \(\overline {m}_{\dot \alpha \dot \beta }\) is analogous.


(c)

The n-particle generators are given by

$$\displaystyle \begin{aligned} \begin{aligned} & m_{\alpha \beta} = \sum_{k=1}^n \left( \lambda_{k \alpha} \frac{\partial}{\partial \lambda_k^{\beta}} + \lambda_{k \beta} \frac{\partial}{\partial \lambda_k^{\alpha}}\right) \,, \quad \overline{m}_{\dot\alpha \dot\beta} = \sum_{k=1}^n \left( \tilde{\lambda}_{k \dot\alpha} \frac{\partial}{\partial \tilde{\lambda}_k^{\dot\beta}} + \tilde{\lambda}_{k \dot\beta} \frac{\partial}{\partial \tilde{\lambda}_k^{\dot\alpha}}\right) \,, \\ & \tilde{M}^{\mu \nu} = {\mathrm{i}} \sum_{k=1}^n \left( p_k^{\mu} \frac{\partial}{\partial p_{k \nu}} - p_k^{\nu} \frac{\partial}{\partial p_{k \mu}} \right) \,. \end{aligned} \end{aligned} $$(5.47) We act with \(m_{\alpha \beta }\) and \(\overline {m}_{\dot \alpha \dot \beta }\) on \(\langle ij \rangle = \lambda _i^{\gamma } \lambda _{j\,\gamma }\) and \([ ij ] = \tilde {\lambda }_{i \, \dot \gamma } \tilde {\lambda }_{j}^{\dot \gamma }\). \(\langle ij \rangle \) (\([ij]\)) depends only on the \(\lambda _i\) (\(\tilde {\lambda }_i\)) spinors, and is thus trivially annihilated by \(\overline {m}_{\dot \alpha \dot \beta }\) (\(m_{\alpha \beta }\)). With a bit more spinor algebra we can show that \(\langle ij \rangle \) is also annihilated by \(m_{\alpha \beta }\),

$$\displaystyle \begin{aligned} \begin{aligned} m_{\alpha \beta} \langle ij \rangle & = \sum_{k=1}^n \left[ \delta_{ik} \delta^{\gamma}_{\, \beta} \lambda_{k \, \alpha} \lambda_{j \, \gamma} + \delta_{jk} \epsilon_{\gamma \beta} \lambda_{k \, \alpha} \lambda_i^{\gamma} + \left( \alpha \leftrightarrow \beta \right) \right] = \\ & = \lambda_{i \, \alpha} \lambda_{j \, \beta} - \lambda_{i \, \beta} \lambda_{j \, \alpha} + \left( \alpha \leftrightarrow \beta \right) = 0 \,. \end{aligned} \end{aligned} $$(5.48)Similarly we can show that \(\overline {m}_{\dot \alpha \dot \beta } [ij] =0 \). The Lorentz generators are first-order differential operators. As a result, any function of a Lorentz-invariant object is Lorentz invariant as well. We can thus immediately conclude that \(s_{ij} = \langle ij\rangle [ji]\) is annihilated by \(m_{\alpha \beta }\) and \(\overline {m}_{\dot \alpha \dot \beta }\). Alternatively, we can show that

$$\displaystyle \begin{aligned} \tilde{M}_{\mu \nu} s_{ij} = 2 {\mathrm{i}} \left[ p_{i \mu} p_{j \nu} + p_{i \nu} p_{j \mu} - \left(\mu \leftrightarrow \nu \right) \right] = 0 \,. \end{aligned} $$(5.49)
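The statement that \(m_{\alpha\beta}\) annihilates \(\langle ij \rangle\), and hence any function of such brackets, can be probed numerically by applying the differential operator of Eq. (5.47) with finite differences. The sketch below assumes \(\lambda_{\alpha} = \epsilon_{\alpha\beta}\lambda^{\beta}\) with \(\epsilon_{12} = 1\) (the overall sign of \(\epsilon\) does not affect the outcome); `apply_m` is a hypothetical helper, and the test functions are our own choice.

```python
import numpy as np

# Assumed convention: lambda_alpha = EPS[alpha, beta] * lambda^beta.
EPS = np.array([[0, 1], [-1, 0]], dtype=complex)

def angle(lam, i, j):
    """<ij> = lambda_i^gamma lambda_{j gamma} for spinors stored as lam[k, alpha]."""
    return lam[i] @ EPS @ lam[j]

def apply_m(F, lam, h=1e-5):
    """m_{alpha beta} F = sum_k (lambda_{k alpha} dF/dlambda_k^beta + alpha <-> beta),
    with the derivatives evaluated by central differences."""
    grad = np.zeros_like(lam)
    for k in range(lam.shape[0]):
        for b in range(2):
            step = np.zeros_like(lam)
            step[k, b] = h
            grad[k, b] = (F(lam + step) - F(lam - step)) / (2 * h)
    lam_lower = lam @ EPS.T                      # lam_lower[k, alpha] = lambda_{k alpha}
    m = np.einsum('ka,kb->ab', lam_lower, grad)
    return m + m.T

rng = np.random.default_rng(seed=3)
lam = rng.normal(size=(3, 2)) + 1j * rng.normal(size=(3, 2))

# A single bracket and a polynomial in brackets are both annihilated ...
m_angle = apply_m(lambda l: angle(l, 0, 1), lam)
m_poly = apply_m(lambda l: angle(l, 0, 1) * angle(l, 1, 2) + angle(l, 2, 0) ** 2, lam)
# ... whereas a single spinor component is not Lorentz invariant.
m_component = apply_m(lambda l: l[0, 0], lam)

print(np.allclose(m_angle, 0, atol=1e-8),
      np.allclose(m_poly, 0, atol=1e-8),
      np.allclose(m_component, 0, atol=1e-8))
```

The last entry is included only to show that the check is not vacuous: a generic function of the spinor components is not annihilated.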


(a)

In order to construct an explicit expression for the polarisation vectors we will write a general ansatz and apply constraints to fix all free coefficients. The polarisation vector \(\epsilon ^{\dot {\alpha }\alpha }_i\) is a four-dimensional object which satisfies constraints involving the corresponding external momentum \(p_i^{\dot {\alpha } \alpha } = \tilde {\lambda }_i^{\dot {\alpha }} \lambda _i^{\alpha }\) and reference vector \(r_i^{\dot {\alpha } \alpha } = \tilde {\mu }_i^{\dot {\alpha }} \mu _i^{\alpha }\). For generic kinematics, i.e. for \(p_i \cdot r_i \neq 0\) (and thus \(\langle \lambda _i \mu _i \rangle \neq 0\) and \([ \tilde {\lambda }_i \tilde {\mu }_i ]\neq 0\)), one can show that \(\tilde {\lambda }_i^{\dot {\alpha }} \lambda _i^{\alpha }\), \(\tilde {\mu }_i^{\dot {\alpha }} \mu _i^{\alpha }\), \(\tilde {\lambda }_i^{\dot {\alpha }} \mu _i^{\alpha }\) and \(\tilde {\mu }_i^{\dot {\alpha }} \lambda _i^{\alpha }\) are linearly independent, and thus form a basis in which we can expand \(\epsilon ^{\dot {\alpha }\alpha }_i\). Our ansatz for \(\epsilon ^{\dot {\alpha }\alpha }_i\) therefore is

$$\displaystyle \begin{aligned} \epsilon^{\dot{\alpha}\alpha}_i = c_1 \, \tilde{\lambda}_i^{\dot{\alpha}} \lambda_i^{\alpha} + c_2 \, \tilde{\mu}_i^{\dot{\alpha}} \mu_i^{\alpha} + c_3 \, \tilde{\lambda}_i^{\dot{\alpha}} \mu_i^{\alpha} + c_4 \, \tilde{\mu}_i^{\dot{\alpha}} \lambda_i^{\alpha} \,. \end{aligned} $$(5.50)The transversality and the gauge choice,

$$\displaystyle \begin{aligned} \begin{aligned} & \epsilon^{\dot{\alpha}\alpha}_i \left(p_i\right)_{\alpha \dot{\alpha}} = c_2 \langle \mu_i \lambda_i \rangle [ \tilde{\lambda}_i \tilde{\mu}_i ] = 0 \,, \\ & \epsilon^{\dot{\alpha}\alpha}_i \left(r_i\right)_{\alpha \dot{\alpha}} = c_1 \langle \lambda_i \mu_i \rangle [ \tilde{\mu}_i \tilde{\lambda}_i ] = 0 \,, \end{aligned} \end{aligned} $$(5.51)imply that \(c_1 = c_2 = 0\). The light-like condition,

$$\displaystyle \begin{aligned} \epsilon^{\dot{\alpha}\alpha}_i \left(\epsilon_i\right)_{\alpha \dot{\alpha}}= 2 c_3 c_4 \langle \lambda_i \mu_i \rangle [ \tilde{\lambda}_i \tilde{\mu}_i ] = 0 \,, \end{aligned} $$(5.52) has two solutions: \(c_3=0\) or \(c_4 = 0\). We parametrise the two solutions as

$$\displaystyle \begin{aligned} \epsilon_{A,i}^{\dot{\alpha}\alpha} = n_A \tilde{\lambda}_i^{\dot{\alpha}} \mu_i^{\alpha} \,, \qquad \quad \epsilon_{B,i}^{\dot{\alpha}\alpha} = n_B \tilde{\mu}_i^{\dot{\alpha}} \lambda_i^{\alpha} \,. \end{aligned} $$(5.53)Next, we normalise the two solutions such that \(\epsilon _{A,i} \cdot \epsilon _{B,i}=-1\) and \(\epsilon _{A,i}^* = \epsilon _{B,i}\). This implies that

$$\displaystyle \begin{aligned} {} n_A n_B = \frac{-\sqrt{2}}{\langle \lambda_i \mu_i \rangle} \frac{\sqrt{2}}{[ \tilde{\lambda}_i \tilde{\mu}_i ]} \,, \qquad \qquad n_A^*=n_B \,. \end{aligned} $$(5.54)There is now some freedom in fixing \(n_A\) and \(n_B\), which we must use to ensure that the two solutions have the correct helicity scaling. We may parametrise \(n_A = n \, \mathrm {e}^{{\mathrm {i}} \varphi }\) and \(n_B = n \, \mathrm {e}^{-{\mathrm {i}} \varphi }\) with real n and \(\varphi \), and fix the phase \(\varphi \) by requiring that the solutions are eigenvectors of the helicity operator. It is however simpler to follow a heuristic approach. Recalling that \(\langle \lambda _i \mu _i \rangle ^* = - [ \tilde {\lambda }_i \tilde {\mu }_i ]\), we notice that a particularly simple solution to the constraints (5.54) is given by \(n_A = -\sqrt {2}/\langle \lambda _i \mu _i \rangle \) and \(n_B = \sqrt {2}/[ \tilde {\lambda }_i \tilde {\mu }_i ]\). Following this guess, we have two fully determined vectors which satisfy all constraints of the polarisation vectors:

$$\displaystyle \begin{aligned} \epsilon_{A,i}^{\dot{\alpha}\alpha} = -\sqrt{2} \, \frac{\tilde{\lambda}_i^{\dot{\alpha}} \mu_i^{\alpha}}{\langle \lambda_i \mu_i \rangle} \,, \qquad \quad \epsilon_{B,i}^{\dot{\alpha}\alpha} = \sqrt{2} \, \frac{\tilde{\mu}_i^{\dot{\alpha}} \lambda_i^{\alpha}}{[ \tilde{\lambda}_i \tilde{\mu}_i ]} \,. \end{aligned} $$(5.55)Finally, we need to check that \(\epsilon _{A,i}^{\dot {\alpha }\alpha }\) and \(\epsilon _{B,i}^{\dot {\alpha }\alpha }\) are indeed eigenvectors of the helicity generator h in Eq. (1.122), which in this case takes the form

$$\displaystyle \begin{aligned} h = \frac{1}{2} \left[ -\lambda_{i}^{\alpha}\frac{\partial}{\partial \lambda_{i}^{\alpha}} -\mu_{i}^{\alpha}\frac{\partial}{\partial \mu_{i}^{\alpha}} + \tilde\lambda_{i}^{{\dot{\alpha}}}\frac{\partial}{\partial \tilde\lambda_{i}^{{\dot{\alpha}}}} + \tilde{\mu}_{i}^{{\dot{\alpha}}}\frac{\partial}{\partial \tilde{\mu}_{i}^{{\dot{\alpha}}}} \right]\, . \end{aligned} $$(5.56)The explicit computation yields that

$$\displaystyle \begin{aligned} h \epsilon_{A,i}^{\dot{\alpha}\alpha} = +\epsilon_{A,i}^{\dot{\alpha}\alpha}\,, \qquad \quad h \epsilon_{B,i}^{\dot{\alpha}\alpha} = -\epsilon_{B,i}^{\dot{\alpha}\alpha}\,. \end{aligned} $$(5.57)We can therefore identify \(\epsilon _{A,i}^{\dot {\alpha }\alpha }=\epsilon _{+,i}^{\dot {\alpha }\alpha }\) and \(\epsilon _{B,i}^{\dot {\alpha }\alpha }=\epsilon _{-,i}^{\dot {\alpha }\alpha }\), which completes the derivation.


(b)

We rewrite the spinor expressions for the polarisation vectors as Lorentz vectors using the identities of Exercise 1.5,

$$\displaystyle \begin{aligned} \epsilon_{+,i}^{\mu} = -\frac{1}{\sqrt{2}} \frac{ \mu_i^{\alpha} (\sigma^{\mu})_{\alpha\dot{\alpha}} \tilde{\lambda}_i^{\dot{\alpha}}}{\langle \lambda_i \mu_i \rangle} \,, \qquad \quad \epsilon_{-,i}^{\mu} = \frac{1}{\sqrt{2}} \frac{ \lambda_i^{\alpha} (\sigma^{\mu})_{\alpha\dot{\alpha}} \tilde{\mu}_i^{\dot{\alpha}}}{[ \tilde{\lambda}_i \tilde{\mu}_i ]} \,. \end{aligned} $$(5.58)Plugging these expressions into the polarisation sum gives

$$\displaystyle \begin{aligned} \sum_{h=\pm} \epsilon_{h,i}^{\mu} \epsilon_{h,i}^{* \nu} = -\frac{1}{2} \frac{ (\sigma^{\mu})_{\alpha\dot{\alpha}} \tilde{\lambda}_i^{\dot{\alpha}} \lambda_i^{\beta} (\sigma^{\nu})_{\beta\dot{\beta}} \tilde{\mu}_i^{\dot{\beta}} \mu_i^{\alpha} + \left(\mu \leftrightarrow \nu \right)}{\langle \lambda_i \mu_i \rangle [ \tilde{\lambda}_i \tilde{\mu}_i ]} \,. \end{aligned} $$(5.59) Next, we rewrite the numerator in terms of traces as

$$\displaystyle \begin{aligned} \sum_{h=\pm} \epsilon_{h,i}^{\mu} \epsilon_{h,i}^{* \nu} = -\frac{1}{2} \frac{\text{Tr}\left[\sigma^{\mu} p_i \sigma^{\nu} r_i \right]+ \left(\mu \leftrightarrow \nu \right)}{\langle \lambda_i \mu_i \rangle [ \tilde{\lambda}_i \tilde{\mu}_i ]} \,. \end{aligned} $$(5.60) We then use the identity (1.29) to rewrite the trace of Pauli matrices in terms of Dirac matrices. Finally, by using

$$\displaystyle \begin{aligned} {} \begin{aligned} & {\mathrm{Tr}}\left(\gamma^{\mu} \gamma^{\nu} \gamma^{\rho} \gamma^{\tau}\right) = 4 \left(\eta^{\mu \nu} \eta^{\rho \tau} - \eta^{\mu \rho} \eta^{\nu \tau} + \eta^{\mu \tau} \eta^{\nu \rho} \right) \,, \\ & {\mathrm{Tr}}\left(\gamma^{\mu} \gamma^{\nu} \gamma^{\rho} \gamma^{\tau} \gamma_5 \right) = - 4 \, {\mathrm{i}} \, \epsilon^{\mu \nu \rho \tau} \,, \end{aligned} \end{aligned} $$(5.61)we obtain

$$\displaystyle \begin{aligned} \sum_{h=\pm} \epsilon_{h,i}^{\mu} \epsilon_{h,i}^{* \nu} = -\eta^{\mu\nu} + \frac{p_i^{\mu} r_i^{\nu}+p_i^{\nu} r_i^{\mu}}{p_i \cdot r_i} \,. \end{aligned} $$(5.62)
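The last step can be cross-checked numerically starting from the trace form (5.60). The sketch below makes two assumptions that we spell out: the bispinors in the trace are \(p_i^{\dot\alpha\alpha} = p_{i\,\rho} (\bar\sigma^{\rho})^{\dot\alpha\alpha}\) and likewise for \(r_i\), and the denominator is rewritten as \(\langle \lambda_i \mu_i \rangle [\tilde\lambda_i \tilde\mu_i] = -2\, p_i \cdot r_i\), which follows from \(s_{ij} = \langle ij \rangle [ji]\) and the antisymmetry of the brackets. The sample momenta and the helper names are ours.

```python
import numpy as np

one = np.eye(2, dtype=complex)
pauli = [np.array([[0, 1], [1, 0]], dtype=complex),
         np.array([[0, -1j], [1j, 0]], dtype=complex),
         np.array([[1, 0], [0, -1]], dtype=complex)]
sigma = [one] + pauli
sigma_bar = [one] + [-s for s in pauli]
eta = np.diag([1.0, -1.0, -1.0, -1.0])   # assumed signature (+,-,-,-)

def lightlike(three_vector):
    """Massless four-momentum (|v|, v) built from a three-vector."""
    v = np.asarray(three_vector, dtype=float)
    return np.concatenate(([np.linalg.norm(v)], v))

def bar_slash(v):
    """v_rho sigma_bar^rho, i.e. the bispinor with index structure [alpha_dot, alpha]."""
    v_lower = eta @ v
    return sum(v_lower[m] * sigma_bar[m] for m in range(4))

p = lightlike([0.4, -0.9, 1.3])    # gluon momentum (sample values)
r = lightlike([-0.7, 0.2, 0.5])    # reference momentum (sample values)
pr = p @ eta @ r

# N^{mu nu} = Tr[sigma^mu p sigma^nu r]; the sum in (5.60) symmetrises it.
N = np.array([[np.trace(sigma[m] @ bar_slash(p) @ sigma[n] @ bar_slash(r))
               for n in range(4)] for m in range(4)])
pol_sum = -0.5 * (N + N.T) / (-2 * pr)

expected = -eta + (np.outer(p, r) + np.outer(r, p)) / pr   # Eq. (5.62)

print(np.allclose(pol_sum, expected))
# The polarisation sum is transverse to both p and r.
print(np.allclose((eta @ p) @ expected, 0), np.allclose((eta @ r) @ expected, 0))
```

The antisymmetric Levi-Civita part of the trace drops out on symmetrising in \(\mu \leftrightarrow \nu\), which is why the result is real.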

We start from the full Feynman rule four-point vertex (1.66) contracted with dummy polarisation vectors \(\epsilon _{i}\),

and use \(f^{abe}\, f^{cde}=-{\mathrm {Tr}}([T^{a},T^{b}]\, [T^{c},T^{d}]) / 2\), which is obtained from Eqs. (1.48) and (1.51). Note that the \(U(1)\) piece cancels out here. Expanding out the commutators in the traces and collecting terms of identical colour ordering gives

which is the result quoted in Eq. (1.149).

Exercise 1.9: Independent Gluon Partial Amplitudes

(a)

Taking parity and cyclicity into account we have the following independent four-gluon tree-level amplitudes:

$$\displaystyle \begin{aligned} A^{\text{tree}}_{4}(1^{+},2^{+},3^{+},4^{+}) \, ,\qquad & A^{\text{tree}}_{4}(1^{-},2^{+},3^{+},4^{+}) \, , \\ A^{\text{tree}}_{4}(1^{-},2^{-},3^{+},4^{+})\, , \qquad & A^{\text{tree}}_{4}(1^{-},2^{+},3^{-},4^{+})\, . \end{aligned} $$The last two are related via the \(U(1)\) decoupling theorem as

$$\displaystyle \begin{aligned} \begin{aligned} A^{\text{tree}}_{4}(1^{-},2^{+},3^{-},4^{+}) &= - A^{\text{tree}}_{4}(1^{-},2^{+},4^{+},3^{-}) - A^{\text{tree}}_{4}(1^{-},4^{+},2^{+},3^{-}) \\ &= - A^{\text{tree}}_{4}(3^{-},1^{-},2^{+},4^{+}) - A^{\text{tree}}_{4}(3^{-},1^{-},4^{+},2^{+}) \, . \end{aligned} \end{aligned} $$(5.65)Hence only the three amplitudes \(A^{\text{tree}}_{4}(1^{+},2^{+},3^{+},4^{+})\), \(A^{\text{tree}}_{4}(1^{-},2^{+},3^{+},4^{+})\) and \(A^{\text{tree}}_{4}(1^{-},2^{-},3^{+},4^{+}) \) are independent. In fact the first two of this list vanish, so there is only one independent four-gluon amplitude at tree-level to be computed.


(b)

Moving on to the five-gluon case, we have the four cyclic and parity independent amplitudes

$$\displaystyle \begin{aligned} A^{\text{tree}}_{5}(1^{+},2^{+},3^{+},4^{+},5^{+}) \, ,\qquad & A^{\text{tree}}_{5}(1^{-},2^{+},3^{+},4^{+},5^{+}) \, , \\ A^{\text{tree}}_{5}(1^{-},2^{-},3^{+},4^{+},5^{+})\, , \qquad & A^{\text{tree}}_{5}(1^{-},2^{+},3^{-},4^{+},5^{+})\, . \end{aligned} $$Looking at the following \(U(1)\) decoupling relation we may again relate the last amplitude in the above list to the third one

$$\displaystyle \begin{aligned} \begin{aligned} &A^{\text{tree}}_{5}(2^{+},3^{-},4^{+},5^{+},1^{-}) = \\ & =- A^{\text{tree}}_{5}(3^{-},2^{+},4^{+},5^{+},1^{-}) - A^{\text{tree}}_{5}(3^{-},4^{+},2^{+},5^{+},1^{-})\\ &- A^{\text{tree}}_{5}(3^{-},4^{+},5^{+},2^{+},1^{-}) \\ &= - A^{\text{tree}}_{5}(1^{-},3^{-},2^{+},4^{+},5^{+}) - A^{\text{tree}}_{5}(1^{-},3^{-},4^{+},2^{+},5^{+})\\ &- A^{\text{tree}}_{5}(1^{-},3^{-},4^{+},5^{+},2^{+}) \,. \end{aligned} \end{aligned} $$(5.66) Hence also for the five-gluon case there are only three independent amplitudes: \(A^{\text{tree}}_{5}(1^{+},2^{+},3^{+},4^{+},5^{+})\), \(A^{\text{tree}}_{5}(1^{-},2^{+},3^{+},4^{+},5^{+})\), \(A^{\text{tree}}_{5}(1^{-},2^{-},3^{+},4^{+},5^{+})\). The first two in this list vanish, leaving us with one independent and non-trivial five-gluon tree-level amplitude, of the MHV type.
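The \(U(1)\) decoupling relations used in (a) and (b) can be checked numerically on MHV amplitudes. The sketch below assumes the standard Parke-Taylor form \(\langle ij \rangle^4 / (\langle 12 \rangle \langle 23 \rangle \cdots \langle n1 \rangle)\) for negative-helicity gluons i and j, up to an overall constant that drops out of the check, and uses random complex spinors; this is legitimate here because the relation holds as an algebraic identity in the spinor brackets. Function names and the zero-based labelling are ours.

```python
import numpy as np

def angle(lam, i, j):
    """<ij> as the antisymmetric product of two-component spinors (sign convention assumed)."""
    return lam[i, 0] * lam[j, 1] - lam[i, 1] * lam[j, 0]

def parke_taylor(lam, order, negative):
    """MHV amplitude for the colour ordering `order`, up to an overall constant.
    `negative` holds the labels of the two negative-helicity gluons."""
    i, j = negative
    denominator = 1
    for a, b in zip(order, order[1:] + order[:1]):
        denominator *= angle(lam, a, b)
    return angle(lam, i, j) ** 4 / denominator

rng = np.random.default_rng(seed=7)
lam = rng.normal(size=(5, 2)) + 1j * rng.normal(size=(5, 2))

# Gluons 1..5 are stored as labels 0..4; gluons 1 and 3 have negative helicity.
negative = (0, 2)

# Four gluons, Eq. (5.65): A(1,2,3,4) + A(1,2,4,3) + A(1,4,2,3) = 0.
four_point = sum(parke_taylor(lam, order, negative)
                 for order in [(0, 1, 2, 3), (0, 1, 3, 2), (0, 3, 1, 2)])

# Five gluons, Eq. (5.66): gluon 2 is inserted at every position of (3,4,5,1).
five_point = sum(parke_taylor(lam, order, negative)
                 for order in [(1, 2, 3, 4, 0), (2, 1, 3, 4, 0),
                               (2, 3, 1, 4, 0), (2, 3, 4, 1, 0)])

print(np.isclose(four_point, 0), np.isclose(five_point, 0))
```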

Exercise 1.10: The \(\overline {\text{MHV}}_3\) Amplitude

Using the three-point vertex in Eq. (1.149) we obtain

Choosing the same reference momentum for all polarisations, \(r^{\dot \alpha \alpha } = \tilde {\mu }^{\dot \alpha } \mu ^{\alpha }\), we have

where we used that \(p_i+p_j+p_k=0\). Substituting these into Eq. (5.67) yields

Since the left-handed spinors are collinear, we may set \(\lambda _{2}=a\lambda _{1}\) and \(\lambda _{3}=b\lambda _{1}\). Momentum conservation \(\lambda _{1}(\tilde \lambda _{1} + a\tilde \lambda _{2}+b\tilde \lambda _{3})=0\) then implies that \(a = [31]/ [23]\) and \(b= [12]/ [23]\). Substituting these into Eq. (5.69) finally gives

There are two colour-ordered diagrams contributing to \(A_{\bar {q}qgg}^{\text{tree}}(1^{-}_{\bar q}, 2^{+}_{q}, 3^{-}, 4^{+})\):

The first graph (I) is proportional to

which vanishes for the reference-vector choice \(\mu _{4}\, \tilde \mu _{4}=p_{1}\). This is so as

Evaluating the second graph (II) with the colour-ordered Feynman rules we obtain

where \(q=p_1+p_2\) and \(p_{ij} = p_i-p_j\). The term \((2)\) vanishes for our choice \(\mu _{4}\, \tilde \mu _{4}=p_{1}\),

For the term \((3)\), we note that

to find

which is killed by the choice \(\mu _3\tilde {\mu }_3 = p_2\). Hence, for this choice of reference vectors only the term \((1)\) in Eq. (5.73) contributes. One has

and, using momentum conservation,

Inserting these into the term \((1)\) of Eq. (5.73) and using \(q^{2}=\langle 12\rangle [21]\) yields

as claimed. The helicity count of our result is straightforward and correct:

We parametrise the collinear limit \(5^+ \parallel 6^+\) by

with \(P = \lambda _P \tilde {\lambda }_P = p_5 + p_6\). Substituting this into the Parke-Taylor formula (1.192) for \(A_{6}^{\mathrm {tree}}(1^{-}, 2^{-}, 3^{+}, 4^{+}, 5^{+}, 6^{+})\) gives

Comparing this to the expected collinear behaviour from Eq. (2.5),

and using Eq. (1.192) for the 5-gluon amplitudes, we see that the term with \({\mathrm {Split}}_{+}^{\mathrm {tree}}(x,5^{+},6^{+})\) is absent. Since \( A_{5}^{\mathrm {tree}}(1^{-}, 2^{-}, 3^{+}, 4^{+}, P^{-}) \neq 0\), we deduce that

as claimed.

Exercise 2.2: Soft Functions in the Spinor-Helicity Formalism

The leading soft function for a positive-helicity gluon with colour-ordered neighbours a and b is given by Eq. (2.19) with \(n=a\) and \(1=b\),

Using Eq. (1.124) for the polarisation vector with \(\mu \) as reference spinor, we have that

Substituting this with \(i=a,b\) into Eq. (5.83) and using the Schouten identity give

as claimed. We can obtain the negative-helicity soft function by acting with spacetime parity on the positive-helicity one. Parity exchanges \(\lambda ^{\alpha }\) and \(\tilde {\lambda }^{\dot \alpha }\), which amounts to swapping \(\langle i j \rangle \) with \([ j i ]\).
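The Schouten step used above can be checked with exact rational arithmetic. The following is a minimal sketch, assuming the bracket convention \(\langle i j \rangle = \lambda_i^1 \lambda_j^2 - \lambda_i^2 \lambda_j^1\) and denoting the soft leg by `q`. It only tests that the reference-spinor-dependent combination \(\langle \mu a \rangle / (\langle \mu q \rangle \langle q a \rangle) - \langle \mu b \rangle / (\langle \mu q \rangle \langle q b \rangle)\) collapses to a \(\mu\)-independent expression; the overall normalisation and signs of Eqs. (1.124) and (2.19) are not reproduced, and all function names are illustrative.

```python
from fractions import Fraction

def angle(u, v):
    """Antisymmetric spinor bracket <u v> (sign convention assumed)."""
    return u[0] * v[1] - u[1] * v[0]

def soft_combination(mu, a, b, q):
    """<mu a>/(<mu q><q a>) - <mu b>/(<mu q><q b>) for a reference spinor mu."""
    return (angle(mu, a) / (angle(mu, q) * angle(q, a))
            - angle(mu, b) / (angle(mu, q) * angle(q, b)))

def soft_closed_form(a, b, q):
    """<a b>/(<q a><q b>), which follows from the Schouten identity."""
    return angle(a, b) / (angle(q, a) * angle(q, b))

def spinor(x, y):
    """Two-component spinor with exact rational entries."""
    return (Fraction(x), Fraction(y))

# Hypothetical sample spinors; any generic values work.
a, b, q = spinor(1, 3), spinor(2, -5), spinor(4, 7)
references = [spinor(1, 0), spinor(3, 11), spinor(-2, 9)]
values = [soft_combination(mu, a, b, q) for mu in references]
```

Every entry of `values` coincides with `soft_closed_form(a, b, q)`, which illustrates that the reference spinor drops out of the soft function.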

We now turn to a positive-helicity graviton. The starting point is again Eq. (2.19),

We parametrise the graviton’s polarisation vector by two copies of the gauge-field one,

where we spelled out the arbitrary reference vectors x and y. Substituting this into Eq. (5.86) and using Eq. (1.124) for the polarisation vectors gives the desired result:

As above, the negative-helicity result can be obtained through parity conjugation.

Exercise 2.3: A \(\bar {q}qggg\) Amplitude from Collinear and Soft Limits

Let us consider the collinear limit \(3^{-} \parallel 4^{+}\) of the quark-gluon amplitude \(A_{\bar {q}qggg}^{\text{tree}}(1_{\bar q}^{-}, 2_{q}^{+}, 3^{-}, 4^{+}, 5^{+})\). We parametrise the limit with

where \(P=p_3+p_4\). The collinear factorisation theorem implies that

where we inserted Eq. (2.7) for the splitting functions, and Eqs. (2.36) and (2.37) for the amplitudes. The limiting form of Eq. (5.89) suggests that the amplitude before the limit takes the form

The form above leads us to conjecture the following n-particle generalisation:

By analogy with Eq. (5.89), we see that the conjectured form of the n-particle amplitude Eq. (5.91) is consistent with the collinear limits \(3^{-} \parallel 4^{+}\) and \(i^{+} \parallel (i+1)^{+}\) for \(i=4,\ldots , n-1\). Let us also study two soft limits. First we take \(\lambda _{3}\to 0\). Then we immediately see that

Since the expected behaviour in the limit is

and the relevant soft function is not zero, this implies that

which is thus the conjectured n-particle generalisation of Eq. (2.36). Taking the soft limit \(4^{+}\to 0\) (or any other positive-helicity gluon leg) on the other hand again allows us to check the self-consistency of Eq. (5.91),

We want to determine the NMHV six-gluon amplitude \(A_{6}^{\text{tree}}(1^{+},2^{+},3^{+},4^{-},5^{-}, 6^{-})\). The \([6^-1^+\rangle \) shift leads to the BCFW recursion relation

Since the all-plus/minus and single-plus/minus tree amplitudes vanish, only two contributions are non-zero. Diagrammatically, they are given by

where we used the short-hand notation \(P_{ij} = p_i+p_j\). The first diagram is given by

The corresponding z-pole is at \(z_{\text{I}} = P_{12}^2/\langle 6 | P_{12} | 1 ] = \langle 1 2 \rangle / \langle 6 2 \rangle \). Hence we have

where we used the Schouten identity to simplify \(|\hat {1}\rangle \), and, for \(\hat {P}_{12} = \hat {p}_1 + p_2\),

By combining the above we obtain

The expression for \( [\hat 6 \,\hat P_{12}]\) can be further simplified by noting that \(P_{26}^{2}+P_{12}^{2}+P_{16}^{2} = (p_6+p_1+p_2)^2\). Substituting all these into Eq. (5.97), with the sign convention (1.113), gives our final expression for diagram (I):

For the second diagram we start with

Now the shift parameter takes the value \(z_{\text{II}} = [ 6 5 ] / [ 5 1 ]\), which implies

and hence

Plugging these into Eq. (5.102) yields

Finally, by combining the two diagrams we obtain

In the soft limit \(p_5 \to 0\) we have the reduced momentum-conservation condition \(p_{1}+p_{2}+p_{3}+p_{4}+p_{6}=0\), which implies that \((p_6+p_1+p_2)^2 = \langle 3 4 \rangle [ 4 3 ]\) and \((p_1+p_5+p_6)^2 = \langle 1 6 \rangle [ 6 1 ]\). Using these in Eq. (2.67) and pulling out the pole term \(( [45] [56])^{-1}\) gives

We use the reduced momentum conservation and the Dirac equation to simplify

and the soft limit for \(\langle 4|P_{56}|1] = \langle 4 6 \rangle [ 6 1 ]\), obtaining

The two terms in the parentheses may be simplified using a Schouten identity as

By plugging this into the above we find

which indeed matches the expected factorisation,

with the soft function given in Eq. (2.25).

Exercise 2.6: Mixed-Helicity Four-Point Scalar-Gluon Amplitude

The \([4^- 1^+\rangle \) shift leads to the BCFW recursion

where we used Eqs. (2.78) and (2.79) for the three-point scalar-gluon amplitudes (with \(g=1\)), \(P=p_1+p_2\), and \(r_1\) (\(r_4\)) denotes the reference momentum of the gluon leg 1 (4). With the gauge choice \(r_{1}=\hat {p}_4\) and \(r_{4}=\hat {p}_1\) along with the identities \(|\hat 4\rangle =|4\rangle \) and \(|\hat 1]=|1]\) for the \([4^- 1^+\rangle \) shift one has

Plugging these into the above yields the final compact result

The commutation relations with the dilatation operator d,

are manifest from dimensional analysis. We recall in fact that d measures the mass dimension, i.e. \([d,f] = [f] f\) where \([f]\) denotes the dimension of f in units of mass, and that the helicity spinors \(\lambda _i\) and \(\tilde {\lambda }_i\) have mass dimension \(1/2\). It remains for us to compute the commutator \([k_{\alpha {\dot {\alpha }}}, p^{\beta {\dot {\beta }}}]\), which is given by

By using Eq. (2.102) for a single particle with raised index,

and the analogous equation with dotted indices, we obtain

which concludes the proof of Eq. (2.107).

Exercise 2.8: Inversion and Special Conformal Transformations

-

(a)

Using the inversion transformation \(I \, x^{\mu }= x^{\mu }/x^{2}\) and the translation transformation \(P^{\mu } \, x^{\mu } = x^{\mu }-a^{\mu }\) we have

$$\displaystyle \begin{aligned} I \, P^{\mu} \, I \, x^{\mu}= I \, P^{\mu} \, \frac{x^{\mu}}{x^{2}} & = I \, \frac{x^{\mu}-a^{\mu}}{(x-a)^{2}} = \frac{\frac{x^{\mu}}{x^{2}}-a^{\mu}}{\left(\frac{x}{x^{2}}-a \right)^{2}}= \frac{x^{\mu}-a^{\mu}x^{2}}{1-2 \, a\cdot x + a^{2}\, x^{2}} \,, \end{aligned} $$(5.120)which equals the finite special conformal transformation in Eq. (2.111).

-

(b)

We begin by computing the Jacobian factor \(|\partial x^{\prime }/\partial x|\), i.e. the absolute value of the determinant of the matrix with entries \(\partial x^{\prime \mu }/\partial x^{\nu }\) for \(\mu ,\nu =0,1,2,3\). It is convenient to decompose the special conformal transformation \(x\to x^{\prime }\) as in part (a):

$$\displaystyle \begin{aligned} x^{\mu} \quad \overset{I}{\longrightarrow} \quad y^{\mu} := \frac{x^{\mu}}{x^2} \quad \overset{P^{\mu}}{\longrightarrow} \quad z^{\mu} := y^{\mu} - a^{\mu} \quad \overset{I}{\longrightarrow} \quad x^{\prime \mu} := \frac{z^{\mu}}{z^2} \,. \end{aligned} $$(5.121)The Jacobian factor for \(x\to x^{\prime }\) then factorises into the product of the Jacobian factors for the three separate transformations:

$$\displaystyle \begin{aligned} \left| \frac{\partial x^{\prime}}{\partial x} \right| = \left| \frac{\partial x^{\prime}}{\partial z} \right| \, \left| \frac{\partial z}{\partial y} \right| \, \left| \frac{\partial y}{\partial x} \right| \,. \end{aligned} $$(5.122)For the first inversion, \(x^{\mu } \to y^{\mu }\), we have that

$$\displaystyle \begin{aligned} \frac{\partial y^{\mu}}{\partial x^{\nu}} = \frac{1}{x^2}\left( \eta^{\mu}{}_{\nu} - 2 \, \frac{x^{\mu} x_{\nu}}{x^2} \right) \,, \end{aligned} $$(5.123)so that the Jacobian factor takes the form

$$\displaystyle \begin{aligned} \left| \frac{\partial y}{\partial x} \right| = \big(x^2\big)^{-4} \left| \mathrm{det}\left( \eta^{\mu}{}_{\nu} - 2 \, \frac{x^{\mu} x_{\nu}}{x^2} \right) \right| \,. \end{aligned} $$(5.124)We use the representation of the determinant in terms of Levi-Civita symbols:

$$\displaystyle \begin{aligned} \left| \frac{\partial y}{\partial x} \right| &= \frac{\big(x^2\big)^{-4}}{4!} \left| \epsilon_{\mu_1 \mu_2 \mu_3 \mu_4} \epsilon^{\nu_1 \nu_2 \nu_3 \nu_4} \left( \eta^{\mu_1}{}_{\nu_1} - 2 \, \frac{x^{\mu_1} x_{\nu_1}}{x^2} \right)\right. \\ & \left.\quad \ldots \left( \eta^{\mu_4}{}_{\nu_4} - 2 \, \frac{x^{\mu_4} x_{\nu_4}}{x^2} \right) \right| \,. \end{aligned} $$(5.125)The contractions involving two, one, or no factors of \(\eta ^{\mu _i}{ }_{\nu _i}\) vanish because of the anti-symmetry of the Levi-Civita symbol. The contractions with three factors of \(\eta ^{\mu _i}{ }_{\nu _i}\) are equal. This leads us to

$$\displaystyle \begin{aligned} \left| \frac{\partial y}{\partial x} \right| = \frac{\big(x^2\big)^{-4}}{4!} \left| \epsilon_{\mu \nu \rho \sigma} \epsilon^{\mu \nu \rho \sigma} + 4 \, \epsilon_{\mu \nu \rho \sigma_1} \epsilon^{\mu \nu \rho \sigma_2} \left( - 2 \, \frac{x^{\sigma_1} x_{\sigma_2}}{x^2} \right) \right| \,. \end{aligned} $$(5.126)Using the identities \( \epsilon _{\mu \nu \rho \sigma } \epsilon ^{\mu \nu \rho \sigma } = - 4!\) and \( \epsilon _{\mu \nu \rho \sigma _1} \epsilon ^{\mu \nu \rho \sigma _2} = - 3! \, \eta ^{\sigma _2}{ }_{\sigma _1}\)Footnote 2 gives

$$\displaystyle \begin{aligned} \left| \frac{\partial y}{\partial x} \right| = \big(x^2\big)^{-4} \,. \end{aligned} $$(5.127)Similarly, for the second inversion, \(z \to x^{\prime }\), we have

$$\displaystyle \begin{aligned} \begin{aligned} \left| \frac{\partial x^{\prime}}{\partial z} \right| & = \big(z^2\big)^{-4} \\ & = \left( \frac{1 - 2 \, a \cdot x + a^2 x^2}{x^2} \right)^{-4} \,. \end{aligned} \end{aligned} $$(5.128)The Jacobian factor of the translation \(y^{\mu } \to z^{\mu } = y^{\mu }-a^{\mu }\) is simply 1, as the translation parameter \(a^{\mu }\) does not depend on \(y^{\mu }\). Putting the above together gives

$$\displaystyle \begin{aligned} \left| \frac{\partial x^{\prime}}{\partial x} \right| = \left(1 - 2 \, a \cdot x + a^2 x^2\right)^{-4} \,. \end{aligned} $$(5.129)
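The Jacobian factor in Eq. (5.129) can be cross-checked numerically. The sketch below assumes the mostly-minus metric, composes inversion, translation and inversion as in Eq. (5.121), and estimates the Jacobian matrix by central finite differences; the step size `h` and the helper names are illustrative choices, not part of the text.

```python
ETA = (1.0, -1.0, -1.0, -1.0)  # assumed metric signature (+,-,-,-)

def dot(u, v):
    """Minkowski product of two four-vectors."""
    return sum(g * ui * vi for g, ui, vi in zip(ETA, u, v))

def inversion(x):
    """I: x -> x / x^2."""
    x2 = dot(x, x)
    return [xi / x2 for xi in x]

def special_conformal(x, a):
    """Composition I, translation by -a, I, as in Eq. (5.121)."""
    y = inversion(x)
    z = [yi - ai for yi, ai in zip(y, a)]
    return inversion(z)

def closed_form(x, a):
    """Right-hand side of Eq. (5.120)."""
    x2 = dot(x, x)
    denom = 1.0 - 2.0 * dot(a, x) + dot(a, a) * x2
    return [(xi - ai * x2) / denom for xi, ai in zip(x, a)]

def determinant(m):
    """Determinant by Gaussian elimination with partial pivoting."""
    m = [row[:] for row in m]
    n, det = len(m), 1.0
    for i in range(n):
        pivot = max(range(i, n), key=lambda r: abs(m[r][i]))
        if m[pivot][i] == 0.0:
            return 0.0
        if pivot != i:
            m[i], m[pivot] = m[pivot], m[i]
            det = -det
        det *= m[i][i]
        for r in range(i + 1, n):
            factor = m[r][i] / m[i][i]
            for c in range(i, n):
                m[r][c] -= factor * m[i][c]
    return det

def jacobian_factor(x, a, h=1e-5):
    """|det(dx'/dx)| estimated with central finite differences."""
    rows = []
    for nu in range(4):
        xp, xm = list(x), list(x)
        xp[nu] += h
        xm[nu] -= h
        fp, fm = special_conformal(xp, a), special_conformal(xm, a)
        rows.append([(p - m) / (2.0 * h) for p, m in zip(fp, fm)])
    return abs(determinant(rows))  # transposed matrix, same determinant

def expected_factor(x, a):
    """Right-hand side of Eq. (5.129)."""
    return (1.0 - 2.0 * dot(a, x) + dot(a, a) * dot(x, x)) ** (-4)
```

For a generic point `x` and parameter `a` away from the light cone, `jacobian_factor(x, a)` and `expected_factor(x, a)` should agree up to the finite-difference error.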

We now consider the transformation rule for the scalar field \({\varPhi }\) in Eq. (2.112),

and expand both sides in a Taylor series around \(a^{\mu } = 0\). For the LHS we obtain

Since \(\mathnormal {\varPhi }^{\prime }(x) - \mathnormal {\varPhi }(x) = \mathcal {O}(a)\), we can replace \(\partial _{\nu } \mathnormal {\varPhi }^{\prime }(x) \) by \(\partial _{\nu } \mathnormal {\varPhi }(x) \) in the above. Plugging this into Eq. (5.130) and expanding also the RHS gives

By comparing this to the defining equation of the generators (2.113),

we can read off the explicit form of the generator,

as claimed.

Exercise 2.9: Kinematical Jacobi Identity

We start from the expression of \(n_s\) given in Eq. (2.119). We choose the reference momenta \(r_i\) for the polarisation vectors \(\epsilon _i\) so as to kill as many terms as possible. We recall that \(\epsilon _i \cdot r_i = 0\). Choosing \(r_1 = p_2\), \(r_2 = p_1\), \(r_3 = p_4\), and \(r_4 = p_3\) yields

The other factors are obtained from \(n_s\) by replacing the particles’ labels as

Adding the three factors gives

which vanishes because of momentum conservation.

Exercise 2.10: Five-Point KLT Relation

The squaring relation (2.139) in the five-point case reads

where \(S_2\) is the set of permutations of \(\{2,3\}\), namely \(S_2 = \big \{ \{2,3\}, \, \{3,2\} \big \}\). We recall that the KLT kernels \(S[\sigma |\rho ]\) are given by Eq. (2.140) with \(n=5\),

where \(\theta (\sigma _{j}, \sigma _{i})_{\rho }=1\) if \(\sigma _{j}\) is before \(\sigma _{i}\) in the permutation \(\rho \), and zero otherwise. We then have

where \(s_{ij} = 2 \, p_i \cdot p_j\), and similarly

Plugging the above into the squaring relation (5.138) gives

The terms in the square brackets can be simplified using the BCJ relations (2.133). For instance, for the first term we use

which is obtained by replacing \(1\to 3\), \(2\to 1\), \(3\to 2\), \(4 \to 5\) and \(5 \to 4\) in Eq. (2.133) with \(n=5\). Substituting

into Eq. (5.142) finally gives

as claimed.

Exercise 3.1: The Four-Gluon Amplitude in \(\mathcal {N}=4\) Super-Symmetric Yang-Mills Theory

We begin with the \(s_{12}\) channel. The cut integrand is given by the following product of tree amplitudes:

The constant factors multiplying the amplitudes deserve a few remarks. First, we have a factor counting each field’s multiplicity in the \(\mathcal {N}=4\) super-multiplet: 1 gluon (g), 4 gluinos (\(\mathnormal {\varLambda }\)), and 6 scalars (\(\phi \)). Next, the factors of imaginary unit “\({\mathrm {i}}\)” follow from the factorisation properties of tree-level amplitudes as discussed below Eq. (2.3). In particular, note that the factorisation of the fermion line does not require any factors of “\({\mathrm {i}}\)”, as opposed to gluons and scalars. Finally, the gluino’s contribution comes with a further factor of \(-1\) coming from the Feynman rule for the closed fermion loop. The only non-vanishing contribution comes from the product of purely gluonic amplitudes with \(h_1=-\) and \(h_2=+\),

This is the same as in the non-supersymmetric YM theory computed in Sect. 3.2 (see Eq. 3.34), hence we can immediately see that

as claimed.

In contrast to the \(s_{12}\) channel, all fields contribute to the \(s_{23}\)-channel cut:

The second and fourth terms can be obtained by swapping \(1 \leftrightarrow 2\) and \(3 \leftrightarrow 4\) in the first and the third ones, respectively. We put all terms over a common denominator:

Factoring it out we have

Here the “magic” of \(\mathcal {N}=4\) super Yang-Mills theory comes into play: the five terms above conspire together to form the fourth power of a binomial,

which can be further simplified using a Schouten identity, obtaining

This matches \(\mathcal {C}_{23|41}^{\text{box}}\) (see Eq. (3.46)), and we can thus conclude that

-

(a)

We parametrise the loop momentum \(l_1\) using the spinors of the external momenta as in Eq. (3.58). We then rewrite the quadruple cut equations,

$$\displaystyle \begin{aligned} \begin{cases} l_1^2 = 0 \,, \\ l_2^2 = (l_1-p_2)^2 = 0 \,, \\ l_3^2 = (l_1-p_2-p_3)^2 = 0 \,, \\ l_4^2 = (l_1+p_1)^2 = 0 \,, \end{cases} \end{aligned} $$(5.155)in terms of the parameters \(\alpha _i\), as

$$\displaystyle \begin{aligned} \begin{cases} \alpha_1 s_{12} = 0 \,, \\ \alpha_2 s_{12} = 0 \,, \\ (\alpha_1 \alpha_2-\alpha_3 \alpha_4)s_{12} = 0 \,, \\ \alpha_1 s_{13} + \alpha_2 s_{23} + \alpha_3 \langle 1 3 \rangle [ 3 2 ] + \alpha_4 \langle 2 3 \rangle [ 3 1 ] = s_{23} \,. \end{cases} \end{aligned} $$(5.156)For generic kinematics this system has two solutions:

$$\displaystyle \begin{aligned} \left(l_1^{(1)}\right)^{\mu} = \frac{\langle 2 3 \rangle}{\langle 1 3 \rangle} \frac{1}{2} \langle 1 | \gamma^{\mu} | 2 ] \,, \qquad \left(l_1^{(2)}\right)^{\mu} = \frac{[ 2 3 ]}{[ 1 3 ]} \frac{1}{2} \langle 2 | \gamma^{\mu} | 1 ] \,. \end{aligned} $$(5.157)The spinors of the on-shell loop momenta on the first solution can be chosen as

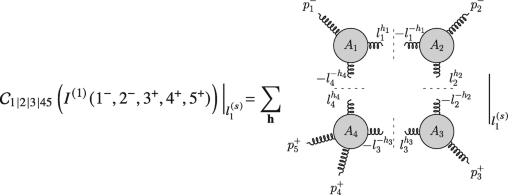

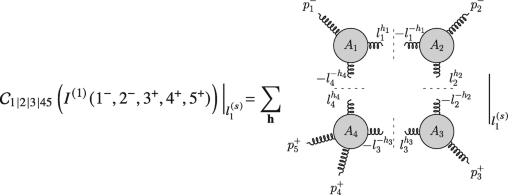

$$\displaystyle \begin{aligned} {} \begin{array}{llll} & |l_1^{(1)} \rangle = \frac{\langle 2 3 \rangle}{\langle 1 3 \rangle} |1\rangle \,, \qquad \quad && |l_1^{(1)}] = |2] \,, \\ & |l_2^{(1)} \rangle = \frac{\langle 2 1 \rangle}{\langle 1 3 \rangle} |3\rangle \,, && |l_2^{(1)}] = |2] \,, \\ & |l_3^{(1)} \rangle = |3\rangle \,, && |l_3^{(1)}] = \frac{\langle 2 1 \rangle}{\langle 1 3 \rangle} |2] - |3] \,, \\ & |l_4^{(1)} \rangle = |1\rangle \,, && |l_4^{(1)}] = |1] + \frac{\langle 2 3 \rangle}{\langle 1 3 \rangle} |2] \,. \end{array} \end{aligned} $$(5.158)The spinors for the second solution, \(l_1^{(2)}\), are obtained by swapping \(\langle \rangle \leftrightarrow []\) in the first one. For each solution \(l_1^{(s)}\), the quadruple cut is obtained by summing over all internal helicity configurations \(\mathbf h = (h_1,h_2,h_3,h_4)\) (with \(h_i = \pm \)) the product of four tree-level amplitudes,

(5.159)

Consider \(A_4\). The only non-vanishing four-gluon tree-level amplitude with two positive-helicity gluons is the MHV one, namely \(h_4=-h_3=-\). \(A_3\) is thus \(\overline {\text{MHV}}\), and \(h_2=+\). Since two three-point vertices of the same type (both MHV or both \(\overline {\text{MHV}}\)) cannot be adjacent for generic kinematics, \(A_2\) must be MHV, and \(A_1\) \(\overline {\text{MHV}}\). This fixes the remaining helicity, \(h_1=+\). The quadruple cuts therefore receive a contribution from one helicity configuration only, which we represent using the black/white notation as

(5.160)

Recall that the trivalent vertices impose constraints on the momenta. The \(\overline {\text{MHV}}\) vertex attached to \(p_1\) and the MHV vertex attached to \(p_2\) imply that \(|l_1\rangle \propto |1\rangle \) and \(|l_1] \propto |2]\), or equivalently that \(l_1^{\mu } \propto \langle 1 | \gamma ^{\mu } |2]\). Only the solution \(l_1^{(1)}\) is compatible with this constraint. Indeed, we can show explicitly that the contribution from the second solution vanishes, for instance

(5.161)

where we used \(|l_1^{(2)}] = \big ([23]/[13] \big ) |1]\) and \(|l_4^{(2)}] = |1]\). We assign spinors to \(-p\) according to the convention (1.113), namely \(|-p\rangle = {\mathrm {i}} |p\rangle \) and \(|-p] = {\mathrm {i}} |p]\). We thus have that

$$\displaystyle \begin{aligned} \mathcal{C}_{1|2|3|45}\left( I^{(1)}(1^-,2^-,3^+,4^+,5^+) \right) \bigg|{}_{l_1^{(2)}} = 0 \,. \end{aligned} $$(5.162)On the first solution, the quadruple cut is given by

$$\displaystyle \begin{aligned} \mathcal{C}_{1|2|3|45} \big|{}_{l_1^{(1)}} = \frac{[ l_1 l_4 ]^3}{[ 1 l_1 ] [ l_4 1 ]} \frac{\langle l_1 2 \rangle^3}{\langle 2 l_2 \rangle \langle l_2 l_1 \rangle} \frac{[ 3 l_3 ]^3}{[ l_3 l_2 ] [ l_2 3 ]} \frac{\langle l_4 l_3 \rangle^3}{\langle l_3 4 \rangle \langle 4 5 \rangle \langle 5 l_4 \rangle } \Bigg|{}_{l_1^{(1)}} \,, \end{aligned} $$(5.163)where we omitted the argument of \(\mathcal {C}\) for the sake of compactness. Plugging in the spinors from Eq. (5.158) and simplifying gives

$$\displaystyle \begin{aligned} \mathcal{C}_{1|2|3|45}\left( I^{(1)}(1^-,2^-,3^+,4^+,5^+) \right) \bigg|{}_{l_1^{(1)}} = {\mathrm{i}} \, s_{12} s_{34} \left( \frac{{\mathrm{i}} \, \langle 1 2 \rangle^3}{\langle 2 3 \rangle \langle 3 4 \rangle \langle 4 5 \rangle \langle 5 1 \rangle} \right) \,, \end{aligned} $$(5.164)where in the parentheses we recognise the tree-level amplitude. Averaging over the two cut solutions (as in Eq. (3.73) for the four-gluon case) gives the four-dimensional coefficient of the scalar box integral,

$$\displaystyle \begin{aligned} c_{0;1|2|3|45}(1^-,2^-,3^+,4^+,5^+) & \!=\! \frac{1}{2} \sum_{s=1}^2 \mathcal{C}_{1|2|3|45}\left( I^{(1)}(1^-,2^-,3^+,4^+,5^+) \right) \bigg|{}_{l_1^{(s)}} \\ & = \frac{{\mathrm{i}}}{2} s_{12} s_{34} A^{(0)}(1^-,2^-,3^+,4^+,5^+) \,, \end{aligned} $$(5.165)as claimed.
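The first cut solution in Eq. (5.157) can be verified with exact arithmetic. The sketch below is illustrative only: it represents momenta as \(2\times 2\) bispinor matrices, assumes the bracket convention \(\langle i j \rangle = \lambda_i^1 \lambda_j^2 - \lambda_i^2 \lambda_j^1\), and uses the fact that the determinant of the bispinor is proportional to the square of the momentum. The sample spinors are hypothetical, and momentum conservation for legs 4 and 5 is not needed for the four on-shell conditions in Eq. (5.155).

```python
from fractions import Fraction

def angle(u, v):
    """Antisymmetric bracket <u v> (sign convention assumed)."""
    return u[0] * v[1] - u[1] * v[0]

def bispinor(lam, lam_t, coeff=1):
    """Rank-one 2x2 matrix coeff * lam^alpha * lam_t^alphadot."""
    return [[coeff * lam[i] * lam_t[j] for j in range(2)] for i in range(2)]

def lincomb(*terms):
    """Sum of sign * matrix over (sign, matrix) pairs."""
    return [[sum(s * m[i][j] for s, m in terms) for j in range(2)]
            for i in range(2)]

def det2(m):
    """Determinant, proportional to the square of the four-momentum."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]

def cut_momenta(lam, lam_t):
    """Loop momenta l_1..l_4 on the first solution, from legs 1, 2, 3."""
    c = angle(lam[2], lam[3]) / angle(lam[1], lam[3])
    p = {i: bispinor(lam[i], lam_t[i]) for i in (1, 2, 3)}
    l1 = bispinor(lam[1], lam_t[2], c)   # l_1 = c |1>[2|
    l2 = lincomb((1, l1), (-1, p[2]))    # l_2 = l_1 - p_2
    l3 = lincomb((1, l2), (-1, p[3]))    # l_3 = l_1 - p_2 - p_3
    l4 = lincomb((1, l1), (1, p[1]))     # l_4 = l_1 + p_1
    return l1, l2, l3, l4

def spinor(x, y):
    """Two-component spinor with exact rational entries."""
    return (Fraction(x), Fraction(y))

# Hypothetical sample kinematics for legs 1, 2, 3.
lam = {1: spinor(1, 2), 2: spinor(3, -1), 3: spinor(2, 5)}
lam_t = {1: spinor(2, 7), 2: spinor(-1, 4), 3: spinor(5, 3)}
momenta = cut_momenta(lam, lam_t)
on_shell = [det2(l) == 0 for l in momenta]
```

All four entries of `on_shell` are `True`, and `momenta[1]` equals \(\frac{\langle 2 1 \rangle}{\langle 1 3 \rangle} |3\rangle [2|\), in agreement with the spinors assigned to \(l_2^{(1)}\) in Eq. (5.158).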

-

(b)

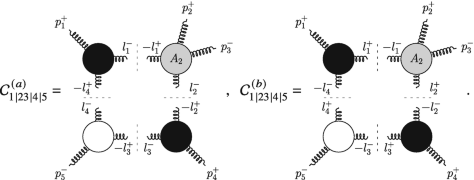

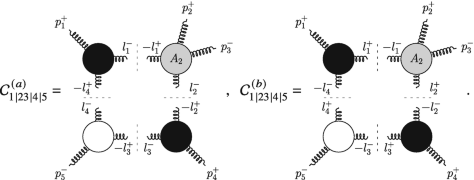

The solution of the quadruple cut can be obtained as in part (a) of this exercise. Alternatively, we can take a more direct route by exploiting the black/white formalism for the trivalent vertices. On each of the two solutions \(l_1^{(s)}\) the quadruple cut is given by

(5.166)

The only non-vanishing tree-level four-point amplitude is the MHV (or equivalently \(\overline {\text{MHV}}\)) one, so we have either \(h_1=h_2=-\) or \(h_1=h_2=+\). Specifying \(h_1\) and \(h_2\) and excluding adjacent black/white vertices fixes all the other helicities, so that the quadruple cut receives contributions from two helicity configurations:

(5.167)

In both cases the trivalent vertices constrain \(|l_4\rangle \propto |1\rangle \) and \(|l_4] \propto |5]\). The two configurations are thus non-vanishing only on one solution of the quadruple cut, say \(l_1^{(1)}\), which we parametrise starting from \(l_4\) as

$$\displaystyle \begin{aligned} \left( l_4^{(1)} \right)^{\mu} = a \, \frac{1}{2} \langle 1 | \gamma^{\mu} |5] \,. \end{aligned} $$(5.168)The value of a is fixed by requiring that \(l_2^{(1)} = l_4^{(1)}+p_4+p_5\) is on shell (i.e. \(\big (l_2^{(1)}\big )^2=0\)), which gives \(a=\langle 4 5 \rangle / \langle 1 4 \rangle \). The spinors for the internal momenta on this solution can then be chosen as

$$\displaystyle \begin{aligned} {} \begin{array}{llll} & |l_1^{(1)} \rangle = |1\rangle \,, && |l_1^{(1)}] = \frac{\langle 4 5 \rangle}{\langle 1 4 \rangle} |5] - |1] \,, \\ & |l_2^{(1)} \rangle = |4\rangle \,, && |l_2^{(1)}] = |4] + \frac{\langle 1 5 \rangle}{\langle 1 4 \rangle} |5] \,, \\ & |l_3^{(1)} \rangle = |4\rangle \,, && |l_3^{(1)}] = \frac{\langle 1 5 \rangle}{\langle 1 4 \rangle} |5] \,, \\ & |l_4^{(1)} \rangle = |1\rangle \,, \qquad \quad && |l_4^{(1)}] = \frac{\langle 4 5 \rangle}{\langle 1 4 \rangle} |5] \,. \end{array} \end{aligned} $$(5.169)The first contribution to the quadruple cut is given by

$$\displaystyle \begin{aligned} \begin{aligned} \mathcal{C}_{1|23|4|5}^{(a)} \Big|{}_{l_1^{(1)}} & = \frac{[l_4 1]^3}{[1l_1][l_1l_4]} \frac{\langle3 l_2\rangle^3}{\langle l_2 l_1 \rangle \langle l_1 2 \rangle \langle 2 3 \rangle} \frac{[l_2 4]^3}{[4l_3][l_3l_2]} \frac{\langle 5 l_4 \rangle^3}{\langle l_4 l_3 \rangle \langle l_3 5 \rangle} \bigg|{}_{l_1^{(1)}} \\ & = {\mathrm{i}} \, s_{45} s_{15} \left( \frac{\langle 3 4 \rangle \langle 1 5 \rangle}{\langle 1 4 \rangle \langle 3 5 \rangle} \right)^4 \left(\frac{{\mathrm{i}} \, \langle 3 5 \rangle^4}{\langle 1 2 \rangle \langle 2 3 \rangle \langle 3 4 \rangle \langle 4 5 \rangle \langle 5 1 \rangle} \right) \,, \end{aligned} \end{aligned} $$(5.170)where in the right-most parentheses of the second line we recognise the tree-level amplitude \(A^{(0)}(1^+,2^+,3^-,4^+,5^-)\). The computation of the second term is analogous. Summing up the two contributions finally gives

$$\displaystyle \begin{aligned} \mathcal{C}_{1|23|4|5} \Big|{}_{l_1^{(1)}} = {\mathrm{i}} \, s_{45} s_{15} \, A^{(0)} \, \left[ \left( \frac{\langle 3 4 \rangle \langle 1 5 \rangle}{\langle 1 4 \rangle \langle 3 5 \rangle} \right)^4 + \left( \frac{\langle 1 3 \rangle \langle 4 5 \rangle}{\langle 1 4 \rangle \langle 3 5 \rangle} \right)^4 \right] \,, \end{aligned} $$(5.171)where we omitted the argument of \(\mathcal {C}_{1|23|4|5}\) and \(A^{(0)}\) for compactness. The second solution, \(l_1^{(2)}\), is the complex conjugate of the first one. The quadruple cut vanishes on it by the argument above,

$$\displaystyle \begin{aligned} \mathcal{C}_{1|23|4|5} \left(I^{(1)}(1^+,2^+,3^-,4^+,5^-)\right) \Big|{}_{l_1^{(2)}} = 0\,. \end{aligned} $$(5.172)Finally, we obtain the coefficient of the scalar box function at order \(\epsilon ^0\) by averaging over the two solutions:

$$\displaystyle \begin{aligned} & c_{0;1|23|4|5}(1^+,2^+,3^-,4^+,5^-) \\ & \quad = \frac{{\mathrm{i}}}{2} s_{45} s_{15} \, A^{(0)} \, \left[ \left( \frac{\langle 3 4 \rangle \langle 1 5 \rangle}{\langle 1 4 \rangle \langle 3 5 \rangle} \right)^4 + \left( \frac{\langle 1 3 \rangle \langle 4 5 \rangle}{\langle 1 4 \rangle \langle 3 5 \rangle} \right)^4 \right] \,. \end{aligned} $$(5.173)

-

(a)

We contract both sides of the form-factor decomposition in Eq. (3.81) by the basis tensors \(\eta ^{\mu _1 \mu _2}\) and \(p_1^{\mu _1} p_1^{\mu _2}\), obtaining

$$\displaystyle \begin{aligned} {} \begin{cases} F_2^{[D]} \left[k^2 \right] = a_{2,00} \, D + a_{2,11} \, p_1^2 \,, \\ F_2^{[D]}\left[(k\cdot p_1)^2 \right] = a_{2,00} \, p_1^2 + a_{2,11} \, (p_1^2)^2 \,. \\ \end{cases} \end{aligned} $$(5.174)For the sake of simplicity we omit the dependence of the bubble integrals on \(p_1\), and we introduce the short-hand notations \(D_1=k^2\) and \(D_2 = (k-p_1)^2\) for the inverse propagators. Solving the linear system (5.174) for the form factors gives

$$\displaystyle \begin{aligned} {} \begin{aligned} a_{2,00} & = \frac{1}{D-1} \Big( F_2^{[D]}\left[k^2 \right] - \frac{1}{p_1^2} F_2^{[D]}\left[(k\cdot p_1)^2 \right] \Big) \,, \\ a_{2,11} & = \frac{1}{p_1^2 (D-1)} \Big( \frac{D}{p_1^2} F_2^{[D]}\left[(k\cdot p_1)^2 \right] - F_2^{[D]}\left[k^2 \right]\Big) \,. \end{aligned} \end{aligned} $$(5.175)The contraction of the rank-2 bubble with \(\eta ^{\mu _1\mu _2}\) is given by a scaleless integral and thus vanishes in dimensional regularisation,

$$\displaystyle \begin{aligned} {} F_2^{[D]}\left[ k^2 \right] = \int_k \frac{1}{(k-p_1)^2} = 0 \,. \end{aligned} $$(5.176)The contraction with \(p_1^{\mu _1} p_1^{\mu _2}\) is instead given by

$$\displaystyle \begin{aligned} {} F_2^{[D]}\left[ (k\cdot p_1)^2 \right] = \frac{1}{4} \int_k \left( \frac{(p_1^2)^2}{D_1 D_2} + \frac{D_1}{D_2} + \frac{D_2}{D_1} - 2 + 2 \frac{p_1^2}{D_2} - 2 \frac{p_1^2}{D_1} \right) \,, \end{aligned} $$(5.177)where we used that \(2\, k\cdot p_1 = D_1 - D_2 + p_1^2\). All terms but the first one vanish in dimensional regularisation. To see this explicitly, consider for instance the second term. By shifting the loop momentum by \(p_1\) we can rewrite it as a combination of manifestly scaleless integrals,

$$\displaystyle \begin{aligned} \int_k \frac{k^2}{(k-p_1)^2} = \int_k 1 + p_1^2 \int_k \frac{1}{k^2} + 2 p_1^{\mu} \int_k \frac{k_{\mu}}{k^2} \,, \end{aligned} $$(5.178)which vanish in dimensional regularisation (see Sect. 4.2.1 in Chap. 4). Equation (5.177) thus reduces to

$$\displaystyle \begin{aligned} {} F_2^{[D]}\left[ (k\cdot p_1)^2 \right] = \frac{(p_1^2)^2}{4} F_2^{[D]}[1] \,. \end{aligned} $$(5.179)Substituting Eqs. (5.176) and (5.179) into Eq. (5.175) finally gives

$$\displaystyle \begin{aligned} \begin{aligned} a_{2,00} & = -\frac{p_1^2}{4 (D-1)} \, F_2^{[D]}\left[1 \right] \,, \\ a_{2,11} & = \frac{D}{4 (D-1)} \, F_2^{[D]}\left[1 \right] \,. \end{aligned} \end{aligned} $$(5.180)

-

(b)

We proceed as we did in part (a). For compactness, we define

$$\displaystyle \begin{aligned} \begin{aligned} & T_{1}^{\mu_1 \mu_2 \mu_3} = \eta^{\mu_1\mu_2} p_1^{\mu_3} + \eta^{\mu_2\mu_3} p_1^{\mu_1} + \eta^{\mu_3\mu_1} p_1^{\mu_2} \,, \\ & T_{2}^{\mu_1 \mu_2 \mu_3} = p_1^{\mu_1} p_1^{\mu_2} p_1^{\mu_3} \,. \end{aligned} \end{aligned} $$(5.181)Note that \(F_2^{[D]}\left [k^{\mu _1} k^{\mu _2} k^{\mu _3} \right ]\) is symmetric under permutations of the Lorentz indices. While \(T_2\) enjoys this symmetry, the three separate terms of \(T_1\) do not. That is why they appear together in \(T_1\) rather than with distinct form factors. The symmetry property would in fact constrain the latter to be equal. We then contract both sides of the tensor decomposition (3.82) with the basis tensors, and solve the ensuing \(2\times 2\) linear system for the form factors. Using the following contractions,

$$\displaystyle \begin{aligned} \begin{aligned} & F_2^{[D]}\left[k^{\mu_1} k^{\mu_2} k^{\mu_3} \right] \left(T_1\right)_{\mu_1 \mu_2 \mu_3} = 0 \,, \\ & F_2^{[D]}\left[k^{\mu_1} k^{\mu_2} k^{\mu_3} \right] \left(T_2\right)_{\mu_1 \mu_2 \mu_3} = \frac{(p_1^2)^3}{8} F_2^{[D]}\left[1\right]\,, \\ & T_1^{\mu_1\mu_2\mu_3} \left(T_1\right)_{\mu_1\mu_2\mu_3} = 3 p_1^2 (D+2) \,, \\ & T_1^{\mu_1\mu_2\mu_3} \left(T_2\right)_{\mu_1\mu_2\mu_3} = 3 (p_1^2)^2 \,, \\ & T_2^{\mu_1\mu_2\mu_3} \left(T_2\right)_{\mu_1\mu_2\mu_3} = (p_1^2)^3 \,, \end{aligned} \end{aligned} $$(5.182)we obtain

$$\displaystyle \begin{aligned} \begin{aligned} a_{2,001} & = - \frac{p_1^2}{8 (D-1)} \, F_2^{[D]}\left[1\right] \,, \\ a_{2,111} & = \frac{D+2}{8(D-1)} \, F_2^{[D]} \left[1\right] \,. \end{aligned} \end{aligned} $$(5.183)
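Both linear systems of this exercise are small enough to be solved exactly by Cramer's rule. The sketch below is a hedged cross-check rather than the notebook accompanying the text: it takes the contractions in Eqs. (5.174), (5.176), (5.179) and (5.182) as input, treats \(D\), \(s = p_1^2\) and \(F_2^{[D]}[1]\) as rational numbers, and in addition evaluates the tensor contractions of \(T_1\) and \(T_2\) by brute force in an integer number of dimensions with a Euclidean metric (an assumption made only to keep the index sums simple).

```python
from fractions import Fraction
from itertools import product

def solve_2x2(m, rhs):
    """Cramer's rule for a 2x2 linear system m . x = rhs."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return ((rhs[0] * d - b * rhs[1]) / det, (a * rhs[1] - c * rhs[0]) / det)

def rank2_form_factors(D, s, F1):
    """(a_{2,00}, a_{2,11}) from Eq. (5.174) with Eqs. (5.176) and (5.179)."""
    D, s, F1 = Fraction(D), Fraction(s), Fraction(F1)
    return solve_2x2(((D, s), (s, s * s)), (Fraction(0), s * s * F1 / 4))

def rank3_form_factors(D, s, F1):
    """(a_{2,001}, a_{2,111}) from the contractions in Eq. (5.182)."""
    D, s, F1 = Fraction(D), Fraction(s), Fraction(F1)
    m = ((3 * s * (D + 2), 3 * s * s), (3 * s * s, s ** 3))
    return solve_2x2(m, (Fraction(0), s ** 3 * F1 / 8))

def basis_contractions(p):
    """(T1.T1, T1.T2, T2.T2) by explicit index sums, Euclidean metric."""
    n = len(p)
    delta = lambda i, j: 1 if i == j else 0
    t1t1 = t1t2 = t2t2 = 0
    for i, j, k in product(range(n), repeat=3):
        t1 = delta(i, j) * p[k] + delta(j, k) * p[i] + delta(k, i) * p[j]
        t2 = p[i] * p[j] * p[k]
        t1t1 += t1 * t1
        t1t2 += t1 * t2
        t2t2 += t2 * t2
    return t1t1, t1t2, t2t2
```

For instance, `rank2_form_factors(7, 3, 1)` can be compared with the closed forms in Eq. (5.180) evaluated at \(D=7\), \(p_1^2=3\), and `basis_contractions((1, 2, 3, 4, 5))` with the last three lines of Eq. (5.182) at \(D=5\).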

-

(a)

The physical space is 3-dimensional, and may be spanned by \(\{p_1,p_2,p_3\}\). The spurious space is 1-dimensional. In order to construct a vector \(\omega \) spanning the spurious space, we start from a generic ansatz made from the spinors associated with \(p_1\) and \(p_2\),

$$\displaystyle \begin{aligned} \omega^{\mu} = \alpha_1 p_1^{\mu} + \alpha_2 p_2^{\mu} + \alpha_3 \frac{1}{2} \langle 1 | \gamma^{\mu} | 2 ] + \alpha_4 \frac{1}{2} \langle 2 | \gamma^{\mu} | 1 ] \,, \end{aligned} $$(5.184)and constrain it by imposing the orthogonality to the external momenta and the normalisation (\(\omega ^2 = 1\)). While \(\omega \cdot p_1 = 0\) and \(\omega \cdot p_2 = 0\) fix \(\alpha _1 = \alpha _2 = 0\), the orthogonality to \(p_3\) and the normalisation imply

$$\displaystyle \begin{aligned} \alpha_3 \langle 1 3 \rangle [ 3 2 ] + \alpha_4 \langle 2 3 \rangle [ 3 1 ] = 0 \,, \qquad \quad \alpha_3 \alpha_4 \, s_{12} = -1 \,, \end{aligned} $$(5.185)where \(s_{ij} = (p_i + p_j)^2\). The solution is given by

$$\displaystyle \begin{aligned} {} \omega^{\mu} = \frac{1}{2 \sqrt{s_{12} s_{23} s_{13}}} \big[ \langle 1 | \gamma^{\mu} | 2 ] \langle 2 3 \rangle [ 3 1 ] - \langle 2 | \gamma^{\mu} | 1 ] \langle 1 3 \rangle [ 3 2 ] \big] \,. \end{aligned} $$(5.186)

-

(b)

We rewrite the spinor chains in Eq. (5.186) in terms of traces of Pauli matrices,

$$\displaystyle \begin{aligned} \omega^{\mu} = \frac{1}{2 \sqrt{s_{12} s_{23} s_{13}}} \big[ \text{Tr}\left( \sigma^{\mu} \bar{\sigma}^{\rho} \sigma^{\tau} \bar{\sigma}^{\nu} \right) - \text{Tr}\left( \sigma^{\mu} \bar{\sigma}^{\nu} \sigma^{\tau} \bar{\sigma}^{\rho} \right) \big] p_{1 \nu} p_{2 \rho} p_{3 \tau} \,. \end{aligned} $$(5.187)We trade the Pauli matrices for Dirac matrices through Eq. (1.29). The terms free of \(\gamma _5\) cancel out thanks to the cyclicity of the trace and the identity \(\text{Tr}\left ( \gamma ^{\mu } \gamma ^{\nu } \gamma ^{\rho } \gamma ^{\tau } \right ) = \text{Tr}\left ( \gamma ^{\tau } \gamma ^{\rho } \gamma ^{\nu } \gamma ^{\mu } \right )\). We rewrite the traces with \(\gamma _5\) in terms of the Levi-Civita symbol using \(\text{Tr}\left (\gamma ^{\mu } \gamma ^{\nu } \gamma ^{\rho } \gamma ^{\tau } \gamma _5\right ) = -4 {\mathrm {i}} \epsilon ^{\mu \nu \rho \tau }\), obtaining

$$\displaystyle \begin{aligned} \omega^{\mu} = \frac{2 {\mathrm{i}}}{ \sqrt{s_{12} s_{23} s_{13}}} \epsilon^{\mu \nu \rho \tau} p_{1\nu} p_{2\rho} p_{3\tau} \,. \end{aligned} $$(5.188)
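The Levi-Civita form in Eq. (5.188) makes the defining properties of \(\omega\) easy to test. The sketch below assumes the mostly-minus metric and \(\epsilon^{0123}=+1\) (the checks are insensitive to this sign), uses three massless momenta with integer components chosen purely for illustration, and avoids the square root and the imaginary unit by computing \(\omega^2 = -4\, v^2 / (s_{12} s_{23} s_{13})\) with \(v^{\mu} = \epsilon^{\mu\nu\rho\tau} p_{1\nu} p_{2\rho} p_{3\tau}\).

```python
from fractions import Fraction
from itertools import permutations

ETA = (1, -1, -1, -1)  # assumed metric signature (+,-,-,-)

def dot(u, v):
    """Minkowski product of two four-vectors with upper indices."""
    return sum(g * ui * vi for g, ui, vi in zip(ETA, u, v))

def sign(perm):
    """Parity of a permutation, from its number of inversions."""
    inversions = sum(1 for i in range(len(perm))
                     for j in range(i + 1, len(perm)) if perm[i] > perm[j])
    return -1 if inversions % 2 else 1

def epsilon_contract(p1, p2, p3):
    """v^mu = eps^{mu nu rho tau} p1_nu p2_rho p3_tau, eps^{0123} = +1 assumed."""
    lower = [[g * c for g, c in zip(ETA, p)] for p in (p1, p2, p3)]
    v = [0, 0, 0, 0]
    for perm in permutations(range(4)):
        mu, nu, rho, tau = perm
        v[mu] += sign(perm) * lower[0][nu] * lower[1][rho] * lower[2][tau]
    return v

def omega_squared(p1, p2, p3):
    """omega^2 for omega = 2i v / sqrt(s12 s23 s13), massless p_i."""
    v = epsilon_contract(p1, p2, p3)
    s12, s23, s13 = 2 * dot(p1, p2), 2 * dot(p2, p3), 2 * dot(p1, p3)
    return Fraction(-4 * dot(v, v), s12 * s23 * s13)

# Illustrative massless momenta (each satisfies E^2 = |p|^2).
p1, p2, p3 = (3, 1, 2, 2), (7, 2, 3, 6), (9, 4, 4, 7)
v = epsilon_contract(p1, p2, p3)
```

Here `dot(v, p)` vanishes for each of the three momenta by the anti-symmetry of the Levi-Civita symbol, and `omega_squared(p1, p2, p3)` tests the normalisation \(\omega^2 = 1\).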

-

(a)

We rewrite the triangle integral as

$$\displaystyle \begin{aligned} F^{[D]}_3(p_1,p_2) = \int_k \frac{1}{(-D_1) \, (-D_2) \, (-D_3)} \,, \end{aligned} $$(5.189)with inverse propagators

$$\displaystyle \begin{aligned} D_1 = k^2 \,, \quad D_2 =(k-p_1)^2 \,, \quad D_3 = (k-p_1-p_2)^2 \,. \end{aligned} $$(5.190)The \({\mathrm {i}} 0\) is irrelevant here, and we thus omit it. We introduce a two-dimensional parametrisation of the loop momentum \(k^{\mu }\) by expanding it in a basis formed by two independent external momenta, say \(p_1^{\mu }\) and \(p_2^{\mu }\), as

$$\displaystyle \begin{aligned} {} k^{\mu} = a_1 \, p_1^{\mu} + a_2 \, p_2^{\mu} \,. \end{aligned} $$(5.191)Since there are only two degrees of freedom, parametrised by \(a_1\) and \(a_2\), the three inverse propagators of the triangle integral cannot be algebraically independent (over the field of the rational functions of s). In order to find the relation among them, we express them in terms of \(a_1\) and \(a_2\),

$$\displaystyle \begin{aligned} {} \begin{aligned} & D_1 = s \, a_1 (a_1-a_2) \,, \\ & D_2 = s \, (1-a_1) (1+a_2-a_1) \,, \\ & D_3 = s \, (1 - a_1) (a_2 - a_1) \,, \end{aligned} \end{aligned} $$(5.192)with \(s=p_1^2\). We then solve two of these equations to express \(a_1\) and \(a_2\) in terms of inverse propagators. Choosing \(D_1\) and \(D_3\) we obtain

$$\displaystyle \begin{aligned} a_1 = \frac{D_1}{D_1-D_3} \,, \quad a_2 = \frac{(D_1-D_3)^2 - s \, D_1}{s \, (D_3-D_1)} \,. \end{aligned} $$(5.193)Plugging these into the expression of \(D_2\) in Eq. (5.192) gives a relation among the three inverse propagators,

$$\displaystyle \begin{aligned} {} 1 = \frac{1}{s} \left( D_1+D_2-D_3 - \frac{D_1 D_2}{D_3} \right) \,. \end{aligned} $$(5.194)Inserting this into the numerator of the triangle integral, expanding, and removing the scaleless integrals which integrate to zero finally gives

$$\displaystyle \begin{aligned} \begin{aligned} F^{[2-2\epsilon]}_{3}(p_1,p_2) & = \frac{1}{s} \int_k \frac{1}{k^2 (k-p_1)^2} + \text{terms missed in }2D \\ & = \frac{1}{s} \, F_2^{[2-2\epsilon]}(p_1) + \text{terms missed in }2D\,. \end{aligned} \end{aligned} $$(5.195)Up to terms which are missed by the two-dimensional analysis, the triangle integral in \(D=2-2\epsilon \) dimensions can be expressed in terms of a bubble integral, and is thus reducible.
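The algebra leading to Eq. (5.194) can be spot-checked with exact rational arithmetic. The following Python sketch is not part of the accompanying notebook; the function names and the sample values are illustrative assumptions. It evaluates the inverse propagators of Eq. (5.192) at a rational point, inverts for \(a_1\) and \(a_2\) as in Eq. (5.193), and confirms the relation (5.194).

```python
from fractions import Fraction as Fr


def inverse_propagators(a1, a2, s):
    """Inverse propagators of Eq. (5.192) for k = a1*p1 + a2*p2."""
    d1 = s * a1 * (a1 - a2)
    d2 = s * (1 - a1) * (1 + a2 - a1)
    d3 = s * (1 - a1) * (a2 - a1)
    return d1, d2, d3


def solve_parameters(d1, d3, s):
    """Invert D1 and D3 for (a1, a2), as in Eq. (5.193)."""
    a1 = d1 / (d1 - d3)
    a2 = ((d1 - d3) ** 2 - s * d1) / (s * (d3 - d1))
    return a1, a2


def relation_rhs(d1, d2, d3, s):
    """Right-hand side of Eq. (5.194); equals 1 for two-dimensional k."""
    return (d1 + d2 - d3 - d1 * d2 / d3) / s


# Arbitrary sample point (illustrative values with D3 != 0 and D1 != D3).
a1, a2, s = Fr(2, 7), Fr(-5, 3), Fr(11, 4)
d1, d2, d3 = inverse_propagators(a1, a2, s)

print(solve_parameters(d1, d3, s) == (a1, a2))  # True
print(relation_rhs(d1, d2, d3, s) == 1)         # True
```

Exact fractions are used instead of floating-point numbers so that the identity is tested without rounding ambiguity.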

(b)

In \(D=2\) dimensions, any three momenta are linearly dependent. The Gram matrix \(G(k,p_1,p_2)\) therefore has vanishing determinant,

$$\displaystyle \begin{aligned} -\frac{1}{4} s^2 k^2 - s \, (k\cdot p_1) (k \cdot p_2) - s \, (k \cdot p_2)^2 = 0 \,, \end{aligned} $$(5.196)which can be verified using a two-dimensional parametrisation of \(k^{\mu }\) such as Eq. (5.191). In order to convert it into a relation among the inverse propagators, we express the scalar products of the loop momentum in terms of inverse propagators,

$$\displaystyle \begin{aligned} k^2 = D_1 \,, \qquad k\cdot p_1 = \frac{D_1-D_2+s}{2} \,, \qquad k\cdot p_2 = \frac{D_2-D_3-s}{2} \,. \end{aligned} $$(5.197)Expressing the determinant of \(G(k,p_1,p_2)\) in terms of inverse propagators gives the relation (5.194).
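The intermediate algebra, which is left implicit above, can be sketched as follows. Inserting Eq. (5.197) into Eq. (5.196) and multiplying by \(-4/s\) gives

$$\displaystyle \begin{aligned} \begin{aligned} 0 & = s \, D_1 + (D_1 - D_2 + s) (D_2 - D_3 - s) + (D_2 - D_3 - s)^2 \\ & = s \, D_1 + (D_2 - D_3 - s) (D_1 - D_3) \\ & = D_1 D_2 - D_1 D_3 - D_2 D_3 + D_3^2 + s \, D_3 \,. \end{aligned} \end{aligned} $$

Dividing by \(s \, D_3\) and rearranging reproduces Eq. (5.194).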

(c)

The steps are the same as for the previous point, but the algebraic manipulations are cumbersome. We implement them in the Mathematica notebook Ex3.5_Reducibility.wl [1]. In \(D=4\) dimensions the following Gram determinant vanishes:

$$\displaystyle \begin{aligned} {} \text{det} \, G\left(k,p_1,p_2,p_3,p_4\right) = 0\,. \end{aligned} $$(5.198)We aim to rewrite this in terms of the inverse propagators of the pentagon:

$$\displaystyle \begin{aligned} {} \begin{array}{ll} D_1 = k^2 \,, & D_4 = (k-p_1-p_2-p_3)^2 \,, \\ D_2 = (k-p_1)^2 \,, \qquad \quad & D_5 = (k-p_1-p_2-p_3-p_4)^2 \,. \\ D_3 = (k-p_1-p_2)^2 \,, \qquad \qquad & \\ \end{array} \end{aligned} $$(5.199)The first step is to parametrise the kinematics in terms of independent invariants \(s_{ij} = (p_i+p_j)^2\). It is instructive to count the latter for a generic number of particles \(n\). There are \(n(n+1)/2\) distinct scalar products \(p_i\cdot p_j\) with \(i,j=1,\ldots ,n\). Momentum conservation gives \(n\) constraints, as we may contract \(\sum _{i=1}^n p_i^{\mu } = 0\) with \(p_{j \mu }\) for any \(j=1,\ldots ,n\). Moreover, we have \(n\) on-shell constraints: \(p_i^2=0\) for \(i=1,\ldots ,n\). We are thus left with \(\frac {n(n+1)}{2} -2 n = n (n-3)/2 \) independent invariants. For \(n=4\) that gives 2 independent invariants (the familiar Mandelstam invariants \(s\) and \(t\)), while for \(n=5\) we have 5. It is convenient to choose them as \(\mathbf {s} := \{s_{12},s_{23},s_{34},s_{45},s_{51}\}\). We now need to express all scalar products \(p_i\cdot p_j\) in terms of \(\mathbf {s}\). We may do so by solving the linear system of equations obtained from momentum conservation as discussed above:

$$\displaystyle \begin{aligned} \sum_{i=1}^5 p_i \cdot p_j = 0 \,, \quad \forall \, j=1,\ldots, 5\,. \end{aligned} $$(5.200)We rewrite the latter in terms of the invariants \(s_{ij}\) and solve. We do this in the Mathematica notebook, obtaining, for example, that \(p_1 \cdot p_4 = (s_{23} - s_{45} - s_{51})/2\).
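The counting of invariants and the solution of the momentum-conservation system can also be illustrated outside the notebook. The Python sketch below is an independent illustration with assumed helper names (`count_invariants`, `scalar_products`). Rather than solving the linear system, it hard-codes a candidate solution for the non-adjacent invariants in terms of \(\mathbf{s}\) and then checks that every equation of the system (5.200) is satisfied.

```python
from fractions import Fraction as Fr


def count_invariants(n):
    """Independent invariants for n massless particles: n(n+1)/2 - 2n."""
    return n * (n + 1) // 2 - 2 * n


def scalar_products(s12, s23, s34, s45, s51):
    """Return {(i, j): p_i.p_j} for five massless momenta summing to zero."""
    s = {(1, 2): s12, (2, 3): s23, (3, 4): s34, (4, 5): s45, (1, 5): s51}
    # (p1+p2+p3)^2 = (p4+p5)^2 gives s12 + s13 + s23 = s45, and cyclically.
    s[(1, 3)] = s45 - s12 - s23
    s[(2, 4)] = s51 - s23 - s34
    s[(3, 5)] = s12 - s34 - s45
    s[(1, 4)] = s23 - s45 - s51
    s[(2, 5)] = s34 - s51 - s12
    dots = {}
    for (i, j), sij in s.items():
        dots[(i, j)] = dots[(j, i)] = sij / 2  # s_ij = 2 p_i.p_j for massless legs
    for i in range(1, 6):
        dots[(i, i)] = Fr(0)  # on-shell condition p_i^2 = 0
    return dots


# Arbitrary rational values for the five cyclic invariants (illustrative only).
dots = scalar_products(Fr(3), Fr(-1, 2), Fr(5, 7), Fr(2), Fr(-4, 3))

print(count_invariants(4), count_invariants(5))  # 2 5
# Momentum conservation, Eq. (5.200): sum_i p_i.p_j = 0 for each j.
print(all(sum(dots[(i, j)] for i in range(1, 6)) == 0 for j in range(1, 6)))  # True
```

The invariants should be passed as `Fraction` objects so that the division by two stays exact.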

We now turn our attention to the scalar products involving the loop momentum: \(k^2\), and \(k\cdot p_i\) for \(i=1,\ldots ,4\) (\(k\cdot p_5\) is related to the others by momentum conservation). Having parametrised the kinematics in terms of independent invariants \(\mathbf {s}\), we may solve the system (5.199) to express them in terms of inverse propagators and \(\mathbf {s}\). For example, we obtain that \(k \cdot p_3 = (D_3 - D_4 + s_{45} -s_{12})/2\). Using this result, we can rewrite the Gram-determinant condition (5.198) as

$$\displaystyle \begin{aligned} 1 = \sum_{i=1}^5 A_i D_i + \sum_{i\le j=1}^5 B_{ij} D_i D_j \,, \end{aligned} $$(5.201)where \(A_i\) and \(B_{ij}\) are rational functions of the invariants \(\mathbf {s}\). Plugging this into the numerator of the pentagon integral and expanding finally gives the reduction into integrals with fewer propagators, up to terms missed in \(D=4\).
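The elimination of the loop-momentum scalar products can be made explicit in one case; the following lines are a worked sketch added for illustration. Subtracting adjacent inverse propagators in Eq. (5.199) and using \(p_3^2=0\) gives

$$\displaystyle \begin{aligned} D_4 - D_3 = - 2 \, k \cdot p_3 + 2 \, (p_1+p_2) \cdot p_3 = - 2 \, k \cdot p_3 + s_{13} + s_{23} \,. \end{aligned} $$

Momentum conservation implies \(s_{13} = s_{45} - s_{12} - s_{23}\), so that \(k \cdot p_3 = (D_3 - D_4 + s_{45} - s_{12})/2\), in agreement with the expression quoted above.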

(a)

We parametrise the loop momentum as in Eq. (3.159). We recall that \(p_1 \cdot \omega _i = 0\) and \(\omega _i \cdot \omega _j = \delta _{ij} \, \omega _i^2\). The coefficient \(\alpha _1\) can be expressed in terms of propagators and external invariants by noticing that \(\alpha _1 = k\cdot p_1/p_1^2\), and rewriting \(k\cdot p_1\) in terms of inverse propagators (3.158). This gives

$$\displaystyle \begin{aligned} {} \alpha_1 = \frac{D_1-D_2 +p_1^2 + m_1^2-m_2^2}{2 \, p_1^2}\,. \end{aligned} $$(5.202)We thus see that \(\alpha _1\) does not depend on the loop momentum on the bubble cut \(D_1=D_2=0\). As a result, the loop-momentum parametrisation of the bubble numerator \(\mathnormal {\varDelta }_{1|2}\) depends only on three ISPs: \(k\cdot \omega _i\) for \(i=1,2,3\). The maximum tensor rank for a renormalisable gauge theory is two, hence a general parametrisation is

$$\displaystyle \begin{aligned} {} \begin{aligned} \mathnormal{\varDelta}_{1|2}&(k \cdot \omega_1, k \cdot \omega_2, k \cdot \omega_3) = c_{000} \\ & + c_{100} (k \cdot \omega_1) + c_{010} (k \cdot \omega_2) + c_{001} (k \cdot \omega_3) \\ & + c_{110} (k \cdot \omega_1) (k \cdot \omega_2) + c_{101} (k \cdot \omega_1) (k \cdot \omega_3) + c_{011} (k \cdot \omega_2) (k \cdot \omega_3) \\ & + c_{200} (k \cdot \omega_1)^2 + c_{020} (k \cdot \omega_2)^2 + c_{002} (k \cdot \omega_3)^2 \,. \end{aligned} \end{aligned} $$(5.203)The cut condition \(D_1=0\) implies one more constraint on the loop-momentum dependence:

$$\displaystyle \begin{aligned} {} \mathcal{C}_{1|2}\left(k_{\parallel}^2\right) + \sum_{i=1}^3 \frac{(k\cdot \omega_i)^2}{\omega_i^2} -m_1^2 = 0 \,. \end{aligned} $$(5.204)Since \(\mathcal {C}_{1|2}\big (k_{\parallel }^2\big ) = (m_1^2 - m_2^2 + p_1^2)^2/(4 \, p_1^2)\) does not depend on the loop momentum on the cut, we may use Eq. (5.204) to eliminate, say, \((k\cdot \omega _3)^2\) from the numerator (5.203). It is however more convenient to implement the constraint (5.204) so as to maximise the number of terms which integrate to zero. The terms in the second and third lines on the RHS of Eq. (5.203) contain odd powers of \(k\cdot \omega _i\), and thus vanish upon integration. Using transverse integration one can show that

$$\displaystyle \begin{aligned} \int_k \frac{(k \cdot \omega_i)^2}{D_1 D_2} = \frac{\omega_i^2}{\omega_j^2} \int_k \frac{(k \cdot \omega_j)^2}{D_1 D_2} \,. \end{aligned} $$(5.205)We can then use the constraint (5.204) to group \((k\cdot \omega _1)^2\), \((k\cdot \omega _2)^2\) and \((k\cdot \omega _3)^2\) into two terms which vanish upon integration. This can be achieved for instance as

$$\displaystyle \begin{aligned} \begin{aligned} \mathnormal{\varDelta}_{1|2}&(k \cdot \omega_1, k \cdot \omega_2, k \cdot \omega_3) = c_{0;1|2} \\ & + c_{1;1|2} (k \cdot \omega_1) + c_{2;1|2} (k \cdot \omega_2) + c_{3;1|2} (k \cdot \omega_3) \\ & + c_{4;1|2} (k \cdot \omega_1) (k \cdot \omega_2) + c_{5;1|2} (k \cdot \omega_1) (k \cdot \omega_3) + c_{6;1|2} (k \cdot \omega_2) (k \cdot \omega_3) \\ & + c_{7;1|2} \left[ (k \cdot \omega_1)^2 - \frac{\omega_1^2}{\omega_3^2} (k \cdot \omega_3)^2 \right] + c_{8;1|2} \left[ (k \cdot \omega_2)^2 - \frac{\omega_2^2}{\omega_3^2} (k \cdot \omega_3)^2 \right] \,, \end{aligned} \end{aligned} $$(5.206)such that only the term with coefficient \(c_{0;1|2}\) survives upon integration, as claimed.
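Two ingredients of this argument lend themselves to a quick check. The Python sketch below is illustrative only: it assumes inverse propagators of the form \(D_1 = k^2 - m_1^2\) and \(D_2 = (k-p_1)^2 - m_2^2\) (Eq. (3.158) is not reproduced here), a mostly-minus metric, and hypothetical helper names. It verifies Eq. (5.202) at a rational point and counts the monomials of the rank-two ansatz (5.203).

```python
from fractions import Fraction as Fr
from itertools import product


def mdot(a, b):
    """Minkowski product with signature (+, -, -, -)."""
    return a[0] * b[0] - sum(x * y for x, y in zip(a[1:], b[1:]))


def alpha1_from_propagators(k, p1, m1sq, m2sq):
    """Right-hand side of Eq. (5.202), with the assumed D1 and D2."""
    d1 = mdot(k, k) - m1sq
    diff = [x - y for x, y in zip(k, p1)]
    d2 = mdot(diff, diff) - m2sq
    p1sq = mdot(p1, p1)
    return (d1 - d2 + p1sq + m1sq - m2sq) / (2 * p1sq)


def numerator_monomials(max_rank=2, n_isp=3):
    """Exponent tuples of the monomials in the ISPs up to the given rank."""
    return [e for e in product(range(max_rank + 1), repeat=n_isp)
            if sum(e) <= max_rank]


# Illustrative rational kinematics (arbitrary values with p1^2 != 0).
k = [Fr(1, 2), Fr(3), Fr(-2, 5), Fr(7, 3)]
p1 = [Fr(4), Fr(1), Fr(0), Fr(-1, 2)]
m1sq, m2sq = Fr(3, 2), Fr(5)

print(alpha1_from_propagators(k, p1, m1sq, m2sq) == mdot(k, p1) / mdot(p1, p1))  # True
print(len(numerator_monomials()))  # 10
```

The ten monomials correspond to the ten coefficients \(c_{ijk}\) of Eq. (5.203), nine of which multiply structures that integrate to zero after the regrouping in Eq. (5.206).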

(b)

The bubble cut of the one-loop amplitude \(A^{(1),[4-2\epsilon ]}_n\) is by definition given by

$$\displaystyle \begin{aligned} {} \mathcal{C}_{1|2} \left(A^{(1),[4-2\epsilon]}_n\right) = \int_k \left[ I^{(1)}(k) \prod_{i=1}^2 \left( D_i \, (-2 \pi {\mathrm{i}}) \, \delta^{(+)}\left(D_i\right) \right) \right] \,, \end{aligned} $$(5.207)where \(I^{(1)}(k)\) denotes the integrand of \(A^{(1),[4-2\epsilon ]}_n\). We parametrise the latter in terms of boxes, triangles, and bubbles. The terms which survive on the bubble cut \(1|2\) are

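$$\displaystyle \begin{aligned} {} \begin{aligned} \mathcal{C}_{1|2} \left(A^{(1),[4-2\epsilon]}_n\right) = \int_k \bigg[ \bigg( & \frac{\mathnormal{\varDelta}_{1|2}\left(k\cdot \omega_1, k\cdot \omega_2, k\cdot \omega_3\right)}{(-D_1) \, (-D_2)} + \sum_X \frac{\mathnormal{\varDelta}_{1|2|X}\left( k \cdot \omega^X_1, k\cdot \omega^X_2 \right)}{(-D_1) \, (-D_2) \, (-D_X)} \\ & + \sum_{Y,Z} \frac{\mathnormal{\varDelta}_{1|2|Y|Z}\left( k \cdot \omega^{YZ} \right)}{(-D_1) \, (-D_2) \, (-D_Y) \, (-D_Z)} + \ldots \bigg) \prod_{i=1}^2 \left( D_i \, (-2 \pi {\mathrm{i}}) \, \delta^{(+)}\left(D_i\right) \right) \bigg] \,. \end{aligned} \end{aligned} $$(5.208)Here, the ellipsis denotes terms which vanish on the cut. The sum over \(X\) runs over all triangle configurations which share the propagators \(1/(-D_1)\) and \(1/(-D_2)\), \(\omega _1^X\) and \(\omega _2^X\) are the vectors spanning the corresponding spurious loop-momentum space, and \(1/(-D_X)\) is the propagator which completes the triangle. Similarly, the sum over \(Y,Z\) runs over all box configurations sharing the propagators \(1/(-D_1)\) and \(1/(-D_2)\), \(\omega ^{YZ}\) spans their spurious loop-momentum space, and \(1/(-D_Y)\) and \(1/(-D_Z)\) are the propagators which complete the box. Equating Eqs. (5.207) and (5.208) and solving for \(\mathnormal {\varDelta }_{1|2}\) gives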

$$\displaystyle \begin{aligned} \begin{aligned} \mathnormal{\varDelta}_{1|2}&\left(k\cdot \omega_1, k\cdot \omega_2, k\cdot \omega_3\right) \bigg|{}_{D_i=0} = \bigg( I^{(1)}(k) \prod_{i=1}^2 D_i \\ & + \sum_X \frac{\mathnormal{\varDelta}_{1|2|X}\left( k \cdot \omega^X_1, k\cdot \omega^X_2 \right)}{D_X} - \sum_{Y,Z} \frac{\mathnormal{\varDelta}_{1|2|Y|Z}\left( k \cdot \omega^{YZ} \right)}{D_Y D_Z} \bigg) \bigg|{}_{D_i=0} \,, \end{aligned} \end{aligned} $$(5.209)as claimed.

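We decompose the loop momentum into its four-dimensional and \((-2\epsilon )\)-dimensional parts as

$$\displaystyle \begin{aligned} k_1^{\mu} = k_1^{[4], \, \mu} + k_1^{[-2\epsilon], \, \mu} \,, \end{aligned} $$(5.210)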

with \(k_1^{[-2\epsilon ]} \cdot k_1^{[4]} = 0 = k_1^{[-2\epsilon ]} \cdot p_i\) and \( k_1^{[-2\epsilon ]} \cdot k_1^{[-2\epsilon ]} = - \mu _{11}\). Note that \(\mu _{11} > 0\). The loop-integration measure factorises as \({\mathrm {d}}^D k_1 = {\mathrm {d}}^4 k_1^{[4]} \, {\mathrm {d}}^{-2\epsilon } k_1^{[-2\epsilon ]}\). We rewrite the integral on the LHS of Eq. (3.184) as

The integrand \(\mathcal {F}_n\) depends on the loop momentum only through its four-dimensional components \(k_1^{[4], \, \mu }\) and \(\mu _{11}\). In other words, the integrand does not depend on the angular coordinates of the \((-2\epsilon )\)-dimensional subspace. We thus introduce angular and radial coordinates as

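and carry out the \((-2\epsilon )\)-dimensional angular integration in Eq. (5.211). We recall that the surface area of a unit-radius sphere in \(m\) dimensions is given by

$$\displaystyle \begin{aligned} \Omega_m = \frac{2 \, \pi^{m/2}}{\Gamma(m/2)} \,. \end{aligned} $$(5.213)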

We obtain

We view the remaining \(\mu _{11}\) integration as the radial integration in a \((2r-2\epsilon )\)-dimensional subspace. The loop-integration measure in the latter is in fact given by

Exploiting again the independence of the integrand on the angular coordinates, we rewrite Eq. (5.214) as

Using Eq. (5.213) for the prefactor gives

which we simplify using Eq. (3.185). The loop integration on the RHS of Eq. (5.216) matches the scalar one-loop integral (i.e., with numerator 1) with loop momentum in \(D=4+2r - 2\epsilon \) dimensions and four-dimensional external momenta, namely

as claimed.
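The angular prefactors in this argument are built from the standard expression for the surface area of the unit sphere in \(m\) dimensions, \(2\pi^{m/2}/\Gamma(m/2)\), continued to non-integer \(m\). The Python sketch below is an independent illustration; the function names and the test values of \(\epsilon\) and \(r\) are assumptions. It checks the familiar integer cases and shows that the ratio of the areas in \(-2\epsilon\) and \(2r-2\epsilon\) dimensions reduces to a finite product through \(\Gamma(x+1) = x \, \Gamma(x)\).

```python
import math


def unit_sphere_area(m):
    """Surface area of the unit sphere in m dimensions, 2*pi^(m/2)/Gamma(m/2)."""
    return 2 * math.pi ** (m / 2) / math.gamma(m / 2)


def area_ratio(eps, r):
    """Area in (-2 eps) dimensions divided by the area in (2r - 2 eps) dimensions."""
    return unit_sphere_area(-2 * eps) / unit_sphere_area(2 * r - 2 * eps)


def area_ratio_product(eps, r):
    """The same ratio written as pi^(-r) * prod_{j=0}^{r-1} (j - eps)."""
    return math.pi ** (-r) * math.prod(j - eps for j in range(r))


print(math.isclose(unit_sphere_area(2), 2 * math.pi))  # True
print(math.isclose(unit_sphere_area(3), 4 * math.pi))  # True
print(math.isclose(area_ratio(0.1, 2), area_ratio_product(0.1, 2)))  # True
```

The product form makes it manifest that the ratio is a polynomial in \(\epsilon\) of degree \(r\) which vanishes at \(\epsilon = 0\).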

Exercise 3.8: Projecting Out the Triangle Coefficients

The solution follows from the theory of the discrete Fourier transform. Let \(N\) be a positive integer. The functions

with \(k\in \mathbb {Z}\), form an orthogonal basis of the space of complex-valued functions on the set of the \(N^{\text{th}}\) roots of unity, \(\{ \text{e}^{2\pi {\mathrm {i}} l/N}, \, l=0,\ldots ,N-1\}\). In other words, they satisfy the orthogonality condition

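This is straightforward for \(n=k\). For \(n\neq k\), Eq. (5.220) follows from the identity

$$\displaystyle \begin{aligned} \sum_{l=0}^{N-1} z^l = \frac{1-z^N}{1-z} \,, \end{aligned} $$(5.221)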

with \(z = \text{e}^{2\pi {\mathrm {i}} (n-k)/N}\), and hence \(z^N = 1\).

We can then use the orthogonality condition to project out the triangle coefficients \(d_{k;1|2|3}\). Using Eqs. (3.192) and (3.193) we have that

Substituting Eq. (5.220) with \(N=7\), and solving for \(d_{k;1|2|3}\) gives

as claimed.
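The projection can be tried numerically on a toy example. The sketch below is illustrative only: it assumes a function of the form \(\sum_{k=-3}^{3} d_k \, \text{e}^{{\mathrm{i}} k \theta}\) (the index range, the sign conventions and the sample coefficients are assumptions, since Eqs. (3.192) and (3.193) are not reproduced here), samples it at the angles \(\theta_l = 2\pi l/N\) with \(N=7\), and recovers each coefficient through the discrete orthogonality of the exponentials.

```python
import cmath


def sample_angles(n_points):
    """Angles of the N-th roots of unity, theta_l = 2*pi*l/N."""
    return [2 * cmath.pi * l / n_points for l in range(n_points)]


def evaluate(coeffs, theta):
    """Evaluate sum_k d_k exp(i k theta) for a dict {k: d_k}."""
    return sum(d * cmath.exp(1j * k * theta) for k, d in coeffs.items())


def project(values, angles, k):
    """Discrete projection d_k = (1/N) sum_l exp(-i k theta_l) f(theta_l)."""
    n_points = len(angles)
    return sum(cmath.exp(-1j * k * t) * v for t, v in zip(angles, values)) / n_points


# Toy coefficients d_k for k = -3, ..., 3 (arbitrary illustrative numbers).
coeffs = {k: complex(k + 0.5, 2 - k) for k in range(-3, 4)}
angles = sample_angles(7)
values = [evaluate(coeffs, t) for t in angles]
recovered = {k: project(values, angles, k) for k in coeffs}

print(all(abs(recovered[k] - coeffs[k]) < 1e-12 for k in coeffs))  # True
```

The recovery works because the seven values of \(k\) are distinct modulo 7. With nine coefficients, \(k=-4,\ldots,4\), the same functions apply with `sample_angles(9)`.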

We now consider a rank-4 four-dimensional triangle numerator \(\mathnormal {\varDelta }_{1|2|3}^{(4)}(k\cdot \omega _1, k\cdot \omega _2)\). We parametrise the family of solutions to the triple cut by the angle \(\theta \) as in Eq. (3.190). Expanding sine and cosine into exponentials gives

The coefficients \(d_{k;1|2|3}^{(4)}\) can then be projected out using the \(9^{\text{th}}\) roots of unity \(\text{e}^{{\mathrm {i}} \theta _l^{\prime }}\), with \(\theta _l^{\prime } = 2\pi \, l/9\), for \(l=-4,\ldots ,4\). By using the orthogonality condition (5.220) with \(N=9\) we obtain

(a)

After integration, the tensor integral \(F_3^{[D]}(P,Q)[k^{\mu }]\) can only be a function of \(P^{\mu }\) and \(Q^{\mu }\). We thus expand it as

$$\displaystyle \begin{aligned} F_3^{[D]}(P,Q)[k^{\mu}] = c_1 \, P^{\mu} + c_2 \, Q^{\mu} \,. \end{aligned} $$(5.226)Contracting both sides with \(P_{\mu }\) and \(Q_{\mu }\), and solving for the coefficients gives

$$\displaystyle \begin{aligned} {} \begin{aligned} & c_1 = \frac{1}{(P\cdot Q)^2-S \, T} \left[ (P\cdot Q) \, F_3^{[D]}(P,Q)[k\cdot Q] - T \, F_3^{[D]}(P,Q)[k\cdot P] \right] \,, \\ & c_2 = \frac{1}{(P\cdot Q)^2-S \, T} \left[ (P\cdot Q) \, F_3^{[D]}(P,Q)[k\cdot P] - S \, F_3^{[D]}(P,Q)[k\cdot Q] \right] \,. \end{aligned} \end{aligned} $$(5.227)Next, we need to rewrite the integrals above in terms of scalar integrals. To this end, we express the scalar products \(k \cdot P\) and \(k \cdot Q\) in terms of inverse propagators \(D_i\), as

$$\displaystyle \begin{aligned} k \cdot P = \frac{1}{2} \big(D_1-D_2 + \hat{S} \big) \,, \qquad \quad k \cdot Q = \frac{1}{2} \big( D_2 - D_3 + \hat{T} \big) \,, \end{aligned} $$(5.228)where

$$\displaystyle \begin{aligned} D_1 = k^2 - m_1^2 \,, \quad D_2 = (k-P)^2 - m_2^2 \,, \quad D_3 = (k-P-Q)^2-m_3^2\,, \end{aligned} $$(5.229)and

$$\displaystyle \begin{aligned} \hat{S} := S + m_1^2 - m_2^2 \,, \qquad \hat{T} := T + m_2^2 - m_3^2 + 2 \, (Q\cdot P) \,. \end{aligned} $$(5.230)We thus have that