Abstract

In order to use digital media effectively to promote low-barrier inquiry learning, teachers need professional knowledge and skills, as well as positive attitudes and self-efficacy assumptions. This study examines the effectiveness of in-service training in changing teachers’ attitudes and self-efficacy assumptions in the areas of inclusion and digitalization, which are important prerequisites for designing digital learning environments for doing science in inclusive settings. The single-factor study design included a three-stage variation of teacher training sessions, in which 141 Bavarian biology teachers participated in small groups in a one-day webinar. Teachers planned and performed a digitally supported experiment and were trained to meet the needs of diverse learners. There were significant improvements in attitudes toward digitalization and in teachers’ self-efficacy assumptions, regardless of the training group. However, there were no statistically significant differences between the training groups. Thus, although the training is effective overall, the influence of the theoretical discussion is too small compared to other phases of the intervention, such as the practical experimentation. There is also a correlation between attitudes towards inclusion and digitalization after the training. Overall, there is a demand for inclusion and digitalization to be used in a more integrated way in teacher training, and thus to further promote the participation of all students in doing science.

1 Introduction

The heterogeneity of students involves diverse learner needs. Inclusive teaching concepts are therefore needed to enable all students to participate, especially in science education and its specific processes and procedures, such as inquiry learning. Inquiry-based learning is an active method of learning. It begins with posing questions, problems, or scenarios, and can involve scientific methods, such as conducting experiments. The process of generating one’s own hypotheses and problem-solving approaches is important. In this complex process, many barriers can arise, such as the handling of materials and instructions (Stinken-Rösner & Abels, 2021) or methodological difficulties, e.g., hypothesizing or reflecting on measurement inaccuracies (Baur, 2018). However, there are many ways to reduce these barriers. For example, scientific work methods can be prepared in a differentiated and student-oriented way by grading their complexity, the level of abstraction, and the observation level (Bruckermann et al., 2017). Another approach is the targeted use of digital media, which may enable access and avoid barriers through multimedia design (Kerres, 2018). In order to use digital media effectively to promote inquiry learning and reduce barriers, teachers need professional knowledge and competencies in inclusion and digitization, and in how to link the two.

2 Theoretical Background

Following the Index for Inclusion, inclusion is about minimizing all barriers in education and learning for all students (Boban & Hinz, 2003, p. 11). This broad understanding of inclusion encompasses all dimensions of heterogeneity that may present barriers, such as gender, ability, special educational needs, religion, and more. In terms of teachers’ professional knowledge, this points to a need for pedagogical knowledge, which encompasses the pedagogical aspects of teaching and the individual needs of students. Pedagogical knowledge (PK), together with content knowledge (CK) and technological knowledge (TK), forms the basis of the TPACK model, which describes teachers’ professional knowledge as the interplay of the different knowledge domains (Mishra & Koehler, 2006). CK refers to the subject matter being taught and goes beyond the scope of the school subject matter. TK includes the handling and knowledge of digital media and their application. In addition, the intersections of the individual domains are considered (Fig. 23.1). Pedagogical content knowledge (PCK) describes the didactic contexts of instructional design, specifically the didactic preparation of subject matter content for the needs of the students. Technological content knowledge (TCK), on the other hand, is the knowledge of the technical and digital applications used in the subject matter. Technological pedagogical knowledge (TPK) concerns the use of digital media and technical possibilities tailored to the needs of the students. The eponymous intersection of all three areas, technological pedagogical content knowledge (TPACK), unites them to form the professional knowledge of teachers. TPACK thus describes teachers’ knowledge of how technologies can be used for a specific content, considering the needs of the students.
In addition to professional knowledge, other factors influence teaching actions. These include, among others, attitudes, motivation, and self-regulation (Baumert & Kunter, 2006). Looking more closely at attitudes, positive attitudes together with high self-efficacy expectations are an important predictor of planned behavior (Fishbein & Ajzen, 2010).

Teachers’ professional knowledge: the TPACK model. Representation according to Mishra & Koehler (2006)

According to the theory of planned behavior, these factors are multiple and interact with each other (Fishbein & Ajzen, 2010). Thus, attitudes are just as decisive in achieving a desired behavior as self-efficacy expectations and subjectively perceived norms. These in turn are influenced by beliefs, which are based on background factors. The background factors are divided into three categories: personal factors relate to, among other things, general attitude, intelligence, or emotion; social factors include, for example, age, gender, or education; information-related factors, on the other hand, refer to the experience and knowledge of the respective person on a particular issue (Fishbein & Ajzen, 2010). The interaction of all these factors ultimately leads to behavior that can be observed. By measuring individual factors, more or less reliable statements can be made about future behavior. In the field of inclusive education, such relationships have already been demonstrated in studies. For example, teachers with positive attitudes towards inclusion show more effective teaching for all students (Jordan et al., 2009). Attitude and self-efficacy are predictors of planned behavior in inclusive teaching (Sharma & Jacobs, 2016). Another finding is that attending courses on inclusion has a positive impact on attitudes toward inclusion (Sharma, 2012; Miesera & Gebhardt, 2018; Miesera & Will, 2017). Similarly, teachers’ attitudes towards digital media are decisive for their implementation in the classroom (Eickelmann & Vennemann, 2017). All of these findings indicate that attitudes and self-efficacy expectations are important factors in the implementation of specific instructional practices. For both fields, inclusion and digitization, there are already study results and valid assumptions on which to build.
However, in order to apply these general statements about inclusive teaching and the use of media to subject-specific problems, such as inquiry learning, the topics must be examined in conjunction with one another.

3 Aim of the Study and Research Question

The aim of the study is to prepare teachers specifically for the use of digital media in heterogeneous classes in biology lessons. In particular, the study focuses on the heterogeneity dimensions of ability and special needs in order to investigate the use of digital media in scientific work methods. First, the question arises whether the targeted knowledge transfer in an intervention has an effect on self-assessment in the TPACK domains. Second, teachers’ attitudes toward inclusion and digitization are investigated, which leads to the question of what connections exist between self-assessment in the TPACK domains and attitudes toward inclusion and digitalization.

4 Methods

4.1 Design and Intervention

The study design consists of a teacher training with a pre- and post-survey. Biology teachers from Bavarian secondary schools took part in a one-day digital teacher training session dealing with “digitally supported inquiry learning for all students”. The study was reviewed and approved by the Bavarian State Ministry of Education and Cultural Affairs. Participation in both the study and the training was voluntary and unpaid. The training aimed at increasing teachers’ self-efficacy assumptions according to the TPACK model, as shown in Fig. 23.2 (Mishra & Koehler, 2006). To this end, teachers planned and performed an experiment on the enzymatic browning of apples, which was digitally supported in all phases: planning, implementation, and evaluation (Weidenhiller et al., 2022). In addition, they considered the needs of students and elaborated on possible barriers. The outcome of the training was a planned experiment supported by digital media to differentiate the phases of the inquiry process. The teachers focused on the heterogeneity of their own classes and differentiated according to the needs of the students. Participants were randomly assigned to three experimental groups, which differed in the instruction phase preceding the work phase. The first group focused on the design of digital media, media didactics, and the use of digital media in science teaching (among others, Hamilton et al., 2016; Kerres, 2018; Mayer, 2005; Mayer & Pilegard, 2014; Puentedura, 2006; Sweller, 2005). The second group focused on approaches to inclusive didactics, concepts for differentiation and their implementation in science lessons, as well as legal aspects of inclusion in Bavaria (among others, Reich, 2013, 2014; Stinken-Rösner et al., 2020). The third group received an integrated format of instruction, which combined the aspects of digital media from the first experimental group with the aspects of differentiation from the second group.

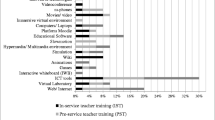

The pre-post survey contained scales on teachers’ attitudes toward digitalization and inclusion. The attitudes toward digitalization scale addressed different aspects of learning with digital media, such as anchoring in the curriculum and influence on the teaching level and on student activity (Vogelsang et al., 2019). The attitudes toward inclusion scale contained two main constructs: ‘schooling and support’ and ‘social inclusion’ (Kunz et al., 2010). Furthermore, the survey covered self-efficacy assumptions regarding inclusion and digitalization in accordance with the TPACK model. For this purpose, the TPACK scales of Graham et al. (2009) were modified and adapted to the content of the intervention (Weidenhiller, in preparation). In total, five scales were used: TK, TPK, TCK, TPACK, and DILAS (digitally supported inquiry learning for all students) (Weidenhiller, in preparation). The TK scale asks about the use and production of various media, such as presentations, videos, shared documents, or e-books, unrelated to teaching. The TPK scale is about the differentiated design of teaching materials or action sequences through the use of digital media. The TCK scale, on the other hand, contains items on the extent to which digital media help to make scientific phenomena easier to understand. The TPACK and DILAS scales include questions about the instructional use of digital media in science. TPACK focuses on the general use of media in science lessons, whereas the DILAS scale deals specifically with science working methods in different class compositions.

4.2 Sample

The intervention took place from June 2021 to January 2022 as one-day teacher training sessions. A total of 141 Bavarian secondary school biology teachers (70% female) were trained in small groups of up to 10 people. The matched data sets of pretest and posttest correspond to approximately one-third of the total sample and are almost equally distributed across the three experimental groups (Table 23.1).

5 Data Analysis and Results

A Rasch analysis was performed to determine the quality of the scales. The calculations were performed on the matched data sets with pretest and posttest and were carried out with the Winsteps software. A high person separation (>2) and reliability (>0.8) imply a sensitive instrument (with enough items) that distinguishes between high and low performers (Boone et al., 2014). A high item separation (>4) and reliability (>0.9) imply that the sample is large enough to confirm the item difficulty hierarchy (ibid.), which indicates high construct validity. The real reliabilities and separation indices for all scales are quite satisfactory, except for the item separation and reliability of the TCK scale and the item separation of the attitudes to digital media scale, which are below the desired values (Table 23.2).
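
The separation and reliability thresholds quoted above are closely linked. In Rasch measurement, a separation index G and the corresponding reliability R are commonly related by R = G² / (1 + G²). The following Python sketch illustrates that relationship only. The function names are hypothetical, and the sketch does not reproduce how Winsteps derives the separation values from the data.

```python
import math


def reliability_from_separation(separation: float) -> float:
    """Convert a separation index G into a reliability R = G^2 / (1 + G^2)."""
    if separation < 0.0:
        raise ValueError("separation must be non-negative")
    return separation ** 2 / (1.0 + separation ** 2)


def separation_from_reliability(reliability: float) -> float:
    """Inverse relation: G = sqrt(R / (1 - R)), defined for 0 <= R < 1."""
    if not 0.0 <= reliability < 1.0:
        raise ValueError("reliability must lie in [0, 1)")
    return math.sqrt(reliability / (1.0 - reliability))


if __name__ == "__main__":
    # Illustrative separation values, not results from the study.
    for g in (2.0, 3.0, 4.0):
        r = reliability_from_separation(g)
        print(f"separation {g:.1f} -> reliability {r:.3f}")
```

Under this relationship, a separation of 2 corresponds to a reliability of 0.8, which matches the person thresholds above. The item thresholds are quoted in the text as rules of thumb and need not map onto each other exactly.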

A major advantage of Rasch analysis over classical test theory is that the response format is not assumed to be metric. The Rasch analysis calculates the difficulty of the items and the ability of the individuals and reports them on the same scale in units of logits, which is presented in a Wright map. As an example, the Wright map of the Attitude toward Inclusion scale is shown (Fig. 23.3). The structure of the Wright map is as follows: On the left side of the map are the persons, each represented as an “X” and plotted according to their person ability. The higher up a person is, the more capable they are. In the middle is the “M”, which represents the mean ability of the cohort. On the right side are the items, which are plotted by difficulty. The higher up an item is, the more difficult, or harder to agree with, it is. For example, item EI10 stands out because it seems to be very difficult. In the translated version, the item reads, “If learners with special educational needs spent most of their time in regular classes, they would also receive all the support there that they would otherwise have in a small class or special school”. Thus, agreement with this item indicates that the same support is available at a regular school as at a special school. The teachers do not seem to find it easy to answer this question about support in the affirmative. In comparison, the question about social inclusion can be affirmed more easily on this scale, as item EI12 shows. EI12 reads as follows: “The more time learners with special educational needs spend in a regular class, the more likely they are to be treated well by other classmates in their class”. The original factor analysis of the attitudes towards inclusion scale (EZI) shows two components (Kunz et al., 2010). The first component, “Schooling and Support”, consists of seven items; the second component, “Social Inclusion”, consists of four items.
The item structure of this factor analysis is also reflected in the Wright map in this study. The upper box in Fig. 23.3 represents component 1, and the lower box represents component 2. From this arrangement in the Wright map, we can assume that, overall, the items dealing with social aspects of inclusion are easier to agree with than those dealing with direct support in the class. Further calculations without anchoring item difficulty in the posttest did not show a different arrangement. Thus, the difficulty of the constructs seems constant before and after the intervention. As a second example, the DILAS scale is considered. In terms of content, the scale deals with inquiry learning processes in science, such as experimentation and modeling with digital media in heterogeneous classes. Across items TP11–TP14, the degree of heterogeneity in the class is varied from homogeneous (TP11) to heterogeneous in performance (TP12) to behavioral problems (TP13) and special educational needs (TP14). The level of digitalization remains the same. In items TP15–TP19, the level of digitization varies from no digital media (TP15) to digital instruction (TP16), digital observation (TP17), digital measurement (TP18), and purely digital laboratories (TP19). The class is always described as heterogeneous without more specific information. As shown in Fig. 23.4, the difficulty of the items corresponds to the increasing level of digitization and diversity as described above. The more digital media intervene in the process of doing science, or the more explicitly diversity is described, the more difficult the item is rated. In terms of the teachers’ self-efficacy, this means that the higher the level of digitization and diversity, the less confident the teachers feel. To compare pretest and posttest results, the item difficulty of the pretest was anchored and then the person ability was calculated on the posttest. The mean of person ability in pre- and posttest is highlighted by circles in Fig. 23.4.
The mean of the item difficulty is set to zero. The mean person ability on the pretest is below the mean item difficulty. In comparison, the posttest shows an improvement in mean person ability, which is now above the mean item difficulty. This means that teachers’ self-efficacy assumptions improved in the course of the intervention. This visual difference is also statistically detectable: the one-way repeated measures MANOVA is significant, as shown in Table 23.3. To calculate the differences of the scales between pretest and posttest, the person abilities for each scale at each time of measurement were calculated first, with the item difficulties anchored as described above. The person abilities were then compared between the measurements using a one-way repeated measures MANOVA. There were significant improvements in teacher ratings after the intervention, F(7, 45) = 6.791, p < .001, partial η² = .514, Wilks’ Λ = .486. These calculations were made across the three groups. The univariate tests show an improvement on the following scales: attitudes towards digital media, TPACK, TCK, TPK, and DILAS. This improvement indicates a positive impact of the intervention on teachers.
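
The anchoring step described above can be illustrated with a small Python sketch. It assumes the dichotomous Rasch model, in which the probability of agreeing with an item depends only on the difference between person ability and item difficulty in logits. This is a simplification: the questionnaire items presumably use a rating scale, for which a polytomous Rasch model would be fitted, and the study itself used Winsteps. The function names, item difficulties, and responses below are invented for illustration.

```python
import math


def rasch_probability(theta: float, delta: float) -> float:
    """P(agree) for person ability theta and item difficulty delta (both in logits)."""
    return 1.0 / (1.0 + math.exp(-(theta - delta)))


def estimate_ability(responses, anchored_difficulties, max_iter=100, tol=1e-8):
    """Maximum-likelihood person ability with item difficulties held fixed (anchored).

    responses: list of 0/1 answers, one per item.
    anchored_difficulties: item difficulties in logits from an earlier calibration.
    """
    if len(responses) != len(anchored_difficulties):
        raise ValueError("one response per anchored item is required")
    raw_score = sum(responses)
    if raw_score == 0 or raw_score == len(responses):
        raise ValueError("extreme scores have no finite maximum-likelihood estimate")

    theta = 0.0
    for _ in range(max_iter):
        probs = [rasch_probability(theta, d) for d in anchored_difficulties]
        gradient = raw_score - sum(probs)            # observed minus expected score
        information = sum(p * (1.0 - p) for p in probs)
        step = gradient / information                # Newton-Raphson update
        step = max(-1.0, min(1.0, step))             # damp large steps for stability
        theta += step
        if abs(step) < tol:
            break
    return theta


if __name__ == "__main__":
    anchored = [-1.0, 0.0, 1.0]  # invented "pretest" item difficulties in logits
    pre = estimate_ability([1, 0, 0], anchored)
    post = estimate_ability([1, 1, 0], anchored)
    print(f"pretest ability:  {pre:+.2f} logits")
    print(f"posttest ability: {post:+.2f} logits")
```

Because the item difficulties are the same in both calls, the two ability estimates lie on one common logit scale and can be compared directly, which is the purpose of anchoring.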

The pretest shows medium to strong correlations between the scales TPACK, TCK, TPK, TK, and DILAS (Table 23.4). These correlations show a close interconnectedness of the scales and suggest that the assumed individual components of the self-efficacy assumption in the TPACK domain influence each other. There is also a weak correlation between attitudes towards digital media and the TPK self-efficacy assumption (r = 0.293, p = 0.031). The attitudes toward inclusion scale does not correlate with any other scale in the pretest.

In the posttest, the existing correlations remain (Table 23.4), but the attitudes toward digital media scale no longer correlates with TPK; instead, it correlates with TPACK (r = 0.299, p = 0.03). More interesting, however, is a medium-sized correlation between the attitudes toward inclusion and attitudes toward digital media scales (r = 0.316, p = 0.021), which was not present in the pretest. One interpretation could be that the two topics of inclusion and digital media are no longer understood as separately as before the intervention and are now thought of in a more linked way.
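
One way to check the order of magnitude of such p-values is Fisher's z transformation, which approximates the sampling distribution of a correlation coefficient by a normal distribution. The Python sketch below is only an approximation under stated assumptions: it takes n = 54, the number of matched pre-post data sets reported in the limitations, although the actual n per correlation and the exact test used by the authors are not stated. The function name is hypothetical.

```python
import math


def correlation_p_value(r: float, n: int) -> float:
    """Approximate two-sided p-value for H0: rho = 0 using Fisher's z transformation."""
    if n <= 3:
        raise ValueError("Fisher's z approximation needs n > 3")
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    z = math.atanh(r) * math.sqrt(n - 3)      # approximately standard normal under H0
    return math.erfc(abs(z) / math.sqrt(2.0))  # two-sided normal tail probability


if __name__ == "__main__":
    n_assumed = 54  # assumption: matched data sets; not confirmed per correlation
    for r in (0.293, 0.299, 0.316):
        print(f"r = {r:.3f}, approximate p = {correlation_p_value(r, n_assumed):.3f}")
```

Small deviations from the reported values are to be expected, because the approximation differs from an exact t-test and the sample size is an assumption.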

Comparing the three experimental groups, there are no significant differences (Table 23.5). Again, the person abilities between the groups were tested for the single constructs. Afterwards, a group comparison between the three experimental groups – digital media, inclusion, and integrated – was calculated. The one-way MANOVA found no statistically significant differences between the groups on the combined dependent variables, F(14, 86) = 0.521, p = .914, partial η² = .078, Wilks’ Λ = .850. Despite the expected differences due to the different instructional phases, no significant effect occurs, either because the groups are too small and the deviations therefore too large, which is reflected in a high standard deviation, or because the instruction had no effect. Table 23.5 shows the mean values and standard deviations of the three groups.
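
The reported multivariate effect sizes can be cross-checked against the reported Wilks’ Λ values. A common convention, used for example in SPSS output, derives partial η² from Λ as shown below. Whether the authors computed the values this way is an assumption, as is p = 7 dependent variables, which is inferred from the two attitude scales plus the five self-efficacy scales.

```latex
\begin{align*}
\eta_p^2 &= 1 - \Lambda^{1/s}, \qquad s = \min(p,\ df_{\text{effect}}) \\[4pt]
\text{pre-post comparison } (s = 1):\quad
\eta_p^2 &= 1 - 0.486 = 0.514 \\[4pt]
\text{group comparison } (df_{\text{effect}} = 2,\ p = 7,\ s = 2):\quad
\eta_p^2 &= 1 - 0.850^{1/2} \approx 1 - 0.922 = 0.078
\end{align*}
```

Both results agree with the values reported in the text, so the effect sizes and Λ values are internally consistent under this convention.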

6 Discussion and Practical Implications

In summary, the following main findings can be described. Systematic variation in the instructional phase shows no difference in teachers’ attitudes or self-efficacy assumptions. Attitudes toward inclusion are stable across the intervention, whereas attitudes toward digitization change. In the attitude towards inclusion, the questions about social inclusion are much easier to agree with than the questions about adequate support. The correlation between the two scales indicates a stronger link between the topics after the intervention. There are weak correlations, if any, between attitudes and self-efficacy assumptions, arguing for separate constructs.

The aim of the study was to prepare teachers specifically for the use of digital media in heterogeneous classes in biology lessons. Following the theory of planned behavior (Fishbein & Ajzen, 2010), it can be assumed that the goal has been achieved because positive attitudes and high self-efficacy assumptions are strong predictors of a desired behavior, in this case the targeted use of digital media for differentiation in doing science. The results show significant improvements in attitudes toward digital media and in self-efficacy expectations in the TPACK domains. As discussed earlier, teachers with positive attitudes and high self-efficacy expectations are more likely to demonstrate a desired behavior in inclusive teaching than teachers with more negative attitudes and lower self-efficacy expectations (Sharma & Jacobs, 2016). The significant improvement allows the assumption that the teachers feel more competent in using digital media for inquiry learning in heterogeneous classes. Consequently, it can be assumed that digital media, adapted to the needs of the students, may be used more frequently for inquiry learning in future lessons. However, this remains an assumption derived from theory, which must be verified, for example, through follow-up interviews.

Looking at the second research question (What are the relationships between the TPACK domains and attitudes?), it is important to note that the attitude scales deal separately with the domains of digital media and inclusion, whereas the TPACK scales require an intertwined approach. The TPACK scales (and the DILAS scale) deal with the intertwining of digital media and subject content and student needs. Within these constructs, correlations among the scales occurred in both the pretest and posttest. This is as expected, as the domains are strongly interrelated due to the technological component.

The attitude scales, on the other hand, are less linked to the TPACK domains than expected. Although there are weak correlations between attitudes toward digital media and individual TPACK domains, these are neither strong nor consistent across pretest and posttest. A stronger linkage was assumed here because the TPACK scales used constructs of both inclusion and media use. The scarcity of such correlations suggests that attitudes are a rather independent construct. The theory of planned behavior assumes that both attitudes and self-efficacy assumptions are influenced by beliefs, which in turn are influenced by background factors (Fishbein & Ajzen, 2010). Accordingly, only little cross-talk between attitudes and self-efficacy assumptions appeared here. The intervention seems to promote these two areas separately. It is all the more interesting that there is a correlation between attitudes toward inclusion and digitization in the posttest. In the pretest, these two constructs were still uncorrelated. The linking of the two topics in the intervention may therefore have had an effect here.

The Attitude Towards Inclusion scale shows that teachers find it easier to rate social aspects of inclusion positively than support aspects. This evaluation remains constant across the intervention. This is not in line with expectations, as the intervention focused strongly on supporting the individual needs of students, whereas the social aspects were not in the foreground. One possible explanation is that attitudes themselves are a construct that is difficult to change. The underlying beliefs and factors have developed over long periods of time and require a lot of effort to change. The changes in attitudes towards inclusion reported after an intervention in Miesera & Gebhardt (2018) and Miesera & Will (2017) stem from long-term interventions. Accordingly, it can be assumed that a one-day intervention does not have the power to change them. Overall, multi-day events in teacher training are considered more effective (Lipowsky & Rzejak, 2019, 2021). Looking at the DILAS scale, teachers feel more confident the fewer digital media are used for scientific work and the more homogeneous the class is. This pattern also remains constant, while the mean person ability increases significantly after the training. In conclusion, this shows on the one hand the challenge of integrating innovations into the classroom and adapting them to heterogeneous needs, and on the other hand an improvement in self-efficacy.

In the post-test, we did not find any differences in the scales between the three intervention groups. The main change in the experimental design was the theoretical input in the instruction phase at the beginning. The experimental part and the discussion were basically the same in all three groups. Accordingly, we can say that the theoretical part of the training has too little influence to be measured with these scales. Rather, it can be assumed that application knowledge in the other phases of the training leads to the effect or overlaps the effect of the groups, or that the teachers already have a lot of knowledge, which makes a sharp separation of the groups impossible. This suggests, in accordance with the scientific discussion, that the attitude and self-efficacy assumptions do not only change based on theoretical discussion (Fishbein & Ajzen, 2010). Rather, other factors, such as experience, perceived control, and others, influence these personal characteristics as well (Fishbein & Ajzen, 2010). This effect is reflected in the significant improvements in almost all scales after the intervention. This suggests that the intervention itself is effective. Overall, it can be concluded that after the intervention, teachers feel more confident in using digital media during inquiry learning in heterogeneous classes and have more positive attitudes than before. According to the theory of planned behavior and the results of several studies as discussed (Eickelmann & Vennemann, 2017; Jordan et al., 2009; Sharma & Jacobs, 2016), we can conclude that the teachers may use more digital media for differentiation in future because of their attitudes and self-efficacy assumptions in the TPACK areas.

The following implications for teacher training can be derived from these results. Since there were no differences between the groups, none of the options can be recommended over the others. It is necessary to examine to what extent the instructional phase can be shortened or shifted in order to make it more effective. For example, the instruction on inclusion and digitalization could come into play after the barriers to the experiment have been identified. The practical phase should be placed more at the center of the training. Together with the subsequent discussion, it is a more effective component of the training than the instructional phase tested in this study. Overall, when designing teacher training, care should be taken to think about the increasing heterogeneity of students and incorporate it into the design. By linking the topics in the training, it is possible to provide teachers with differentiated options for action in the classroom through increased self-efficacy. However, there is the limiting factor that the training was offered as a webinar. In this respect, it is not possible to directly control what the teachers actually did in the instruction phase. Even with the camera switched on, it is not possible to guarantee that the teachers were not otherwise occupied outside of the work phases. The following additional limitations need to be considered when interpreting the results. The matched sample was small, with only 54 matching pre-posttests. Participation in the tests was voluntary; the response rate was therefore only 50%, and even lower for the matching data sets due to incorrect codes. Results are limited to secondary teachers. For future research, face-to-face sessions should be tested to examine instructional phases while ensuring that teachers are not distracted. In addition, a better response rate would be expected. This would make it possible to verify the results of this study.
To anchor the topic of inclusion, another practice phase could be integrated into the training, in which differentiation methods are run through by the teachers themselves, as in the previous practice phase. Another idea would be a training course of at least two sessions, which would allow for practical testing in the school, to see if this could improve teachers’ attitudes.

References

Baumert, J., & Kunter, M. (2006). Stichwort: Professionelle Kompetenz von Lehrkräften. Zeitschrift für Erziehungswissenschaft, 9(4), 469–520. https://doi.org/10.1007/s11618-006-0165-2

Baur, A. (2018). Fehler, Fehlkonzepte und spezifische Vorgehensweisen von Schülerinnen und Schülern beim Experimentieren: Ergebnisse einer videogestützten Beobachtung. Zeitschrift für Didaktik der Naturwissenschaften, 24(1), 115–129.

Boban, I., & Hinz, A. (2003). Index für Inklusion: Lernen und Teilhabe in der Schule der Vielfalt entwickeln. Martin-Luther-Universität Halle-Wittenberg.

Boone, W., Staver, J., & Yale, M. (2014). Rasch analysis in the human sciences. Springer.

Bruckermann, T., Ferreira Gonzalez, L., Münchhalfen, K., & Schlueter, K. (2017). Inklusive Fachdidaktik Biologie. In K. Ziemen (Ed.), Lexikon Inklusion (pp. 109–110). Vandenhoeck & Ruprecht.

Eickelmann, B., & Vennemann, M. (2017). Teachers’ attitudes and beliefs regarding ICT in teaching and learning in European countries. European Educational Research Journal, 16(6), 733–761.

Fishbein, M., & Ajzen, I. (2010). Predicting and changing behavior. The reasoned action approach. Psychology Press.

Graham, C. R., Burgoyne, N., Cantrell, P., Smith, L., St Clair, L., et al. (2009). TPACK development in science teaching: Measuring the TPACK confidence of inservice science teachers. TechTrends, 53(5), 70–79.

Hamilton, E. R., Rosenberg, J. M., & Akcaoglu, M. (2016). Examining the substitution augmentation modification redefinition (SAMR) model for technology integration. TechTrends, 60(5), 433–441.

Jordan, A., Schwartz, E., & McGhie-Richmond, D. (2009). Preparing teachers for inclusive classrooms. Teaching and Teacher Education, 25(4), 535–542. https://doi.org/10.1016/j.tate.2009.02.010

Kerres, M. (2018). Mediendidaktik. Konzeption und Entwicklung digitaler Lernangebote (5th ed.). Walter de Gruyter GmbH.

Kunz, A., Luder, R., & Moretti, M. (2010). Die Messung von Einstellungen zur Integration (E-ZI). Empirische Sonderpädagogik, 2, 83–94.

Lipowsky, F., & Rzejak, D. (2019). Was macht Fortbildungen für Lehrkräfte erfolgreich? – Ein Update. In B. Groot-Wilken & R. Koerber (Eds.), Nachhaltige Professionalisierung für Lehrerinnen und Lehrer. Ideen, Entwicklungen, Konzepte (pp. 15–56). Bielefeld.

Lipowsky, F., & Rzejak, D. (2021). Fortbildungen für Lehrpersonen wirksam gestalten. Hg. v. Bertelsmann Stiftung. Gütersloh.

Mayer, R. E. (2005). Cognitive theory of multimedia learning. In R. E. Mayer (Ed.), The Cambridge handbook of multimedia learning (pp. 31–48). Cambridge University Press. https://doi.org/10.1017/CBO9780511816819.004

Mayer, R. E., & Pilegard, C. (2014). Principles for managing essential processing in multimedia learning: Segmenting, pre-training, and modality principles. In R. E. Mayer (Ed.), The Cambridge handbook of multimedia learning (Cambridge handbooks in psychology) (pp. 316–344). Cambridge University Press. https://doi.org/10.1017/CBO9781139547369.016

Miesera, S., & Gebhardt, M. (2018). Inklusive Didaktik in beruflichen Schulen – InkDibeS – ein Konzept für die Lehrerbildung: Videobasierte Fallkonstruktionen inklusiver Unterrichtssettings. In D. Buschfeld & M. Cleef (Eds.), Vielfalt des Lernens im Rahmen berufsbezogener Standards (QUA-LIS Schriftenreihe Beiträge zur Schulentwicklung). Waxmann.

Miesera, S., & Will, S. (2017). Inklusive Didaktik in der Lehrerbildung – Erstellung und Einsatz von Unterrichtsvideos. Haushalt in Bildung und Forschung, 6(3), 61–76. https://doi.org/10.3224/hibifo.v6i3.05

Mishra, P., & Koehler, M. J. (2006). Technological pedagogical content knowledge. A new framework for teacher knowledge. Teachers College Record, 108(6), 1017–1054.

Puentedura, R. (2006). Transformation, technology, and education [Blog post]. Retrieved from http://hippasus.com/resources/tte/

Reich, K. (2013). Inklusive Didaktik – Konstruktivistische Didaktik. In K. Zierer, D. Lamres, & C. v. Ossietzky (Eds.), Handbuch für Allgemeine Didaktik 2013 (pp. 133–149). Schneider Verlag.

Reich, K. (2014). Inklusive Didaktik: Bausteine für eine inklusive Schule. Beltz Verlag.

Sharma, U. (2012). Changing pre-service teachers’ beliefs to teach in inclusive classrooms in Victoria, Australia. Australian Journal of Teacher Education, 37(10), 53–66. https://doi.org/10.14221/ajte.2012v37n10.6

Sharma, U., & Jacobs, D. K. (2016). Predicting in-service educators’ intentions to teach in inclusive classrooms in India and Australia. Teaching and Teacher Education, 55, 13–23. https://doi.org/10.1016/j.tate.2015.12.004

Stinken-Rösner, L., & Abels, S. (2021). Digitale Medien als Mittler im Spannungsfeld zwischen naturwissenschaftlichem Unterricht und inklusiver Pädagogik. In S. Hundertmark, X. Sun, S. Abels, A. Nehring, R. Schildknecht, V. Seremet, & C. Lindmeier (Eds.), Naturwissenschaften und Inklusion, 4. Beiheft Sonderpädagogische Förderung heute (pp. 161–175). Beltz Juventa.

Stinken-Rösner, L., Rott, L., Hundertmark, S., Baumann, T., Menthe, J., Hoffmann, T., Nehring, A., & Abels, S. (2020). Thinking inclusive science education from two perspectives: Inclusive pedagogy and science education. Research in Subject-matter Teaching and Learning (RISTAL), 3, 30–45.

Sweller, J. (2005). Implications of cognitive load theory for multimedia learning. In R. E. Mayer (Ed.), The Cambridge handbook of multimedia learning (pp. 19–30). Cambridge University Press.

Vogelsang, C., Finger, A., Laumann, D., & Thyssen, C. (2019). Vorerfahrungen, Einstellungen und motivationale Orientierungen als mögliche Einflussfaktoren auf den Einsatz digitaler Werkzeuge im naturwissenschaftlichen Unterricht. Zeitschrift für Didaktik der Naturwissenschaften, 25(1), 115–129.

Weidenhiller, P. (in preparation). Fachspezifische Arbeitsweisen in heterogenen Klassen digital unterstützen: Eine Interventionsstudie mit Biologielehrkräften an Gymnasien und FOS/BOS.

Weidenhiller, P., Witzke, S., & Nerdel, C. (2022). Das Apfelexperiment – Enzymkinetik der Apfelbräunung mit digitalen Tools messen. In E. Watts, L. Stinken-Rösner, & M. Meier (Eds.), digital unterrichten. Biologie (6/2022). Friedrich Verlag.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2024 The Author(s)

About this chapter

Cite this chapter

Weidenhiller, P., Miesera, S., Nerdel, C. (2024). Promoting Digitally Supported Inquiry Learning in Diverse Classrooms Through Teacher Training. In: Korfiatis, K., Grace, M., Hammann, M. (eds) Shaping the Future of Biological Education Research. Contributions from Biology Education Research. Springer, Cham. https://doi.org/10.1007/978-3-031-44792-1_23

DOI: https://doi.org/10.1007/978-3-031-44792-1_23

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-44791-4

Online ISBN: 978-3-031-44792-1

eBook Packages: Education, Education (R0)