Abstract

Since F-N curves were first proposed, consequences-frequency matrices (CFM) have been used extensively in risk and hazard analysis across several domains. They provide a common framework for many applications, including project management and decision-making. CFM are diagrams with consequence and frequency classes on the axes. They make it possible to classify risks and hazards based on expert knowledge with limited quantitative data. In this paper, we present the main characteristics of such tools. Semi-quantitative approaches are favoured: numbers must be attached to the class limits in order to avoid erroneous uses. The comparison with F-N curves is addressed. It appears that assigning probabilities to all classes is a way to introduce uncertainties and to obtain exceedance curves. One possibility is to use triangular probability distribution functions, coupled with a Bayesian approach, to describe the belonging to classes. A short example is provided.


1 Introduction

Because of the complexity of risk systems, it is not always possible to fully quantify the variables involved in the calculation. However, in most cases, risk analysis is essential for decision-making (Van Westen and Greiving 2017), especially when the ins and outs are not well known. Methodologies have been developed since the nineties to fill these gaps, notably by the NASA space shuttle program (Haimes 2009): “Risk filtering, ranking and management” (RFRM). To rank the risk events and scenarios, these methods may use the so-called “risk matrix”, which displays frequency (λ), hazard (H) or probability versus consequences (Co), each axis being divided into classes. These consequences-frequency matrices (CFM) are diagrams representing consequence and frequency on the x and y axes respectively. CFM are often used and presented in textbooks (e.g., Ale 2009) as a tool for assessing and comparing different situations for objects at risk. They make it possible to rank and compare risk situations by classes of consequences (Co) and hazard level (H). Such approaches were developed earlier in the industrial production sector (Gillet 1985), and are used nowadays in administrations, army operations (Armée Suisse 2012), material acquisition (DOD 2006), project management (Raydugin 2012), epidemic assessment in the EU (ECDC 2011) or at the world level (WHO 2020), evaluation of corporate risk profiles (TBCS 2011), commercial acquisitions (DOD 2006), natural risks (OFPP 2013) and also insurance (ZHA 2013).

CFMs are a discrete version of F-N or f-N curves, which are continuous in values and where F is the frequency of events with a number of deaths equal to or greater than N (f-N is the non-cumulative version). Both are probability consequences diagrams (PCDS) (Ale et al. 2015), and both have been used extensively in several domains. One of the drawbacks of F-N curves is that the risk acceptability limits are not well established for F-N and f-N, and therefore not for CFM either. F-N approaches are nowadays used in landslide hazard and risk assessment (Dai et al. 2002; Fell et al. 2008), whereas matrices are often cited but not used as much (Dai et al. 2002; Lacasse and Nadim 2009). The principles are the same, but CFM allow the analysis of scenarios that are often impossible to solve by detailed investigations with the limited resources of a project (Pasman and Vrijling 2003). They permit classifying risks based on expert knowledge with limited quantitative data, or with a more quantitative approach. However, they are often misused: the approach should be semi-quantitative, and some simple rules are often not followed. Furthermore, they are rarely used to visualize the evolution of risk with time or mitigation, or to represent uncertainty, apart from a few examples (Wang et al. 2014; Macciotta et al. 2020). It seems that some codification is needed to make CFM more relevant to risk analysis. The classes that are used must be established in a clear way, and the question of frequency and/or probability must be treated properly. The question of uncertainty is also raised; one of the proposed solutions is to estimate the belonging to all classes. Here we intend to raise some questions and problems that are partially solvable if further information about the scales of frequencies and consequences is given. We do not claim to be exhaustive; this is a first step towards a better use.

2 The Main Principle of Matrix Use

The CFM techniques aim at providing a risk assessment for ranking different types of dangers (UK-CO 2017), or for a specific case study, by answering the questions (Haimes 2009): What can go wrong? What are the consequences and their likelihood? In industry and insurance, the following procedure for risk filtering, ranking and management (RFRM) is used (Krause et al. 1995; Haimes 2009):

1. Scope definition: what are the problems?
2. Creation of a group of experts concerned by each level of the analyzed risky system.
3. Hazard identification, i.e., identification of potential events and their scenarios.
4. Risk filtering and ranking in several sub-stages, which implies establishing frequency (probability) and impact classes and their corresponding limits (in loop with point 5).
   4.1 Use decision trees to select the classes for Co and H.
   4.2 Place in the risk matrix.
5. Establish a ranking.
6. Risk management, including the quantification of the potential risk reduction, which necessitates the understanding of causes and effects.
7. Finalization of the decision-making process.
8. Refinement of the process with the feedback.

3 The Conceptual Background

Risk can be defined in different ways. For ISO (2009) it is “The effect of uncertainty on objectives”; in the landslide community it is a “measure of the probability and severity of an adverse effect to life, health, properties, or the environment, Risk = Hazard × Potential Worth of Loss” (Fell et al. 2005), and hazard is defined as the “probability that a particular danger (threat) occurs within a given period of time” (Fell et al. 2005). This poses a problem: the unit of risk is “an annual loss of property’s value” (Fell et al. 2005), yet there is no time dependence in the above formula, which matters especially when events such as debris flows or rockfalls occur more than once a year. The link between the frequency λi(Mi) of random events of magnitude Mi or larger, which is the inverse of the return period (Ti = 1/λi), and the probability Pi that there is at least one event during a period Δt is given by the Poisson law (Corominas et al. 2014) (Fig. 1):

$$P_i = 1 - e^{-\lambda_i \Delta t}$$

Fig. 1 Verbal terms for the potential that at least one event occurs within a period, assuming frequency limits corresponding to T = 2, 10, 20, 200, 2000 years (after AGS 2007)

It means that it is equivalent to use λ or P and Δt, but the consequence is that the risk for a single scenario i of magnitude Mi for an object E located at the position X can be presented in two ways.

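The first has a unit of loss per unit of time:

$$R(E, X, M_i) = \lambda(M_i) \times P(X \mid M_i) \times Exp(E, X) \times V(I(X, M_i)) \times W(E)$$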

Where P(X| Mi) is the probability of propagation at the location X of an event of magnitude Mi, Exp(E, X) the exposure or probability of presence of the object E, V(I(X, Mi)) the vulnerability of the object for the intensity I at the location X knowing Mi and W(E) the value of the object or number of people affected. The hazard at the location X with an intensity I is given by λi(X) = H(X| Mi) = λ(Mi) P(X| Mi). For simplification, the intensity I is known based on Mi, but H can also directly include the intensity.

The second way is to use the probability P over a period, usually 1 year (Δt = 1), and the consequence C, i.e.:

In case of multiple events with reconstruction, the Poisson law for n = 1, 2, …, n events may be used, if significant. The matrix usually represents Co versus P or H, but if P is used the period must be indicated, which is often omitted. For small frequencies P ≈ λΔt: for an annual probability, the difference is less than 2% for return periods T > 25 years (λΔt < 1/25). To perform a full risk calculation, classes of magnitude must be used, and formally an integration or a summation must be performed over Mi, X (implicitly λi) and E.
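The relation between frequency and probability can be checked numerically. The following is a minimal Python sketch, assuming a Poisson process so that P = 1 − exp(−λΔt); the function names `prob_at_least_one` and `relative_gap` are illustrative and are not taken from the cited works.

```python
import math


def prob_at_least_one(lam: float, dt: float = 1.0) -> float:
    """Probability of at least one event during dt for a Poisson rate lam."""
    return 1.0 - math.exp(-lam * dt)


def relative_gap(return_period: float, dt: float = 1.0) -> float:
    """Relative difference between lam*dt and P for T = 1/lam."""
    lam = 1.0 / return_period
    return (lam * dt - prob_at_least_one(lam, dt)) / (lam * dt)


# Compare the annual probability with the frequency for a few return periods.
for T in (2, 10, 25, 100, 300):
    p = prob_at_least_one(1.0 / T)
    print(f"T = {T:>3} yr  P(1 yr) = {p:.4f}  gap = {100 * relative_gap(T):.2f} %")
```

With these definitions the gap falls just below 2% at T = 25 years and keeps decreasing for longer return periods, which is why P and λ are often used interchangeably for rare events.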

In the landslide community, the matrix is often used to qualify a single situation and does not consider cumulative incremental risk, as can be done with F-N curves. F-N curves can be represented as points in an f-N diagram for a specific event, but then the limits of risk acceptability and tolerability may change. The F-N approach is based on the same principle as the frequency f or H: it uses the concept of societal risk, i.e. the potential loss of life of N or more people with a frequency F (Jonkman et al. 2003). In the original version, N was a dose of exposure to radioactivity (Farmer 1967), but it can be applied to human life as well as to economic loss (Fell and Hartford 1997) or any consequences (Ale et al. 2015).

4 Example of Risk Matrix

Examples of risk matrices are numerous. For instance, the UK Cabinet Office (2017) published a risk matrix for the UK (Fig. 2) comparing the different natural hazards, pandemics, major accidents, and social risks (they use the term societal, which does not correspond to the landslide risk terminology). Climate- and environment-related risks and pandemics are clearly classified as the highest risks for the UK. For landslides, the matrix developed by Porter and Morgenstern (2013) is very useful, because it also provides orders of magnitude of frequency and the different types of impacts for Health and Safety, Environment, and Social & Cultural (Fig. 3). The authors underline that the likelihood categories must be kept constant, but that the consequences can be scaled depending on the purpose, for instance the size of a company and the risk of bankruptcy (Porter and Morgenstern 2013).

Fig. 2 Summary by the UK Cabinet Office representing hazards, diseases, accidents, and social risks in a likelihood (probability) versus impact severity risk matrix (from UK-CO 2017)

Fig. 3 Sample qualitative risk evaluation matrix designed by the BGC company and proposed to the Geological Survey of Canada (from Porter and Morgenstern 2013)

5 Issues Linked to the Use of Matrix

5.1 Scale and Verbal Terms

The scales used to enter the matrix are important. They are usually not numerical, but annual probabilities are often given as an indication. For AGS (2007), for instance, the verbal terms used to qualify the hazard are based on the frequency, but the frequency is named annual probability, which is confusing (Ale et al. 2015) (Fig. 1). As mentioned in the RFRM, the limits of acceptability in PCDS can be chosen depending on the situation and the experts’ opinions, or can be fixed based on quantitative values, as for F-N curves, using criteria at the level of a country (Pasman and Vrijling 2003) or a region (Fell and Hartford 1997).

Studies on the quantification of verbal terms show a large variability for the different terms assessing probabilities (Fig. 4). Several surveys showed that the average difference in probability between the terms “unlikely” and “likely” is of 60%, from ~20% to ~70%, with a ratio varying approximately from 5 to 3.5, which is far from one order of magnitude (Fig. 4). For industrial projects, the limits of the probability classes can be below 0.1% for very low, 10% for low, above 50% for high and 90% for very high (Raydugin 2012), which correspond to annual frequencies of 0.001, 0.11, 0.69, and 2.3 events per year respectively. If these probabilities apply to the life of the industry, let us say 30 years, the frequencies must be divided by 30 (3.34 × 10⁻⁵, 0.0035, 0.023, 0.077 or, in terms of T, ~3 × 10⁴, 285, 43, 13 years) because λ = −ln(1 − P)/Δt, which appears more relevant. For military actions, the yardstick probabilities are very high: 5% is a remote chance and 20% is highly unlikely (Defence Intelligence 2023).
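The conversion from class-limit probabilities to frequencies can be reproduced with a few lines of Python. This is only a sketch of the relation λ = −ln(1 − P)/Δt quoted above; the function name `frequency_from_probability` and the dictionary `limits` are illustrative choices.

```python
import math


def frequency_from_probability(p: float, period_years: float = 1.0) -> float:
    """Frequency (events per year) giving probability p of at least one event
    during period_years, assuming a Poisson process."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must satisfy 0 <= p < 1")
    return -math.log(1.0 - p) / period_years


# Class limits quoted from Raydugin (2012) in the text above.
limits = {"very low": 0.001, "low": 0.10, "high": 0.50, "very high": 0.90}

for label, p in limits.items():
    lam_1yr = frequency_from_probability(p, 1.0)
    lam_30yr = frequency_from_probability(p, 30.0)
    print(f"{label:>9}: {lam_1yr:.4g} /yr if P is annual, "
          f"{lam_30yr:.3g} /yr (T = {1 / lam_30yr:.0f} yr) if P covers 30 years")
```

Stating the period explicitly as an argument makes the dependence on Δt visible, which is the point that is often omitted when only a probability is attached to a class.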

Fig. 4 Results of various surveys of verbal terms with the average (red line), the variability (grey zone) and the envelope of the data (light grey), showing the very large variability (modified from Barnes 2016)

The above considerations show the variability of interpretation. For landslides, the annual frequencies used for the matrix in Australia (AGS 2007) can be compared with the Canadian ones (Porter and Morgenstern 2013), the second being inspired by the first (Fig. 1, Table 1). There is a shift in the values and some variability in the terms, but the orders of magnitude are similar, and the class “likely” starts at a return period of 100 to 200 years, which indicates that the event will probably occur under adverse conditions during the life of the infrastructure or the building (AGS 2007).

The scales of damages are very close, as expected; in addition, they are similar to the values used for industries. Gillet (1985) proposed to compare the estimated hazard with standardized frequencies of occurrence and their associated expected losses, as shown in the last two columns of Table 2. If the hazard is higher or if the uncertainty is too high, risk reduction must be considered.

5.1.1 The Classes of Consequences and Frequency or Probability

As underlined above, if verbal terms are used, they must be attached to numbers or explanations (Table 1) to make them more reliable, since verbal terms alone can be very vague. To choose the membership to a class of consequences or frequency, several strategies can be used. It can be based on a set of weighted indicators which provide a value for one or both scales, as for the geotechnical and geomorphic indicators used for quick clay risk analysis (Lacasse and Nadim 2009) (Fig. 5).

Fig. 5 Example of a continuous-scale risk matrix or PCDS using indicators to create relative risk classes in a consequence versus hazard (susceptibility) diagram (based on the criteria of Lacasse and Nadim 2009)

Decision trees are standard tools to analyze risk or hazard (Leroueil and Locat 1998; Haimes 2009; Lacasse et al. 2008). They can be used to provide the class values for one or both axes. It is preferable to use dichotomous branches, as proposed for pandemics (ECDC 2011), because decisions are easier. For instance, the frequencies or return periods (Ti) can be deduced by the process below; the limits depend on the climate and the environment, thus no values are given:

1. Are any signs of landslide activity present?
   (a) In the scree/talus slope or in the landslide deposits
   (b) Within the slope
       (i) Scar clearly visible
       (ii) Displacements
   If YES ➔ proceed to point 2; if NO ➔ go to point 4.
2. Can recent landslide activity be observed?
   (a) In the scree or in the deposits
       (i) Fresh traces are visible
   (b) Within the slope
       (i) Scar (fresh)
       (ii) Active displacement (measurable)
   If NO ➔ proceed to point 3; if YES ➔ the return period can be assumed to be less than T1 years.
3. Are the traces of landslide activity apparently unchanged but still clear?
   (a) In the scree or in the deposits
       (i) Recent traces are not visible
   (b) Within the slope
       (i) Scar (no trace of activity)
       (ii) Displacement traces clearly visible
   If YES ➔ the return period can be assumed to be between T1 and T2 years; if NO ➔ go to point 4.
4. Are the traces not obvious?
   (a) Presence of old vegetation
   (b) Smoothed topography
   If YES ➔ the return period can be assumed to be larger than T3 years; if NO ➔ it can be estimated as larger than T2 years but less than T3 years.
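The four questions above can be encoded as a small function. The sketch below is one possible reading of the tree, in which each question is reduced to a single yes/no answer; the function name, the argument names and the returned labels are hypothetical, and T1 to T3 stay symbolic because no values are given.

```python
def return_period_class(signs_present: bool,
                        recent_activity: bool = False,
                        traces_still_clear: bool = False,
                        traces_not_obvious: bool = False) -> str:
    """Walk the dichotomous tree and return a symbolic return-period class."""
    if signs_present:                 # point 1
        if recent_activity:           # point 2
            return "T < T1"
        if traces_still_clear:        # point 3
            return "T1 <= T < T2"
    # point 4, reached from a NO at point 1 or at point 3
    if traces_not_obvious:
        return "T > T3"
    return "T2 < T <= T3"


# Example: signs are present, nothing recent, but old traces are still clear.
print(return_period_class(True, recent_activity=False, traces_still_clear=True))
```

Keeping every branch dichotomous means an observer in the field only ever answers yes or no, which is the property the text recommends.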

For the consequences scale, it is also possible to use a decision tree to set the classes, for instance for a landslide threatening a road (Fig. 6).

Another possibility is to ask the expert to set the probability of belonging to each class of the scale, as proposed by Vengeon et al. (2001), for whom the frequency is set by an array of probability versus delay for the failure of landslides. Such an approach can be used for consequences as well as for frequency (Table 2).

5.2 Setting the Risk Limits for Risk Matrix and F-N Curves

CFMs suffer from some weaknesses. The risk scale (colours) is often not consistent (Cox Jr. 2008): for instance, a point at the corner of a class can belong to 3 different risk classes, in which case changing each axis by one unit can produce a difference of 2 class levels. In principle, the risk classes have to respect as much as possible the principle that the isorisk limits are hyperbolas, i.e., H = R/Co. Let us make an analogy with the F-N diagram, which can be considered as a numerical “matrix”. In case of aversion to risk (φ), the limits must be adapted, as is the case for the F-N diagram for floods in the Netherlands, where φ = 2 and N is the consequence:

$$F(\geq N) \times N^{\varphi} < C$$

where C is a constant fixing the level of the limit.

As a consequence, consider a 5 × 5 CFM using two relative scales numbered from 1 (very low) to 5 (very high). Based on λ × Co, which provides a risk scale going from 1 to 25, it is possible to create 4 risk classes without triple points. When aversion is integrated with the same risk scale, the range of values goes from 1 to 125 and the high-risk limit moves to 25, so the second and third risk classes must be merged (Fig. 7).
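The two score ranges can be illustrated with a short Python sketch. It assumes that aversion is introduced by raising the consequence class to the power φ, i.e. score = λ × Co^φ with φ = 2, by analogy with the F-N limit; the function name `risk_scores` is illustrative, and the limits between risk classes are deliberately not coded because they are a matter of choice.

```python
def risk_scores(n_classes: int = 5, aversion: int = 1) -> list:
    """Scores lambda_class * consequence_class ** aversion, one row per
    frequency class (lowest class first)."""
    classes = range(1, n_classes + 1)
    return [[lam * co ** aversion for co in classes] for lam in classes]


for phi in (1, 2):
    scores = risk_scores(aversion=phi)
    print(f"aversion = {phi}: scores from {scores[0][0]} to {scores[-1][-1]}")
    for row in reversed(scores):  # highest frequency class printed on top
        print(" ".join(f"{value:4d}" for value in row))
```

Under this assumption the scores span 1 to 25 without aversion and 1 to 125 with φ = 2, so any fixed set of class limits has to be revisited when aversion is added.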

F-N diagrams are cumulative, but some authors tend to use non-cumulative f-N curves, as Farmer (1967) originally did (Ale et al. 2015). This raises a question: must the F-N limits be the integral of the f-N limits (Ale et al. 2015)? When creating risk classes for probability consequences diagrams, the class limits must be set depending on one or the other assumption. In addition, they must be designed according to the domain to which they apply, i.e., population, region, country, object, infrastructure, etc. The limits are usually not defined by law, so, as mentioned above, the rules must be set by a group of experts or using a true participative approach (Mereu et al. 2007). In the Netherlands, the F-N criterion for unacceptability is based on the risk for the whole population, considering that the industrial hazard is at 10⁻⁶ and the sum of incremental risks at 10⁻⁵, then assuming that F(≥N) × N² < 10⁻³ for N ≥ 10 (Pasman and Vrijling 2003).

This process highlights the significance of considering the specific domain to which the criterion is applied. In Hong Kong, the current approach uses the land area as a basis (Hungr and Wong 2007). However, this may not be the best choice, as the F-N curve depends on the number of people affected. Therefore, in principle, it would be preferable to multiply the individual risk threshold by the population concerned to obtain the threshold for one death. The selection of the aversion parameter, i.e., the slope of the limit, should take cultural factors into account. As shown for the Netherlands, the reasoning can be more sophisticated, based on observed F-N curves for various hazards. This way of thinking must be kept in mind when fixing the risk classes of a matrix.
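As an illustration, the Dutch criterion quoted above can be written as a simple check. This is a minimal sketch: the function names are hypothetical, the default constant, slope and lower bound come from the sentence above, and treating the limit itself as unacceptable is an assumption.

```python
def fn_limit(n_fatalities: float, constant: float = 1e-3,
             aversion: float = 2.0, n_min: float = 10.0) -> float:
    """Limiting cumulative frequency F(>=N) = constant / N**aversion."""
    if n_fatalities < n_min:
        raise ValueError("the criterion is stated only for N >= n_min")
    return constant / n_fatalities ** aversion


def exceeds_limit(frequency: float, n_fatalities: float, **kwargs) -> bool:
    """True if a point (N, F) lies on or above the limit line."""
    return frequency >= fn_limit(n_fatalities, **kwargs)


# A scenario with 100 or more fatalities once every 100,000 years on average.
print(fn_limit(100), exceeds_limit(1e-5, 100))
```

Changing `aversion` changes the slope of the limit in a log-log diagram, which is how the cultural choice mentioned in the text enters the criterion.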

Another issue is the individual risk (IR), because a matrix can be used for a specific activity. As we have seen for the army, industry or voluntary dangerous activities, the threshold must be adapted and can be defined as follows (Pasman and Vrijling 2003): IR = β × 10⁻³, where β is the policy factor, taking the following values: 100 for voluntary or exceptional professional activity (rescuing, military, etc.), 1 for self-determination with a direct benefit, and 0.01 for involuntary hazards.

As a final remark, if people are added to the system or if the territory is extended, should the line of acceptance change? Yes if the individual risk is kept, and no if an improvement is requested. The f-N approach is problematic because it corresponds to an incremental risk, so the limits will change depending on how many threats are considered. This depends on the ratio of the importance of the considered threats versus the others.

5.3 Uncertainty

Because of the fuzziness of human appreciation, uncertainty must be added. There are several ways to add it, since the estimation of F and Co can be distributed over more than one class. The minimum, maximum and average can be used to assess the uncertainty. In fact, the belonging to all classes can also be assessed (see below).

5.4 Risk Reduction

Risk matrices are very useful to visualize risk reduction scenarios, especially in a qualitative way, because they can represent the paths (arrows) of the different risk reduction options (Solheim et al. 2005). In addition, they make it possible to include the risks that can be induced by the changes created by a mitigation measure. Such representations are also used in industrial material sciences (Cowles et al. 2012).

For instance, flood management can create a fuse plug spillway in a protective dike (Schmocker et al. 2013) to force the flood into less at-risk areas. In the case of rockfall, dikes or nets can make local authorities confident and lead them to allow new construction behind the protective measures, which, in fact, can increase the risk (Farvacque et al. 2019). When risk matrices are used for representing risk reduction, they must include the question of the potential consequences (Fig. 8).

Fig. 8 Example of a potential building area in a high hazard area and illustration of the proposed solutions. The CFM is used to represent the degree of risk. The scope of tolerable risk (light grey) lies between the limits of tolerance and of acceptability (modified after Jaboyedoff et al. 2014)

5.5 Representing the Cascading Effect

It means that a rule must be added to the use of risk matrices, which is to consider and represent the cascading effects (Gill and Malamud 2016) of protecting an area. Cascading effects are important nowadays, especially in the context of climate change. In the case of Natech (Risks from Natural Hazards at Hazardous Installations), matrices can be very useful to show the consequences of a natural disaster (Girgin et al. 2019).

5.6 Adding Dimensions

Frequency and consequences are not the only parameters that can be considered; other parameters can be added, such as:

- Difficulty to reduce risk (Zhang et al. 2016).
- Uncertainty (Gillet 1985).
- Difficulty to detect (Martinez-Marquez et al. 2020) or characterize the hazard.
- Etc.

If 3 variables are used, i.e. frequency, consequences and a third one among the previous ones, a 3D matrix can be used. A matrix using different symbol shapes and sizes can even provide 4D information (Fig. 9).

Fig. 9 (a) Example of a 3D matrix including difficulties, with a scale inspired from Zhang et al. (2016), and (b) conceptual example of a 4-dimensional matrix including difficulty to reduce and uncertainty

5.7 Cumulative Versus Non-cumulative Scale

F-N graphs are in cumulative form, but even so they are not exhaustive with respect to the risk level: the frequency is calibrated on incremental risks, normally dedicated to one type of hazard and its consequences. The scales of risk matrices are semi-quantitative or qualitative, but they must follow the same principle as F-N or f-N.

In most cases, risk matrices are non-cumulative because this makes it possible to compare different situations or types of risk. But a semi-quantitative approach may allow a cumulative approach, or, as we will see in an example below, each scenario represented by a class of the matrix must be qualified.

5.7.1 An Example of Ambiguous Use of a Matrix

In Switzerland, the assessment of the hazard level uses a matrix representing the return period or frequency and the intensity (Raetzo et al. 2002). It is in fact a sort of risk matrix, because the intensity levels are related to some resistance of objects, which is called “danger”. The matrix is a 3 × 3 matrix in which the axes are swapped and the frequency scale is inverted (Fig. 10a). It contains issues such as a triple point (7) and three half classes, and when it is transposed into an intensity-frequency diagram, the scale appears to be very refined, even if it seems possible to obtain the frequencies by investigation (Fig. 10b). The 1-year probabilities attached to the limits high (30 years), medium (100 years) and low (300 years) are 3.28%, 1.00% and 0.33% respectively, which are very close and difficult to discriminate. Uncertainty must therefore be assessed in such a case, especially for verbal appreciations. Such a scale can be relevant in the field, for instance by looking at the traces of rockfall, but it must be kept in mind that it has a high uncertainty. Nowadays in Switzerland, scenarios must be assessed for all return periods (OFEV 2016), but the principle of the matrix is still used.

Fig. 10 (a) Swiss hazard matrix for rockfall (after Raetzo et al. 2002) and (b) corresponding energy (linear) versus frequency (log) diagram

6 Summaries of the Recommendations

Following the above observations and findings, we can make a non-exhaustive list of elements to consider when creating risk matrices:

- Usually, CFM include relative scales based on verbal terms, but these must be attached to numbers or to descriptions of the frequency and consequences scales.
- The risk class scale must follow rules such as the multiplication of classes, Rij = λi × Coj, or it can be adapted on purpose, with justification or considering aversion.
- The matrix should preferably be 4 × 4 or larger.
- No triple points must appear.
- Decision trees are very useful for choosing the classes on both axes.
- When using the F-N concept, the strategy must be clearly defined and described, and the limits must be adapted considering the population and the individual risk threshold. If such a strategy is not used, this must be clearly stated.
- Risk matrices are an excellent tool to represent risk reduction paths.
- The matrix can be used for any type of consequences.
- Cascading events can be represented by such matrices, but the conditions leading to such situations must be clearly described, and the probability and frequency correctly assessed.
- The use of the cumulative version must consider that the threshold can change depending on the extent of the threats considered: for one threat it is usually 10⁻² times the individual risk for involuntary risk, because it is incremental, i.e. one risk among others. This is the way F-N curves are used, but it is not recommended for matrices.
- The uncertainty must be assessed.
- The dimension of the matrix can exceed 2, especially by adding uncertainty and difficulty to reduce risk.

The risk matrix as well as the F-N or F-Consequence graphs provide a framework for risk assessment, which is very useful for representing, communicating, and teaching.

7 Example of Integration of Assessment for all Classes

In the following, an example of CFM is given, related to an update of the risk assessment of the Pont Bourquin landslide (Switzerland) (Jaboyedoff et al. 2009, 2014). The goal is to evaluate the probabilities of all the classes of a 5 × 5 risk matrix. The method uses CFM by developing point 4 of the above-mentioned RFRM process, including part of points 3 to 5. It uses a Bayesian approach to estimate the belonging to classes of frequency and consequences based on prior probabilities provided by experts (here the expert is the author, to set the example), the likelihood being based on a triangular distribution. The probability of belonging to a class of risk is calculated by multiplying the probabilities of belonging to the frequency and consequence classes.

Similar attempts were made for technical systems by Krause et al. (1995), who introduced uncertainty in the input data by using fuzzy logic: the classes are fuzzified by applying a membership function to the classes of frequencies and consequences. Here, we replace fuzzy logic by a Bayesian approach.

8 Method

8.1 The Belonging to a Class and its Uncertainty

Uncertainties are involved when experts provide their opinions about the class to which an event belongs, which can be named “very low, low, medium, high, very high” when referring to frequencies and consequences. As in the fuzzy logic framework (Zadeh 1975), the class belonging is not unique, and there is a probability of belonging to the other classes. It means that when an expert attributes an event to a class, this also implies a “membership” to the neighboring classes. Instead of using the fuzzy logic term “membership”, we prefer to use the likelihood P(Cj| CEi) that the attribution to a class CEi by an expert is distributed to the neighboring classes Cj.

Setting the prior probability of belonging to each class is equivalent to asking experts for an empirical estimation of the uncertainty. It can be decided by a group of experts, or an expert can give his or her own definition of belonging to a class. This can be a direct way to introduce the uncertainty, but we try to go a bit further here.

Formally, if a class has a lower limit lj and an upper limit lj+1, and if the distribution of the weights from a class CEi chosen by an expert to the other classes, using a scale of value x, is given by f(x)dx, then the likelihood or weight to belong effectively to the class Cj is given by (Jaboyedoff et al. 2014):

$$P(C_j \mid CE_i) = \int_{l_j}^{l_{j+1}} f(x)\,dx = F(l_{j+1}) - F(l_j)$$

The function f(x) can be of any type, and it can be chosen by using for instance the variance, the mean, etc. Here, we will use the triangular distribution (Kotz and van Dorp 2004; Haimes 2009) (Fig. 11):

$$f(x) = \begin{cases} \dfrac{2(x-a)}{(b-a)(c-a)} & a \le x \le c \\ \dfrac{2(b-x)}{(b-a)(b-c)} & c < x \le b \\ 0 & \text{otherwise} \end{cases}$$

where F(x) is the cumulative distribution function (CDF) of f(x) (F(x) = ∫ f(x)dx), the domain [a, b] corresponds to non-zero f(x), and c is the x value of the maximum of f(x).
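To make the role of the class limits concrete, the sketch below computes the weight falling into each class as a difference of the triangular CDF at consecutive limits. It assumes the standard closed form of the triangular CDF; the function names, the relative scale from 0 to 5 and the values of a, c and b are illustrative only.

```python
def triangular_cdf(x: float, a: float, c: float, b: float) -> float:
    """CDF of a triangular distribution with support [a, b] and mode c."""
    if x <= a:
        return 0.0
    if x >= b:
        return 1.0
    if x <= c:
        return (x - a) ** 2 / ((b - a) * (c - a))
    return 1.0 - (b - x) ** 2 / ((b - a) * (b - c))


def class_likelihoods(limits, a, c, b):
    """Weight in each class [l_j, l_j+1], i.e. F(l_j+1) - F(l_j)."""
    return [triangular_cdf(hi, a, c, b) - triangular_cdf(lo, a, c, b)
            for lo, hi in zip(limits[:-1], limits[1:])]


# Five classes on a relative scale; the expert picks the third class (2 to 3),
# with the mode at its centre and a spread of half a class on each side.
limits = [0, 1, 2, 3, 4, 5]
print(class_likelihoods(limits, a=1.5, c=2.5, b=3.5))
```

In this example most of the weight stays in the chosen class and the remainder is shared equally by the two neighbours; a wider interval [a, b] would spread more weight outwards.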

8.2 Classes Definitions

Such a triangular probability density function (pdf) is often used to represent expert uncertainty (Vose 2008). It has the advantage that the expert is asked only simple questions to define the distribution (Jaboyedoff et al. 2014):

- What do you consider as the lowest possible value (a) for an event (frequency, intensity, impacts, etc.) classified in the class Cj?
- What do you consider as the highest possible value (b) for an event (frequency, intensity, damage, etc.) classified in the class Cj?

Here, we will consider that the maximum of f(x), i.e., f(c) is located at the central value of a class considered by the expert as the most probable, but for the lower and the upper classes the choice is different, and other solutions can be chosen.

8.3 The Expert Assessment for a Specific Event

For most of the matrix approaches (Haimes 2009), all the possible events (Ev) have to be listed. Each event corresponds to a process that can lead to different scenarios for consequence and frequency.

The classes and their associated distribution definitions are independent of the events. An expert has his or her own interpretation of a potential event Ev that can lead to different scenarios of consequences and frequency independently. The expert may give, for each scenario, a weight to each class of consequences, and the same applies to frequency scenarios. Therefore, all frequency-consequence couples (λ, Co) related to an event Ev must be distributed following the potential scenarios. For instance, as proposed by Vengeon et al. (2001), the frequency can be set first by an array of probability or weight versus delay (return period or frequency), or more generally by relative classes of probability. Then, the weights (probabilities) must be normalized to 1 and distributed among the respective classes, which gives P(CEi), the expert weight distribution for one event (prior probability) (Fig. 12a). This is also performed for the impact. Using Bayesian theory, the probabilities of the frequency classes Pλ(Ci) and of the consequence classes PCo(Cj) are given, for each scale, by (Jaboyedoff et al. 2014):

$$P(C_j) = \sum_i P(C_j \mid CE_i)\, P(CE_i)$$

By introducing values for the limits, it is then possible to provide exceedance curves for consequence or return period (Fig. 12b).
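A minimal sketch of this step is given below. It assumes that the class probability is obtained by weighting the likelihoods P(Cj|CEi) with the normalised expert weights P(CEi) and summing over the expert classes; the function names, the expert weights and the likelihood table are invented for illustration and are not the values of the case study.

```python
def class_probabilities(prior_weights, likelihood):
    """prior_weights[i] ~ P(CE_i) (normalised here);
    likelihood[i][j] = P(C_j | CE_i). Returns the list of P(C_j)."""
    total = sum(prior_weights)
    prior = [w / total for w in prior_weights]
    n_classes = len(likelihood[0])
    return [sum(prior[i] * likelihood[i][j] for i in range(len(prior)))
            for j in range(n_classes)]


def exceedance(probabilities):
    """Probability of being in class j or higher, for each class j."""
    running, out = 0.0, []
    for p in reversed(probabilities):
        running += p
        out.append(running)
    return out[::-1]


# Hypothetical expert weights over five classes and a triangular-like
# likelihood table (each row sums to 1).
weights = [0, 1, 3, 1, 0]
likelihood = [
    [0.875, 0.125, 0.0, 0.0, 0.0],
    [0.125, 0.75, 0.125, 0.0, 0.0],
    [0.0, 0.125, 0.75, 0.125, 0.0],
    [0.0, 0.0, 0.125, 0.75, 0.125],
    [0.0, 0.0, 0.0, 0.125, 0.875],
]
p_classes = class_probabilities(weights, likelihood)
print(p_classes)
print(exceedance(p_classes))
```

Once numerical limits are attached to the classes, the exceedance list can be plotted against those limits to obtain a curve of the kind described above.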

8.4 The Matrix Construction

The class probabilities for frequencies Pλ(Ci) and consequences PCo(Cj) can be multiplied to obtain the probability P(Cij) of each matrix element (Fig. 13):

$$P(C_{ij}) = P_{\lambda}(C_i)\, P_{Co}(C_j)$$

where each element of the risk matrix corresponds to a scenario defined by its frequency and its consequences; the two scales are implicitly considered independent. This makes it possible to obtain, in two ways, an exceedance curve of the risk level attributed to each element. In the first case, the average frequency is calculated for each amount of money:

where λi is the frequency of each frequency class. In the second case, the class values of both scales, from 1 to 5 with 5 being the highest, are multiplied, giving risk levels from 1 to 25. The annual risk can be calculated, and the frequency of exceedance of each Coj is obtained by cumulating the frequencies of the frequency classes. The risk classes can also be presented in terms of the probability of exceeding each risk class.
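A minimal sketch of the matrix construction and of the second (score-based) case, assuming independent scales so that each element is the product of its frequency and consequence class probabilities, and assuming class indices run from 1 (lowest) to 5 (highest). The input probabilities are hypothetical.

```python
def probability_matrix(p_freq, p_cons):
    """matrix[i][j] = P_freq(C_i) * P_cons(C_j), assuming independent scales."""
    return [[pf * pc for pc in p_cons] for pf in p_freq]


def risk_class_probabilities(matrix, n_bins=5):
    """Group the scores (i+1)*(j+1), from 1 to 25 for a 5 x 5 matrix, into equal bins."""
    max_score = len(matrix) * len(matrix[0])
    width = max_score / n_bins  # 5 scores per bin for a 5 x 5 matrix
    bins = [0.0] * n_bins
    for i, row in enumerate(matrix):
        for j, p in enumerate(row):
            score = (i + 1) * (j + 1)
            k = min(int((score - 1) // width), n_bins - 1)
            bins[k] += p
    return bins


# Hypothetical class probabilities, ordered from very low to very high.
p_freq = [0.01, 0.14, 0.52, 0.30, 0.03]
p_cons = [0.05, 0.20, 0.50, 0.20, 0.05]

matrix = probability_matrix(p_freq, p_cons)
risk_classes = risk_class_probabilities(matrix)
print(risk_classes)
```

Cumulating `risk_classes` from the highest bin downwards gives the probability of exceeding each risk class. Only the element combining the two highest classes has a score above 20, so the highest bin contains that single product.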

8.5 The Example of a Particular Unstable Mass of Pont Bourquin Landslide

The example below is partially taken from Jaboyedoff et al. (2014), using a new assessment that takes the new situation into account, since no major event has occurred.

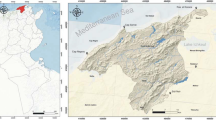

8.5.1 Landslide Settings

Pont Bourquin Landslide (PBL) is located in the Swiss Prealps, close to Les Diablerets, Switzerland (Jaboyedoff et al. 2009) (Fig. 14). The first evidence of gravitational deformation can be observed on aerial photos taken in the late sixties. This activity gradually developed until 2004. The first large slope displacements happened in 2006, when movements of up to 80 cm occurred on the head scarp. Subsequently, a mudflow reached the road just below the landslide on 5 July 2007, and another flow destroyed the forest at the toe of the slide in August 2010. For this second event, we were able to demonstrate a drop in surface shear wave seismic velocity at a depth of 9–11 m a few days before the collapse (Mainsant et al. 2012). Afterwards, remedial works were carried out, including a trunk-framed box at the toe of the instability and gullies on the mass body to evacuate surface waters. However, the landslide body is still moving, with velocities similar to the earlier ones.

Nowadays, the landslide is still active, and three zones are particularly threatening. In the present example, we assess the risk for one of these potential source areas. It consists of approximately 5000 m³ of material detached on the north-eastern part of the landslide. The displacement is observable by visual inspection of the back scarp; a sudden reactivation failure of this compartment and a fast propagation toward the road are expected. A detailed description of the situation is not the goal of this paper; therefore, we simply present an example illustrating the general framework of the method.

8.5.2 The Classes and Scales

To create the class limits for the frequencies or return periods, we use a modified version of the Swiss danger matrix (Lateltin 1997): Very low (300–1000 years), Low (100–300 years), Medium (50–100 years), High (5–50 years) and Very high (0–5 years). The average is used as the frequency for the classes.
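The class frequencies can be sketched as below. The text states only that the average is used; the sketch assumes this means the reciprocal of the mid-point return period of each class, which keeps the frequency finite for the 0–5 year class. The dictionary and function names are illustrative.

```python
# Return-period limits in years, as listed above (modified Swiss danger matrix).
return_period_classes = {
    "very low": (300.0, 1000.0),
    "low": (100.0, 300.0),
    "medium": (50.0, 100.0),
    "high": (5.0, 50.0),
    "very high": (0.0, 5.0),
}


def class_frequency(lower_years, upper_years):
    """Annual frequency taken as 1 / (mid-point return period); an assumed convention."""
    return 1.0 / ((lower_years + upper_years) / 2.0)


frequencies = {
    name: class_frequency(lower, upper)
    for name, (lower, upper) in return_period_classes.items()
}

for name, value in frequencies.items():
    print(f"{name:>9}: {value:.5f} per year")
```

Averaging the frequencies at the class limits instead would not work for the highest class, because a return period of zero corresponds to an infinite frequency.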

The classes of consequences have been designed depending on the considered scenario. We used five million CHF as the worst-case scenario, which corresponds, according to the willingness-to-pay value generally used in Switzerland, to the death of one person (Fig. 6). Each class is one order of magnitude different from its neighbors.

8.5.3 Setting the Prior Probabilities

Prior probabilities have been set using Eq. [1] and Pλ(Ci) from Fig. 12 and the triangular distributions shown in Fig. 11. For very low frequencies, looking at the likelihood functions shown below the matrix in Fig. 13, the error of attribution can be quite high towards the higher frequencies, while the very high frequencies are distributed symmetrically between the two highest classes. The intermediate classes are distributed nearly symmetrically over neighboring classes.

The first question is whether or not the landslide will reach the road after a sudden reactivation failure, considering up to the possibility of killing one person in a vehicle. The weights PCo(CEj) were set at 1.9%, 19.2%, 57.7%, 19.2% and 1.9%, respectively, for the classes very low, low, medium, high and very high, used with the values indicated in Fig. 6. The vertical distributions in Fig. 13 are used as the likelihood functions to calculate the probability of belonging to a class. Please note that not all hazard sources have been considered here (Fig. 14).
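The quoted weights are consistent with raw scores in the ratio 1:10:30:10:1 normalized to sum to one. This ratio is inferred from the percentages and is not stated in the text; the short sketch below only checks the arithmetic.

```python
def normalize(weights):
    """Scale raw expert weights so that they sum to 1."""
    total = sum(weights)
    return [w / total for w in weights]


# Assumed raw scores for very low, low, medium, high, very high (inferred, not from the source).
raw_weights = [1, 10, 30, 10, 1]

prior = normalize(raw_weights)
percentages = [round(100 * p, 1) for p in prior]
print(percentages)
```

Because each percentage is rounded to one decimal, the rounded values add up to 99.9% rather than exactly 100%.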

8.5.4 Results

The use of prior probabilities to correct the expert assessment (Fig. 12a) implies that the average return period changes from 44 to 48 years, a change that is limited by construction (Fig. 12). The exceedance curve shows that the corrected probability of being below 5 years is around 20%, whereas it is 5% for the original value (expert opinion), and that the probability of exceeding 100 years goes from zero to 6% (Fig. 12b). The exceedance of the consequences per event (Fig. 15) shows first that the average value of the corrected costs (344,000.- CHF) is more than 50% higher than the non-corrected value (222,000.- CHF). The probability of exceeding 50,000.- CHF decreases from 80% to 60%, but the probabilities are very similar for high values.

We can extract the exceedance curve for the risk level, which ranges from 1 to 25 (5 × 5) and is divided into five equal classes (Fig. 16). This shows that there is around a 5% chance of being in the very high-risk class and a 50% chance of being lower than medium risk. It is also possible to obtain the annual risk:

which is around 33,000.- CHF/year.

Using Eq. 5, an exceedance curve can also be obtained (Fig. 17) by summing the frequencies of decreasing damage levels, which indicates a frequency of 4.3 × 10⁻³ (or on average every 230 years) to reach five million CHF, 5.7 × 10⁻² (or on average every 18 years) to exceed 50,000.- CHF and 8.5 × 10⁻² (or on average every 12 years) to exceed 5000.- CHF.
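The annual risk and the frequency-of-exceedance curve can be sketched as follows, assuming that the average frequency of consequence class j is Σi P(Cij) λi, that the annual risk is the sum of these frequencies multiplied by a representative cost per class, and that exceedance is obtained by cumulating from the highest damage class downwards. All numerical inputs are hypothetical and are not intended to reproduce the values quoted above.

```python
def average_frequency_per_consequence(matrix, lambdas):
    """For each consequence class j, return sum_i P(C_ij) * lambda_i."""
    n_cons = len(matrix[0])
    return [
        sum(matrix[i][j] * lambdas[i] for i in range(len(lambdas)))
        for j in range(n_cons)
    ]


def annual_risk(freq_per_cons, cons_values):
    """Expected annual cost: frequency of each consequence class times its cost."""
    return sum(f * c for f, c in zip(freq_per_cons, cons_values))


def frequency_of_exceedance(freq_per_cons):
    """Cumulate frequencies from the highest damage class down to the lowest."""
    cumulated, running = [], 0.0
    for f in reversed(freq_per_cons):
        running += f
        cumulated.append(running)
    return cumulated[::-1]


# Hypothetical inputs, ordered from very low to very high.
p_freq = [0.1, 0.2, 0.4, 0.2, 0.1]
p_cons = [0.1, 0.2, 0.4, 0.2, 0.1]
lambdas = [0.001, 0.005, 0.01, 0.05, 0.5]            # events per year
cons_values = [100.0, 1_000.0, 10_000.0, 100_000.0, 1_000_000.0]  # CHF

matrix = [[pf * pc for pc in p_cons] for pf in p_freq]
freq_per_cons = average_frequency_per_consequence(matrix, lambdas)
risk = annual_risk(freq_per_cons, cons_values)
exceed = frequency_of_exceedance(freq_per_cons)

print(f"annual risk: {risk:.1f} CHF/year")
print("frequency of exceedance per class:", exceed)
```

The reciprocal of each entry of `exceed` can be read as the average return period for reaching at least the corresponding damage level.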

9 Discussion and Conclusion

The use of CFMs must follow certain rules. Using numbers for the class limits is important to avoid misunderstanding, since verbal qualifications are often fuzzy. When numbers are used, semi-quantitative risk estimates as well as exceedance curves can be extracted. The limits must be fixed depending on the case study and the domain to which it applies, as for F-N diagrams.

The example tries to demonstrate that, if it is possible to give a probability of belonging to all classes of a risk matrix, the results are more reliable, can be delivered in different forms and fully integrate the uncertainty expressed by the exceedance curves. This approach avoids most of the problems linked to the use of CFMs.

The fact that the experts must give their opinion twice, first for the likelihood (the scale fuzziness) and second for the prior probability (weight for each class), is a way to introduce uncertainty, which is often lacking in the risk matrix approach. The experts can be separated into two groups; the likelihood can be fixed by regulation, for instance. The use of a Bayesian approach is also easier than fuzzy logic. Here, we used the concept of having only an expert estimate of the PDFs for belonging to the categories of consequences and of frequency. Another solution would be to give a PDF for the frequency classes of each consequence level. The approach makes it possible to extend the domain of hazards and consequences to those that are often not considered by experts. In addition, it gives not only a single result but also exceedance curves.

The result obtained from a more classical risk analysis (Limousin 2013) was greater than the one obtained here: it gave 278,000.- CHF/year for 3 unstable masses. However, no large event has occurred for nearly 10 years, which lowers the expert hazard assessment. Nevertheless, there are several remaining problems:

-

The discretization of the value by classes which are not equal in width raises the problem of non-linearity and singular points at the limits of the classes. This must be further explored, especially using other PDFs or CDFs such as power law or exponential.

-

The way to calculate the risk and its distribution must be explored further, because the solution proposed is not unique.

-

The extension of the classes to infinity and to zero is a problem: the difficulties differ depending on whether the class limits or the average of the limits are used for the return period or frequency. The sum of the CFM probability matrix is equal to one.

It is clear from the above that the risk matrix can integrate risk reduction, or the difficulty of reducing risk, along with the uncertainty. The PDF estimate is also a tool to see at which level to act, i.e., reducing high-impact events, small-impact events or both; such an approach makes it possible to discriminate between solutions. In addition, we experienced in lectures that the risk matrix is a good way to promote collective work in a classroom and to address several different types of risks.

We think that the present method addresses some of the weaknesses of the matrix approach and that it can greatly support decision-making or help communicate risks better.

References

AGS (Australian Geomechanics Society) (2007) Sub-committee on landslide risk management, a National Landslide Risk Management Framework for Australia. Aust Geomech 42:1–36

Ale B (2009) Risk: an introduction: the concepts of risk, danger and chance. Routledge, Taylor and Francis Group, p 134

Ale B, Burnap P, Slater D (2015) On the origin of PCDS–(probability consequence diagrams). Saf Sci 72:229–239. https://doi.org/10.1016/j.ssci.2014.09.003

Armée Suisse (2012) Sécurité intégrale, p. 153. Retrieved April 2023. https://www.vtg.admin.ch/content/vtg-internet/fr/service/info_trp/sicherheit/_jcr_content/contentPar/tabs_copy/items/downloads/tabPar/downloadlist/downloadItems/190_1472734389467.download/52_059_f.pdf

Barnes A (2016) Making Intelligence analysis more intelligent: using numeric probabilities. Intell National Secur 31:327–344. https://doi.org/10.1080/02684527.2014.994955

Corominas J, van Westen C, Frattini P, Cascini L, Malet JP, Fotopoulou S, Catani F, Van Den Eeckhaut M, Mavrouli O, Agliardi F, Pitilakis K, Winter MG, Pastor M, Ferlisi S, Tofani V, Hervás J, Smith JT (2014) Recommendations for the quantitative analysis of landslide risk. Bull Eng Geol Environ 73(2):209–263

Cowles B, Backman D, Dutton R (2012) Verification and validation of ICME methods and models for aerospace applications. Integr Mater Manuf Innov 1:3–18. https://doi.org/10.1186/2193-9772-1-2

Cox LA Jr (2008) What's wrong with risk matrices? Risk Anal 28:497–512

Dai FC, Lee CF, Ngai YY (2002) Landslide risk assessment and management: an overview. Eng Geol 64(1):65–87

Defence Intelligence (2023) Defence Intelligence–communicating probability. https://www.gov.uk/government/news/defence-intelligence-communicating-probability. Accessed February 2023

DOD (2006) Risk management guide for DOD acquisition, 6th Edition (Version 1.0), ADA470492, p. 34

ECDC (2011) European Centre for disease prevention and control: operational guidance on rapid risk assessment methodology. Stockholm, ECDC, 68

Farmer FR (1967) Siting criteria-a new approach. Containment and Siting of Nuclear Power Plants. Proceedings of a Symposium on the Containment and Siting of Nuclear Power Plants

Farvacque M, Lopez-Saez J, Corona C, Toe D, Bourrier F, Eckert N (2019) How is rockfall risk impacted by land-use and land-cover changes? Insights from the French Alps. Glob Planet Chang 174:138–152. https://doi.org/10.1016/j.gloplacha.2019.01.009

Fell R, Hartford D (1997) Landslide risk management. In: Landslide risk assessment. Routledge, pp 51–109

Fell R, Ho KK, Lacasse S, Leroi E (2005) A framework for landslide risk assessment and management. In: Landslide risk management. CRC Press, pp 13–36

Fell R, Corominas J, Bonnard C, Cascini L, Leroi E, Savage WZ (2008) Guidelines for landslide susceptibility, hazard and risk zoning for land use planning. Eng Geol 102(3):85–98

Gill JC, Malamud BD (2016) Hazard interactions and interaction networks (cascades) within multi-hazard methodologies. Earth Syst Dynam 7:659–679. https://doi.org/10.5194/esd-7-659-2016

Gillet JE (1985) Rapid ranking of process Hazard. Process Eng 66:19–22

Girgin S, Necci A, Krausmann E (2019) Dealing with cascading multi-hazard risks in national risk assessment: the case of Natech accidents. Int J Disaster Risk Reduct 35:101072. https://doi.org/10.1016/j.ijdrr.2019.101072

Haimes YY (2009) Risk Modeling, Assessment, and Management, 3rd edn. John Wiley & Sons, p 1009

Hungr O, Wong HN (2007) Landslide risk acceptability criteria: are F–N plots objective? Geotech News 25:47–50

ISO (2009) Risk management–principles and guidelines. ISO 31000:24

Jaboyedoff M, Loye A, Oppikofer T, Pedrazzini A, Güell i Pons M, Locat J (2009) Earth-flow in a complex geological environment: the example of Pont Bourquin, les Diablerets (Western Switzerland). In: Malet J-P, Remaître A, Bogaard T (eds) Landslide processes, pp 131–137

Jaboyedoff M, Aye Z, Derron MH, Nicolet P, Olyazadeh R (2014) Using the consequence–frequency matrix to reduce the risk: examples and teaching. Int. Conf. on the Analysis and Management of Changing Risk for natural hazards–CHANGES project, Padova Italy. p. 9

Jonkman SN, van Gelder PHAJM, Vrijling JK (2003) An overview of quantitative risk measures for loss of life and economic damage. J Hazard Mater 99:1–30. https://doi.org/10.1016/S0304-3894(02)00283-2

Kotz S, van Dorp JR (2004) Beyond Beta: other continuous families of distributions with bounded support and applications. World Scientific, p 289

Krause J-P, Mock R, Gheorghe AD (1995) Assessment of risks from technical systems: integrating fuzzy logic into the Zurich Hazard analysis method. Int J Environ Pollut (IJEP) 5(2–3):278–296

Lacasse S, Nadim F (2009) Landslide risk assessment and mitigation strategy. In: Sassa K, Canuti P (eds) Landslides–disaster risk reduction. Springer, Berlin, Heidelberg, pp 31–61

Lacasse S, Eidsvik U, Nadim F, Høeg K, Blikra LH (2008) Event Tree analysis of Åknes rock slide hazard. IV Geohazards Québec, 4th Canadian Conf. on Geohazards. 551–557

Lateltin O (1997) Prise en compte des dangers dus aux mouvements de terrain dans le cadre des activités de l'aménagement du territoire. Recommandations, OFEFP, 42

Leroueil S, Locat J (1998) Slope movements: geotechnical characterisation, risk assessment and mitigation. Proc. 11th Danube-European Conf. Soil Mech. Geotech. Eng., Porec, Croatia, 95–106. Also published in proc. 8th congress Int. Assoc. Eng. Geology, Vancouver, 933-944, Balkema, Rotterdam

Limousin P (2013) De la caractérisation du sol, à l’étude de propagation et des risques: Les cas du glissement de Pont Bourquin. Univ. Lausanne Master Thesis, p. 100

Macciotta R, Gräpel C, Keegan T, Duxbury J, Skirrow R (2020) Quantitative risk assessment of rock slope instabilities that threaten a highway near Canmore, Alberta, Canada: managing risk calculation uncertainty in practice. Can Geotech J 57(3):337–353

Mainsant G, Larose E, Brönnimann C, Jongmans D, Michoud C, Jaboyedoff M (2012) Ambient seismic noise monitoring of a clay landslide: toward failure prediction. JGR-ES 117, F01030:12. https://doi.org/10.1029/2011JF002159

Martinez-Marquez D, Terhaer K, Scheinemann P, Mirnajafizadeh A, Carty CP, Stewart RA (2020) Quality by design for industry translation: three-dimensional risk assessment failure mode, effects, and criticality analysis for additively manufactured patient-specific implants. Engg Rep 2:e12113. https://doi.org/10.1002/eng2.12113

Mereu A, Sardu C, Minerba L, Sotgiu A, Contu P (2007) Evidence based public health policy and practice: participative risk communication in an industrial village in Sardinia. J Epidemiol Community Health(1979-) 61:122–127

OFEV (2016) Protection contre les dangers dus aux mouvements de terrain. Aide à l’exécution concernant la gestion des dangers dus aux glissements de terrain, aux chutes de pierres et aux coulées de boue. Office fédéral de l’environnement, Berne L’environnement pratique 1608:98

OFPP (2013) Aide-mémoire KATAPLAN.- Analyse et prévention des dangers et préparation aux situations d’urgence. Office fédéral de la protection de la population, p. 55

Pasman HJ, Vrijling JK (2003) Social risk assessment of large technical systems. In: Human Factors and Ergonomics in Manufacturing & Service Industries, vol 13, pp 305–316. https://doi.org/10.1002/hfm.10046

Porter M, Morgenstern N (2013) Landslide risk evaluation–canadian technical guidelines and best practices related to landslides: a national initiative for loss reduction; geological survey of Canada. Open File 7312:21. https://doi.org/10.4095/292234

Raetzo H, Lateltin O, Bollinger D, Tripet J (2002) Hazard assessment in Switzerland–codes of practice for mass movements. Bull Eng Geol Environ 61:263–268. https://doi.org/10.1007/s10064-002-0163-4

Raydugin Y (2012) Consistent application of risk Management for Selection of engineering design options in mega-projects. Int J Risk Conting Manag (IJRCM) 1:44–55. https://doi.org/10.4018/ijrcm.2012100104

Schmocker L, Höck E, Mayor PA, Weitbrecht V (2013) Hydraulic model study of the fuse plug spillway at Hagneck Canal, Switzerland. J Hydraul Eng 139:894–904. https://doi.org/10.1061/(ASCE)HY.1943-7900.0000733

Solheim A, Bhasin R, De Blasio FV, Blikra LH, Boyle S, Braathen A, Dehls J, Elverhøi A, Etzelmüller B, Glimsdal S (2005) International Centre for Geohazards (ICG): Assessment, prevention and mitigation of geohazards. Nor J Geol 85:45–62. Norsk Geologisk Forening

TBCS (Treasury Board of Canada Secretariat) (2011) Guide to corporate risk profiles. Retrieved in April 2023. https://www.canada.ca/en/treasury-board-secretariat/corporate/risk-management/corporate-risk-profiles.html

UK-CO (Cabinet Office) (2017) National risk register of civil emergencies. Retrieved from https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/644968/UK_National_Risk_Register_2017.pdf

Vengeon JM, Hantz D, Dussauge C (2001) Prédictibilité des éboulements rocheux: approche probabiliste par combinaison d'études historiques et géomécaniques. Rev Fr Géotech 95/96:143–154

Vose D (2008) Risk analysis: a quantitative guide, 3rd edn. John Wiley & Sons, p 752

Wang X, Frattini P, Crosta GB, Zhang L, Agliardi F, Lari S, Yang Z (2014) Uncertainty assessment in quantitative rockfall risk assessment. Landslides 11:711–722. https://doi.org/10.1007/s10346-013-0447-8

Van Westen CJ, Greiving S (2017) Multi-hazard risk assessment and decision making. In: Dalezios NR (ed) Environmental hazards methodologies for risk assessment and management. IWA Publishing, pp 31–94

WHO (2020) How to use WHO risk assessment and mitigation checklist for mass gatherings in the context of COVID-19. WHO/2019-nCoV/Mass_gathering_RAtool/20202, p. 4

Zadeh LA (1975) The concept of a linguistic variable and its applications to approximate reasoning, part I. Inf Sci 8:199–249

ZHA (2013) Methodology Zurich Hazard Analysis. www.zurich.com/riskengineering/global/services/strategic_risk_management/zha_services. Last Access 18 Apr 2013

Zhang D, Han J, Song J, Yuan Y (2016) A risk assessment approach based on fuzzy 3D risk matrix for network device. In: 2016 2nd IEEE international conference on computer and communications (ICCC), pp 1106–1110

Acknowledgement

The author would like to thank all the members of the risk team at ISTE, UNIL, in particular P. Limousin, for their great support, and Prof. M. Kanevski, who suggested using a Bayesian approach. I also warmly thank Prof. J. Locat for his comments and corrections. Part of this research was funded by the European Commission within the Marie Curie Research and Training Network ‘CHANGES (led by C. Van Westen): Changing Hydrometeorological Risks as Analysed by a New Generation of European Scientists’ (2011-2014, Grant No. 263953) under the Seventh Framework Programme.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Cite this chapter

Jaboyedoff, M. (2023). Consequence: Frequency Matrix as a Tool to Assess Landslides Risk. In: Alcántara-Ayala, I., et al. Progress in Landslide Research and Technology, Volume 2 Issue 2, 2023. Progress in Landslide Research and Technology. Springer, Cham. https://doi.org/10.1007/978-3-031-44296-4_5

DOI: https://doi.org/10.1007/978-3-031-44296-4_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-44295-7

Online ISBN: 978-3-031-44296-4

eBook Packages: Earth and Environmental Science (R0)