Abstract

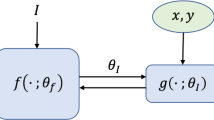

As an emerging paradigm, federated learning (FL) trains a shared global model through multi-party collaboration without leaking privacy, since no private data is transmitted between the server and the clients. However, it still faces two challenges: statistical heterogeneity and communication efficiency. To tackle both simultaneously, we propose pFedLHNs, which assigns each client both a small hypernetwork (HN) and a large target network (NN) whose parameters are generated by the hypernetwork. Each client pulls other clients’ hypernetworks from the server and aggregates them locally to personalize its target model; it exchanges only the small hypernetwork with other clients, via the central server, which reduces communication costs. In addition, the server aggregates the received local hypernetworks into a global hypernetwork and uses it to initialize newly joining out-of-distribution (OOD) clients for a cold start. Extensive experiments on three datasets with Non-IID distributions demonstrate the superiority of pFedLHNs in the trade-off between model accuracy and communication efficiency. Case studies confirm its tolerance to statistical heterogeneity and to new OOD clients.
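The idea of a small hypernetwork generating a large target network, with only the hypernetwork being exchanged and averaged, can be illustrated with a toy sketch. This is not the authors' implementation: the chunked linear hypernetwork, the function names, the sizes, and the aggregation coefficients below are all assumptions chosen for illustration, since the abstract does not specify the architecture or the aggregation weights.

```python
import random

def make_hypernetwork(num_chunks, chunk_size, embed_dim, seed):
    """Toy hypernetwork (assumed design): one shared linear map plus one
    small embedding per chunk of the target network's parameters."""
    rng = random.Random(seed)
    return {
        "embeddings": [[rng.uniform(-1, 1) for _ in range(embed_dim)]
                       for _ in range(num_chunks)],
        "weights": [[rng.uniform(-1, 1) for _ in range(embed_dim)]
                    for _ in range(chunk_size)],
    }

def generate_target_params(hn):
    """Generate the flat target-network parameter vector, chunk by chunk."""
    params = []
    for z in hn["embeddings"]:
        for row in hn["weights"]:
            params.append(sum(w * e for w, e in zip(row, z)))
    return params

def num_hn_params(hn):
    """Count the parameters that would be communicated."""
    return (sum(len(r) for r in hn["embeddings"])
            + sum(len(r) for r in hn["weights"]))

def average_hypernetworks(hns, coeffs=None):
    """Weighted average of hypernetwork parameters (uniform by default)."""
    if coeffs is None:
        coeffs = [1.0 / len(hns)] * len(hns)

    def avg(key):
        rows, cols = len(hns[0][key]), len(hns[0][key][0])
        return [[sum(c * h[key][i][j] for c, h in zip(coeffs, hns))
                 for j in range(cols)] for i in range(rows)]

    return {"embeddings": avg("embeddings"), "weights": avg("weights")}

# Three clients, each holding its own local hypernetwork.
clients = [make_hypernetwork(64, 64, 4, seed=s) for s in range(3)]

# Local aggregation on client 0 (coefficients are hypothetical).
personalized = average_hypernetworks(clients, coeffs=[0.5, 0.25, 0.25])

# Server-side aggregation into a global hypernetwork for cold start.
global_hn = average_hypernetworks(clients)

target = generate_target_params(personalized)
print(num_hn_params(personalized), len(target))  # prints 512 4096
```

In this sketch each client would transmit 512 hypernetwork parameters rather than the 4096 parameters of the generated target network, which is the source of the communication saving described above.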

This research is supported in part by the National Science Foundation of China under Grants 62141412, 62272253, and 62272252, and by the Fundamental Research Funds for the Central Universities.

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Yi, L., Shi, X., Wang, N., Xu, Z., Wang, G., Liu, X. (2023). pFedLHNs: Personalized Federated Learning via Local Hypernetworks. In: Iliadis, L., Papaleonidas, A., Angelov, P., Jayne, C. (eds) Artificial Neural Networks and Machine Learning – ICANN 2023. ICANN 2023. Lecture Notes in Computer Science, vol 14256. Springer, Cham. https://doi.org/10.1007/978-3-031-44213-1_43

DOI: https://doi.org/10.1007/978-3-031-44213-1_43

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-44212-4

Online ISBN: 978-3-031-44213-1

eBook Packages: Computer Science, Computer Science (R0)