Abstract

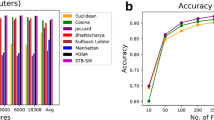

Data normalization is a vital preprocessing technique in which data is scaled or transformed so that features contribute equally. The success of classifiers such as the K-Nearest Neighbors (KNN) algorithm depends heavily on data quality when generalizing classification models. KNN is among the simplest and most widely used models for machine learning tasks, including text classification, pattern recognition, plagiarism and intrusion detection, ranking, and sentiment analysis. While KNN is built on similarity measures, its performance also depends strongly on the nature and representation of the data. It is commonly held in the literature that data must be normalized for KNN to achieve competitive performance. This raises a key question: which normalization method leads to the best performance? To answer it, this work investigates data normalization for KNN, a topic that has so far received little attention. We provide a comparative study of the impact of data normalization on KNN performance using six normalization methods: Decimal, L2-Norm, Max/Min, Std Norm, TFIDF, and BoW. Experimental results on eight publicly available datasets show that no single method dominates the others. However, L2-Norm, Decimal, and TFIDF obtained the best performance on most evaluation metrics (accuracy, precision, and recall). Moreover, run-time analysis shows that KNN runs most efficiently with BoW, followed by TFIDF.
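To make the comparison concrete, the following is a minimal sketch of four of the six normalization methods applied ahead of a plain KNN vote. It uses textbook definitions and is not the authors' implementation: the function names, the toy data, and the reading of "Std Norm" as z-score standardization are all assumptions made for illustration. TFIDF and BoW are left out because they are text representations rather than numeric rescalings.

```python
import math
from collections import Counter

def decimal_scaling(X):
    """Divide each feature by the smallest power of 10 that makes max(|x|) < 1."""
    scales = []
    for col in zip(*X):
        m, j = max(abs(v) for v in col), 0
        while m / (10 ** j) >= 1:
            j += 1
        scales.append(10 ** j)
    return [[v / s for v, s in zip(row, scales)] for row in X]

def l2_norm(X):
    """Scale each sample (row) to unit Euclidean length."""
    out = []
    for row in X:
        n = math.sqrt(sum(v * v for v in row)) or 1.0
        out.append([v / n for v in row])
    return out

def min_max(X):
    """Rescale each feature (column) to the range [0, 1]."""
    cols = list(zip(*X))
    lo, hi = [min(c) for c in cols], [max(c) for c in cols]
    return [[(v - l) / (h - l) if h > l else 0.0
             for v, l, h in zip(row, lo, hi)] for row in X]

def z_score(X):
    """Center each feature at 0 with unit (population) standard deviation."""
    cols = list(zip(*X))
    mu = [sum(c) / len(c) for c in cols]
    sd = [math.sqrt(sum((v - m) ** 2 for v in c) / len(c))
          for c, m in zip(cols, mu)]
    return [[(v - m) / s if s > 0 else 0.0
             for v, m, s in zip(row, mu, sd)] for row in X]

def knn_predict(train_X, train_y, query, k=3):
    """Majority vote among the k training points nearest to the query (Euclidean)."""
    nearest = sorted((math.dist(x, query), y) for x, y in zip(train_X, train_y))
    return Counter(y for _, y in nearest[:k]).most_common(1)[0][0]

# Hypothetical toy data: the second feature has a far larger scale than the first.
# The last row is the query point; the first four rows are labeled training samples.
samples = [[1.0, 20000.0], [2.0, 21000.0], [9.0, 20500.0],
           [10.0, 21500.0], [9.5, 20000.0]]
labels = ["a", "a", "b", "b"]

def classify(normalize=None):
    # For brevity the query is normalized together with the training rows.
    data = normalize(samples) if normalize else samples
    return knn_predict(data[:-1], labels, data[-1], k=3)

print(classify())         # a  (the large-scale feature dominates the distance)
print(classify(min_max))  # b  (both features now contribute comparably)
```

On this toy data the predicted label flips once the features are rescaled, which is the effect the study measures at scale. In a real experiment the normalization statistics would normally be computed on the training split only and then applied to the test data.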

Data Availability

The datasets used in this work are publicly available.

Acknowledgement

The authors would like to thank the Research Office of Zayed University for providing the facilities necessary to accomplish this work. This research has been supported by the Research Incentive Fund (RIF), Grant Activity Code: R22083, Zayed University, UAE.

Funding

This research has been supported by the Research Incentive Fund (RIF), Grant Activity Code: R22083, Zayed University, UAE.

Author information

Contributions

Both authors contributed to the conception and design of the study, the implementation of the approach, the analysis of all experimental results, and the preparation, writing, and revision of the manuscript.

Ethics declarations

The authors declare that they have no competing interests.

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Abdalla, H.I., Altaf, A. (2023). The Impact of Data Normalization on KNN Rendering. In: Hassanien, A., Rizk, R.Y., Pamucar, D., Darwish, A., Chang, KC. (eds) Proceedings of the 9th International Conference on Advanced Intelligent Systems and Informatics 2023. AISI 2023. Lecture Notes on Data Engineering and Communications Technologies, vol 184. Springer, Cham. https://doi.org/10.1007/978-3-031-43247-7_16

DOI: https://doi.org/10.1007/978-3-031-43247-7_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-43246-0

Online ISBN: 978-3-031-43247-7

eBook Packages: Intelligent Technologies and Robotics (R0)