Abstract

This chapter offers an introduction to lab-based science research by delving into the basics of experimental research planning: research designs, statistical power, and selection of the number of participants required for a planned experiment. The theoretical points are illustrated with elements from a pilot study on empathy and closeness in partnered dance developed at Goldsmiths, University of London, entitled ‘Pilot Studies on Empathy and Closeness in Mutual Entrainment/Improvisation vs. Formalised Dance with Different Types of Rhythm (Regular, Irregular, and No Rhythm) and Coupling (Visual, Haptic, Full Coupling): Building a Case for the Origin of Dance in Mutual Entrainment Empathic Interactions in the Mother–Infant Dyad’ (Balinisteanu, 2023).




What Is a Science Experiment?

In the seventeenth century, the Irish-born Fellow of the Royal Society in London, Robert Boyle (1627–1691), articulated a new practice of knowledge: arguing that the phenomena of nature can be reproduced in the laboratory, he promoted the idea that knowledge is based on facts witnessed independently (by researchers), and that the recording of facts should be free from subjective interference. Boyle thereby articulated the fundamental principles of modern objective science.

What Is a Science Laboratory?

A science laboratory is the space where an experiment takes place. In order to study a phenomenon, it must be imported into the laboratory space. This means that what is studied is not a phenomenon as it occurs, naturally, in nature. In the process of importing or translating it into the laboratory, many of the myriad conditions that create a phenomenon are lost. Hence, the validity of laboratory studies is limited by comparison to the conceivably infinite dimensions of a natural phenomenon. What is studied in the laboratory is only a number of facets, dimensions, or aspects of the phenomenon. Or a laboratory study can be directed at discovering what these dimensions, facets, or aspects might be. In spite of these limitations, laboratory studies have significantly advanced human knowledge in almost every aspect of our lives, from health to personal relationships, including, of course, the technology we have for entertainment, transport, communication, utilities, and so forth.

Point to take forward: A lab study has limited ecological validity because the studied phenomenon is not exactly the same as the phenomenon that occurs ecologically, that is, naturally, in our environment.

For example, if one wanted to study empathy, only certain dimensions, aspects, and facets of what we call empathy can be made manifest inside the lab space. Outside the lab, as all of us know, empathy is experienced in infinite ways in many contexts. To study those, we need critical, introspective thinking, whether we use the methods of, for example, philosophy, theology, or art. That is to say that some aspects of experience, or phenomena, remain outside of the purview of science. To have a complete understanding of a phenomenon and how we, humans, experience it, we need the rich methods and heritages of both the sciences and the humanities.

What/Who Is in a Lab Space?

A lab space contains an area where the phenomenon that is imported or translated from nature can be allowed to manifest. In that area, there may be sophisticated technologies that we use to recreate the phenomenon we want to study. In neuroaesthetics, which focuses on the human psyche’s involvement with art, that area will almost always be occupied by the subjects or participants in the experiment. The studied phenomenon is the psychological experience of these participants, to the extent that we create conditions for this experience to become manifested. For example, if we wish to study empathy, we must create conditions for inducing the experience of empathy in our participants’ psyche. Pondering these lab limitations, as previously pointed out, one may already notice how different this experience will be from that experienced in the natural environment. Nevertheless, contemporary research has evolved to a stage where psychological experiences can be induced in such a way that they are very close to the experiences lived outside the laboratory, in the ‘real’ world. In the particular case of neuroaesthetics research, we study the neuropsychological mechanisms involved in the perception and creation of various forms of art. Hence, the kind of phenomena that we need to translate, in the sense of transporting from one place (the world) to another (the lab), pertain to aesthetic experience. For example, we may have to translate or import dance from the communities where it takes place naturally into the much more strictly controlled space of the lab. We can do this by creating conditions, in the lab, for parts or dimensions or aspects of naturally occurring dance to take place so that these parts, dimensions, aspects can be witnessed by researchers and measured.

Point to take forward: A laboratory is a space into which researchers can translate, transport, or import phenomena that occur in the natural environment, for the purpose of measuring their aspects, dimensions, or parts under strictly controlled conditions.

Thus, in addition to the space where the phenomenon we wish to study can be recreated, a lab space contains measuring instruments. There exists a wide range of instruments for measuring psychological phenomena. Most psychological phenomena can be measured behaviourally or neurophysiologically. Commonly encountered neurophysiological measuring instruments include electroencephalographs (EEG), functional magnetic resonance imaging (fMRI) scanners, heart rate variability (HRV) measuring systems, pupillometers, and wristbands that collect HRV data as well as galvanic skin response (GSR) and movement acceleration data (using accelerometer sensors), to mention only a few among many other fairly sophisticated devices.

As for behavioural measures, researchers mostly use questionnaires, which are also referred to as instruments. For example, if one wanted to measure empathy, one could use the Interpersonal Reactivity Index (IRI) instrument (Davis, 1983). The Interpersonal Reactivity Index is a 28-item questionnaire consisting of 4 subscales, with each subscale comprising 7 items. These 4 subscales measure 4 different dimensions of dispositional empathy: empathic concern (EC) measures the feeling of compassion for another individual who experiences distress; perspective-taking (PT) measures cognitive, as opposed to emotional, empathy; personal distress (PD) is a self-focused measure of one’s feeling of distress when confronted with a situation in which other individuals are in distress; fantasy (F) measures one’s capacity to empathise with fictional characters, such as may be encountered in narrative stories or films. The participants to whom the questionnaire is administered respond to each item by reporting the extent to which the situation described in the item text is representative of their own experience. Each response is recorded on a five-point Likert scale, from A to E, with two labelled anchors (A = Does not describe me well; E = Describes me very well). A and E can be assigned minimal and maximal values: for example, A is usually assigned a value of 0 and E a value of 4. The IRI instrument measures dispositional empathy separately on each of the 4 subscales; it is not intended as a means of measuring or assessing total empathy. Thus, when analysing the collected data (the scores each participant recorded for each item), one should not analyse the sum of scores obtained on all 28 items but, separately, the scores recorded on each of the 4 subscales. The IRI instrument is a trait measure. This means that it measures chronic tendencies which are relatively stable over time. Hence, this instrument is not suitable for measuring the feelings one experiences in the moment. To measure the feelings that participants report experiencing in the moment, we need a state measure.
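The subscale-by-subscale scoring rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the `SUBSCALES` item-to-subscale key below is hypothetical (it simply groups items 1–7, 8–14, and so on), whereas the published Davis (1983) key assigns different item numbers and reverse-scores some items, which this sketch does not do. The names `score_iri`, `LETTER_TO_SCORE`, and `SUBSCALES` are invented for illustration.

```python
# Sketch of IRI subscale scoring (assumptions flagged below).
LETTER_TO_SCORE = {"A": 0, "B": 1, "C": 2, "D": 3, "E": 4}

# HYPOTHETICAL item-to-subscale key, for illustration only: the published
# Davis (1983) key assigns different item numbers and reverse-scores some
# items, which this sketch does not do.
SUBSCALES = {
    "EC": range(1, 8),
    "PT": range(8, 15),
    "PD": range(15, 22),
    "F": range(22, 29),
}


def score_iri(responses: dict[int, str]) -> dict[str, int]:
    """Sum the 0-4 item scores within each subscale (each sum ranges 0-28).

    No grand total across the 28 items is returned, because the IRI is
    analysed subscale by subscale.
    """
    if set(responses) != set(range(1, 29)):
        raise ValueError("expected a response for each of items 1-28")
    return {
        name: sum(LETTER_TO_SCORE[responses[item]] for item in items)
        for name, items in SUBSCALES.items()
    }


# Example: a participant who answers 'C' (scored 2) to every item.
answers = {item: "C" for item in range(1, 29)}
print(score_iri(answers))  # → {'EC': 14, 'PT': 14, 'PD': 14, 'F': 14}
```

Returning a dictionary of four sums, rather than a single number, builds the "do not analyse the total" rule directly into the data structure.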

An example of an instrument that we can use for recording state measures in the situation we have been describing, one in which we wish to measure feelings of empathy and closeness, is the Inclusion of Other in the Self (IOS) instrument (Aron et al., 1992). The IOS instrument is a pictorial measure designed to capture the feeling of closeness between two individuals. It shows seven pairs of circles, one circle representing the self and the other representing the other person, with the degree of overlap increasing from non-overlapping to nearly completely overlapping. The participants are asked to select which of the seven images best represents the closeness they feel in relation to another individual.

Pause and Think Question: How would you use these two instruments, IRI and IOS, in a study on dance?

If we decided to use the IRI and IOS instruments to study dance, we would have to administer the questionnaires to our participants. Equally important, we would have to find a way to translate or import dance into the lab from the natural environment where it occurs, an environment in which a rather large number of conditions make its manifestation possible (examples of such conditions include the day of the week, say, a Sunday in a traditional village when people are at leisure and there is a festive day, and many other factors, such as the presence of a band playing instruments, the weather, and the condition of the dancers, i.e. whether they are fit for dancing and in a vibrant mood, and so on and so forth). Of course, we will not be able to recreate all these conditions (and more) in the lab. But we do not have to. Suppose that our experience (and a large body of previous research) tells us that people dance because it helps the community stick together. In other words, dance fosters pro-social behaviour. If this is the significance of the problem or question we wish to address, the next step in outlining our research plan is to ask how dance fosters pro-social behaviour: what psychological mechanisms are involved? We may decide (again, based on our intuitions and life experience, but, importantly, also on previous research) to develop the hypothesis that dance fosters pro-social behaviour because it has an effect on empathy and closeness. We can measure this effect by using the IRI and IOS instruments.

Point to take forward: To study a phenomenon we have imported into the lab, we need to assess its significance. This helps us devise a hypothesis, which in turn helps us choose instruments for measuring how various factors that are inextricable from the phenomenon affect its relevant aspects, dimensions, or parts.

What will we, in fact, measure? This question is more difficult than it seems. The answer has to do with the fact that we have decided to import a phenomenon into the laboratory space and apply a number of measures to it (in our example, scores measured using the IOS and IRI instruments). When we import or (literally!) translate a phenomenon into the lab space, we treat it as a construct. For example, empathy, when it occurs in the world outside the lab, is first intuited as a distinct subjective experience. That is to say that, first, we notice the existence of a phenomenon which feels (is experienced) like something that has its own specificity: our subjective (not objective!) experience tells us that we have come across something that is important in its own right. We may then decide to look for an explanation for this distinctiveness and name it in our subjective minds. And so it is with empathy.

According to Montag et al. (2008), the German philosopher Theodor Lipps (1851–1914) was the first theoretician of empathy and a scholar admired by none other than Sigmund Freud (1856–1939). Lipps was fascinated by the fact that part of humans’ experience of observing geometrical shapes is a tendency to ‘fill’ these shapes with a sense of life, a process he referred to using the term Einfühlung, coined in 1873 by another German philosopher, Robert Vischer (1847–1933). Einfühlung literally means ‘in-feeling’. Lipps extended his observations to situations where humans watch other humans, or combinations of geometrical shapes and other humans contained in the same tableau. A specific subjective experience Lipps often used as an example is that of a spectator watching an acrobat performing on a tightrope. The spectator tends to mirror internally what he or she imagines the acrobat experiences. The spectator ‘fills in’ with feeling the image that he or she perceives. The spectator’s experience of this ‘inner imitation’ was eventually deemed by the wider scholarly community to be sufficiently distinct from sympathy to merit its own name, translated into English as ‘empathy’ and then adopted as a neologism into many other languages (for example, the Romanian word ‘empatie’). Thus, outside the laboratory, a feeling was experienced that seemed sufficiently distinct from other feelings to be given its own name. Therefore, when we import or translate this feeling into the laboratory, we can say that we study empathy. However, what we really study is a construct. When Lipps and others named an observed phenomenon, a feeling, or a subjective experience, using the word Einfühlung or, later, ‘empathy’, they construed that feeling as empathy. To overcome the subjectivity of this construal, and so be able to study this subjective experience using the objective instruments of science, we must find the dimensions of this construct that we can measure.
What we will in fact measure are the dimensions of a construct, an act that differs only in degree from measuring a geometrical shape with a ruler (note that geometrical shapes are also constructs, that is, shapes abstracted from our visual experience of the world around us). As noted earlier, and now with deeper insight, the dimensions of empathy that the IRI instrument measures are empathic concern (EC), perspective-taking (PT), personal distress (PD), and fantasy (F). Hence, one might say that, if empathy had a physical geometrical shape, it would have these four dimensions. But, of course, feelings do not have geometrical shapes (or do they?). Nevertheless, note the following point to remember.

Point to take forward: What is measured in a scientific laboratory is always a construct that has a number of clearly identified dimensions that can be measured, and for which measuring instruments are available.

We have so far ‘established’ a laboratory space, we have settled on complementary phenomena that we wish to translate or import into that space in order to study them scientifically (empathy and closeness in partnered dance), and we have chosen a number of instruments (IRI and IOS) which helped us to clarify which dimensions of the phenomena-turned-constructs we wish to measure in light of the significance of our research question, namely that partnered dance increases empathy and closeness, thereby fostering pro-social behaviour. What we now need in order to proceed is a research design.

What Is a Research Design?

A research design is a set of conditions we create in the lab in order to test those dimensions of a construct derived from a phenomenon that we deem important in light of the significance of our research question. As regards our example of studying the constructs empathy and closeness in order to see whether they increase in partnered dance thereby fostering pro-social behaviour, what we now need is to establish a number of conditions that allow us to disentangle various aspects of dance in order to see how each of them contributes to, or affects, the experiences of closeness and empathy. We therefore need a bit more knowledge about types of research designs. There are many of these, ranging from fairly simple to extremely complex. Here we will introduce some of the more basic designs. However, these research designs should not be regarded as less valuable than more complex designs, as they can certainly lead to sophisticated analyses, and some have been known to overturn established theories by virtue of their sheer simplicity. A really helpful guide, used here, is that developed by Stangor and Walinga (2014), freely available online under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. The link can be found by consulting the reference list.

Types of Research Designs

Research designs fall within three main categories: descriptive, correlational, and experimental.

Descriptive research designs employ qualitative methods, being focused on the quality of a participant’s subjective experience. The word ‘quality’ indicates that we focus less on measurable quantities, such as the mathematical values of dimensions of psychological constructs, and more on how a psychological experience is made sense of subjectively. Descriptive research captures the ‘state of affairs’ (of the heart, or the soul, so to speak) as described by a participant who reports his or her understanding of what they are experiencing or have been experiencing (in other words, they report where they are in the process of making sense of that experience). Usually, this information is collected through using various interview techniques (for example structured or semi-structured interviews). This information is analysed using a complex set of methods which allow researchers to code the information they have recorded during the interviews and establish hierarchical and overarching themes. Interestingly, it is sometimes discovered that some of these themes escaped the awareness of the participants themselves. As regards our research example, if one wanted to do qualitative descriptive research on how dance affects empathy and closeness, one would have to establish criteria for selecting a participant sample, then recruit participants, then devise an interview programme, perhaps asking participants how they would describe their feelings of empathy and closeness immediately after they have been engaged in a performance as dancers. After that, researchers would parse the material gathered from each and every interviewee (usually more than one researcher does that separately and some corroboration work is undertaken at the end of this process). Eventually, overarching themes governing relevant sub-themes will emerge. 
The researchers would thus understand the governing theme that dominates the experience of empathy during dance, with the important limitation that this understanding is derived from subjective accounts, emerging from each participant’s personal (subjective) insight into what has happened to them. Although researchers who employ this type of research design strive for objectivity, for many scientists it remains, well, subjective, and therefore less fit to advance the aim of uncovering objective dimensions of experience. Still, even the more scientifically minded researchers acknowledge the extremely important fact that the topics of objective science must be drawn from subjective experience of the real world. And, at least as far as the psyche is concerned, we can only know the real world to the extent that it is registered in our minds, that is, to the extent that a phenomenon we have witnessed leaves us with more or less rich sets of subjective impressions. Yet, as previously mentioned, we can construe measurable dimensions for those impressions, and we can do so in ways that preserve the richness and relevance of subjective experience.

Correlational research designs employ quantitative research methods. Once we start thinking about quantitative research methods, we must start thinking about variables in more precise ways. So far we have insisted on the idea that, in order to undertake a scientific study of a phenomenon, we must establish and measure the dimensions of that phenomenon as we have construed them for the purposes of lab research. That means that we have envisioned that those dimensions will become manifest in the lab and that we could account for their manifestations, by measuring them, as they occur in the participants’ psychological life. However, no two participants are exactly alike. Hence, we will encounter some variation in our measurements. Thus, it now turns out, what we can measure are not the dimensions of a perfect construct, as if that construct could exist somehow apart from a participant’s individual psychological life. What we can measure are dimensions whose values vary, however little, from person to person. In other words, we measure the variance of one variable, or of sets of variables (that is, of dimensions that cannot remain fixed, but change according to the individual psychological universe of each experiment participant and, beyond the lab, of each human person).

We may have decided that it is in the interest of addressing our research questions to explore the relevant phenomenon from several angles, using different types of instruments that come with their own sets of conditions, that is, with constraints specific to one instrument or another. In our example, we already decided that we will study empathy and closeness in partners moving together using the IRI and IOS instruments. A major constraint that comes with this type of behavioural instrument results from one of its main characteristics, namely that it relies on self-report questionnaires. Importantly, the data we collect are not gathered directly through unmediated contact with the dimensions of a phenomenon (or, to use a more or less inspired phrase, scooped directly from the participants’ minds). The data are in fact provided by the participants in their self-reports in response to our questionnaire items. Nevertheless, we can trust this information because behavioural instruments are validated on large samples and in a variety of contexts; that is, across many, many participants, responses vary in sufficiently similar ways that very few participants end up as outliers.

Mental note: In statistical analysis, an outlier is a value in our data that sits awkwardly outside the homogeneous cluster containing most of the other values.
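The chapter does not prescribe a rule for deciding when a value "sits awkwardly outside" the cluster. One widely used convention, swapped in here purely as an illustration, is Tukey's fences: a value is flagged if it lies more than 1.5 interquartile ranges below the first quartile or above the third. The closeness ratings below are hypothetical, and the function name `tukey_outliers` is invented for this sketch.

```python
import statistics


def tukey_outliers(values, k=1.5):
    """Return values lying outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartiles (Python 3.8+)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical closeness ratings (1-7) from ten participants.
scores = [4, 5, 5, 4, 6, 5, 4, 5, 1, 5]
print(tukey_outliers(scores))  # → [1]
```

Here the quartiles are 4 and 5, so the fences sit at 2.5 and 6.5, and only the rating of 1 falls outside them. Whether such a value should be excluded, kept, or investigated is a separate decision that the fence rule alone does not settle.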

Let us suppose, however, that we have read the scientific literature and come across the notion that there may exist neurophysiological markers for empathy (see for instance Deuter et al., 2018). Diving deeper into the scientific literature, we may conclude that these include heart rate variability (high-frequency and low-frequency heart rate variability, that is, HF-HRV and LF-HRV), P300 amplitudes recorded using electroencephalographs (EEG), and pupil size reactivity. In the locus coeruleus–noradrenaline (LC-NE) arousal system, intermediate LC tonic firing and higher phasic firing engender higher cognitive control, and evidence of higher cognitive control can be found in large P300 amplitudes and high HF-HRV (0.15–1.04 Hz) in the context of higher arousal (larger pupil size). Where we find this pattern, we may conclude that our participants experience less affective empathy but more cognitive empathy. Conversely, when low LC tonic firing and intermediate phasic firing are present, along with intermediate P300 amplitudes, high HF-HRV, and closer-to-normal pupil size, we may conclude that our participants’ experience of empathy is more affective, or emotional (Faller et al., 2019; Patel & Azzam, 2005). Let us then suppose that we have decided to use EEG to measure P300 amplitudes, to see whether the values we obtain are correlated with the results obtained using our behavioural instruments. In doing so, we will have adopted a correlational research design.

Mental note: The P300 is an event-related potential (ERP) component, a transient brain response that is notably difficult to ‘catch’ in a single recording. Because it is transient, we need to repeat the events that might trigger it many times, thus producing a series of ERPs that can be averaged and analysed together. We will not discuss ERP research designs on this occasion. Do remember, however, that the P300 reflects the mental process of evaluation and categorisation, being a measure of cognitive control, or, rather, attention, and only indirectly and hypothetically a measure of cognitive empathy.

Let us underline a point the importance of which cannot be overestimated, one that is often given pride of place in psychology blogs, online groups, or even a lecturer’s academic office: Correlation does not imply causation. If two variables are correlated, all that this means is that the values measured with one set of instruments vary together with the values observed using another set of instruments. The variables change at the same time and in a similar way, but we cannot infer from that alone that the two observed variations have the same cause, nor can we say that one variable determines the other.

Point to take forward: Correlation does not imply causation.

Thus, in our example, we cannot say, based solely on correlations, that the event evidenced by larger P300 amplitudes causes the event that leads to a participant scoring higher for perspective-taking, or the other way around (perspective-taking is one of the two ‘behavioural’ dimensions of cognitive, as opposed to affective, empathy upon which the IRI instrument is based). All that we can say is that we observed higher perspective-taking scores in experiment participants for whom we also observed larger amplitudes in the P300 signal that we recorded using EEG. When we say ‘event’ here, we mean the interaction between a participant and one or, as is usually the case, more stimuli, so that in fact more than one event takes place (one event may be caused by rhythm, another by seeing or not seeing the dance partner’s face, another by holding or not holding hands). To clarify the similarity in variation, we need to know exactly which event leads to which variation, but correlational studies cannot tell us more than that there is a relationship between the variations registered using what are ultimately incommensurable instruments (subjective reports and objective instrumental recordings in our example).

We can, however, venture to predict that, given closely similar conditions, the correlation will be observed again and again; in other words, the similarity in variance is not a coincidence. When we do so, if we have only two variables, we must refer to one of the variables as the predictor variable and to the other variable as the outcome variable. In other words, we could hypothesise that the existence of variance in variable Y (predictor variable) predicts the existence of variance in variable X (outcome variable) (Stangor & Walinga, 2014, 108). In our example, we could hypothesise that larger amplitudes in the P300 signal predict higher scores on the perspective-taking subscale of the IRI instrument. If we measured and processed P300 values and PT scores for 7 participants, and standardised the values to make them mathematically commensurable, we could represent this graphically as in Fig. 1 (note that the graph is intended solely for the purpose of illustration and that it is not based on actual collected data):

In this fictional graph, each dot represents one experiment participant whose P300 amplitudes have supposedly been collected and processed, and who provided self-reported scores on the IRI perspective-taking subscale. The respective values would have been mathematically transformed so that the PT scores are commensurable with the values obtained by recording the P300 signal. We notice that the higher a participant’s P300 amplitude (on the Y axis), the higher that participant’s PT score (on the X axis). Let us keep track of these values by using a table (Table 1):

Note the straight line in Fig. 1. It indicates that the two variables are linearly correlated. The strength and direction of the linear relationship between two variables can be measured using the Pearson correlation coefficient, or Pearson’s r. We can calculate Pearson’s r for our fictive data using SPSS, a statistical analysis software package. First, we need to define our variables in the SPSS Variable View sheet, and then enter our data in the Data View sheet. The result will look like this (Fig. 2):

We calculate Pearson’s r by clicking Analyze > Correlate > Bivariate. In the ensuing screen, we select our two variables from the window on the left and transfer them to the window on the right, obtaining the result shown in Fig. 3. Checking that the option Exclude cases pairwise is selected under Options, we click Continue and then OK. We will obtain the result shown in Table 2.

We can now trace the intersection of P300 with PT to find out Pearson’s r, which in our fictive example has a value of r = 0.959. The result also tells us that the correlation is highly significant at p < 0.001. We will discuss the importance of the p-value in more detail in the next section.
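For readers working outside SPSS, the calculation behind Pearson’s r, and the check that r = 0.959 with 7 participants is consistent with p < 0.001, can be sketched in plain Python. This is an illustrative sketch, not what SPSS runs internally. The function names are invented, the values passed to `standardise` are hypothetical (Table 1’s data are not reproduced here), and the critical value 6.869 is the standard tabled two-tailed t for α = 0.001 with 5 degrees of freedom.

```python
import math
import statistics


def pearson_r(x, y):
    """Pearson's r: the sum of cross-products of deviations divided by
    the square root of the product of the two sums of squared deviations."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two equal-length samples with at least 3 pairs")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def standardise(values):
    """Convert raw values to z-scores (mean 0, sample SD 1)."""
    m, sd = statistics.mean(values), statistics.stdev(values)
    return [(v - m) / sd for v in values]


def t_from_r(r, n):
    """t statistic for testing r against zero, with n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1 - r ** 2))


# Standardising puts both variables on a common scale for plotting,
# but leaves Pearson's r unchanged.
x, y = [2, 4, 5, 7, 9], [1, 3, 2, 5, 4]  # hypothetical values
assert math.isclose(pearson_r(x, y), pearson_r(standardise(x), standardise(y)))

# The chapter's fictive result: r = 0.959 with n = 7 participants (df = 5).
t = t_from_r(0.959, 7)
print(f"t(5) = {t:.2f}")  # → t(5) = 7.57
```

Since 7.57 exceeds the tabled two-tailed critical value of 6.869 (α = 0.001, df = 5), the reported p < 0.001 is arithmetically consistent with r = 0.959 and n = 7.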

As a rule of thumb, two variables are strongly correlated in a linear relationship when the absolute value of r lies between 0.5 and 1. Pearson’s r can take any value from −1 to +1. A value of 0 indicates that there is no linear association between the two variables. A value greater than 0 indicates a positive association; that is, as the measured values of one variable increase, so do the values of the other variable. A value less than 0 indicates a negative association; that is, as the values of one variable increase, the values of the other decrease. The sign of r gives the direction of the correlation, and its absolute value gives the strength. Whether the correlation is statistically significant is indicated by the Sig. value, or p-value. A small p-value means that a correlation as strong as the one observed would be unlikely if there were no association in the population; it does not by itself mean that the relationship is stronger. To assess the strength of a correlation according to the Pearson correlation coefficient, the following scale can be used:

1. High degree: r lies between ±0.50 and ±1, strong correlation.
2. Moderate degree: r lies between ±0.30 and ±0.49, medium correlation.
3. Low degree: r lies below ±0.29, small correlation.
4. No correlation: r is zero.
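The rule-of-thumb scale above can be expressed as a small labelling function, which makes explicit that strength depends on the absolute value of r while the sign only gives direction. One assumption is needed: the scale leaves small gaps (between 0.29 and 0.30, and between 0.49 and 0.50), so this sketch places the cut-offs at exactly 0.30 and 0.50. The function name `correlation_strength` is invented for illustration.

```python
def correlation_strength(r: float) -> str:
    """Label Pearson's r with the rule-of-thumb scale (|r| sets the degree)."""
    if not -1.0 <= r <= 1.0:
        raise ValueError("Pearson's r must lie between -1 and +1")
    size = abs(r)
    if size == 0:
        return "no correlation"
    # Assumed cut-offs at exactly 0.50 and 0.30 to close the scale's gaps.
    if size >= 0.50:
        degree = "high"
    elif size >= 0.30:
        degree = "moderate"
    else:
        degree = "low"
    direction = "positive" if r > 0 else "negative"
    return f"{degree} ({direction})"


print(correlation_strength(0.959))  # → high (positive)
print(correlation_strength(-0.35))  # → moderate (negative)
print(correlation_strength(0.0))    # → no correlation
```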

In our fictive example, we calculated that the two variables are strongly and positively correlated. If only that were really so!

Experimental research designs employ quantitative research methods. They are used to draw conclusions about the causal relationship between two or more variables. This involves manipulating one or more variables in order to compare the different effects they have on one or more other variables. The difference in effect is caused by the different conditions we create through our manipulation.

Hence, the role of our manipulation is to enable us to control one or more variables in order to disentangle their effects and specify objectively how a variable affects another one or more variables. The variable we control is called an Independent Variable (IV). The variable registering, in its variance, the effect of the IV, is called a Dependent Variable (DV). For an experimental study to work, we must clearly specify our IVs and DVs. In our example, focused on studying the effect of dance on empathy, the IV can be rhythm, and the DV can be closeness. We can have more than one DV, of course. In our example we have two behavioural DVs, empathy and closeness, which we can measure using the IRI and IOS instruments, respectively. If we decided to pursue our exploration of how dance rhythm effects a change in P300 amplitudes, we would have a third DV, a ‘neurophysiological’ DV, which we could name ‘P300’. This would enable us to combine a correlational study with an experimental one. We will not focus on neurophysiological DVs here, but only on behavioural DVs.

Our IV can have several levels, according, for example, to the type of rhythm whose effect on closeness and empathy we wish to explore. There is, of course, more than one rhythm type in our natural world; for example, we can easily distinguish between regular and irregular rhythm. In the lab work proposed below, we will learn how to study and analyse the effect of a two-level IV on two DVs. The IV will be rhythm type with two levels: regular rhythm and irregular rhythm. The DVs will be closeness and empathy. Our data will be collected using the IOS and IRI questionnaires, respectively.

Start thinking: what type of dance, or form of moving together, would you be interested in exploring? Options include partnered dance in pairs, group dance in a circle, lines of dancers facing one another, and others, but, to ensure representativeness, it may be a good idea to choose one of these three, as they are the most commonly encountered forms of moving together.

Now that we have clarified what our IV and DVs are, and what instruments we will use to measure them, in addition to securing sufficient lab space, we must start thinking about the stimuli we will use, and the experiment participants that we need to enable us to carry out our study.

Stimuli

Stimuli are artificial (in the sense of man-made, or, more precisely, researcher-made) recreations of those elements of a naturally occurring phenomenon that we wish to isolate in order to study their effects on other dimensions, aspects, and elements of the phenomenon. Do remember that a ‘global’ phenomenon is composed of many phenomena and that what we include in the ‘globe’ is chosen with a degree of subjectivity, as when we created the construct ‘empathy’ with its measurable dimensions. This ‘globe’, which presumably contains the phenomenon we wish to study, is but a slice of natural reality. Natural reality is much more fluid and subject to myriad varying forces. It often takes a lot of ingenuity to create appropriate stimuli. However, things are easier in the context of the example we have been following. Since our IV levels are regular rhythm and irregular rhythm, all that we need to do is obtain separate recordings of these rhythms.

This can be done using specialised software, such as GarageBand. In our lab demonstration, we will use two soundtracks obtained by programming a 4/4 (regular) rhythm and a 7/8 (irregular) rhythm in GarageBand. Since our focus is rhythm, no other instrument was programmed, in order to avoid bringing in more variables that are difficult to control, such as pitch, melodiousness, and harmoniousness. However, it is important to achieve a compromise between a tightly controlled stimulus and real-life situations. For this reason, the rhythm on our soundtracks will be played with a conga drum sound. While this sound is synthetic (electronically synthesised), it closely approximates the type of sound humans must have heard since the dawn of time, given that drums, and percussion generally, are likely among the first instruments and sound techniques produced in our civilisations.

Importantly, in order to keep a tight leash on our experimental conditions, that is, in order to control the IV as much as possible, both tracks will have exactly the same duration and will be played over exactly the same audio devices. The experiment itself will take place in the same room for both the regular rhythm and the irregular rhythm conditions, again to minimise the influence of extraneous variables that could affect our DVs. Note that even the slightest variation in our experimental set-up can become an extraneous variable. In our case, for example, although we will use an audio system to play the soundtracks, the movement of our participants’ feet on the floor (stepping) can create an additional layer of rhythm. That is acceptable if all our participants, across conditions, move on the same floor. We could, additionally, ask the participants to remove their shoes and move barefoot or in socks.
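One way to check that both tracks have exactly the same duration is to read their lengths programmatically. The sketch below assumes that the two GarageBand tracks have been exported as uncompressed PCM WAV files, which Python's standard `wave` module can read; the function names and the tolerance are illustrative choices.

```python
import wave

def wav_duration_seconds(path):
    """Duration of a PCM WAV file in seconds (number of frames / sample rate)."""
    with wave.open(path, "rb") as w:
        return w.getnframes() / w.getframerate()

def same_duration(path_a, path_b, tolerance=0.001):
    """True if the two tracks differ in length by at most `tolerance` seconds."""
    return abs(wav_duration_seconds(path_a) - wav_duration_seconds(path_b)) <= tolerance
```

For example, `same_duration("regular_4-4.wav", "irregular_7-8.wav")` (hypothetical file names) should return `True` before the tracks are used in the lab; the one-millisecond tolerance is an arbitrary choice and can be tightened to zero if the exports are sample-identical in length.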

Since we now have our stimuli, lab space, a set of measuring instruments, a choice of research design, and a specification of our IVs and DVs, we can focus next on a central aspect of our experiment: the participants.

Between-Subjects and Within-Subjects Designs

Well, we haven’t quite finalised our research design until we decide whether it will be a between-subjects or within-subjects design. This choice affects the number of participants we need for our study. A between-subjects experimental design is one in which different participants are tested in each condition. In our example, if we chose a between-subjects design, we would need to recruit a higher number of participants, as we would have to employ different (teams of) participants in each of our two conditions. In other words, the dancers who move together in the regular rhythm condition will be different people from the dancers who move together in the irregular rhythm condition. If we were to employ a within-subjects design, the same dancers who moved together in the regular rhythm condition would participate, in the exact same set-up, in the irregular rhythm condition (preserving their position in space, their partner, etc.). A within-subjects research design is therefore one in which the same participants are tested in all of our experimental conditions.

Points to take forward:

- In a between-subjects design, different participants undertake trials in each condition.
- In a within-subjects design, the same participants undertake trials in all conditions.
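The consequence of this choice for recruitment is simple arithmetic, sketched below with a hypothetical helper. It assumes a fixed number of dancers per condition and, as in our example, that dancers move in pairs.

```python
def participants_needed(dancers_per_condition, n_conditions, design):
    """Total number of people to recruit (hypothetical helper for illustration)."""
    if dancers_per_condition % 2:
        raise ValueError("dancers move in pairs, so use an even number per condition")
    if design == "between":
        return dancers_per_condition * n_conditions  # new people in every condition
    if design == "within":
        return dancers_per_condition  # the same people take part in every condition
    raise ValueError("design must be 'between' or 'within'")

print(participants_needed(20, 2, "between"))  # 40
print(participants_needed(20, 2, "within"))   # 20
```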

While within-subjects designs generally have greater statistical power than between-subjects designs with the same number of participants, we must choose carefully which of the two is more appropriate for the experiment we wish to carry out. For example, if we are interested in finding out how a proposed intervention affects a population over a period of time, a within-subjects design serves our research better. If we wish to study the efficacy of a treatment on a specific sample of participants (e.g. people suffering from a certain condition), one way to do so is to administer the treatment at set time intervals to the same participants. The statistical analyses of our results will give us an indication of the efficacy of the treatment or intervention, which we can then compare with a control group (that is, a group containing the same number of participants as the treatment group, but whose members did not receive the treatment).

However, when the perception of art is the focus of our research, as is the case in neuroaesthetics, we must consider a number of other issues. To better understand the advantages of a between-subjects approach, we may focus on error variance. Error variance is the variance due to extraneous variables that we have not taken into account. It is also called residual or unexplained variance, that is, variance that we cannot explain because we cannot attribute it to the variables we have chosen to study (in our example, error variance would be variance that cannot be attributed to either regular or irregular rhythm, and may come from differences between participants within their group, such as previous dance expertise). Therefore, we are interested in showing that the proportion of variance attributable to our intervention in each condition (e.g. moving together on regular as opposed to irregular rhythm) is sufficiently large to distinguish the groups even when there exists variance attributable to extraneous variables within each group. If that were the case, and given that dance is a widespread cultural phenomenon (that is, not specific to a set of individuals, such as people suffering from a certain medical condition), the credibility of our results would be strengthened.
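The idea of separating variance attributable to our conditions from error variance can be made concrete by splitting the total sum of squares into a between-group part and a within-group (error) part. The ratio of the between-group part to the total, commonly called eta squared, is one standard measure of the proportion of variance attributable to the conditions. The IOS scores below are invented for illustration, and the sketch is descriptive only; it does not replace a significance test.

```python
def variance_decomposition(groups):
    """Split the total sum of squares into between-group and within-group parts."""
    scores = [s for g in groups.values() for s in g]
    grand_mean = sum(scores) / len(scores)
    ss_between = 0.0
    ss_within = 0.0
    for g in groups.values():
        group_mean = sum(g) / len(g)
        # Variance linked to the condition: distance of the group mean from the grand mean
        ss_between += len(g) * (group_mean - grand_mean) ** 2
        # Error variance: spread of individuals around their own group mean
        ss_within += sum((s - group_mean) ** 2 for s in g)
    ss_total = sum((s - grand_mean) ** 2 for s in scores)
    return ss_between, ss_within, ss_total

# Hypothetical post-dance IOS scores (1 to 7) for two rhythm groups; not real data
groups = {
    "regular": [5, 6, 5, 7, 6, 5],
    "irregular": [3, 4, 4, 5, 3, 4],
}
ss_b, ss_w, ss_t = variance_decomposition(groups)
eta_squared = ss_b / ss_t  # proportion of total variance linked to the condition
print(round(eta_squared, 2))  # 0.62
```

Individual differences such as previous dance expertise would show up in `ss_w`; the larger `ss_b` is relative to it, the more clearly the two rhythm groups stand apart.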

One other important issue we need to consider when we scientifically study the mechanisms involved in the perception and creation of art is learning and transfer across conditions. If, as in our example, we want to study the effect of rhythm on closeness and empathy in partnered dance in pairs, we should consider that the longer two individuals spend together, the more empathic they may become toward one another, and the more their feeling of closeness vis-à-vis their partner may increase. In studies of other forms of art, such as visual art (e.g. paintings), the participants in a within-subjects design may be primed by sets of images they have previously seen as part of one experimental condition, so that their responses to a different set of images may be biased. In such a case, we might be better off choosing a between-subjects design, unless, of course, we are interested precisely in examining that bias.

Let us then choose, for our lab experiment, a between-subjects design, partly because we want to see through the error variance caused by extraneous variables, and partly because we wish to avoid individuals being biased by the time they spend together in terms of their empathy and closeness responses. However, this does not mean that we cannot do a within-subjects analysis as part of our experimental design. We can include a within-subjects dimension in our experiment without affecting the benefits we derive from our between-subjects design.

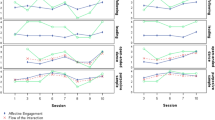

Specifically, we are interested in finding out whether the length of time two participants spend together in any one condition has an effect on their experience of empathy and feeling of closeness. This is not the same time-length issue highlighted above. There, we wanted to avoid participants becoming too familiar with each other by moving together across all conditions, which would mean a much longer period of time than the one on which we focus here. Here, we are simply concerned with the possibility that even, say, a seven-minute period may be sufficient for the participants’ ‘togetherness’ to have an effect on our DVs. Thus, and this might have been mentioned earlier (think: why?), we will have to take their IRI and IOS measures both before and after the intervention (that is, both before and after the period when they move together in pairs on either regular or irregular rhythm). We will therefore measure the responses of the same participants at two different time points. We will then have a within-subjects design mixed with our between-subjects design: different participants will dance in pairs in each condition (between-subjects), but within each condition the effect of our intervention (the dance) will be measured twice for the same participants (within-subjects). This is called a mixed within-between design.
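A descriptive sketch of the mixed within-between layout: each participant contributes a pre-dance and a post-dance score (the within-subjects part), and each participant belongs to one rhythm condition only (the between-subjects part). The scores are hypothetical, and a formal analysis would typically use a mixed (split-plot) ANOVA rather than this simple comparison of mean changes.

```python
from statistics import mean

# Hypothetical (pre, post) IOS scores, one tuple per participant; not real data
data = {
    "regular":   [(3, 5), (4, 5), (2, 4), (4, 6)],
    "irregular": [(3, 4), (4, 4), (3, 3), (2, 3)],
}

def mean_change(pairs):
    """Within-subjects part: average post-minus-pre change for one group."""
    return mean(post - pre for pre, post in pairs)

changes = {condition: mean_change(pairs) for condition, pairs in data.items()}
# Between-subjects part: compare the average change across the two rhythm conditions
difference = changes["regular"] - changes["irregular"]
print(changes, difference)
```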

Are we ready to go? Not yet. What else have we not yet decided on?

Statistical Power, Significance, and Sample Size

Yes, right. We have yet to decide how many participants we need to recruit for our experiment to yield significant results: results that we may say, with a sufficient degree of confidence, are representative not only of our participants but also of the population of which they are a sample. In other words, we need to decide on the number of participants required to obtain a good balance between statistical power and statistical significance.

Statistical power is a measure between 0 and 1 that indicates how good our experimental set-up is at detecting a false null hypothesis; more precisely, it is the probability that our test will correctly reject the null hypothesis when it is false. When we hypothesise that dance on either regular or irregular rhythm affects empathy and closeness, and expect that our analysis will confirm our hypothesis, we in fact anticipate that we will find ourselves in one of four positions once our experiment and analyses have been completed. At that point, we will make a statement based on our statistical analyses, while the reality may or may not be as we state it:

1. We will state that an effect was present, and an effect really was present;
2. We will state that an effect was present, but an effect was not really present (Type I error);
3. We will state that no effect was present, and our intervention really had no effect;
4. We will state that no effect was present, but in reality an effect was actually present (Type II error).

Remember that, at this point, that is, before our experiment even commences, we are not in a position to make such statements. We merely anticipate that we will find ourselves in one of the four positions, and we need to take precautions to ensure that we will find ourselves in either position 1 or position 3 (preferably in position 1). We need to make sure, as much as we can at this point, that we will not commit a Type I or a Type II error.
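The four positions can be explored by simulation because, unlike in a real experiment, in a simulation we know the reality in advance. The sketch below assumes normally distributed scores with a known standard deviation of 1, 20 participants per group, and a two-sided z-test with a significance threshold of 0.05 (the α value discussed below); these are simplifying assumptions, and real data would usually be analysed with t-tests or ANOVA.

```python
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution (mean 0, SD 1)

def z_test_rejects(a, b, alpha=0.05, sigma=1.0):
    """Two-sided z-test for a difference in means, assuming a known SD `sigma`."""
    se = sigma * (1 / len(a) + 1 / len(b)) ** 0.5
    z = (sum(a) / len(a) - sum(b) / len(b)) / se
    p = 2 * (1 - STD_NORMAL.cdf(abs(z)))
    return p < alpha

def rate_of_stating_effect(true_effect, n=20, trials=2000, seed=1):
    """Share of simulated experiments in which we state 'an effect was present'."""
    rng = random.Random(seed)
    stated = 0
    for _ in range(trials):
        group_a = [rng.gauss(true_effect, 1.0) for _ in range(n)]
        group_b = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if z_test_rejects(group_a, group_b):
            stated += 1
    return stated / trials

# Reality: no effect, so every 'effect' statement is a Type I error (position 2)
type_i_rate = rate_of_stating_effect(true_effect=0.0)
# Reality: a 0.5 SD effect, so every 'no effect' statement is a Type II error (position 4)
type_ii_rate = 1 - rate_of_stating_effect(true_effect=0.5)
print(type_i_rate, type_ii_rate)
```

With these settings the Type I rate stays close to the chosen threshold of 0.05, while the Type II rate is high (roughly two experiments in three miss the real effect), which is why group size matters so much.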

To better understand these two types of error, we must discuss the concept of the null hypothesis. The null hypothesis is a conjecture of a rather abstract nature: we suppose the worst-case scenario, so to speak, a scenario in which our intervention makes no difference at all, so that any variation between individual responses is due only to chance and to factors other than the intervention. If that were the case, we would have no grounds for claiming that our intervention had any effect on these participants; there would be no relationship whatsoever between the intervention and the participants’ responses. In our example, the null hypothesis would be that moving together in pairs, with participants positioned face-to-face, has no effect whatsoever on their experience of empathy or feeling of closeness, regardless of the group (regular or irregular rhythm) to which they have been assigned. What we in fact hope to achieve is to obtain evidence that will allow us to reject the null hypothesis. As noted, statistical power is expressed as a number between 0 and 1, and the closer it is to 1, the more confident we can be that we have not missed an effect if there was one, thus avoiding a Type II error. However, we must be aware that we will never be able to have absolute confidence in stating that an effect was present. We must accept that we may commit a Type I error, that is, that for at least some of our findings the null hypothesis might be true (an effect was not really present).

We must, of course, be willing to take that risk, but only within certain limits. The risk is expressed as a conventional probability of committing a Type I error, symbolised by the Greek letter ‘α’ and referred to as the level of significance. We choose α before the experiment as the share of risk we are prepared to accept (e.g. 5%); if we represent certainty as 1, then α = 0.05. After running our analysis, we obtain a p-value: the probability of obtaining results at least as extreme as those we observed if the null hypothesis were true. If p is higher than α, we cannot reject the null hypothesis, for fear that we would commit a Type I error. The p-value does not measure power; it measures probability. The α threshold, however, is related to power: by increasing the amount of risk we accept (i.e. increasing α), we widen the range of results for which we reject the null hypothesis, and thus increase our power to detect an effect if there is one. This increase in power is unsafe, however, because we gain power by increasing risk. The levels of significance conventionally accepted as safe lie between 0.001 and 0.05.
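The definition of the p-value can be illustrated with a permutation test, a technique chosen here for its transparency rather than one the chapter prescribes. If the null hypothesis is true, the rhythm-condition labels are interchangeable, so we reshuffle them many times and count how often a difference at least as large as the observed one appears. The scores, the number of reshuffles, and the seed are illustrative assumptions.

```python
import random

def permutation_p_value(a, b, n_perm=10_000, seed=0):
    """Two-sided permutation p-value for a difference between two group means.

    If the null hypothesis is true, condition labels are interchangeable, so we
    reshuffle them and count how often a mean difference at least as large as
    the observed one turns up by chance alone.
    """
    rng = random.Random(seed)
    observed = abs(sum(a) / len(a) - sum(b) / len(b))
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        first, second = pooled[:len(a)], pooled[len(a):]
        diff = abs(sum(first) / len(first) - sum(second) / len(second))
        if diff >= observed - 1e-12:  # tiny tolerance for floating-point rounding
            extreme += 1
    # Adding 1 to both counts avoids reporting a p-value of exactly zero
    return (extreme + 1) / (n_perm + 1)

# Hypothetical IOS scores after dancing to regular vs irregular rhythm
p = permutation_p_value([5, 6, 5, 7, 6, 5], [3, 4, 4, 5, 3, 4])
print(p)
```

A small p means that, in a world where rhythm made no difference, a gap this large between the groups would rarely arise by reshuffling alone.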

As in real life, in statistics, too, power is linked with confidence. Thus, we will not reject the null hypothesis when p is higher than 0.05. Making this choice allows us to assert that (in spite of our prediction and, usually, deeply embedded hopes) we found no significant effect of our intervention on the participants. Conversely, when p is smaller than α, we can fairly confidently assert that our intervention is likely to have had an effect. We can express the confidence with which we reject the null hypothesis as a confidence level of 100(1 − α)%, where α is the accepted risk of rejecting the null hypothesis when it is in fact true (the risk that there is no effect when we say there is one); this is also the level of the confidence interval that corresponds to a test at significance level α. If, for example, we accept a high risk of committing a Type I error, α = 0.6, then our confidence in asserting that our intervention had an effect decreases to 40%, even though our power is high. But if we accept a small risk by setting α between 0.001 and 0.05, our (genuine) confidence rises to between 95% and 99.9%. In the end, we may now realise, power is the extent to which our confidence matters.
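The relation between α, the confidence level 100(1 − α)%, and the decision rule is small enough to write out directly; the function names below are hypothetical.

```python
def confidence_level(alpha):
    """Confidence level in percent, 100(1 - alpha)%."""
    if not 0 < alpha < 1:
        raise ValueError("alpha must lie strictly between 0 and 1")
    return 100 * (1 - alpha)

def decision(p, alpha=0.05):
    """Reject the null hypothesis (H0) only when p falls below alpha."""
    return "reject H0" if p < alpha else "do not reject H0"

for a in (0.05, 0.001, 0.6):
    print(a, round(confidence_level(a), 1))
print(decision(0.03), decision(0.2))
```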

We can, of course, discuss power in different ways. If we say that two groups do not differ when they actually do, so that we mistakenly fail to reject the null hypothesis, we commit a Type II error, the probability of which is symbolised by β. In this case, our power to correctly reject the null hypothesis can be calculated as 1 − β. For example, if β = 0.6, then power = 0.4, and we should be rather hesitant to assert that there was no effect, because the probability that there was an effect our analysis did not register is too high (β = 0.6). Now, remember that α indicates how confident we can be when we claim that an effect was present. Does our confidence in asserting that there was an effect matter if we are not also confident that, had an effect been there, we would not have missed it?
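The link between β and power can be made concrete with the usual normal approximation for comparing two group means. The sketch assumes a standardised effect size d (the difference between the group means divided by their common standard deviation), equal group sizes, and a two-sided test; these are assumptions introduced for illustration, and the approximation slightly overstates the power of a t-test when groups are small.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution

def power_two_groups(d, n_per_group, alpha=0.05):
    """Approximate power of a two-sided comparison of two group means.

    d is the standardised effect size; the normal approximation is used
    instead of the exact t distribution.
    """
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = d * (n_per_group / 2) ** 0.5
    # Probability of landing beyond either critical value when the effect is real
    return STD_NORMAL.cdf(shift - z_crit) + STD_NORMAL.cdf(-shift - z_crit)

power = power_two_groups(d=0.5, n_per_group=20)
beta = 1 - power  # probability of a Type II error
print(round(power, 2), round(beta, 2))
```

With 20 participants per group and a medium-sized effect, β is well above 0.5: exactly the situation in which we should hesitate to claim that there was no effect.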

Real power, then, is the extent to which a test of significance (a p-value compared with a preset α) verifies our confidence that there was an effect when one really was there. We always hope for the best of all worlds (powerful results with low risk), and, as we will realise during our experimental work, one reliable way of satisfying that hope is to increase the number of participants. Imagine we had tested every human being who has ever lived or will ever live. Our analysis would then have something like godly power. Fortunately, that is not possible. In our lab session, we will be much more reasonable in undertaking an experiment, collecting data, and analysing it.
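Because power grows with the number of participants, we can search for the smallest group size that reaches a target power. The sketch below repeats the normal approximation so that it runs on its own; the effect sizes d and the target of 0.8 are illustrative assumptions, not values taken from the pilot study.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()

def power_two_groups(d, n_per_group, alpha=0.05):
    """Normal-approximation power for a two-sided, two-group comparison of means."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = d * (n_per_group / 2) ** 0.5
    return STD_NORMAL.cdf(shift - z_crit) + STD_NORMAL.cdf(-shift - z_crit)

def participants_per_group(d, target_power=0.8, alpha=0.05, n_max=10_000):
    """Smallest group size whose approximate power reaches the target."""
    for n in range(2, n_max + 1):
        if power_two_groups(d, n, alpha) >= target_power:
            return n
    raise ValueError("target power not reached within n_max")

for d in (0.2, 0.5, 0.8):
    print(d, participants_per_group(d))
```

Dedicated tools based on the exact t distribution (for example, G*Power) return slightly larger numbers. In our dance example, the result should also be rounded up to an even number so that every dancer has a partner.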

Before signing out from this lesson, remember:

- p < 0.05 (with α = 0.05) means that our results are statistically significant;
- Power of 0.8 or more is the conventional benchmark for a test of significance to be considered sufficiently powerful.

References

Aron, A., Aron, E. N., & Smollan, D. (1992). Inclusion of other in the self scale and the structure of interpersonal closeness. Journal of Personality and Social Psychology, 63(4), 596–612.

Balinisteanu, T. (2023). Pilot studies on empathy and closeness in mutual entrainment/improvisation vs. formalised dance with different types of rhythm (regular, irregular, and no rhythm) and coupling (visual, haptic, full coupling): Building a case for the origin of dance in mutual entrainment empathic interactions in the mother–infant dyad. Behavioral Sciences, 13(10), 859. https://doi.org/10.3390/bs13100859

Davis, M. H. (1983). Measuring individual differences in empathy: Evidence for a multidimensional approach. Journal of Personality and Social Psychology, 44(1), 113–126.

Deuter, C. E., Nowacki, J., Wingenfeld, K., Kuehl, L. K., Finke, J. B., Dziobek, I., & Otte, C. (2018). The role of physiological arousal for self-reported emotional empathy. Autonomic Neuroscience, 214, 9–14. https://doi.org/10.1016/j.autneu.2018.07.002

Faller, J., Cummings, J., Saproo, S., & Sajda, P. (2019). Regulation of arousal via online neurofeedback improves human performance in a demanding sensory-motor task. Proceedings of the National Academy of Sciences of the United States of America, 116(13), 6482–6490. https://doi.org/10.1073/pnas.1817207116

Montag, C., Gallinat, J., & Heinz, A. (2008). Images in psychiatry: Theodor Lipps and the concept of empathy: 1851–1914. The American Journal of Psychiatry, 165(10), 1261.

Patel, S. H., & Azzam, P. N. (2005). Characterization of N200 and P300: Selected studies of the event-related potential. International Journal of Medical Sciences, 126(11), 2124–2131.

Stangor, C., & Walinga, J. (2014). Introduction to psychology (1st Canadian ed.). BCcampus. https://opentextbc.ca/introductiontopsychology/


Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2024 The Author(s)

About this chapter

Cite this chapter

Balinisteanu, T. (2024). Unit 1 Lesson: Behavioural Experiments—Research Designs, Statistical Power, Sample Size Case Study: Empathy and Closeness in Partnered Dance. In: Balinisteanu, T., Priest, K. (eds) Neuroaesthetics. Palgrave Macmillan, Cham. https://doi.org/10.1007/978-3-031-42323-9_3


DOI: https://doi.org/10.1007/978-3-031-42323-9_3


Publisher Name: Palgrave Macmillan, Cham

Print ISBN: 978-3-031-42325-3

Online ISBN: 978-3-031-42323-9

eBook Packages: Behavioral Science and Psychology (R0)