Abstract

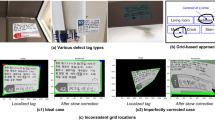

This study aims to improve the performance of optical character recognition (OCR), specifically in identifying printed Korean text marked with hand-drawn circles in images of construction defect tags. Despite advances in mobile technologies, marking text on paper remains a prevalent practice. The typical approach to recognition in this context is to first detect the circles in the images and then identify the text entity within each circled region using OCR. Numerous OCR models have been developed to automatically identify various text types, but even a competition-winning multilingual model by Baek et al. does not perform well in recognizing circled Korean text, yielding a weighted F1 score of just 69%. The core idea of the lumped approach proposed in this study is to recognize a circle and the named entity it marks as a single instance. For this purpose, YOLOv5 is fine-tuned to detect 65 types of named entities overlapped by hand-drawn circles. It yields a weighted F1 score of 94%, 25 percentage points higher than the typical approach, which uses YOLOv5 for circle detection and the model by Baek et al. for subsequent OCR. This work thereby introduces a novel approach for developing advanced text information extraction methods and for processing paper-based marked text in the construction industry.
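
The abstract compares the two approaches by weighted F1 score but does not say how the score is computed. The sketch below assumes the common definition: the per-class F1 score averaged with weights proportional to each class's number of true instances. The function name `weighted_f1` and the class labels in the usage example are hypothetical and do not come from the paper.

```python
from collections import Counter


def weighted_f1(y_true, y_pred):
    """Support-weighted mean of per-class F1 scores (assumed definition)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    support = Counter(y_true)  # number of true instances per class
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted class gains a false positive
            fn[truth] += 1  # true class gains a false negative

    total = len(y_true)
    score = 0.0
    for label, n in support.items():
        denom = 2 * tp[label] + fp[label] + fn[label]
        f1 = 2 * tp[label] / denom if denom else 0.0
        score += (n / total) * f1  # weight each class by its share of samples
    return score


# Hypothetical labels standing in for named-entity classes.
y_true = ["crack", "crack", "leak", "stain"]
y_pred = ["crack", "leak", "leak", "stain"]
print(round(weighted_f1(y_true, y_pred), 4))
```

Under this definition, classes with more true instances contribute more to the final score, which matters when the 65 entity types are unevenly represented in the test images.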

References

Singh A, Bacchuwar K, Bhasin A (2012) A survey of OCR applications. IJMLC, 314–318. https://doi.org/10.7763/IJMLC.2012.V2.137

Baek J et al (2019) What is wrong with scene text recognition model comparisons? Dataset and model analysis. In: 2019 IEEE/CVF international conference on computer vision (ICCV). IEEE, Seoul, Korea (South), pp 4714–4722. https://doi.org/10.1109/ICCV.2019.00481

Islam N, Islam Z, Noor N (2016) A survey on optical character recognition system. J Inf Commun Technol 10:4

Bassil Y, Alwani M (2012) OCR post-processing error correction algorithm using google online spelling suggestion. arXiv:1204.0191

Gossweiler R, Kamvar M, Baluja S (2009) What’s up CAPTCHA?: A CAPTCHA based on image orientation. In: Proceedings of the 18th international conference on World wide web - WWW 2009. ACM Press, Madrid, Spain, p 841. https://doi.org/10.1145/1526709.1526822

Optical character recognition market size report (2030). https://www.grandviewresearch.com/industry-analysis/optical-character-recognition-market. Accessed 14 July 2022

Awel MA, Abidi AI (2019) Review on optical character recognition. Int Res J Eng Technol (IRJET) 06:5

Nadeau D, Sekine S (2007) A survey of named entity recognition and classification. Lingvisticæ Investigationes 30:3–26. https://doi.org/10.1075/li.30.1.03nad

Packer TL et al (2010) Extracting person names from diverse and noisy OCR text. In: Proceedings of the fourth workshop on Analytics for noisy unstructured text data - AND 2010. ACM Press, Toronto, ON, Canada, p 19. https://doi.org/10.1145/1871840.1871845

Rodriquez KJ, Bryant M, Blanke T, Luszczynska M (2012) Comparison of named entity recognition tools for raw OCR text. In: 2012 conference on natural language processing (KONVENS), p 5

Hamdi A, Jean-Caurant A, Sidère N, Coustaty M, Doucet A (2020) Assessing and minimizing the impact of OCR quality on named entity recognition. In: Hall M, Merčun T, Risse T, Duchateau F (eds) International conference on theory and practice of digital libraries. Springer, Cham, pp 87–101. https://doi.org/10.1007/978-3-030-54956-5_7

Zheng Z, Lu X-Z, Chen K-Y, Zhou Y-C, Lin J-R (2022) Pretrained domain-specific language model for natural language processing tasks in the AEC domain. Comput Ind 142:103733. https://doi.org/10.1016/j.compind.2022.103733

GitHub - ultralytics/yolov5: YOLOv5 in PyTorch > ONNX > CoreML > TFLite. https://github.com/Ultralytics/Yolov5. Accessed 07 July 2022

Pi Y, Duffield N, Behzadan AH, Lomax T (2022) Visual recognition for urban traffic data retrieval and analysis in major events using convolutional neural networks. Comput Urban Sci 2:2. https://doi.org/10.1007/s43762-021-00031-w

Xu Y, Zhang J (2022) UAV-based bridge geometric shape measurement using automatic bridge component detection and distributed multi-view reconstruction. Autom Constr 140:104376. https://doi.org/10.1016/j.autcon.2022.104376

Sezen G, Cakir M, Atik ME, Duran Z (2022) Deep learning-based door and window detection from building façade. In: The international archives of the photogrammetry, remote sensing and spatial information sciences. Copernicus GmbH, pp 315–320. https://doi.org/10.5194/isprs-archives-XLIII-B4-2022-315-2022

Redmon J, Divvala S, Girshick R, Farhadi A (2016) You only look once: unified, real-time object detection. In: 2016 IEEE conference on computer vision and pattern recognition (CVPR), pp 779–788. https://doi.org/10.1109/CVPR.2016.91

Chng CK et al (2019) ICDAR2019 robust reading challenge on arbitrary-shaped text - RRC-ArT. In: 2019 International conference on document analysis and recognition (ICDAR), pp 1571–1576. https://doi.org/10.1109/ICDAR.2019.00252

What is wrong with scene text recognition model comparisons? Dataset and model analysis. https://github.com/clovaai/deep-text-recognition-benchmark. Accessed 16 May 2022

Korean font image. https://aihub.or.kr/aidata/133. Accessed 09 May 2022

Rezgui Y, Zarli A (2006) Paving the way to the vision of digital construction: a strategic roadmap. J Constr Eng Manage 132:767–776. https://doi.org/10.1061/(ASCE)0733-9364(2006)132:7(767)

Acknowledgements

This work was supported by a National Research Foundation of Korea grant funded by the Korean government (No. 2021R1A2C3008209).

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Suh, S., Lee, G., Gil, D. (2024). Lumped Approach to Recognize Types of Construction Defect from Text with Hand-Drawn Circles. In: Skatulla, S., Beushausen, H. (eds) Advances in Information Technology in Civil and Building Engineering. ICCCBE 2022. Lecture Notes in Civil Engineering, vol 357. Springer, Cham. https://doi.org/10.1007/978-3-031-35399-4_5

DOI: https://doi.org/10.1007/978-3-031-35399-4_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-35398-7

Online ISBN: 978-3-031-35399-4

eBook Packages: Engineering, Engineering (R0)