Abstract

This contribution starts from the assumption that algorithmic outcomes disadvantaging one or more stakeholder groups are not the only way a recommender system can be unfair: additional forms of structural injustice should be considered as well. After describing different ways of supplying digital labor, as waged labor or as consumer labor, it is shown that the current design of recommender systems necessarily requires digital labor for training and tuning, making it a structural issue. The chapter then presents several fairness concerns raised by the exploitation of digital labor. These regard, among other things, the unequal distribution of produced value, the poor work conditions of digital laborers, and many individuals’ unawareness of their condition as laborers. To address this structural fairness issue, compensatory measures are not adequate; rather, a structural change in the ways training data are collected is necessary.

1 Introduction: Multisided (Un)Fairness in Recommender Systems

Current research on AI fairness focuses extensively on ways to detect, measure and prevent discrimination and unjustified unequal treatment of individuals or groups of individuals affected by automated decisions (Barocas et al. 2019). Examples of unfair outcomes of automated decisions include discrimination against credit applicants based on gender (Verma and Rubin 2018) or race (Lee and Floridi 2021), unfair distribution of access to medical treatment among patients (Giovanola and Tiribelli 2022), and disadvantaging women when selecting job applications (Dastin 2018). These examples have a common denominator: fairness issues are investigated within one specific stakeholder group, namely the group of users who apply for access to a service or a position and are subject to algorithmic classification. While this kind of fairness evaluation is certainly essential to detect discrimination against groups or individuals, in some cases a multi-sided consideration of the different actors involved in the design, commercialization and use of an AI system is necessary to complete the picture. The ethical audit of recommender systems is one of these cases.

Evaluating fairness in recommender systems is a complex task that requires a multi-stakeholder analysis (Burke 2017; Milano et al. 2021) as well as a precise definition of the fairness aspects and indicators being audited (Deldjoo et al. 2021). Since recommender systems are socio-technical systems tailoring content recommendations to different users, Milano et al. identify four categories of stakeholders involved in a recommendation: users, content providers, system (i.e., platform) providers and developers, and society at large (Milano et al. 2021). When asking whether a recommender system is fair, it is therefore necessary to ask to whom it is being fair. Indeed, unfair treatment might affect either a subgroup within one (or more) stakeholder group(s), or one (or more) stakeholder group(s) as a whole. To analyze scenarios spanning several stakeholder groups, Burke introduced the notions of C-fairness (consumer fairness), P-fairness (provider fairness) and CP-fairness (consumer and provider fairness) (Burke 2017). These notions highlight that, when it comes to recommending content or services, not only can consumers be discriminated against (C-fairness issues), e.g., by not being shown offers that are classified as out of their league even though they could interest them, but so can service providers (P-fairness issues), e.g., by having their products given reduced visibility compared to other products of the same type. Depending on whether consumers, service providers, or both are at risk of discrimination, a system should meet C-fairness, P-fairness or CP-fairness conditions (Burke 2017). A concrete example of discrimination risk for both stakeholders can be found on real estate sharing economy platforms, where property owners offering their property for rent are matched with travelers looking for accommodation.
In this scenario, it has been shown that the system’s performance might vary across demographic groups based, among other things, on users’ self-declared gender, sexual orientation, age, and main spoken language (Solans et al. 2021). Thus, while some travelers belonging to specific demographic groups might experience limited access to housing, some property owners might receive less visibility on the platform.

After asking who is being treated unfairly, it is essential to ask how the affected stakeholders are being treated unfairly. This adds a layer of complexity to the analysis, since there may be different indicators of unequal treatment. When it comes to (mis)classification of individuals, current research devotes much effort to identifying suitable metrics that quantify the disparity of system performance across demographic groups (Mehrabi et al. 2021; Verma and Rubin 2018). Since in some applications misclassification can lead to missing important life chances, such as job or education opportunities, or access to credit or housing, it is crucial to detect and address the fairness issues related to algorithmic discrimination based on demographic attributes. In addition, there are fairness issues that are not related to misclassification and are hardly expressible through statistical quantities. These issues concern, among other things, the application environment of an AI system, the decision-making process (procedural fairness) (Grgić-Hlača et al. 2018), and structural injustice (Kasirzadeh 2022). Therefore, a stakeholder belonging to a disadvantaged group might experience discrimination even though a fairness metric does not detect an unfair outcome, e.g., by being penalized before interacting with or being scrutinized by an AI system, or by being in a disadvantaged position compared with other stakeholders interacting with the system, e.g., by having less control over the system or less bargaining power than others.
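As an illustration of what such outcome-oriented metrics measure, here is a minimal sketch of one simple indicator: the gap in mean absolute error (MAE) of predicted ratings between demographic groups. The function names, group labels and records are hypothetical, and this is only one of many possible metrics, not a specific definition taken from the works cited above. A gap of zero would, by construction, say nothing about the procedural or structural issues just mentioned.

```python
from collections import defaultdict


def per_group_mae(records):
    """Mean absolute error of predicted vs. actual ratings, per group.

    `records` is an iterable of (group, predicted, actual) tuples.
    """
    abs_error = defaultdict(float)
    count = defaultdict(int)
    for group, predicted, actual in records:
        abs_error[group] += abs(predicted - actual)
        count[group] += 1
    return {group: abs_error[group] / count[group] for group in count}


def max_performance_gap(records):
    """Difference in MAE between the worst- and best-served groups."""
    errors = per_group_mae(records)
    return max(errors.values()) - min(errors.values())


# Hypothetical records: (demographic group, predicted rating, actual rating).
records = [
    ("group_a", 4.0, 4), ("group_a", 3.0, 4),
    ("group_b", 2.0, 4), ("group_b", 5.0, 4),
]
print(per_group_mae(records))        # {'group_a': 0.5, 'group_b': 1.5}
print(max_performance_gap(records))  # 1.0
```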

In this chapter I describe a structural fairness issue of this last type concerning recommender systems. I argue that certain stakeholder groups are structurally in a disadvantaged position because of the design of most recommender systems and their commercial implementation in a techno-capitalist environment. In Sect. 5.2, I define the disadvantaged stakeholders as those supplying data used to train and fine-tune a recommender system through their “digital labor”. I characterize digital labor as a form of exploitation and describe different forms it may take. In Sect. 5.3, I highlight why the exploitation of digital labor is unethical by pointing not only at the unfair distribution of produced value among stakeholders, but also at further related issues concerning transparency and human well-being. In Sect. 5.4, I propose ways to address this structural fairness issue through the acknowledgment of digital labor, its regulation, and the end of its exploitation.

2 Digital Labor as a Structural Issue in Recommender Systems

In the last two decades, many scholars have attempted to rethink the Marxist concept of labor in light of the digital transformation of capitalism and the rise of social media and the platform economy, examining new labor markets and new sites of value production (Fuchs and Fisher 2015; Maxwell 2016; Scholz 2013). Digital labor is generally understood as a value-producing activity in online environments and can be either waged or unwaged (Scholz 2013). In both cases, it is characterized by explicit exploitation dynamics. Digital labor can take many forms depending on who is producing value and how the laborer’s activity is exploited. In this section I introduce several kinds of digital labor that arise in very different settings but share the feature of being value-producing activities subject to exploitation.

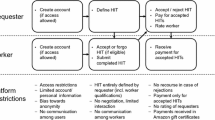

Starting with waged labor, it is possible to consider the cases of independent gig workers and of employed workers. Waged digital labor in the gig economy, such as click-working to train AI systems on Amazon Mechanical Turk, is usually poorly paid and has so far not entitled workers to a minimum wage (Aytes 2013). The almost complete absence of laws regulating work on emerging platforms at the beginning of the last decade, as well as the absence of a trade union for gig workers, paved the way for workers’ exploitation in digital environments. In addition to offering low pay for completing tasks, gig work platforms did not provide social or health insurance for workers, making their life and work conditions even more precarious. Unlike independent gig workers, employed workers receive a fixed salary. However, this is no guarantee of good work conditions. Content moderation on Facebook is a case in point. Content moderators might be part-time or full-time employees hired by the social media company or by a third-party company rather than gig workers. Yet in this case, too, unacceptable work conditions and low pay were reported (Newton 2019). Facebook content moderators revealed that employees cope with seeing traumatic images and videos by telling dark jokes about committing suicide and then smoking marijuana during breaks to numb their emotions. Moreover, employees are constantly monitored at the workplace and are allowed to take only a few short breaks. After they leave the company, they often develop PTSD-like symptoms and are not eligible for any support from Facebook (Newton 2019). All these forms of waged digital labor have recently been referred to as “ghost work” (Gray and Suri 2019) to highlight the fact that the people executing (micro)tasks essential for the functioning of many apps and platforms we use in our everyday lives are invisible to end users.

Concerning unwaged digital labor, also referred to as a kind of consumer labor (Jarrett 2015), users are not always aware that they are engaging in value-producing activities, e.g., by training an AI system or providing businesses with essential data. Using captchas and reCAPTCHAs as authentication methods is an example of unpaid cognitive labor, since most users do not know that they are, at the same time, producing valuable data for image recognition systems (Aytes 2013). The same goes for tagging images and using hashtags on social media and other online platforms, since this contributes to improved content labeling by machine learning systems (Bouquin 2020; Casilli and Posada 2019). Some scholars have highlighted that even general time spent on social networks, engagement with social media posts, or queries on search engines should be seen as a form of consumer labor, since they produce navigation data that are very valuable for the service provider (Fisher 2020; Vercellone 2020). Even though an increasing number of users are nowadays aware that navigation data are recorded and processed for algorithm optimization, user classification, targeted advertising and many other purposes, for many years ignorance of these facts prevailed, and many users did not know they were exchanging digital labor for search results or social media content. On subscription platforms, users might even pay while supplying service providers with digital labor. It should be remembered that consumer labor is not limited to digital services and can be found in many other industries (Jarrett 2015), for example when people advertise for clothing companies by wearing shirts with the companies’ logos printed on them, or when stores track customer behavior. The specificity of our case, that is, of recommender systems on digital platforms, lies in the fact that users, through their online activity, supply navigation data that are indispensable for the system to run.

Moreover, there are forms of unwaged digital labor that are not disguised by system providers and of which users are aware. One example is the unpaid creation of content that will be spread by recommender systems, thereby fueling their very functioning and constituting their reason for existing. While in some cases posters might improve their reputation and visibility, or earn commercial revenue from their content, private posting aimed at reaching a network of friends or followers does not generally bring such a return. Particularly remarkable is the case of cultural and/or creative content produced by semi-professionals or amateurs on blogs, vlogs and other online platforms (Terranova 2000), where individuals invest a great amount of time in work that is hardly acknowledged or rewarded by the cultural industry or by system providers.

It is important to note that digital labor does not only concern the users’ group but is rather a cross-stakeholder issue. Indeed, click-workers and gig workers constitute an independent stakeholder group, since they are not necessarily platform users and in any case do not act in the users’ role while completing system-training tasks. The same goes for content creators, who might be digital laborers working on a service provider’s side, using a content recommendation platform to promote the product they represent.

To conclude this section, it can be shown that the above-mentioned forms of digital labor are structurally necessary for the existence, functioning and profitability of recommender systems in different application environments. First, the paid or unpaid production of training data for an AI system allows one to optimize functions such as natural language processing and image recognition, which are essential, for example, to classify social media posts and recommend them to the right users (Bouquin 2020). Furthermore, content moderation by human operators, irrespective of their employment status, is vital both to stop the spread of harmful or illegal content that AI content moderation systems fail to recognize, and to restore content that was wrongly flagged as offensive, whose removal would constitute a limitation on free expression. A functioning moderation loop can prevent digital platforms from losing a considerable share of their profits. For instance, in the European legal framework, as prescribed by the Digital Services Act, failure to remove notified illegal content leads to the platform’s civil liability. Finally, when it comes to consumer labor, users, in the role of either content viewers or content creators, provide both the raw material and the final product of recommender systems and of the platforms they run on. Even though some news aggregators, as well as many music and film streaming platforms, also recommend content created by professionals or at least not under digital labor conditions, user-generated content (UGC) is indispensable for all social networks, which could not exist without it. On dating apps, which recommend potential matches based on their proximity, online activity, shared interests and photos (Tinder Newsroom 2022), users’ profiles are the very UGC being consumed.
In addition, users’ online behavior and navigation data are necessary to improve the expected relevance of recommendations and, as a consequence, users’ engagement on the platform. Moreover, users’ content views are the very product of those content recommendation platforms (including social networks) whose main revenue depends on advertising. Without UGC and/or the monetization of users’ attention, many content recommendation platforms would have neither a source of revenue nor a reason to exist.

3 Fairness Issues from Value Distribution to Work Conditions and Laborers’ Awareness

The reliance of recommender systems on one or more forms of digital labor is structural and design-driven. This is part of the reason why digital labor is so valuable for system providers. It is difficult to estimate how much value digital labor produces, but for many activities the worth at stake can be roughly quantified. A user who spends time on a social network and whose attention is monetized through targeted advertising produces value worth the cost of the individual advertisement’s views and clicks (Footnote 1), plus a value corresponding to the worth of keeping the system updated on their current interests to optimize future content recommendations. Content creators might generate content for free, which then helps keep users online who are either targeted with advertising or paying a subscription (or both). A group of click-workers receives cents to train software that will be sold for thousands of dollars. A group of moderators paid minimum wage saves millions of dollars that would otherwise be spent on fines, lawyers and statutory damages, allowing tech companies to make higher profits. These examples highlight two recurrent, not mutually exclusive ways in which digital labor produces value. The first is the direct monetization of an individual activity, without considering its synergy with other laborers’ activities, and is more intuitive to quantify. Examples include a user clicking on an advertisement, a gig worker individually entering data into a database that will be sold at a high price, a content creator creating content that the system provider will put behind a paywall, etc. The second derives from the synergy between the activities of many laborers and requires a holistic consideration of the value-producing process.
The activity of a single click-worker classifying images to train an image recognition system, like the activity of an individual worker on an assembly line, does not produce any value when considered alone. Value is produced only if the outcome of this activity is integrated with the outcomes of many other workers’ activities, e.g., contributing to a database big enough to train a system that will be sold for a high price or that will successfully recommend ads. The same goes for users’ navigation data. Considered together, these data are extremely important for recommender systems, since automated predictions rely, among other things, on collaborative filtering techniques, that is, on filtering patterns of ratings or usage produced by users’ interactions with the system (Koren and Bell 2011).
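To illustrate why navigation data produce value only in aggregate, the following is a minimal sketch of user-based collaborative filtering with cosine similarity, one textbook variant of the family of techniques surveyed by Koren and Bell (2011), not the method of any particular platform. The users, items and ratings are hypothetical. The predicted rating for one user is built entirely from other users’ ratings, so removing those users leaves nothing to predict from.

```python
import math

# Hypothetical toy data: ratings[user][item] on a 1-5 scale; absent keys mean "not rated".
ratings = {
    "alice": {"film_a": 5, "film_b": 3, "film_c": 4},
    "bob": {"film_a": 4, "film_b": 3, "film_c": 5, "film_d": 4},
    "carol": {"film_a": 1, "film_b": 5, "film_d": 2},
}


def cosine_similarity(u, v):
    """Cosine similarity between two users, over the items both have rated."""
    common = set(u) & set(v)
    if not common:
        return 0.0
    dot = sum(u[i] * v[i] for i in common)
    norm_u = math.sqrt(sum(u[i] ** 2 for i in common))
    norm_v = math.sqrt(sum(v[i] ** 2 for i in common))
    return dot / (norm_u * norm_v)


def predict(ratings, user, item):
    """Similarity-weighted average of *other* users' ratings for `item`.

    Returns None if no other user has rated the item: the prediction for
    one user exists only thanks to the pooled ratings of the others.
    """
    numerator, denominator = 0.0, 0.0
    for other, other_ratings in ratings.items():
        if other == user or item not in other_ratings:
            continue
        sim = cosine_similarity(ratings[user], other_ratings)
        numerator += sim * other_ratings[item]
        denominator += abs(sim)
    return numerator / denominator if denominator > 0 else None


print(round(predict(ratings, "alice", "film_d"), 2))           # 3.19
print(predict({"alice": ratings["alice"]}, "alice", "film_d"))  # None
```

Deployed systems typically use more elaborate models, such as latent factor models, but these share the same dependence on pooled interaction data.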

In order to understand how value is distributed among stakeholders, it is also necessary to estimate the value that digital laborers receive in exchange for their value-producing activity. This is not an easy task either, since the value given to digital laborers in exchange for work is not always monetary. Following the above-mentioned examples, three categories of laborers can be distinguished based on the quality and quantity of value they receive. The first consists of waged laborers paid money to complete tasks. The other two derive from a sub-classification of unwaged laborers, that is, those supplying “consumer labor” (Jarrett 2015). On the one hand, there are those using a service for free, who are very likely to be targeted with ads and produce value both individually and in synergy with other users. On the other hand, there are those paying a subscription fee to access a service, who usually have access to more exclusive content and whose navigation might be ads-free, so that they produce less or no value individually but are still involved in synergic value production. Indeed, even though they pay the system provider for the service they receive, they still supply digital labor, since their ratings and navigation data are necessary for the functioning of the recommender system, especially if it runs on collaborative filtering. I will call the first group “subscription-free laborers” and the second group “subscriber laborers”. I will use the label “consumer laborers” to refer to both.

Unlike waged laborers, consumer laborers do not receive any money. They are given something else in exchange for their value-producing activity and, in the case of subscriber laborers, for their subscription fee. What they get is precisely what keeps them hooked on their online habits (Eyal and Hoover 2019): interesting information, entertaining content, exciting networking opportunities in work and private life, etc. Moreover, in the case of social media, hosting and spreading UGC is part of the service. Content recommendations are valuable if they are relevant, that is, if a user finds the recommended content interesting, entertaining, etc. While it is possible to measure relevance in terms of accuracy from a statistical point of view, for example by comparing predicted ratings of an item with actual user ratings (Doshi 2018), measuring relevance from the subjective point of view of a user and quantifying its value for them poses a challenge. In the case of a subscriber, the willingness to keep paying a subscription fee to receive content recommendations from a certain provider, instead of looking for other options or cancelling the service, might be taken as an indicator that the service is worth at least the amount of the subscription fee to the user (Footnote 2). User subscriptions are a successful business model for many content recommendation platforms, such as Netflix and Spotify, and for ads-free premium versions of dating apps like Tinder and Bumble, of social networks like LinkedIn, and of video broadcasting platforms such as YouTube, which crossed the 50 M subscriber threshold in 2021 (blog.youtube 2022). Moreover, social networks such as Instagram and TikTok are exploring subscription models for individual influencers’ accounts (Dutta 2022; TikTok 2022).
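Accuracy in this statistical sense is commonly computed, for instance, as the root-mean-square error (RMSE) between predicted and actual ratings. The sketch assumes hypothetical rating lists. A low RMSE indicates how closely the system reproduces a user’s ratings, not how much the recommended content is worth to that user, which is the harder question the paragraph raises.

```python
import math


def rmse(predicted, actual):
    """Root-mean-square error between predicted and observed ratings."""
    if not predicted or len(predicted) != len(actual):
        raise ValueError("expected two non-empty sequences of equal length")
    squared_errors = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical ratings for three items: predicted by the system vs. given by one user.
predicted = [4.0, 3.5, 2.0]
actual = [5, 3, 2]
print(round(rmse(predicted, actual), 3))  # 0.645
```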

For subscription-free laborers, too, the willingness to start paying a fee could be taken as an indicator of the value that recommended content has for them. How high should the fee be, and should it be the same for all users? Let’s consider the case of the Meta (formerly Facebook Inc.) social networks. If Meta wanted to provide ads-free navigation, it would have to earn its revenue by collecting subscription fees. In 2020 its average revenue per user (ARPU) was 32.02 $ (Dixon 2022b). Considering the geographical distribution of revenue adds key information: in 2020 Facebook Inc.’s ARPU was 163.86 $ in the US and Canada, 50.95 $ in Europe, 13.77 $ in Asia and Pacific, and 8.76 $ in the rest of the world (Dixon 2022a). Requiring a 32 $/year subscription fee would therefore be inadequate for two reasons: first, it would not reflect the company’s regional revenue; second, it would not consider the differences in average income around the world. Accordingly, the subscription fee should be adapted to the regional average ARPU and national average income. Considering that the Meta group’s revenue per user is growing – 40.96 $ global ARPU in 2021 (Dixon 2022b) and 98.54 $ in the first half of 2022 in the US and Canada alone (Dixon 2022a) – and that these averages include inactive users, so that excluding inactive users from the count would presumably increase the sum significantly, would an average active US user be ready to pay around 200 $/year to be on the Meta group social networks?
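The arithmetic behind this argument can be made explicit with the ARPU figures quoted in the paragraph. The sketch compares a flat fee equal to the 2020 global ARPU with each regional ARPU, and annualizes the first-half 2022 US and Canada figure by simply doubling it, which assumes, as a simplification, that both halves of the year yield the same revenue. It does not model the income adjustment the paragraph also calls for, and the variable and function names are illustrative.

```python
# Average revenue per user (ARPU) in USD, as quoted in the text (Dixon 2022a, 2022b).
ARPU_2020_BY_REGION = {
    "US & Canada": 163.86,
    "Europe": 50.95,
    "Asia-Pacific": 13.77,
    "Rest of world": 8.76,
}
GLOBAL_ARPU_2020 = 32.02
US_CANADA_ARPU_H1_2022 = 98.54


def flat_fee_ratio(region_arpu, flat_fee=GLOBAL_ARPU_2020):
    """How a flat yearly fee compares with one region's ARPU (1.0 = equal)."""
    return flat_fee / region_arpu


def naive_annualize(half_year_value):
    """Doubles a half-year figure; assumes (simplistically) equal halves."""
    return 2 * half_year_value


for region, arpu in ARPU_2020_BY_REGION.items():
    print(f"{region}: flat fee = {flat_fee_ratio(arpu):.2f} x regional ARPU")
print(f"US & Canada 2022, annualized: {naive_annualize(US_CANADA_ARPU_H1_2022):.2f} USD")
```

With these figures, a flat fee of about 32 $ would cover roughly a fifth of the 2020 US and Canada ARPU but amount to more than three and a half times the rest-of-world ARPU, while the naive annualization gives about 197 $, in line with the figure of around 200 $/year.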

Since the answer to this question depends on the individual degree of appreciation of the service and on the impact of the fee on individual income, no general answer can be given. Some surveys show that the majority of participants would still prefer an ads-based business model to a subscription-based one (Hutchinson 2020; Sindermann et al. 2020). However, this preference might depend not only on satisfaction with the service, but also on the affordability of the fee. Imagine a low-earning person having to pay a monthly fee for every digital service they use: one for the Meta group, one for the Google-Alphabet group, one for Spotify, one for Netflix, one for Amazon, and so on. The digital services bill would easily exceed 100 $/month. If every digital platform, including search engines, added a subscription fee, low-income people would have to quit using some services and would experience digital exclusion. This would not be in the economic interest of tech companies either, since they would lose users and revenue. Since only a small share of users would be interested in, and could afford, paying a subscription fee for services that are free right now, exploiting consumer labor by monetizing users’ online time must be a structural part of tech companies’ business model if they are to keep increasing their profits, which is their goal in the capitalist economic framework. Unless, of course, this were prohibited by law.

The considerations presented in this and in the previous section on the value produced by different kinds of digital labor, and on the value given in return, can be summed up as follows:

1. Waged laborers are likely to be paid poorly, often below minimum wage, even though their work is indispensable to train and develop software sold for thousands of dollars to many customers and essential to run systems underlying businesses worth millions of dollars.

2. Consumer laborers produce essential data for collaborative filtering, helping to make predictions more accurate. Without processing their navigation data, recommender systems could not work at all. This happens without any economic return and irrespective of whether the laborer pays a subscription or not.

3. Subscription-free laborers’ online time is monetized, among other things, through targeted advertising. It is debatable whether the content recommendations they receive in exchange are worth as much to them as the monetization of their time is worth to tech companies; this depends on the individual case. While some people might find this exchange fair, or simply not mind that their data are being further processed, all those who do not value the received content recommendations enough are involved in an unbalanced exchange.

It is now possible to highlight several structural fairness issues related to the value produced by different kinds of digital labor and to its redistribution. Starting from the mere consideration of the exchanged value, an ethical issue concerning the unfair distribution of the generated economic value immediately stands out. On the one hand, the very low pay of waged laborers allows system providers to increase their profits, which they partially redistribute to a small number of high-earning executives, software developers, marketing and communication managers, etc., without rewarding those laborers whose work fuels ML systems in the first place (Footnote 3). On the other hand, consumer laborers supply essential navigation data for free, which, processed together, allow recommender systems to function. Some subscription-free laborers might believe that they are getting valuable content in exchange, but they are already being targeted with ads in exchange for content recommendations, so the additional, synergic value they produce comes on top of that exchange. Only some subscription-free laborers might find recommended content valuable enough to consider it fair to supply the system provider with so much digital labor.

Focusing specifically on waged laborers, another issue relates to their unfair work conditions. On the one hand, workers are poorly paid, which in the case of platforms crowdsourcing work from independent contractors, such as Amazon Mechanical Turk, usually means below minimum wage (Irani 2015) and could amount to one or two dollars an hour (Milland 2019; Newman 2019). This pushes gig workers to extend their working days and to be always available for new gigs in order to make a living wage. Moreover, they usually do not have job benefits such as health or social insurance (Sawafta 2019). On the other hand, work conditions are physically and mentally exhausting. Contract workers have very few short breaks and are constantly monitored (Newton 2019). Content moderators and content taggers are exposed to sensitive content that is explicit and/or offensive and are left without help to alleviate any trauma caused by viewing it (Newton 2019; Sawafta 2019). These work conditions put workers’ health at risk and are far from guaranteeing financial stability or work-life balance. Finally, since most tasks can be executed remotely, digital labor represents a case of work outsourcing and offshoring, and can be seen as part of the larger phenomenon of “algorithmic coloniality” or “data colonialism” (Mohamed et al. 2020), meaning that inhabitants of former colonies are still affected by oppression and exploitation patterns they were subjected to during colonial times. In concrete terms, users in Western countries usually benefit from the results of underpaid digital labor in Africa, Latin America and Southeast Asia (Anwar and Graham 2019; Rani and Furrer 2021).

Focusing on consumer labor, system providers’ limited transparency and/or users’ unawareness of how their navigation data are used puts many users at a disadvantage relative to other stakeholders, since they lack the resources to control what data they provide and how these are monetized, and therefore to defend their privacy. The fact that many digital services are de facto controlled by monopolies or oligopolies of big players, which own the most used platforms and can develop better-performing software because of the larger amount of training data they have access to, further diminishes the bargaining power of individual users involved in an unfair exchange loop. On top of this, the discrimination issues mentioned at the beginning (Barocas et al. 2019; Burke 2017; Milano et al. 2020) also apply to consumer laborers, since the content recommendations they get in exchange for their digital labor and/or their subscription fees might be inaccurate and biased against some user groups.

Considering the unfair treatment and poor working conditions of digital laborers, several authors have used the label “digital proletariat” to highlight the analogy with the factory working class during the industrial revolution (Gabriel 2020; Jiménez González 2022; The Economist 2018). As at the beginning of the industrial age, the absence of laws and regulations protecting laborers’ rights has led to their exploitation. Acknowledging the fairness issues concerning digital labor calls for more laborers’ rights and for tailored solutions to tackle the problem in many application fields, including recommender systems.

4 Addressing the Problem

As shown in Sect. 5.2, digital labor is a structural issue in recommender systems. This means that the related fairness issues, like discrimination issues rooted in structural injustice, cannot simply be addressed by correcting code or datasets; in other words, they cannot be solved by computer scientists and software developers alone, since the problem is not merely computational (Balayn and Gürses 2021; Kasirzadeh 2022). To address structural injustice, the whole economic and socio-political framework surrounding the examined unjust interactions should be considered in order to understand how responsibility for the generated disadvantages is distributed (Young 2011). In the specific case of digital labor in recommender systems, we have seen that the absence of a specific legal framework regulating new forms of work, combined with the launch of new digital platforms whose success in a capitalist market depends on collecting large amounts of cheap data in short times, and with the great demand for recommendations of digital content, facilitated the establishment of labor exploitation practices. Acknowledging this fact, and acknowledging the existence of digital labor, is the necessary first step towards a fairer treatment of the stakeholders who are currently disadvantaged.

Focusing on the unfair distribution of produced value, some observers suggest introducing a “data dividend”, that is, a share of the value generated by data processing to be paid by tech companies to users (Feygin et al. 2019). A similar proposal is a digital basic income, funded through higher taxation of tech companies, to compensate users for their digital labor and for the negative consequences that the platform and gig economies are having on the job market (Ferraris 2018, 2021). Although the intention to redistribute value fairly that these approaches share is well meant, it can be objected that they do not address the fairness issues at their root and might even raise additional concerns. Indeed, financial compensation for digital labor, whatever form it takes, can be seen as an incentive for users to sell off their privacy and other basic rights. Moreover, it raises the further question of who would determine the amount of the data dividend. Given the large number of data dividend beneficiaries (in principle, the whole world population), even the multi-billion-dollar revenues of the biggest tech companies would end up being split into single-digit shares (Tsukayama 2020). Such amounts cannot compensate for giving up privacy as a basic right or fair work conditions, which are, if anything, priceless; nor can they smooth out the social inequalities accentuated by digital labor. Therefore, a cash flow from tech companies to users will not solve these fairness issues.

Instead of compensating digital laborers for generating useful data, the exploitation of laborers should be prevented in the first place. Concerning waged workers, workers’ rights – e.g., as formulated in Art. 23 and 24 of the Universal Declaration of Human Rights (United Nations 1948) or in Art. 6 and 7 of the International Covenant on Economic, Social and Cultural Rights (OHCHR 1976) – should be respected. The effective hourly pay should allow a decent standard of living and in no case fall below the minimum wage; work conditions should not put workers’ physical or mental health at risk; rest, leisure, limitation of working hours, and periodic holidays with pay should be guaranteed; and workers should have the right to form and join trade unions and the right to strike. This can be achieved by acknowledging waged digital labor as work and regulating it as such. Also, the exploitation of gig workers disguised as “independent contractors” should be subject to stricter regulation aimed at guaranteeing respect for human and workers’ rights.Footnote 4 In general, supply chain laws, like the one that entered into force in Germany on January 1, 2023 (BAFA 2023) or the one currently being drafted by the European Commission (European Commission 2022), which require large companies to investigate their supply chains, identify corporate social responsibility risks, and take appropriate action when risks to the environment or to human rights are discovered, are a powerful tool against violations of workers’ rights and should be applied to the digital labor market as well. Furthermore, trade unions for gig workers and digital laborers are needed so that workers can stand united for their rights and gain bargaining power when negotiating work conditions with big corporations.

Concerning consumer labor, transparency about data collection and processing should be guaranteed.Footnote 5 Supplying system providers with data that are not strictly necessary for the functioning of the system should be the result of a visible opt-in choice, not a default setting that users must opt out of. Users should be able to decide in an informed way whether they want to supply additional data in exchange for a service.Footnote 6 However, the current form of the terms of service of many digital services does not allow this kind of decision, since these terms are too long and hardly comprehensible (Patar 2019), and users are nudged into accepting them without reading them (Berreby 2017). It should be made clear, in a brief form understandable by the average user, which data are necessary for the functioning of the recommender system and whether the system providers intend to further process these data for training (or other) purposes, so that users can make an informed decision about accepting the service conditions. Indeed, while system providers must always comply with applicable law concerning transparency and data protection, and regulation should address the application scenarios that put user rights and societal wellbeing at risk, once a system is released it is ultimately up to individual users to decide whether the service is worth the data (possibly on top of a service fee) that are lawfully requested in exchange.

5 Conclusion

This chapter has shown that the rapid development of work in digital environments met a regulatory gap that allowed different forms of labor exploitation. This constitutes a structural fairness issue for recommender systems, since their functioning and market success depend on digital labor and on the unjust practices connected with it. To tackle this problem, digital labor must be acknowledged for what it is, and future regulations must counter the unfair treatment of digital laborers. This will require a structural change in the processes of value production and redistribution: remunerating waged workers with adequate pay, granting proper work conditions, and giving users a real choice when it comes to exchanging data for services.

Notes

- 1.

People reading magazines or watching TV are also exposed to advertising. However, what is specific to recommended advertising on online platforms is microtargeting. On media that do not allow microtargeted advertising, such as cable TV or magazines, it is still possible to target a specific group of customers – e.g., young readers of an indie music magazine, people interested in new furniture for their home reading an interior design magazine, or children watching cartoons on TV on Sunday morning – but all readers and viewers, including those who are not potential customers, will see the same advertising. This affects how the price of advertising space is determined: in the microtargeting scenario, the price can be related to the number of potential customers reached and to the actual clicks on the ad, while in the other case it depends, among other things, on the overall visibility of the medium and its prominence for the target audience.

- 2.

In general, and not limited to the recommender system scenario, this consideration does not apply to services that are strictly necessary to the user and/or have no alternatives, such as a paid software or network subscription needed to carry out a work task, and/or whose fee is compulsory, such as the broadcasting contribution for public radio and television in some countries. In these cases, users have to pay the fee even if they do not value the service. However, this is unlikely to be the case in many application fields of recommender systems and, at least nowadays, is definitely not the case in the field of entertainment.

- 3.

Of course, these considerations on the unfair redistribution of value, as well as those regarding poor working conditions, also apply to many other industries and were at the center of Marx’s critique of labor after the first industrial revolution (Fuchs and Fisher 2015). As mentioned above concerning consumer labor, the specificity of our case lies in the fact that the unfairly distributed surplus value comes from data production and processing.

- 4.

For challenges to the existing law due to virtual work see Haupt and Wollenschläger (2001).

- 5.

In the European legal framework, the General Data Protection Regulation (GDPR) addresses these issues. See also the contribution by Levina and Mattern in Section III of this volume for a discussion of transparency and data protection issues.

- 6.

References

Anwar, Mohammad Amir, and Mark Graham. 2019. Digital Labour at Economic Margins: African Workers and the Global Information Economy. Review of African Political Economy.

Aytes, Ayhan. 2013. Return of the Crowds. Mechanical Turk and Neoliberal States of Exception. In Digital Labor: The Internet as Playground and Factory, ed. Trebor Scholz. New York: Routledge.

BAFA. 2023. Information on the Supply Chain Act. https://www.bafa.de/DE/Lieferketten/Multilinguales_Angebot/multilinguales_angebot_node.html. Accessed 17 Mar 2023.

Balayn, Agathe, and Seda Gürses. 2021. Beyond Debiasing: Regulating AI and its inequalities. https://edri.org/wp-content/uploads/2021/09/EDRi_Beyond-Debiasing-Report_Online.pdf.

Barocas, Solon, Moritz Hardt, and Arvind Narayanan. 2019. Fairness and Machine Learning. fairmlbook.org.

Berreby, David. 2017. Click to agree with what? No one reads terms of service, studies confirm. https://www.theguardian.com/technology/2017/mar/03/terms-of-service-online-contracts-fine-print. Accessed 24 Sept 2022.

blog.youtube. 2022. 50 million. https://blog.youtube/news-and-events/50-million/. Accessed 4 Sept 2022.

Bouquin, Stephen. 2020. “Il n’y a pas d’automatisation sans micro-travail humain” – Grand entretien avec Antonio A. Casilli. Les Mondes du travail 24–25: 3–21.

Burke, Robin. 2017. Multisided Fairness for Recommendation. https://arxiv.org/pdf/1707.00093.

Casilli, Antonio A., and Julian Posada. 2019. The Platformization of Labor and Society. In Society and the Internet: How Networks of Information and Communication are Changing our Lives, ed. Mark Graham and William H. Dutton, 293–306. Oxford: Oxford University Press.

Dastin, Jeffrey. 2018. Amazon scraps secret AI recruiting tool that showed bias against women. https://www.reuters.com/article/us-amazon-com-jobs-automation-insight-idUSKCN1MK08G. Accessed 29 Aug 2022.

Deldjoo, Yashar, Vito Walter Anelli, Hamed Zamani, Alejandro Bellogín, and Tommaso Di Noia. 2021. A Flexible Framework for Evaluating User and Item Fairness in Recommender Systems. User Modeling and User-Adapted Interaction 31 (3): 457–511. https://doi.org/10.1007/s11257-020-09285-1.

Dixon, S. 2022a. Facebook: Average Revenue per User Region 2022. Statista. https://www.statista.com/statistics/251328/facebooks-average-revenue-per-user-by-region/. Accessed 6 Sept 2022.

———. 2022b. Meta ARPU 2021. Statista. https://www.statista.com/statistics/234056/facebooks-average-advertising-revenue-per-user/. Accessed 6 Sept 2022.

Doshi, Neerja. 2018. Recommendation systems – Models and evaluation – Towards data science. https://towardsdatascience.com/recommendation-systems-models-and-evaluation-84944a84fb8e. Accessed 4 Sept 2022.

Dutta, Soumyadip. 2022. Instagram paid subscription: Price, Who is eligible, how to enable, how to subscribe. https://www.techbloat.com/instagram-paid-subscription-price-who-is-eligible-how-to-enable-how-to-subscribe.html. Accessed 4 Sept 2022.

European Commission. 2022. EUR-Lex – 52022PC0071 – EN – EUR-Lex. https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A52022PC0071. Accessed 17 Mar 2023.

Eyal, Nir, and Ryan Hoover. 2019. Hooked: How to Build Habit-Forming Products. New York: Portfolio/Penguin.

Ferraris, Maurizio. 2018. Salario di mobilitazione: un’idea per i nuovi poveri dell’era digitale. https://www.agendadigitale.eu/cultura-digitale/ferraris-salario-di-mobilitazione-unidea-per-i-nuovi-poveri-dellera-digitale/. Accessed 9 Sept 2022.

———. 2021. Webfare e libertà, se il “consumo” produce valore: ecco come e perché ricompensarlo. https://www.agendadigitale.eu/cultura-digitale/webfare-e-liberta-se-il-consumo-produce-valore-ecco-come-e-perche-ricompensarlo/. Accessed 9 Sept 2022.

Feygin, Yakov, Brent Hecht, Matthew Prewitt, Hanlin Li, Nicholas Vincent, Chirag Lala, and Luisa Scarcella. 2019. A Data Dividend that Works: Steps Toward Building an Equitable Data Economy. https://www.berggruen.org/ideas/articles/a-data-dividend-that-works-steps-toward-building-an-equitable-data-economy/.

Fisher, Eran. 2020. Audience Labour on Social Media: Learning from Sponsored Stories.

Fuchs, Christian, and Eran Fisher, eds. 2015. Reconsidering Value and Labour in the Digital Age. London: Palgrave Macmillan.

Gabriel, Markus. 2020. Fiktionen. Berlin: Suhrkamp Verlag.

Giovanola, Benedetta, and Simona Tiribelli. 2022. Beyond Bias and Discrimination: Redefining the AI Ethics Principle of Fairness in Healthcare Machine-Learning Algorithms. AI & Society: 1–15. https://doi.org/10.1007/s00146-022-01455-6.

Gray, Mary, and Siddharth Suri. 2019. Ghost Work: How Amazon, Google, and Uber are Creating a New Global Underclass. Boston: Houghton Mifflin Harcourt Publishing.

Grgić-Hlača, Nina, Muhammad Bilal Zafar, Krishna P. Gummadi, and Adrian Weller. 2018. Beyond Distributive Fairness in Algorithmic Decision Making: Feature Selection for Procedurally Fair Learning. Proceedings of the AAAI Conference on Artificial Intelligence 32 (1). https://doi.org/10.1609/aaai.v32i1.11296.

Haupt, Susanne, and Michael Wollenschläger. 2001. Virtueller Arbeitsplatz – Scheinselbständigkeit bei einer modernen Arbeitsorganisationsform. NZA 6: 289–296.

Hutchinson, Andrew. 2020. Would people pay to use social media platforms to avoid data-sharing? [Infographic]. https://www.socialmediatoday.com/news/would-people-pay-to-use-social-media-platforms-to-avoid-data-sharing-info/575956/. Accessed 6 Sept 2022.

Irani, Lilly. 2015. Justice for “data janitors” – Public books. https://www.publicbooks.org/justice-for-data-janitors/. Accessed 7 Sept 2022.

Jarrett, Kylie. 2015. Feminism, Labour and Digital Media: The Digital Housewife. London: Routledge.

Jiménez González, Aitor. 2022. Law, Code and Exploitation: How Corporations Regulate the Working Conditions of the Digital Proletariat. Critical Sociology 48 (2): 361–373. https://doi.org/10.1177/08969205211028964.

Kaesling, Katharina. 2021. § 327 BGB Anwendungsbereich. In jurisPK-BGB 9. Aufl., ed. Herberger, Martinek, Rüßmann, Weth, and Würdinger.

Kasirzadeh, Atoosa. 2022. Algorithmic Fairness and Structural Injustice: Insights from Feminist Political Philosophy. In AIES ’22: Proceedings of the 2022 AAAI/ACM Conference on AI, Ethics, and Society, Oxford, United Kingdom, August 1–3, 2022, 349–356. New York: Association for Computing Machinery. https://doi.org/10.1145/3514094.3534188.

Koren, Yehuda, and Robert Bell. 2011. Advances in Collaborative Filtering. In Recommender Systems Handbook, ed. Francesco Ricci, Lior Rokach, Bracha Shapira, and Paul B. Kantor, 145–186. Boston: Springer.

Latte, Simona. 2021. Personal Data in Exchange for Digital Content and Digital Services: Directive 2019/770/EU. European Journal of Privacy Law & Technologies.

Lee, Michelle Seng Ah, and Luciano Floridi. 2021. Algorithmic Fairness in Mortgage Lending: From Absolute Conditions to Relational Trade-offs. Minds and Machines 31 (1): 165–191. https://doi.org/10.1007/s11023-020-09529-4.

Maxwell, Richard, ed. 2016. The Routledge Companion to Labor and Media. New York/London: Routledge.

Mehrabi, Ninareh, Fred Morstatter, Nripsuta Saxena, Kristina Lerman, and Aram Galstyan. 2021. A Survey on Bias and Fairness in Machine Learning. ACM Computing Surveys 54 (6): 1–35. https://doi.org/10.1145/3457607.

Milano, Silvia, Mariarosaria Taddeo, and Luciano Floridi. 2020. Recommender Systems and Their Ethical Challenges. AI & Society 35 (4): 957–967. https://doi.org/10.1007/s00146-020-00950-y.

———. 2021. Ethical Aspects of Multi-stakeholder Recommendation Systems. The Information Society 37 (1): 35–45. https://doi.org/10.1080/01972243.2020.1832636.

Milland, Kristy. 2019. From bottom to top: How Amazon mechanical Turk disrupts employment as a whole – Brookfield Institute for Innovation + Entrepreneurship. https://brookfieldinstitute.ca/from-bottom-to-top-how-amazon-mechanical-turk-disrupts-employment-as-a-whole/. Accessed 7 Sept 2022.

Mohamed, Shakir, Marie-Therese Pong, and William Isaac. 2020. Decolonial AI: Decolonial Theory as Sociotechnical Foresight in Artificial Intelligence. Philosophy & Technology 33 (4): 659–684. https://doi.org/10.1007/s13347-020-00405-8.

Newman, Andy. 2019. I found work on an Amazon website. I made 97 cents an hour. https://www.nytimes.com/interactive/2019/11/15/nyregion/amazon-mechanical-turk.html. Accessed 7 Sept 2022.

Newton, Casey. 2019. The secret lives of Facebook moderators in America. https://www.theverge.com/2019/2/25/18229714/cognizant-facebook-content-moderator-interviews-trauma-working-conditions-arizona. Accessed 1 Sept 2022.

OHCHR. 1976. International Covenant on economic, social and cultural rights. https://www.ohchr.org/en/instruments-mechanisms/instruments/international-covenant-economic-social-and-cultural-rights. Accessed 9 Sept 2022.

Patar, Dustin. 2019. Most online ‘terms of service’ are incomprehensible to adults, study finds. https://www.vice.com/en/article/xwbg7j/online-contract-terms-of-service-are-incomprehensible-to-adults-study-finds. Accessed 24 Sept 2022.

Rani, Uma, and Marianne Furrer. 2021. Digital Labour Platforms and New Forms of Flexible Work in Developing Countries: Algorithmic Management of Work and Workers. Competition & Change 25 (2): 212–236. https://doi.org/10.1177/1024529420905187.

Sawafta, Sara. 2019. The working conditions of digital workers in Amazon mechanical Turk. https://medium.com/@ssara1977/the-working-conditions-of-digital-workers-in-amazon-mechanical-turk-dedb2022539a. Accessed 7 Sept 2022.

Scholz, Trebor, ed. 2013. Digital Labor: The Internet as Playground and Factory. New York: Routledge.

Sindermann, Cornelia, Daria J. Kuss, Melina A. Throuvala, Mark D. Griffiths, and Christian Montag. 2020. Should We Pay for Our Social Media/Messenger Applications? Preliminary Data on the Acceptance of an Alternative to the Current Prevailing Data Business Model. Frontiers in Psychology 11: 1415. https://doi.org/10.3389/fpsyg.2020.01415.

Solans, David, Francesco Fabbri, Caterina Calsamiglia, Carlos Castillo, and Francesco Bonchi. 2021. Comparing Equity and Effectiveness of Different Algorithms in an Application for the Room Rental Market. In AIES ’21: Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society, Virtual Event, USA, May 19–21, 2021, 978–988. New York: Association for Computing Machinery. https://doi.org/10.1145/3461702.3462600.

Terranova, Tiziana. 2000. Free Labor: Producing Culture for the Digital Economy. Social Text 18 (2): 33–58.

The Economist. 2018. Should Internet Firms Pay for the Data Users Currently Give Away? The Economist, January 11.

TikTok. 2022. Exploring New Ways for Creators to Build Their Community and be Rewarded with LIVE Subscription. TikTok, May 23.

Tinder Newsroom. 2022. Powering Tinder® – The method behind our matching. https://www.tinderpressroom.com/powering-tinder-r-the-method-behind-our-matching/. Accessed 24 Sept 2022.

Tsukayama, Hayley. 2020. Why getting paid for your data is a bad deal. https://www.eff.org/it/deeplinks/2020/10/why-getting-paid-your-data-bad-deal. Accessed 9 Sept 2022.

United Nations. 1948. Universal Declaration of Human Rights. United Nations. https://www.un.org/en/about-us/universal-declaration-of-human-rights. Accessed 9 Sept 2022.

Vercellone, Carlo. 2020. Les plateformes de la gratuité marchande et la controverse autour du Free Digital Labor: une nouvelle forme d’exploitation? Revue ouverte d’ingénierie des systèmes d’information 1 (2). https://doi.org/10.21494/ISTE.OP.2020.0502.

Verma, Sahil, and Julia Rubin. 2018. Fairness Definitions Explained. In FairWare 2018: 2018 ACM/IEEE International Workshop on Software Fairness, co-located with ICSE ’18, Gothenburg, Sweden, May 29, 2018, 1–7. Los Alamitos: IEEE Computer Society. https://doi.org/10.1145/3194770.3194776.

Young, Iris Marion. 2011. Responsibility for Justice. New York/Oxford: Oxford University Press.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Cite this chapter

Genovesi, S. (2023). Digital Labor as a Structural Fairness Issue in Recommender Systems. In: Genovesi, S., Kaesling, K., Robbins, S. (eds) Recommender Systems: Legal and Ethical Issues. The International Library of Ethics, Law and Technology, vol 40. Springer, Cham. https://doi.org/10.1007/978-3-031-34804-4_5

DOI: https://doi.org/10.1007/978-3-031-34804-4_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-34803-7

Online ISBN: 978-3-031-34804-4

eBook Packages: Religion and Philosophy, Philosophy and Religion (R0)