Abstract

Ethical analysis of the impact of digital technologies on the future of work needs to take into account the theoretical diversity of ethics. After reviewing prominent existing approaches to ethics (utilitarianism, deontological ethics, virtue ethics), this chapter suggests the need for an agent-centred ethical perspective based on goods, norms, and virtues for the evaluation of ethical issues related to digital technologies and their impact on the future of work. Different examples illustrate the merits of this approach, helping to untangle complex issues concerning the relationship between the nature and scope of digital technologies, regulatory needs within the new social and technological context, and the intentions and attitudes of workers towards their work, their personal flourishing, and their contribution to the good of society.


10.1 Introduction

The Fourth Industrial Revolution (Schwab, 2016; Schwab & Davis, 2018) generated an intense debate on the way that digital technologies should be used and on the tools of ethical analysis that are essential in defining whether or not these technologies contribute to human flourishing and to the good of society (Jasanoff, 2016; Brusoni & Vaccaro, 2017; Floridi et al., 2018; Bertolaso & Rocchi, 2022). Mobile computing, social media, big data analytics, cloud computing, and the Internet of Things can be regarded as examples of digital technologies or, more accurately, as third platform technologies (as categorised by the International Data Corporation in 2015, see also Lynn et al., 2020).

Digital technologies generate a new set of ethical questions, which will be explored in this chapter, with specific reference to the impact that digital technologies have on the future of work, especially regarding their contribution to workers’ personal flourishing and to the good of society. Academic research has been slow to systematically address the question of ethics in relation to the future of work. Extensive reviews of literature in this area, such as the one presented by Balliester and Elsheikhi (2018), do not even mention the term “ethics”.

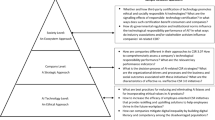

A factor that contributes to the scarcity of academic research in the space of ethics and the future of work concerns the tendency to treat the ethical perspective as a stopgap, merely a way of addressing the limitations of regulatory efforts. This chapter aims to take a first step towards addressing this research gap by suggesting an agent-centred perspective which can be used to orient ethical judgement regarding the design and use of specific digital technologies in new and redesigned jobs. The agent-centred perspective considers (1) how digital technologies contribute to human flourishing and the good of society; (2) the way that norms are set so that they facilitate the realisation of these goods in this renewed social and technological context; and (3) the human traits that enable people to flourish and contribute to the good of society through their work.

The remainder of this chapter proceeds as follows. Section 10.2 presents an overview regarding the question of ethics and briefly outlines the approach to ethics that best addresses the new context of human work. Section 10.3 presents an agent-centred perspective, considering the goods, norms, and virtues necessary to evaluate the impact of digital technologies on the future of work. The final section offers some conclusions, opening avenues for further research.

10.2 What Is Ethics? Exploring Ethical Approaches and Their Capability to Analyse the Impact of Digital Technologies on the Future of Work

Ethics is commonly thought of as a way of determining right from wrong. This is not inaccurate, but it is not the whole story. Ethics, like any other interesting phenomenon, can only be understood within a theoretical framework. And like most other disciplines, the field of ethics is rife with theoretical diversity. While a review of all ethical theories is beyond the scope of this chapter, a broad overview of some of the major schools of thought will be helpful before considering which ethical perspective(s) would be especially useful for analysing ethical problems related to the impact of digital technologies on the future of work. The discipline of ethics is typically seen as divided among three major schools: utilitarianism, deontology, and virtue ethics (Baron et al., 1997). There is significant diversity within each of these perspectives; however, this schema is a good starting point for our purposes. There are other significant approaches to ethics, but this chapter considers only these three frameworks.

Utilitarianism is one form of consequentialism, the latter being a broad approach to ethics that focuses solely on promoting good outcomes (Parfit, 1984). Utilitarianism focuses on one specific type of good outcome, that is, the happiness or wellbeing of affected persons. It is also impartial in that it treats the happiness of each person as equally relevant when determining which action is right (Hooker, 2000). While this perspective contrasts with much “common sense morality” (Parfit, 1984, p. 40), in some ways it offers an intuitively plausible way of thinking about ethics. Ethics is about promoting happiness, performing actions that result in the most beneficial consequences for others.

But utilitarianism’s focus on impartiality also presents significant difficulties, even on its own terms. Many of the things that most make us happy involve partiality: relationships with friends, for example, or a mother’s preferential love for her child (Parfit, 1984). Likewise, intentions matter. We care about more than the benefits that flow from a friendship; we also care about the reasons why a friend acts as she does. In other words, relationships and intentions matter more than utilitarianism suggests, at least in its standard formulations (Parfit, 1984). Moreover, it is very difficult to estimate the consequences of actions, and the notion of happiness may not be determinate enough to provide concrete guidance for action (MacIntyre, 2007). Because of these problems, it makes sense to look at the other ethical frameworks.

A second prominent ethical theory, commonly seen as the main counterpart of utilitarianism, is deontology, usually associated with the work of Immanuel Kant. Deontology is focused on identifying correct rules or principles, according to which actions are morally right. In the Groundwork of the Metaphysics of Morals, Kant (2012) famously introduced a series of principles, which he called categorical imperatives and which he argued are equivalent formulations of the supreme principle of morality. Perhaps the most famous of these is Kant’s Formula of Humanity: “so act that you use humanity, in your own person as well as in the person of any other, always at the same time as an end, never merely as a means” (Kant, 2012, p. 41, italics removed). Unlike utilitarianism, the Formula of Humanity clearly focuses on the value of specific relationships with specific persons. It does this by refraining from aggregating ethical value, that is, by not trading off harms to some for greater benefits to others. Instead, it requires people to treat others with respect and to avoid manipulating or intentionally harming others. While this principle provides some concrete guidance as to how one should act, it leaves the issue of what sort of behaviour is incompatible with humanity at an intuitive level (MacIntyre, 2007).

This problem of indeterminacy is even more evident in the Formula of Universal Law, “I ought never to proceed except in such a way that I could also will that my maxim should become a universal law” (Kant, 2012, p. 17). This principle is supposed to provide a test to determine whether an action is right or wrong. For example, someone considering whether it would be right to lie to get a loan with no intention of actually repaying it could apply this test. Doing so would indicate that if it became a universal practice that everyone lies whenever some benefit could be gained, then social norms concerning promising would break down. As such, a lying promise would fail this test, indicating that it would be unethical to do (see Kant, 2012). But in the case of many other actions, the outcome is not so clear. It seems possible that all sorts of horrible actions could be universal laws. Could racism, for example, be made a universal law? Plausibly, it could. The world would be a much worse place and it would be especially difficult for minorities, but it would not obviously violate Kant’s test. And there may be many other actions like this, meaning that Kant’s principles are unlikely to provide a sufficient way to deal with many ethical problems (Scanlon, 2011). As such, it is worth considering virtue ethics as an alternative to these two ethical theories.

Without ignoring the question of which action is right or wrong (see Hursthouse, 1999), virtue ethics focuses on a more fundamental question: What does it mean to live a flourishing life? As such, it can be considered an “agent-centred” approach (Annas, 1995), focused on living and acting well. Human flourishing involves the fulfilment of human capacities in a coherent manner throughout the course of a unified life. It considers various human capacities, emotional, intellectual, social, creative, etc., and the various social relationships, norms, values, and attitudes that are necessary to fulfil these capacities. Here, the focus of ethics is expanded to consider the role of norms and virtues in facilitating human flourishing. As such, organisational contexts, involving various forms of work, are especially important (Sison & Fontrodona, 2012). The type of work that one does and the manner in which it is performed may have a substantial impact on one’s potential to live a flourishing life, especially if one’s work benefits other stakeholders and enables one to develop one’s capacities.

Thus, virtue ethics integrates a concern with rules and good consequences, typical of deontology and utilitarianism, into a broader analysis focused on the question of the good of the acting person (MacIntyre, 1999; Rhonheimer, 2011), asking what goods are at stake within specific social contexts and how these goods can be integrated into a unified life (MacIntyre, 2007). Considering this perspective, an analysis of digital technologies is crucial. Indeed, digital technologies are not just new tools, whose use can be analysed in the same way as we analyse the proper or improper use of other kinds of objects. Digital technologies “transform the surrounding environment and create new ontological spaces” (Russo, 2018, p. 656), constituting a new interface with reality (Capone et al., forthcoming).

From a virtue ethics perspective, we can ask a number of questions about these new technologies all linked with the issue of human flourishing. How do new technologies impact employees? Do they promote their emotional, intellectual, and professional development? How do they affect relationships at work? Do they harm employees’ abilities to form meaningful relationships that contribute to flourishing lives? Likewise, how do these new technologies impact society? Do they enable more efficient and effective forms of work that benefit a range of stakeholders? Finally, what habits and virtues do these new technologies promote or inhibit? Because of its more comprehensive focus on human flourishing and the common good, virtue ethics allows for a more fine-grained analysis of new digital technologies. As such, it is an especially fruitful lens with which to consider them.

10.3 Towards an Agent-Centred Perspective for the Ethical Analysis of Digital Technologies in the Future of Work

An agent-centred ethical approach such as virtue ethics offers the necessary tools for an ethical analysis of the complex issues surrounding the impact of digital technologies on the future of work. Indeed, the introduction of digital technologies requires more than an analysis of the goodness of the outcome of applying a new technology to a specific profession (the utilitarian approach, with its emphasis on goods), and more than an analysis of how a specific technology complies with existing norms or respects given principles (the deontological approach, with its emphasis on norms). There is a need for a more fine-grained consideration of intertwined issues surrounding these new technologies, focused on specific goods that are at stake within new modes of work. We can follow Aristotle (2000) in understanding the “goods” as the objects we desire in themselves (see Note 1). In an agent-centred ethical perspective, we can consider human flourishing (on an individual level) and the common good (on a social level) to be the ultimate goods that we seek (MacIntyre, 1999), and consider the norms and virtues that facilitate the achievement of these goods in the context of new modes of work (see Note 2).

A brief example may help to illustrate this. In 2019, a group of Microsoft workers published a letter to Microsoft’s CEO and President to express their criticism of the company’s decision to sell the HoloLens technology to the U.S. Army for use in combat (Lee, 2019; see Note 3). The Microsoft workers argued that they did not want their work to be at the service of “weapons development”, since they viewed their work for Microsoft as a way of empowering people and organisations (see Note 4). Microsoft HoloLens is a mixed reality technology that combines different technologies (eye-tracking, hand-tracking, holographic technology, spatial mapping, and many more) in head-mounted smart glasses that enable the user to display information, create and interact with holograms, construct virtual reality settings, and much more. Applications of this technology can be found in medicine, education, manufacturing, and, in the case that drew criticism from Microsoft workers, military settings.

It may be possible to evaluate the benefits of applying the HoloLens in military contexts, compare them to the harms they are likely to produce (as in a utilitarian perspective), and end up with a (particularly complex) calculus of the impact of this application on overall wellbeing. But even if this sort of calculation is possible (which may not be the case, since accounting for all the possible long-term benefits and harms would be extremely difficult), it would be likely to leave the Microsoft workers unsatisfied. Even if the benefits of military applications of HoloLens would, according to some scale, outweigh the harm, a further question remains: would these workers be justified in contributing to this harm just because it may lead to beneficial outcomes? In other words, does the mere fact of aggregate benefits absolve the Microsoft workers, leaving them without “dirty hands”? And, leaving aside responsibilities, the question of whether this kind of work is still meaningful for the workers arises too.

Only a consideration of this technology in the context of the workers’ particular life narratives and characters can provide a wider perspective for the ethical consideration of this problem. Goods are not only external, tangible, and measurable components of a morally neutral conception of wellbeing as in the utilitarian perspective; “internal goods” are also important. These are goods that are intrinsically valuable and morally salient (MacIntyre, 2007). As Moore summarises, “internal goods are of two kinds. First, there is the good product or, we may add in an organizational context, the good service. […] Second, however, there is the internal good which involves the perfection of the practitioners engaged in the craft or practice” (Moore, 2017, p. 57). In the context of this example, the relevant internal goods include the excellence of the technology produced, which the workers view as linked directly with beneficial results for individuals and organisations, and the excellence of the life of a Microsoft worker, someone who can view herself as doing work that empowers others. This understanding of work plays an essential role in motivating these workers, and, arguably, the technologies that they have produced would have been impossible absent this morally salient conception of work at Microsoft. Thus, beyond a consideration of consequences, it is necessary to consider whether military applications of HoloLens are consistent with the ideals and virtues of the workers who have developed it, since a focus solely on outcomes is not sufficient to account for the complex system of intentions, actions, and circumstances that surround the design, development, and production of this particular digital technology. More generally, it is essential to ask whether digital technologies are creating spaces and opportunities for workers to flourish as human beings capable of contributing to the good of society.

At the level of norms, Kant’s categorical imperatives offer plausible general principles. However, there is a large gap between these principles and specific norms that could inform decision making. For example, in the famous “Moral Machine” experiment (Awad et al., 2018), people were asked to decide on different scenarios encountered by a self-driving car with a brake failure: in the event of an unavoidable collision, should the car harm a passenger or a pedestrian? Does it matter if pedestrians are elderly? What if they are workers or homeless? Thus, the principles of fairness and beneficence commonly associated with Kantian ethics (Gabriel, 2020) need other criteria to solve this kind of dilemma. In concrete real-life scenarios, there is a need for the adoption of new norms that can capture the challenges of the renewed workplace. These norms should be informed by experience and historical developments. This highlights the role of regulation in informing ethical decision making. This is particularly evident in the case of Uber, one of the best-known examples of the new types of digitally enabled work (known as “platform work”; see Deganis et al., 2021, and Note 5), where tensions between regulatory efforts, workers’ rights, and the economic interests of the platform have been in the headlines many times (e.g., Ram, 2018; Scheiber, 2021). In this context, and in others involving new forms of work, there is a need to consider new regulations that ensure that all relevant stakeholders’ interests are accounted for. More generally, concrete norms are needed to protect the relevant goods at stake in these new work contexts, and such norms can only be developed through political debate and deliberation. Likewise, virtues are needed to ensure that norms are implemented properly.

A virtue is a human trait that enables a person to flourish and contribute to the good of society, enabling individuals to act well (Aristotle, 2000; Melé, 2009). Recent research has sought to relocate the tradition of virtues in the renewed technological context (Vallor, 2016; Rocchi, 2019), and there are publications which address the need to consider virtues when assessing specific digital technologies (e.g., Grodzinsky, 2017; Gal et al., 2020) or new modes of work enabled by technology (Rocchi & Bernacchio, 2022). The Cambridge Analytica scandal led many to consider how the extremely good potential of digital technologies (a social media platform and big data analytics) and the existence of norms that would protect consumers’ privacy are still not enough to guarantee the achievement of societal good. While the Cambridge Analytica whistle-blower disclosed this situation and made people aware of the misuse of data, showing the virtues of justice and courage, a myriad of similar situations on a smaller scale still expose our data to different kinds of violations, and the development of the virtues in those responsible for this data would fill the gap in regulation enforcement (or, sometimes, even regulation gaps). For example, the exercise of the virtue of justice, defined as the habit of giving each person what is due to her, would help companies make appropriate use of the information they source from their clients. Thus, virtues enable individuals within organisations not only to choose effective means within the constraints of a given regulatory framework but, more importantly, to ensure that regulations are implemented so that they support work that contributes to workers’ personal flourishing and to the good of society. While acknowledging the importance of external goods as tangible outcomes of work (e.g., salary, social status, reputation), the virtues enable workers to consciously seek internal goods, that is, excellent work that contributes positively to society.

In conclusion, the agent-centred approach presented here (typical of virtue ethics) would simultaneously consider the goodness of the outcome (goods), the norms necessary to avoid harm and facilitate cooperation for the good of society (norms), and the habits of those involved in the creation (and not just in the use) of digital technologies (virtues). It is important to clarify that this perspective does not intend to provide ready-made solutions, but rather provides the intellectual tools to think about these issues in greater depth, appreciating the impact of digital technologies on the future of work.

10.4 Conclusions and Future Research

This chapter presents an overview of different ethical approaches and highlights how an agent-centred approach to ethics, based on goods, norms, and virtues, is the most suitable for the ethical analysis of the impact of digital technologies on the future of work.

Further research can enrich the framework on each of the three levels. As for the goods, parameters to evaluate the goodness of a digital technology, including the goods of human flourishing and the common good, should be defined more clearly in this new context of digital work, by establishing metrics that can account for goodness within a company’s decision-making process. At the level of norms, future studies could analyse incentives that encourage virtuous behaviours and discourage societal harm and, more generally, the regulations that can promote human flourishing in this new context. The development of concrete norms deriving from general principles within the context of decision-making algorithms in AI-based technologies constitutes another area of further research at the level of norms. Finally, at the level of virtues, research could explore how the cultivation of specific virtues favours workers’ human flourishing and enhances their willingness to contribute meaningfully to the good of society. More generally, a reconsideration of a theory of action that takes into account the object, end, and circumstances of the action performed within the renewed technological and organisational context would be welcome.

Notes

- 1. Aranzadi (2011) offers a simple explanation of this definition.

- 2.

- 3. For recent developments of this deal, see Browning (2021).

- 4. The original post on Twitter can be found here: https://twitter.com/MsWorkers4/status/1099066343523930112.

- 5. See Chap. 4 for a more detailed discussion.

References

Annas, J. (1995). The morality of happiness (1st ed.). Oxford University Press.

Aranzadi, J. (2011). The possibilities of the acting person within an institutional framework: Goods, norms, and virtues. Journal of Business Ethics, 99(1), 87–100.

Aristotle. (2000). Nicomachean ethics (Cambridge Texts in the History of Philosophy). Cambridge University Press.

Awad, E., Dsouza, S., Kim, R., Schulz, J., Henrich, J., Shariff, A., Bonnefon, J.-F., & Rahwan, I. (2018). The moral machine experiment. Nature, 563(7729), 59–64.

Balliester, T., & Elsheikhi, A. (2018). The future of work: A literature review. International Labour Organization Working Papers, 29.

Baron, M., Pettit, P., & Slote, M. (1997). Three methods of ethics: A debate (Great Debates in Philosophy). Blackwell.

Bertolaso, M., & Rocchi, M. (2022). Specifically human: Human work and care in the age of machines. Business Ethics: A European Review, 31(3), 888–898.

Browning, K. (2021). Microsoft will make augmented reality headsets for the Army in a $21.9 billion deal. The New York Times, March 31. https://www.nytimes.com/2021/03/31/business/microsoft-army-ar.html

Brusoni, S., & Vaccaro, A. (2017). Ethics, technology and organizational innovation. Journal of Business Ethics, 143(2), 223–226.

Capone, L., Rocchi, M., & Bertolaso, M. (forthcoming). Rethinking ‘digital’: A genealogical enquiry into the meaning of digital and its impact on individuals and society. AI & Society.

Deganis, I., Tagashira, M., & Yang, W. (2021). Digitally enabled new forms of work and policy implications for labour regulation frameworks and social protection systems. United Nations Department of Economic and Social Affairs, Policy Brief 113.

Floridi, L., Cowls, J., Beltrametti, M., Chatila, R., Chazerand, P., Dignum, V., Luetge, C., et al. (2018). AI4People—An ethical framework for a good AI society: Opportunities, risks, principles, and recommendations. Minds and Machines, November.

Gabriel, I. (2020). Artificial Intelligence, values, and alignment. Minds and Machines, 30(3), 411–437.

Gal, U., Jensen, T. B., & Stein, M.-K. (2020). Breaking the vicious cycle of algorithmic management: A virtue ethics approach to people analytics. Information and Organization, 30(2), 100301.

Grodzinsky, F. S. (2017). Why Big Data needs the virtues. In T. M. Powers (Ed.), Philosophy and computing (Philosophical Studies Series) (pp. 221–234). Springer International Publishing.

Hooker, B. (2000). Ideal code, real world: A rule-consequentialist theory of morality. Oxford University Press.

Hursthouse, R. (1999). On virtue ethics. Oxford University Press.

Jasanoff, S. (2016). The ethics of invention: Technology and the human future (The Norton Global Ethics Series) (1st ed.). W.W. Norton & Company.

Kant, I. (2012). Groundwork of the metaphysics of morals (Cambridge Texts in the History of Philosophy) (Rev. ed.). Cambridge University Press.

Lee, D. (2019). Microsoft staff: Do not use HoloLens for war. BBC News, February 22. https://www.bbc.com/news/technology-47339774

Lynn, T., Mooney, J. G., Rosati, P., & Fox, G. (Eds.). (2020). Measuring the business value of cloud computing (Palgrave Studies in Digital Business and Enabling Technologies). Palgrave Macmillan.

MacIntyre, A. C. (1992). Plain persons and moral philosophy: Rules, virtues and goods. The American Catholic Philosophical Quarterly, 66(1), 3–19.

MacIntyre, A. C. (1999). Dependent rational animals: Why human beings need the virtues. Open Court.

MacIntyre, A. C. (2007). After virtue: A study in moral theory (3rd ed.). University of Notre Dame Press.

Melé, D. (2005). Ethical education in accounting: Integrating rules, values and virtues. Journal of Business Ethics, 57(1), 97–109.

Melé, D. (2009). Business ethics in action: Seeking human excellence in organizations. Palgrave Macmillan.

Moore, G. (2017). Virtue at work: Ethics for individuals, managers and organizations. Oxford University Press.

Parfit, D. (1984). Reasons and persons. Clarendon Press.

Ram, A. (2018). Uber faces tough new regulations in London. Financial Times, February 15. https://www.ft.com/content/3796d1e0-124c-11e8-8cb6-b9ccc4c4dbbb

Rhonheimer, M. (2011). The perspective of morality: Philosophical foundations of thomistic virtue ethics. Catholic University of America Press.

Rocchi, M. (2019). Technomoral financial agents: The future of ethics in the fintech era. Finance and Common Good, 46–47, 25–42.

Rocchi, M., & Bernacchio, C. (2022). The virtues of Covid-19 pandemic: How working from home can make us the best (or the worst) version of ourselves. Business and Society Review, 127(3), 685–700.

Russo, F. (2018). Digital technologies, ethical questions, and the need of an informational framework. Philosophy & Technology, 31(4), 655–667.

Scanlon, T. M. (2011). How I am not a Kantian. In D. Parfit (Ed.), On what matters: Volume two (pp. 116–139). Oxford University Press.

Scheiber, N. (2021). Uber and Lyft ramp up legislative efforts to shield business model. The New York Times, June 9. https://www.nytimes.com/2021/06/09/business/economy/uber-lyft-gig-workers-new-york.html

Schwab, K. (2016). The fourth industrial revolution (1st ed.). Crown Business.

Schwab, K., & Davis, N. (2018). Shaping the future of the fourth industrial revolution: A guide to building a better world (1st ed.). Currency.

Sison, A., & Fontrodona, J. (2012). The common good of the firm in the Aristotelian-Thomistic tradition. Business Ethics Quarterly, 22(2), 211–246.

Vallor, S. (2016). Technology and the virtues: A philosophical guide to a future worth wanting. Oxford University Press.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Cite this chapter

Rocchi, M., Bernacchio, C. (2023). Digital Technologies and the Future of Work: An Agent-Centred Ethical Perspective Based on Goods, Norms, and Virtues. In: Lynn, T., Rosati, P., Conway, E., van der Werff, L. (eds) The Future of Work. Palgrave Studies in Digital Business & Enabling Technologies. Palgrave Macmillan, Cham. https://doi.org/10.1007/978-3-031-31494-0_10

DOI: https://doi.org/10.1007/978-3-031-31494-0_10

Publisher Name: Palgrave Macmillan, Cham

Print ISBN: 978-3-031-31493-3

Online ISBN: 978-3-031-31494-0

eBook Packages: Business and Management, Business and Management (R0)