Abstract

The potential of web-based peer assessment platforms to aid instruction and learning has been well documented in the literature. Evidence suggests that the use of web-based peer assessment is beneficial for both teachers and students in several respects, but some findings also suggest that it might present a number of challenges. The aim of this chapter is to examine web-based peer assessment platforms in terms of the features that can potentially affect student learning, feedback exchange, and social interaction. A total of 17 eligible web-based peer assessment platforms were systematically reviewed against nine peer assessment design elements. Our results suggest that these platforms offer features to facilitate peer assessment in varied disciplines and in multiple ways, which have the potential to affect learning, feedback, and social interaction. However, although these platforms offer technologically sound tools to aid instruction, we recommend extensive training for both teachers and students to make the most of the features embedded in them.

The present review was objectively conducted to ascertain the features of web-based platforms that support student learning, feedback, and social interaction. We did not receive any remuneration nor any compensation from the web-based platforms for the promotion of their products.

1 Introduction

The use of web-based peer assessment has increased markedly in the last couple of decades due to its benefits for both instructors and students. Among these benefits, it is usually argued that web-based peer assessment lessens instructors’ workload by automatically managing peer assessment data (e.g., ratings, feedback) (Bouzidi & Jaillet, 2009), helps in conducting formative assessment (Søndergaard & Mulder, 2012), and can help develop students’ motivation (Lai & Hwang, 2015), critical thinking (Wang et al., 2017), and positive affect (Chen, 2016). Nevertheless, there are also challenges to the implementation of web-based peer assessment. Some students perceive that web-based peer assessment is unfair (Kaufman & Schunn, 2011), academics find it challenging to create online learning environments (Adachi et al., 2018b), and some features of these web-based peer assessment platforms might limit the interpersonal and collaborative nature of peer assessment (Panadero, 2016; van Gennip et al., 2009) since some platforms are not capable of transmitting the non-verbal cues needed for interaction (Phielix et al., 2010). Thus, as much as web-based peer assessment has great potential, it also brings challenges, and a key aspect for the success or failure of its implementation is the set of features offered by the web-based peer assessment platforms.

Because of this, our aim is to evaluate the characteristics and features of web-based peer assessment platforms to explore whether they facilitate student learning, feedback, and social interaction. A number of reviews have already compared and contrasted the different features and tools embedded in different web-based peer assessment platforms (e.g., Babik et al., 2016; Luxton-Reilly, 2009; Søndergaard & Mulder, 2012). However, we believe that there is a need to look at these platforms through an educational assessment lens, as previous reviews have examined the platforms through a computer science education or software engineering education lens. In doing this, we can determine their potential benefits and potential constraints in instruction and student interaction. With this in mind, we used the peer assessment design elements framework (i.e., Adachi et al., 2018a) to determine how features of these platforms can affect three variables: students’ learning, the feedback provided through the platform, and the dynamics of student interaction online.

1.1 Web-Based Peer Assessment Platforms

The mode in which peer assessment is carried out is one of the most important decisions that teachers and instructors have to take if they want to implement peer assessment (Topping, 1998). One of the main decisions is whether to use a web-based platform or a more traditional paper-based approach. A recent meta-analysis that reviewed close to 60 studies found that web-based peer assessment shows a larger effect size than paper-based peer assessment (g = 0.452 vs g = 0.237), which means that web-based peer assessment may be preferable (Li et al., 2020). Similarly, web-based peer assessment was also deemed to be more convenient and flexible than paper-based peer assessment (Chen, 2016; Wen & Tsai, 2008) since it can be used synchronously or asynchronously with any web-connected device (e.g., computers, mobile devices, etc.) in different environments (e.g., classroom or home) (Fu et al., 2019). Also, web-based peer assessment has specific features that might be too laborious to replicate in paper-based peer assessment, like allocating different grading weights at different stages of peer assessment to maintain reliability and validity of peer scores, algorithm-based pairing of assessors and assessees, maintaining double-blind anonymity during the peer assessment process (Cho & Schunn, 2007; Patchan et al., 2018), or simply aggregating and managing peer scores and peer feedback data in big courses. Because of the variety of tools and features available in different peer assessment platforms, a number of articles have reviewed different computer-supported and/or web-based peer assessment platforms available in published literature or in the educational technology market. Next, we discuss the three most relevant of these reviews.

First, Luxton-Reilly (2009) looked at the common features as well as the differences of various peer assessment platforms in a systematic review. He compared web-based peer assessment platforms based on: rubric design (i.e., if it is fixed or modifiable); rubric criteria (i.e., if it supports Boolean criteria like checkboxes; discrete choices; numeric scales; and, textual comments); possibility of discussion (i.e., dialogue between assessor and assessee); option to give backward feedback (i.e., if students and/or instructors can assess the quality of feedback); flexibility of workflow (i.e., if the platform allows instructors to organise peer assessment workflow); and, evaluation (i.e., if there is a post evaluation performed in the study). Additionally, he categorized the platforms into three groups based on their context: generic, domain-specific, and context-specific systems. He grouped six peer assessment platforms under “generic systems”, where most features and activities in the platform can be configured by the instructor to cater to different disciplines and contexts. Seven platforms were grouped under “domain-specific systems”, which were designed for specific disciplines (e.g., programming, essay writing). Finally, five platforms were grouped under “context-specific systems”, for platforms programmed solely for specific courses. Moreover, he expressed the need to further improve, or develop, web-based peer assessment platforms since the majority of the platforms he reviewed (13 of 18) were limited to computer science courses and settings. This review was intended to serve as a helpful guide for developers in improving the design and features of existing and subsequent web-based peer assessment platforms.

Second, Søndergaard and Mulder (2012) evaluated peer assessment platforms based on four characteristics: (1) the ease of automation: automatic anonymisation and distribution of outputs and notification of instructors and students; (2) simplicity: convenience of the interface, ease of managing student data and integration with other learning management systems, and availability of resources for teachers and students; (3) customisability: flexibility to configure based on course needs; and, (4) accessibility: subscriptions and availability of a system online. They also analysed other features that might be essential to different contexts, like guidelines in pairing assessors and assessees, student assessor training/calibration, built-in plagiarism checks, and reporting tools to monitor the quality of feedback. Additionally, they categorised four web-based peer assessment platforms based on their focus, such as being training oriented, similarity checking oriented, customisation oriented, or writing skills oriented. This work provided an interesting framework for educators to evaluate whether a web-based peer assessment platform is an appropriate formative and collaborative tool that supports learning and student interaction, rather than a mere tool that collects peer scores or feedback.

Third and last, Babik et al. (2016) developed a peer-to-peer focused framework for evaluating the affordances and limitations of web-based peer assessment platforms based on an informal focus group discussion with instructors using web-based peer assessment in their courses and guided by the relevant practices of the peer assessment studies they reviewed from academic papers. Based on the categorized discussion of instructors’ practices, they listed five primary objectives for web-based peer assessment: (1) eliciting evaluation; (2) assessing achievement and generating learning analytics; (3) structuring automated peer assessment workflow; (4) reducing or controlling for evaluation biases; and (5) changing the social atmosphere of the learning community. They viewed these objectives as “system independent”, where instructors determine what they need for instruction outside of the platform, while the functions and design of the platforms were categorized as “system-dependent” features. This study is important because it looked at platforms from the point of view of the individuals who decide how web-based peer assessment is implemented in various courses: the instructors.

Taken together, these three reviews of web-based peer assessment platforms were made to assist instructors in planning their lessons to integrate student-centred assessment practices such as peer assessment. Additionally, they provided an overview of the technological advances in implementing web-based peer assessment. Nonetheless, there is still a need to investigate web-based peer assessment platforms from a peer assessment design elements perspective, since the reviews we just presented were written from a computer science education or software engineering context. Moreover, the number of platforms developed and updated has increased since the last review, which creates a need to further investigate and determine the current directions of web-based peer assessment platforms. In the next section, we will describe the framework we utilised in evaluating each platform.

1.2 Peer Assessment Design Elements Framework

Topping (1998) wrote a foundational study to clarify how peer assessment can be carefully carried out in classrooms and research. He proposed a typology including seventeen variables, which were: (1) curriculum area; (2) objectives; (3) focus; (4) product/output; (5) relation to staff assessment; (6) official weight; (7) directionality; (8) privacy; (9) contact; (10) year; (11) ability; (12) constellations assessors; (13) constellations assessees; (14) place; (15) time; (16) requirement; and, (17) reward. The typology gave instructors and assessment researchers a way to approach peer assessment in an organised and systematic manner even if, unfortunately, it is still under-used and under-reported (Panadero, 2016). Importantly, since the original typology by Topping, there have been a number of new proposals that reorganize or amplify the original categories. For instance, van den Berg et al. (2006) categorised Topping’s variables into four clusters to respond to their course context, while van Gennip et al. (2009) classified the variables into three clusters considering how social interactions occur between students in peer assessment.

More recently, Adachi et al. (2018a) added an additional dimension to Gielen et al.’s (2011) five-cluster work that reviewed and organised earlier ideas on peer assessment, which covered: (1) the decisions concerning peer assessment use; (2) peer assessment’s link to other elements in the learning environment; (3) interaction between peers; (4) composition of assessment groups; (5) management of assessment procedures, and (6) contextual elements. This peer assessment design elements framework is composed of 19 design elements that consider the diversity of peer assessment strategies, which were obtained from literature synthesis and their interview with academics from different disciplines. The design elements in this framework modified previous frameworks (e.g., Gielen et al., 2011; Topping, 1998) by collapsing, combining, and adding elements to form a unified one. For example, some elements were combined into one (i.e., requirement + reward into ‘formality and weighting’), while others were added into the framework (e.g., feedback utilisation). We have decided to use this framework as it covered design elements that are useful in future studies (i.e., Cluster VI: Contextual Elements).

In sum, the multiple iterations of peer assessment typologies suggest that there is no “one size fits all” approach to implementing peer assessment in the classroom or to doing research on it. They also suggest that peer assessment is a complex process that requires further investigation due to rapid changes in the educational landscape. Therefore, there is a need to explore web-based peer assessment platform features to determine how they can affect students’ learning, the feedback that students provide and receive, and the dynamics of student interaction online. Examining the features of web-based peer assessment platforms that provide support for the various interpersonal and intrapersonal factors that students experience during peer assessment is crucial since evidence indicates that such support helps in promoting positive educational and affective outcomes (Chen, 2016; Lai & Hwang, 2015; Wang et al., 2017). Also, it is important to look at these interpersonal and intrapersonal factors because the interaction that occurs between students in web-based environments as a result of the features of web-based peer assessment may generate different social and human factors (i.e., thoughts, emotions, actions) that affect peer assessment outcomes (Panadero, 2016). Given that, there is a need to investigate the features of web-based peer assessment platforms using a peer assessment design elements framework to ascertain how these platforms can support peer assessment and student interaction online. Thus, we decided to perform a systematic review of platforms.

1.3 Search, Screening and Access to the Platforms, and Review Criteria

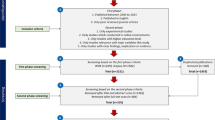

We used two approaches to identify the platforms. First, we extracted names of web-based peer assessment platforms from a parallel systematic review on intrapersonal and interpersonal variables in peer assessment. Second, a peer assessment expert was consulted for web-based peer assessment platform recommendations. In total, we identified 31 web-based platforms.

In screening the platforms, we visited each platform’s website to evaluate its availability. From this, 8 platforms were excluded (i.e., social media site, company tool, website in foreign language, website was unavailable or ceased to operate). Subsequently, the developers of the remaining platforms were contacted to request complimentary access to their platform if no free sign-up was available, as some required payment or licensing, or were offered exclusively to a select number of institutions. From this, 6 platforms were excluded (i.e., developers did not grant access or were unresponsive, platform was made for a specific course/commercially unavailable).

Finally, 17 web-based peer assessment platforms were evaluated in this study, which are: Aropä (United Kingdom); Blackboard Learning Management System (United States); Canvas Learning Management System (United States); CATME (United States); CritViz (United States); Crowd Grader (United States); Eduflow (Denmark); Eli Review (United States); Expertiza (United States); Kritik (Canada); Mobius SLIP (United States); Moodle Learning Management System (Australia); Peerceptiv (United States); Peergrade (Denmark); PeerMark (United States); PeerScholar (Canada); and, TEAMMATES (Singapore).
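The screening arithmetic described above can be checked with a minimal sketch. The counts are taken from the text; the function name and the stage labels are illustrative assumptions and not part of the original study.

```python
# Counts reported in the text; the stage labels are illustrative only.
identified = 31
exclusions = [
    ("website unavailable or out of scope", 8),
    ("no access granted or not commercially available", 6),
]

def screening_flow(start, steps):
    """Return (label, remaining) pairs after each exclusion step."""
    remaining = start
    flow = [("identified", remaining)]
    for label, excluded in steps:
        remaining -= excluded
        flow.append((label, remaining))
    return flow

flow = screening_flow(identified, exclusions)
for label, remaining in flow:
    print(f"{label}: {remaining}")
```

The last entry of `flow` holds the number of platforms that remain for evaluation, which should match the 17 platforms listed above.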

In evaluating the features of each web-based peer assessment platform, we extracted nine peer assessment design elements from Adachi et al.’s (2018a) framework covering three different areas. First, we evaluated the features that might have a direct influence on students’ learning, since a number of studies have indicated that some features of computer-supported collaborative learning environments (e.g., web-based peer assessment platforms) affect learning and performance (Janssen et al., 2007; Phielix et al., 2010, 2011; Zheng et al., 2020). Second, we evaluated the features that influence the feedback that students provide and receive when peer assessing since feedback is an essential component of peer assessment for both assessors and assessees (Gielen & De Wever, 2015; Patchan et al., 2016; Voet et al., 2018). Third, we evaluated aspects of social interaction between students since peer assessment is essentially a social and interpersonal process (Panadero, 2016; van Gennip et al., 2009). Table 8.1 shows the peer assessment design elements we selected and corresponding descriptions.

We coded the relevant information from each platform to a standard data extraction template. In most web-based peer assessment platforms, we created a standard sample activity where peer assessment was the main focus. Then, we looked at the feature options available when designing the activity which would relate to a certain design element (e.g., choosing “enable self-evaluation?” would relate to design element number 8; choosing “enable anonymity?” would relate to design element 9). When the information about certain design elements was unclear, we used the search function in the help centre or search bar available in the platform. To assess the validity of the coding, an external researcher conducted an independent coding of three of the 17 platforms included in this study, which resulted in 91.2% agreement. In the next sections, we will examine how the features of the 17 web-based peer assessment platforms influence learning, feedback, and social interaction.
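As an illustration of how an agreement figure of this kind can be obtained, the sketch below computes simple percent agreement between two coders. The chapter does not state which agreement index was used, so simple percent agreement is an assumption here, and the codes in the example are hypothetical rather than the study's data.

```python
def percent_agreement(coder_a, coder_b):
    """Share of items (in %) on which two coders assigned the same code."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must rate the same non-empty set of items")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * matches / len(coder_a)

# Hypothetical codes for five coding decisions (not the study's data).
main_coder = ["yes", "no", "yes", "unclear", "yes"]
second_coder = ["yes", "no", "no", "unclear", "yes"]

agreement = percent_agreement(main_coder, second_coder)
print(f"{agreement:.1f}% agreement")
```

Percent agreement does not correct for agreement expected by chance, so a chance-corrected index may be preferable when codes are unevenly distributed.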

2 Web-Based Peer Assessment Features Influencing Student Learning

In this section, we will analyse features of the web-based peer assessment platforms in terms of how they might influence student learning based on the following design elements: intended learning outcomes (for students), link to self-assessment, and calibration and scaffolding.

2.1 Intended Learning Outcomes for Students

With regard to the intended learning outcomes for students, Adachi et al. (2018a) construed it as a range of possible outcomes (e.g., transferable skills) as a result of peer assessment. In this case, we regarded it as the possible assessment activities that can be paired with peer assessment in the web-based peer assessment platform. From the platforms we reviewed, it was possible for 8 (47%) of the platforms to combine peer assessment, self-assessment, and team member evaluation in the design of an activity (i.e., Blackboard; Eduflow; Expertiza; Kritik; Moodle; Peerceptiv; PeerMark; and, PeerScholar). On the other hand, 4 (23.5%) of the platforms allowed instructors to include both peer assessment and self-assessment when setting up an activity (i.e., Aropä; Eli Review; Mobius SLIP; and, PeerGrade). Also, 3 (17.6%) platforms allowed teachers to arrange peer assessment of submitted outputs at the time of our data collection (i.e., Canvas, CritViz, and Crowd Grader), while 2 (11.8%) platforms were designed for team member evaluation in group work (i.e., CATME and TEAMMATES). Generally, the majority of the platforms can be used in a variety of educational fields and levels due to their flexible and modifiable nature. This flexibility allows instructors to mix and match the features that they wish to integrate in their class based on their intended learning outcomes for students. Such an option is especially powerful given that instructors play a central role in implementing new assessment designs in their courses, particularly peer assessment (Panadero & Brown, 2017).
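The counts and percentages reported above can be reproduced with a short sketch. The platform lists are taken from the text; the category labels and the helper function are illustrative assumptions.

```python
TOTAL_PLATFORMS = 17

# Platform groupings as listed in the text above; the category labels are illustrative.
assessment_types = {
    "peer + self + team member evaluation": [
        "Blackboard", "Eduflow", "Expertiza", "Kritik",
        "Moodle", "Peerceptiv", "PeerMark", "PeerScholar",
    ],
    "peer + self": ["Aropä", "Eli Review", "Mobius SLIP", "PeerGrade"],
    "peer only": ["Canvas", "CritViz", "Crowd Grader"],
    "team member evaluation only": ["CATME", "TEAMMATES"],
}

def shares(groups, total):
    """Map each category to (count, percentage of total rounded to 1 decimal)."""
    return {
        name: (len(items), round(100 * len(items) / total, 1))
        for name, items in groups.items()
    }

summary = shares(assessment_types, TOTAL_PLATFORMS)
for name, (count, pct) in summary.items():
    print(f"{name}: {count} ({pct}%)")
```

With one decimal place the first category comes out at 47.1%, which the text reports rounded to 47%.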

2.2 Link to Self-assessment

Previous studies have acknowledged the benefits of the intertwined roles of peer assessment and self-assessment (Boud, 2013; Dochy et al., 1999; To & Panadero, 2019). Therefore, it was not surprising that the majority of the platforms had a self-assessment feature. To illustrate, there were 12 (70.6%) platforms where self-assessment (or self-critique, self-review, self-evaluation, self-check, etc.) was integrated in the design of the web-based peer assessment platform (i.e., Aropä; Blackboard; CATME; Eduflow; Expertiza; Kritik; Mobius SLIP; Peerceptiv; PeerGrade; PeerMark; PeerScholar; and TEAMMATES). Also, 1 (5.9%) platform did not appear to have ‘self-assessment’ as a named feature, but it had a different feature (e.g., Revision Notes) which can be considered as self-assessment (i.e., Eli Review). There were also 2 (11.8%) platforms that facilitated self-assessment but required instructors to set it up through a different feature (e.g., plug-in installation; as a quiz or survey; adding questions) (i.e., Canvas; Moodle). Finally, 2 (11.8%) platforms did not appear to have a self-assessment feature when we extracted information (i.e., CritViz; Crowd Grader).

Therefore, it can be said that in most of the platforms instructors would just have to click a few options to enable students to self-assess. Other platforms, on the other hand, require self-assessment to be set up as an external activity, which may require a little work for instructors. It is important to note that self-assessment was referred to by various terms across the platforms. Given that self-assessment has become an integral part of the platforms, it is important for instructors to carefully plan how self-assessment and peer assessment would be combined to reap the benefits of both. More than just simply making students rate the quality of their work or asking surface questions about students' perception of their submission, it would be more powerful if students could assess their work against concrete standards and criteria to facilitate better reflection during self-assessment (Panadero et al., 2016).

2.3 Calibration and Task Scaffolding

In terms of calibration and task scaffolding, 4 (23.5%) platforms had a built-in training and/or practice feature that students had to go through before proceeding with the peer assessment exercise (i.e., CATME; Kritik; PeerScholar; and, Expertiza). Additionally, in three of these platforms students could practice their peer scoring skills on fictitious team members or sample outputs before proceeding with the actual peer assessment (i.e., CATME; Kritik; Expertiza). One platform also offered an option for instructors to embed “Microlearning Experience” videos about giving effective peer feedback and accurate peer scores before students assess their peers’ outputs (i.e., PeerScholar). While external training may depend on the instructor in the rest of the platforms (e.g., setting up an additional practice assessment activity before the actual peer assessment activity), the majority of the platforms offered a support page on their website with materials about how to give helpful feedback or accurate scores to peers (e.g., YouTube videos; guide prompts; articles). In such instances, it may require some effort for the students to navigate around the website to find such resources. Therefore, it would be more helpful if web-based platform developers integrated features for scaffolding and training prior to the actual peer assessment activity since providing students with sufficient and proper scaffolding to perform peer assessment, through multiple training and practice sessions, improves their assessment skills (Double et al., 2020; Li et al., 2020).

3 Web-Based Peer Assessment Features Influencing Feedback

In this section, we will analyse how the features of web-based peer assessment platforms influenced the feedback that students give to each other, based on the following design elements: feedback information type, feedback utilization, and moderation of feedback.

3.1 Feedback Information Type

In terms of feedback information type, all 17 (100%) platforms supported both quantitative (e.g., peer scores) and qualitative (e.g., peer feedback) peer assessment. It is also important to note that some platforms allowed the provision of multimedia recorded feedback (via audio or video). Also, the platforms offered a flexible way for instructors to set up their rubrics for peer scoring and prompts for peer feedback. For instance, these platforms allowed instructors to upload or create their rubrics or write their prompts in the website, or to adapt existing rubrics or prompts. These are important features since having students give and receive both quantitative and qualitative feedback is the central action of peer assessment (Topping, 1998).

In relation to platforms that support multimedia recorded feedback, evidence has shown that such a feedback delivery approach helps in promoting deeper learning for assessors and assessees (Filius et al., 2019). However, although evidence showed that students provided better quality peer feedback in audio recorded mode than in written mode, students perceived that preparing audio recorded peer feedback was not efficient (Reynolds & Russell, 2008). Importantly, students still preferred receiving written peer feedback over audio recorded peer feedback in a writing task (Reynolds & Russell, 2008). Although listening to recorded peer feedback may appear to be beneficial, the additional preparation involved might bring more work for students. Also, it might present challenges for instructors to manage students’ multimedia feedback since they have to keep track of not just the peer feedback messages themselves, but also each assessor’s non-verbal gestures for video feedback, or prosodic features for audio feedback (e.g., intonation, stress, rhythm, etc.). Nonetheless, multimedia recorded peer feedback is important since it overcomes the limitations of text-based communication (e.g., absence of non-verbal cues) (Phielix et al., 2010). Therefore, further studies should consider looking at how the features of multimedia recorded feedback can influence the dynamics between assessors and assessees in a web-based peer assessment environment.

3.2 Feedback Utilization

The uptake or utilization of feedback that students receive from various sources (e.g., peer, self, instructor) has been one of the foci of many feedback models (see Lipnevich & Panadero, 2021 for a review). Many web-based peer assessment platforms materialize this by integrating a resubmit function. For instance, 14 (82.35%) of the platforms allowed students to submit multiple revisions of their work after peer assessment (i.e., Aropä; Blackboard; Canvas; CritViz; Eduflow; Eli Review; Expertiza; Kritik; Mobius SLIP; Moodle; Peerceptiv; PeerGrade; PeerMark; and, PeerScholar), while 1 (5.9%) platform did not seem to have a resubmission feature, but instructors may set up another assignment to allow resubmission (i.e., Crowd Grader). On the other hand, resubmission was not applicable for 2 (11.8%) platforms since they were developed to evaluate team members in a group task (i.e., CATME and TEAMMATES). Allowing students to resubmit their output, whether in-class or online, after receiving feedback facilitates assessment for learning, which can be beneficial for students (Black & Wiliam, 1998; Panadero et al., 2016). This provides students with multiple opportunities to improve their work, while it also gives instructors multiple indices to determine how students are learning.

3.3 Feedback Moderation

In terms of the moderation of feedback, 10 (59%) platforms had a built-in mechanism for assessees to respond to assessors’ judgements, dispute the peer scores received, or complain about inappropriate feedback (i.e., Aropä; Crowd Grader; Eduflow; Eli Review; Kritik; Mobius SLIP; Moodle; Peerceptiv; PeerGrade; and, PeerScholar). To illustrate, these platforms allowed assessees to rate assessors’ feedback based on a variety of criteria (e.g., helpfulness, motivating, etc.), which instructors may integrate in the final grade. Also, there were features where assessees could “return the feedback” (or back-evaluate/back-review) on assessors’ feedback by giving suggestions on how the feedback could be improved, engaging in anonymous collaboration to ask for further advice, or simply asking for clarification if assessors’ feedback was vague (i.e., Aropä; Crowd Grader; Eduflow; Eli Review; Peerceptiv; PeerGrade; and, PeerScholar). Additionally, some platforms also allowed students to flag inappropriate feedback or inaccurate scores, where the instructor would have to mediate to settle differences (i.e., Kritik; Moodle; PeerGrade). Besides assessees’ ratings of each piece of feedback, it was also possible to automatically compare an assessor’s rating based on several indices (e.g., against other assessors of the same output) (i.e., Mobius SLIP). On the other hand, there were 3 (17.6%) platforms where the instructor may choose to censor or rate feedback if it is inappropriate or inaccurate (i.e., Blackboard; PeerMark; and, TEAMMATES). The other 4 (23.5%) platforms relied on the instructor’s manual monitoring of the process to moderate peer assessment (i.e., Canvas; CATME; CritViz; and, Expertiza).

Since students generate various thoughts, feelings, and actions in peer assessment (Panadero, 2016; Topping, 2021), it is not surprising that students may be concerned about retaliation when giving peers critical feedback or a low score (Patchan et al., 2018). Therefore, promoting student accountability in peer assessment is vital given that most web-based peer assessment activities in the majority of the platforms are anonymous. Although feedback moderation features may facilitate a discussion between assessors and assessees by allowing them to interact during the feedback process, such as in back-evaluations, we believe that investing more time in training students’ assessment skills, whether in web-based or face-to-face settings, will be more fruitful than encouraging students to do well in peer assessments because their peers would rate the quality or accuracy of their feedback, or because their peers’ rating of their feedback would be part of their course grade. Developing students’ assessment skills will enhance their evaluative judgement, which may be useful beyond schooling (Tai et al., 2018). Thus, finding the right balance between developing students’ assessment skills and making them accountable for the feedback they give is an area that should be considered by instructors and platform developers.

4 Web-Based Peer Assessment Features Influencing Social Interactions

In this section, we will analyse how the features of the web-based peer assessment platforms affect interaction between students based on the following design elements: anonymity, peer configuration, and peer matching.

4.1 Anonymity

Implementing anonymity in peer assessment has been the subject of intensive discussion in recent years (see Panadero & Alqassab, 2019 for a review). Some studies suggest that anonymity is beneficial for students’ performance (Li, 2017; Lu & Bol, 2007) and their affect (Raes et al., 2015; Rotsaert et al., 2018; Vanderhoven et al., 2015), while others questioned its role in formative peer assessment activities since assessors and assessees are supposed to know each other to process feedback (Strijbos et al., 2009). In the web-based peer assessment platforms we reviewed, there were 15 (88.2%) platforms with a double-blind anonymity feature (e.g., completely unidentifiable, assignment of a number or pseudonym), and most of these platforms had options to remove the double-blind anonymity feature to make assessors and assessees identifiable (i.e., Aropä; Blackboard; Canvas; CritViz; Crowd Grader; Eduflow; Eli Review; Expertiza; Kritik; Mobius SLIP; Moodle; Peerceptiv; PeerGrade; PeerMark; and, PeerScholar). Finally, 2 (11.8%) platforms had single-blind anonymity since they were designed for team member evaluation, where assessors know the identity of the assessee (typically their groupmate) they are assessing (i.e., CATME and TEAMMATES).
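To make the idea of pseudonym-based double-blind anonymity more concrete, the following is a minimal sketch of one possible approach. It is not the implementation of any of the reviewed platforms; the function names, the "Student n" labels, and the example students are all hypothetical.

```python
import random

def assign_pseudonyms(students, seed=None):
    """Give each student a random, unique label such as 'Student 3'."""
    rng = random.Random(seed)
    numbers = list(range(1, len(students) + 1))
    rng.shuffle(numbers)
    return {student: f"Student {n}" for student, n in zip(students, numbers)}

def double_blind_view(feedback, pseudonyms):
    """Replace assessor and assessee names in feedback records with pseudonyms."""
    return [
        {
            "assessor": pseudonyms[item["assessor"]],
            "assessee": pseudonyms[item["assessee"]],
            "comment": item["comment"],
        }
        for item in feedback
    ]

# Hypothetical class list and feedback record.
students = ["Ana", "Ben", "Chloe"]
pseudonyms = assign_pseudonyms(students, seed=0)
feedback = [{"assessor": "Ana", "assessee": "Ben", "comment": "Clear structure."}]

blind = double_blind_view(feedback, pseudonyms)
print(blind)
```

In this sketch only the holder of the `pseudonyms` mapping (for example, the instructor) can link labels back to names; a single-blind variant would replace only the assessor's name and leave the assessee's name visible.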

Since peer assessment is an interpersonal and social activity (Panadero, 2016; van Gennip et al., 2009), it is important to plan the activities carefully, so that students feel comfortable and safe. This was also noted in a previous review of web-based peer assessment platforms, which suggested that software developers consider various institutional regulations in managing student privacy during peer assessment (Luxton-Reilly, 2009). The platforms we evaluated addressed this aspect of peer assessment by integrating a number of flexible anonymity settings in their systems. Ultimately, the decision lies with instructors, since there might be activities where peer assessment should be anonymised, and activities where removing anonymity might be more beneficial for student interaction.

4.2 Peer Configuration

In terms of peer configuration, Gielen et al. (2011) note that peer assessment can be done individually between students, between groups, or a combination of both. All of the 17 (100%) platforms we evaluated allowed a purely individual peer assessment between students (e.g., one assessor and one assessee). Additionally, there were 2 (11.8%) platforms designed for team member evaluation in group tasks (e.g., members of a group assess each other in terms of helpfulness, contributions, etc.), which may not require a group submission of an output (i.e., CATME and TEAMMATES). Since the majority of the platforms allowed group submission, one would assume that they also allowed inter-group peer assessment (e.g., one group would assess another group’s output). Of the 15 platforms that allow group submission, there were 8 (53.3%) platforms which allowed both individual submission and individual peer assessment, as well as group submission and inter-group peer assessment (i.e., Aropä; Canvas; Eduflow; Kritik; Mobius SLIP; Peerceptiv; PeerGrade; and, PeerScholar). Finally, there were 7 (46.7%) platforms which supported individual submission and individual peer assessment, as well as group submission, but it was unclear during the period of our data extraction whether they also supported inter-group peer assessment (i.e., Blackboard; CritViz; Crowd Grader; Eli Review; Expertiza; Moodle; and, PeerMark).

In a recent article, Topping (2021) noted that the constellation of assessors and assessees during peer assessment can be a complicated decision to make. For instance, instructors have to consider several questions. How did students (or groups) create the output to be assessed? Will peer assessment be done individually, in pairs, or in groups? Will peer assessment be reciprocal? While the answers depend on the instructor's objectives for peer assessment, the platforms we evaluated offered an array of options for configuring students to perform various peer assessment activities. Thus, choosing the right option to support better student interaction is crucial when planning peer assessment activities.

4.3 Peer Matching

With regard to how assessors and assessees are matched in the platforms we evaluated, 14 (82.4%) offered both system and instructor matching: in the former, the platform allocates assessors and assessees based on its own algorithms, and in the latter, the instructor matches assessors and assessees manually (i.e., Aropä; Blackboard; Canvas; CATME; CritViz; Eduflow; Eli Review; Expertiza; Mobius SLIP; Moodle; Peerceptiv; PeerGrade; PeerMark; and, PeerScholar). The majority of these platforms also offered instructors the flexibility to set the minimum or maximum number of assessors per output. There were also platforms where the instructor may choose to keep the same matching of assessor and assessee across draft submissions, randomise the pairing for every submission, or manually match students per submission (i.e., Peerceptiv). Apart from system and instructor matching, some platforms also allowed students to self-select the outputs they want to assess (i.e., PeerMark). There were also unique platforms where the students fill in a survey covering several aspects (e.g., schedule, sex, race, etc.), and the instructor may choose to system match students based on the similarity or diversity of their survey results (i.e., CATME). On the other hand, 1 (5.9%) platform used artificial intelligence to match students based on several factors (e.g., equal distribution of weak and strong assessors for an output; or matching students based on output similarity) (i.e., Kritik). Also, 1 (5.9%) platform gave students a choice of whether to participate in the peer assessment process, where they may choose to decline a review or request a review in the platform (i.e., Crowd Grader). It is also important to note that students who chose to participate in those reviews were incentivised through grades. Finally, 1 (5.9%) platform only supported instructor matching since it was designed for team member rating, and students may have already formed their groups outside the system (i.e., TEAMMATES).
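As an illustration of what system matching with a fixed number of assessors per output can look like, the sketch below shuffles the class and assigns each student the outputs of the next few students in the shuffled order. This is a generic circular-shift scheme that we assume for illustration; it is not the algorithm of any platform reviewed here, and the function name `match_assessors` and its parameters are hypothetical.

```python
import random


def match_assessors(students, reviews_per_output, seed=None):
    """Randomly match assessors to assessees.

    Every student assesses `reviews_per_output` peers, every output
    receives exactly `reviews_per_output` assessors, and nobody
    assesses their own work. Student identifiers must be unique.
    """
    n = len(students)
    if not 1 <= reviews_per_output < n:
        raise ValueError("reviews_per_output must be between 1 and n - 1")
    rng = random.Random(seed)
    order = list(students)
    rng.shuffle(order)  # random order, so pairings differ between runs
    assignments = {}
    for i, assessor in enumerate(order):
        # The assessor reviews the next k students in the shuffled circle.
        assignments[assessor] = [
            order[(i + offset) % n]
            for offset in range(1, reviews_per_output + 1)
        ]
    return assignments


matching = match_assessors(["Ana", "Ben", "Cho", "Dee", "Eli"], 2, seed=0)
for assessor, assessees in matching.items():
    print(assessor, "assesses", assessees)
```

Calling the function again with a different seed corresponds to randomising the pairing for every submission, whereas reusing the stored result corresponds to keeping the same matching across drafts. Instructor matching would simply replace the returned dictionary with a manually specified one.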

The variety of new approaches to matching assessors and assessees in peer assessment gives instructors the chance to match students based on several parameters. This is particularly useful for courses with a high number of students. While instructor matching is a “time-tested approach” and system-generated matching is a “newer approach”, future studies should explore how these two approaches affect student interaction and peer assessment outcomes.

5 Conclusions

In this chapter, we investigated the features of 17 web-based peer assessment platforms to determine how they can potentially affect learning, students’ feedback exchange, and social interaction. We used nine peer assessment design elements from Adachi et al.’s (2018a) framework. Overall, we deem that the majority of the analysed platforms offered features that support students' learning, positive feedback exchange between assessors and assessees, and productive social interaction between students, but all of this depends on the configuration chosen by the instructor. The question of whether some features are helpful or detrimental is beyond the scope of this study. However, we provided a set of categories that researchers and instructors may use to further examine platform features. Also, these features will go to waste if students and instructors do not receive ample training on how to use and take advantage of them, along with training on peer assessment itself (Panadero et al., 2016; Panadero & Brown, 2017). As we mentioned earlier, students should be trained on how to provide and process feedback, while instructors should be onboarded on how to plan for and properly harness the features embedded in the peer assessment platforms. In sum, web-based peer assessment platforms have great potential to improve students’ peer assessment and academic performance, and to facilitate instructors' implementation of peer assessment. Researchers, instructors, educational technologists, and programmers should work together to seamlessly integrate web-based peer assessment platforms in more settings to cater to different courses and educational contexts.

References

Adachi, C., Tai, J., & Dawson, P. (2018a). A framework for designing, implementing, communicating and researching peer assessment. Higher Education Research and Development, 37(3), 453–467. https://doi.org/10.1080/07294360.2017.1405913

Adachi, C., Tai, J. H.-M., & Dawson, P. (2018b). Academics’ perceptions of the benefits and challenges of self and peer assessment in higher education. Assessment and Evaluation in Higher Education, 43(2), 294–306. https://doi.org/10.1080/02602938.2017.1339775

Babik, D., Gehringer, E., Kidd, J., Pramudianto, F., & Tinapple, D. (2016). Probing the landscape: Toward a systematic taxonomy of online peer assessment systems in education. In CSPRED 2016: Workshop on Computer-Supported Peer Review in Education. https://digitalcommons.odu.edu/teachinglearning_fac_pubs/22

Black, P., & Wiliam, D. (1998). Assessment and classroom learning. Assessment in Education: Principles, Policy and Practice, 5(1), 7–74. https://doi.org/10.1080/0969595980050102

Boud, D. (2013). Enhancing learning through self-assessment. Routledge. https://doi.org/10.4324/9781315041520

Bouzidi, L., & Jaillet, A. (2009). Can online peer assessment be trusted? Journal of Educational Technology and Society, 12(4), 257–268. http://www.jstor.org/stable/jeductechsoci.12.4.257

Chen, T. (2016). Technology-supported peer feedback in ESL/EFL writing classes: A research synthesis. Computer Assisted Language Learning, 29(2), 365–397. https://doi.org/10.1080/09588221.2014.960942

Cho, K., & Schunn, C. D. (2007). Scaffolded writing and rewriting in the discipline: A web-based reciprocal peer review system. Computers and Education, 48(3), 409–426. https://doi.org/10.1016/j.compedu.2005.02.004

Dochy, F., Segers, M., & Sluijsmans, D. (1999). The use of self-, peer and co-assessment in higher education: A review. Studies in Higher Education, 24(3), 331–350. https://doi.org/10.1080/03075079912331379935

Double, K. S., McGrane, J. A., & Hopfenbeck, T. N. (2020). The impact of peer assessment on academic performance: A meta-analysis of control group studies. Educational Psychology Review, 32(2), 481–509. https://doi.org/10.1007/s10648-019-09510-3

Filius, R. M., Kleijn, R. A. M., Uijl, S. G., Prins, F. J., Rijen, H. V. M., & Grobbee, D. E. (2019). Audio peer feedback to promote deep learning in online education. Journal of Computer Assisted Learning, 35(5), 607–619. https://doi.org/10.1111/jcal.12363

Fu, Q.-K., Lin, C.-J., & Hwang, G.-J. (2019). Research trends and applications of technology-supported peer assessment: A review of selected journal publications from 2007 to 2016. Journal of Computers in Education, 6(2), 191–213. https://doi.org/10.1007/s40692-019-00131-x

Gielen, M., & De Wever, B. (2015). Scripting the role of assessor and assessee in peer assessment in a wiki environment: Impact on peer feedback quality and product improvement. Computers and Education, 88, 370–386. https://doi.org/10.1016/j.compedu.2015.07.012

Gielen, S., Dochy, F., Onghena, P., Struyven, K., & Smeets, S. (2011). Goals of peer assessment and their associated quality concepts. Studies in Higher Education, 36(6), 719–735. https://doi.org/10.1080/03075071003759037

Janssen, J., Erkens, G., Kanselaar, G., & Jaspers, J. (2007). Visualization of participation: Does it contribute to successful computer-supported collaborative learning? Computers and Education, 49(4), 1037–1065. https://doi.org/10.1016/j.compedu.2006.01.004

Kaufman, J. H., & Schunn, C. D. (2011). Students’ perceptions about peer assessment for writing: Their origin and impact on revision work. Instructional Science, 39(3), 387–406. https://doi.org/10.1007/s11251-010-9133-6

Lai, C.-L., & Hwang, G.-J. (2015). An interactive peer-assessment criteria development approach to improving students’ art design performance using handheld devices. Computers and Education, 85, 149–159. https://doi.org/10.1016/j.compedu.2015.02.011

Li, L. (2017). The role of anonymity in peer assessment. Assessment and Evaluation in Higher Education, 42(4), 645–656. https://doi.org/10.1080/02602938.2016.1174766

Li, H., Xiong, Y., Hunter, C. V., Guo, X., & Tywoniw, R. (2020). Does peer assessment promote student learning? A meta-analysis. Assessment and Evaluation in Higher Education, 45(2), 193–211. https://doi.org/10.1080/02602938.2019.1620679

Lipnevich, A. A., & Panadero, E. (2021). A review of feedback models and theories: Descriptions, definitions, and conclusions. Frontiers in Education, 6. https://www.frontiersin.org/article/10.3389/feduc.2021.720195

Lu, R., & Bol, L. (2007). A comparison of anonymous versus identifiable e-peer review on college student writing performance and the extent of critical feedback. Journal of Interactive Online Learning, 6(2), 100–115.

Luxton-Reilly, A. (2009). A systematic review of tools that support peer assessment. Computer Science Education, 19(4), 209–232. https://doi.org/10.1080/08993400903384844

Panadero, E. (2016). Is it safe? Social, interpersonal, and human effects of peer assessment: A review and future directions. In Handbook of Human and Social Conditions in Assessment (pp. 247–266). Routledge.

Panadero, E., & Alqassab, M. (2019). An empirical review of anonymity effects in peer assessment, peer feedback, peer review, peer evaluation and peer grading. Assessment and Evaluation in Higher Education, 44(8), 1253–1278. https://doi.org/10.1080/02602938.2019.1600186

Panadero, E., & Brown, G. T. L. (2017). Teachers’ reasons for using peer assessment: Positive experience predicts use. European Journal of Psychology of Education, 32(1), 133–156. https://doi.org/10.1007/s10212-015-0282-5

Panadero, E., Jonsson, A., & Strijbos, J.-W. (2016). Scaffolding self-regulated learning through self-assessment and peer assessment: Guidelines for classroom implementation. In D. Laveault & L. Allal (Eds.), Assessment for Learning: Meeting the challenge of implementation (pp. 311–326). Springer.

Patchan, M. M., Schunn, C. D., & Clark, R. J. (2018). Accountability in peer assessment: Examining the effects of reviewing grades on peer ratings and peer feedback. Studies in Higher Education, 43(12), 2263–2278. https://doi.org/10.1080/03075079.2017.1320374

Patchan, M. M., Schunn, C. D., & Correnti, R. J. (2016). The nature of feedback: How peer feedback features affect students’ implementation rate and quality of revisions. Journal of Educational Psychology, 108(8), 1098–1120. https://doi.org/10.1037/edu0000103

Phielix, C., Prins, F. J., & Kirschner, P. A. (2010). Awareness of group performance in a CSCL-environment: Effects of peer feedback and reflection. Computers in Human Behavior, 26(2), 151–161. https://doi.org/10.1016/j.chb.2009.10.011

Phielix, C., Prins, F. J., Kirschner, P. A., Erkens, G., & Jaspers, J. (2011). Group awareness of social and cognitive performance in a CSCL environment: Effects of a peer feedback and reflection tool. Computers in Human Behavior, 27(3), 1087–1102. https://doi.org/10.1016/j.chb.2010.06.024

Raes, A., Vanderhoven, E., & Schellens, T. (2015). Increasing anonymity in peer assessment by using classroom response technology within face-to-face higher education. Studies in Higher Education, 40(1), 178–193. https://doi.org/10.1080/03075079.2013.823930

Reynolds, J., & Russell, V. (2008). Can you hear us now? A comparison of peer review quality when students give audio versus written feedback. The WAC Journal, 19(1), 29–44. https://doi.org/10.37514/WAC-J.2008.19.1.03

Rotsaert, T., Panadero, E., & Schellens, T. (2018). Anonymity as an instructional scaffold in peer assessment: Its effects on peer feedback quality and evolution in students’ perceptions about peer assessment skills. European Journal of Psychology of Education, 33(1), 75–99. https://doi.org/10.1007/s10212-017-0339-8

Søndergaard, H., & Mulder, R. A. (2012). Collaborative learning through formative peer review: Pedagogy, programs and potential. Computer Science Education, 22(4), 343–367. https://doi.org/10.1080/08993408.2012.728041

Strijbos, J.-W., Ochoa, T. A., Sluijsmans, D. M. A., Segers, M. S. R., & Tillema, H. H. (2009). Fostering interactivity through formative peer assessment in (web-based) collaborative learning environments. In C. Mourlas, N. Tsianos, & P. Germanakos (Eds.), Cognitive and emotional processes in web-based education (pp. 375–395). IGI Global. https://doi.org/10.4018/978-1-60566-392-0.ch018

Tai, J., Ajjawi, R., Boud, D., Dawson, P., & Panadero, E. (2018). Developing evaluative judgement: Enabling students to make decisions about the quality of work. Higher Education, 76(3), 467–481. https://doi.org/10.1007/s10734-017-0220-3

To, J., & Panadero, E. (2019). Peer assessment effects on the self-assessment process of first-year undergraduates. Assessment and Evaluation in Higher Education, 44(6), 920–932. https://doi.org/10.1080/02602938.2018.1548559

Topping, K. (1998). Peer assessment between students in colleges and universities. Review of Educational Research, 68(3), 249–276. https://doi.org/10.3102/00346543068003249

Topping, K. (2021). Peer assessment: Channels of operation. Education Sciences, 11(3), 91. https://doi.org/10.3390/educsci11030091

van den Berg, I., Admiraal, W., & Pilot, A. (2006). Design principles and outcomes of peer assessment in higher education. Studies in Higher Education, 31(3), 341–356. https://doi.org/10.1080/03075070600680836

van Gennip, N. A. E., Segers, M. S. R., & Tillema, H. H. (2009). Peer assessment for learning from a social perspective: The influence of interpersonal variables and structural features. Educational Research Review, 4(1), 41–54. https://doi.org/10.1016/j.edurev.2008.11.002

Vanderhoven, E., Raes, A., Montrieux, H., Rotsaert, T., & Schellens, T. (2015). What if pupils can assess their peers anonymously? A quasi-experimental study. Computers and Education, 81, 123–132. https://doi.org/10.1016/j.compedu.2014.10.001

Voet, M., Gielen, M., Boelens, R., & De Wever, B. (2018). Using feedback requests to actively involve assessees in peer assessment: Effects on the assessor’s feedback content and assessee’s agreement with feedback. European Journal of Psychology of Education, 33(1), 145–164. https://doi.org/10.1007/s10212-017-0345-x

Wang, X.-M., Hwang, G.-J., Liang, Z.-Y., & Wang, H.-Y. (2017). Enhancing students’ computer programming performances, critical thinking awareness and attitudes towards programming: An online peer-assessment attempt. Journal of Educational Technology and Society, 20(4), 58–68. https://www.jstor.org/stable/26229205

Wen, M. L., & Tsai, C.-C. (2008). Online peer assessment in an inservice science and mathematics teacher education course. Teaching in Higher Education, 13(1), 55–67. https://doi.org/10.1080/13562510701794050

Zheng, L., Zhang, X., & Cui, P. (2020). The role of technology-facilitated peer assessment and supporting strategies: A meta-analysis. Assessment and Evaluation in Higher Education, 45(3), 372–386. https://doi.org/10.1080/02602938.2019.1644603

Funding and Acknowledgements

The first author is funded by the European Union’s Horizon 2020 Research and Innovation Programme under the Marie Skłodowska-Curie grant agreement Nº 847624. In addition, a number of institutions backed and co-financed his project. Any dissemination of results must indicate that it reflects only the author's view and that the Agency is not responsible for any use that may be made of the information it contains. The second author is funded by the Spanish National R+D call from the Ministerio de Ciencia, Innovación y Universidades (Generación del conocimiento 2019), Reference number: PID2019-108982GB-I00.

The authors would like to thank the web-based peer assessment platform developers who gave us complimentary access to their applications.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Cite this chapter

Ocampo, J.C.G., Panadero, E. (2023). Web-Based Peer Assessment Platforms: What Educational Features Influence Learning, Feedback and Social Interaction?. In: Noroozi, O., De Wever, B. (eds) The Power of Peer Learning. Social Interaction in Learning and Development. Springer, Cham. https://doi.org/10.1007/978-3-031-29411-2_8

DOI: https://doi.org/10.1007/978-3-031-29411-2_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-29410-5

Online ISBN: 978-3-031-29411-2

eBook Packages: Education, Education (R0)