Abstract

The setup of thesis circles at the exit level of undergraduate programs expects students to co-supervise each other’s work, and multiple peer feedback is used to replace formative supervisor feedback. Integrating multiple peer feedback requires students to make evaluative judgements by identifying relation patterns among the comments of different feedback givers and making a reasoned decision about how to improve their own work. Unfortunately, most undergraduate students find it difficult to deal with this high degree of multiplicity. Therefore, teachers should support feedback receivers with sufficient training materials and well-designed instructional activities so that they can effectively make sense of and integrate multiple peer feedback. The increasing diversity of research on peer feedback makes it difficult for teachers to interconnect all of its aspects in their instructional design. In this chapter, we develop a conjecture map to structure the design of instructional activities and to advance the current literature in four ways: (1) we use a combination of analogical/holistic and analytical comparisons to guide students throughout the peer review process, (2) we engage feedback receivers in epistemic reflection so that they understand feedback both within and across reviewers (intra- and inter-feedback), (3) we describe the mediating processes through which these activities lead to the intervention outcomes of evaluative judgements and improved thesis work, and (4) we propose how to structure a feedback dialogue and generate a self-feedback report. Our instructional design demonstrates how to apply various design principles from the multiple text integration and feedback literature to students’ integration of multiple peer feedback.

We have no conflicts of interest to disclose.




1 Introduction

1.1 Student Peer Review and Feedback in Thesis Circles

The didactic principles of collaborative learning, peer learning, and the process and social interaction of writing are becoming increasingly important in Dutch Higher Education (HE), particularly for undergraduate theses at the exit level (Elbow, 1998; Rajagopal et al., 2021; Romme & Nijhuis, 2002). In line with these principles, peer review, defined as “an instructional writing activity in which students read and provide commentary on one another’s writing, and the purpose of this activity is to help students improve their writing and gain a sense of audience” (Breuch, 2004, p. 1), has become an important learner-centered activity in thesis circles. A thesis circle is a form of group supervision in which a number of students are supervised by one or two academic supervisors while writing their graduation thesis (Rajagopal et al., 2021; Romme & Nijhuis, 2002). In thesis circles, students often receive feedback from multiple peers to compensate for limited, targeted supervisor feedback (Romme & Nijhuis, 2002). Building on their independent work and critical thinking, students de facto act as non-formal co-supervisors of their peers and co-regulate each other’s learning (Romme & Nijhuis, 2002). Reviewing each other’s work helps students make sense of the quality criteria of academic writing; this understanding in turn helps them reflect on their own writing and increases their potential to improve their writing products (Cho & MacArthur, 2011; Huisman et al., 2018; Nicol et al., 2014; Noroozi et al., 2023). One challenge for students is integrating multiple information sources while considering contextual constraints and their personal stance, an emerging theme of critical thinking in higher education (Elder & Paul, 2009; Facione, 2011).

1.2 Multiple Peer Feedback and the Need for Student Support

As suggested in the large-scale assessment literature, involving students in giving each other peer feedback is a cost-effective way to compensate for limited supervisor feedback (Broadbent et al., 2018). However, the quality of peer feedback varies. Compared to teacher feedback, which is based on profound didactic and content expertise (Gielen et al., 2010), peer feedback is not always taken seriously because students are uncertain about the quality of feedback from their equals (Latifi et al., 2021; Taghizadeh et al., 2022). In addition, students do not feel obliged to use peer feedback because ignoring it in their revision has no consequence for their grades (Zhao, 2010). Involving multiple peers in giving feedback seems to be a solution to this, because applying a four-eyes principle is likely to improve feedback quality. Students also suggest having “more reviews as then you had a better chance of getting one of good quality” (Nicol et al., 2014, p. 109).

Research on peer feedback and epistemological understanding suggests that students need training and support in dealing with feedback from reviewers who hold multiple perspectives and have different research interests and foci (Falchikov, 2013; Kuhn, 2020). This support can concern the quality of peer feedback and how students engage in deep processing of feedback (Ajjawi et al., 2021; Berndt et al., 2018), as well as how they integrate multiple feedback into a coherent set of suggestions for improving their writing.

1.3 Students Need Support on Assessing Feedback Quality

Regarding feedback quality, the literature states that students perceive high-quality feedback as attentive to their own work and to their emotions, with detailed suggestions that are useful for improving subsequent tasks (Dawson et al., 2019). Training activities for giving peer feedback are therefore often based on these quality criteria (Hsiao et al., 2015; Nicol & McCallum, 2021), but how students should judge the quality of the feedback they receive has attracted less attention. As pointed out by recent research on feedback literacy, students need to develop “the understandings, capacities and dispositions needed to make sense of information and use it to enhance work or learning strategies” (Carless & Boud, 2018, p. 1316). Without an appropriate level of feedback literacy, it is difficult for students to judge the quality of peer feedback and determine which feedback is useful for improving their own task, especially when they lack sufficient criterion knowledge of quality feedback (i.e., what quality feedback should look like) and strategies for integrating multiple feedback.

1.4 Students Need Support on Integrating Multiple Feedback

As for students’ deep engagement with received feedback, recent attention has focused on supporting students to transform external feedback (from teachers or peers) into internal feedback, which is defined as “the new knowledge that students generate when they compare their current knowledge and competence against some reference information” (Nicol, 2021, p. 2). This theme draws attention to the ultimate goal of feedback practices: to enhance student learning. Generating new knowledge requires students to engage in higher order thinking skills, such as analysis, evaluation and synthesis. According to Nicol’s model, various types of external reference information can stimulate students to generate internal feedback, and the most effective is comparing their own work with that of others (Nicol, 2021). This kind of comparative judgement against concrete external reference information (others’ work) is analogical/holistic: it reasons from what is known about one exemplar or case to infer new information about another exemplar or case (Gentner et al., 2001). Analogical comparisons differ from analytical comparisons based on rubrics consisting of criteria and standards, which students perceive as abstract and difficult (Nicol, 2021; Sadler, 2009). Although comparative judgement seems to make it easier for students to generate internal feedback (Nicol, 2021), the validity of these judgements, that is, whether students can justify their rationales, still needs more research in peer feedback studies (Nicol & McCallum, 2021). In addition, when making comparative judgements, students can find it difficult to “identify the shared principles and rational structures” (Nicol, 2021, p. 6), which requires higher order thinking skills (analysis and synthesis) to generate new knowledge (creation) and to improve their own work.
Therefore, students need guidance to generate high-quality internal feedback from multiple external peer feedback (e.g., prompt questions for processing and taking up feedback). Learning activities should also bridge the gap between students’ internal feedback and how they use this new knowledge to revise and improve their own work.

1.5 Integrating Multiple Peer Feedback: Developing Instructional Design for a Complex Student Activity

Before integrating multiple peer feedback, students need to make evaluative judgements of feedback quality based on multiple assessment criteria of feedback content and form. They also need to organize multiple interpretations of their own work into a coherent action plan, based on task and personal learning goals. This integration involves multiple comparative analyses and the construction of multiple relations among different components of student work and multiple assessment criteria (Kuhn, 2020). Without support, these processes can overload students, especially those who have not yet developed the ability to deal with multiple perspectives (Kuhn, 2020).

Although several didactic strategies have been proposed in peer feedback studies to guide students’ feedback processing (Banihashem et al., 2022; Latifi & Noroozi, 2021), they mainly focus on a single feedback source, either the teacher or one peer at a time (Falchikov, 2013; Nicol & McCallum, 2021; Winstone et al., 2017a, 2017b). In addition, students’ uptake of peer feedback and how efficiently they use it to improve their own work still need more research. Some authors have advocated embedding such feedback processing in the broader context of course instructional design (Berndt et al., 2018; Dawson et al., 2019; Mercader et al., 2020). Taken together, this chapter aims to build such an instructional design to support students’ integration of multiple peer feedback in a thesis circle context, drawing on academic knowledge from feedback literacy research and research on epistemological understanding.

2 Methodology

We follow the paradigm of Educational Design Research (McKenney & Reeves, 2014) to develop our instructional design for the integration of multiple feedback. In particular, we used a design conjecture mapping approach to identify conjectures (i.e., “unproven propositions that are thought to be true” [McKenney & Reeves, 2014, p. 32]) and theoretical principles (e.g., students need support and structure before doing peer review and feedback) for the specific instructional activities of multiple peer feedback on written work. We mapped out “how they are predicted to work together to produce desired outcomes” (Sandoval, 2014, p. 19).

We constructed a conjecture map to illustrate the salient design elements and how they function together to achieve the desired outcomes. Before identifying design characteristics (i.e., dimensions, elements and principles), we carried out a problem analysis by examining the complexities of undergraduate thesis writing and considering students’ cognitive developmental stage, in order to describe the challenges undergraduate students face when dealing with multiple peer feedback in the specific context of thesis circles. Through this analysis, we identified important needs for specific structure, scaffolding and learning activities. Based on the literature study, we formulated design questions and identified design conjectures to determine which features to integrate and which outcomes to aim for.

Based on this conjecture map, we describe an integrated instructional design that supports students in dealing with multiple peer feedback, including sense-making and uptake of feedback. Our design thus synthesizes theories and studies from feedback literacy, the integration of multiple texts in reading comprehension, and cognitive processing and biases in decision making. We describe the theoretical and empirical research base underlying each stage of this design.

2.1 Complexities and Challenges of Multiple Peer Feedback Practices

A graduation thesis is perceived by students as the most challenging academic work in their bachelor’s program because it requires a greater degree of independent learning than previous assessments in the program curriculum (Huang, 2010; Todd et al., 2004). An undergraduate graduation thesis requires students to use critical thinking, research, and writing skills to address a specific problem statement or research question. It requires students to take responsibility and work independently in making decisions about the choice of thesis subject and supervisor, setting goals and making personalized plans, monitoring their own progress and evaluating quality (Todd et al., 2004). The supervisor plays the central role in guiding and supporting this independent learning process in a way that balances student autonomy and guidance (de Kleijn et al., 2012; Todd et al., 2004). Unfortunately, it is not easy to find an appropriate balance, because most senior undergraduates still rely on authority (i.e., supervisors, tutors, more competent peers) to deal with the uncertainty arising from decision making and carrying out tasks (Baxter Magolda, 2001). Independent learning becomes even more challenging in thesis circles, because students are expected to co-supervise their peers (Romme & Nijhuis, 2002), although they are each other’s equals, each works on a different topic (within a shared theme), and they must simultaneously make progress on their own thesis.

From the perspective of epistemological development, independent inquiry requires students to reach the stage of contextual relativism, or to become evaluativists (we use the two terms interchangeably below), recognizing that some solutions are better than others depending on context (Hofer & Pintrich, 1997; Kuhn, 2020). Students need to move beyond the lower stages of dualism (seeing solutions as either right or wrong) and multiplicity (seeing each solution as merely a different perspective). Instructing students to actively engage in critical reflection, perspective taking, and sense-making is likely to help them progress to the stage of contextual relativism (Baxter Magolda, 2001; King & Kitchener, 2002; Moore, 2002).

In writing a bachelor’s thesis, students are expected to achieve contextual relativism (Moore, 2002): to judge an argument by its reasoning and supporting evidence, and by the consistency with which it is made within a certain context (King & Kitchener, 2002); to determine the most reasonable or probable argument based on the quality of justifications; and to draw adequate conclusions “representing the most complete, plausible, or compelling understanding of an issue on the basis of the available evidence” (King & Kitchener, 2002, p. 42). Making appropriate decisions in a thesis context requires students to deal with uncertainty (i.e., knowledge is subjective when facts are unknown; Kurfiss, 1990) and multiplicity (i.e., knowledge is conjectural, uncertain and open to interpretation; Moore, 2002).

Unfortunately, the majority of undergraduate students are at the multiplicity stage: they accept that there are different degrees of sureness and that they can be sure enough if they take a personal stance on an issue (King & Kitchener, 2002). We observe that students at this stage still look for well-defined criteria and standards to evaluate facts and knowledge. They find it difficult to judge something without a clear set of references. These difficulties not only lead to more uncertainty when working on different sections of their own theses, but also create challenges for peer feedback uptake when students have to integrate comments from multiple reviewers. Although dealing with uncertainty and multiplicity is particularly important in thesis circles, where teacher feedback is replaced by peer feedback, research shows that students tend to rely on sources because of their authority rather than their epistemic value (Baxter Magolda, 2001).

Moreover, independent inquiry and students’ epistemological understanding (i.e., epistemic beliefs and cognition) should ideally be developed over time and embedded in the program curriculum. Nonetheless, students do not always receive guidance or support in dealing with uncertainty and multiplicity during decision making (Moore & Felten, 2018; Todd et al., 2004). The implication for instructional design is that we should provide students with just-in-time scaffolds for their thesis writing to support their transition from multiplicity to contextual relativism. In particular, we find it important to make students aware of their biased perception of feedback givers (i.e., preferring teacher over peer feedback), as part of developing student feedback literacy (Carless & Boud, 2018).

2.2 Design Hypothesis

In our endeavor to support students’ integration of multiple peer feedback in thesis circles, we work with the following overarching design hypothesis: asking students to make analogical and analytical comparisons with epistemic reflection helps them integrate multiple peer feedback and transition to contextual relativism.

We use the three building blocks of conjecture mapping to make design choices on the embodiment, mediating processes, and outcomes (Sandoval, 2014) (see Fig. 3.1). The design elements, principles, and their inter-relationships in embodiment and mediating processes are translated from (i) the integrated framework of multiple texts (Barzilai et al., 2018; List & Alexander, 2019), including learner epistemological beliefs, learners’ strategic processing, and argument construction, and (ii) feedback literacy research (e.g., Carless & Boud, 2018; Dawson et al., 2019; Nicol, 2021; Nicol & McCallum, 2021).

3 Instructional Design

3.1 Embodiment

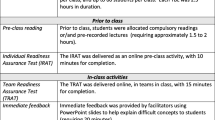

A basic instructional design, as described in frameworks such as the Activity Centred Analysis and Design (ACAD) framework (Yeoman & Carvalho, 2019), specifies the structure of the learning environment (set design, artifacts and tools), resources (set design, materials), the sequence of tasks (epistemic/cognitive design), and social arrangements (social design, working in small groups, roles of receivers and peer reviewers, and their role tasks). To develop a design focused on the uptake of multiple feedback, we identify three stages of feedback processing (preparation, execution and production), based on the literature on cultivating feedback literacy and the integrated framework of multiple texts. Figure 3.2 gives an overview of the fundamental design elements of our instructional design for feedback uptake at these three stages, developed based on our conjecture map and the ACAD framework.

Fig. 3.2 Instructional design for feedback uptake, based on Fig. 3.1 and Yeoman (2019, p. 69)

At the preparation stage, students should be provided with training on feedback literacy and a structure for giving feedback (Ajjawi et al., 2021). Published feedback literacy training materials can be used directly together with our instructional design, such as instructional videos on the three processes of feedback (feed-up, feed-back, feed-forward) (Hattie & Timperley, 2007) and on how to formulate constructive peer feedback. For example, supervisors can use or adapt materials from the Developing Engagement with Feedback Toolkit (DEFT) (Winstone & Nash, 2017). In addition, students acting as feedback receivers can use a cover sheet (Bloxham & Campbell, 2010) to specify their personalized learning goals (i.e., the specific aspects on which they want feedback), accompanying their submitted thesis work.

As for the structure for giving feedback, a peer feedback report for reviewers (see Table 3.1) can be used to summarize in-text comments and classify them based on the assessment criteria of thesis content quality (e.g., what makes a good introduction or literature review). Using a peer feedback report guides reviewers to relate their written comments to the criteria and standards, and it is more likely to elicit process-related feedback (affirmations, argumentations) and feed-forward suggestions (Dirkx et al., 2021).

3.1.1 Training Materials and Activities (at the Preparation Stage)

The training in our instructional design focuses on feedback uptake and epistemic cognition skills. The materials for feedback uptake include evaluative criteria of quality feedback (see the next paragraph), exemplars of good and poor feedback, and strategies that help students become aware of their epistemic beliefs (Table 3.2).

Based on the literature, we select four evaluative criteria of quality feedback (Brookhart, 2008; Dawson et al., 2019; O’Donovan et al., 2021): purposefulness (i.e., the task and the writer’s personalized learning goals are considered in the feedback), validity (i.e., qualitative comments are based on the assessment criteria of thesis content quality), specificity (i.e., the feedback explains why the thesis work does not meet the content criteria), and constructiveness (i.e., the feedback starts with appraisals, then gives critiques, followed by suggestions on how to improve the work). Purposefulness and validity are particularly important for developing students toward the evaluativist stage, because students need to determine whose feedback is more appropriate for their goals (purposefulness) and more helpful for improving their work to meet the thesis assessment criteria (validity). Specificity and constructiveness are also indispensable for effectively delivering the explanations behind purposefulness and validity (Gielen & De Wever, 2015).

The normative models for peer feedback training are often based on analytical comparisons (Evans, 2013; Jonassen, 2011), such as using a rubric with criteria and standards to evaluate a piece of student work and determine its quality level. Unfortunately, analytical comparisons based on established criteria and standards are often abstract and difficult. In the case of feedback quality, the evaluation criteria may be new to students because feedback literacy receives insufficient attention in the program curriculum. Therefore, using an exemplar to show how to apply criteria and standards is regarded as a more effective training method, because students are supported by both analogical and analytical reasoning. An effective exemplar should be “authentic and user-friendly” (Carless & Chan, 2017, p. 930), similar or identical to students’ current assignment (e.g., feedback on thesis work) (Hendry et al., 2011), and explicit about how assessment criteria are applied to the feedback content (i.e., to reveal teachers’ tacit knowledge in evaluative judgements and quality expectations of the thesis work) (Lipnevich et al., 2014) and to the feedback form or technical aspects (i.e., constructiveness). Therefore, past student work with peer feedback reports seems the best choice for exemplars.

As for epistemic cognition skills, the literature shows that evaluativists use more cognitive and metacognitive strategies than people at lower levels of epistemological development (Greene & Yu, 2016). Therefore, reflection questions are used to make students aware of different dimensions of their epistemic beliefs (see Table 3.2) and to guide them in making different types of justifications.

Training activities give students practice in dealing with multiple peer feedback and should simulate the actual feedback processes in the Activity Structure and Discursive Practices of the conjecture map. In addition, supervisors and students should discuss exemplars so that they co-construct the meaning of quality feedback and form reasoned justifications of why a piece of feedback is good, based on their interpretation of the feedback criteria. Co-construction is essential to avoid the pitfall of students regarding exemplars as model answers, which in turn restricts their efforts to produce quality feedback (Carless & Chan, 2017). But before students can co-construct meaning, they first need to engage in deeper thinking processes rather than immediately participating in interactive dialogues with others. Following these rationales, we propose the following training design, based on Carless and Chan’s dialogic model (2017) and a step-wise monologue-dialogue-discussion sequence (Manning & Jobbitt, 2019). Our training design combines analogical/holistic and analytical comparisons and emphasizes sequencing attentive and active listening before interactive dialogue, which is fundamental for feedback uptake.

At the beginning of the training, students are informed of the purpose of using exemplars for feedback uptake training. Each student reads two exemplar feedback reports (A and B) on the same thesis work and carries out holistic/analogical comparisons (as a whole, which feedback report is better?) and analytic comparisons (which one is better per criterion?). During the discussion, students work in pairs over three rounds. In the first round (monologue), Student 1 talks about her/his analyses for three minutes while Student 2 listens and takes notes. In the second round (monologue), Student 2 talks about her/his analyses for three minutes while Student 1 listens and takes notes. The monologue rounds force students to focus on their most important findings, and listening to each other first can stimulate the confrontation of viewpoints and prevent minimal contributions. In the third round (dialogue), both students compare their analyses and jointly determine which exemplar is better, justifying their choice. Supervisors use the strategies in Table 3.2 to probe students’ epistemic beliefs. Finally, the supervisor leads a whole-class discussion of each pair’s findings. Through these training sessions, students come to understand the feedback quality processes they need to apply to their own work in subsequent learning activities.

3.1.2 Activity Structure (at the Execution Stage)

During the training activities, students do not yet relate multiple peer feedback to their own work. The Activity Structure aims to engage feedback receivers in understanding and evaluating individual (intra-feedback processing) and multiple (inter-feedback processing) peer feedback through analogical/holistic and analytical comparisons.

The design principles of the Activity Structure are:

- Align feedback uptake activities with the training materials and activities.
- Analogical and holistic comparisons take place before analytic comparisons.
- Guide decision making based on explanations and justifications.
- Reflect on why decisions change.
- Use organizational tools to make sense of and integrate multiple peer feedback.

As discussed in the introduction, feedback uptake may be influenced by receivers’ perception of reviewers’ level in thesis writing. Therefore, receivers carry out anonymous comparisons, using any Learning Management System (LMS) that supports anonymous peer review procedures (e.g., Canvas). In the following, the two peer reviewers are abbreviated as PR1 and PR2.

Intra-Feedback Understanding with Analogical and Analytical Comparisons. Understanding each reviewer’s feedback is the first step in dealing with feedback. Feedback receivers are usually asked to read each peer feedback report and relate it to the in-text comments added to their own thesis work. Unfortunately, reading alone is not sufficient (Kuhn, 2020), and, as Winstone and Nash note, “Many students don’t even take any notice of their feedback!” (2017, p. 17). As receivers, students need to be equipped and motivated to engage with and use feedback (Winstone et al., 2017a, 2017b). Research on comparative judgement suggests that comparing feedback quality is a purposeful activity that motivates students to read feedback carefully, since otherwise they cannot compare (Lesterhuis et al., 2017).

Through holistic/analogical comparisons, receivers first form a general impression that integrates the comments made by each reviewer, by answering three questions: Is the reviewer positive, negative, or constructive/neutral about your work? Which feedback report is better? Why do you make these choices?

Through analytical comparisons, receivers compare the quality of each peer feedback report based on the criteria of purposefulness, validity, specificity, and constructiveness (see Table 3.3). They also need to justify their choices.

Inter-Feedback Understanding with Anonymous Analogical and Analytical Comparisons. At this stage, receivers need to identify the relationships between the two reviewers’ feedback and select points for the feedback dialogue in the Discursive Practices. Again, receivers carry out two types of comparisons, but this time they focus on the content of the peer reviewers’ feedback. Through holistic/analogical comparisons, receivers now identify a pattern between the two reviewers: Are the two feedback reports complementary or in conflict with each other (see Table 3.4)?

Through analytic comparisons, receivers go through two rounds of comparisons. First, they identify a relation pattern between the two reviewers on each content criterion and justify why it is complementary or conflicting. Second, they compare the two reviewers’ feedback reports and make a tentative decision by indicating whether they agree or disagree with the analytic feedback on each criterion, and justify why. In addition, they select points for the feedback dialogue. Finally, they re-rank the feedback quality they judged during intra-feedback understanding by answering the question: Which feedback report is better now, and why?

3.1.3 Discursive Practices: Student Feedback Dialogue and Self-feedback (at the Production Stage)

The importance of feedback dialogues has been advocated by multiple researchers in feedback literacy (e.g., Ajjawi & Boud, 2018; Carless & Chan, 2017; Winstone et al., 2017a, 2017b). As pointed out by Winstone et al. (2017a, 2017b), feedback receivers must decode the received feedback and respond in a way that allows reviewers to evaluate how their feedback has been perceived. In addition, receivers should play a proactive role in peer feedback dialogue (Zhu & To, 2021). In our Activity Structure, receivers have already decoded the feedback content and evaluated feedback quality (see Tables 3.3 and 3.4) without knowing who the reviewers are.

Before the feedback dialogue, PR1 and PR2 read each other’s feedback report and the receiver’s completed Table 3.4, because the reviewers need to evaluate how their feedback has been perceived and interpreted. As a Discursive Practice, the receiver attends to this evaluation and needs to actively find out “what to do differently, and how” (Winstone & Nash, 2017, p. 17). The feedback dialogue should be structured to facilitate the different role tasks and be aligned with the training activities. We propose adapting Manning and Jobbitt’s model (2019) into a dialogue-monologues-discussion sequence (see Fig. 3.2). First, PR1 and PR2 hold a dialogue on whether they agree or disagree with the relation patterns in Table 3.4. For complementary patterns, PR1 and PR2 elaborate on what the receiver can do. For conflicting patterns, PR1 and PR2 need to find out why these differences occur in their feedback. The receiver listens, takes notes, and reacts to the results of PR1 and PR2’s dialogue. Then PR1 and PR2 take turns responding, in a monologue, to the receiver’s disagreements (in Table 3.4) while the receiver listens and takes notes. Finally, the receiver goes through the discussion points in Table 3.4 in a group discussion with both PR1 and PR2. The receiver then answers three reflective questions: (1) At the beginning of the feedback dialogue session, were you surprised to learn who the reviewers are? If so, why? (2) After this feedback dialogue, which peer feedback report do you find better, PR1’s or PR2’s? (3) What would you change in your own feedback to PR1 and PR2 now, and why? The detailed steps in this feedback dialogue are shown in the Appendix.

At the end of the feedback dialogue, the receiver writes a self-feedback report (see Table 3.5). In this report, the receiver re-evaluates the relation patterns, makes a final decision on each reviewer's feedback for each criterion, and draws up an action plan.

3.2 Mediating Processes

The mediating processes are the hypothesized interactions that are triggered by the Activity Structure and contribute directly to the outcomes (Sandoval, 2014). Stimulating students to construct a personal understanding of external feedback information is a prerequisite for putting that information into action. As described in the Activity Structure, students are prompted to use effective cognitive strategies to understand the feedback of each individual reviewer and the feedback of multiple peers together. Macrostructure strategies, such as identifying main ideas and using organizational tools, are effective in enhancing both intra- and inter-feedback understanding (Castells et al., 2021).

3.2.1 Sense-Making of Intra-Feedback

When making analogical/holistic and analytical comparisons, receivers carry out three kinds of monitoring, whether or not they are aware of it (List & Alexander, 2019):

- comprehension monitoring: students' self-evaluations of their understanding;
- epistemic monitoring: students check whether the feedback conflicts with their prior knowledge or with their epistemic standards for trustworthiness;
- monitoring of cognitive product formation: students monitor their task goals and whether they achieve the expected cognitive outcomes.

These strategies help students make sense of two sets of criteria, those for feedback quality and those for thesis content, and of the relationship between them. For example, a comment about research questions might read: "The specific focus of the study only becomes clear at the end". Receivers examine to what extent this comment is relevant to the criterion of research questions (validity; comprehension monitoring). They also check it against their prior knowledge about research questions (epistemic monitoring). Is this comment elaborated with explanations? Is focus a characteristic of research questions only, or does it relate more to the introduction section?

3.2.2 Sense-Making of Inter-Feedback

Several comparisons and reflective questions guide receivers to make sense of inter-feedback. Receivers first construct a mental representation of each peer reviewer's feedback (i.e., a holistic judgement). They then compare and contrast the ways multiple reviewers interpret multiple criteria (i.e., as complementary or conflicting). Finally, they synthesize complementary comments and reconcile conflicting ones (see Table 3.4). Integration of multiple peer feedback is likely to take place when receivers:

- identify relation patterns between the two reviewers;
- combine and organize the information into a coherent whole;
- connect multiple inter-feedback links (e.g., whether the two reviewers agree with each other holistically or analytically);
- decide which reviewer's feedback to agree with.

3.2.3 Awareness of Epistemic Beliefs and Cognitive Bias

As discussed in the introduction, undergraduates need support in developing their epistemic beliefs from multiplicity towards contextual relativism, so that they can deal with the high complexity of both their own thesis work and multiple peer feedback. Epistemic beliefs are students' feelings and ideas about the nature and sources of knowledge (Hofer & Pintrich, 1997). They play an important role in peer feedback activities (Banihashem et al., 2023; Noroozi, 2018, 2022). Table 3.2 lists four dimensions of epistemic beliefs that are likely to influence how students understand and judge others' work. It also lists instructional strategies that prompt students to examine these beliefs (Bråten et al., 2011).

As for cognitive bias, human mental processing relies heavily on analogical reasoning. When we encounter a new situation, we search our schemas for similar knowledge or experience to help us make decisions. Unfortunately, prior knowledge is not always a reliable source: memories fade, and past experience was situated in a different context. The Activity Structure therefore explicitly asks students to compare peer feedback reports with their prior experiences (e.g., training activities, earlier comparison results). This makes their reliance on prior knowledge visible and open to scrutiny.

Our conjecture map ends with the activity in which students complete a self-feedback report (Table 3.5). We do not elaborate on how students use the feedback to actually improve their work.

4 Outcomes

Supporting students in the integration of multiple peer feedback has three expected learning outcomes. First, both analogical/holistic and analytical comparisons are likely to improve students' evaluative judgement, because students gain a better understanding of the criteria for quality feedback and quality thesis work. Second, the different types of comparisons and questions engage students in all four dimensions of epistemic beliefs (Table 3.2). This engagement in turn contributes to their development towards an evaluativist stance (contextual relativism). Third, asking students to fill out sense-making tables (Tables 3.3 and 3.4) and to generate a self-feedback report (Table 3.5) is likely to result in improved work (Nicol et al., 2014; Wu et al., 2019).

5 Conclusion

Research on peer feedback has grown rapidly in both volume and diversity. However, each research school has its own specific focus, which makes it difficult for teachers to interconnect all of these aspects in their instructional design (Nieminen et al., 2022). With this in mind, we have integrated research findings into a concrete instructional design with activity descriptions. We hope that this design can support teachers in designing peer review activities in thesis circles. In future studies, we will implement each step of the Activity Structure to corroborate the occurrence of the Mediating Processes and Outcomes.

For peer feedback to be effective, students need proper training and repeated practice in processing and integrating multiple peer feedback. Only then can the integrated peer feedback effectively replace supervisor feedback. Inevitably, supervisors must invest some transition costs in training and practice at the outset. Fortunately, thesis circles often involve a group of supervisors who design and organize activities together. Through such collaboration, each supervisor's transition costs will pay off in the long term as the proposed activities of our design are implemented.

Although this chapter focuses on feedback receivers, we are aware that feedback effectiveness cannot depend on receivers' uptake alone. Feedback is always interactive, and reviewers' feedback influences how uptake takes place (Latifi et al., 2023). Still, when students are supported with these activities, materials (i.e., Tables 3.2, 3.3, 3.4 and 3.5) and reflective questions, they are more likely to change their own feedback-giving behavior.

Finally, although this chapter focuses on feedback from multiple reviewers in thesis writing, the support in this design can also help students deal with real-world discussions, which often involve multiple voices and opinions. Integrating epistemological development into instructional design is important for students to gradually develop from multiplicity to contextual relativism. This deserves more attention in undergraduate curriculum design.

References

Ajjawi, R., & Boud, D. (2018). Examining the nature and effects of feedback dialogue. Assessment and Evaluation in Higher Education, 43(7), 1106–1119. https://doi.org/10.1080/02602938.2018.1434128

Ajjawi, R., Kent, F., Broadbent, J., Tai, J. H.-M., Bearman, M., & Boud, D. (2021). Feedback that works: A realist review of feedback interventions for written tasks. Studies in Higher Education, 1–14. https://doi.org/10.1080/03075079.2021.1894115

Banihashem, S. K., Noroozi, O., Biemans, H. J. A., & Tassone, V. C. (2023). The intersection of epistemic beliefs and gender in argumentation performance. Innovations in Education and Teaching International. https://doi.org/10.1080/14703297.2023.2198995

Banihashem, S. K., Noroozi, O., van Ginkel, S., Macfadyen, L. P., & Biemans, H. J. A. (2022). A systematic review of the role of learning analytics in enhancing feedback practices in higher education. Educational Research Review, 100489. https://doi.org/10.1016/j.edurev.2022.100489

Barzilai, S., Zohar, A. R., & Mor-Hagani, S. (2018). Promoting integration of multiple texts: A review of instructional approaches and practices. Educational Psychology Review, 30(3), 973–999. https://doi.org/10.1007/s10648-018-9436-8

Baxter Magolda, M. B. (2001). Making their own way: Narratives for transforming Higher Education to promote self-development. Stylus Publishing. https://books.google.nl/books?id=KPvxDwAAQBAJ

Berndt, M., Strijbos, J.-W., & Fischer, F. (2018). Effects of written peer-feedback content and sender’s competence on perceptions, performance, and mindful cognitive processing. European Journal of Psychology of Education, 33(1), 31–49. https://doi.org/10.1007/s10212-017-0343-z

Bloxham, S., & Campbell, L. (2010). Generating dialogue in assessment feedback: Exploring the use of interactive cover sheets. Assessment and Evaluation in Higher Education, 35(3), 291–300. https://doi.org/10.1080/02602931003650045

Bråten, I., Britt, M. A., Strømsø, H. I., & Rouet, J.-F. (2011). The role of epistemic beliefs in the comprehension of multiple expository texts: Toward an integrated model. Educational Psychologist, 46(1), 48–70. https://doi.org/10.1080/00461520.2011.538647

Breuch, L.-A. K. (2004). Virtual peer review: Teaching and learning about writing in online environments. State University of New York Press. http://site.ebrary.com/id/10594775

Broadbent, J., Panadero, E., & Boud, D. (2018). Implementing summative assessment with a formative flavour: A case study in a large class. Assessment and Evaluation in Higher Education, 43(2), 307–322. https://doi.org/10.1080/02602938.2017.1343455

Brookhart, S. M. (2008). How to give effective feedback to your students. Association for Supervision and Curriculum Development.

Carless, D., & Boud, D. (2018). The development of student feedback literacy: Enabling uptake of feedback. Assessment and Evaluation in Higher Education, 43(8), 1315–1325. https://doi.org/10.1080/02602938.2018.1463354

Carless, D., & Chan, K. K. H. (2017). Managing dialogic use of exemplars. Assessment and Evaluation in Higher Education, 42(6), 930–941. https://doi.org/10.1080/02602938.2016.1211246

Castells, N., Minguela, M., Solé, I., Miras, M., Nadal, E., & Rijlaarsdam, G. (2021). Improving questioning–answering strategies in learning from multiple complementary texts: An intervention study. Reading Research Quarterly. https://doi.org/10.1002/rrq.451

Cho, K., & MacArthur, C. (2011). Learning by reviewing. Journal of Educational Psychology, 103(1), 73–84. https://doi.org/10.1037/a0021950

Dawson, P., Henderson, M., Mahoney, P., Phillips, M., Ryan, T., Boud, D., & Molloy, E. (2019). What makes for effective feedback: Staff and student perspectives. Assessment and Evaluation in Higher Education, 44(1), 25–36. https://doi.org/10.1080/02602938.2018.1467877

de Kleijn, R., Meijer, P., Brekelmans, M., & Pilot, A. (2012). Curricular goals and personal goals in master’s thesis projects: Dutch student-supervisor dyads. International Journal of Higher Education, 1, 1–11. https://doi.org/10.5430/ijhe.v2n1p1

Dirkx, K., Joosten-ten Brinke, D., Arts, J., & van Diggelen, M. (2021). In-text and rubric-referenced feedback: Differences in focus, level, and function. Active Learning in Higher Education, 22(3), 189–201. https://doi.org/10.1177/1469787419855208

Elbow, P. (1998). Writing without teachers (2nd ed.). Oxford University Press.

Elder, L., & Paul, R. (2009). Close reading, substantive writing and critical thinking: Foundational skills essential to the educated mind. Gifted Education International, 25(3), 286–295. https://doi.org/10.1177/026142940902500310

Evans, C. (2013). Making sense of assessment feedback in higher education. Review of Educational Research, 83(1), 70–120. https://doi.org/10.3102/0034654312474350

Facione, P. (2011). Critical thinking: What it is and why it counts. Retrieved Mar. 9th, 2020, from https://www.insightassessment.com/article/critical-thinking-what-it-is-and-why-it-counts-pdf

Falchikov, N. (2013). Improving assessment through student involvement: Practical solutions for aiding learning in higher and further education. Taylor & Francis.

Gentner, D., Holyoak, K. J., & Kokinov, B. N. (2001). The analogical mind: Perspectives from cognitive science. MIT Press.

Gielen, M., & De Wever, B. (2015). Structuring peer assessment: Comparing the impact of the degree of structure on peer feedback content. Computers in Human Behavior, 52, 315–325. https://doi.org/10.1016/j.chb.2015.06.019

Gielen, S., Tops, L., Dochy, F., Onghena, P., & Smeets, S. (2010). A comparative study of peer and teacher feedback and of various peer feedback forms in a secondary school writing curriculum. British Educational Research Journal, 36(1), 143–162. https://doi.org/10.1080/01411920902894070

Greene, J. A., & Yu, S. B. (2016). Educating critical thinkers: The role of epistemic cognition. Policy Insights from the Behavioral and Brain Sciences, 3(1), 45–53. https://doi.org/10.1177/2372732215622223

Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1), 81–112. https://doi.org/10.3102/003465430298487

Hendry, G. D., Bromberger, N., & Armstrong, S. (2011). Constructive guidance and feedback for learning: the usefulness of exemplars, marking sheets and different types of feedback in a first year law subject. Assessment & Evaluation in Higher Education, 36(1), 1–11. https://doi.org/10.1080/02602930903128904

Hofer, B. K., & Pintrich, P. R. (1997). The development of epistemological theories: Beliefs about knowledge and knowing and their relation to learning. Review of Educational Research, 67(1), 88–140. https://doi.org/10.3102/00346543067001088

Hsiao, Y. P., Brouns, F., van Bruggen, J., & Sloep, P. B. (2015). Effects of training peer tutors in content knowledge versus tutoring skills on giving feedback to help tutees’ complex tasks. Educational Studies, 41(5), 499–512. https://doi.org/10.1080/03055698.2015.1062079

Huang, L.-S. (2010). Seeing eye to eye? The academic writing needs of graduate and undergraduate students from students’ and instructors’ perspectives. Language Teaching Research, 14(4), 517–539. https://doi.org/10.1177/1362168810375372

Huisman, B., Saab, N., van Driel, J., & van den Broek, P. (2018). Peer feedback on academic writing: Undergraduate students’ peer feedback role, peer feedback perceptions and essay performance. Assessment and Evaluation in Higher Education, 1–14. https://doi.org/10.1080/02602938.2018.1424318

Jonassen, D. H. (2011). Learning to solve problems: A handbook for designing problem-solving learning environments. Routledge.

King, P., & Kitchener, K. (2002). The reflective judgment model: Twenty years of research on epistemic cognition. In B. K. Hofer, & P. R. Pintrich (Eds.), Personal epistemology: The psychology of beliefs about knowledge and knowing (pp. 37–61). Taylor & Francis Group.

Kuhn, D. (2020). Why is reconciling divergent views a challenge? Current Directions in Psychological Science, 29(1), 27–32. https://doi.org/10.1177/0963721419885996

Kurfiss, J. G. (1990). Critical thinking: Theory, research, practice, and possibilities. Teaching Sociology, 18, 581.

Latifi, S., Noroozi, O., & Talaee, E. (2021). Peer feedback or peer feedforward? Enhancing students’ argumentative peer learning processes and outcomes. British Journal of Educational Technology, 52(2), 768–784. https://doi.org/10.1111/bjet.13054

Latifi, S., & Noroozi, O. (2021). Supporting argumentative essay writing through an online supported peer-review script. Innovations in Education and Teaching International, 58(5), 501–511. https://doi.org/10.1080/14703297.2021.1961097

Latifi, S., Noroozi, O., & Talaee, E. (2023). Worked example or scripting? Fostering students’ online argumentative peer feedback, essay writing and learning. Interactive Learning Environments, 31(2), 655–669. https://doi.org/10.1080/10494820.2020.1799032

Lesterhuis, M., Verhavert, S., Coertjens, L., Donche, V., & De Maeyer, S. (2017). Comparative judgement as a promising alternative to score competences. In E. Cano, & G. Ion (Eds.), Innovative practices for higher education assessment and measurement (pp. 119–138). IGI Global. https://doi.org/10.4018/978-1-5225-0531-0.ch007

Lipnevich, A. A., McCallen, L. N., Miles, K. P., & Smith, J. K. (2014). Mind the gap! Students' use of exemplars and detailed rubrics as formative assessment. Instructional Science, 42(4), 539–559.

List, A., & Alexander, P. A. (2019). Toward an integrated framework of multiple text use. Educational Psychologist, 54(1), 20–39. https://doi.org/10.1080/00461520.2018.1505514

Manning, S. J., & Jobbitt, T. (2019). Engaged and interactive peer review: Introducing peer review circles. RELC Journal, 50(3), 475–482. https://doi.org/10.1177/0033688218791832

McKenney, S., & Reeves, T. C. (2014). Educational design research. In J. M. Spector, M. D. Merrill, J. Elen, & M. J. Bishop (Eds.), Handbook of research on educational communications and technology (pp. 131–140). Springer. https://doi.org/10.1007/978-1-4614-3185-5_11

Mercader, C., Ion, G., & Díaz-Vicario, A. (2020). Factors influencing students’ peer feedback uptake: Instructional design matters. Assessment and Evaluation in Higher Education, 45(8), 1169–1180. https://doi.org/10.1080/02602938.2020.1726283

Moore, J. L., & Felten, P. (2018). Academic development in support of mentored undergraduate research and inquiry. International Journal for Academic Development, 23(1), 1–5. https://doi.org/10.1080/1360144X.2018.1415020

Moore, W. S. (2002). Understanding learning in a postmodern world: Reconsidering the Perry scheme of intellectual and ethical development. In B. K. Hofer, & P. R. Pintrich (Eds.), Personal epistemology: The psychology of beliefs about knowledge and knowing (pp. 17–36). Taylor & Francis Group.

Nicol, D. (2021). The power of internal feedback: Exploiting natural comparison processes. Assessment and Evaluation in Higher Education, 46(5), 756–778. https://doi.org/10.1080/02602938.2020.1823314

Nicol, D., & McCallum, S. (2021). Making internal feedback explicit: Exploiting the multiple comparisons that occur during peer review. Assessment and Evaluation in Higher Education, 1–19. https://doi.org/10.1080/02602938.2021.1924620

Nicol, D., Thomson, A., & Breslin, C. (2014). Rethinking feedback practices in higher education: A peer review perspective. Assessment and Evaluation in Higher Education, 39(1), 102–122. https://doi.org/10.1080/02602938.2013.795518

Nieminen, J. H., Bearman, M., & Tai, J. (2022). How is theory used in assessment and feedback research? A critical review. Assessment and Evaluation in Higher Education, 1–18. https://doi.org/10.1080/02602938.2022.2047154

Noroozi, O. (2018). Considering students’ epistemic beliefs to facilitate their argumentative discourse and attitudinal change with a digital dialogue game. Innovations in Education and Teaching International, 55(3), 357–365. https://doi.org/10.1080/14703297.2016.1208112

Noroozi, O. (2022). The role of students’ epistemic beliefs for their argumentation performance in higher education. Innovations in Education and Teaching International, 1–12. https://doi.org/10.1080/14703297.2022.2092188

Noroozi, O., Banihashem, S. K., Biemans, H. J. A., Smits, M., Vervoort, M. T. W., & Verbaan, C. (2023). Design, implementation, and evaluation of an online supported peer feedback module to enhance students’ argumentative essay quality. Education and Information Technologies, 1–28. https://doi.org/10.1007/s10639-023-11683-y

O’Donovan, B. M., den Outer, B., Price, M., & Lloyd, A. (2021). What makes good feedback good? Studies in Higher Education, 46(2), 318–329. https://doi.org/10.1080/03075079.2019.1630812

Rajagopal, K., Vrieling-Teunter, E., Hsiao, Y. P., Van Seggelen-Damen, I., & Verjans, S. (2021). Guiding thesis circles in higher education: Towards a typology. Professional Development in Education, 1–18. https://doi.org/10.1080/19415257.2021.1973072

Romme, G., & Nijhuis, J. (2002). Collaborative learning in thesis rings.

Sadler, D. R. (2009). Indeterminacy in the use of preset criteria for assessment and grading. Assessment and Evaluation in Higher Education, 34(2), 159–179. https://doi.org/10.1080/02602930801956059

Sandoval, W. (2014). Conjecture mapping: An approach to systematic educational design research. Journal of the Learning Sciences, 23(1), 18–36. https://doi.org/10.1080/10508406.2013.778204

Taghizadeh Kerman, N., Noroozi, O., Banihashem, S. K., Karami, M., & Biemans, H. J. A. (2022). Online peer feedback patterns of success and failure in argumentative essay writing. Interactive Learning Environments, 1–10. https://doi.org/10.1080/10494820.2022.2093914

Todd, M., Bannister, P., & Clegg, S. (2004). Independent inquiry and the undergraduate dissertation: Perceptions and experiences of final-year social science students. Assessment and Evaluation in Higher Education, 29(3), 335–355. https://doi.org/10.1080/0260293042000188285

Winstone, N. E., & Nash, R. A. (2017). The “Developing Engagement with Feedback Toolkit (DEFT)”: Integrating assessment literacy into course design. In S. Elkington & C. Evans (Eds.), Transforming assessment in higher education: A case study series (pp. 48–52). Higher Education Academy.

Winstone, N. E., Nash, R. A., Parker, M., & Rowntree, J. (2017a). Supporting learners’ agentic engagement with feedback: A systematic review and a taxonomy of recipience processes. Educational Psychologist, 52(1), 17–37. https://doi.org/10.1080/00461520.2016.1207538

Winstone, N. E., Nash, R. A., Rowntree, J., & Parker, M. (2017b). ‘It’d be useful, but I wouldn’t use it’: Barriers to university students’ feedback seeking and recipience. Studies in Higher Education, 42(11), 2026–2041. https://doi.org/10.1080/03075079.2015.1130032

Wu, W.-H., Kao, H.-Y., Wu, S.-H., & Wei, C.-W. (2019). Development and evaluation of affective domain using student's feedback in entrepreneurial Massive Open Online Courses. Frontiers in Psychology, 10. https://doi.org/10.3389/fpsyg.2019.01109

Yeoman, P., & Carvalho, L. (2019). Moving between material and conceptual structure: Developing a card-based method to support design for learning. Design Studies, 64, 64–89. https://doi.org/10.1016/j.destud.2019.05.003

Zhao, H. (2010). Investigating learners’ use and understanding of peer and teacher feedback on writing: A comparative study in a Chinese English writing classroom. Assessing Writing, 15(1), 3–17. https://doi.org/10.1016/j.asw.2010.01.002

Zhu, Q., & To, J. (2021). Proactive receiver roles in peer feedback dialogue: Facilitating receivers’ self-regulation and co-regulating providers’ learning. Assessment and Evaluation in Higher Education, 1–13. https://doi.org/10.1080/02602938.2021.2017403

Appendix: Steps in Student Feedback Dialogue

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Cite this chapter

Hsiao, Y.P., Rajagopal, K. (2023). Support Student Integration of Multiple Peer Feedback on Research Writing in Thesis Circles. In: Noroozi, O., De Wever, B. (eds) The Power of Peer Learning. Social Interaction in Learning and Development. Springer, Cham. https://doi.org/10.1007/978-3-031-29411-2_3

DOI: https://doi.org/10.1007/978-3-031-29411-2_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-29410-5

Online ISBN: 978-3-031-29411-2

eBook Packages: Education, Education (R0)