Abstract

Although misinformation is not a new problem, questions about its prevalence, its public impact, and how to combat it have taken on new urgency. An obvious solution to the problem of misinformation is to offer corrections (or debunkings) designed to clarify what is true and what is false. But corrections are not a panacea. Given the scope of the misinformation problem, we must consider: (1) which misinformation to prioritise for correction; (2) how to best correct misinformation; and (3) what else can be done pre-emptively to protect the public from future misdirection. Solutions must also be tailored to recognise cultural contexts. In deciding whether to correct, the source of the misinformation, its likely audience, and its harm should all be considered. Correction impact can be maximised by using REACT: repetition, empathy, alternative explanations, credible sources, and timeliness. Beyond correction, we must consider proactive solutions to build audience awareness and resistance. Promoting ‘sticky’ high-quality information, warning people against common myths and misleading techniques, encouraging health and information literacy, and designing platforms more resilient to misinformation efforts are all essential components in the management of infodemics now and in the future.

7.1 Introduction

Although misinformation is not a new problem, questions about its prevalence, its public impact, and how to combat it have recently taken on new urgency. Declining trust in social institutions is undermining experts and sowing confusion, while the expansion of social media and internet use has enabled an abundance of information, including false or misleading information, to spread more rapidly, especially during a disease outbreak. WHO calls this an ‘infodemic’ (WHO 2022).

An obvious solution to the problem of misinformation is to offer corrections (or debunkings) to clarify what is true and what is false. Broadly speaking, we know that corrections can mitigate misperceptions on a specific issue, but related attitudes and behaviours are more resistant to change (Porter and Wood 2019; Swire et al. 2017a). In some cases, correcting a single inaccurate gateway belief (e.g. the misconception that scientists disagree about climate change) can lead to sustained attitude change (an understanding that scientists agree that climate change is real and dangerous), which can then lead to policy support (van der Linden et al. 2019). In other cases, even when people seemingly accept the correction and acknowledge the inaccuracy of the misinformation, their beliefs continue to be influenced by it (Walter and Tukachinsky 2020). This continued influence effect is more likely when the misinformation implicates a central identity. For example, one study found that partisans were more likely to accept corrections when they targeted misinformation about misconduct by a single member of their preferred party rather than misconduct by their party in general (Ecker and Ang 2019).

Despite these limitations, corrections remain an important tool to address misinformation. Corrections can come from a variety of sources, including social peers, experts in a particular domain, and fact-checking or news organisations. These sources are complementary; peer correction is especially important given the scale of misinformation (Bode and Vraga 2021), but relies on experts and news organisations to provide the groundwork for the public and platforms to respond to misinformation.

To address misinformation, three related themes must be considered: (1) which misinformation to prioritise for correction, (2) how to best correct misinformation, and (3) what else can be done pre-emptively to protect the public from future misdirection. Additionally, corrections and other pre-emptive solutions for misinformation must be tailored to recognise cultural contexts. To date, much of the research regarding correction and best practices focuses on Western-style democracies. Identifying who serves as a trusted expert remains difficult, as it differs within each community. While many countries rated WHO highly for their COVID-19 response, this perception was not universal, or even consistent within individual countries (Bell et al. 2020).

Increasingly, research suggests that social media platforms focus largely on identifying and correcting English-language misinformation and, to a large extent, ignore non-English-speaking communities and many misinformation hotspots around the world (Avaaz 2021; Wong 2021). Likewise, modern fact-checking originated in the United States and remains more common in countries with a high degree of democratic governance (Amazeen 2020). Research and scholarship must pay more attention to language and cultural factors to tailor solutions to specific contexts (Malhotra 2020; Winters et al. 2021).

7.2 Prioritising Corrections

The scale of misinformation on social media means it may be impossible to respond adequately to all misinformation. Therefore, consideration of the source of the misinformation, the audience it is likely to reach, and the content itself can help provide a focus for which misinformation to prioritise for correction.

7.2.1 Misinformation Source

Not all sources of misinformation are equally important or easy to correct, so the 3 Ps of proximate, prominent, and persuasive sources should be prioritised. Proximity refers to the perceived social distance of a source. People are more likely to believe (mis)information when it is shared by their peers or those close to them, making peer corrections particularly valuable (Malhotra 2020; Margolin et al. 2017; Walter et al. 2020).

The second consideration is the prominence or reach of the source. A study conducted by the Reuters Institute found that although public figures contributed to only 20% of the total misinformation analysed in the study, these posts accounted for 69% of total engagement (Brennen et al. 2020). Opinion leaders or social media personalities wield considerable influence, and misinformation stemming from them can be particularly problematic (Pang and Ng 2017). Recent research suggests just 12 people, called the ‘Disinformation Dozen’, are responsible for the majority of anti-vaccine content on Facebook and Twitter (Ahmed 2021).

The third consideration is source persuasiveness or credibility. Misinformation coming from a trusted or seemingly expert source is likely to be especially persuasive, and the use of fake experts is a common tactic in disinformation campaigns (Cook 2020).

7.2.2 Misinformation Audience

Source proximity, prominence, and persuasiveness depend upon the audience. Considering the alignment between the source of misinformation and its likely audience is critical. When the misinformation source and content align with audience values, the misinformation is more likely to generate misperceptions.

A separate audience feature that should also be considered is the insularity of the audience. Misinformation shared within a receptive echo chamber makes correction more difficult; individuals turn to others for social support, which leads to continued misperception (Chou et al. 2018). On the other hand, finding trusted allies who can speak to their peers within otherwise insular groups facilitates correction.

7.2.3 Misinformation Content

Finally, the features of the misinformation itself, especially its salience, accuracy, and potential impact on its audiences, all bear on whether it should be prioritised for correction.

One important consideration is the salience or prominence of the misinformation itself beyond the actual source. Repeating messages makes people believe them more, even if the message is false and conflicts with existing knowledge (Fazio et al. 2015). When misinformation becomes salient, there is an increased need to address it before it can circulate even further.

The line between truth and falsehood is often blurred, but misinformation that directly counters clear expert consensus and concrete data should be prioritised (Vraga and Bode 2020a). Accessible and easy-to-understand materials from credible governing bodies or organisations, in particular, facilitate peer correction (Vraga and Bode 2021).

Finally, of paramount importance, is consideration of the potential negative repercussions of misinformation. While direct, immediate harm from misinformation can be critical (e.g. vaccine misinformation creating vaccine hesitancy), so, too, are potential longer-term effects such as decreased trust in scientists, health literature, or health professionals (e.g. vaccine misinformation lessening trust in doctors or nurses). Misinformation with the potential to cause individual or community harm should be prioritised in correction efforts.
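The considerations in this section can be read as a triage checklist. The following is a minimal sketch of how a monitoring team might record them, not a method proposed in this chapter: the `Claim` fields, the equal weighting of criteria, and the function names are all illustrative assumptions, and each flag is a human judgement rather than something computed. Audience insularity is left out because it affects how hard a correction is rather than how urgent it is.

```python
from dataclasses import dataclass, fields


@dataclass
class Claim:
    """Reviewer judgements about one piece of misinformation (hypothetical schema)."""
    text: str
    # Source: the 3 Ps
    proximate_source: bool = False
    prominent_source: bool = False
    persuasive_source: bool = False
    # Audience
    aligns_with_audience_values: bool = False
    # Content
    salient: bool = False
    contradicts_expert_consensus: bool = False
    potential_harm: bool = False


def priority_score(claim: Claim) -> int:
    """Count the flagged criteria; equal weights are an assumption."""
    values = (getattr(claim, f.name) for f in fields(claim))
    return sum(1 for v in values if v is True)


def triage(claims: list[Claim]) -> list[Claim]:
    """Order claims from most to fewest flagged criteria (ties keep input order)."""
    return sorted(claims, key=priority_score, reverse=True)
```

In practice a team would likely weight potential harm more heavily than the other criteria, in line with the emphasis placed on it above; the unweighted count is only the simplest starting point.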

7.3 How to Correct: REACT

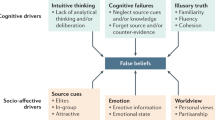

Once a decision has been made to correct a specific piece of misinformation, it is important to do so effectively. While corrections can help reduce misconceptions, they should not be expected to eliminate all belief in misinformation at the group level. To maximise corrective impact, we have summarised best practices using the acronym REACT (for additional summaries, see Lewandowsky et al. 2020; Paynter et al. 2019) (Fig. 7.1).

7.3.1 Repetition

While repetition has historically been exploited by propagandists and advertisers, it can also be used as a force for good when debunking misinformation through repetition of relevant core facts. Claim repetition can strengthen the perception of a social consensus, even if it originates from just a single source (Weaver et al. 2007). It can also be useful to refer to multiple sources of factual information or provide information on social norms, be that an expert consensus (Cook 2016), peer consensus (Ecker et al. 2022b; Vraga and Bode 2020b), or social endorsement of the correction (Vlasceanu and Coman 2022). Finally, even the best corrections may only produce temporary effects in reducing misperceptions, thus necessitating repeated intervention (Paynter et al. 2019; Swire et al. 2017b).

7.3.2 Empathy

When correcting misconceptions, it is important to consider how others may have arrived at a false belief and what their underlying concerns might be. Debunking messages should generally be fact-oriented and civil. The false information and underlying logical flaws should be addressed rather than attacking or ridiculing the misinformation source. Respectful engagement is important, even when the protagonists are not susceptible to rational argument, due to the potentially detrimental impact on observers. Observers often update their beliefs when they see someone else being corrected (often on social media) in a calm and evidence-based manner (Steffens et al. 2019; Vraga and Bode 2020b). Aggressive argumentation has been found to limit the credibility of the debunker (König and Jucks 2019), although uncivil corrections may still reduce misperceptions among some bystanders (Bode et al. 2020). Empathetic corrections should try to appreciate an audience’s worldview; for example, when debunking climate change misinformation, a conservative audience may be more susceptible to framing in terms of economic opportunities rather than government intervention (Kahan 2010). Of course, there are limits to this approach, and in the case of intentionally designed disinformation campaigns, undermining the credibility of the dis-informant may be warranted (MacFarlane et al. 2021; Walter and Tukachinsky 2020).

7.3.3 Alternative Explanation

Arguably the most important component of any correction is that it goes beyond merely challenging a false claim or labelling it as false. If available, corrections should provide factual alternative information, point to evidence, and explain why the misinformation is false (Seifert 2002; van der Meer and Jin 2020). Not only does this make a correction more persuasive, it also provides details that are stored in an individual’s memory and, thus, facilitates future retrieval of the corrective information (Swire et al. 2017b). These explanations need not be elaborate, and effective refutations can even be provided in the concise format of social-media posts (Ecker et al. 2020b).

7.3.4 Credible Source

The most important characteristic of a credible source is its perceived trustworthiness (Guillory and Geraci 2013). While expertise can also matter, especially for the debunking of science-related misinformation (Vraga and Bode 2017; Zhang et al. 2021), a non-expert source can still be effective, whereas a non-trusted source cannot (Ecker and Antonio 2021). The sources that will be perceived as credible will naturally vary across communities, cultural groups and countries. In-group sources, and especially known peers, should be used wherever available (Gallois and Liu 2021; Margolin et al. 2017; Pink et al. 2021). This also highlights the importance of building and maintaining high levels of community trust for organisations and individuals who seek to actively debunk misinformation in the public realm.

7.3.5 Timeliness

Even though the immediacy of a correction may not have a strong impact on the belief updating process itself (Johnson and Seifert 1994), the speed with which misinformation can travel through the contemporary information landscape (Vosoughi et al. 2018) incentivises quick debunking responses. Even if time does not allow for full-blown, detailed refutations, swift rebuttal of particularly concerning pieces of misinformation is still advised.

Critically, any debunking intervention is generally better than no intervention at all. While there are cases where misinformation carries lower risk of harm and can be ‘left alone’ – specifically, where the misinformation is gaining little traction or is deemed inherently harmless – correction is generally beneficial and carries little risk of harm itself. Indeed, concerns regarding potential backfire effects of corrections have been overblown (Ecker et al. 2022a; Swire-Thompson et al. 2020). Moreover, some design factors have been shown to matter less than initially assumed. For example, the order in which a correction presents the to-be-debunked misinformation and the associated facts (i.e. a ‘myth-fact’ or ‘fact-myth’ approach) seems largely inconsequential (Martel et al. 2021; Swire-Thompson et al. 2021).

Another example is the use of stories. While narrative elements can enhance engagement with corrections (Lazić and Žeželj 2021) with a receptive audience, non-narrative debunking that is fact-focused can be just as effective (Ecker et al. 2020a). Ultimately, corrections should be made accessible and relevant to their audience through the use of different techniques: (i) clear, accurate, and engaging graphics or visual simulations (Danielson et al. 2016; Thacker and Sinatra 2019); (ii) analogies (Danielson et al. 2016); or (iii) humour (Vraga et al. 2019).

7.4 Beyond Corrections: Proactive Approaches to Misinformation

Correction is inherently a reactive solution, because it occurs after misinformation has begun to spread. Misinformation is also not bound by reality; it can be created quickly and have considerable novelty and emotional appeal that further encourages its dissemination (Acerbi 2019; Vosoughi et al. 2018). As debunking requires considerable resources, it should be paired with other ways of reducing misinformation, such as promoting high-quality information, ‘prebunking’ misinformation, building health and information literacy, and redesigning media platforms.

7.4.1 Promoting High-Quality Information

Particularly in situations of great uncertainty, when timely access to high-quality information is not available (an ‘information void’), people may form more misconceptions or engage in increased speculation. Moreover, when made available, official recommendations compete with misinformation for attention. If high-quality information is to be heard and understood, it needs to be made ‘stickier’ than misinformation: better at grabbing attention and more memorable.

Many of these recommendations for making information ‘sticky’ echo best practices for creating and sharing effective corrections. Highly trusted community leaders should be involved in the design and dissemination of official information, such as trusted military personnel chosen as the public face of the COVID-19 vaccine rollout in Portugal (Hatton 2021). This aims to ensure that information appeals to the target communities’ concerns, cultural values, and priorities. Materials should be as compelling and accessible as possible, supplementing facts with personal narratives and appeals to positive emotions when appropriate (Lazić and Žeželj 2021), using straightforward content and accessible language to account for low audience literacy, and delivering messages through a variety of media channels such as TV or posters for those without internet access.

Contradictory scientific or health information can potentially confuse audiences and undermine trust in guiding institutions (Nagler et al. 2019), so creators of high-quality information should be as transparent as possible in disclosing the sources of information, the available evidence, and who was consulted. An acknowledgement of changes in evidence or recommendations, as well as the admission of errors, is also necessary (Ghio et al. 2021; Hyland-Wood et al. 2021).

7.4.2 Prebunking

‘Prebunking’ or ‘inoculation’ comprises two components: offering a warning about misinformation and pre-emptively refuting misinformation or explaining misleading techniques to build resilience against future attempts at deception (Compton 2020; McGuire 1961). Prebunking has been shown to be effective across different topics, including climate change and the COVID-19 pandemic (Basol et al. 2021; Schmid and Betsch 2019) and can be approached in two complementary ways: issue-based prebunking and logic-based prebunking.

Issue-based or fact-based prebunking requires the anticipation of potential misinformation in a particular domain. For example, many COVID-19 vaccine myths could have been foreseen, since they rely on often repeated tropes of the anti-vaccination movement, such as ‘vaccines are toxic’ or ‘vaccines are unnatural’ (Kata 2012). Another way to increase communication preparedness is to identify emerging or common concerns and rumours by systematically monitoring relevant data sources such as field reports, social media, and news articles (Ecker et al. 2022a). In Malawi, for example, preparations for the human papillomavirus (HPV) vaccine rollout in 2018 included tracking public opinion and pre-emptively informing and reassuring parents and caregivers (Global HPV Communication 2019). There are also guides that can provide resources on how to set up rumour-tracking systems (Fluck 2019; United Nations Children’s Fund 2020).

Logic-based or rhetorical prebunking teaches people about typical misinformation techniques to help them discern the difference between real and fake information. The FLICC framework provides an overview of five commonly used techniques of science denial (Cook 2020). These techniques and examples of each are: Fake Experts – when Jovana Stojkovic appeals to her authority as a psychiatrist to spread baseless vaccine claims in Serbia; Logical Fallacies – the claim ‘she is cancer-free, because she eats healthy food’ is based on the single cause fallacy; Impossible Expectations – ‘PCR tests for coronavirus are not 100% accurate, so we shouldn’t bother administering them’; Cherry Picking – basing the claim that Ivermectin is an effective COVID-19 treatment on a small number of poorly designed studies; and Conspiracy Theories – attributing random, uncontrollable events to malicious intents of powerful actors. Logic-based inoculations can be effectively scaled up through engaging games (Basol et al. 2021; Roozenbeek and van der Linden 2019), such as Bad News (www.getbadnews.com), Go Viral! (www.goviralgame.com), or Cranky Uncle (www.crankyuncle.com).

7.4.3 Literacy Interventions

A long-term approach to managing infodemics necessitates the improvement of health and media literacy, including information, news, and digital competencies. Educating citizens about specific media strategies can help minimise the impact of misinformation (Kozyreva et al. 2020). Encouraging people to ask questions – Do I recognise the news organisation that posted the story? Is the post politically motivated? – can reduce the spread of fake news (Lutzke et al. 2019), while simply reminding someone to consider accuracy can help them discern real from fake news (Pennycook et al. 2020).

It is also crucial to increase access to information and to empower local journalists to identify misinformation, for example through resources such as First Draft’s collection of tools for journalists (First Draft 2020). During crises, governments can specifically look to collaborate with fact-check organisations that can help provide media literacy education for the community, as in the case of Indonesia (Kruglinski 2021). Simple interventions that empower people to handle misinformation, such as tips for spotting false news or accuracy prompts, are also scalable to social media platforms (Guess et al. 2020; Pennycook et al. 2021).

There are, however, several caveats to be kept in mind here. Social media literacy interventions may increase confusion through perceptions of hypocrisy between the actions and policies of individual platforms (Literat et al. 2021). Such a situation may even prompt cynicism towards all information (Vraga et al. 2021). Furthermore, interventions may not capture the attention of enough social media users (Tully et al. 2019).

7.4.4 Platform-Led Interventions and Technocognition

In the context of the COVID-19 pandemic, online platforms were quick to take action (Bell et al. 2020), with some introducing or prioritising fact-checking. This follows evidence suggesting such action reduces the impact of misinformation on beliefs (Courchesne et al. 2021). Algorithmic downranking, content moderation, redirection, and account de-platforming are among the most commonly employed interventions aimed at limiting exposure to misinformation. However, they have been criticised for encouraging censorship. Data on their effectiveness is also scarce, especially for non-Western populations (Courchesne et al. 2021).

The production and spread of misinformation can also be addressed by (re)designing online platforms using insights from psychology, communication, computer science, and behavioural economics. This approach has been labelled ‘technocognition’ (Lewandowsky et al. 2017). For example, online platforms such as WhatsApp have limited the number of times a message can be forwarded, thus slowing down the spread of information (de Freitas Melo et al. 2019). Alternatively, they could require readers to pass a comprehension quiz before commenting, as implemented by Norwegian public broadcaster NRK (Lichterman 2017). However, social media companies may not have the motivation or ability to enact these changes without public or governmental pressure.
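To see why a forwarding cap slows spread, consider a deliberately simplified toy model. It is an assumption made for illustration, not a description of any platform's actual mechanism or of the cited study's method: every recipient forwards the message once, to `forward_limit` people who have not yet seen it. The function name and the example values are hypothetical.

```python
def potential_reach(forward_limit: int, hops: int) -> int:
    """Upper bound on recipients after `hops` rounds of forwarding.

    Toy assumption: each recipient forwards once, to `forward_limit`
    new people, so round i adds forward_limit ** i recipients.
    """
    if forward_limit < 0 or hops < 0:
        raise ValueError("forward_limit and hops must be non-negative")
    return sum(forward_limit ** i for i in range(1, hops + 1))


# Illustrative values only: a looser cap versus a tighter cap over three hops.
print(potential_reach(20, 3))  # 20 + 400 + 8000 = 8420
print(potential_reach(5, 3))   # 5 + 25 + 125 = 155
```

Because reach grows geometrically with the cap, even a modest reduction shrinks the upper bound sharply. Real networks have overlapping contacts and many users who never forward, so actual effects will be smaller and depend on user behaviour.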

7.5 Conclusion

Misinformation can never be completely eradicated. However, identifying the most effective methods for addressing it is still vital. Debunking misinformation can significantly reduce misperceptions when employed effectively. Misinformation that is more likely to have a negative impact, either because of the nature of the source, the audience, or the misinformation itself, should be prioritised for correction. Debunking is unlikely to backfire, so it should be encouraged in most scenarios. Corrections can be made more effective by following the REACT best practices: repetition, empathy, alternative explanations, credible sources, and timely responses.

Corrections are appropriate when misinformation is already circulating. However, the scope of the misinformation problem requires additional proactive solutions to build audience awareness and resistance. Promoting ‘sticky’ high-quality information, warning people against common myths and misleading techniques, encouraging health and information literacy, and designing platforms more resilient to misinformation efforts are all essential components in the management of infodemics now and in the future.

References

Acerbi A (2019) Cognitive attraction and online misinformation. Palgrave Commun 5(1):1–7

Ahmed I (2021) The disinformation dozen: why platforms must act on twelve leading online anti-vaxxers. Center for Countering Digital Hate. https://www.counterhate.com/disinformationdozen

Amazeen MA (2020) Journalistic interventions: the structural factors affecting the global emergence of fact-checking. Journalism 21(1):95–111

Avaaz (2021) Left behind: how Facebook is neglecting Europe’s infodemic. https://secure.avaaz.org/campaign/en/facebook_neglect_europe_infodemic/

Basol M, Roozenbeek J, Berriche M, Uenal F, McClanahan W, van der Linden S (2021) Towards psychological herd immunity: cross-cultural evidence for two prebunking interventions against COVID-19 misinformation. Big Data Soc 8(1):1–18

Bell J, Poushter J, Fagan M, Kent N, Moncus JJ (2020) International cooperation welcomed across 14 advanced economies. Pew Research Center, https://www.pewresearch.org/global/2020/09/21/international-cooperation-welcomed-across-14-advanced-economies/

Bode L, Vraga EK (2021) People-powered correction: Fixing misinformation on social media. In: Tumber H, Waisbord S (eds) The Routledge companion to media disinformation and populism. Routledge, London, pp 498–506

Bode L, Vraga EK, Tully M (2020) Do the right thing: tone may not affect correction of misinformation on social media. Harvard Kennedy School: Misinformation Review. https://misinforeview.hks.harvard.edu/article/do-the-right-thing-tone-may-not-affect-correction-of-misinformation-on-social-media/

Brennen JS, Simon FM, Howard PN, Nielsen RK (2020) Types, sources, and claims of COVID-19 misinformation. Reuters Institute. https://reutersinstitute.politics.ox.ac.uk/types-sources-and-claims-covid-19-misinformation

Chou WYS, Oh A, Klein WMP (2018) Addressing health-related misinformation on social media. JAMA 320(23):2417–2418

Compton J (2020) Prophylactic versus therapeutic inoculation treatments for resistance to influence. Commun Theory 30(3):330–343

Cook J (2016) Countering climate science denial and communicating scientific consensus. Oxford Research Encyclopedia of Climate Science https://oxfordre.com/climatescience/view/10.1093/acrefore/9780190228620.001.0001/acrefore-9780190228620-e-314

Cook J (2020) Deconstructing climate science denial. In: Holmes D, Richardson LM (eds) Edward Elgar Research Handbook in Communicating Climate Change. Edward Elgar, Cheltenham, UK

Courchesne L, Ilhardt J, Shapiro JN (2021) Review of social science research on the impact of countermeasures against influence operations. Harvard Kennedy School: Misinformation Review. https://misinforeview.hks.harvard.edu/article/review-of-social-science-research-on-the-impact-of-countermeasures-against-influence-operations/

Danielson RW, Sinatra GM, Kendeou P (2016) Augmenting the refutation text effect with analogies and graphics. Discourse Process 53(5–6):392–414

de Freitas Melo P, Vieira CC, Garimella K, Vaz de Melo POS, Benevenuto F (2019) Can WhatsApp counter misinformation by limiting message forwarding? Complex Netw:1–12

Ecker UKH, Ang LC (2019) Political attitudes and the processing of misinformation corrections. Polit Psychol 40(2):241–260

Ecker UKH, Antonio LM (2021) Can you believe it? An investigation into the impact of retraction source credibility on the continued influence effect. Mem Cogn 49(4):631–644

Ecker UKH, Butler LH, Hamby A (2020a) You don’t have to tell a story! A registered report testing the effectiveness of narrative versus non-narrative misinformation corrections. Cogn Res Princ Impl 5(1):1–26

Ecker UKH, O’Reilly Z, Reid JS, Chang EP (2020b) The effectiveness of short-format refutational fact-checks. Br J Psychol 111(1):36–54

Ecker UKH, Lewandowsky S, Cook J, Schmid P, Fazio LK, Brashier N, Kendeou P, Vraga EK, Amazeen MA (2022a) The psychological drivers of misinformation belief and its resistance to correction. Nat Rev Psychol 1(1):13–29

Ecker UKH, Sanderson JA, McIlhiney P, Rowsell JJ, Quekett HL, Brown GDA, Lewandowsky S (2022b) Combining refutations and social norms increases belief change. Q J Exp Psychol. https://doi.org/10.1177/17470218221111750

Fazio LK, Brashier NM, Payne BK, Marsh EJ (2015) Knowledge does not protect against illusory truth. J Exp Psychol Gen 144(5):993–1002

First Draft (2020) Coronavirus: tools and guides for journalists. https://firstdraftnews.org/long-form-article/coronavirus-tools-and-guides-for-journalists/

Fluck VL (2019) Managing misinformation in a humanitarian context. Internews. https://internews.org/resource/managing-misinformation-humanitarian-context/

Gallois C, Liu S (2021) Power and the pandemic: a perspective from communication and social psychology. J Mult Discours 16(1):20–26

Ghio D, Lawes-Wickwar S, Yee Tang M, Epton T, Howlett N, Jenkinson E, Stanescu S, Westbrook J, Kassianos A, Watson D, Sutherland L, Stanulewicz N, Guest E, Scanlan D, Carr N, Chater A, Hotham S, Thorneloe R, Armitage CJ, Arden M, Hart J, Byrne-Davis L, Keyworth C (2021) What influences people’s responses to public health messages for managing risks and preventing infectious diseases? A rapid systematic review of the evidence and recommendations. BMJ Open 11(11):e048750

Global HPV Communication (2019) Crisis communication preparedness and response to support introduction of the HPV vaccine in Malawi 2018. https://globalhpv.com/document/crisis-communication-preparedness-and-response-to-support-introduction-of-the-hpv-vaccine-in-malawi/

Guess AM, Lerner M, Lyons B, Montgomery JM, Nyhan B, Reifler J, Sircar N (2020) A digital media literacy intervention increases discernment between mainstream and false news in the United States and India. Proc Natl Acad Sci 117(27):15536–15545

Guillory JJ, Geraci L (2013) Correcting erroneous inferences in memory: the role of source credibility. J Appl Res Mem Cogn 2(4):201–209

Hatton B (2021) Naval officer wins praise for Portugal’s vaccine rollout. AP News, 23 September 2021. https://apnews.com/article/europe-health-pandemics-coronavirus-pandemic-coronavirus-vaccine-84aa55fe5549da02766557669ca4141b

Hyland-Wood B, Gardner J, Leask J, Ecker UKH (2021) Toward effective government communication strategies in the era of COVID-19. Humanit Soc Sci Commun 8(30):1–11

Johnson HM, Seifert CM (1994) Sources of the continued influence effect: when misinformation in memory affects later inferences. J Exp Psychol Learn Mem Cogn 20(6):1420–1436

Kahan D (2010) Fixing the communications failure. Nature 463:296–297

Kata A (2012) Anti-vaccine activists, web 2.0, and the postmodern paradigm: an overview of tactics and tropes used online by the anti-vaccination movement. Vaccine 30(25):3778–3789

König L, Jucks R (2019) Hot topics in science communication: aggressive language decreases trustworthiness and credibility in scientific debates. Public Underst Sci 28(4):401–416

Kozyreva A, Lewandowsky S, Hertwig R (2020) Citizens versus the internet: confronting digital challenges with cognitive tools. Psychol Sci Public Interest 21(3):103–156

Kruglinski J (2021) Countering an ‘infodemic’ amid a pandemic: UNICEF and partners respond to COVID-19 misinformation, one hoax at a time. UNICEF. https://www.unicef.org/indonesia/coronavirus/stories/countering-infodemic-amid-pandemic

Lazić A, Žeželj I (2021) A systematic review of narrative interventions: lessons for countering anti-vaccination conspiracy theories and misinformation. Public Underst Sci 30(6):644–670

Lewandowsky S, Ecker UKH, Cook J (2017) Beyond misinformation: understanding and coping with the ‘post-truth’ era. J Appl Res Mem Cogn 6(4):353–369

Lewandowsky S, Cook J, Lombardi D (2020) The debunking handbook 2020. Databrary. https://doi.org/10.17910/b7.1182

Lichterman J (2017) This site is ‘taking the edge off rant mode’ by making readers pass a quiz before commenting. Nieman Lab. https://www.niemanlab.org/2017/03/this-site-is-taking-the-edge-off-rant-mode-by-making-readers-pass-a-quiz-before-commenting/

Literat I, Abdelbagi A, Law NYL, Cheung MYY, Tang R (2021) Research note: likes, sarcasm, and politics: youth responses to a platform-initiated media literacy campaign on social media. Harvard Kennedy School: Misinformation Review. https://misinforeview.hks.harvard.edu/article/research-note-likes-sarcasm-and-politics-youth-responses-to-a-platform-initiated-media-literacy-campaign-on-social-media/

Lutzke L, Drummond C, Slovic P, Árvai J (2019) Priming critical thinking: simple interventions limit the influence of fake news about climate change on Facebook. Glob Environ Chang 58:101964

MacFarlane D, Tay LQ, Hurlstone MJ, Ecker UKH (2021) Refuting spurious COVID-19 treatment claims reduces demand and misinformation sharing. J Appl Res Mem Cogn 10(2):248–258

Malhotra P (2020) A relationship-centered and culturally informed approach to studying misinformation on COVID-19. Soc Media Soc. https://doi.org/10.1177/2056305120948224

Margolin DB, Hannak A, Weber I (2017) Political fact-checking on Twitter: when do corrections have an effect? Polit Commun 35(2):196–219

Martel C, Mosleh M, Rand DG (2021) You’re definitely wrong, maybe: correction style has minimal effect on corrections of misinformation online. Media Commun 9(1):120–133

McGuire W (1961) The effectiveness of supportive and refutational defenses in immunizing and restoring beliefs against persuasion. Sociometry 24(2):184–197

Nagler RH, Yzer MC, Rothman AJ (2019) Effects of media exposure to conflicting information about mammography: results from a population-based survey experiment. Ann Behav Med 53(10):896–908

Pang N, Ng J (2017) Misinformation in a riot: a two-step flow view. Online Inf Rev 41(4):438–453

Paynter J, Luskin-Saxby S, Keen D, Fordyce K, Frost G, Imms C, Miller S, Trembath D, Tucker M, Ecker UKH (2019) Evaluation of a template for countering misinformation: real-world autism treatment myth debunking. PLoS ONE 14(1):e0210746

Pennycook G, McPhetres J, Zhang Y, Lu JG, Rand DG (2020) Fighting COVID-19 misinformation on social media: experimental evidence for a scalable accuracy-nudge intervention. Psychol Sci 31(7):770–780

Pennycook G, Epstein Z, Mosleh M, Arechar AA, Eckles D, Rand DG (2021) Shifting attention to accuracy can reduce misinformation online. Nature 592(7855):590–595

Pink SL, Chu J, Druckman JN, Rand DG, Willer R (2021) Elite party cues increase vaccination intentions among Republicans. Proc Natl Acad Sci 118(32):e2106559118

Porter E, Wood TJ (2019) False alarm: the truth about political mistruths in the Trump era. Cambridge University Press, Cambridge, UK

Roozenbeek J, van der Linden S (2019) Fake news game confers psychological resistance against online misinformation. Palgrave Commun 5(1):1–10

Schmid P, Betsch C (2019) Effective strategies for rebutting science denialism in public discussions. Nat Hum Behav 3(9):931–939

Seifert CM (2002) The continued influence of misinformation in memory: What makes a correction effective? Psychol Learn Motiv 41:265–292

Steffens MS, Dunn AG, Wiley KE, Leask J (2019) How organizations promoting vaccination respond to misinformation on social media: a qualitative investigation. BMC Public Health 19(1):1–12

Swire B, Berinsky AJ, Lewandowsky S, Ecker UKH (2017a) Processing political misinformation: comprehending the Trump phenomenon. R Soc Open Sci 4(3):160802

Swire B, Ecker UKH, Lewandowsky S (2017b) The role of familiarity in correcting inaccurate information. J Exp Psychol Learn Mem Cogn 43(12):1948–1961

Swire-Thompson B, DeGutis J, Lazer D (2020) Searching for the backfire effect: measurement and design considerations. J Appl Res Mem Cogn 9(3):286–299

Swire-Thompson B, Cook J, Butler LH, Sanderson JA, Lewandowsky S, Ecker UKH (2021) Evidence for a limited role of correction format when debunking misinformation. Cogn Res Princ Impl

Thacker I, Sinatra G (2019) Visualizing the greenhouse effect: restructuring mental models of climate change through a guided online simulation. Educ Sci 9(1):2–19

Tully M, Vraga EK, Bode L (2019) Designing and testing news literacy messages for social media. Mass Commun Soc 23(1):22–46

United Nations Children’s Fund (2020) Misinformation management guide: guidance for addressing a global infodemic and fostering demand for immunization. https://vaccinemisinformation.guide/

van der Linden S, Leiserowitz A, Maibach E (2019) The gateway belief model: a large-scale replication. J Environ Psychol 62:49–58

van der Meer TGLA, Jin Y (2020) Seeking formula for misinformation treatment in public health crises: the effects of corrective information type and source. Health Commun 35(5):560–575

Vlasceanu M, Coman A (2022) The impact of social norms on health-related belief update. Appl Psychol Health Well Being 14(2):453–464

Vosoughi S, Roy D, Aral S (2018) The spread of true and false news online. Science 359(6380):1146–1151

Vraga EK, Bode L (2017) Using expert sources to correct health misinformation in social media. Sci Commun 39(5):621–645

Vraga EK, Bode L (2020a) Defining misinformation and understanding its bounded nature: using expertise and evidence for describing misinformation. Polit Commun 37(1):136–144

Vraga EK, Bode L (2020b) Correction as a solution for health misinformation on social media. Am J Public Health 110(S3):S278–S280

Vraga EK, Bode L (2021) Addressing COVID-19 misinformation on social media preemptively and responsively. Emerg Infect Dis 27(2):396–403

Vraga EK, Kim SC, Cook J (2019) Testing logic-based and humor-based corrections for science, health, and political misinformation on social media. J Broadcast Electron Media 63(3):393–414

Vraga EK, Tully M, Bode L (2021) Assessing the relative merits of news literacy and corrections in responding to misinformation on Twitter. New Media Soc. https://doi.org/10.1177/1461444821998691

Walter N, Tukachinsky R (2020) A meta-analytic examination of the continued influence of misinformation in the face of correction: how powerful is it, why does it happen, and how to stop it? Commun Res 47(2):155–177

Walter N, Brooks JJ, Saucier CJ, Suresh S (2020) Evaluating the impact of attempts to correct health misinformation on social media: a meta-analysis. Health Commun 36(13):1776–1784

Weaver K, Garcia SM, Schwarz N, Miller DT (2007) Inferring the popularity of an opinion from its familiarity: a repetitive voice can sound like a chorus. J Pers Soc Psychol 92(5):821–833

WHO (2022) Infodemic overview. World Health Organization, 2022. https://www.who.int/health-topics/infodemic#tab=tab_1

Winters M, Oppenheim B, Sengeh P, Jalloh MB, Webber N, Abu Pratt S, Leigh B, Molsted-Alvesson H, Zeebari Z, Johan Sundberg C, Jalloh MF, Nordenstedt H (2021) Debunking highly prevalent health misinformation using audio dramas delivered by WhatsApp: evidence from a randomised controlled trial in Sierra Leone. BMJ Glob Health 6(11):e006954

Wong JC (2021) How Facebook let fake engagement distort global politics: a whistleblower’s account. The Guardian, 12 April 2021. https://www.theguardian.com/technology/2021/apr/12/facebook-fake-engagement-whistleblower-sophie-zhang

Zhang J, Featherstone JD, Calabrese C, Wojcieszak M (2021) Effects of fact-checking social media vaccine misinformation on attitudes toward vaccines. Prev Med 145:106408

Rights and permissions

Open Access Some rights reserved. This chapter is an open access publication, available online and distributed under the terms of the Creative Commons Attribution-NonCommercial 3.0 IGO license, a copy of which is available at (http://creativecommons.org/licenses/by-nc/3.0/igo/). Enquiries concerning use outside the scope of the licence terms should be sent to Springer Nature Switzerland AG.

The designations employed and the presentation of the material in this publication do not imply the expression of any opinion whatsoever on the part of WHO concerning the legal status of any country, territory, city or area or of its authorities, or concerning the delimitation of its frontiers or boundaries. Dotted and dashed lines on maps represent approximate border lines for which there may not yet be full agreement.

The mention of specific companies or of certain manufacturers' products does not imply that they are endorsed or recommended by WHO in preference to others of a similar nature that are not mentioned. Errors and omissions excepted, the names of proprietary products are distinguished by initial capital letters.

Copyright information

© 2023 WHO: World Health Organization

About this chapter

Cite this chapter

Vraga, E.K., Ecker, U.K.H., Žeželj, I., Lazić, A., Azlan, A.A. (2023). To Debunk or Not to Debunk? Correcting (Mis)Information. In: Purnat, T.D., Nguyen, T., Briand, S. (eds) Managing Infodemics in the 21st Century. Springer, Cham. https://doi.org/10.1007/978-3-031-27789-4_7

DOI: https://doi.org/10.1007/978-3-031-27789-4_7

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-27788-7

Online ISBN: 978-3-031-27789-4

eBook Packages: Medicine, Medicine (R0)