Abstract

The LIGO-Virgo Collaboration achieved the first ‘direct detection’ of gravitational waves in 2015, opening a new “window” for observing the universe. Since this first detection (‘GW150914’), dozens of detections have followed, mostly produced by binary black hole mergers. However, the theory-ladenness of the LIGO-Virgo methods for observing these events leads to a potentially vicious circularity, where general relativistic assumptions may serve to mask phenomena that are inconsistent with general relativity (GR). Under such circumstances, the fact that GR can ‘save the phenomena’ may be an artifact of theory-laden methodology.

This paper examines several ways that the LIGO-Virgo observations are used in theory and hypothesis testing, despite this circularity problem. First, despite the threat of vicious circularity, these experiments succeed in testing GR. Indeed, early tests of GR using GW150914 are best understood as a response to the threat of theory-ladenness and circularity. Each test searches for evidence that LIGO-Virgo’s theory-laden methods are biasing their overall conclusions. The failure to find evidence of this places constraints on deviations from the predictions of GR. Second, these observations provide a basis for studying astrophysical and cosmological processes, especially through analyses of populations of events. As gravitational-wave astrophysics transitions into mature science, constraints from early tests of GR provide a scaffolding for these population-based studies. I further characterize this transition in terms of its increasing connectedness to other parts of astrophysics and the prominence of reasoning about selection effects and other systematics in drawing inferences from observations.

Overall, this paper analyses the ways that theory and hypothesis testing operate in gravitational-wave astrophysics as it gains maturity. In particular, I show how these tests build on one another in order to mitigate a circularity problem at the heart of the observations.

1 Introduction

The LIGO-Virgo Collaboration achieved the first ‘direct detection’ of gravitational waves in 2015, a discovery that marked a new epoch for gravitational-wave astrophysics—one in which gravitational waves provided a new “window” for observing the universe. Since this first detection (‘GW150914’), dozens of detections have followed, most produced by binary black hole mergers.

Merging black holes offer us unique access into the ‘dynamical strong field regime’ of general relativity (GR), due to the high speeds and strong gravitational fields involved with these events. Such events are of interest for learning about strong-field gravity, as well as about black hole populations and formation channels.

However, the LIGO-Virgo methods for observing these events also pose some interesting epistemic problems. Parameter estimation and other inferences about the source system are highly theory- or model-laden, in that all such inferences rely on assumptions about how source parameters determine merger dynamics and gravitational wave emission. This leads to a potentially vicious circularity, where general relativistic assumptions may serve to mask phenomena that are inconsistent with GR. Under such circumstances, the fact that GR can ‘save the phenomena’ may be an artifact of theory-laden methodology.

In this paper I examine the ways that the LIGO-Virgo Collaboration engages in theory testing (and model and hypothesis testing) despite this circularity problem.

I begin, in Sect. 4.2, by rehearsing some of the key epistemic challenges of gravitational-wave astrophysics, with an emphasis on issues of theory- or model-ladenness and circularity as they arise in the context of theory testing.

In Sect. 4.3 I examine several ways that the LIGO-Virgo observations act as tests of GR. I argue that the tests of GR using individual events (such as GW150914) are best understood as a response to the circularity problem described above; they test whether the circularity in their methodology is problematic, through searching for evidence that these methods are biasing the overall conclusions. This allows the LIGO-Virgo Collaboration to place constraints on the bias introduced by the specific assumptions being tested. While this does much to mitigate the circularity problem, I also discuss how degeneracies of various kinds make it difficult to constrain all sources of bias introduced by the use of GR models.

In Sect. 4.4 I then describe the further tests that become available as gravitational-wave astrophysics transitions into mature science. This involves a shift in focus from individual events to populations. I show how the earlier, event-based tests of GR provide a foundation on which these population-level inferences may be built. Specific examples include (in Sect. 4.4.1) inferences about the astrophysical mechanisms that produce binary black hole mergers (e.g., van Son et al. 2022), and (in Sect. 4.4.2) inferences about cosmological expansion (e.g., Chen et al. 2018). The use of populations helps reduce some remaining sources of uncertainty (e.g., due to distance/inclination degeneracy) but not others (e.g., due to ‘fundamental theoretical bias’). Either way, hypothesis testing using populations must continue to grapple with the issues raised in Sects. 4.2 and 4.3.

Finally, in Sect. 4.5, I discuss the themes that emerge from my examination of theory testing in earlier sections. First, I characterize a transition that is occurring in gravitational-wave astrophysics as it gains maturity. This involves a shift to populations; an increasing interdependence or connectedness with electromagnetic astrophysics; and a greater resemblance to other parts of astrophysics. This includes, for example, grappling with a ‘snapshot’ problem of drawing inferences about causal processes that occur over long timescales based on observations of a system at a single time. Second, I argue that theory-ladenness, circularities, and complex dependencies between hypotheses are important—but not insurmountable—challenges in gravitational-wave astrophysics. Furthermore, I suggest that progress in this field will come where these vices can be made virtuous, by leveraging improvements in modeling, simulation, or observation in one domain to place constraints in another.

2 Epistemic Challenges for Theory Testing

The LIGO-Virgo Collaboration uses a network of detectors (specifically, gravitational-wave interferometers) to detect the faint gravitational wave signals produced by compact binary mergers, such as binary black hole mergers. The data produced by the interferometers are very noisy, so an important challenge of gravitational-wave astrophysics is that of separating the signals from the noise. This is most efficiently done through a modeled search, using a signal-processing technique called ‘matched filtering’.Footnote 1 Having extracted a gravitational wave signal, features of the source are inferred using a Bayesian parameter estimation process. This produces posterior distributions for values such as the masses and spins of the component black holes, the distance to the binary system, etc.

In the ‘discovery’ paper announcing GW150914, the LIGO-Virgo Collaboration describe this event as the first ‘direct detection’ of gravitational waves and the first ‘direct observation’ of a binary black hole merger (Abbott et al. 2016b). Elder (In preparation) provides an analysis of these terms—with a focus on what is meant by ‘direct’ in these cases—drawing connections to recent work in the philosophy of measurement (e.g., Tal 2012, 2013; Parker 2017).Footnote 2 However, these descriptions have the potential to obscure the fact that these observations are also indirect in the sense that they are mediated by models, such as those in the ‘EOBNR’ and ‘IMRPhenom’ modeling families (Abbott et al. 2016d). These models take source parameters and map them to gravitational-wave signals, via a description of the dynamical behaviour of the binary system. The success of the LIGO-Virgo experiments depends on the availability of accurate models spanning the parameter space for systems that the LIGO and Virgo interferometers are sensitive to.

Theory-ladenness comes in many forms and degrees, and is not inherently problematic. However, the theory-ladenness of experimental observations may be problematic when it leads to a vicious circularity. Such a circularity arises when the theoretical assumptions made by the experimenters—either in the physical design of the experiment or in subsequent inferences from the empirical data—guarantee that the observation will confirm the theory being tested.Footnote 3 In the case of the LIGO-Virgo experiments, the concern is that the use of general relativistic models to interpret the data guarantees that the results will be consistent with general relativity.Footnote 4

For the LIGO-Virgo experiments, there are two main layers of theory-ladenness to consider. These are due to the two main roles of the experiments: detecting gravitational waves (via ‘search’ pipelines) and observing compact binary mergers (via ‘parameter estimation’ pipelines).Footnote 5

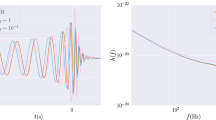

Detecting gravitational wave signals in the noisy LIGO-Virgo data is done using both modeled and unmodeled searches. The two modeled search pipelines, ‘GstLAL’ and ‘PyCBC’, are targeted searches for gravitational waves produced by compact binary coalescence (e.g., by the merger of two black holes). Both searches use ‘matched filtering.’ This involves correlating a known signal, or template, with an unknown signal, in order to detect the presence of the template within the unknown signal.Footnote 6 The unmodeled (or ‘minimally modeled’Footnote 7) ‘burst’ search algorithms, ‘cWB’ and ‘oLIB’, look for transient gravitational-wave signals by identifying coincident excess power in the time-frequency representation of the strain data from at least two detectors.Footnote 8 The modeled search pipelines, using matched filtering, are heavily theory- or model-laden, while the unmodeled searches are less so. However, the modeled searches are more efficient at extracting signals from compact binary mergers, regularly reporting detections at higher statistical significance than the unmodeled searches.
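The core idea of matched filtering can be illustrated with a toy example. The following is a minimal sketch, not the GstLAL or PyCBC implementation: it assumes white, unit-variance noise and a made-up ‘chirp’ template, and the names `chirp_template` and `matched_filter` are hypothetical. It shows how correlating a template against noisy data can recover the time of a signal whose amplitude is below the noise level.

```python
import numpy as np

def chirp_template(n=1024, f0=0.02, f1=0.12):
    """Toy 'chirp': frequency and amplitude both rise over n samples."""
    t = np.arange(n)
    freq = f0 + (f1 - f0) * t / n            # cycles per sample
    phase = 2 * np.pi * np.cumsum(freq)
    return (0.2 + t / n) * np.sin(phase)

def matched_filter(data, template):
    """Correlate a unit-norm template with the data at every offset.

    For white, unit-variance noise each output sample has unit variance,
    so the output can be read as a signal-to-noise ratio.
    """
    unit = template / np.linalg.norm(template)
    return np.correlate(data, unit, mode="valid")

rng = np.random.default_rng(0)
template = chirp_template()
data = rng.normal(0.0, 1.0, 8192)             # white noise (an assumption)
true_offset = 3000
data[true_offset:true_offset + template.size] += 0.7 * template

snr = matched_filter(data, template)
best_offset = int(np.argmax(np.abs(snr)))
peak_snr = float(np.abs(snr[best_offset]))
print(best_offset, round(peak_snr, 1))
```

The sketch also makes the model-ladenness visible: the search is only as sensitive as the template is faithful to the signal actually present in the data.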

Once a detection has been confirmed through the search pipelines the next step is to draw inferences about the properties of the source system on the basis of the signal. This involves assigning values to parameters describing features of interest. This process, ‘parameter estimation,’ is performed within a Bayesian framework. The basic idea is to calculate posterior probability distributions for the parameters describing the source system, based on some assumed model M that maps parameters about the source system to gravitational-wave signals. The sources of the gravitational waves detected so far are compact binary mergers. Such events are characterized by a set of intrinsic parameters—including the masses and spins of the component objects—as well as extrinsic parameters characterizing the relationship between the detector and the source—e.g., luminosity distance, and orientation of the orbital plane. The observation of compact binary mergers relies on having an accurate model relating the source parameters to the measured gravitational waveform.Footnote 9
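The structure of this inference can be sketched in a few lines. The example below is a toy, assuming a single source parameter, a flat prior, and white Gaussian noise; the function `grid_posterior` and the one-parameter `model` are hypothetical stand-ins for the far richer waveform models and samplers used in practice.

```python
import numpy as np

def grid_posterior(data, model, grid, sigma=1.0):
    """Posterior density on a uniform grid: flat prior, Gaussian noise."""
    log_like = np.array(
        [-0.5 * np.sum((data - model(theta)) ** 2) / sigma**2 for theta in grid]
    )
    weights = np.exp(log_like - log_like.max())   # subtract max to avoid underflow
    step = grid[1] - grid[0]
    return weights / (weights.sum() * step)       # normalise to unit area

t = np.arange(512)
basis = np.sin(2 * np.pi * 0.05 * t)

def model(amplitude):
    """Hypothetical model M: one source parameter -> predicted signal."""
    return amplitude * basis

rng = np.random.default_rng(2)
true_amplitude = 2.0
data = model(true_amplitude) + rng.normal(0.0, 1.0, t.size)

grid = np.linspace(1.0, 3.0, 401)
posterior = grid_posterior(data, model, grid)
step = grid[1] - grid[0]
post_mean = float(np.sum(grid * posterior) * step)
post_std = float(np.sqrt(np.sum((grid - post_mean) ** 2 * posterior) * step))
print(round(post_mean, 2), round(post_std, 3))
```

Every value of the posterior depends on `model`: if the assumed mapping from parameters to signals is wrong, the posterior can be narrow and still be centred on the wrong value.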

These two ways that the LIGO-Virgo observations are theory-laden lead to some specific concerns about how the use of general relativistic models may be systematically biasing the LIGO-Virgo results. These issues have been discussed in detail elsewhere (see e.g., Elder Forthcoming, Sect. 5.3) so I limit my discussion to a brief summary.

In both cases, inaccuracies of the models may be due to either the failure of the models to accurately reflect the full general relativistic description of the situation, or the failure of GR to accurately describe the regimes being observed (or both). Following Yunes and Pretorius (2009), call these ‘modeling bias’ and ‘fundamental theoretical bias’ respectively.

In the first case—the observation of gravitational waves—the main concern is that any inaccuracy of the models might lead to a biased sampling of gravitational wave signals. Here, by ‘inaccuracy of the models’ I mean a lack of fit between the morphology of actual gravitational waves and template models. This may be due to either modeling bias or fundamental theoretical bias. There are several ways that the sampling may be biased. This includes both false positives (falsely identifying noise as a gravitational wave signal) and false negatives (failing to detect genuine signals). While these are both genuine problems to be overcome, a more insidious concern lies in between: imperfect signal extraction. If the models used for matched filtering are inaccurate, but still adequate to make a gravitational wave detection, the extracted signal may not accurately reflect the real gravitational waves. In this case, we can think of there being some residual signal left behind once the detected signal is removed. Thus the observation of gravitational waves may be systematically biased by any inaccuracies in the models used to observe them.

In the second case—observation of compact binary mergers—the main concern is that inaccurate models used in parameter estimation will systematically bias the posterior distributions for the parameters representing the properties of the source. Here, both modeling bias and theoretical bias are important sources of potential error, but the latter requires some explanation. The final stages of the binary black hole mergers observed by the LIGO-Virgo Collaboration occur in the dynamical strong field regime, because the two black holes are orbiting each other closely enough to be moving through strong gravitational fields with high velocities. At this stage, approximation schemes (such as post-Newtonian approximation) are no longer adequate for describing the dynamics of the binary and the full general relativistic description is needed. Such regimes have not been observed before; our empirical access to such regimes comes only from the LIGO-Virgo observations. The final stage of a binary black hole merger (including its gravitational wave emission) is thus (for all we know, at least prior to the LIGO-Virgo observations) a place where the description offered by GR may be inadequate. Thus the LIGO-Virgo Collaboration is performing theory-laden observations of a process where the theory itself is in question.

The two layers of theory-ladenness just described lead to circularity in the following sense: justifying confidence in the LIGO-Virgo observations relies on justification for the applicability of the theory in this context, while justification for the applicability of the theory in this context can only be based on the LIGO-Virgo observations.Footnote 10 Put another way, general relativistic models are used in the detection and interpretation of gravitational waves in such a way that detected signals will inevitably be well-described by general relativistic models, and interpreted as being produced by systems exhibiting general relativistic dynamics. In short, GR seems guaranteed to ‘save the phenomena’ of binary black hole mergers that are observed this way, since it is this theory that tells us what is being observed.

The theory-ladenness, and even the circularity, that I have described so far may seem unremarkable—indeed, some degree of theory-ladenness is a generic feature of scientific experiments. However, there are other features of the epistemic situation that render this circularity problematic, in that they make it particularly difficult to uncover and circumvent any bias introduced by the theory- and model-laden methodology.

First, the LIGO-Virgo observations probe new physical regimes—the ‘dynamical strong field regime’—where it is possible that target systems will deviate from the predictions of GR in terms of their dynamical behaviour and gravitational-wave generation.

Second, the LIGO-Virgo observations are the only line of evidence into the source system. There are no earlier empirical constraints on the behaviour of such systems in the dynamical strong field regime, and no other independent access to these regimes is possible.Footnote 11 This limits the prospects for corroboration through coherence tests or consilience.

Third, no interventions on these systems are possible, given the astrophysical context. Controlled interventions to choose source parameters and then observe the resulting gravitational waves would allow the source properties and the resulting dynamics to be disentangled. Such a process would allow for a comparison between independent predictions and observations. In astrophysics, where such controlled interventions are generally impossible, it is common to use simulations as a proxy experiment in order to explore causal relationships and downstream observational signatures. However, in this case, it is the dynamical theory governing these systems that is in question. Increasing the precision of the simulation cannot overcome the problem of ‘fundamental theoretical bias’ introduced by the need to assume that GR is an adequate theory in these regimes.

These features of the epistemic situation mean that the theory-ladenness of the LIGO-Virgo methodology is a potential problem. If the observations are biased (e.g., if distances are consistently underestimated), this bias may go unchecked.

3 Testing General Relativity

Despite the epistemic challenges highlighted in Sect. 4.2, the LIGO-Virgo Collaboration does claim to use their observations to test general relativity. On the surface, the epistemic situation just described might seem to render this impossible. However, I will show that the theory testing done with individual events such as GW150914 goes beyond merely ‘saving the phenomena’. Rather, the tests performed probe specific ways that the theory-laden methodology might be biasing results and masking discrepancies with general relativity, by testing specific hypotheses about the signal and noise. In doing so, they place constraints on the bias introduced by the model-dependent methods being employed.Footnote 12

For a start, the direct detection of gravitational waves itself constitutes a kind of test of general relativity. After all, gravitational waves are an important prediction that distinguishes GR from its predecessor, Newtonian gravity. This is one test of GR that doesn’t suffer from the kind of circularity problem I described above. This is largely because confidence in the detection of gravitational waves depends primarily on confidence in the detector and the modeling of the measuring process, rather than confidence in a general relativistic description of the source (Elder and Doboszewski In preparation; Elder In preparation). However, this test largely serves as corroboration of what was already known from observations of the Hulse-Taylor binary pulsar (Hulse and Taylor 1975; Taylor and Weisberg 1982). In short, this test of GR avoids the circularity objection, but it also fails to provide new constraints on possible deviations from this theory.

Other tests of GR by LIGO-Virgo depend on the properties of the detected waves and what these properties tell us about the nature and dynamical behavior of compact binaries. Abbott et al. (2016e) discusses a number of such tests performed using the data for GW150914. In addition, Abbott et al. (2019c) and Abbott et al. (2021d) extend these tests to the full populations of events from O2 and O3. The tests considered include what I will call the ‘residuals test,’ the ‘IMR consistency test,’ the ‘parameterized deviations test,’ and the ‘modified dispersion relation test.’Footnote 13 None of these tests have yet found evidence of deviations from general relativity.

First, the ‘residuals test’ tests the consistency of the residual data with noise (Abbott et al. 2016e). This involves subtracting the best-fit waveform from the GW150914 data and then comparing the residual with detector noise (for time periods where no gravitational waves have been detected). The idea here is to check whether the waveform has successfully removed the entire gravitational-wave signal from the data, or whether some of the signal remains. This process places constraints on the residual signal, and hence on deviations from the subtracted waveform that might still be present in the data. However, this doesn’t constrain deviations from GR simpliciter, due to the possibility that the best-fit GR waveform is degenerate with non-GR waveforms for events characterized by different parameters (Abbott et al. 2016e). That is, the same waveform could be generated by a compact binary merger (described by parameters different from those that we think describe the GW150914 merger) with dynamics that deviate from general relativistic dynamics. In this case, we could be looking at different compact objects than we think we are, behaving differently than we think they are, but nonetheless producing very similar gravitational wave signatures. Thus the residuals test could potentially show inconsistency with general relativity, but not all deviations from GR will be detectable in this way.
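The logic of the residuals test can be illustrated with a toy sketch. It assumes white, unit-variance noise and a made-up chirp model, and the helper names (`waveform`, `residual_power`, `fraction_as_loud`) are hypothetical; it is not the procedure of Abbott et al. (2016e). The best-fit multiple of a template is subtracted from the data, and the power left behind is compared with the power in noise-only segments.

```python
import numpy as np

n = 1024
t = np.arange(n)

def waveform(slope):
    """Toy chirp whose frequency rises at a rate set by `slope`."""
    freq = 0.02 + slope * t / n
    return (0.2 + t / n) * np.sin(2 * np.pi * np.cumsum(freq))

def residual_power(data, template):
    """Subtract the best-fit (least-squares) multiple of the template."""
    amplitude = (data @ template) / (template @ template)
    residual = data - amplitude * template
    return float(residual @ residual)

rng = np.random.default_rng(1)
data = 3.0 * waveform(0.10) + rng.normal(0.0, 1.0, n)

# Reference distribution: power in segments that contain noise only.
noise_power = np.array([np.sum(rng.normal(0.0, 1.0, n) ** 2) for _ in range(500)])

def fraction_as_loud(power):
    """Share of noise-only segments with at least this much power."""
    return float(np.mean(noise_power >= power))

power_good = residual_power(data, waveform(0.10))   # accurate template
power_bad = residual_power(data, waveform(0.08))    # mis-modelled template
print(fraction_as_loud(power_good), fraction_as_loud(power_bad))
```

With the accurate template the residual power is comparable to that of pure noise, whereas the mis-modelled template leaves a clear excess. The sketch shares the limitation discussed above: it can only reveal a mismatch between the data and the subtracted waveform, not a degeneracy in which a different source with different dynamics produces the same waveform.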

Second, the ‘IMR consistency test’ considers the consistency of the low-frequency part of the signal with the high-frequency part (Abbott et al. 2016e). This test proceeds as follows. First, the masses and spins of the two compact objects are estimated from the inspiral (low-frequency), using LALInference. This gives posterior distributions for component masses and spins. Then, using formulas derived from numerical relativity, posterior distributions for the remnant, post-merger object are computed. Finally, posterior distributions are also calculated directly from the measured post-inspiral (high-frequency) signal, and the two distributions are compared. These are also compared to the posterior distributions computed from the inspiral-merger-ringdown waveform as a whole. Every step of this test involves parameter estimation using general relativistic models. Thus general relativistic descriptions of the source dynamics are assumed throughout the test. Nonetheless, this test could potentially show inconsistency with general relativity. This would occur if parameter estimation for the low and high frequency parts of the signal did not cohere. The later part of the signal in particular might be expected to exhibit deviations from GR (if there are any). In contrast, previous empirical constraints give us reason to doubt that such deviations will be significant for the early inspiral. In the presence of high-frequency deviations, parameter estimation based on GR models will deviate from the values of a system that is well-described by general relativity. Hence (in such cases) we can expect the parameter values estimated from the low frequency part of the signal to show discrepancies with the parameter values estimated from the high frequency part of the signal.
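A toy analogue may help to show why a test that assumes the model at every step can still reveal a failure of that model. In the sketch below, a single scale parameter stands in for the source parameters, the same linear model is fitted separately to the early and late halves of a signal, and the two estimates are compared. Everything here is an illustrative assumption: the white-noise model, the sinusoidal `basis`, and the hypothetical helpers `estimate` and `tension` are not part of the LIGO-Virgo analysis.

```python
import numpy as np

t = np.arange(1024)
basis = np.sin(2 * np.pi * 0.05 * t)
early, late = slice(0, 512), slice(512, 1024)

def estimate(data, part):
    """Gaussian posterior (mean, std) for the scale parameter from one part,
    assuming unit-variance white noise and a flat prior."""
    h = basis[part]
    return float(data[part] @ h / (h @ h)), float(1.0 / np.sqrt(h @ h))

def tension(data):
    """Late-minus-early estimate, in units of its standard deviation."""
    m_early, s_early = estimate(data, early)
    m_late, s_late = estimate(data, late)
    return (m_late - m_early) / np.hypot(s_early, s_late)

rng = np.random.default_rng(3)
noise = rng.normal(0.0, 1.0, t.size)

consistent = 2.0 * basis + noise              # one parameter fits both parts
deviating = consistent.copy()
deviating[late] += 0.5 * 2.0 * basis[late]    # hypothetical late-time deviation

z_consistent = float(tension(consistent))
z_deviating = float(tension(deviating))
print(round(z_consistent, 1), round(z_deviating, 1))
```

Each half of the deviating signal is individually well fitted by the model; the inconsistency only appears when the two estimates are compared.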

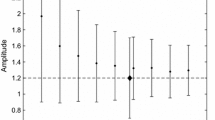

Third, the ‘parameterized deviations test’ checks for ‘phenomenological deviations’ from the waveform model (Abbott et al. 2016e). The basic idea of this test is to consider a family of parameterized analytic inspiral-merger-ringdown waveforms and to treat the coefficients of these waveforms as free variables (Abbott et al. 2016e, 6). This means that a new family of waveforms gIMR is generated by taking the frequency domain IMRPhenom waveform models and introducing fractional deformations \(\delta \hat {p_{i}}\) to the phase parameters \(p_{i}\). To test the theory, the \(p_{i}\) are fixed at their GR values while one or more of the \(\delta \hat {p_{i}}\) are allowed to vary. The physical parameters associated with mass, spin, etc. are also allowed to vary as usual. Within this new parameter space, GR is defined as the position where all of the testing parameters \(\delta \hat {p_{i}}\) are zero. The values of all of the varying parameters are then estimated through a LALInference analysis and the resulting posterior distributions are compared to those generated using only standard GR waveform models. Although there are a range of alternatives to GR on the table, models of the gravitational waves we can expect to observe according to these theories are not available. Without detailed knowledge of the predictions of such theories (i.e., a library of models spanning the parameter space) it is difficult to say with confidence that any given gravitational wave observation favors GR over the alternatives. The parameterized deviations test is a way of overcoming this lack of alternative models. It does so by generating a more general set of models (gIMR) that do not presuppose general relativity. Evidence of deviations would thus not support any particular theory, but it would provide evidence against the hypothesis that GR is uniquely empirically adequate. Abbott et al. (2019c) extends this test to consideration of individual events from the LIGO-Virgo catalog GWTC-1 as well as ensembles of particularly strong events.Footnote 14
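The construction of the gIMR family can be written compactly. The following is a sketch that assumes the deformations enter multiplicatively, which is one natural reading of ‘fractional’:

\[
p_{i} \;\longrightarrow\; p_{i}\,\bigl(1 + \delta \hat {p_{i}}\bigr),
\qquad
\text{GR:}\quad \delta \hat {p_{i}} = 0 \ \text{ for all } i .
\]

On this reading, a posterior for a given \(\delta \hat {p_{i}}\) that is concentrated around zero indicates consistency with the GR value of the corresponding phase parameter, while a posterior that excludes zero would indicate a deviation without pointing to any particular alternative theory.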

Fourth, the ‘modified dispersion relation’ test specifically considers the possibility of a modified dispersion relation, including that due to a massive graviton. Since such a modification would alter the propagation of gravitational waves, this allows us to place constraints on the mass of the graviton by using a similar method to that of the third test: the post-Newtonian terms for both the EOBNR and IMRPhenom waveform models are altered (according to the modified dispersion relation) and the Compton wavelength \(\lambda_{g}\) is then treated as a variable. As with other tests, the posteriors generated in this analysis are consistent with general relativity—in this case, meaning consistency with a massless graviton.
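For the massive-graviton case, the relation at issue can be stated explicitly. What follows is the standard relativistic dispersion relation for a particle of mass \(m_{g}\) (a symbol introduced here for illustration), together with the corresponding Compton wavelength:

\[
E^{2} = p^{2}c^{2} + m_{g}^{2}c^{4},
\qquad
\lambda_{g} = \frac{h}{m_{g}\,c} .
\]

A massless graviton corresponds to \(m_{g} \to 0\), that is, to \(\lambda_{g} \to \infty\). A lower bound on \(\lambda_{g}\) is therefore equivalent to an upper bound on the graviton mass.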

Abbott et al. (2019c) classifies the first two of these tests as consistency tests, while the latter two are described as parameterized tests of gravitational wave generation and propagation respectively. This reflects the fact that the latter two use a modified set of models—models that have been altered to allow for the possibility of waveforms that deviate from the predictions of general relativity. Despite this distinction, all of these tests can be understood as consistency tests in the sense that they demonstrate that GR ‘saves the phenomena’ with respect to the dynamical behaviour of compact binary systems.

However, we have seen that the theory- or model-ladenness of the LIGO-Virgo observations has the potential to guarantee the consistency of their empirical results with the predictions of general relativity. The fact that general relativistic models ‘save the phenomena’ in this case may be an artifact of the role such models play in the observation process (e.g., parameter estimation).

With this problem of theory- or model-ladenness (and the related problem of circularity in validating both results and models) in mind, I offer an alternative interpretation of these ‘tests of general relativity’. Rather than testing high-level theory itself, these four tests probe specific ways that the model-ladenness of the observations could be biasing results, and thus masking inconsistencies with general relativity. The residuals test looks for evidence of imperfect signal extraction; the IMR consistency test looks for evidence of non-GR behaviour in the final stages of the merger that is obscured by the consistency of the early signal with general relativity; and the two parameterised tests look for evidence that a non-GR model might also ‘save the phenomena’, perhaps better than the general relativistic model. In each case, the tests place constraints on ways that the model-laden methodology might obscure deviations from general relativity.

The IMR consistency test is a particularly interesting case, searching for evidence of deviations from GR despite assuming GR at every individual step. Here, the possibility of degeneracies between GR and non-GR signals (noted above as a problem) is leveraged to search for deviations. Using this testing method, these deviations would manifest as discrepancies in parameter estimates. But such discrepancies could indicate that the overall gravitational wave signal we observe was inconsistent with general relativity, even though the two sections of the signal (taken separately) were consistent with general relativity.

Overall, these tests take a potentially vicious circularity and make a virtue of it; improving either models or the sensitivity of the detector will reap rewards in terms of both improved model validation and tighter empirical constraints on deviations from GR.

However, these tests do not place constraints on all possible deviations from general relativity. One important reason for this is that the possibility of degeneracies remains. The IMR consistency test and the two parameterised tests do place some constraints on the kind of deviations that might be present, but it remains an area of ongoing concern. This is especially true when we consider how any undiagnosed deviations might further bias the inferences made on the basis of biased parameter estimates. Yunes and Pretorius (2009) discuss this issue in their broader discussion of ‘fundamental theoretical bias’:

For a second hypothetical example, consider an extreme mass ratio merger, where a small compact object spirals into a supermassive BH [black hole]. Suppose that a Chern-Simons (CS)-like correction is present, altering the near-horizon geometry of the BH […] To leading order, the CS correction reduces the effective gravitomagnetic force exerted by the BH on the compact object; in other words, the GW emission would be similar to a compact object spiraling into a GR Kerr BH, but with smaller spin parameter a. Suppose further that near-extremal (a ≈ 1) BHs are common (how rapidly astrophysical BHs can spin is an interesting and open question). Observation of a population of CS-modified Kerr BHs using GR templates would systematically underestimate the BH spin, leading to the erroneous conclusion that near-extremal BHs are uncommon, which could further lead to incorrect inferences about astrophysical BH formation and growth mechanisms. (Yunes and Pretorius 2009, 3)

This provides an example of how certain deviations from general relativistic dynamics might not be detected as differences in gravitational waveforms, since these deviations are indistinguishable from a change in source parameters. Thus there is an underdetermination of the properties of the source by the observed gravitational wave signal. In this passage, Yunes and Pretorius also note that this ‘fundamental theoretical bias’ has consequences for further inferences based on the estimated properties of the binary. As I discuss in Sect. 4.4, managing biases in gravitational wave observations becomes a prominent feature of reasoning using populations of compact binary mergers.

Overall, the tests of GR performed by the LIGO-Virgo Collaboration provide a variety of constraints on ways that their model-dependent methodology might be biasing their results. Nonetheless, the theory- or model-ladenness remains an ongoing problem, especially when it comes to disentangling variation in source parameters from (possible) deviations from the predictions of general relativity.

4 Theory-Testing Beyond Individual Events

Since the announcement of the first gravitational wave detection (Abbott et al. 2016b) the LIGO-Virgo Collaboration has made nearly one hundred further detections.Footnote 15 These occurred over three observing runs (O1-O3), with upgrades to the interferometers being undertaken between runs to increase sensitivity. Details of these detections are available in gravitational wave transient catalogs GWTC-1, GWTC-2, and GWTC-3 (Abbott et al. 2019b, 2021b,c).

As gravitational wave events accumulate, gravitational-wave astrophysics is undergoing a transition in the scope of its targets of inquiry: from individual events to ensembles. With this comes the further possibility of probing astrophysical and cosmological processes, including the astrophysical mechanisms responsible for producing the population of compact binaries (‘formation channels’), and cosmic expansion (as measured by the Hubble constant).

Parameter estimation for individual events provides the foundations on which further inferences can be built. That is, inferences about populations of binary black hole mergers (for example) are based on prior inferences about the properties of individual events. If these parameter estimates are systematically biased, then these biases will be passed on to further inferences about the population. For this reason, the tests of GR discussed in Sect. 3 are vital for controlling systematic error beyond the original context of those tests.

In what follows, I sketch two examples of research programs that can be built on the initial scaffolding afforded by the observation of compact binary mergers. These include: testing models of binary black hole formation channels (Sect. 4.1) and performing precise measurements of the Hubble constant that are independent of the ‘cosmic distance ladder’ (Sect. 4.2).Footnote 16

These examples illustrate how the transition of gravitational wave astrophysics into mature science is characterized by a move towards learning about the causal processes responsible for shaping the observable universe, as well as building bridges with other areas of astrophysics. This building of connections with the rest of (electromagnetic-based) astrophysics means that gravitational wave astrophysics is becoming increasingly unified with astrophysics as a whole as it gains maturity. The picture that emerges is one of hypothesis-testing that leverages this unification; by placing an increasing number of constraints from multiple directions, an increasingly narrow region of the possibility space is isolated where the overall picture of astrophysical processes holds together.

4.1 Binary Black Hole Formation Channels

Once binary black hole mergers have been detected, one astrophysically important question is how such binaries formed in the first place. Some familiar methodological challenges of astrophysics make inferences about this evolution difficult. First, the evolution of a binary black hole system involves long timescales, meaning that we cannot watch such a process unfold. Instead, as is often the case in astrophysics, dynamical processes must be inferred from ‘snapshots’ (Jacquart 2020, 1210–1211; see also Anderl 2016). Second, there are no terrestrial experiments that we can perform that replicate the conditions relevant for the formation of these objects. Thus we are limited to what data are made available by nature. In the case of binary black holes, these data are very sparse: while it is possible to make observations pertaining to the conditions of star formation at high redshifts, binary black hole systems are currently only observable in the very final stages of their inspiral and merger. As in the case considered by Jacquart (2020), the formation of ring galaxies, computer simulations are vital for inferring long-timescale dynamics from snapshots. However, unlike Jacquart’s example, the available data here do not include snapshots from different stages in the process. Instead, the only ‘traces’ of these processes are the observed properties of the binary black hole merger.

Despite these challenges, some early progress has been made in seeking evidence about binary black hole formation channels. This work depends on developing models of the processes by which binary black holes form and evolve and determining the signatures of these different processes in terms of properties of the observed populations of binary black hole mergers. For example, Belczynski et al. (2020) review several of the dynamical processes proposed for explaining the formation of the binaries observed by LIGO-Virgo, including the near-zero effective spins observed among binaries from O1 and O2.Footnote 17

Recent work by van Son et al. (2022) nicely illustrates the kind of reasoning involved in making inferences about formation channels for the binaries observed by LIGO-Virgo. This paper investigates the formation channels for binary black holes using simulations of different channels to determine signatures in (current and future) observations of binary black hole mergers. They find that the redshift evolution of the properties of binary black hole mergers encodes information about the origins of the binary components. However, decoding this information is complicated by what is called the delay time, $t_{\mathrm{delay}}$: the time between the formation of the progenitor stars and the final binary black hole merger.Footnote 18 Factoring in differing delay times, binary black hole mergers at the same redshift do not necessarily have shared origins.
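The role of the delay time can be made concrete with a small sketch. The numbers below are hypothetical and chosen only for illustration (they are not taken from van Son et al. 2022), and the function name `formation_lookback_time` is an assumption of this sketch. It shows how two binaries that merge at the same epoch can have formed at very different times.

```python
def formation_lookback_time(merger_lookback_gyr: float, delay_time_gyr: float) -> float:
    """Lookback time (in Gyr) at which the progenitor stars formed.

    The delay time is the interval between progenitor formation and the
    final merger, so formation happened that much earlier than the merger.
    """
    if delay_time_gyr < 0:
        raise ValueError("delay time must be non-negative")
    return merger_lookback_gyr + delay_time_gyr


# Two hypothetical binaries observed to merge at the same epoch
# (1 Gyr ago), but with different (made-up) delay times.
merger_lookback = 1.0
short_delay_origin = formation_lookback_time(merger_lookback, delay_time_gyr=0.3)
long_delay_origin = formation_lookback_time(merger_lookback, delay_time_gyr=5.0)

print(f"short-delay binary formed {short_delay_origin:.1f} Gyr ago")
print(f"long-delay binary formed {long_delay_origin:.1f} Gyr ago")
```

On these made-up numbers the two progenitor systems formed several billion years apart, under potentially very different star-formation conditions, even though their mergers would be observed at the same redshift.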

Through simulations, van Son et al. (2022) identify two formation channels that contribute to the overall population of binary black hole mergers. These are the common envelope (CE) and stable Roche-lobe overflow (RLOF) channels. These simulations incorporate modeling of astrophysical processes relating to stellar evolution and binary interactions, such as stellar wind mass loss, mass transfer between the binary components, the role of supernova kicks, etc.

They find that the CE channel preferentially produces low-mass black holes ($< 30\,M_\odot$) and short delay times ($< 1$ Gyr), while the stable RLOF channel preferentially produces black holes with large masses ($> 30\,M_\odot$) and long delay times ($> 1$ Gyr). These differences mean that the channels exhibit different redshift evolution; the binary black hole merger rate $R_{\mathrm{BBH}}(z)$ is expected to be dominated by the CE channel at high redshifts, while there is a significant contribution ($\sim 40\%$) from the stable RLOF channel at low redshifts. Thus van Son et al. (2022) predict a distinct redshift evolution of $R_{\mathrm{BBH}}(z)$ for low and high component masses.

Finding observational evidence of these signatures is challenging at present. As of O3, the gravitational-wave interferometer network was only observing out to redshifts of $z \sim 0.8$ for the highest mass primary black holes. For smaller primary black holes ($M_{\mathrm{BH},1} \sim 10\,M_\odot$), this network is only probing out to redshifts of $z \sim 0.1$ to $0.2$. However, this is expected to change in the coming decades, as upgrades to existing detectors (Advanced LIGO, Advanced Virgo, and KAGRA) and the addition of new detectors (e.g., the Einstein Telescope and Cosmic Explorer) allow for the observation of binary black hole mergers out to higher redshifts (Abbott et al. 2017a; Maggiore et al. 2020).

With future observations, it may be possible to observe how a range of properties of the observed binaries change with redshift. Of course, doing so accurately requires having a good handle on the biases in the observations, from selection effects (e.g., the limited sensitivity of the detector means that lower-mass binaries are less likely to be observed at greater distances) to parameter degeneracies (e.g., distance/inclination of the binary), to the potential bias introduced by using general relativistic models. Nonetheless, the use of modeling to account for these biases in the data may result in data that are reliable enough to draw inferences about the evolution of the observed binary population.
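A toy calculation may help show what correcting for a selection effect involves. The sketch below is a simplification resting on stated assumptions rather than the procedure used by the LIGO-Virgo Collaboration: it assumes that signal amplitude scales as (chirp mass)^(5/6) divided by distance (the leading-order inspiral scaling), a fixed amplitude threshold for detection, and a Euclidean volume. It ignores redshift, orientation, and the detector's frequency response. The population numbers and function names are hypothetical.

```python
def relative_detectable_volume(chirp_mass: float, reference_mass: float = 10.0) -> float:
    """Detectable volume relative to a binary of `reference_mass`.

    Assumes amplitude ~ chirp_mass**(5/6) / distance and a fixed amplitude
    threshold, so the maximum distance scales as chirp_mass**(5/6) and the
    surveyed (Euclidean) volume as its cube.
    """
    horizon_ratio = (chirp_mass / reference_mass) ** (5.0 / 6.0)
    return horizon_ratio ** 3


# Hypothetical population: equal intrinsic numbers per unit volume of
# low-mass and high-mass binaries (chirp masses in solar masses).
intrinsic_density = {10.0: 1.0, 30.0: 1.0}

# What a threshold-limited survey would see: density times surveyed volume.
observed_counts = {
    mass: density * relative_detectable_volume(mass)
    for mass, density in intrinsic_density.items()
}

# Model-based correction: divide each count by its detectable volume.
corrected_density = {
    mass: count / relative_detectable_volume(mass)
    for mass, count in observed_counts.items()
}

observed_ratio = observed_counts[30.0] / observed_counts[10.0]
corrected_ratio = corrected_density[30.0] / corrected_density[10.0]
print(f"observed high/low mass ratio:  {observed_ratio:.1f}")
print(f"corrected high/low mass ratio: {corrected_ratio:.1f}")
```

In this toy model the raw catalog strongly over-represents the heavier binaries, and the intrinsic proportions are recovered only because the correction uses the same sensitivity model that generated the bias. The corrected data are therefore only as reliable as that model.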

Correcting data via models may naively seem problematic; after all, theory- or model-ladenness is sometimes (as in this paper) a cause for concern. However, I am inclined to think that such treatment of data is both common and (usually) virtuous, resulting in ‘model-filtered’ data that are a better basis for inferences because biases in the data have been mitigated. Here, I borrow the term ‘model-filtered’ from Alisa Bokulich, who defends a view of ‘model-data symbiosis’ and discusses a range of ways that data can be model-laden in a way that is beneficial (Bokulich 2020, 2018).Footnote 19 Bokulich’s examples include the correction of the fossil record to account for known biases in this record. The resulting model-corrected data form a better basis for inferences about biodiversity in the deep past. I take this view to also be a good fit for astrophysics. The gravitational wave catalog resembles the fossil record; while there are important biases in the observed data, modeling can help correct for these in order to build a timeline of events and learn more about the processes driving change over long timescales. On the flip side, the reliability of such data depends on the reliability of the models used to correct them.

4.2 Measuring the Hubble Constant

As the population of detected gravitational wave transients grows, it also becomes possible to use gravitational waves to probe cosmology. In particular, compact binary mergers (especially binary neutron star mergers) can be used to measure the Hubble constant (Abbott et al. 2017b). The Hubble constant $H_0$ is a measure of the rate of expansion of the universe at the current epoch. For distances less than about 50 Mpc, $H_0$ is well approximated by the following expression:

$$v_H = H_0 d$$

where $v_H$ is the local ‘Hubble flow’ velocity of the source (the velocity due to cosmic expansion rather than the peculiar velocities between galaxies) and $d$ is the (proper) distance to the source.

To measure the Hubble constant one needs measurements of both $v_H$ and $d$. Gravitational wave signals encode information about the distance to their source through the amplitude of the waves. Thus the parameter estimation process described above provides a measurement of $d$ that is independent of the ‘cosmic distance ladder’ (and the electromagnetic observations used to calibrate it). This leads to compact binary mergers being called ‘standard sirens’ (analogous to the ‘standard candles’ provided by Type Ia supernovae). In contrast, measurements of $v_H$ rely on electromagnetic radiation. In particular, $v_H$ is inferred using the measured redshift of the host galaxy. Measurements of $v_H$ from the redshift must account for the peculiar velocity of the host galaxy through analysis of the velocities of the surrounding galaxies.
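The two-ingredient structure of a standard siren measurement can be sketched in a few lines. The input values below are hypothetical and purely illustrative (they are not the published values for any event), the function names are assumptions of this sketch, and the calculation uses only the low-redshift relation between Hubble flow velocity and distance, without any treatment of uncertainties.

```python
def hubble_flow_velocity(recession_velocity_km_s: float, peculiar_velocity_km_s: float) -> float:
    """Remove the host galaxy's peculiar velocity from its measured recession velocity."""
    return recession_velocity_km_s - peculiar_velocity_km_s


def hubble_constant(hubble_velocity_km_s: float, distance_mpc: float) -> float:
    """Low-redshift Hubble law, H0 = v_H / d, in km/s/Mpc."""
    if distance_mpc <= 0:
        raise ValueError("distance must be positive")
    return hubble_velocity_km_s / distance_mpc


# Hypothetical inputs, for illustration only.
distance_mpc = 43.0            # from the gravitational-wave amplitude
recession_velocity = 3300.0    # from the host galaxy's redshift (km/s)
peculiar_velocity = 300.0      # estimated from neighbouring galaxies (km/s)

v_h = hubble_flow_velocity(recession_velocity, peculiar_velocity)
h0 = hubble_constant(v_h, distance_mpc)
print(f"H0 = {h0:.1f} km/s/Mpc")
```

The sketch makes the division of labor explicit: the distance comes from the gravitational wave signal alone, while both velocity inputs come from electromagnetic observations.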

Distance measurements are complicated by the fact that there is a degeneracy between the distance to, and the inclination of, the source in terms of the amplitude of the measured gravitational waves. Face-on binaries are “louder”, radiating gravitational waves at higher amplitude (hence SNR) than binaries viewed side-on. Altering the inclination of the binary mimics the effect of moving the source closer or farther away. Thus (as with standard candles) the measurement of distance provided by standard sirens is a little more complicated than it initially appears.
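The degeneracy can be illustrated with the leading-order dependence of the two gravitational wave polarizations on inclination and distance: the ‘plus’ amplitude scales as (1 + cos²ι)/(2d) and the ‘cross’ amplitude as cos ι/d. The sketch below assumes these standard scalings and drops all other factors (masses, frequency, detector response). The two source configurations are hypothetical.

```python
import math


def plus_amplitude(distance: float, inclination: float) -> float:
    """Relative amplitude of the 'plus' polarization (leading order)."""
    return (1.0 + math.cos(inclination) ** 2) / (2.0 * distance)


def cross_amplitude(distance: float, inclination: float) -> float:
    """Relative amplitude of the 'cross' polarization (leading order)."""
    return math.cos(inclination) / distance


# A face-on binary far away and an inclined binary closer by
# (arbitrary distance units; both configurations are hypothetical).
face_on = (100.0, 0.0)
inclined = (62.5, math.radians(60.0))

same_plus = math.isclose(plus_amplitude(*face_on), plus_amplitude(*inclined))
same_cross = math.isclose(cross_amplitude(*face_on), cross_amplitude(*inclined))
print("plus amplitudes match: ", same_plus)
print("cross amplitudes match:", same_cross)
```

The two configurations are indistinguishable in the plus polarization alone but differ in the cross polarization, which is why information about polarization bears on the measurement of inclination and hence distance.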

So far, one multi-messenger event has been reported by the LIGO-Virgo Collaboration.Footnote 20 For this event, an optical transient (AT 2017gfo) was found to coincide with the source of gravitational waves, leading to an identification of the source of both signals as a binary neutron star merger. Using gravitational waves to measure $d$ and the electromagnetic counterpart to measure $v_H$, Abbott et al. (2017b) obtain a measurement of $H_0 = 70.0^{+12.0}_{-8.0}\ \mathrm{km\,s^{-1}\,Mpc^{-1}}$.Footnote 21

It is also possible to perform standard siren measurements with binary black hole mergers, fittingly called ‘dark sirens’, even in the absence of an electromagnetic counterpart. In such cases, the velocity $v_H$ is inferred statistically from the redshifts of possible host galaxies. The first such measurement was performed using GW170814, giving an estimated value of $H_0 = 75^{+40}_{-32}\ \mathrm{km\,s^{-1}\,Mpc^{-1}}$ (Soares-Santos et al. 2019).Footnote 22
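To give a sense of what inferring the velocity ‘statistically’ can mean, here is a toy version of the idea. It is a sketch under strong assumptions and not the analysis of Soares-Santos et al. (2019): the gravitational-wave distance estimate is modelled as a Gaussian, every candidate host galaxy is weighted equally, the low-redshift Hubble law is used, a flat prior on the Hubble constant is assumed, and peculiar velocities and selection effects are ignored. The event and galaxy values are hypothetical.

```python
import math

SPEED_OF_LIGHT_KM_S = 299792.458


def gaussian_pdf(x: float, mean: float, sigma: float) -> float:
    """Normal probability density."""
    z = (x - mean) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def h0_likelihood(h0, distance_mean, distance_sigma, host_redshifts):
    """Likelihood of h0, averaged over equally weighted candidate hosts.

    Each candidate host at redshift z implies a distance c*z/h0 (low-redshift
    Hubble law); that distance is compared with the gravitational-wave
    distance estimate, modelled here as a Gaussian.
    """
    terms = [
        gaussian_pdf(SPEED_OF_LIGHT_KM_S * z / h0, distance_mean, distance_sigma)
        for z in host_redshifts
    ]
    return sum(terms) / len(terms)


# Hypothetical event: distance 100 +/- 20 Mpc, three candidate host galaxies.
candidate_redshifts = [0.020, 0.0233, 0.028]
h0_grid = [20.0 + i for i in range(121)]  # 20 ... 140 km/s/Mpc
likelihoods = [h0_likelihood(h0, 100.0, 20.0, candidate_redshifts) for h0 in h0_grid]

# Normalize on the grid (flat prior in H0 assumed).
total = sum(likelihoods)
posterior = [value / total for value in likelihoods]
best_h0 = h0_grid[posterior.index(max(posterior))]
print(f"posterior peaks near H0 = {best_h0:.0f} km/s/Mpc")
```

Because each candidate host favors a different value, the resulting distribution is broad. That is the qualitative reason why a single dark siren constrains the Hubble constant only weakly.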

These measurements of the Hubble constant based on individual events both have large uncertainties, in part due to the distance-inclination degeneracy and (in the latter case) the lack of an electromagnetic counterpart.

However, as gravitational-wave astrophysics transitions into a mature field, with a substantial population of events, the precision of measurements will improve. This is in part due to the projected increase in information about the polarization of the gravitational waves with an expanded network of detectors. This should lead to better measurements of the source inclination, and therefore distance (i.e., it should help break the degeneracy between these).

Additionally, the precision of the measurement is expected to increase with a larger population of detections, as uncertainties from peculiar velocities and distance should decrease with the sample size. Revised estimates of $H_0$ have already been made using populations of events, following O2 (Abbott et al. 2019a), and O3 (Abbott et al. 2021a).

The precision of $H_0$ measurements does not increase uniformly with each new event. Events with an identifiable counterpart and host galaxy contribute the most (since these have the best estimated peculiar velocities and hence redshift). Strong contributions also come from compact binary mergers in the ‘sweet spot’ where uncertainties associated with the distance and the peculiar velocities are comparable (Chen et al. 2018).Footnote 23 Chen et al. (2018) provide calculations showing how standard siren measurements of $H_0$ should increase in precision according to the number and properties of compact binary mergers detected over time. For example, for a population of 50 binary neutron star mergers with associated electromagnetic counterparts, the fractional uncertainty in the $H_0$ measurement would reach 2%. With this precision, gravitational-wave-based measurements of $H_0$ would be precise enough to perform stringent coherence tests with existing measurements, a potentially important step toward resolving the ‘Hubble tension’.Footnote 24 However, the prospects are less good for a population of binary black hole mergers.
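A back-of-the-envelope check shows why a figure of roughly 2% for 50 events is plausible. The sketch below is not the calculation of Chen et al. (2018), which tracks how much each event contributes. It assumes, as an idealization, that every event is independent and as informative as the single-event measurement quoted above for GW170817, so that the uncertainty shrinks as one over the square root of the number of events.

```python
import math


def combined_fractional_uncertainty(single_event: float, n_events: int) -> float:
    """Fractional uncertainty after combining n equally informative,
    independent events (idealized 1/sqrt(N) scaling)."""
    if n_events < 1:
        raise ValueError("need at least one event")
    return single_event / math.sqrt(n_events)


# Single-event precision taken from the GW170817 value quoted above:
# 70.0 (+12.0, -8.0), i.e. a mean half-width of 10 on a value of 70.
single_event = 0.5 * (12.0 + 8.0) / 70.0

for n in (1, 10, 50):
    value = combined_fractional_uncertainty(single_event, n)
    print(f"N = {n:2d}: fractional uncertainty ~ {100 * value:.1f}%")
```

Under this idealization, a single-event uncertainty of about 14% falls to about 2% for 50 events. Since real events are not equally informative, the scaling should be read as a rough guide only.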

In the absence of a large population of events with counterparts, much recent work has focused on maximizing the cosmological information that can be inferred from the increasingly large population of binary black hole mergers. Such populations can be effective probes of cosmic expansion when analyzed together with known astrophysical properties of the overall compact binary population. For example, Abbott et al. (2021a) provide improved estimates of the Hubble constant using 47 events from GWTC-3. They use two methods to do this. The first makes assumptions about the redshift evolution of the binary black hole population (in particular, that the mass scale does not vary with redshift) and then fits the population for cosmological parameters (Abbott et al. 2021a, 11–12). In essence, this works by assuming that redshift evolution of the population properties is due to the cosmic expansion as opposed to intrinsic properties of the binary population. This approach is especially useful in the case that the population has sharp cut-off features (such as the ‘mass gap’ produced by pair-instability supernovae) (Ezquiaga and Holz 2022). The second method that they use involves associating gravitational wave sources with probable host galaxies using existing galaxy surveys. This approach also makes assumptions about the binary black hole merger source population (including a fixed source mass distribution and fixed-rate evolution of the binaries), as well as assumptions about the selection effects that lead to incompleteness in the galaxy surveys (Abbott et al. 2021a, 13).
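The logic of the first method can be sketched with the standard relation between the mass measured in the detector frame and the mass in the source frame, m_detector = (1 + z) m_source. If a feature of the mass distribution is assumed to sit at a fixed source-frame mass, its apparent position yields a redshift, which can be combined with the gravitational-wave distance. The sketch below is a deliberately crude illustration rather than the population fit performed by Abbott et al. (2021a). All numbers are hypothetical, and the low-redshift Hubble law used in the last step is only a rough approximation.

```python
SPEED_OF_LIGHT_KM_S = 299792.458


def redshift_from_mass_feature(detector_frame_mass: float, source_frame_mass: float) -> float:
    """Redshift implied by m_detector = (1 + z) * m_source."""
    return detector_frame_mass / source_frame_mass - 1.0


def low_redshift_h0(redshift: float, distance_mpc: float) -> float:
    """Leading-order Hubble law H0 ~ c*z/d; the approximation degrades as z grows."""
    return SPEED_OF_LIGHT_KM_S * redshift / distance_mpc


# Hypothetical numbers: a mass-spectrum feature assumed to sit at 45 solar
# masses in the source frame appears at 46.8 in the detector frame for
# binaries whose gravitational-wave distance is about 170 Mpc.
z = redshift_from_mass_feature(detector_frame_mass=46.8, source_frame_mass=45.0)
h0 = low_redshift_h0(z, distance_mpc=170.0)
print(f"z = {z:.3f}, H0 ~ {h0:.0f} km/s/Mpc")
```

The inferred redshift, and with it the cosmological result, depends directly on the assumed source-frame mass of the feature. This is the sense in which the cosmological inference leans on assumptions about the source population.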

Overall, these methods yield estimates of $H_0 = 68^{+12}_{-8}\ \mathrm{km\,s^{-1}\,Mpc^{-1}}$ (first method) and $H_0 = 68^{+8}_{-6}\ \mathrm{km\,s^{-1}\,Mpc^{-1}}$ (second method). The latter is a significant improvement in precision over the measurement from O2, which was $H_0 = 69^{+16}_{-8}\ \mathrm{km\,s^{-1}\,Mpc^{-1}}$ (Abbott et al. 2019a). However, Abbott et al. (2021a) note that their estimate strongly depends on assumptions about the binary black hole source mass distribution: ‘if the source mass distribution is mismodeled, then the cosmological inference will be biased’ (Abbott et al. 2021a, 27).

We can now see that the two different investigations discussed in Sects. 4.1 and 4.2 are intertwined. There is a kind of degeneracy between population properties and cosmology; if we know about the population properties, we can learn about cosmology, and if we know something about cosmology, we can learn about the properties of the binary black hole population. This has the potential to introduce a new circularity when it comes to justifying inferences about either. However, as with the case of testing general relativity (Sect. 3), the circularity can also be virtuous; increasing constraints on one helps to constrain the other.

5 Conclusion

Gravitational-wave astrophysics has begun to transition from new to mature science. This transition is partly characterized by a shift from individual events (such as GW150914 or GW170817) to populations of events as the target of investigation. In studying populations of events, gravitational waves may be used to probe new phenomena, including astrophysical processes (e.g., binary black hole formation channels) and cosmology (e.g., Hubble expansion).

As with studies of individual events, studying the properties of populations is based on theory-laden methodology. Parameter estimation using general relativistic models forms the foundation on which further inferences about populations can be built. The reliability of these inferences thus depends on the success of the LIGO-Virgo Collaboration in constraining the bias introduced by their use of general relativistic models. The tests I described in Sect. 3 provide crucial scaffolding for proceeding with inferences like those described in Sect. 4.1.

However, working with populations can also help with some sources of error. For example, the distance/inclination degeneracy limits the precision of measurements of $H_0$ from a single event. But this uncertainty is largely washed out with a large sample size, since binaries are not expected to have any preferred orientation with respect to us (though this must be corrected for selection effects, since the ‘louder’ face-on orientations are more likely to be detected).

Another feature of gravitational wave astrophysics’ transition to maturity is its increasing unification with other areas of astrophysics. This began with the multi-messenger observations of a binary neutron star merger, where electromagnetic observations combined with gravitational wave observations to give a more complete picture of the processes involved. However, just as importantly, bridges are built between gravitational wave and electromagnetic astrophysics when methods from these different fields are brought to bear on the same processes. This is true in the case of independent measurements of the Hubble constant, which offer the possibility of coherence tests between different measurements—and perhaps the eventual resolution of the Hubble tension. It is also true in the studies of binary black hole formation channels, where constraints from other areas of astrophysics (e.g., concerning star formation) can be brought to bear on plausible channels for the evolution of binary black holes. Thus connections are being forged via observational targets, coherence tests across independent measurements, and by importing constraints from one domain to inform investigations in another.

Alongside this increasing connectedness to other areas of astrophysics, gravitational-wave astrophysics is also increasingly resembling electromagnetic astrophysics in a few ways.

First, it is now facing the ‘snapshot’ problem of trying to infer causal processes that occur over long timescales based on temporal slices. This is a characteristic challenge not only for astrophysics at large, but also for historical science in general.

Second, the dependency relations between the target phenomenon and observational traces are increasingly complex, due to parameter degeneracies, degeneracies between source properties and cosmological effects, and other uncertainties concerning the astrophysical processes by which binary stars are thought to form and collapse, accrete matter and dissipate angular momentum, etc. There is thus a kind of holism that impedes hypothesis testing due to the difficulty in isolating a single hypothesis to test independently.

These complex relationships are gradually disentangled with the help of simulations. This often proceeds as a search for clear signatures to act as ‘smoking guns’. However, as with the case of standard sirens, progress in studying relationships between observations and target phenomena seems to be characterized more by increasing appreciation of the complexities than by finding true unambiguous smoking guns. Given all of this, controlling for selection effects and other systematics becomes a prominent component of reasoning about the populations being studied using gravitational waves.

Overall, testing hypotheses about astrophysical and cosmological processes with gravitational waves proceeds iteratively through improving the precision of measurements and further exploring dependency relationships, especially through simulations. This has some resemblance to what Chang (2004, 45) calls ‘epistemic iteration’, a process where ‘successive stages of knowledge, each building on the preceding one, are created in order to enhance the achievement of certain epistemic goals’. This process, which Chang says could also be called a kind of ‘bootstrapping’, is one of progress through self-improvement, in the absence of secure epistemic foundations. This seems like a fruitful picture for making sense of progress in gravitational-wave astrophysics, where we have seen that the foundational theory (GR) is in question at the same time as the observations made utilizing that theory.Footnote 25 Furthermore, intertwined lines of inquiry about cosmology and black hole populations and formation channels proceed simultaneously, feeding back into one another without any of them providing an independently secure foundation for the others.

This process of iterative improvement gradually places tighter constraints on viable models and parameter values, while teasing apart complex dependencies between populations of binary systems and observations of their final moments. Even when these constraints are conditional, depending on various other assumptions in order to place a constraint on the phenomenon of interest, the accumulation of constraints across the entire web of hypotheses can gradually reduce the space of viable possibilities. Here again there are resonances with Chang’s (2004) coherentist epistemology, in which epistemic iteration allows for progress by building on previous knowledge despite its lack of secure foundations. Instead, previous knowledge enjoys a kind of tentative acceptance so that it can be built upon in the pursuit of epistemic values.

Overall, this paper has exhibited both the vices and virtues of interdependence in theory testing. We have seen how theory-laden methodology can lead to a vicious circularity, but also how this need not be an insurmountable hurdle for theory testing. Indeed, Sect. 3 showed how the LIGO-Virgo Collaboration perform tests of GR by placing constraints on different ways that their methods could be masking deviations from GR. The confidence in both theory and observations that these tests establish is what licenses further inferences based on the LIGO-Virgo observations. The examples of Sects. 4.1 and 4.2 show how interdependence can lead to a kind of holist underdetermination in the sense that changes in cosmology are degenerate with changes in the properties of black hole binary populations. However, this also means that improved constraints in one domain can do double work, simultaneously introducing further constraints to related domains.

With the increasing interconnectedness between electromagnetic and gravitational wave astrophysics come more constraints on how it all must fit together. Between the increasing precision of gravitational wave detection, the improved modeling of compact binaries, and the increasing number of bridges across fields, the hope is that degeneracies, along with problems of theory-ladenness and circularities, which may look irresolvable viewed in isolation, might nonetheless be broken when viewed in their full astrophysical context.

Notes

- 1.

For details about this technique, see e.g., Maggiore (2008).

- 2.

Roughly, the ‘directness’ has to do with the nature of the inferences needed to make the detection claim; for a direct detection, this is based on the model of the measuring process (i.e., the understanding of the measuring device and how it couples to its environment) while an indirect detection also relies on a model of a separate target system. Note also that ‘detection’ and ‘observation’ have a range of meanings across different scientific contexts but are often used interchangeably to describe gravitational-wave detection/observation (Elder In preparation). In this paper, I use the term ‘observation’ in a broad, permissive way, encompassing measurements with complex scientific instruments (e.g., gravitational wave interferometers). Something like Shapere’s (1982) account of observation in astronomy will do to capture what I have in mind. I use the term ‘detection’ to include any empirical investigation (measurement, observation, etc.) that purports to establish the existence or presence of an entity or phenomenon within a target system. A successful detection meets some threshold of evidence (relative to the background knowledge and the standards of acceptance of the relevant scientific community) such that we accept the existence of that entity (though precisely what this acceptance constitutes will differ for realists and anti-realists).

- 3.

See Boyd and Bogen (2021, section 3) for an overview of theory-ladenness (and value-ladenness) in science.

- 4.

See Elder (Forthcoming) and Elder and Doboszewski (In preparation) for discussion of theory-ladenness and (vicious) circularities in the context of the LIGO-Virgo experiments. My brief exposition here draws on these more detailed discussions.

- 5.

Here ‘pipeline’ refers to the set of processes used to generate the particular product from the data. Thus ‘search pipelines’ are alternative pathways for processing data to detect gravitational wave signals and ‘parameter estimation pipelines’ are alternative pathways for estimating the values of source parameters (e.g., masses and spins of the binary components).

- 6.

The details of the modeled searches and their results for GW150914 are reported in Abbott et al. (2016a).

- 7.

The unmodeled searches are often (and more accurately) called ‘minimally modeled’ searches. However, I will use the ‘unmodeled’ terminology to highlight the contrast between search pipelines, since this search doesn’t use general relativistic modeling to detect gravitational waves.

- 8.

The details of this search and the results for GW150914 are reported in Abbott et al. (2016c).

- 9.

Examples include the previously mentioned EOBNR and IMRPhenom modeling families.

- 10.

For the purposes of this paper, I neglect the additional complication of validating models and simulations with respect to theory, which is made challenging by the lack of exact solutions to the Einstein field equations for such systems (Elder Forthcoming).

- 11.

For GW170817, the first multi-messenger observation of a binary neutron star merger, there was independent access—but not of a kind that placed strong independent constraints on the dynamical strong field regime (Elder In preparation).

- 12.

For the purposes of this paper, I use the term ‘test’ fairly loosely to encompass any empirical investigation where the outcome is taken to have a bearing on (or provide evidence relevant to) the acceptability of a theory or hypothesis. Thus I will consider something to be a test of GR if it makes use of empirical data and has an outcome that counts as evidence for or against the acceptance of GR.

- 13.

The first two of these, the residuals and IMR consistency tests, are also briefly discussed in Elder (Forthcoming).

- 14.

See Patton (2020) for an insightful discussion concerning this test.

- 15.

The collaboration has also expanded to become the LIGO-Virgo-KAGRA Collaboration, reflecting the expansion of the network to include KAGRA in Japan. However, KAGRA came online only shortly before the end of O3 and has not yet been involved in any detections.

- 16.

For the reader unfamiliar with these terms: ‘formation channel’ refers to the set of causal processes involved in the evolution of (in this case) a binary black hole system. Different formation channels are different pathways for producing the same kind of system; the ‘Hubble constant’, $H_0$, is a measure of the rate of expansion of the universe; and the ‘cosmic distance ladder’ refers to a set of methods for measuring distances to celestial objects. Since different methods have different domains of applicability, earlier ‘rungs’ on the ladder, measuring distances to closer objects, help form the basis for using methods corresponding to later ‘rungs’, measuring distances to more distant objects.

- 17.

- 18.

Note that the delay time is the sum of two timescales: first, the lifetime of the binary stars up until they both become compact objects; and second, the inspiral time of the black holes, up until the binary black hole merger (van Son et al. 2022, 2).

- 19.

- 20.

‘Multi-messenger’ means that the event was observed using at least two cosmic ‘messengers’ (electromagnetic radiation, neutrinos, gravitational waves, and cosmic rays). In this case, GW170817 was observed with gravitational waves as well as electromagnetic radiation across a broad range of frequencies.

- 21.

Note that all values quoted are based on Bayesian analysis and report the maximum posterior value with the minimal-width 68.3% credible interval.

- 22.

GW170814 was a strong signal that gave a well localized source region within a part of sky that is thoroughly covered by the Dark Energy Survey. This made it highly amenable to a statistical treatment of the redshifts of possible host galaxies.

- 23.

This sweet spot changes with the detector sensitivity since the fractional distance uncertainty scales with SNR. In addition to having a counterpart, GW170817 fell close to the sweet spot for the detector at the time (Chen et al. 2018, ‘Methods’).

- 24.

The ‘Hubble tension’ refers to the disagreement between existing current-best estimates of $H_0$ based on local and high-redshift measurements. See Matarese and McCoy (In preparation) for a detailed philosophical discussion of the Hubble tension.

- 25.

However, the case of gravitational-wave astrophysics is rather different from the examples that Chang considers. First, the theoretical and experimental developments in this field proceeded in parallel for decades; both strands of development had to reach an advanced stage before such measurement was possible. Thus the main focus of my analysis has not been on iterative improvement in the measurement of gravitational waves by the LIGO and Virgo interferometers, but rather on downstream inferences on the basis of these. Second, the phenomena of temperature Chang describes were accessible by a variety of means, including sensory experience and a range of measuring instruments. In contrast, gravitational waves are much harder to measure (to put it mildly) and accessible only by a small number of similar detectors. This provides a very limited basis for self-improvement on the basis of coherence with other measurements. Overall, on an abstract level, Chang’s idea of epistemic iteration seems like a good fit for what I have described. However, given the two major differences between epistemic contexts of building a temperature scale and observing gravitational waves, I think that more work is needed to draw out the relationship between iterative progress in these two cases.

References

Abbott, B.P., et al. 2016a. GW150914: First results from the search for binary black hole coalescence with advanced LIGO. Physical Review D 93: 122003. https://doi.org/10.1103/PhysRevD.93.122003

Abbott, B.P., et al. 2016b. Observation of gravitational waves from a binary black hole merger. Physical Review Letters 116: 061102. https://doi.org/10.1103/PhysRevLett.116.061102

Abbott, B.P., et al. 2016c. Observing gravitational-wave transient GW150914 with minimal assumptions. Physical Review D 93: 122004. https://doi.org/10.1103/PhysRevD.93.122004

Abbott, B.P., et al. 2016d. Properties of the binary black hole merger GW150914. Physical Review Letters 116: 241102. https://doi.org/10.1103/PhysRevLett.116.241102

Abbott, B.P., et al. 2016e. Tests of general relativity with GW150914. Physical Review Letters 116: 221101. https://doi.org/10.1103/PhysRevLett.116.221101

Abbott, B.P., et al. 2017a. Exploring the sensitivity of next generation gravitational wave detectors. Classical and Quantum Gravity 34(4): 044001. https://doi.org/10.1088/1361-6382/aa51f4

Abbott, B.P., et al. 2017b. A gravitational-wave standard siren measurement of the Hubble constant. Nature 551: 85–88. https://doi.org/10.1038/nature24471

Abbott, B.P., et al. 2019a. A gravitational-wave measurement of the Hubble constant following the second observing run of advanced LIGO and Virgo. arXiv:1908.06060v2

Abbott, B.P., et al. 2019b. GWTC-1: A gravitational-wave transient catalog of compact binary mergers observed by LIGO and Virgo during the first and second observing runs. Physical Review X 9: 031040. https://doi.org/10.1103/PhysRevX.9.031040

Abbott, B.P., et al. 2019c. Tests of general relativity with the binary black hole signals from the LIGO-Virgo catalog GWTC-1. Physical Review D 100: 104036. https://doi.org/10.1103/PhysRevD.100.104036

Abbott, R., et al. 2021a. Constraints on the cosmic expansion history from GWTC-3. https://doi.org/10.48550/ARXIV.2111.03604

Abbott, R., et al. 2021b. GWTC-2: Compact binary coalescences observed by LIGO and Virgo during the first half of the third observing run. Physical Review X 11: 021053. https://doi.org/10.1103/PhysRevX.11.021053

Abbott, R., et al. 2021c. GWTC-3: Compact binary coalescences observed by LIGO and Virgo during the second part of the third observing run. https://doi.org/10.48550/ARXIV.2111.03606

Abbott, R., et al. 2021d. Tests of general relativity with GWTC-3. https://doi.org/10.48550/ARXIV.2112.06861

Anderl, S. 2016. Astronomy and astrophysics. In Oxford handbook of philosophy of science, ed. P. Humphreys. Oxford: Oxford University Press. https://doi.org/10.1093/oxfordhb/9780199368815.013.45

Belczynski, K., et al. 2020. Evolutionary roads leading to low effective spins, high black hole masses, and O1/O2 rates for LIGO/Virgo binary black holes. Astronomy and Astrophysics 636. https://doi.org/10.1051/0004-6361/201936528

Bokulich, A. 2018. Using models to correct data: Paleodiversity and the fossil record. Synthese 198(24): 5919–5940. https://doi.org/10.1007/s11229-018-1820-x

Bokulich, A. 2020. Towards a taxonomy of the model-ladenness of data. Philosophy of Science 87(5): 793–806. https://doi.org/10.1086/710516

Bokulich, A., W. Parker. 2021. Data models, representation and adequacy-for-purpose. European Journal for Philosophy of Science 11(1): 31. https://doi.org/10.1007/s13194-020-00345-2

Boyd, N.M. 2018. Evidence enriched. Philosophy of Science 85(3): 403–421. https://doi.org/10.1086/697747

Boyd, N.M., J. Bogen. 2021. Theory and observation in science. In The Stanford encyclopedia of philosophy (Winter 2021), ed. E.N. Zalta. Stanford: Metaphysics Research Lab, Stanford University.

Chang, H. 2004. Inventing temperature: Measurement and scientific progress. Oxford: Oxford University Press.

Chen, H.-Y., M. Fishbach, D.E. Holz. 2018. A two per cent Hubble constant measurement from standard sirens within five years. Nature 562(7728): 545. https://doi.org/10.1038/s41586-018-0606-0

Elder, J. Forthcoming. Black hole coalescence: Observation and model validation. In Working toward solutions in fluid dynamics and astrophysics: What the equations don’t say. Springer Briefs. ed. L. Patton, E. Curiel. Berlin: Springer.

Elder, J. In preparation. Independent evidence in multi-messenger astrophysics.

Elder, J. In preparation. On the ‘direct detection’ of gravitational waves.

Elder, J., J. Doboszewski. In preparation. How theory-laden are observations of black holes?

Ezquiaga, J.M., D.E. Holz. 2022. Spectral sirens: Cosmology from the full mass distribution of compact binaries. Physical Review Letters 129: 061102. https://doi.org/10.48550/ARXIV.2202.08240

Farr, B., D.E. Holz, W.M. Farr. 2018. Using spin to understand the formation of LIGO and Virgo’s black holes. The Astrophysical Journal 854(1): L9. https://doi.org/10.3847/2041-8213/aaaa64

Hulse, R.A., J.H. Taylor. 1975. Discovery of a pulsar in a binary system. Astrophysical Journal 195: L51–L53. https://doi.org/10.1086/181708

Jacquart, M. 2020. Observations, simulations, and reasoning in astrophysics. Philosophy of Science 87(5): 1209–1220. https://doi.org/10.1086/710544

Leonelli, S. 2016. Data-centric biology: A philosophical study. Chicago: University of Chicago Press.

Maggiore, M. 2008. Gravitational waves. Volume 1, theory and experiments. Oxford: Oxford University Press.

Maggiore, M., et al. 2020. Science case for the Einstein telescope. Journal of Cosmology and Astroparticle Physics 2020(03): 050–050. https://doi.org/10.1088/1475-7516/2020/03/050

Matarese, V., C. McCoy. In preparation. When “replicability” is more than just “reliability”: The Hubble constant controversy.

Parker, W.S. 2017. Computer simulation, measurement, and data assimilation. British Journal for the Philosophy of Science 68(1): 273–304. https://doi.org/10.1093/bjps/axv037

Patton, L. 2020. Expanding theory testing in general relativity: LIGO and parametrized theories. Studies in History and Philosophy of Science Part B: Studies in History and Philosophy of Modern Physics 69: 142–153. https://doi.org/10.1016/j.shpsb.2020.01.001

Shapere, D. 1982. The concept of observation in science and philosophy. Philosophy of Science 49(4): 485–525. https://doi.org/10.1086/289075

Soares-Santos, M., et al. 2019. First measurement of the Hubble constant from a dark standard siren using the dark energy survey galaxies and the LIGO/Virgo binary–black-hole merger GW170814. The Astrophysical Journal 876(1): L7. https://doi.org/10.3847/2041-8213/ab14f1

Tal, E. 2012. The epistemology of measurement: A model-based account. PhD dissertation, University of Toronto. http://search.proquest.com/docview/1346194511/

Tal, E. 2013. Old and new problems in philosophy of measurement. Philosophy Compass 8(12): 1159–1173. https://doi.org/10.1111/phc3.12089

Taylor, J.H., J.M. Weisberg. 1982. A new test of general relativity - gravitational radiation and the binary pulsar PSR 1913+16. Astrophysical Journal 253: 908–920. https://doi.org/10.1086/159690

van Son, L.A.C., et al. 2022. The redshift evolution of the binary black hole merger rate: A weighty matter. The Astrophysical Journal 931(1): 17. https://doi.org/10.3847/1538-4357/ac64a3

Vitale, S., R. Lynch, R. Sturani, P. Graff. 2017. Use of gravitational waves to probe the formation channels of compact binaries. Classical and Quantum Gravity 34(3): 03LT01. https://doi.org/10.1088/1361-6382/aa552e

Yunes, N., F. Pretorius. 2009. Fundamental theoretical bias in gravitational wave astrophysics and the parametrized post-Einsteinian framework. Physical Review D 80: 122003. https://doi.org/10.1103/PhysRevD.80.122003

Acknowledgements

I would like to thank two anonymous referees for their helpful comments on an earlier version of this paper.

My work on this project was undertaken during my employment at Harvard’s Black Hole Initiative, which is funded in part by grants from the Gordon and Betty Moore Foundation and the John Templeton Foundation. The opinions expressed in this publication are those of the author and do not necessarily reflect the views of these Foundations.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Cite this chapter

Elder, J. (2023). Theory Testing in Gravitational-Wave Astrophysics. In: Mills Boyd, N., De Baerdemaeker, S., Heng, K., Matarese, V. (eds) Philosophy of Astrophysics. Synthese Library, vol 472. Springer, Cham. https://doi.org/10.1007/978-3-031-26618-8_4

DOI: https://doi.org/10.1007/978-3-031-26618-8_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-26617-1

Online ISBN: 978-3-031-26618-8

eBook Packages: Religion and Philosophy; Philosophy and Religion (R0)