Abstract

The ongoing debate in philosophy of science over whether simulations are experiments has so far operated at too high a level of generality. I revisit this discussion in the context of simulation in astronomy and astrophysics, arguing that simulations in a specific subclass, those that include a significant amount of empirically obtained temporal data, count as experiments. This subclass will be a small one, as the majority of simulations in astronomy and astrophysics will still suffer from a sparseness of data. But it remains the case that there exist examples of simulations that are experiments.



1 Introduction

The legitimacy of simulation as experiment has received much attention in the philosophy of science. On the one hand, there are those who object to the treatment of simulations as experiments, either because they are merely formal exercises or because they are representationally inferior to traditional experiment (Guala 2006; Morgan 2005). On the other hand, there are proponents of epistemic equality between experiments and simulations who argue that the traditional objections to simulations misunderstand their structure and their role in science (Parker 2009; Morrison 2009). While I have reservations about whether it is necessary to meet the experimental threshold to do justice to the epistemology involved in astronomical and astrophysical (hereafter, A&A) practice, it is undeniable that the status of simulations as experiments has captured philosophical interest. In the majority of the literature, the debate concerns the use of simulation as one particular type of experiment: what Allan Franklin has called a conceptual experiment, one that engages in theory testing and/or prediction (Franklin 2016; Franklin 1981).

I argue that this debate has thus far operated at too high a level of generality. As in many areas of complex scientific practice, a general answer to the question of the epistemic significance of some activity is likely to miss important details in individual cases and contexts. Instead, I focus on a key ingredient in the representational validity of a certain subclass of simulations, one that has so far undergone comparatively little scrutiny. I argue that the inclusion of temporal data has the potential to inform simulations in A&A in a way that meets representational adequacy constraints and sidesteps concerns about materiality. This practice can permit empirically rich simulations of evolving systems to count as conceptual experiments.

In what follows I briefly review a selective history of the simulation-experiment debate, focusing on the disputes over materiality and representational adequacy. I then describe the role of temporal data as a substantial property in dynamical simulations, one that, when used to inform simulations of evolving systems, increases their representational adequacy. Building upon recent work in the growing field of philosophy of A&A by Melissa Jacquart (2020), Sibylle Anderl (2016, 2018), Siska De Baerdemaeker (2022), Siska De Baerdemaeker and Nora Boyd (2020), Jamee Elder (forthcoming), Katia Wilson (2016, 2017), Chris Smeenk (2013), and Michelle Sandell (2010), I defend a novel view on which simulation of the temporal evolution of a system constitutes a necessary condition for conceptual experimentation on dynamical systems and, together with certain other contextually variable measures of empirical adequacy, a jointly sufficient one. This conclusion is both pessimistic and optimistic: on the one hand, a significant majority of simulations in A&A will not fulfill these conditions and thus are not experimental; on the other hand, there does exist a specific subclass of simulations that meets these criteria and should therefore be seen as conceptual experiments.

The question of whether simulations can count as experiments can be contextualized as hinging on the question:

CQ: Do simulations connect to the world in the relevant way?

I suggest that the answer to this question may be less obvious than it initially seems, and that the inclusion of specific kinds of data (namely, temporal data) may be illuminating. In Sect. 11.1, I address the challenges inherent in achieving representational adequacy in simulation. It is almost always acknowledged that if simulations are to approach the epistemic productivity of experiments, they must achieve a level of representational adequacy sufficient for empirical accuracy. In Sect. 11.2, I discuss the importance of temporal data with respect to this question, arguing that instantiations of temporal evolution constitute a so-far philosophically neglected relationship between simulations and their target systems. Before that, however, it is important to take a step back and inventory the requirements placed on experiments in the literature and what these conditions indicate about the epistemology of experiment.

2 Epistemology of Simulations and Experiments

Allan Franklin, perhaps the most authoritative voice on experiment in the philosophical literature, lays out an array of ways in which experiments can be epistemically positive. They can be exploratory endeavors operating largely independently of previously accepted theory; they can be exercises in further clarifying the consequences of an assumed theory or model; they can test predictions or model components; they can be collections of measurements; and they can even be rhetorically useful fictional exercises that serve to propel further research. Experiments may also concern the testing and perfection of new methodologies, rather than direct investigation of some target system (Franklin 2016, 1–4). This list is non-exhaustive, and the goals contained in it are not mutually exclusive. We may, for example, simultaneously seek to probe a new phenomenon in a model-independent context while also testing a new measurement technique. The epistemology of experiment captured by these various activities points to a pragmatic picture of experimental activity. The role experiments play is deeply connected to the aims with which scientific research is conducted. Experiments can serve a wide variety of roles connected to scientific inquiry, so long as those roles are governed by endeavors that, as Franklin identifies, “add to scientific knowledge or [are] helpful in acquiring that knowledge” (Franklin 2016, 300–301).

There is nothing in the above-sketched picture of the epistemology of experiment that to my mind prima facie excludes simulation. I would argue instead that this picture probably applies to much of the work done using simulations, and that it certainly applies to the subclass of simulations that incorporate a fully developed model of temporal evolution. I think it is likely that when philosophers of science dispute the legitimacy of simulation as experiment, they have in mind a narrower view of experimentation, somewhere along the lines of what Franklin calls “conceptually important” and “technically good” experiments. The former are experiments designed to test theories and predictions, while the latter are experiments that attempt to improve accuracy and precision in measurement (Franklin 1981, 2016, 2). A conceptual experiment can be understood as one that falls into the category of traditional Baconian experiment, where a researcher attempts to isolate a specific aspect of a system in order to test assumptions (whether those be specific variables, parameters, or predicted effects). It is an experiment that allows the refinement of theoretical concepts. Thus, another way to characterize the project set out here is as a defense of simulations in A&A as conceptual experiments: when temporal data is properly instantiated in a simulation to represent a complete picture of the event or process under scrutiny, the conditions for conceptual experimentation have been met.

3 Materiality and Representation

The question is often raised whether A&A can be classified as experimental sciences. Ian Hacking infamously argued that the observational nature of A&A precludes them from operating as experimental sciences, and thereby impoverishes their epistemic significance (Hacking 1989). He lamented both the limited interventive ability of A&A and their observational nature, stating that “galactic experimentation is science fiction, while extragalactic experimentation is a bad joke,” and that A&A cannot facilitate realism about their postulated entities (Hacking 1989, 559). Since then, the situation has changed in some ways for A&A, and not in others. We still cannot directly intervene on galactic and extragalactic systems, but the use of simulations to fill this gap has become ubiquitous. Hacking’s challenge now requires an answer that addresses both the objections to the use of simulation as epistemically sufficient for experimentation, and the question of whether a largely observational science can still exhibit experimentation.

Much of the debate over whether computer simulations are representationally meaty enough to count as experiments has turned on the role of what has been called “materiality.” The central idea, first expressed by Francesco Guala (2006) and subsequently critiqued by Parker (2009), is that the relationship between a traditional experiment (e.g., a swinging pendulum) and its target system of study is both a formal and a material one. By this Guala means that there are certain formal similarities (e.g., physical laws) and material similarities (e.g., physical constituents) that obtain between the contents of the experiment and the content of the target system. An experimental measurement of gravitational acceleration using a simple pendulum as a harmonic oscillator has material content in common with the target system. Conversely, on Guala’s view simulations retain only formal similarity with their target system. They lack the common material substratum found between traditional experiments and a system, and thus cannot bear as substantively on research questions about how the world is really composed and structured. A similar view is expressed by Mary Morgan (2005), who claims that traditional experiments that share “ontological” composition with the target system are more epistemically powerful (Morgan 2005, 326).

Wendy Parker (2009) has criticized this view of experiment as too narrow and as stipulating a mutually exclusive relationship between simulations and experiments where none need obtain. Rather, she argues that simulations should be understood as “time-ordered sequences of states,” with computer simulations specifically understood as such a sequence undertaken by a “digital computer, with that sequence representing the sequence of states that some real or imagined system” exhibits (Parker 2009, 487–488). She additionally defines a “computer simulation study” as that simulation plus all the attendant research activities that usually accompany the use of simulations in scientific practice, including development and analysis (ibid., 488). On this view, simulations very often qualify as experiments, and moreover can license generalization from conclusions about the simulation system to conclusions about a real-world material system, if that simulation properly represents the content of the world, including the causal relations between objects.¹ I find Parker’s view largely convincing and a good starting point for the central role of temporal data on which I will focus. While it is surely right to regard simulations as “time-ordered sequences,” one must be careful not to assume that the temporal dimension of simulations is merely formal. I will explain this idea in detail in the next section.

The central goal of Parker’s account is to disentangle discussions of simulations and experimentation from a focus on shared materiality. She argues that what is important in this discussion is the relevant similarity between the simulation and the material system simulated, given the research question of the study. Unless that research question is specifically about how to reconstruct a physical system in a lab setting, material similarity need not be understood exclusively as common material composition (Parker 2009, 493). She cites meteorological simulation cases as prime examples of settings in which trying to construct a simulation made of the same material as the target system would be both fruitless and impossible (Parker 2009, 494). Parker’s account does not rule out the epistemic superiority of traditional experiment over simulation on ontological grounds in some cases; rather, she rejects the generalization that such superiority obtains across the board.

Margaret Morrison’s (2009) view is an attempt to further elucidate the way in which experiments are almost always highly dependent on modeling: traditional experiments are just as dependent on models for their epistemic context as simulations are (Morrison 2009, 53–54). She likens the construction and tuning of parameters in a computer simulation to the calibration of equipment in a traditional experiment (Morrison 2009, 55). On this view, models themselves are “tools” for experimental inquiry and thus play both a formal and a material role. Simulations are first and foremost models, and simulation studies, with their attendant computational equipment and pre- and post-hoc analysis, are not substantively different from traditional experiments with much of the same modeling infrastructure (ibid., 55). It is precisely because the simulations are built from data models of the phenomena in question, which themselves include volumes of indirect observational data, that these simulations can be said to “attach” to the physical system they are used to explain.

This point has also been nicely made by Katia Wilson, who describes astrophysical simulations as being composed of many pieces of empirical data, including processual data, morphological data, parameters from best fit, and empirical data included in the attendant background theory informing the simulation (Wilson 2017). Wilson stops short of considering this composition of simulations as sufficient to ground a view of them as fully experimental, largely because in many simulations there is insufficient data to fully represent the system. This concern, that simulations might not be reliable guides to new information about the world, still holds considerable sway among philosophers (Gelfert 2009; Roush 2017). It might be well placed when it comes to many simulations of A&A phenomena, particularly when, as I will discuss later, the body of data is subject to what James Peebles (2020) and Melissa Jacquart (2020) have called the “snapshot problem,” where data is assembled from multiple entities in an attempt to reconstruct a picture of a single type of entity.

3.1 Intervention and Observation

I now turn briefly to the role of intervention (also called “manipulation”) in simulations. There may be some consensus that simulations are amenable to intervention. It is common practice to intervene on certain parameters or model components in search of a detectable change in effect. The role of intervention has been most influentially explicated in the philosophical literature by James Woodward (2003; 2008) in connection to causation. Briefly, Woodward’s idea is that if one were to intervene on a cause (manipulate it in some way), a corresponding change in an effect should be observed (Woodward 2008). In the context of experiment, intervention is often viewed as one of the defining traits: an experiment is seen as a controlled attempt to intervene on different features of a structure in order to observe the corresponding effects. Intervention is what largely characterizes the purposeful nature of experiment: experiments do not occur naturally but are the product of direct intervention by an experimenter, usually with a specific research goal in mind. Morgan, Guala, Parker, and Morrison all endorse some version of intervention as a necessary condition for experiment. Allan Franklin counts intervention as a hallmark of “good” experimentation, one that increases confidence in both the predicted effects of the experimental intervention and the experimental apparatus itself (Franklin 2016). Intervention should be understood as supervening on representation, as any intervention that occurs in an experiment that is not appropriately related to the target system of interest is not epistemically productive (a point well captured by Franklin’s (2016) other desiderata).

It is a common position that A&A do not lend themselves to the same kind of intervention as other sciences because of their observational nature. This point was most forcefully made by Hacking (1989) in his discussion of the importance of realism and the epistemic hurdles that largely observational sciences face in producing experiments that can explain phenomena of interest. It is easy to see why such an argument can plague A&A: we cannot intervene on distant target systems when we have yet to send probes much beyond our own Solar System.

Simulation has been the answering methodology to the challenge of intervention in A&A. We can intervene on simulations, even highly complex ones. Specifically, simulations in which the parameters and variables, and the relationships between them, are well understood (e.g., many hydrodynamical simulations and simulations of celestial movement and proto-planet evolution) are those in which finely grained intervention can and does take place. Intervention is, in many ways, one of the main goals of much simulation. Thus, the real challenge, in my view, to the legitimacy of A&A simulation as experimentation is not the lack of intervention, but clearly defining those cases in which the inner workings and relationships of the simulation are understood well enough to license the appropriate inferences. It is in this way that I believe the question of interventive potential in simulations supervenes on the question of representational adequacy. A simulation that counts as a conceptual experiment must include a sufficient amount of empirical data, and I argue that those that include a substantial amount of empirical temporal data will meet this requirement. It is important to be upfront that this demand will rule out many simulations in A&A as insufficiently representative and non-interventive, and therefore non-experimental. Simulations with highly uncertain dynamics or assumptions about model relations will not lend themselves to experimentation. This would therefore exclude many astrophysical models of as-yet poorly understood processes, such as black hole seed formation models.²
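As a toy illustration of intervention on a simulation whose relationships are well understood (not one of the A&A codes discussed in this chapter), the sketch below integrates a test particle on a circular orbit around a point mass and then “intervenes” by doubling the central mass. The function name, the units (G = 1), the leapfrog integrator, and the step size are all assumptions made for the example.

```python
import math


def orbital_period(central_mass, radius=1.0, dt=1e-3):
    """Simulate one circular orbit around a point mass and return its period.

    Illustrative assumptions: units with G = 1, a single massless test
    particle, and a leapfrog (kick-drift-kick) integrator with fixed step dt.
    The period is taken as the first time the accumulated orbital angle
    reaches 2*pi, so it is accurate to within one step.
    """
    # Start on the x-axis with the circular-orbit speed sqrt(G M / r).
    x, y = radius, 0.0
    vx, vy = 0.0, math.sqrt(central_mass / radius)

    def accel(px, py):
        # Newtonian acceleration toward a point mass at the origin.
        r3 = (px * px + py * py) ** 1.5
        return -central_mass * px / r3, -central_mass * py / r3

    ax, ay = accel(x, y)
    t, angle, prev_phase = 0.0, 0.0, math.atan2(y, x)
    while angle < 2 * math.pi:
        vx += 0.5 * dt * ax          # half kick
        vy += 0.5 * dt * ay
        x += dt * vx                 # drift
        y += dt * vy
        ax, ay = accel(x, y)
        vx += 0.5 * dt * ax          # half kick
        vy += 0.5 * dt * ay
        t += dt
        # Accumulate the change in polar angle, unwrapping the jump at +/- pi.
        phase = math.atan2(y, x)
        dphi = phase - prev_phase
        if dphi < -math.pi:
            dphi += 2 * math.pi
        angle += dphi
        prev_phase = phase
    return t


# "Intervene" on one parameter (the central mass) and compare outcomes.
base = orbital_period(central_mass=1.0)
intervened = orbital_period(central_mass=2.0)
print(f"period ratio after doubling the mass: {intervened / base:.3f}")
```

Because the dynamics here are fully understood, the effect of the intervention can be checked against theory: Kepler’s third law predicts that the period scales as M^(−1/2), so the printed ratio should be close to 1/√2 ≈ 0.707. Where the relationships between model components are poorly understood, no such check is available.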

4 A&A Simulation and Temporal Data

The importance of temporal data in their own right, rather than as mere modifiers or structures for other data, has only very recently begun to merit serious consideration from philosophers of science. David Danks and Sergey Plis have recently argued for the importance of considering measurement timescales in discussions of evidence amalgamation (Danks and Plis 2019). Julian Reiss has argued that time series data must be considered in accounts of causation, and moreover that it presents a challenge to the applicability of certain accounts (Reiss 2015). Dynamical sciences, those that concern the development of and changes in a system over time, are deeply dependent on the acquisition of data that reports on the rate at which such processes occur, the order in which they occur, and the temporal duration of the system as a whole. Explanations of evolving systems cannot proceed without this kind of data, which is often acquired through varied and robust evidential sources.

Meanwhile, the fields of A&A have historically occupied a fringe position in philosophy of science. While discussions of discovery and theory change have sometimes engaged with cases in A&A (e.g., Kuhn 1957, 1970), the underlying conception of the discipline among philosophers appears to have largely adhered to Hacking’s (1989) dismissal of astronomy as a purely observational, and thus philosophically sparse, endeavor. Recent attempts to rehabilitate the significance of A&A for philosophy of science have highlighted the ways in which contemporary A&A go beyond the realm of pure description to offer predictions, explanations, and confirmations of A&A models. Most importantly for this discussion, much of data-driven A&A is concerned with the consideration of dynamical systems: observations of star mergers, galaxy rotations, cosmic inflation, planet composition, etc. all require the consideration of temporally evolving systems. Following the 2010 Decadal Survey (the cyclical report, produced through collaboration by a large panel of researchers, that sets out the roadmap for A&A science), astronomers Graham et al. identify a key shift in A&A practice from static “panoramic digital photography” to “panoramic digital cinematography,” in which the time domain becomes the necessary setting for studying a large swath of A&A phenomena (Graham et al. 2012, 374). The recently released 2021 Decadal Survey identifies time-domain astronomy as “the highest priority sustaining activity” in space research (NASEM 2021, 1–17), and states that,

Time-domain astronomy is now a mature field central to many astrophysical inquiries...The recent addition of the entirely new messengers—gravitational waves and high-energy neutrinos—to time-domain astrophysics provides the motivation for the survey’s priority science theme within New Messengers and New Physics. (NASEM 2021, 1–6)

This understanding of A&A, and by extension of A&A simulation, indispensably involves time series data and requires analysis of how a system develops over time. Temporal data feature prominently and crucially in contemporary A&A, for small, medium, and large A&A entities alike. Contemporary, data-driven A&A must synthesize vast quantities of evidence to analyze and draw inferences regarding the behavior of astronomical bodies. In so doing, these sciences are deeply engaged not merely in the taxonomy and composition of phenomena, but in dynamical modeling and explanation of the evolution of those phenomena over time.

4.1 The Nature of Temporal Data

Temporal data, both the content found in time series data and the structures created with time steps and timescales, have always played a foundational role in the practice of A&A (e.g., in measurements of the length of day and constellations), but formal development of analysis techniques coincided with the influx of new technological infrastructure, particularly more advanced telescopes, interferometers, and arrays (Scargle 1997). Nowadays, advanced statistical analysis of time series data is conducted using Fourier analysis, autoregressive modeling, Bayesian periodicity, and other techniques. Recent advances have also introduced parametric autoregressive modeling techniques to analyze astronomical light curves in order to accommodate irregular time series data sets (Feigelson et al. 2018). There are interesting questions connected to the epistemic status of individual analysis techniques, but I will bracket those for the forthcoming discussion. The important thing to note is that the contemporary analysis of time series data is now a complex, formalized, and multiplatform endeavor that often synthesizes data collected from numerous detectors and research teams. Simulation suites invoking empirical time series data have already been developed for upcoming and recently launched detectors, such as the JexoSim exoplanet transit simulation program for the James Webb Space Telescope (Sarkar et al. 2020).
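The paragraph above names Fourier analysis among the standard techniques and notes the problem of irregularly sampled data. As a hedged illustration (this specific method is not named in the text), the classical Lomb-Scargle periodogram, a Fourier-type tool designed for unevenly spaced observations, can recover the period of a periodic source. The function name, the synthetic data, and the frequency grid below are assumptions made for the sketch.

```python
import math
import random


def lomb_scargle(times, values, frequencies):
    """Classical Lomb-Scargle periodogram for unevenly sampled data.

    `frequencies` are in cycles per unit time. Each returned power is
    normalised by the sample variance of `values`.
    """
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / (n - 1)
    centred = [v - mean for v in values]
    powers = []
    for f in frequencies:
        w = 2 * math.pi * f
        # The offset tau makes the sine and cosine terms orthogonal
        # for this particular set of sampling times.
        tau = math.atan2(sum(math.sin(2 * w * t) for t in times),
                         sum(math.cos(2 * w * t) for t in times)) / (2 * w)
        c = [math.cos(w * (t - tau)) for t in times]
        s = [math.sin(w * (t - tau)) for t in times]
        yc = sum(y * ci for y, ci in zip(centred, c))
        ys = sum(y * si for y, si in zip(centred, s))
        cc = sum(ci * ci for ci in c)
        ss = sum(si * si for si in s)
        powers.append((yc * yc / cc + ys * ys / ss) / (2 * var))
    return powers


# Synthetic, irregularly sampled periodic source (period 2.5 time units) plus noise.
random.seed(1)
true_period = 2.5
times = sorted(random.uniform(0, 50) for _ in range(60))
values = [math.sin(2 * math.pi * t / true_period) + random.gauss(0, 0.3)
          for t in times]
freqs = [0.01 * k for k in range(5, 101)]  # 0.05 to 1.00 cycles per unit time
power = lomb_scargle(times, values, freqs)
best = freqs[power.index(max(power))]
print(f"recovered period: {1 / best:.2f}")
```

The pure-Python version trades speed for transparency; in practice one would more likely reach for a tested library implementation such as Astropy’s `LombScargle` class.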

Time series data can be broadly divided into two types: periodic and aperiodic. Periodic time series data describe the regular behavior of phenomena, such as the orbits of planets and pulsars. The periodicity of the system is a specific measurable value, necessary to classify the system. Aperiodic data pertain to phenomena that have a beginning and an end, such as the death of stars. Aperiodic time series data can be further divided into stochastic and transient data. The former describes phenomena like the accretion of stars and galaxies, which involves highly irregular and non-deterministic processes. Transient systems are those that undergo a discrete transformation or set of transformations from an initial to a final state (e.g., the thermonuclear death of stars and binary mergers). Most time series data collection concerns the measurement of light from a source, though the advent of multimessenger astronomy has broadened the category of sources to include neutrinos, gravitational waves, and cosmic rays. Time series data collection from a light source is almost always used to generate a light curve, which is a representation of the brightness of a source at or over specific times. As astronomer Simon Vaughan describes it,

…the astronomer is usually interested in recovering the deterministic component and testing models or estimating parameters, e.g., burst luminosity and decay time, rotation period, etc. In other cases, the ‘noise’ itself may represent the fundamentally stochastic output of an interesting physical system, as in turbulent accretion flows around black holes. Here, the astronomer is interested in comparing the statistical properties of the observations with those of different physical models, or using the intrinsic luminosity variations to ‘map out’ spatial structure. These projects, and many others, are completely dependent on time-series data and analysis; our only access to the properties of physical interest is through their signature on the time variability of the light we receive. (Vaughan 2013, 3)

Vaughan’s characterization of the way in which time series analysis is indispensable to astronomy underscores what I identify as the necessary condition for conceptual experimentation on evolving systems: it is impossible to generate a representation that is faithful to the nature of an evolving system without the introduction of temporal data.³ This data also requires a different collection and interpretation methodology than other aspects of empirical representation. It must be further synthesized into a meaningful variable of the system (e.g., a light curve, leading to a brightness or luminosity estimate), that is, an (at least) two-dimensional quantity rather than a one-dimensional one (e.g., solar mass).

One of the principal goals in the collection of time series data is the generation of a timescale, which is a numerical representation of the rate at which a type of process occurs. For example, in order to have a comprehensive understanding of stellar evolution, it is not enough to simply observe the temporal evolution of individual stars; that is one step of many. A theory of stellar evolution will explain the rate at which entities that conform to certain constraints (i.e., types of stars) evolve; it is applicable to all tokens of a type. The generation of a timescale thus permits future classification of other systems.
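To make the notion of a timescale concrete, the minimal sketch below estimates one: an e-folding decay time from the declining light curve of a single transient, one of the parameters Vaughan mentions. It assumes, purely for illustration, that the decline is exponential, F(t) = F₀ e^(−t/τ); the function name and the data are hypothetical, and real light curves would require noise models and more careful model selection.

```python
import math


def decay_timescale(times, fluxes):
    """Estimate an e-folding decay time tau, assuming F(t) = F0 * exp(-t / tau).

    Taking logs gives ln F = ln F0 - t / tau, a straight line in t, so an
    ordinary least-squares fit of ln F against t yields slope -1/tau.
    """
    logs = [math.log(f) for f in fluxes]
    n = len(times)
    t_mean = sum(times) / n
    l_mean = sum(logs) / n
    slope = (sum((t - t_mean) * (l - l_mean) for t, l in zip(times, logs))
             / sum((t - t_mean) ** 2 for t in times))
    return -1.0 / slope


# Hypothetical, noise-free decline phase of a transient (days, arbitrary flux units).
days = [0, 5, 10, 20, 30, 45, 60]
flux = [100.0 * math.exp(-d / 25.0) for d in days]
tau = decay_timescale(days, flux)
print(round(tau, 6))  # 25.0 for this noise-free input
```

A single estimate like this characterizes one token; it is only when such rates are gathered across many tokens of a type that a timescale for the type, usable for classifying other systems, could be established.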

Already one might have anticipated the way in which I seek to characterize the role of temporality in simulation. The philosophically common view of simulations (as largely formal exercises that do not attach to the target system in any way substantial enough to qualify as representations) risks conflating the differences between time series data and timescales. Timescales are formal, mathematical constraints on what counts as an instance of a type of temporally encoded process. Time series data are not: they are empirical data. This is because time series data are inherently observational, and thus representative of the target system. It is in this way that temporal data operates as a specific subclass of representation, one that is essential for an accurate representation of an evolving system. This kind of representation is often glossed over or otherwise neglected in philosophical treatments of representation, whereas it is actually a necessary condition for accurately representing a system that changes over time. Moreover, the ways in which temporal data is collected, synthesized, and instantiated in a simulation are complex and varied and cannot be subsumed under the umbrella of other means of instantiating empirical data in models.

4.2 Examples

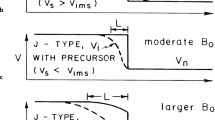

Aperiodic timescales for transient phenomena range from the very long (Gyr or even longer, if we consider the cosmological scale) to the very short (days to weeks, in the case of supernovae). A prime example of an aperiodic simulation study is that of supernovae, the explosive deaths of stars. A set of recent studies using 2-D and 3-D simulations of neutrino-driven supernovae makes substantial use of time series data in order to draw conclusions about the dynamical evolution of this type of stellar process (Scheck et al. 2008; Melson et al. 2015). In Scheck et al.’s first study, both high- and low-energy (neutrino velocity) models of stellar accretion and subsequent core-collapse explosion are integrated into the simulations, each of which features different light curve variables (Scheck et al. 2008, 970). Light curves (again, the numerical representation of brightness variation over time) are statistically represented as time series in simulations. These light curves are determined from a computation of the magnitude of the target object, in this case a star of 9.6 M☉, plotted as a function of time (see Fig. 11.1). Scheck et al.’s results provide early indication that a “hydrodynamic kick mechanism” initiates ~1 second after the core bounce of the supernova. Interestingly, they “unambiguously” state that this observation yields a specific testable follow-up prediction, that the neutron star velocity is measured against the direction of the outflow of supernova ejecta (Scheck et al. 2008, 985).

Fig. 11.1 Scheck et al.’s plot of the advection time \( \tau_{\mathrm{adv}}^{\nabla} \) of shock-dispersed fluid and the acoustic time \( \tau_{\mathrm{sound}} \) against the oscillation period \( \tau_{\mathrm{osc}} \) for six of the models within the simulation. (Credit: Scheck et al., Astronomy and Astrophysics, vol. 477, 931, 2008, reproduced with permission © ESO)
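The description of light curves above as magnitudes computed as a function of time can be illustrated with the standard astronomical magnitude relation, m = −2.5 log₁₀(F / F_ref). The sketch below is a generic illustration, not Scheck et al.’s pipeline; the function name, reference flux, and sample values are hypothetical.

```python
import math


def to_magnitudes(fluxes, reference_flux):
    """Convert fluxes to magnitudes relative to a reference flux.

    Uses the standard relation m = -2.5 * log10(F / F_ref): a source at the
    reference flux has magnitude 0, and brighter sources have smaller
    (more negative) magnitudes.
    """
    return [-2.5 * math.log10(f / reference_flux) for f in fluxes]


# Hypothetical samples: a rise by a factor of 100 in flux, then a partial decline.
times = [0.0, 1.0, 2.0, 3.0]
fluxes = [1.0, 10.0, 100.0, 10.0]

# The light curve pairs each time with a magnitude: a two-dimensional
# series rather than a single scalar value.
light_curve = list(zip(times, to_magnitudes(fluxes, reference_flux=1.0)))
print(light_curve)
```

On this scale a factor of 100 in flux corresponds to a difference of 5 magnitudes, which is why the brightest sample above sits 5 magnitudes below (that is, brighter than) the first.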

Melson et al. build upon Scheck et al.’s 2-D simulation, importing its physical assumptions, to generate a more advanced 3-D simulation. Their study, using the same instantiation of light curve variation, shows that the post-shock turbulence of neutrino-driven supernovae produces measurable effects of “reduced mass accretion rate, lower infall velocities, and a smaller surface filling factor of convective downdrafts” (Melson et al. 2015, 1). These studies demonstrate the pivotal role of time series data, themselves derived from empirical measurement, in the construction of simulations. Moreover, in this case specific conclusions about the target systems are drawn. Thus, it seems clear that in the A&A context, simulations that use time series are being treated as a kind of experiment.

Yet another aperiodic case, this time at the even larger scale of galaxy evolution, illustrates the indispensability of time series data. Dubois et al. developed a large-scale, zoom-in hydrodynamical simulation of galaxy evolution, NEWHORIZON, using the adaptive mesh refinement code RAMSES. Time series data taken from observations and synthesized with the theory of star formation rates (SFR) are used to inform empirical parameters for thermal pressure support (\( \alpha_{\mathrm{vir}} \)) and the instantaneous velocity dispersion (\( \sigma_g \)). They also employ a time-integrated value for cosmic SFR density, which they generated by taking the individual masses of all star particles and summing over them. They then compare this value to observational measurements of cosmic SFR density, demonstrating the use of time series data to constrain temporal representation in the simulation (Dubois et al. 2021; see Fig. 11.2). Importantly, they do flag uncertainties in these rates, but point out that these are consistent with uncertainties in observations due to cosmic variance.
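The summation described above can be illustrated with a minimal sketch of the general procedure; it is not Dubois et al.’s code. Star particles, each assumed to carry a formation time and an initial mass, are binned by formation time, and the mass formed in each bin is divided by the bin width and the simulated volume to give a star formation rate density. The function name, units, volume, and particle data are hypothetical.

```python
from collections import defaultdict


def cosmic_sfr_density(star_particles, box_volume_mpc3, bin_width_yr):
    """Bin star particles by formation time and return SFR density per bin.

    star_particles: iterable of (formation_time_yr, initial_mass_msun) pairs.
    Returns {bin_start_yr: SFR density in Msun / yr / Mpc^3}: the summed mass
    formed in each bin, divided by the bin width and the simulated volume.
    """
    mass_per_bin = defaultdict(float)
    for t_form, mass in star_particles:
        bin_start = (t_form // bin_width_yr) * bin_width_yr
        mass_per_bin[bin_start] += mass
    return {start: m / (bin_width_yr * box_volume_mpc3)
            for start, m in sorted(mass_per_bin.items())}


# Hypothetical star particles: (formation time in yr, initial mass in Msun).
particles = [(0.2e9, 1e5), (0.7e9, 1e5), (1.1e9, 2e5), (1.9e9, 2e5)]
sfrd = cosmic_sfr_density(particles, box_volume_mpc3=1000.0, bin_width_yr=1e9)
print(sfrd)
```

The output is one value per time bin, i.e. a simulated SFR-density history of the kind that can then be set against observational estimates.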

Dubois et al. unambiguously laud the ability of simulations to improve theoretical understanding of galactic dynamics:

Therefore, cosmological simulations are now a key tool in this theoretical understanding by allowing us to track the anisotropic non-linear cosmic accretion…in a self-consistent fashion. (Dubois et al. 2021, 1)

… large-scale hydrodynamical cosmological simulations with box sizes of ∼50–300 Mpc have made a significant step towards a more complete understanding of the various mechanisms (accretion, ejection, and mergers) involved in the formation and evolution of galaxies… (Dubois et al. 2021, 3)

The NEWHORIZON simulation constitutes another case of the indispensability of time series data, this time both to inform and to generate content in the simulation and to check the validity of the simulation values afterward. The tasks that Dubois et al. describe the simulation as performing fall squarely within those Franklin describes as the province of conceptual experiments: the testing and refinement of theory.

4.3 Challenges

One might argue that this view only works if one grants that simulations count as measurements, i.e., if they attach to the target system rather than function as exercises in detached theorizing. Guala has suggested that this kind of representation obtains when the experimental constituents are made of the same stuff as the target system (Guala 2006). But Parker and Morrison have emphasized that this requirement is too strong (Parker 2009; Morrison 2009). I suggest that the situation for simulations with significant amounts of temporal data is more complex. Simulations of the kind most commonly used in A&A represent and attach to the world by instantiating substantial amounts of observational temporal data; in short, they are heavily constrained by observational data. As Morrison and Parker point out, simulations are almost never isolated computer exercises; rather, they instantiate well-supported data models and are embedded in larger studies that include the sum of available observation and analysis on the research question at hand (Parker 2009; Morrison 2009). They are observationally constrained, which means that they effectively represent the target system in such a way that the system is instantiated in the simulation. Even more decisive is the way in which a simulation that accurately represents the temporal evolution or periodicity of a system instantiates one of that system’s most important properties: its temporal features. The use of temporal data, as I have outlined above and as Vaughan (2013) explains, is a necessary condition for representing many astronomical systems. Insofar as such representation does not cut any corners that would have been covered in a laboratory setting, there is no real philosophical difference with respect to materiality between the representations found in simulations and those that could be produced in an ideal laboratory setting.
In sum, simulations of dynamical systems do attach to the world, and a major part of how they do so is their instantiation of temporal data.

One might also object to my characterization of temporal data by arguing that phenomena do not have any intrinsic timescale, and that such constraints are instead placed on them by our analysis (Griesemer and Yamashita 2005). This argument supposes that temporal constraints are the product of an observer, and thus an artifact of scientific practice rather than an inherent feature of the phenomena that can be used to ground claims about the representational relationship between models and target systems. It is straightforwardly true that phenomena do not have inherent timescales. A timescale, however, is the numerical rate of change of a given system, synthesized from time series data: it is the formal constraint distilled from multiple sets of time series data. It can be understood as the formula that permits identification of future tokens as members of a type (e.g., if repeated observations of specific events such as kilonovae show an emission period of roughly 2 weeks, then we can estimate the timescale for this kind of event to be 2 weeks, and that window may be used to help classify future events). A timescale is thus quite obviously a constraint on phenomena imposed by the observer. The time series data from which it is distilled, by contrast, are records of measured change in particular systems. The worry expressed by Griesemer and Yamashita fails to adequately distinguish between time series data sets and the timescales created from them.
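The distinction between time series and timescale can be illustrated with a toy calculation. The Python sketch below assumes that each observed event is recorded as a light curve (times in days, fluxes in arbitrary units), defines an event’s duration as the span over which flux stays at or above a chosen fraction of its peak, takes the median duration across events as the type’s timescale, and uses a tolerance window around that value to flag candidate members of the type. The threshold, tolerance, function names, and data are all hypothetical choices made for illustration; the point is only that the timescale is a summary constructed by the analyst from the time series, not a quantity recorded in them.

```python
from statistics import median


def duration_above(times_days, fluxes, fraction=0.1):
    """Time span (days) over which the flux is at or above `fraction` of its peak."""
    threshold = fraction * max(fluxes)
    above = [t for t, f in zip(times_days, fluxes) if f >= threshold]
    return max(above) - min(above)


def characteristic_timescale(light_curves, fraction=0.1):
    """Median duration across several observed events taken to be of one type."""
    return median([duration_above(t, f, fraction) for t, f in light_curves])


def matches_type(times_days, fluxes, timescale, tolerance=0.5, fraction=0.1):
    """Flag a new event whose duration lies within +/- tolerance * timescale."""
    duration = duration_above(times_days, fluxes, fraction)
    return abs(duration - timescale) <= tolerance * timescale


# Toy light curves (time in days, flux in arbitrary units) for three past events.
past_events = [
    ([0, 5, 10, 14, 20], [1.5, 10, 5, 2, 0.5]),
    ([0, 7, 12, 16], [2, 8, 3, 0.1]),
    ([0, 3, 16, 18], [5, 20, 3, 1]),
]
tau = characteristic_timescale(past_events)  # median of 14, 12, and 16 days
print(tau, matches_type([0, 6, 13], [4, 9, 1], tau))
```

Changing the threshold or tolerance changes the timescale and the classification window while leaving the underlying light curves untouched, which is the sense in which the timescale, but not the data, is imposed by the observer.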

The more interesting question is whether temporal order is inherent in phenomena. This is a complicated question, one that requires addressing ongoing debates about the irreducibility of the arrow of time in evolving phenomena. I would counter, however, that the linear temporal evolution of systems is not reducible in many sciences, so it is not obvious why it would present a problem for the representational adequacy of simulations of evolving systems. It is a well-known problem that the phenomenal laws of thermodynamics cannot be stripped of their linear directionality.

4.4 Discussion

A significant challenge to the integration of aperiodic temporal data in A&A simulations comes from what Jacquart and Peebles have discussed as “the snapshot” view, in which data are assembled from multiple entities in an attempt to reconstruct a picture of a single type of entity (Jacquart 2020; Peebles 2020). Researchers often cannot observe aperiodic temporal changes in the same (i.e., same token) object over time, and must instead compile “snapshots” of evolutionary processes from different objects at different stages of the process. This kind of piecemeal assembly is required for models and simulations of most transient events, planetary and stellar evolution, gas clouds, etc. The reason why this problem permeates aperiodic temporal modeling is straightforward: almost all A&A systems evolve over timescales so long that we could never hope to observe even a substantial part of them over the whole of human history, much less observe them entirely during normal research programs. Stars and planets evolve over millions to billions of years, and so we must resort to discontinuous means to assemble a picture of their continuous evolution (Jacquart 2020). Jacquart explains that if one assumes that continuity of a target object of study over time is necessary for true experimentation, then the “cosmic experiments” performed in A&A are problematic (ibid.).

This problem is partly mitigated by the wide range of A&A entities at our viewing disposal, such that we can and do assemble largely complete pictures of temporal evolution from disparate entities. Other aperiodic events have much shorter timescales (e.g., ~2 weeks for the final stage of a neutron star merger). These short-timescale events do not struggle with the snapshot problem because they can be observed continuously in their entirety. But there is still a large catalogue of objects for which our temporal pictures are incomplete. Moreover, as Jacquart has pointed out, there are serious epistemic concerns with the snapshot approach to observing temporal evolution: it is not a given that the assemblage of data from different tokens at different stages of development, even if they are of the same type, can stand in for a single continuous observation (ibid.).

The first thing to note about this problem is that it is not unique to A&A. Similar problems are found in climate science, geology, and paleontology. To the extent that a problem pervades many sciences, there is prima facie reason to reject the suggestion that it casts doubt on the experimental status of one particular science. More directly to the challenge, I think that the way we overcome this kind of problem lies in the practice of using simulations. We simulate the temporal evolution as it would appear if we were able to watch the real system in time lapse (recall Graham et al.’s description of time-domain astronomy as “digital cinematography”; Graham et al. 2012, 374). This process does not need to be perfect to count as experimentation. Evidence produced by a simulation need not be definitive or ineluctable to count as evidence from experiment. Very few researchers assume that simulation outputs are the final word on any research question. Rather, they often hope that their simulation results will be further corroborated by empirical evidence in the future, whether by additional simulations constructed from different data sets (a robustness condition) or by different types of empirical evidence (a variety-of-evidence condition). Their reason for optimism is the same reason we would desire robust corroboration from traditional experiments. The challenge with these simulations, however, is that the needed independent data are often either unavailable or drawn from the same data set used to construct the simulation, which creates a circularity problem for assessing their empirical accuracy. The potential for circularity can be ameliorated by “splitting” data sets, so that some portions are used for model construction and other portions are kept separate for subsequent testing (Lloyd 2012, 396).
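A minimal Python sketch of the splitting strategy, assuming a single time series and a simple polynomial trend model: the earlier portion of the series is used to fit the model, and the later, held-out portion is used only to test it. The chronological split, the 70/30 proportion, the polynomial model, the function name, and the synthetic data are illustrative assumptions, not Lloyd’s procedure or any particular research group’s method.

```python
import numpy as np


def split_fit_validate(times, values, train_fraction=0.7, degree=1):
    """Fit a polynomial trend to the earlier part of a series and test it on the rest.

    Returns the fitted coefficients (highest power first, as np.polyfit does)
    and the root-mean-square error on the held-out later segment.
    """
    times = np.asarray(times, dtype=float)
    values = np.asarray(values, dtype=float)
    n_train = int(len(times) * train_fraction)
    if n_train <= degree or n_train >= len(times):
        raise ValueError("need more training points than the degree and at least one held-out point")
    # Construction: only the earlier segment informs the model.
    coeffs = np.polyfit(times[:n_train], values[:n_train], degree)
    # Testing: the held-out later segment is used only for evaluation.
    predicted = np.polyval(coeffs, times[n_train:])
    rms = float(np.sqrt(np.mean((predicted - values[n_train:]) ** 2)))
    return coeffs, rms


rng = np.random.default_rng(0)
t = np.arange(50.0)
y = 0.3 * t + 2.0 + rng.normal(0.0, 0.5, size=t.size)  # synthetic, not real data
coeffs, rms = split_fit_validate(t, y)
print(coeffs, rms)
```

Splitting chronologically, rather than at random, keeps later observations from leaking into the construction of a model that is then judged on them, which is the circularity the splitting strategy is meant to avoid.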

But this does not mean that simulations are in principle qualitatively different from, and inferior to, traditional experiments in the way often argued. Rather, it means that there are practical constraints limiting the use of simulations as ideal representations of the target system. These same practical constraints exist in the context of many, if not most, traditional experiments. Because this is a practical constraint, the situation might be expected to improve as more powerful instruments come online (the recently launched James Webb Space Telescope, and the upcoming Vera C. Rubin Observatory (formerly the Large Synoptic Survey Telescope), Nancy Grace Roman Space Telescope, LISA, etc.).

A further but related challenge to the understanding of simulations as conceptual experiments may target the epistemic output of simulations when they are used to test models or aspects of models. Can the output of a simulation provide a definitive answer to a research question, especially when that question requires an empirical, not merely logical, answer? Wilson stops short of considering the empirical composition of simulations sufficient to ground a view of them as fully experimental, largely because in many simulations there is insufficient data to fully represent the system (Wilson 2017). Wilson notes, drawing on Winsberg (2010), that there are cases in which much of the output data collected from the simulation synthesizes information already possessed, so that the epistemic contribution of the simulation lies in its ability to illuminate hidden relationships in the data. But this problem is not insurmountable: simulations can deliver empirical answers to research questions when they are constructed with a sufficient amount of empirical data.

This view of simulation construction and of our confidence in simulation results coheres with what Elisabeth Lloyd has called the “complex empiricism” approach to simulations and models. Rather than being assessed through a one-to-one, veridical testing relationship between a single assumption and some piece of “raw” data (what Lloyd calls the “direct empiricist” approach, which she identifies as a descendant of the Hypothetico-Deductive view of explanation), simulations are more appropriately evaluated as complex entities constructed from a body of theoretical assumptions, background empirical evidence, and informed decision-making:

This updated view of model evaluation focuses on independent avenues of theoretical and observational support for various aspects of the simulation models, as well as the accumulation of a variety of evidence for them…Additionally, the provision of independent observational evidence for various aspects and assumptions of the models—such as measuring parameter values and relations between variables—increases the credibility of claims made on behalf of models. This support can go beyond or replace the provision of empirical support that might otherwise be provided by matching the predictions with observational datasets. There are, in other words, many more ways to empirically support a model than through predictive success of a single variable. (Lloyd 2012, 393–396)

Under this view, simulations attach to the world they are intended to represent through a complex network of background evidence. Representation in simulations is not the unedited copying of complete pictures of the empirical world, but rather the complicated process of constructing an empirically informed patchwork from pieces. With this view of simulation in mind, the snapshot problem becomes an understood reality of modeling practice that can be addressed and worked around, rather than an insurmountable epistemic shortcoming.

One might still argue that the empirical data instantiated in these simulations is still piecemeal and therefore necessarily discontinuous. My answer here requires the recognition of the importance of regularity and law-governedness in A&A. We are often able to generalize beyond the empirical background data in a simulation to piece together discontinuous bits of new data because we are dealing with physical phenomena at (usually, and hopefully) well-understood scales and governed by background theory that has already earned confidence. This point is also underscored by Lloyd in the context of climate science, in which,

…the derivation of aspects of model structure from physical laws adds to the modelers’ convictions that some of the basic structure, proportions, and relations instantiated in models are fundamentally correct, and are unlikely to be challenged or undermined by datasets that themselves embody potentially arbitrary assumptions. (Lloyd 2012, 396)

In these cases, we can feel confident in generalizing outwards because we have reasonable theoretical grounds to assume certain regularities. Most importantly, this practice can help us make the epistemic jump from shorter to longer timescales, provided that we have sufficient background information to assume a continuity of physical constraints between systems. If we are able to continuously observe a shorter timescale event, like a star merger, in its entirety then we may proceed with more confidence in constructing simulations of longer timescale events, such as the prior evolution of those stars and their journey toward merger. While the latter will require the piecemeal assembly of the snapshot view, the primary worry associated with this methodology, that we may choose the wrong snapshots, can be ameliorated. We can generalize from well-understood physics of short timescale events to long timescale events to get around the epistemic uncertainty of the snapshot problem. Simulations that can overcome the snapshot hurdle by instantiating enough temporal data can therefore serve as conceptual experiments, if we are confident enough in the means by which their temporal data was assembled to license generalization to other cases. This is the condition for conceptual experimentation Franklin sets out (that theory and models may be tested), and I submit that the role played by temporal data in A&A simulations is sometimes representationally robust enough to meet it.

Importantly, though, this response does not automatically apply to less well-understood scales, such as the very large (cosmological) and the very small (quantum).⁴ For those cases, I suggest that a possible remedy once again lies in the use and instantiation of temporal data in simulation. It is not the case that only deterministic or simplified models of temporal data are used for simulation. Simulations can be, and are, conducted using stochastic models of temporal data (e.g., Parkes Pulsar Timing Array data for pulsars, as seen in Reardon et al. 2021). It is these cases that suggest a possible way forward for more accurate and better-understood representations in A&A simulations.
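By way of contrast with deterministic models, the Python sketch below generates a stochastic time series from a first-order autoregressive, AR(1), process, one of the simplest stochastic models of temporal data (autoregressive methods for time-domain astronomy are surveyed in Feigelson et al. 2018). It is illustrative only: the pulsar timing analysis in Reardon et al. (2021) relies on different and more elaborate noise models, and the function name and parameter values here are arbitrary assumptions.

```python
import numpy as np


def simulate_ar1(n_steps, phi, sigma, seed=None):
    """Simulate a stationary AR(1) series x_t = phi * x_{t-1} + eps_t.

    The shocks eps_t are independent Gaussian draws with standard deviation `sigma`.
    """
    if not -1.0 < phi < 1.0:
        raise ValueError("phi must lie strictly between -1 and 1 for stationarity")
    rng = np.random.default_rng(seed)
    eps = rng.normal(0.0, sigma, size=n_steps)  # eps[0] is not used
    x = np.empty(n_steps)
    # Start from the stationary distribution, whose variance is sigma^2 / (1 - phi^2).
    x[0] = rng.normal(0.0, sigma / np.sqrt(1.0 - phi**2))
    for t in range(1, n_steps):
        x[t] = phi * x[t - 1] + eps[t]
    return x


series = simulate_ar1(n_steps=1000, phi=0.8, sigma=1.0, seed=42)
# Different seeds give different realizations, but the lag-1 autocorrelation should lie near phi.
print(np.corrcoef(series[:-1], series[1:])[0, 1])
```

Unlike a deterministic model, such a process does not fix a single trajectory; what it specifies are statistical regularities (here, the correlation between successive values) that any realization should approximately share.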

5 Conclusion

In the preceding discussion I have briefly reviewed the history of the simulation/experiment debate and argued that many of the objections to simulations as experiments do not apply to dynamical simulations of temporal systems in A&A. These simulations, because they instantiate a significant amount of empirical temporal data and achieve a higher level of representational adequacy, can serve as conceptual experiments in the sense discussed by Franklin.

Notes

- 1.

Parker eschews talk of “systems” and “target systems” in her discussion because she wants to avoid an account where what defines a target of experiment is dependent upon the intent of the researcher (Parker 2009, 487). I retain this terminology because it is more consistent with that used in scientific literature.

- 2.

Ricarte and Natarajan have shown that existing models of black hole seed formation designed to predict electromagnetic detection signatures are actually unable to distinguish seed signatures from effects of background assumptions regarding accretion mechanics. Models predicting gravitational wave signatures fare better because they do not require accretion assumptions, but they fall victim to highly uncertain dynamics (Ricarte and Natarajan 2018).

- 3.

It is possible and sometimes desirable to generate fake or otherwise simplified time series data sets. This practice may be particularly useful (or necessary) when we are confronted with processes that cannot be observed in their entirety and are part of systems that we do not yet understand well enough to extrapolate from background theory. These data sets, when they are largely randomly generated rather than empirically derived, should not be understood to play the representational role I discuss here.

- 4.

Representing systems with stochastic or indeterminate properties must also involve added complexity.

References

Anderl, Sibylle. 2016. Astronomy and Astrophysics. In The Oxford Handbook of Philosophy of Science, ed. Paul Humphreys. Oxford: Oxford University Press.

———. 2018. Simplicity and Simplification in Astrophysical Modeling. Philosophy of Science 85: 819–831.

Danks, David, and Sergey Plis. 2019. Amalgamating Evidence of Dynamics. Synthese 196 (8): 3213–3230.

De Baerdemaeker, Siska. 2022. Method-Driven Experiments and the Search for Dark Matter. Philosophy of Science 88 (1): 124–144.

De Baerdemaeker, Siska, and Nora Mills Boyd. 2020. Jump Ship, Shift Gears, or Just Keep on Chugging: Assessing the Responses to Tensions Between Theory and Evidence in Contemporary Cosmology. Studies in History and Philosophy of Science Part B: Studies in History and Philosophy of Modern Physics 72: 205–216.

Dubois, Yohan, et al. 2021. Introducing the NewHorizon Simulation: Galaxy Properties with Resolved Internal Dynamics Across Cosmic Time. Astronomy and Astrophysics 651: A109.

Elder, J. Forthcoming. Theory Testing in Gravitational-Wave Astrophysics. In Philosophy of Astrophysics: Stars, Simulations, and the Struggle to Determine What is Out There, ed. Nora Mills Boyd, Siska De Baerdemaeker, Vera Matarese, and Kevin Heng. Synthese Library.

Feigelson, Eric D., G. Jogesh Babu, and Gabriel A. Caceres. 2018. Autoregressive Time Series Methods for Time Domain Astronomy. Frontiers in Physics 6: 80. https://doi.org/10.3389/fphy.2018.00080.

Franklin, Allan. 1981. What Makes a ‘Good’ Experiment? British Journal for the Philosophy of Science 32 (4): 367–374.

———. 2016. What Makes a Good Experiment? Pittsburgh: University of Pittsburgh Press.

Gelfert, Axel. 2009. Rigorous Results, Cross-Model Justification, and the Transfer of Empirical Warrant: The Case of Many-Body Models in Physics. Synthese 169 (3): 497–519.

Graham, M.J., S.G. Djorgovski, A. Mahabal, et al. 2012. Data Challenges of Time Domain Astronomy. Distributed and Parallel Databases 30: 371–384.

Griesemer, James, and G. Yamashita. 2005. Zeitmanagement bei Modellsystemen: drei Beispiele aus der Evolutionsbiologie. In Lebendige, ed. H. Schmidgen. Berlin: Kulturverlag Kadmos.

Guala, Francesco. 2006. The Methodology of Experimental Economics. Cambridge: Cambridge University Press.

Hacking, Ian. 1989. Extragalactic Reality: The Case of Gravitational Lensing. Philosophy of Science 56 (4): 555–581.

Jacquart, Melissa. 2020. Observations, Simulations, and Reasoning in Astrophysics. Philosophy of Science 87 (5): 1209–1220.

Kuhn, Thomas. 1957. The Copernican Revolution: Planetary Astronomy in the Development of Western Thought. Cambridge: Harvard University Press.

———. 1962/1970. The Structure of Scientific Revolutions. Chicago: University of Chicago Press.

Lloyd, Elisabeth A. 2012. The Role of ‘Complex’ Empiricism in the Debates about Satellite Data and Climate Models. Studies in History and Philosophy of Science 43: 390–401.

Melson, Tobias, Hans-Thomas Janka, and Andreas Marek. 2015. Neutrino-Driven Supernova of a Low-Mass Iron-Core Progenitor Boosted by Three-Dimensional Turbulent Convection. The Astrophysical Journal Letters 801 (2): L24.

Morgan, Mary S. 2005. Experiments versus Models: New Phenomena, Inference and Surprise. Journal of Economic Methodology 12 (2): 317–329.

Morrison, Margaret. 2009. Models, Measurement, and Computer Simulation: The Changing Face of Experimentation. Philosophical Studies 143 (1): 33–57.

National Academies of Sciences, Engineering, and Medicine. 2021. Pathways to Discovery in Astronomy and Astrophysics for the 2020s. Washington, DC: The National Academies Press. https://doi.org/10.17226/26141.

Parker, Wendy. 2009. Does Matter Really Matter? Computer Simulations, Experiments, and Materiality. Synthese 169 (3): 483–496.

Peebles, P.J.E. 2020. Cosmology’s Century. Princeton University Press.

Reardon, D., et al. 2021. The Parkes Pulsar Timing Array Second Data Release: Timing Analysis. Monthly Notices of the Royal Astronomical Society stab1990.

Reiss, Julian. 2015. Causation, Evidence, and Inference. Routledge.

Ricarte, Angelo, and Priyamvada Natarajan. 2018. The Observational Signatures of Supermassive Black Hole Seeds. MNRAS 481 (3): 3278–3292.

Roush, Sherrilyn. 2017. The Epistemic Superiority of Experiment to Simulation. Synthese 195: 4883–4906.

Sandell, Michelle. 2010. Astronomy and Experimentation. Techné 14 (3).

Sarkar, Subhajit, Nikku Madhusudhan, and Andreas Papageorgiou. 2020. JexoSim: a Time-Domain Simulator of Exoplanet Transit Spectroscopy with JWST. MNRAS 491: 378–397.

Scargle, J.D. 1997. Astronomical Time Series Analysis. In Astronomical Time Series, ed. Dan Maoz, Amiel Sternberg, and Elia M. Leibowitz, 1–12. Springer Netherlands.

Scheck, L., K. Kifonidis, H. Janka, and E. Müller. 2008. Multidimensional Supernova Simulations with Approximative Neutrino Transport. Astronomy & Astrophysics 457: 963–986.

Smeenk, Chris. 2013. Philosophy of Cosmology. In The Oxford Handbook of Philosophy of Physics, ed. Robert Batterman. Oxford: Oxford University Press.

Vaughan, Simon. 2013. Random Time Series in Astronomy. Philosophical Transactions of the Royal Society A 371: 20110549. https://doi.org/10.1098/rsta.2011.0549.

Wilson, Katia. 2016. Astrophysics in Simulacrum: The Epistemological Role of Computer Simulations in Dark Matter Studies. PhD thesis. University of Melbourne.

———. 2017. The Case of the Missing Satellites. Synthese 145.

Winsberg, E. 2010. Science in the Age of Computer Simulation. Chicago: University of Chicago Press.

Woodward, James F. 2003. Making Things Happen: A Theory of Causal Explanation. New York: Oxford University Press.

———. 2008. Causation and manipulability. Stanford Encyclopedia of Philosophy.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Cite this chapter

Abelson, S.S. (2023). Simulation and Experiment Revisited: Temporal Data in Astronomy and Astrophysics. In: Mills Boyd, N., De Baerdemaeker, S., Heng, K., Matarese, V. (eds) Philosophy of Astrophysics. Synthese Library, vol 472. Springer, Cham. https://doi.org/10.1007/978-3-031-26618-8_11

DOI: https://doi.org/10.1007/978-3-031-26618-8_11

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-26617-1

Online ISBN: 978-3-031-26618-8

eBook Packages: Religion and Philosophy, Philosophy and Religion (R0)