Abstract

Understanding how AI and robotics impact the workplace is fundamental for understanding the broader impact of these technologies on the economy and society. It can also help in developing realistic scenarios about how jobs and skill demand will be redefined in the next decades and how education systems should evolve in response. This chapter provides a literature review of studies that aim at measuring the extent to which AI and robotics can automate work. The chapter presents five assessment approaches: 1) an approach that focuses on occupational tasks and analyzes whether these tasks can be automated; 2) an approach that draws on information from patents to assess computer capabilities; 3) indicators that use AI-related job postings as a proxy for AI deployment in firms; 4) measures relying on benchmarks from computer science; and 5) an approach that compares computer capabilities to human skills using standardized tests developed for humans. The chapter discusses the differences between these measurement approaches and assesses their strengths and weaknesses. It concludes by formulating recommendations for future work.

Ms Staneva prepared the chapter included in this book in her personal capacity. The opinions expressed and arguments employed herein do not necessarily reflect the official views of the member countries of the OECD.

Mr Elliott prepared the chapter included in this book in his personal capacity. The opinions expressed and arguments employed herein do not necessarily reflect the official views of the member countries of the OECD.

1 Introduction

In the past, three major technological breakthroughs have ushered in industrial revolutions by enabling mass production, accelerating growth and shifting employment from agriculture to manufacturing and later to services: the introduction of steam-powered mechanical manufacturing in the 18th century; the progress in electrical engineering in the 19th century; and the invention of the computer in the 20th century. It is widely believed that artificial intelligence and robotics (hereafter jointly referred to as AI) resemble these technologies in their potential to transform work and the economy. Like these technologies, AI and robotics are seen as “general-purpose technologies” that are applicable in different economic sectors and can raise productivity across vast parts of the economy [7]. Therefore, they may trigger a Fourth Industrial Revolution in the 21st century.

While there is general agreement that AI will transform the economy, the direction of this transformation is less clear. On the one hand, these technologies may lead to technological unemployment by replacing workers in the workplace. On the other hand, they can complement and augment workers’ capabilities and, with that, raise productivity, create new jobs and boost demand for labor. To unravel this impact, studies must first understand what AI and robotics can and cannot do. Knowing which AI and robotics capabilities are now available and how they relate to human skills can shed light on the work tasks that these technologies can take over from humans and the extent to which they can automate jobs in the future.

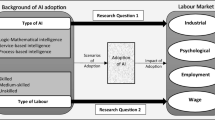

This chapter provides an overview of different methodological approaches to assessing the impact of AI and robotics on the workplace. These approaches aim at quantifying the degree to which occupations can be carried out by machines. Most of them stem from the social sciences and economics, but there are also important contributions from cognitive psychology and computer science. Some of this work has an exclusive focus on AI and robotics, e.g. [8, 12, 16]. Other studies view these technologies as part of a more general process of technological advancement, e.g. [4, 13].

Measuring the impact of AI on the workplace is fundamental for understanding its broader impact on the economy and society. It also helps in developing realistic scenarios about how jobs and skill demand will be redefined in the next decades. This should enable policy makers to adjust education and labor market systems to the challenges to come and to prepare today’s students and workers for the future.

The chapter proceeds as follows. The subsequent sections present five methodological approaches for assessing the impact of AI and robotics on work (Sects. 2, 3, 4, 5 and 6): a task-based approach; an approach that draws on information from patents; indicators based on AI-related job postings; measures relying on benchmarks; and a skills-based approach. The chapter discusses the differences between these measurement approaches and assesses their strengths and weaknesses (Sect. 7). It concludes by formulating recommendations for future work (Sect. 8).

2 The Task-Based Approach

In analyzing the extent to which AI can displace workers, many economic studies follow a so-called “task-based” approach: they focus on occupations and their corresponding tasks and analyze whether these tasks can be automated. This way they quantify the share of tasks within an occupation that can be performed by computers. This analysis typically relies on expert judgement or, sometimes, on the authors’ own notion of what tasks computers can perform. The main goal of this literature is to assess the impact of automation on the economy. To this end, studies map the information on occupations’ automatability to micro-level labor market data and study employment and wage levels in occupations and industries at high risk of automation.

The “task-based” approach has its origin in the work of Autor, Levy and Murnane [4]. The authors assume that computers can replace workers in routine cognitive and manual tasks. The reason is that these tasks follow exact repetitive procedures that can be easily codified. By contrast, non-routine tasks are assumed to be hard to formalize and automate because they are more tacit and inexplicable. To measure automatability, Autor, Levy and Murnane [4] use several variables from the U.S. Department of Labor’s Dictionary of Occupational Titles (DOT) taxonomy. They categorize tasks that require direction, control, and planning of activities, or quantitative reasoning as non-routine and, thus, non-automatable. Tasks that require workers to precisely follow set limits, tolerances, or standards, or tasks that involve finger dexterity are labelled as routine and, thus, automatable.

Autor, Levy and Murnane [4] use their measures to explain changes in labor demand over time. They hypothesize that, as technology gets cheaper, employers will increasingly replace workers in routine tasks with machines. At the same time, the demand for workers in non-routine tasks will rise because, according to the model, the computerization of the workplace increases the demand for problem-solving, analytical and managerial tasks.

Frey and Osborne’s study [13] extends the approach of Autor, Levy and Murnane [4] to account for more recent technological advancements that have expanded the potential for work automation. Progress in AI and machine learning, in particular, has enabled the automation of many non-routine tasks, such as translation, disease diagnosis and driving, that were seen as ‘uncodifiable’ in Autor, Levy and Murnane’s framework.

Frey and Osborne’s [13] methodological approach to measure the automatability of the workplace follows three major steps:

- First, the authors define three types of work tasks that are not yet automatable: perception and manipulation tasks, such as interacting with objects in unstructured environments; creative intelligence tasks, such as developing novel ideas; and social intelligence tasks, such as negotiating. Frey and Osborne [13] operationalize these so-called bottlenecks to automation with O*NET, a widely used occupation taxonomy that systematically links occupations to tasks. Concretely, they draw on nine task-related variables from O*NET: the degree to which an occupation requires finger dexterity, manual dexterity and working in cramped spaces (as proxy for perception and manipulation tasks); the degree to which occupations require originality and knowledge of fine arts (as proxy for creative intelligence); and the extent to which occupations involve social perceptiveness, negotiation, persuasion, or assisting and caring for others (as measurements for social intelligence).

- Second, Frey and Osborne [13] assess the automatability of 70 of the 700 occupations in the O*NET database by drawing on expert judgment. Specifically, the authors provided computer experts with task descriptions of occupations in O*NET and asked them to classify occupations as either automatable or non-automatable based on this information.

- Third, the automatability of occupations derived from the expert assessments is modelled as a function of the nine O*NET variables that measure the bottlenecks to automation. The obtained estimates are used to predict the probability of automation of all 700 occupations in O*NET.
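The three steps can be illustrated with a small sketch. It is not the authors' implementation: the text above does not specify the statistical model, so a plain logistic regression fitted by gradient descent is swapped in here, and all occupation names, feature values and expert labels are invented for illustration. Each occupation carries three hypothetical bottleneck scores (one per bottleneck type) rather than the nine O*NET variables.

```python
import math

# Hypothetical toy data: bottleneck scores in [0, 1] for
# (perception/manipulation, creative intelligence, social intelligence).
occupations = {
    "data entry clerk": [0.1, 0.1, 0.2],
    "telemarketer":     [0.1, 0.2, 0.3],
    "surgeon":          [0.9, 0.6, 0.8],
    "choreographer":    [0.7, 0.9, 0.7],
    "cashier":          [0.3, 0.1, 0.3],
    "social worker":    [0.2, 0.5, 0.9],
}

# Step 2: hypothetical expert labels for a subset (1 = automatable).
expert_labels = {
    "data entry clerk": 1,
    "telemarketer": 1,
    "surgeon": 0,
    "choreographer": 0,
}

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(rows, labels, lr=0.5, epochs=2000):
    """Fit weights and bias by batch gradient descent on the log-loss."""
    n_features = len(rows[0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in zip(rows, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / len(rows) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(rows)
    return w, b

# Step 3: fit on the labelled subset, then predict for every occupation.
names = list(expert_labels)
weights, bias = fit_logistic(
    [occupations[n] for n in names],
    [expert_labels[n] for n in names],
)
automation_prob = {
    name: sigmoid(sum(wi * xi for wi, xi in zip(weights, x)) + bias)
    for name, x in occupations.items()
}
```

In this toy setting, the unlabelled "cashier" receives a higher predicted probability than the unlabelled "social worker" because its bottleneck scores are lower. The point of the sketch is only the structure: a small expert-labelled sample is extrapolated to all occupations through the bottleneck variables.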

The studies of Arntz, Gregory and Zierahn [3] and Nedelkoska and Quintini [19] introduce important improvements to Frey and Osborne’s approach. Instead of estimating the risk of automation at the level of occupations, they estimate the automatability of individual jobs. This accounts for the fact that jobs within the same occupation may differ in their task mix and, hence, in their proneness to automation. More precisely, the studies map the expert ratings on automatability from Frey and Osborne’s study [13] to micro-level data of the Survey of Adult Skills of the Programme for the International Assessment of Adult Competencies (PIAAC). The PIAAC data provide detailed information on the tasks that individuals perform in their jobs. The studies use this information to estimate the relationship between individual-level job tasks and automatability for the 70 occupations initially assessed by the experts. They then use the estimated parameters to infer the probability of automation of all jobs in the PIAAC sample.

Following this revised approach, the two studies estimate much smaller shares of jobs prone to automation than suggested by Frey and Osborne [13]. Arntz, Gregory and Zierahn [3] find that 9% of jobs in the United States are highly automatable, as opposed to a share of 47% estimated by Frey and Osborne [13]. Nedelkoska and Quintini [19] show that 14% of jobs in the economies represented in PIAAC are at high risk of automation.

Other studies develop alternative task-based measures. Brynjolfsson, Mitchell and Rock [8], for example, develop a rubric for assessing task automatability. The rubric contains approximately 20 task characteristics that make a task more or less suitable for machine learning. These characteristics include, for example, the need for complex, abstract reasoning for solving the task, or the availability of immediate feedback on how successfully the task was completed. The authors let crowdworkers rate 2,069 work activities in O*NET with the rubric and map the scores to the corresponding occupations. This way they derive a measure of occupations’ suitability for machine learning. The authors find that this measure is only weakly correlated with wages.

3 Assessing Automation Through the Content of Patents

A number of studies draw on information from patents to determine what new technologies can and cannot do in the workplace. Patent texts provide detailed descriptions of technologies and their capabilities. Studies typically link this information to occupational descriptions from taxonomies such as O*NET to determine the extent to which the patented technologies are applicable in the workplace. This analysis usually relies on natural language processing techniques to detect whether patent texts indicate the workplace applicability of the technologies and to determine their textual similarity to occupational descriptions.

The study of Webb [26] develops a patent-based approach to study the exposure of occupations to computer software applications, industrial robots and AI. It draws on patents from the Google Patents Public Data by IFI CLAIMS Patent Services and links them to task descriptions of occupations provided in O*NET. The study builds this linkage in several methodological steps.

First, Webb [26] identifies patents of the three technologies of interest by searching the entire patent database for particular keywords in the patent titles and descriptions. For example, AI patents are selected through keywords such as “neural network” and “machine learning”. Webb [26] then extracts all verb-noun pairs from the patent titles in these subsets of patents (e.g., “diagnose, disease”). The verb-noun pairs are used to indicate the tasks that the patented technology is intended to address. To determine the prevalence of technologies addressing a particular task, the frequency of occurrence of each verb-noun pair (and pairs similar to it) is calculated. For example, 0.1% of the AI patents may contain the pair “diagnose, disease” (or similar pairs) in their title.

Second, Webb [26] turns to the task descriptions of occupations and applies a similar natural language processing technique to extract the verb-noun pairs from those. He then assigns to each pair the relative frequency score estimated in the patent analysis. For example, the occupation “medical doctor” contains the task “diagnose patient’s condition”, from which the verb-noun pair “diagnose, condition” is extracted. When analyzing the impact of AI on occupations, this pair receives the score 0.001 because it is addressed by 0.1% of the AI patents.

Third, Webb [26] aggregates the tasks to the occupation level to obtain a measure of occupations’ exposure to automation. In O*NET, occupations are linked to a set of tasks and information is provided about the relevance of each task for the occupation. Webb [26] averages the task scores within each occupation, weighting each task by its importance for the occupation.
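The following sketch traces the three steps on toy data. It is an illustration under simplifying assumptions, not Webb's code: the verb-noun pairs are supplied by hand (real extraction would need a dependency parser, which is not shown), only exact pair matches are counted rather than similar pairs, and all patents, tasks and importance weights are hypothetical.

```python
from collections import Counter

# Step 1 input (hypothetical): one set of (verb, noun) pairs per AI patent title.
patent_pairs = [
    {("diagnose", "disease")},
    {("recognize", "image")},
    {("diagnose", "disease"), ("predict", "outcome")},
    {("classify", "document")},
]

def pair_frequencies(patents):
    """Share of patents whose title contains each verb-noun pair."""
    counts = Counter(pair for pairs in patents for pair in pairs)
    return {pair: n / len(patents) for pair, n in counts.items()}

# Step 2 input (hypothetical): for each occupation, the pair extracted from
# each task description together with the task's importance weight.
occupation_tasks = {
    "medical doctor": [(("diagnose", "disease"), 5.0), (("comfort", "patient"), 3.0)],
    "archivist": [(("classify", "document"), 4.0), (("store", "record"), 4.0)],
}

def exposure(tasks, freqs):
    """Step 3: importance-weighted average of task scores within one occupation."""
    total_weight = sum(weight for _, weight in tasks)
    weighted = sum(freqs.get(pair, 0.0) * weight for pair, weight in tasks)
    return weighted / total_weight

freqs = pair_frequencies(patent_pairs)
scores = {occ: exposure(tasks, freqs) for occ, tasks in occupation_tasks.items()}
```

Tasks whose pair never appears in a patent title receive a score of zero, so an occupation's exposure depends both on how often its tasks are addressed by patents and on how important those tasks are within the occupation.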

The results show that jobs held by low-skilled workers and low-wage jobs are most exposed to robotic technologies, while jobs held by college-educated workers are most exposed to AI. In addition, increases in occupations’ exposure to robotic technologies are linked to declines in employment and wages.

Montobbio and colleagues [17, 18] develop an approach for quantifying automation that relies on patents of robots. The goal is to identify robotic technologies that explicitly aim at replacing workers in the workplace [18] and to determine the exposure of occupations to such innovations [17]. The empirical analysis contains several methodological steps.

In a first study, Montobbio and colleagues [18] develop an approach for identifying labor-saving robotic technologies. This analysis draws on all patents published between 2009 and 2018 by the United States Patent and Trademark Office. First, the authors select patents of robotic technologies by using patent codes available in the data that indicate robotic patents and by additionally scanning the patent texts in the entire database for the morphological root ‘robot’. Second, the authors identify the labor-saving patents among the subset of robotic patents. This is achieved by analyzing the co-occurrence of certain verbs (e.g. ‘reduce’, ‘save’), objects (e.g. ‘worker’, ‘labor’) and object attributes (e.g. ‘task’, ‘hour’) at the sentence level. Finally, they describe the set of human tasks that the labor-saving technologies aim at reproducing by using a probabilistic topic model. This natural language processing algorithm elicits in an unsupervised manner the semantic topics that occur in the patent texts of labor-saving robotic technologies.
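The first two steps can be sketched as a simple text filter. This is a minimal illustration, assuming crude tokenization and very short word lists built from the examples quoted above; the patent texts are invented, the patent-code check is omitted, and the topic-model step is not shown.

```python
import re

# Hypothetical word lists, extended only with inflected forms of the
# examples given in the text.
VERBS = {"reduce", "reduces", "save", "saves"}
OBJECTS = {"worker", "workers", "labor"}
ATTRIBUTES = {"task", "tasks", "hour", "hours"}

def is_robotic(text):
    """Step 1 (text scan only): the morphological root 'robot' appears."""
    return "robot" in text.lower()

def is_labor_saving(text):
    """Step 2: a verb, an object and an attribute co-occur in one sentence."""
    for sentence in re.split(r"[.!?]+", text.lower()):
        words = set(re.findall(r"[a-z]+", sentence))
        if words & VERBS and words & OBJECTS and words & ATTRIBUTES:
            return True
    return False

# Hypothetical patent texts.
patents = {
    "P1": "A robotic arm for palletizing. The system reduces the hours a worker spends lifting.",
    "P2": "A robot vacuum with improved suction. It saves energy.",
    "P3": "A conveyor belt. It reduces worker hours.",
}

labor_saving_robotic = [
    pid for pid, text in patents.items()
    if is_robotic(text) and is_labor_saving(text)
]
```

Here only `P1` passes both filters: `P2` mentions a robot but no labor-saving triplet, and `P3` describes a labor-saving device that is not robotic.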

In a second study, the authors draw a more direct link to occupations [17]. They use the robotic labor-saving patents identified by Montobbio et al. [18] and their corresponding CPC (Cooperative Patent Classification) codes. The CPC codes contain definitions of the technological content of patents. The authors quantify the textual proximity of these codes to task descriptions from O*NET using natural language processing techniques. They then aggregate these similarity measures from the task to the occupation level, by taking into consideration the relevance of different tasks within an occupation. By linking the similarity measure to US labor market data, the authors show that the manufacturing sector is most exposed to automation. Furthermore, occupational exposure to labor-saving technology is negatively correlated with wage level in 2019 and wage growth in 1999–2019, as well as employment level and growth in the same period.
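A rough sketch of the proximity-and-aggregation idea follows. The text above does not name the similarity measure, so cosine similarity over bag-of-words vectors is used here purely as a stand-in; the CPC definition, task descriptions and relevance weights are hypothetical.

```python
import math
import re
from collections import Counter

def bag_of_words(text):
    """Lower-cased word counts for a short text."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

# Hypothetical CPC definition and task descriptions with relevance weights.
cpc_definition = "manipulators for gripping and welding metal parts"
tasks = {
    "welder": [("welding metal parts together", 0.8), ("inspecting finished work", 0.2)],
    "teacher": [("preparing lesson plans", 0.6), ("grading student work", 0.4)],
}

cpc_vec = bag_of_words(cpc_definition)

def occupation_similarity(task_list):
    """Relevance-weighted average of task-level similarity to the CPC text."""
    total = sum(weight for _, weight in task_list)
    return sum(cosine(cpc_vec, bag_of_words(text)) * weight
               for text, weight in task_list) / total

similarity = {occ: occupation_similarity(task_list) for occ, task_list in tasks.items()}
```

With a single CPC definition the result is one score per occupation; with many definitions, one would presumably aggregate across them as well, a step the sketch leaves out.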

Squicciarini and Staccioli [23] adopt the methodology of Montobbio et al. [17, 18] to study automation and its impact on employment. They connect the measure of occupational exposure to labor-saving technologies with employment data from 31 OECD countries to study changes in employment within occupations at high risk of labor displacement. The study finds that low-skilled and blue-collar occupations, but also analytic professions, are most exposed to labor-saving robotic technologies. However, there is no evidence of labor displacement, as employment shares in these occupations remain constant over time.

4 Using AI-Related Job Postings as an Indicator for the Use of AI in Firms

Another way to assess the use of AI in the workplace is to track the demand for AI experts in firms. The underlying assumption of this approach is that firms deploying AI technology also need workers with AI-related skills to manage and maintain this technology. Studies following this idea obtain information on firms’ skills needs from job postings. The main source for such data is Burning Glass Technologies (BGT), a company that collects online vacancies daily and provides systematic information on their skill requirements, job title and occupation group. Studies link BGT data to firm-level data to study how AI deployment in firms, as proxied by the demand for AI skills in vacancies, is linked to wages, employment and growth.

Studies differ in the way they identify AI-related job postings. Squicciarini and Nachtigall [22], for example, scan job postings for a list of AI-related keywords. These keywords are obtained from the study of Baruffaldi et al. [6], who apply bibliometric analysis and text mining techniques to scientific publications, open source software and patents to assess developments in the field of AI. Four types of keywords are considered: generic terms, such as “artificial intelligence” and “machine learning”; approaches, such as “supervised learning” and “neural network”; applications, such as “image recognition”; and software and libraries, such as Keras and TensorFlow. To avoid over-identification, the authors categorize job postings as AI-related if they contain at least two keywords from different keyword groups. The study provides rich descriptive information on these job postings, including their distribution across occupations and industrial sectors. It shows that the share of AI-related job postings increased considerably between 2012 and 2018 in the four observed countries: Canada, Singapore, the United States and the United Kingdom. Such postings appear in all sectors of the economy, most frequently in the sectors “Information and Communication”, “Financial and Insurance Activities” and “Professional, Scientific and Technical Activities”.
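The classification rule lends itself to a short sketch. The keyword lists below contain only the examples quoted above and are far shorter than any real list; the group names, the substring matching and the sample postings are assumptions made for illustration.

```python
# Hypothetical, heavily shortened keyword groups.
KEYWORD_GROUPS = {
    "generic": ["artificial intelligence", "machine learning"],
    "approaches": ["supervised learning", "neural network"],
    "applications": ["image recognition"],
    "software": ["keras", "tensorflow"],
}

def matched_groups(posting):
    """Names of the keyword groups with at least one term in the posting."""
    text = posting.lower()
    return {
        group for group, terms in KEYWORD_GROUPS.items()
        if any(term in text for term in terms)
    }

def is_ai_related(posting):
    """AI-related if keywords from at least two different groups appear."""
    return len(matched_groups(posting)) >= 2

examples = [
    "Machine learning engineer with TensorFlow experience",
    "We apply artificial intelligence and machine learning to logistics",
    "Accountant for a mid-sized retailer",
]
flags = [is_ai_related(p) for p in examples]
```

The second example shows the effect of the rule: two generic terms from the same group are not enough, which guards against postings that mention AI only in passing.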

Using BGT data, the studies of Acemoglu et al. [1] and Alekseeva et al. [2] adopt a similar approach to measuring AI activity in firms. Acemoglu et al. [1] classify firms as AI-adopting if their vacancies contain at least one keyword from a simple list of AI-related skills. The study links this measure to indicators of AI exposure and shows that firms whose jobs involve tasks at a high risk of automation, as identified by the indicators of Webb [26] (see Sect. 3) and Felten, Raj and Seamans [12] (see Sect. 5), are more likely to deploy AI, as measured by the demand for AI skills in job postings.

Similarly, the study of Alekseeva et al. [2] scans job postings from the BGT data for particular skills. These skills were identified as AI-related by Burning Glass Technologies and refer to the knowledge of AI (e.g. machine vision) or to AI-related software (e.g. Pybrain, Nd4J). The study shows that the number of job postings that demand AI-related skills has increased considerably in the US economy over the last ten years. The study also finds a wage premium for such vacancies.

By contrast, Babina and colleagues [5] use a skills-similarity approach to determine the AI-relatedness of job postings. Instead of pre-specifying keywords relevant for AI, the authors measure how frequently the skills contained in BGT job postings co-occur with four core AI terms: “artificial intelligence”, “machine learning”, “natural language processing” and “computer vision”. From this co-occurrence frequency they build a measure of AI-relatedness for each skill contained in the data, assuming that skills that often appear in vacancies containing the core AI terms are strongly related to AI. Subsequently, the authors estimate the AI-relatedness of job postings by averaging the AI-relatedness of all skills required in a posting. In contrast to previous studies using a binary classification of AI- and non-AI-related vacancies, this measure is continuous, allowing it to distinguish between jobs requiring more or less AI-related skills (e.g. deep learning vs. information retrieval).
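A minimal sketch of this two-stage measure is given below. It assumes that a skill's AI-relatedness is the share of its postings that also mention a core AI term, and that the core terms themselves are left out of the posting average; both choices, like the sample postings, are simplifications made here rather than details confirmed by the text.

```python
from collections import defaultdict

CORE_AI_TERMS = {
    "artificial intelligence", "machine learning",
    "natural language processing", "computer vision",
}

# Hypothetical postings, each reduced to its set of required skills.
postings = [
    {"machine learning", "deep learning", "python"},
    {"computer vision", "deep learning"},
    {"python", "information retrieval", "machine learning"},
    {"python", "excel"},
    {"information retrieval", "excel"},
]

def skill_relatedness(all_postings):
    """Share of a skill's postings that also mention a core AI term."""
    appears, with_core = defaultdict(int), defaultdict(int)
    for skills in all_postings:
        has_core = bool(skills & CORE_AI_TERMS)
        for skill in skills - CORE_AI_TERMS:
            appears[skill] += 1
            with_core[skill] += has_core
    return {skill: with_core[skill] / appears[skill] for skill in appears}

def posting_relatedness(skills, relatedness):
    """Average relatedness over the rated (non-core) skills in one posting."""
    rated = [relatedness[s] for s in skills if s in relatedness]
    return sum(rated) / len(rated) if rated else 0.0

relatedness = skill_relatedness(postings)
posting_scores = [posting_relatedness(p, relatedness) for p in postings]
```

In this toy data "deep learning" scores higher than "information retrieval", which mirrors the distinction drawn above between skills that are more and less closely tied to AI.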

In addition, Babina et al. [5] estimate the AI-relatedness of employees’ resumes obtained from data from Cognism Inc. This way the authors use both the demand and the existing stock of AI skills in firms to approximate AI deployment. Concretely, the authors search the resume data for terms that obtained high AI-relatedness scores in the analysis of job postings. This enables them to classify AI workers in firms. In a final step, Babina et al. [5] map both the job postings data and the resume data to firm-level data to study the characteristics of firms deploying AI and the effects of AI deployment on sales, employment and the market share of firms. The study shows that larger firms with higher sales, markups and cash holdings are more likely to deploy AI. In addition, the measure of AI deployment is positively linked to firm growth, both in sales and employment.

5 AI Measures Relying on Benchmarks

In computer science, the performance of AI and robotic systems is measured with so-called benchmarks. A benchmark is a task or a set of tasks that has been explicitly designed to evaluate the performance of a system. Typically, a benchmark provides training data, which the system uses to learn the task; a test dataset on which the task is performed; and an evaluation framework with continuous numerical feedback to rate the system’s performance on the task [24]. The purpose is to compare different systems against the same benchmark test. Often, the performance of systems is compared to human performance on the benchmark.

There have been efforts to assess more systematically the state of the art of AI technology using information from benchmarks. Stanford University’s AI Index Report, for example, collects and tracks leading benchmarks to assess the technical performance of AI in different domains, such as computer vision, language, speech recognition and reinforcement learning [27]. Other studies concentrate on single domains, such as the overview of natural language inference benchmarks by Storks, Gao and Chai [24].

Some studies connect the information on AI capabilities contained in benchmarks to information on the content of occupations in order to assess how progress in AI can impact the workplace. Martínez-Plumed et al. [16] and Tolan et al. [25] collect information on 328 different AI benchmarks. Instead of looking at AI performance on these benchmarks, the studies use the research output related to each benchmark (e.g., research publications, news, blog-entries) as a proxy for AI progress and its future direction. The reason is that benchmarks use different performance metrics, which makes it difficult to compare AI performance on different tasks and in different domains. The studies connect developments in AI research to occupations by using cognitive abilities as an intermediate layer. Concretely, they connect AI benchmarks to cognitive abilities and then connect cognitive abilities to work tasks. The authors argue that mapping the information from benchmarks to occupations by way of cognitive abilities is a promising approach since it indicates the broader ability domains in which the use of AI in the workplace is advancing and, conversely, the key abilities that technology cannot yet achieve. For occupational tasks, the studies derive 59 key work tasks linked to 119 occupations from two labor force surveys—the European Working Conditions Survey (EWCS) and the OECD Survey of Adult Skills (PIAAC)—as well as from the O*NET database. For the intermediate layer of cognitive abilities, they use a taxonomy of 14 cognitive abilities (e.g., visual processing, navigation, communication) inspired by work in psychology, animal cognition and AI. The results suggest relatively high AI exposure for high-income occupations and low AI impact on low-income occupations, such as drivers or cleaners.

The study of Felten, Raj and Seamans [12] is another attempt to link AI capabilities assessed through benchmarks to abilities and skills required at the workplace. The authors use AI evaluation results across nine major AI application domains made available by the AI Progress Measurement project of the Electronic Frontier Foundation (EFF). They re-scale the metrics in each domain to make them comparable and calculate the average rate of progress in each domain for the period 2010 to 2015. The advancements in each AI application domain are then linked to 52 abilities required in the workplace from O*NET. O*NET provides information on the importance and the prevalence of each ability within occupations. For each AI application domain, the authors ask 200 gig workers on a freelancing platform to rate the relatedness between the domain and the 52 abilities. In total, 1,800 persons were surveyed. By linking the abilities to occupations, the authors assess the extent to which occupations are exposed to AI, a measure they call AI Occupational Impact (AIOI). They find that it is positively linked to wage growth, but not to employment.
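The chain from domain progress to occupational exposure can be sketched as follows. The exact formula is not given in the text above, so the combination used here (progress times relatedness, summed over domains, then weighted by the product of importance and prevalence) is an assumption, and every number, domain, ability and occupation is hypothetical.

```python
# Hypothetical rescaled average progress per AI application domain.
progress = {"image recognition": 0.30, "translation": 0.10}

# Hypothetical share of surveyed raters who judged a domain related to an ability.
relatedness = {
    ("image recognition", "near vision"): 0.9,
    ("image recognition", "written comprehension"): 0.2,
    ("translation", "near vision"): 0.0,
    ("translation", "written comprehension"): 0.8,
}

# Hypothetical O*NET-style data: ability -> (importance, prevalence) in [0, 1].
occupation_abilities = {
    "radiologist": {"near vision": (0.9, 0.8), "written comprehension": (0.6, 0.5)},
    "translator": {"near vision": (0.3, 0.2), "written comprehension": (1.0, 1.0)},
}

def ability_exposure(ability):
    """Progress in each domain, weighted by its relatedness to the ability."""
    return sum(progress[d] * relatedness[(d, ability)] for d in progress)

def occupation_impact(abilities):
    """Ability exposure weighted by importance times prevalence (assumed)."""
    return sum(
        importance * prevalence * ability_exposure(ability)
        for ability, (importance, prevalence) in abilities.items()
    )

impact = {occ: occupation_impact(ab) for occ, ab in occupation_abilities.items()}
```

The resulting scores are only meaningful relative to one another; in this toy example the occupation relying heavily on an ability linked to the faster-progressing domain ends up with the higher score.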

6 A Skill-Based Approach

Another approach to measuring the impact of AI on work is to compare the capabilities of AI to the human skills required for work. Such a comparison directly addresses the question of whether AI can replace humans in the workplace. Moreover, it provides information about AI’s impacts that extends beyond the definition of current occupations to cover more general issues, such as the design of new occupations and workplaces or the development of education and training programmes that prepare students for the future. The comparison of human and computer capabilities typically relies on tests developed for humans. In computer science, such tests are used as benchmarks, on which the performance of systems is evaluated. Other work draws on expert judgement from AI researchers to determine whether state-of-the-art systems are capable of carrying out tests and tasks developed for humans.

Computer scientists have used different types of human tests to evaluate the capabilities of AI. Since the full list of studies is long, a few examples illustrate the range. Liu et al. [15] evaluate AI systems on IQ tests for humans. The authors construct a comprehensive dataset containing 10,000 IQ test questions by manually collecting them from books, websites and other sources. They test different systems on different subsets of questions, such as questions that require finding an analogous word given other words or finding the correct number given a sequence of numbers. Similarly, Ohlson et al. [20] test the ConceptNet 4 AI system on the Verbal IQ part of the Wechsler Preschool and Primary Scale of Intelligence (WPPSI-III). DeepMind, an AI-focused company owned by Google, famously evaluated the performance of state-of-the-art NLP systems on 40 questions from mathematics exams for 16-year-old British schoolchildren [21]. Clark et al. [10] tested their ARISTO system on the Grade 8 New York Regents Science Exam.

Some computer scientists have argued that standardized tests are useful for measuring AI progress and stimulating AI research. According to Clark and Etzioni [9], standardized tests are suitable evaluation tools because “they are accessible, easily comprehensible, clearly measurable, and offer a graduated progression from simple tasks to those requiring deep understanding of the world” [9: 4]. Moreover, they can address a wide range of AI capabilities. Liu et al. [15] note that IQ tests assess systems in different areas, such as knowledge representation and reasoning, machine learning, natural language processing and image understanding.

Outside of computer science, Elliott [11] assesses AI capabilities with the OECD Survey of Adult Skills (PIAAC) by drawing on expert judgment. PIAAC is designed to measure the literacy, numeracy and problem-solving skills of adults. The questions are presented in different formats, including pictures, texts and numbers. They are designed to resemble real-life tasks in work and personal life, such as handling money and budgets, managing schedules, or reading and interpreting statistical messages and graphs presented in the media. Elliott [11] asks 11 computer experts to rate the capability of AI to solve the PIAAC items. The results of these ratings are presented separately for questions of varying difficulty and allow for comparing AI to humans at different proficiency levels.

The study shows that, according to the interviewed computer experts, in 2016, successful performance of AI on literacy was expected to range from 90% on the easiest questions to 41% on the most difficult questions [11]. This roughly resembles the scores of low-performing adults on the literacy test. In numeracy, AI performance, as estimated by experts, ranged from 66% on the easiest to 52% on the hardest questions. This is below average human performance on the simplest numeracy tasks and above average human performance on the hardest tasks.

The study of Elliott [11] served as a stepping stone to a bigger project aimed at assessing AI at the Centre for Educational Research and Innovation (CERI) at the OECD. The AI and the Future of Skills project aims at comparing AI and human capabilities across the full range of skills needed in the workplace. To this end, the project is developing an approach using a battery of different tests. For example, key cognitive skills will be addressed with education tests such as PIAAC and the Programme for International Student Assessment (PISA), while occupation-specific skills will be assessed with tests from vocational education and training that provide certification or license for specific occupations. In addition, tests from the fields of animal cognition and child development will be used to assess basic low-level skills that all healthy adult humans, but not necessarily AI, have (e.g., spatial memory, episodic memory and common sense for the physics of objects).

7 Strengths and Weaknesses of the Different Approaches

Existing approaches to assessing the impact of AI and robotics on employment and work have mostly focused on whether these technologies can automate tasks within occupations; whether they can reproduce human skills required in jobs; and whether they are reflected in the human capital investments firms make. Studies have used innovative methods and data to answer these questions. However, some gaps in the methodology remain. In the following, some strengths and weaknesses of the five approaches are discussed, without any claim to comprehensiveness and without attempting to rank one approach above the others.

The Task-Based Approach.

The task-based approach has sparked much research, perhaps because it offers a convenient way to quantify the potential for automation in occupations and in the economy. This is made possible through the use of O*NET, a comprehensive taxonomy of occupations that provides systematic information on task content and can be mapped to labor market surveys to study the empirical distribution of occupations. However, although this research relies heavily on expert judgments of AI capabilities with respect to occupational tasks, the process of collecting these judgments is not transparent: the available studies do not provide sufficient information on the selection of experts, the methodology used for expert knowledge elicitation or the rating process. Moreover, experts rate the capabilities of AI and robotics with regard to very broad descriptions of occupational tasks provided in O*NET (e.g., “Bringing people together and trying to reconcile differences”), which leaves considerable room for interpretation.
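To make the quantification step concrete, the following minimal Python sketch aggregates task-level automatability ratings into an occupation-level score and then into an employment-weighted share of jobs above a threshold. It is an illustration only: the occupations, importance weights, ratings, employment counts and the 0.7 threshold are hypothetical placeholders, not values taken from O*NET or from any of the cited studies, which use their own and more elaborate aggregation schemes.

```python
# Hypothetical data: occupation -> list of (task importance, expert rating in [0, 1]).
# A rating close to 1 means experts judge the task as automatable.
TASK_RATINGS = {
    "clerk": [(0.5, 0.9), (0.3, 0.8), (0.2, 0.4)],
    "mediator": [(0.6, 0.1), (0.4, 0.5)],
}

# Hypothetical employment counts per occupation (e.g. from a labor market survey).
EMPLOYMENT = {"clerk": 300, "mediator": 100}


def occupation_score(tasks):
    """Importance-weighted mean of the task-level automatability ratings."""
    total_weight = sum(weight for weight, _ in tasks)
    return sum(weight * rating for weight, rating in tasks) / total_weight


def share_of_employment_at_risk(task_ratings, employment, threshold=0.7):
    """Share of workers in occupations whose score reaches the (assumed) threshold."""
    total = sum(employment.values())
    at_risk = sum(
        employment[occupation]
        for occupation, tasks in task_ratings.items()
        if occupation_score(tasks) >= threshold
    )
    return at_risk / total


if __name__ == "__main__":
    for occupation, tasks in TASK_RATINGS.items():
        print(occupation, round(occupation_score(tasks), 2))
    print("share at risk:", share_of_employment_at_risk(TASK_RATINGS, EMPLOYMENT))
```

The sketch also shows where the weaknesses discussed above enter: every number in `TASK_RATINGS` stands for an expert judgment, so the resulting share is only as reliable as the elicitation process behind those ratings.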

The Patent-Based Approach.

Linking patents to occupations with NLP techniques is an innovative way to assess the impact of technology on work, both in terms of the data and the method used. Like the task-based approach, this approach is convenient for quantifying the impact of AI in the economy. However, as Webb [26] and Georgieff and Hyee [14] note, the approach focuses on innovative efforts with regard to AI that have been described in patents rather than on the actual deployment of AI in the workplace. The patent-based approach therefore assesses the potential, rather than the realized, impact of technology on work. At the same time, the approach may miss innovations that are not described in patents.
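The general idea of linking patent text to occupational task descriptions can be sketched as follows. This is a deliberately simplified illustration that uses plain bag-of-words cosine similarity; it is not the NLP pipeline of any of the cited studies, and the patent texts, occupations and task descriptions are invented for the example.

```python
import math
import re
from collections import Counter

# Hypothetical patent abstracts and task descriptions, invented for illustration.
PATENTS = [
    "method for classifying medical images using a neural network",
    "system for recognizing speech and transcribing audio",
]
OCCUPATION_TASKS = {
    "radiologist": ["classify medical images to detect disease"],
    "mediator": ["reconcile differences between people"],
}


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def cosine_similarity(text_a, text_b):
    """Cosine similarity between the word-count vectors of two texts."""
    counts_a, counts_b = Counter(tokenize(text_a)), Counter(tokenize(text_b))
    dot = sum(counts_a[t] * counts_b[t] for t in counts_a.keys() & counts_b.keys())
    norm_a = math.sqrt(sum(c * c for c in counts_a.values()))
    norm_b = math.sqrt(sum(c * c for c in counts_b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def exposure(tasks, patents):
    """Mean over tasks of the highest similarity to any patent text."""
    if not tasks:
        return 0.0
    best = [max((cosine_similarity(t, p) for p in patents), default=0.0) for t in tasks]
    return sum(best) / len(best)


if __name__ == "__main__":
    for occupation, tasks in OCCUPATION_TASKS.items():
        print(occupation, round(exposure(tasks, PATENTS), 3))
```

Note that such a score reflects only what is written in patents: an occupation scores zero if the relevant technology was never patented, which is the limitation described above.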

The Job-Postings-Based Approach.

Unlike the patent-based and task-based approaches, which measure the potential for automation of occupations, indicators that rely on job postings aim at capturing the actual deployment of AI in firms. Moreover, they aim at providing timely tracking of AI adoption by using up-to-date data on the demand for AI skills across the labor market. However, these measures rely on the strong assumptions that all companies that automate their work processes recruit workers with AI skills and, conversely, that all companies that employ AI specialists do so to automate their own tasks. Georgieff and Hyee [14] point to several realistic scenarios that violate these assumptions. For example, AI-using firms may train their workers in AI rather than recruit new workers with AI skills. Or they may decide to outsource AI support and development to specialized firms. In addition, the use of some AI systems may not require specialized AI skills. Furthermore, indicators based on job postings can capture the use of AI at the firm level, but not at the level of concrete work tasks or occupations. For example, a company may recruit IT specialists with AI skills in order to automate routine manual tasks in production.
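A job-postings indicator of this kind can be sketched in a few lines. The example below computes, for each firm, the share of postings that mention an AI-related skill. The keyword list, firm names and posting texts are hypothetical; the cited studies rely on their own skill taxonomies and on large commercial vacancy datasets.

```python
from collections import defaultdict

# Hypothetical list of AI-related skill keywords (an assumption for this sketch).
AI_KEYWORDS = ("machine learning", "deep learning", "natural language processing")

# Hypothetical postings as (firm, posting text) pairs.
POSTINGS = [
    ("firm_a", "Engineer with machine learning experience"),
    ("firm_a", "Accountant for the finance department"),
    ("firm_b", "Welder for the production line"),
]


def requires_ai_skill(posting_text, keywords=AI_KEYWORDS):
    """True if the posting text mentions at least one AI-related keyword."""
    text = posting_text.lower()
    return any(keyword in text for keyword in keywords)


def ai_posting_share_by_firm(postings):
    """Share of each firm's postings that mention an AI-related skill."""
    totals = defaultdict(int)
    ai_counts = defaultdict(int)
    for firm, text in postings:
        totals[firm] += 1
        if requires_ai_skill(text):
            ai_counts[firm] += 1
    return {firm: ai_counts[firm] / totals[firm] for firm in totals}


if __name__ == "__main__":
    print(ai_posting_share_by_firm(POSTINGS))
```

In this sketch, `firm_b` receives a share of zero even if it uses AI through an external provider or through retrained staff, and the indicator says nothing about which tasks at `firm_a` are being automated. Both points mirror the limitations noted above.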

The Benchmarks-Based Approach.

Benchmarks offer an objective measure of AI capabilities since they evaluate the actual performance of current state-of-the-art systems. The problem with using benchmarks to measure AI’s impact on the workplace is that they do not systematically cover the whole range of tasks and skills relevant for work. To our knowledge, there has not been an attempt to systematize the information from benchmarks according to a taxonomy of work tasks or work skills in order to comprehensively assess the capabilities of AI and robotics. Studies that link information from benchmarks to occupations offer a promising way to measure the automatability of the workplace. However, they share some of the methodological problems of the task-based approach. Specifically, occupational tasks and abilities are crudely described and their relatedness to AI is assessed through subjective judgments.

The Skills-Based Approach.

The skills-based approach uses human tests to compare computer and human capabilities. The reference to humans is key to understanding which human skills are reproducible by AI and robotics, and which skills can be usefully complemented or augmented by machines. This can help design new jobs in the future that make the best use of both humans and machines. Moreover, it helps answer additional questions related to skills supply: Which skills are hard to automate and, thus, worth investing in? What education and training can help most people develop work-related skills that are beyond the capacity of AI and robotics?

However, using human tests on AI and robotics poses some challenges. One challenge, also common to other benchmark tests, is overfitting. It means that a system can excel on a test without being able to perform other tasks that are slightly different from the test tasks. This is still typical for AI systems as they are generally ‘narrow’, i.e., able to perform only very specific tasks. Another challenge comes from the fact that human tests are designed for humans and, thus, take for granted skills that all humans share. Since such skills cannot be assumed for AI, human tests can have different implications for humans and machines. For example, the simple task of counting the objects in a picture tests humans’ ability to count, whereas, for AI, it is also a test of vision and object recognition.

8 Conclusion

Assessing AI and robotic capabilities is a necessary foundation for understanding the impact of these technologies on work and employment. Existing assessment approaches address different aspects of the relationship between technology and work, and have different implications for policy. The task-based and the patent-based approaches assess the automatability of tasks within occupations. The focus of these approaches is more on quantifying the potential of AI and robotics to automate the economy than on developing a comprehensive measure of what computers can and cannot do. By contrast, benchmarks provide information on the technical performance of AI by objectively evaluating the capabilities of current state-of-the-art systems. The job-postings approach sheds light on another important aspect of the use of AI and robotics in the workplace: the extent to which firms need AI skills, the type of AI skills they need, and the characteristics of firms that are linked to AI skill needs. Finally, the skills-based approach offers a useful comparison to human skills that informs not only about the impact of AI on work, but also about how education should evolve in response.

A systematic assessment of AI and robotics requires a comprehensive framework that brings together different assessment approaches. Such a framework should:

1) Make use of different disciplines, as they provide different perspectives and opportunities for understanding AI and robotics capabilities.

Concretely, computer science can provide useful ways of measuring AI and robotics capabilities. Approaches from the social sciences and economics are useful for linking these capabilities to occupations and assessing their impact on the economy and society. Psychology and psychometrics can help link measures of human abilities to AI and robotics.

2) Provide a comparison to human skills.

As noted above, a reference to human skills would make it possible to compare the technical performance of AI and robotics to the proficiency with which humans carry out tasks in their jobs. This will provide information to help understand how AI and robotics will change the demand for skills and how policies should shape skills supply in order to respond to these changes.

3) Use comprehensive tasks and skills taxonomies to guide the assessment of AI and robotics skills.

A comprehensive assessment of AI and robotics capabilities should cover the full range of tasks that appear in work, all relevant human skills as well as capabilities that are non-trivial for machines, but commonplace for humans. Such an assessment should focus not only on the capabilities and tasks that AI and robotics can achieve, but also on the stumbling blocks to automation.

4) Include benchmarks and evaluations from AI research.

A multitude of benchmarks and evaluations already assess AI and robotic systems empirically. However, these have not been systematically classified into a taxonomy of skills or tasks. Populating comprehensive skills and tasks taxonomies with information from such assessments would be an important component of any systematic measure of AI and robotics.

5) Use expert judgment in a way that is transparent and meets established scientific standards.

Where benchmarks are lacking, expert judgment can provide important insights into the capabilities of AI and robotics. However, expert judgment should be elicited with care. Specifically, the choice of experts should be substantiated; computer capabilities should be rated with respect to standardized and detailed tasks; the degree of agreement between experts should be reported; and the aggregation of individual experts’ ratings should be explained.
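As an illustration of what reporting agreement and aggregation could look like in practice, the following minimal Python sketch computes the mean pairwise percent agreement between experts and aggregates their ratings by majority vote. The expert names and binary ratings (1 = AI can solve the item, 0 = it cannot) are hypothetical. Percent agreement is used here only because it is simple; it is not chance-corrected, and a real study might prefer a statistic such as Cohen's or Fleiss' kappa.

```python
from itertools import combinations
from statistics import mean

# Hypothetical binary ratings: expert -> one rating per test item
# (1 = AI can solve the item, 0 = AI cannot).
RATINGS = {
    "expert_a": [1, 1, 0, 0],
    "expert_b": [1, 0, 0, 0],
    "expert_c": [1, 1, 0, 1],
}


def pairwise_agreement(ratings):
    """Mean share of items on which two experts agree, averaged over all expert pairs."""
    pairs = combinations(ratings.values(), 2)
    return mean(
        mean(1.0 if x == y else 0.0 for x, y in zip(first, second))
        for first, second in pairs
    )


def aggregate_by_majority(ratings):
    """Item-level majority vote across experts (ties count as 0)."""
    return [
        1 if sum(votes) * 2 > len(votes) else 0
        for votes in zip(*ratings.values())
    ]


if __name__ == "__main__":
    print("mean pairwise agreement:", round(pairwise_agreement(RATINGS), 3))
    print("majority rating per item:", aggregate_by_majority(RATINGS))
```

Publishing both numbers alongside the aggregated ratings lets readers see how much the final measure depends on the aggregation rule and on disagreement among the experts.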

A multidisciplinary approach that combines complementary methodologies, draws links to both work tasks and human skills, and uses standardized assessment instruments will provide valid and reliable measures of AI and robotics. Such an approach would also provide measures that are meaningful for the policy community.

References

Acemoglu, D., Autor, D., Hazell, J., Restrepo, P.: AI and jobs: evidence from online vacancies. NBER Working Paper Series 28257 (2020)

Alekseeva, L., Azar, J., Giné, M., Samila, S., Taska, B.: The demand for AI skills in the labor market. Labour Econ. 71, 1–27 (2021)

Arntz, M., Gregory, T., Zierahn, U.: The risk of automation for jobs in OECD countries: a comparative analysis. OECD Social, Employment and Migration Working Papers 189. OECD Publishing, Paris (2016)

Autor, D., Levy, F., Murnane, R.: The skill content of recent technological change: an empirical exploration. Q. J. Econ. 118(4), 1279–1333 (2003)

Babina, T., Fedyk, A., He, A., Hodson, J.: Artificial Intelligence, Firm Growth, and Product Innovation (2020). https://ssrn.com/abstract=3651052. Accessed 04 Aug 2022

Baruffaldi, S., van Beuzekom, B., Dernis, H., Harhoff, D., Rao, N., Rosenfeld, D., Squicciarini, M.: Identifying and measuring developments in artificial intelligence: Making the impossible possible. OECD Science, Technology and Industry Working Papers 2020/05. OECD Publishing, Paris (2020)

Bresnahan, T., Trajtenberg, M.: General Purpose Technologies “Engines of Growth?”. National Bureau of Economic Research. Working Paper No 4148 (1992)

Brynjolfsson, E., Mitchell, M., Rock, D.: What can machines learn and what does it mean for occupations and the economy? AEA Pap. Proc. 108, 43–47 (2018)

Clark, P., Etzioni, O.: My computer is an honor student — but how intelligent is it? standardized tests as a measure of AI. AI Mag. 37(1), 5–12 (2016)

Clark, P., et al.: From ‘F’ to ‘A’ on the N.Y. Regents Science Exams: An Overview of the Aristo Project. arXiv:1909.01958 (2020)

Elliott, S.: Computers and the Future of Skill Demand. Educational Research and Innovation. OECD Publishing, Paris (2017)

Felten, E., Raj, M., Seamans, R.: The variable impact of artificial intelligence on labor: the role of complementary skills and technologies. SSRN Electr. J. (2019)

Frey, C., Osborne, M.: The future of employment: how susceptible are jobs to computerisation? Technol. Forecast. Soc. Chang. 114, 254–280 (2017)

Georgieff, A., Hyee, R.: Artificial intelligence and employment: new cross-country evidence. OECD Social, Employment and Migration Working Papers, No. 265, OECD Publishing, Paris (2021)

Liu, Y., He, F., Zhang, H., Rao, R., Feng, Z., Zhou, Y.: How well do machines perform on IQ tests: a comparison study on a large-scale dataset. In: Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence (IJCAI-19) (2019)

Martínez-Plumed, F., Tolan, S., Pesole, A., Hernández-Orallo, J., Fernández-Macías, E., Gómez, E.: Does AI qualify for the job? a bidirectional model mapping labour and AI intensities. In: Proceedings of the 2020 AAAI/ACM Conference on AI, Ethics, and Society (AIES 2020), 7–8 February 2020, New York, USA (2020)

Montobbio, F., Staccioli, J., Virgillito, M.E., Vivarelli, M.: Labour-saving automation and occupational exposure: a text-similarity measure. LEM Working Paper Series 2021/43. Scuola Superiore Sant’Anna, Italy (2021)

Montobbio, F., Staccioli, J., Virgillito, M.E., Vivarelli, M.: Robots and the origin of their labour-saving impact. Technol. Forecast. Soc. Chang. 174, 1–19 (2022)

Nedelkoska, L., Quintini, G.: Automation, skills use and training. OECD Social, Employment and Migration Working Papers 202. OECD Publishing, Paris (2018)

Ohlson, S., Sloan, R.H., Turán, G., Urasky, A.: Measuring an artificial intelligence system’s performance on a Verbal IQ test for young children. J. Exp. Theor. Artif. Intell. 29(4), 679–693 (2017)

Saxton, D., Grefenstette, E., Hill, F., Kohli, P.: Analysing mathematical reasoning abilities of neural models. arXiv:1904.01557 (2019)

Squicciarini, M., Nachtigall, H.: Demand for AI skills in jobs: Evidence from online job postings. OECD Science, Technology and Industry Working Papers 2021/03. OECD Publishing, Paris (2021)

Squicciarini, M., Staccioli, J.: Labour-saving technologies and employment levels: Are robots really making workers redundant? OECD Science, Technology and Industry Working Papers 124. OECD Publishing, Paris (2022)

Storks, S., Gao, Q., Chai, J. Y.: Recent Advances in Natural Language Inference: A Survey of Benchmarks, Resources, and Approaches. arXiv:1904.01172 (2020)

Tolan, S., Pesole, A., Martínez-Plumed, F., Fernández-Macías, E., Hernández-Orallo, J., Gómez, E.: Measuring the occupational impact of AI: tasks, cognitive abilities and AI benchmarks. J. Artif. Intell. Res. 71, 191–236 (2021)

Webb, M.: The impact of artificial intelligence on the labor market. SSRN Electr. J. (2020)

Zhang, D., et al.: The AI Index 2022 Annual Report. Stanford Institute for Human-Centered AI, Stanford University, AI Index Steering Committee (2022)

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Cite this chapter

Staneva, M., Elliott, S. (2023). Measuring the Impact of Artificial Intelligence and Robotics on the Workplace. In: Shajek, A., Hartmann, E.A. (eds) New Digital Work. Springer, Cham. https://doi.org/10.1007/978-3-031-26490-0_2

DOI: https://doi.org/10.1007/978-3-031-26490-0_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-26489-4

Online ISBN: 978-3-031-26490-0

eBook Packages: Engineering, Engineering (R0)