Abstract

Industry classification schemes provide a taxonomy for segmenting companies based on their business activities. They are relied upon in industry and academia as an integral component of many types of financial and economic analysis. However, even modern classification schemes have failed to embrace the era of big data and remain a largely subjective undertaking prone to inconsistency and misclassification. To address this, we propose a multimodal neural model for training company embeddings, which harnesses the dynamics of both historical pricing data and financial news to learn objective company representations that capture nuanced relationships. We explain our approach in detail and highlight the utility of the embeddings through several case studies and application to the downstream task of industry classification.

1 Introduction

Financial markets are an important but challenging machine learning domain when it comes to analysis and prediction [3, 9, 17]. Their stochastic nature reflects a complex network of interactions involving a web of hidden factors and unpredictable events. Though the financial literature spans many sub-domains, the application of machine learning and deep learning techniques to financial markets has often been narrowly focused on the problem of returns forecasting for individual assets [25]. As a result, many other problems facing the financial sector have been underrepresented or ignored.

For example, the challenge of classifying companies based on a taxonomy of industry types is not well covered by contemporary machine learning research, even though it is an important task in several settings. In government, the private sector, academia, and even the broader public, industry classification schemes are an integral part of using business and economic information [20]. Additionally, research has shown that 30% of publications in the top three finance journals use industry classification schemes [27]. The ability to segment companies into market sectors is important for many types of financial and economic analysis (measuring economic activity, identifying peers and competitors, constructing ETF products, quantifying market share and benchmarking company performance), none of which would be possible without industry classifications [20].

The rise in popularity of sector-based investing has led to the development of new market-oriented industry classification schemes. However, despite their increased usage for choosing investments, many industry classification schemes have still not embraced the era of big data and remain a largely subjective undertaking. As a result, they have been shown to struggle with scalability [20], exhibit inconsistencies when determining the primary area of activity for a company [19], and offer no way to quantitatively measure or rank similarity between companies. Other studies confirm significant disagreement between classification schemes when categorizing the same companies [12, 14].

Although research applying modern computational techniques to industry classification schemes, and the learning of asset relationships more broadly, has lagged, there has been marked progress within the computer science community on relevant areas like representation learning and machine learning on relational data. The model architecture presented in this paper takes inspiration from a class of modern language models that have proven to be very successful in the natural language processing (NLP) domain [10, 18].

In this work, we propose a novel training methodology for learning distributed representations of public companies, based on distributional similarities in both historical returns data and financial news content. We show how these multimodal embeddings can capture the nuanced relationships that exist between companies, and we demonstrate how they can be used to identify related companies. After discussing related work in the next section, we explain the proposed approach before presenting several case studies that highlight how the learned representations can be useful in financial applications. Before concluding, we present the results of an initial evaluation to demonstrate the effectiveness of these learned representations for the downstream task of industry classification.

2 Related Work

Finance has long been a pioneering industry in the application of machine learning techniques [25]. However, the literature applying modern computational techniques to financial markets overwhelmingly focuses on the forecasting of returns and volatility for individual stocks [16]. Although these applications have seen success, there are many other tasks within financial markets which have not received the same level of attention. In this paper, we look to address one of these – namely, the problem of industry classification.

Moving away from treating companies independently, and instead leveraging relationships, is key to tackling this task. Recent advances in areas such as representation learning and graph machine learning have encouraged research in this direction, with the most relevant literature to this work being papers proposing novel embedding frameworks for financial assets. For example, [28] suggest using matrix factorization to learn latent representations of stocks based on a co-occurrence matrix obtained from financial news articles. [8] propose a framework for learning stock embeddings from the co-dynamics of historical returns data. The authors of [24] use network theory and machine learning to generate fund and ETF embeddings based on overlapping asset allocation, [23] apply Node2Vec to the stock correlation matrix to learn embedded representations of stocks, and [2] obtain embeddings by combining company information with knowledge from Wikipedia and relationships from Wikidata.

Other relevant applications of NLP in the financial domain include [26], where sentiment dynamics in related companies are assessed by building a network from financial news data. The authors of [15] tackle industry classification by using NLP to extract distinguishable features from business descriptions in financial reports. Similarly, the authors of [13] extract company embeddings from the output of the BERT [6] language model applied to annual reports, and then use these embeddings for industry classification. Though textual data has been used to inform company embeddings in prior research, there is often a reliance on aggregating pre-trained word embeddings rather than on a tailored company embedding framework, as proposed in this work.

3 A Multimodal Embedding-Based Approach

This paper proposes a method for training embeddings of companies using a probabilistic neural framework. In this section, we first outline the link with the NLP domain. We then describe a methodology for applying the proposed framework to historical returns data in Sect. 3.2, and to financial news data in Sect. 3.3. Following this, we describe the training process in Sect. 3.4.

3.1 Language Modelling Origins

Inspired by the use of distributional semantics in natural language processing, we propose a model architecture that uses the idea of context companies to train distributed representations of target companies. In linguistics, the distributional hypothesis captures the idea that “a word is characterized by the company it keeps” [10], i.e., words that occur in the same contexts tend to have similar meanings. In language modelling, the context of any given word has quite a natural interpretation as the words immediately before and after it. However, defining context for financial assets is not as intuitive. Before laying out the proposed approach for financial assets, we first give some background on the Word2Vec [18] architecture to frame the discussion.

The distributional hypothesis underpins many modern language models like Word2Vec [18]. The goal of such language models is to construct lower-dimensional, dense representations of words that capture meaningful semantic and syntactic relationships [24]. The typical model architecture is a shallow two-layer neural network in which the embeddings themselves are also the model parameters/weights. The embeddings are randomly initialized, as would be expected for weights in a neural model, and then trained by using context words (the model input) to predict the center/target word (the model output) and back-propagating the loss (see Note 1). The result of these iterative updates is that words which commonly appear in the same contexts will have similar representations in the latent space. Further information about this architecture and training process can be found in [18, 22].

To adapt this modelling framework to non-textual data, we now define context for the two data sources used in this work: financial news and historical stock returns.

3.2 Selecting Context Companies from Historical Returns

To select context companies from historical returns data, we treat companies with similar returns at the same points in time as related. This idea is supported by research showing that companies from the same business sectors tend to exhibit similar stock price fluctuations [11].

Consider a universe of public companies \(U = \{a_1,...,a_{|U|}\}\) and for each company \(a_i\) we have a vector \(\textbf{p}_{a_i}=\{p_0^{a_i},...,p_T^{a_i}\}\) containing its prices at discrete time intervals (daily or weekly for example). From the pricing data, we then compute a returns vector \(\textbf{r}_{a_i}=\{r_1^{a_i},...,r_T^{a_i}\}\) using Eq. 1.
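Equation 1 is not reproduced in this version of the text. The sketch below assumes it is the simple return \(r_t^{a_i} = (p_t^{a_i} - p_{t-1}^{a_i})/p_{t-1}^{a_i}\), which maps the \(T+1\) prices in \(\textbf{p}_{a_i}\) to the \(T\) returns in \(\textbf{r}_{a_i}\). The function name `simple_returns` and the toy prices are hypothetical, and log returns would be an equally plausible reading of Eq. 1.

```python
import numpy as np


def simple_returns(prices: np.ndarray) -> np.ndarray:
    """Map a (num_companies, T+1) price matrix to a (num_companies, T) return matrix.

    Assumption: Eq. 1 is the simple return r_t = (p_t - p_{t-1}) / p_{t-1}.
    For log returns, use np.diff(np.log(prices), axis=1) instead.
    """
    prices = np.asarray(prices, dtype=float)
    return np.diff(prices, axis=1) / prices[:, :-1]


prices = np.array([[100.0, 110.0, 99.0],   # hypothetical company a_1: p_0, p_1, p_2
                   [50.0, 50.0, 55.0]])    # hypothetical company a_2
returns = simple_returns(prices)           # shape (2, 2): r_1, r_2 for each company
```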

We generate target:context sets from these returns vectors. For a context size C, the context companies for target asset \(a_i\) at time t are simply the C companies with the closest returns at that point in time, where closeness is measured by the absolute difference in return for candidate company \(a_j\), \(|r_t^{a_i} - r_t^{a_j}|\). An example of this process is outlined in Fig. 1, with Apple Inc. (AAPL) as the target company and t as January 3rd 2000. We compute the absolute difference between the return of AAPL on that day and the return of each other company on the same day. Then, we choose the C companies with the smallest differences as the context companies, excluding AAPL itself. More generally, we generate a target:context set for every company at each point in time, which results in a total of \(|U|\times T\) sets for training.

An example of a target:context set for \(C=3\) might be [MSFT : IBM, AAPL, ORCL]. This tells us that, at some point in time, the three companies with the closest returns to Microsoft were IBM, Apple Inc. and Oracle.
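The selection rule can be sketched in a few lines of NumPy. The function name `returns_context_sets` and the single day of returns below are hypothetical, chosen so that the output mirrors the MSFT example above; ties between equally close companies are broken by index order here, which the text does not specify.

```python
from typing import List, Tuple

import numpy as np


def returns_context_sets(returns: np.ndarray, tickers: List[str],
                         C: int) -> List[Tuple[str, List[str]]]:
    """Build one target:context set per company per time step.

    returns has shape (num_companies, T); returns[i, t] is r_t for company i.
    The context for target i at time t is the C other companies with the
    smallest absolute return difference |r_t^{a_i} - r_t^{a_j}|.
    """
    num_companies, T = returns.shape
    sets = []
    for t in range(T):
        r_t = returns[:, t]
        for i in range(num_companies):
            diffs = np.abs(r_t - r_t[i])
            diffs[i] = np.inf  # never pick the target as its own context
            nearest = np.argsort(diffs, kind="stable")[:C]
            sets.append((tickers[i], [tickers[j] for j in nearest]))
    return sets  # |U| * T sets in total


tickers = ["MSFT", "IBM", "AAPL", "ORCL", "XOM"]
# Hypothetical returns for a single day (T = 1), one row per company.
day_returns = np.array([[0.020], [0.021], [0.018], [0.024], [-0.030]])
sets = returns_context_sets(day_returns, tickers, C=3)
# sets[0] == ("MSFT", ["IBM", "AAPL", "ORCL"])
```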

Market data is notoriously noisy [5], and daily returns form a bell-shaped distribution around that day’s market average. This means that if the target stock has a return close to the market average on a given day, many of its context stocks are likely to have been selected by chance. To isolate meaningful cases and reduce noise, a context set \(\mathcal {S}(a_i,t)\), with target company \(a_i\) at time t, was deleted from the training data if the target stock return, \(r_t^{a_i}\), was within the interquartile range (IQR) of returns on that day. As a result, only sets in which the target stock moved outside the IQR of market returns on a given day were included in training.
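The IQR filter can be sketched as a per-day boolean mask over the returns matrix. This assumes quartiles are computed with NumPy's default linear interpolation and that a return lying exactly on a quartile counts as inside the IQR (so its set is dropped); the text does not pin down either detail, and the name `keep_outside_iqr` is hypothetical.

```python
import numpy as np


def keep_outside_iqr(returns: np.ndarray) -> np.ndarray:
    """Return a (num_companies, T) mask that is True where the target:context
    set for (company i, day t) is kept, i.e. where r_t^{a_i} lies strictly
    outside that day's cross-sectional interquartile range."""
    q1 = np.percentile(returns, 25, axis=0)  # per-day 25th percentile
    q3 = np.percentile(returns, 75, axis=0)  # per-day 75th percentile
    return (returns < q1) | (returns > q3)


# Hypothetical returns for five companies on a single day (T = 1).
day_returns = np.array([[-0.03], [-0.01], [0.0], [0.01], [0.04]])
mask = keep_outside_iqr(day_returns)
# Only the first and last companies moved outside the day's IQR, so only
# their target:context sets for that day would be kept.
```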

The remaining target:context sets are then passed into the embedding training architecture, which will be described in more detail in Sect. 3.4.

3.3 Selecting Context Companies from News Articles

Unsurprisingly, movements in financial markets and financial news have been found to be intrinsically linked. For example, a positive correlation exists between the number of occurrences of a company in the Financial Times and the transaction volume of that company’s stock both on the day before and the same day as the news is released [1]. Additionally, with the evolution of NLP techniques, the application of language modelling to financial news data for stock market forecasting on a stock-by-stock basis has become a popular area of research in recent years [29].

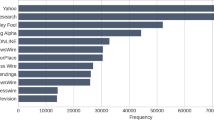

Focusing on individual companies/assets in isolation can mean that important relational information is missed. We hypothesize that companies co-mentioned in the same news articles are likely to be related [28], and that this can be leveraged to improve performance in tasks such as industry classification. As such, we want to learn embeddings in such a way that companies which are commonly mentioned in the same news articles end up with similar latent embeddings under a suitable similarity metric, such as cosine similarity. To do this, we use a corpus of over 100,000 financial news articles spanning 2006–2013 [7], and create target:context sets from every news article in which more than one company is mentioned. Extracting the mentioned companies is simplified by the fact that each company name is followed by its associated stock ticker, as shown in Fig. 2(b).

As an example, if a given news article mentions n companies, then each company will be put in a set as the target company with the remaining \(n-1\) companies listed as context companies in that set. Therefore, an article with n companies will result in n target:context sets for training. These sets are then all passed into the model framework, where the embeddings are trained. This process is shown in Fig. 2(a) and more detail will be given on the embedding training in Sect. 3.4.
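The co-mention rule can be sketched as follows. The ticker markup matched by `TICKER_PATTERN`, e.g. `(NYSE: IBM)`, is a hypothetical format chosen for illustration and not necessarily the one used in the corpus [7]; repeated mentions of the same company are collapsed so that a company never appears in its own context. The function name `news_context_sets` is likewise hypothetical.

```python
import re
from typing import List, Tuple

# Hypothetical markup: a company name followed by "(EXCHANGE: TICKER)".
TICKER_PATTERN = re.compile(r"\((?:NYSE|NASDAQ):\s*([A-Z.]+)\)")


def news_context_sets(article: str) -> List[Tuple[str, List[str]]]:
    """Return one target:context set per distinct company mentioned.

    An article mentioning n companies yields n sets, each with the other
    n - 1 companies as context; fewer than two companies yields none.
    """
    # dict.fromkeys removes duplicate tickers while keeping first-mention order.
    companies = list(dict.fromkeys(TICKER_PATTERN.findall(article)))
    if len(companies) < 2:
        return []
    return [(target, [c for c in companies if c != target]) for target in companies]


article = ("Shares of Intel (NASDAQ: INTC) rose after Apple (NASDAQ: AAPL) "
           "and IBM (NYSE: IBM) reported results.")
sets = news_context_sets(article)
# n = 3 companies -> 3 sets, e.g. ("INTC", ["AAPL", "IBM"]).
```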

3.4 The Training Process

The aforementioned shallow two-layer neural network architecture is illustrated in Fig. 3. As previously mentioned, the model is designed so that the company embeddings are the model parameters: each row in the weight matrix \(\textbf{W}\) is a company embedding. In this section, we explain each step of this framework in detail and why the resulting embeddings capture the relationships of interest. Throughout this section, it is worth keeping in mind that two of these models are used, one per data modality, each learning its own set of company embeddings. The two independent embeddings are then concatenated to form the multimodal company embeddings.

The first step is to compute the hidden layer, which is simply an element-wise average of the context stock embeddings. To be more precise, the input to the model is a one-hot encoded version of the context set and so consists of C one-hot vectors \(\{\textbf{x}_1,\textbf{x}_2, ... , \textbf{x}_C\}\), one for each context stock. These vectors are used to extract the embeddings corresponding to the C context stocks. For example, computing \(\textbf{W}^T\cdot \textbf{x}_1\) extracts a single row from \(\textbf{W}\), namely the embedding corresponding to the first context stock. The hidden layer, \(\boldsymbol{h}\), is a simple element-wise average of the extracted embeddings, and is formulated in Eq. 2. The use of a relatively simple average here is by design: the mean is agnostic to the number of inputs and so allows flexible context sizes during training.
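Written out, the averaging step described above takes the following form. This is a reconstruction from the prose, assuming Eq. 2 follows the standard CBOW hidden layer [18, 22], with \(\textbf{W}\) of size \(|U| \times N\) so that each \(\textbf{W}^T\cdot\textbf{x}_c\) is one N-dimensional company embedding.

```latex
\boldsymbol{h} = \frac{1}{C}\,\textbf{W}^T\left(\textbf{x}_1 + \textbf{x}_2 + \cdots + \textbf{x}_C\right)
             = \frac{1}{C}\sum_{c=1}^{C} \textbf{W}^T \cdot \textbf{x}_c
```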

Thus, the hidden layer, \(\boldsymbol{h}\), is an N-dimensional vector and can be thought of as an aggregate embedding representation of the context stocks, where N is the embedding dimensionality. The next step is to estimate the probability of the target company given \(\boldsymbol{h}\) by applying Eq. 3.

Because of the softmax activation, the output is a posterior probability distribution expressing the probability of each stock in the universe being the target stock, given the observed context stocks. Since the dot product is a measure of similarity between vectors, the model assigns higher probability to stocks whose embeddings are similar to the hidden layer \(\boldsymbol{h}\). In this way, when we apply back-propagation, stocks that commonly co-occur in target:context sets end up closer in the embedding space. As a result, assuming our hypotheses are correct, the embeddings will capture nuanced relationships present in the historical returns data and in financial news co-occurrence. Note that the ground truth, y in Fig. 3, is a one-hot vector indicating the true target stock.
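To make the training loop concrete, here is a minimal NumPy sketch of a CBOW-style update on single target:context sets. It assumes a separate output matrix `W_out` and plain stochastic gradient descent, as in the standard Word2Vec CBOW derivation [22]; the exact form of Eq. 3, the optimizer, the learning rate and the initialization used in the paper are not stated here, so the class `CompanyCBOW`, its parameters and the toy training sets are illustrative assumptions.

```python
import numpy as np


class CompanyCBOW:
    """Minimal CBOW-style model over a universe of companies.

    Assumption: a separate output matrix W_out scores every company, as in
    standard Word2Vec CBOW; the rows of W are the company embeddings kept.
    """

    def __init__(self, num_companies: int, dim: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        # Random initialization, as for any neural network weights.
        self.W = rng.normal(scale=0.1, size=(num_companies, dim))
        self.W_out = rng.normal(scale=0.1, size=(num_companies, dim))

    def forward(self, context_ids):
        # Hidden layer: element-wise average of the context embeddings (Eq. 2).
        h = self.W[context_ids].mean(axis=0)
        # Dot-product score of h against every company's output vector.
        scores = self.W_out @ h
        # Softmax, shifted by the max score for numerical stability.
        p = np.exp(scores - scores.max())
        return h, p / p.sum()

    def train_step(self, context_ids, target_id, lr=0.1):
        h, p = self.forward(context_ids)
        loss = -np.log(p[target_id])  # cross-entropy against the one-hot y
        err = p.copy()
        err[target_id] -= 1.0  # gradient of the loss w.r.t. the scores: p - y
        grad_h = self.W_out.T @ err  # computed before W_out is modified
        self.W_out -= lr * np.outer(err, h)
        # Each context embedding receives 1/C of the hidden-layer gradient;
        # np.add.at accumulates correctly even if a context id repeats.
        np.add.at(self.W, context_ids, -lr * grad_h / len(context_ids))
        return float(loss)


# Hypothetical toy universe: 0=MSFT, 1=IBM, 2=AAPL, 3=ORCL, 4=XOM.
training_sets = [([1, 2, 3], 0), ([0, 2, 3], 1), ([0, 1, 3], 2), ([0, 1, 2], 3)]
model = CompanyCBOW(num_companies=5, dim=4)
first_epoch = sum(model.train_step(ctx, tgt) for ctx, tgt in training_sets)
for _ in range(300):
    last_epoch = sum(model.train_step(ctx, tgt) for ctx, tgt in training_sets)
embeddings = model.W  # one 4-dimensional embedding per company
```

Only the rows of `W` are kept as company embeddings; in the multimodal setup, one such model would be trained per data modality.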

4 Evaluation

In this section, we present several case studies, followed by an evaluation on the task of industry classification.

4.1 Datasets

For this analysis, we use publicly available daily historical pricing data and over 100,000 financial news articles [7], both spanning 2006–2013. The pricing data includes two levels of industry labels for each stock: a high-level sector label, such as Finance or Technology, and a finer-grained industry label, such as Major Bank or Semiconductors. The companies included in the analysis were selected using the following inclusion criteria. Firstly, the company had to be publicly traded and have complete pricing data over the period in question. Secondly, we limited the dataset to companies mentioned in at least 50 news articles to ensure there was sufficient data for training. Additionally, the available pricing data contained only companies listed on the NYSE and NASDAQ exchanges, so the dataset is also limited to these. After enforcing these criteria, we are left with 118 companies across seven industry sectors: Capital Goods, Consumer Non-Durables, Consumer Services, Energy, Finance, Health Care and Technology.

4.2 Company Knowledge Graph

Visualizing latent embeddings in two-dimensional space can often be a useful way of identifying relationships and clustering behavior. Figure 4 shows three knowledge graphs, where each node represents a company and nodes are colored by their industry sector label, with seven industries in total. Each graph is derived from different embeddings: one from the historical return embeddings, one from the financial news-based embeddings and one constructed from a concatenation of both embeddings. It is worth noting that the dimensionality of both types of embeddings, a hyperparameter, was chosen to be 20. As a result, the concatenated multimodal embeddings are 40-dimensional.
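The concatenation step amounts to a column-wise join of the two embedding matrices. In this sketch the random matrices are placeholders for trained embeddings, and the requirement that row i refers to the same company in both matrices is an assumption the real pipeline must enforce; the text does not say whether either part is normalized before concatenation, so none is applied.

```python
import numpy as np

rng = np.random.default_rng(0)
num_companies, dim = 118, 20  # 118 companies, 20 dimensions per modality

# Placeholders for the two separately trained embedding matrices; row i of
# each matrix must refer to the same company.
returns_emb = rng.normal(size=(num_companies, dim))
news_emb = rng.normal(size=(num_companies, dim))

# Concatenate along the feature axis: 20 + 20 = 40 dimensions per company.
multimodal_emb = np.concatenate([returns_emb, news_emb], axis=1)
```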

To convert embeddings into knowledge graphs, we first compute a similarity matrix using the cosine similarity between company embeddings. Two companies are then connected by an edge if their similarity is above a certain threshold. The similarity threshold was chosen as 0.6, which resulted in approximately 5% of all possible edges being active. The plots are generated using a force-directed graph drawing algorithm in Gephi.
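The graph construction can be sketched as a thresholded cosine-similarity matrix. The toy two-dimensional embeddings, tickers and the helper names below are hypothetical; the default threshold of 0.6 follows the text, and `density` corresponds to the share of possible edges that are active (roughly 5% in the paper's setting).

```python
import numpy as np


def cosine_similarity_matrix(emb: np.ndarray) -> np.ndarray:
    unit = emb / np.linalg.norm(emb, axis=1, keepdims=True)
    return unit @ unit.T


def similarity_graph_edges(emb, tickers, threshold=0.6):
    """Return undirected edges (a, b, similarity) for every pair of companies
    whose cosine similarity exceeds the threshold, plus the edge density."""
    sim = cosine_similarity_matrix(emb)
    n = len(tickers)
    iu, ju = np.triu_indices(n, k=1)  # each unordered pair once, no self-loops
    mask = sim[iu, ju] > threshold
    edges = [(tickers[i], tickers[j], float(sim[i, j]))
             for i, j in zip(iu[mask], ju[mask])]
    density = mask.sum() / len(iu)  # fraction of possible edges that are active
    return edges, density


tickers = ["JPM", "BAC", "INTC", "AMD"]
emb = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
edges, density = similarity_graph_edges(emb, tickers)
# Two edges, JPM-BAC and INTC-AMD, out of six possible pairs.
```

The resulting edge list could then be exported, for example as a CSV edge table, for layout in Gephi.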

In each of the graphs in Fig. 4, we observe clear clustering of companies into industry sectors. Within each graph, edges tend to connect nodes of the same industry sector, though there are some exceptions, which are discussed further in Sect. 4.4. This indicates that the proposed training framework learns embeddings that capture relationships between companies for both data modalities. Whether using returns data or news co-occurrence, this is a very positive result, because it suggests that important sectoral information can be reconstructed from the embeddings, and likely in a way that is more nuanced and objective than is possible using simple sectoral labels.

Comparing the three knowledge graphs, the graph constructed from the combined embeddings appears to cluster the companies into industry sectors best. This indicates a benefit in combining the embeddings from both data modalities, which is explored in further evaluations.

4.3 Identifying Related Companies

This first case study uses the learned embeddings to identify related companies through a nearest neighbors analysis, a natural first step for sanity-checking the latent space representations. We would expect companies with very high similarity in the latent space to be related in some way. To find the k-nearest neighbors (kNN) of a given query company, we must first define what we mean by nearest. We implement kNN using cosine similarity as the similarity metric; a similar pattern of related companies results if we use Euclidean distance or dot product similarity instead.
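A cosine-similarity kNN query over the learned embeddings might look like this. The helper name `nearest_neighbors` and the toy two-dimensional embeddings are hypothetical; in practice `emb` would be the 40-dimensional multimodal embeddings.

```python
import numpy as np


def nearest_neighbors(emb, tickers, query, k=3):
    """Return the k companies most cosine-similar to `query`, best first."""
    unit = emb / np.linalg.norm(emb, axis=1, keepdims=True)
    q = tickers.index(query)
    sims = unit @ unit[q]
    sims[q] = -np.inf  # exclude the query company itself
    order = np.argsort(-sims, kind="stable")[:k]
    return [(tickers[i], float(sims[i])) for i in order]


tickers = ["JPM", "BAC", "INTC", "AMD"]
emb = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
neighbors = nearest_neighbors(emb, tickers, "JPM")
# Ranked list: BAC first, then AMD, then INTC.
```

Unlike a sector label, the returned list is ranked, which is the property the recommendation use case below relies on.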

Table 1 shows the top-3 (\(k=3\)) nearest neighbors for JPMorgan Chase, Intel Corporation, and Walmart, three well-known companies in very different sectors. In each case, the nearest neighbors pass the “sanity test” in that they belong to similar industry sectors, and in many cases they also agree on the finer-grained classification labelled “Industry 2” in Table 1. For example, the three nearest neighbors of JPMorgan Chase, a major bank, are also all major banks. Note that no sectoral or industry information was used in determining these nearest neighbors; only daily returns and co-occurrence in news articles were used to generate the distributed representations used for similarity assessment.

There is considerable scope for the use of nearest neighbor companies by investors. Firstly, we can develop a company recommendation system which, when given a target company (a novel company for the investor or one already in their portfolio), generates a ranked list of similar companies based on their historical returns data and appearance in financial news. A system like this addresses a major pitfall of classic industry classification schemes, which offer no rank ordering. This could have a variety of use cases: investors and fund managers could consult this ranked list when conducting comparable company analysis or looking for alternative investment opportunities; sales representatives could use it to recommend complementary investment opportunities to clients; investors could devise a tax-loss harvesting strategy [24]; and asset managers could be assisted in the construction of market sector ETFs.

4.4 Analyzing High Similarity Mismatches

Though the vast majority of edges in Fig. 4 occur between nodes from the same industry, this is not true in all cases. In other words, there are some instances where two companies have a high embedding similarity but their industry sector labels do not match. Does this highlight a flaw in the embeddings, where companies achieve very high embedding similarity when they should not? To answer this, we provide some examples of these high similarity mismatches. We consider pairs of companies that have a high cosine similarity between embeddings and are members of different industry sectors. A number of examples are shown in Table 2.

The first example is that of General Electric, classified in the Energy (Consumer Electronics) sector, and Boeing, classified in the Capital Goods (Aerospace) sector. These two companies receive a high multimodal embedding similarity of 0.8 despite being classified in different industry sectors. However, on closer inspection, one of General Electric’s main business areas is the manufacturing of aircraft engines, with Boeing being one of its largest customers. As a result, General Electric is commonly mentioned in news articles alongside Boeing and other aerospace companies, which results in the high embedding similarity.

Another example is that of Johnson & Johnson and Colgate-Palmolive, classified as Health Care (Major Pharma) and Consumer Non-Durables (Cosmetics), respectively. These two companies again have a high embedding similarity but have been classified into different industry sectors. The relationship could be explained by the large presence both companies have in the consumer healthcare market, resulting in exposure to similar idiosyncratic risk factors and the resulting headwinds.

The final example in Table 2 is Honeywell and 3M. Again, they have relatively high similarity in their multimodal company embeddings, despite being classified in different industry sectors. The two companies are both multinational conglomerates operating in similar business sectors. In addition to this, both are constituents of three of the most popular indexes (Dow Jones Industrial Average, S&P 500 and S&P 100), and index inclusion has been shown to lead to more frequent news mentions and greater co-movement in returns [4].

Through these examples, we can see that current industry classification schemes will often segment quite similar companies into different industry sectors. The line is not always clear and, despite the increased usage of industry classification schemes for choosing investments, many have not embraced the era of big data and fail to utilize the countless data points being generated each day. Instead, the company allocation procedure remains a largely subjective task [20].

4.5 Using Multimodal Embeddings for Industry Classification

Using the embeddings generated by the proposed training framework, we can train a classification model to segment companies into business sectors in an objective manner. To do this, we train a support vector classifier [21] with the embeddings as input and the industry sector label as output. We use k-fold cross-validation with \(k=4\) and account for class imbalance in the data using SMOTE.
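The text does not say whether SMOTE is applied before or inside the cross-validation loop. The sketch below assumes the common leakage-avoiding choice of oversampling only the training folds, and implements SMOTE (interpolating between a minority-class sample and one of its k nearest same-class neighbors) and stratified 4-fold splitting directly in NumPy to stay self-contained. Fitting the support vector classifier itself, e.g. with scikit-learn's `sklearn.svm.SVC`, is left as a comment; the labels, data and helper names `smote` and `stratified_folds` are hypothetical.

```python
import numpy as np


def smote(X, y, k=5, seed=0):
    """Oversample each minority class up to the majority-class count by
    interpolating between a sample and one of its k nearest same-class neighbors."""
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(y, return_counts=True)
    n_max = counts.max()
    X_parts, y_parts = [X], [y]
    for cls, count in zip(classes, counts):
        n_new = n_max - count
        if n_new == 0:
            continue
        Xc = X[y == cls]
        if len(Xc) < 2:
            raise ValueError("SMOTE needs at least two samples per class")
        # Pairwise Euclidean distances within the class; no sample is its own neighbor.
        dist = np.linalg.norm(Xc[:, None, :] - Xc[None, :, :], axis=2)
        np.fill_diagonal(dist, np.inf)
        k_eff = min(k, len(Xc) - 1)
        neighbors = np.argsort(dist, axis=1)[:, :k_eff]
        base = rng.integers(0, len(Xc), size=n_new)               # random seed samples
        nb = neighbors[base, rng.integers(0, k_eff, size=n_new)]  # random neighbor of each
        gap = rng.random((n_new, 1))                               # interpolation factor in [0, 1)
        X_parts.append(Xc[base] + gap * (Xc[nb] - Xc[base]))
        y_parts.append(np.full(n_new, cls))
    return np.vstack(X_parts), np.concatenate(y_parts)


def stratified_folds(y, n_folds=4, seed=0):
    """Yield (train_idx, test_idx) pairs with each class spread evenly across folds."""
    rng = np.random.default_rng(seed)
    fold_of = np.empty(len(y), dtype=int)
    for cls in np.unique(y):
        idx = rng.permutation(np.flatnonzero(y == cls))
        fold_of[idx] = np.arange(len(idx)) % n_folds
    for f in range(n_folds):
        yield np.flatnonzero(fold_of != f), np.flatnonzero(fold_of == f)


rng = np.random.default_rng(1)
y = np.repeat([0, 1, 2], [20, 8, 6])  # hypothetical imbalanced sector labels
X = rng.normal(size=(len(y), 40))      # stand-in for 40-dim multimodal embeddings
for train_idx, test_idx in stratified_folds(y, n_folds=4):
    # Oversample the training fold only, so no synthetic points reach the test fold.
    X_train, y_train = smote(X[train_idx], y[train_idx])
    # Fit a support vector classifier on (X_train, y_train) here, e.g.
    # sklearn.svm.SVC, and score it on (X[test_idx], y[test_idx]).
```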

There are a number of considerations that will undoubtedly limit the accuracy of the classification model. Firstly, the embeddings are derived solely from historical returns data and financial news, both of which are influenced by a complex network of unpredictable factors. Secondly, as we saw in Sect. 4.4 when looking at high similarity mismatches, a number of companies have subjective ground truth labels and could plausibly be placed in more than one industry category. As a result, industry classification in the financial domain is a challenging problem.

Despite these hurdles, we see 90% agreement with the traditional sector labels when using the pipeline in Fig. 5 to classify companies into industry sectors. In an ablation study (Table 3), the news-only embeddings reach 85% agreement, which is also above the 72% baseline obtained with the returns-only embeddings.

5 Conclusion

This work has focused on leveraging multiple sources of data to tackle the industry classification problem using machine learning. We proposed an approach for learning dense vector representations of companies that capture nuanced and interesting relationships between companies. The potential utility of these embeddings to financial analysts was discussed in relation to a number of tasks, and the evaluation results speak to the potential benefits of our approach and provide a useful starting point for further exploration and development. From examples in Sect. 4.3, we saw that the embeddings trained on each modality picked up on interesting relationships of different types. The benefits of the multimodal approach were also highlighted in the results of the industry classification model in Sect. 4.5, where combining modalities increased overall accuracy and F1 score.

In future work, we plan to adapt the proposed framework to generate multimodal embeddings optimized to capture dissimilarity, in addition to similarity, which is an important tool for effective portfolio optimization. We believe that these embeddings have the potential to be useful in the asset management space by informing successful diversification and risk management strategies. In addition, we plan to utilize other sources of data and introduce sentiment-aware context company selection to assess the impact on performance.

Notes

1. There are actually two Word2Vec architectures, CBOW and Skip-Gram; we focus on CBOW and do not describe Skip-Gram here.

References

Alanyali, M., Moat, H.S., Preis, T.: Quantifying the relationship between financial news and the stock market. Sci. Rep. 3(1), 1–6 (2013)

Ang, G., Lim, E.P.: Learning knowledge-enriched company embeddings for investment management. In: Proceedings of the Second ACM ICAIF, pp. 1–9 (2021)

Bachelier, L.: Théorie de la spéculation. In: Annales scientifiques de l’École normale supérieure, vol. 17, pp. 21–86 (1900)

Barberis, N., Shleifer, A., Wurgler, J.: Comovement. J. Financ. Econ. 75(2), 283–317 (2005)

De Long, J.B., Shleifer, A., Summers, L.H., Waldmann, R.J.: Noise trader risk in financial markets. J. Polit. Econ. 98(4), 703–738 (1990)

Devlin, J., et al.: BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805 (2018)

Ding, X., et al.: Using structured events to predict stock price movement: an empirical investigation. In: Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), pp. 1415–1425 (2014)

Dolphin, R., Smyth, B., Dong, R.: Stock embeddings: Learning distributed representations for financial assets. arXiv preprint arXiv:2202.08968 (2022)

Fama, E.F.: The behavior of stock-market prices. J. Bus. 38(1), 34–105 (1965)

Firth, J.R.: A synopsis of linguistic theory, 1930–1955. Studies in linguistic analysis (1957)

Gopikrishnan, P., Rosenow, B., Plerou, V., Stanley, H.E.: Identifying business sectors from stock price fluctuations. arXiv preprint cond-mat/0011145 (2000)

Guenther, D.A., Rosman, A.J.: Differences between COMPUSTAT and CRSP SIC codes and related effects on research. J. Account. Econ. 18(1), 115–128 (1994)

Ito, T., et al.: Learning company embeddings from annual reports for fine-grained industry characterization. In: Proceedings of the Second Workshop on Financial Technology and Natural Language Processing, Kyoto, Japan (2020)

Kahle, K.M., Walkling, R.A.: The impact of industry classifications on financial research. J. Financ. Quant. Anal. 31(3), 309–335 (1996)

Kim, D., Kang, H.G., Bae, K., Jeon, S.: An artificial intelligence-enabled industry classification and its interpretation. Internet Res. 32(2), 406–424 (2021)

Li, W., et al.: Modeling the stock relation with graph network for overnight stock movement prediction. In: Proceedings of the Twenty-Ninth IJCAI (2021)

Malkiel, B.G., Fama, E.F.: Efficient capital markets: a review of theory and empirical work. J. Finance 25(2), 383–417 (1970)

Mikolov, T., Chen, K., Corrado, G., Dean, J.: Efficient estimation of word representations in vector space. arXiv preprint arXiv:1301.3781 (2013)

Parker, R.: Restoring the enterprise statistics program (ESP) for the 2012 economic census. Reports of the Census Bureau (2012)

Phillips, R.L., Ormsby, R.: Industry classification schemes: an analysis and review. J. Bus. Finance Librarianship 21(1), 1–25 (2016)

Platt, J., et al.: Probabilistic outputs for support vector machines and comparisons to regularized likelihood methods. Adv. Large Margin Classifiers 10(3), 61–74 (1999)

Rong, X.: word2vec parameter learning explained. arXiv preprint arXiv:1411.2738 (2014)

Sarmah, B., Nair, N., Mehta, D., Pasquali, S.: Learning embedded representation of the stock correlation matrix using graph machine learning. arXiv preprint arXiv:2207.07183 (2022)

Satone, V., Desai, D., Mehta, D.: Fund2vec: mutual funds similarity using graph learning. In: Proceedings of the Second ACM ICAIF, pp. 1–8 (2021)

Vachhani, H., et al.: Machine learning based stock market analysis: a short survey. In: Raj, J.S., Bashar, A., Ramson, S.R.J. (eds.) ICIDCA 2019. LNDECT, vol. 46, pp. 12–26. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-38040-3_2

Wan, X., et al.: Sentiment correlation in financial news networks and associated market movements. Sci. Rep. 11(1), 1–12 (2021)

Weiner, C.: The impact of industry classification schemes on financial research. Available at SSRN 871173 (2005)

Wu, Q., et al.: Equity2vec: end-to-end deep learning framework for cross-sectional asset pricing. In: Proceedings of the Second ACM ICAIF (2021)

Xing, F.Z., Cambria, E., Welsch, R.E.: Natural language based financial forecasting: a survey. Artif. Intell. Rev. 50(1), 49–73 (2018)

Acknowledgements

This publication has emanated from research conducted with the financial support of Science Foundation Ireland under Grant number 18/CRT/6183.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this paper

Cite this paper

Dolphin, R., Smyth, B., Dong, R. (2023). A Machine Learning Approach to Industry Classification in Financial Markets. In: Longo, L., O’Reilly, R. (eds) Artificial Intelligence and Cognitive Science. AICS 2022. Communications in Computer and Information Science, vol 1662. Springer, Cham. https://doi.org/10.1007/978-3-031-26438-2_7

DOI: https://doi.org/10.1007/978-3-031-26438-2_7

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-26437-5

Online ISBN: 978-3-031-26438-2

eBook Packages: Computer Science, Computer Science (R0)