Abstract

Generative models are becoming popular for the synthesis of medical images. Recently, neural diffusion models have demonstrated the potential to generate photo-realistic images of objects. However, their potential to generate medical images has not yet been explored. We explore the possibilities of synthesizing medical images using neural diffusion models. First, we use a pre-trained DALLE2 model to generate lung X-Ray and CT images from an input text prompt. Second, we train a stable diffusion model with 3165 X-Ray images and generate synthetic images. We evaluate the synthetic image data through a qualitative analysis in which two independent radiologists label randomly chosen samples from the generated data as real, fake, or unsure. Results demonstrate that images generated with the diffusion model can reproduce characteristics that are otherwise very specific to certain medical conditions in chest X-Ray or CT images. With careful tuning, the model can be very promising. To the best of our knowledge, this is the first attempt to generate lung X-Ray and CT images using neural diffusion models. This work aims to introduce a new dimension in artificial intelligence for medical imaging. Given that this is a new topic, the paper will serve as an introduction and motivation for the research community to explore the potential of diffusion models for medical image synthesis. We have released the synthetic images at https://www.kaggle.com/datasets/hazrat/awesomelungs.

1 Introduction

During the last decade, there has been a surge in studies on generative models for medical image synthesis [1, 2]. Generative Adversarial Networks (GANs) and deep autoencoders are two primary examples of deep generative models that have shown remarkable advancements in synthesis, denoising, and super-resolution of medical images [1, 3]. Many studies have shown the great potential of GANs to generate realistic magnetic resonance imaging (MRI), Computed Tomography (CT), or X-Ray images that can help in training artificial intelligence (AI) models [1, 4, 5, 6]. With the recent success of neural diffusion models for the synthesis of natural images [8, 9], there is now an increasing interest in exploring the potential of neural diffusion models to generate medical images. For generating natural images such as artwork and objects, models such as DALLE2, Midjourney, and Stable Diffusion have pushed the state of the art. Amongst the three, only the latter is available with open-source code. Compared to GANs, diffusion models are becoming popular for their training stability.

Fig. 1. Forward pass and reverse pass in diffusion model training. Figure modified from [7].

A diffusion model, in simple words, is a parameterized Markov chain trained using variational inference. The transition is learned through a diffusion process that adds noise to the data. In principle, the diffusion model transforms the input data into noisy data by adding Gaussian noise and then recovers the data distribution by reversing the noise. Once the model learns the distribution, it can generate useful data from random noise input. So, diffusion models transform a latent encoded representation into a more meaningful representation of image data. In this context, diffusion models can be compared to denoising autoencoders. As shown in Fig. 1, the overall process can be summarized as two steps: the forward pass, i.e., the transformation of the data distribution to noise (\(X_i\) to \(X_T\)), and the reverse pass, i.e., reversing the noise distribution to the data distribution (\(X_T\) to \(X_i\)). Training a diffusion model means learning the reverse process, i.e., \(p(x_{t-1} | x_t)\). The diffusion model can be implemented by using a neural network for the forward and reverse training steps. However, the architecture must have the same input and output dimensions.
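As an illustration of the forward pass described above, the following is a minimal sketch, not the implementation used in this work. It assumes the linear noise schedule and the closed-form expression \(q(x_t | x_0) = \mathcal{N}(\sqrt{\bar{\alpha}_t}\, x_0, (1-\bar{\alpha}_t) I)\) from [7]. The function names, the schedule endpoints, and the toy input `x0` (standing for the clean data at the start of the forward pass) are assumptions made for this example only.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances beta_1..beta_T (endpoints assumed, following [7])."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t: running product of (1 - beta_s) for all s up to t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def forward_sample(x0, t, alpha_bars, rng):
    """Draw x_t directly from the clean data x0 using the closed form of the forward pass."""
    signal = math.sqrt(alpha_bars[t])
    noise = math.sqrt(1.0 - alpha_bars[t])
    return [signal * v + noise * rng.gauss(0.0, 1.0) for v in x0]


rng = random.Random(0)            # fixed seed so the sketch is repeatable
betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)

x0 = [1.0] * 4096                 # hypothetical toy "image": flattened constant pixels
x_early = forward_sample(x0, 0, alpha_bars, rng)    # almost unchanged data
x_late = forward_sample(x0, 999, alpha_bars, rng)   # close to pure Gaussian noise
```

At the first step the sample is nearly identical to the data, while at the last step almost no signal remains. The reverse pass would be a learned network that undoes these steps one at a time; it is omitted here.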

While the generative ability of diffusion models was previously used mostly for unconditional generation of data, more recent attempts have shown conditioned generation by introducing guided-diffusion models [8, 9, 10]. These works have demonstrated the generation of photo-realistic images guided by the context of the input text or image. The existing use cases of diffusion models comprise text-to-image applications, i.e., generating images according to a given text prompt. In addition, Han et al. [11] presented a classification and regression diffusion model (CARD) and demonstrated the use of the diffusion model for classification as well as regression tasks. In CARD, the authors approached the task of supervised learning using generative modeling conditioned on the class labels. Though the objective was not to claim state-of-the-art results, the method has shown promising results on the benchmark dataset. For CIFAR-10 classification, the model reached an accuracy of 90.9%.

Given the potential of diffusion models to learn the representation, one can expect them to be able to generate a diverse set of medical images. Furthermore, they can add a new dimension to existing approaches for medical image applications, such as noise adaptation, noise removal, super-resolution, domain-to-domain translation, and data augmentation. To the best of our knowledge, no work other than the recent pre-print [12] currently exists on the synthesis of medical images using neural diffusion models. Pinaya et al. [12] used latent diffusion models to generate T1w MRI images of the brain. Using 31,740 brain MRI images from the UK Biobank, they generated a stack of 100,000 images conditioned on key variables such as age, sex, and brain volume. In this work, we explore neural diffusion models to generate synthetic images of lung CT and X-Ray. We use the DALLE2 model and the stable diffusion model to generate the images and present them to two radiologists for their feedback. We then summarize the feedback received from the radiologists and identify some of the challenges in using the neural diffusion model for medical image synthesis.

The remainder of the paper is organized as follows: Sect. 2 explains the methodology of our work. Section 3 presents the results of generating lung CT and X-Ray images, while Sect. 4 provides insights into the results and also highlights the limitations of the approach. Finally, Sect. 5 concludes the paper.

2 Methodology

In this work, we devised two experiments for generating synthetic lung X-Ray and CT images. In the first experiment, we used the OpenAI DALLE2 API to generate images based on the input text. The DALLE2 model recently gained much attention for its ability to generate photo-realistic images of objects given a certain input text. Using the API, we generated multiple lung CT and X-Ray images. We then presented a randomly selected set of the generated images to two trained radiologists. We asked the radiologists to perform two tasks. First, we asked them to label each image as real, fake, or unsure, as per their perceived understanding. Second, we asked them to provide a brief description of the possible information related to lung condition or diagnosis of disease (for example, normal lungs, severely damaged lungs, pneumonia-affected lungs, etc.). The radiologists did not have prior information on the labels of the images. In fact, all the images that we presented to the radiologists were synthetic. The radiologists did not know each other and performed the tasks independently. Of the two radiologists, one had prior knowledge of artificial intelligence and generative models, while the other was naïve to deep generative models.

In the second experiment, we used the stable diffusion model [13]. We trained the model using 3165 X-Ray images from [14]. We resized the images to a resolution of 256 by 256 pixels. No other pre-processing was done. Training was run on a server equipped with an NVIDIA Quadro RTX 8000 GPU with 48 GB of memory. We set the batch size to 32 and ran the training for 700,000 training steps.
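To put these settings in perspective, the short calculation below works out how many passes over the dataset the reported configuration implies. It is a back-of-the-envelope sketch only. The dataset size, batch size, and step count are taken from the text above; the rate of roughly one day per 100k steps is the approximate figure given in Sect. 4.1, and applying it uniformly to the whole run is an assumption.

```python
import math

NUM_IMAGES = 3165            # X-Ray images used for training
BATCH_SIZE = 32
TRAIN_STEPS = 700_000
DAYS_PER_100K_STEPS = 1.0    # approximate rate from Sect. 4.1 (assumed constant)

# Total images drawn during training and the number of dataset passes this implies.
samples_seen = BATCH_SIZE * TRAIN_STEPS
epochs = samples_seen / NUM_IMAGES

# Steps needed for one full pass, counting the final partial batch.
steps_per_epoch = math.ceil(NUM_IMAGES / BATCH_SIZE)

# Rough wall-clock estimate under the assumed constant rate.
estimated_days = TRAIN_STEPS / 100_000 * DAYS_PER_100K_STEPS

print(f"samples seen:    {samples_seen:,}")
print(f"epochs:          {epochs:.1f}")
print(f"steps per epoch: {steps_per_epoch}")
print(f"estimated days:  {estimated_days:.1f}")
```

Under these assumptions each image is revisited several thousand times, which is worth keeping in mind when judging how much of the training data the model may have memorized.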

3 Results

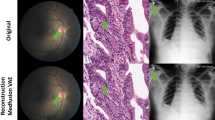

Using the DALLE2 API, we generated a total of 150 images. We have uploaded the synthetic images to Kaggle. We believe the number of generated images is only limited by the tokens available to us. Sample X-Ray and CT images are shown in Fig. 2 and Fig. 3, respectively. Out of 40 images that we presented to the radiologists, radiologist \(\mathcal{A}\) identified 14 X-Ray images and three CT images as real, and four X-Ray and 17 CT images as fake. Radiologist \(\mathcal{A}\) labeled two X-Ray images as unsure. The second radiologist (radiologist \(\mathcal{B}\)) identified ten X-Ray images and only two CT images as real, and all the remaining images as fake.
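The counts above can be turned into per-modality rates with a few lines of code. The sketch below is for illustration only. It assumes the 40 evaluated images were split evenly into 20 X-Ray and 20 CT images, which matches the totals reported for radiologist \(\mathcal{A}\); the fake counts for radiologist \(\mathcal{B}\) are derived from the statement that all remaining images were labeled fake. The dictionary layout and the helper `real_rate` are hypothetical.

```python
# Label counts as reported in the text. Entries for radiologist B's "fake"
# labels are derived (20 images per modality minus those labeled real).
counts = {
    "A": {
        "X-Ray": {"real": 14, "fake": 4, "unsure": 2},
        "CT": {"real": 3, "fake": 17, "unsure": 0},
    },
    "B": {
        "X-Ray": {"real": 10, "fake": 10, "unsure": 0},
        "CT": {"real": 2, "fake": 18, "unsure": 0},
    },
}


def real_rate(label_counts):
    """Fraction of synthetic images that a radiologist took to be real."""
    total = sum(label_counts.values())
    return label_counts["real"] / total


for radiologist, by_modality in counts.items():
    for modality, label_counts in by_modality.items():
        rate = real_rate(label_counts)
        print(f"Radiologist {radiologist}, {modality}: {rate:.0%} labeled real")
```

Since every presented image was synthetic, a higher rate means the generated images were more convincing for that modality.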

Agreement between radiologists: Of the 20 CT images, only three were labeled as real by both radiologists. Similarly, five X-Ray images were marked as real by both radiologists. There were two X-Ray and two CT images for which both radiologists were uncertain.
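Agreement of this kind can be computed directly from per-image labels. The sketch below shows one simple way to do so. The per-image labels of this study are not listed in the paper, so the two label lists in the example are purely hypothetical toy data, and the function `agreement_summary` is an illustrative helper rather than the procedure used here.

```python
from collections import Counter


def agreement_summary(labels_a, labels_b):
    """Count the images on which two raters gave the same label, per label and overall."""
    if len(labels_a) != len(labels_b):
        raise ValueError("both raters must label the same set of images")
    if not labels_a:
        raise ValueError("at least one labeled image is required")
    agreed = Counter(a for a, b in zip(labels_a, labels_b) if a == b)
    return {
        "agreed_by_label": dict(agreed),
        "percent_agreement": sum(agreed.values()) / len(labels_a),
    }


# Hypothetical labels for six images, NOT the study data.
toy_a = ["real", "fake", "fake", "unsure", "real", "fake"]
toy_b = ["real", "fake", "real", "unsure", "fake", "fake"]

summary = agreement_summary(toy_a, toy_b)
print(summary["agreed_by_label"])
print(f"{summary['percent_agreement']:.2f}")
```

Raw percent agreement does not correct for agreement expected by chance, so it should be read as a descriptive summary only.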

For task 2, where we asked the radiologists to provide a brief description of what the images may reveal, the radiologists made some interesting observations. Some of these descriptions are listed in Table 1. They reveal that some of the images carried representations similar to real X-Ray or CT images, and that the model was able to generate features that are specific to lung conditions.

4 Discussion

Many of the generated images from the pre-trained model clearly lacked the characteristics of realistic images and were quickly identified by the radiologists as fake. These images were described as having an unusual rib appearance, a strange clavicle appearance, or unusual exposure. Similarly, it was easy to spot big-vessel contours and lung fields that appeared to have been drawn rather than imaged. One key observation for fake images was that the trachea was visible behind the heart shadow, which does not happen in real X-Ray imaging. A few sample images that were labeled fake by both radiologists are shown in Fig. 4. The evaluation by radiologist \(\mathcal{A}\) is summarized in Fig. 5.

4.1 Limitations

One challenge identified in diffusion models is the limited ability to produce details in complex scenes [9]. So, generating complex medical images would need to be complemented with noise adaptation or super-resolution techniques [5]. Like many other AI models, diffusion model training is prone to bias in the dataset, for example, unbalanced representation of medical conditions in the input X-Ray or CT images or inherent noise in the data. Thus, the synthetic data from such a diffusion model will also carry the bias. Eventually, if the generated data are made public and used for onward model training, the bias may cascade and be further amplified [7]. The model has been used largely as a black box; hence, not much explainability can be offered on how certain images were generated. Unlike the work reported by Pinaya et al. [12], our generated images are not conditioned on additional variables such as gender, age, etc. Diffusion models are very slow to train, as they require the number of training steps to be on the order of several hundred thousand. Our training took around one day for 100k training steps. This study is presented as a means to stimulate interest in the potential of diffusion models for the synthesis of medical images.

5 Conclusion and Future Work

In this work, we have demonstrated the potential of neural diffusion models for the synthesis of lung X-Ray and CT images. Though the radiologists spotted many images as fake, a few images were still labeled as real by them. The labeling from the radiologists reflects that some of the generated X-Ray images carried a great resemblance to real images. However, the identification of fake images was straightforward for the CT images. Through qualitative analysis of the generated images, we showed that neural diffusion models have great potential to learn complex representations of medical images. Although the performance of diffusion models is superior to GAN-based methods for synthesizing natural images, research efforts on diffusion models for medical image synthesis have yet to mature.

References

1. Yi, X., Walia, E., Babyn, P.: Generative adversarial network in medical imaging: a review. Med. Image Anal. 58, 101552 (2019)

2. Jiang, Y., Chen, H., Loew, M., Ko, H.: Covid-19 CT image synthesis with a conditional generative adversarial network. IEEE J. Biomed. Health Inform. 25(2), 441–452 (2020)

3. Chen, M., Shi, X., Zhang, Y., Wu, D., Guizani, M.: Deep feature learning for medical image analysis with convolutional autoencoder neural network. IEEE Trans. Big Data 7(4), 750–758 (2017)

4. Ali, H., et al.: The role of generative adversarial networks in brain MRI: a scoping review. Insights Imaging 13(1), 1–15 (2022)

5. Ahmad, W., Ali, H., Shah, Z., Azmat, S.: A new generative adversarial network for medical images super resolution. Sci. Rep. 12(1), 1–20 (2022)

6. Munawar, F., Azmat, S., Iqbal, T., Grönlund, C., Ali, H.: Segmentation of lungs in chest X-ray image using generative adversarial networks. IEEE Access 8, 153535–153545 (2020)

7. Ho, J., Jain, A., Abbeel, P.: Denoising diffusion probabilistic models. In: Advances in Neural Information Processing Systems, vol. 33, pp. 6840–6851 (2020)

8. Dhariwal, P., Nichol, A.: Diffusion models beat GANs on image synthesis. In: Advances in Neural Information Processing Systems, vol. 34, pp. 8780–8794 (2021)

9. Ramesh, A., Dhariwal, P., Nichol, A., Chu, C., Chen, M.: Hierarchical text-conditional image generation with CLIP latents. arXiv preprint arXiv:2204.06125 (2022)

10. Song, Y., Sohl-Dickstein, J., Kingma, D.P., Kumar, A., Ermon, S., Poole, B.: Score-based generative modeling through stochastic differential equations. arXiv preprint arXiv:2011.13456 (2020)

11. Han, X., Zheng, H., Zhou, M.: CARD: classification and regression diffusion models. arXiv preprint arXiv:2206.07275 (2022)

12. Pinaya, W.H., et al.: Brain imaging generation with latent diffusion models. arXiv preprint arXiv:2209.07162 (2022)

13. O’Connor, R.: How to run stable diffusion locally to generate images. https://www.assemblyai.com/. Accessed 01 Oct 2022

14. Chowdhury, M.E., et al.: Can AI help in screening viral and Covid-19 pneumonia? IEEE Access 8, 132665–132676 (2020)

Acknowledgments

The authors are grateful to Surendra Maharjan from Indiana University Purdue University Indianapolis, USA, for useful comments on this work. The authors are thankful to Dr. Jens Schneider for facilitating the GPU access.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this paper

Cite this paper

Ali, H., Murad, S., Shah, Z. (2023). Spot the Fake Lungs: Generating Synthetic Medical Images Using Neural Diffusion Models. In: Longo, L., O’Reilly, R. (eds) Artificial Intelligence and Cognitive Science. AICS 2022. Communications in Computer and Information Science, vol 1662. Springer, Cham. https://doi.org/10.1007/978-3-031-26438-2_3

DOI: https://doi.org/10.1007/978-3-031-26438-2_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-26437-5

Online ISBN: 978-3-031-26438-2

eBook Packages: Computer Science, Computer Science (R0)