Abstract

Data that may be used for personalised recommendation purposes can intuitively be modelled as a graph. Users can be linked to item data; item data may be linked to item data. With such a model, the task of recommending new items to users or making new connections between items can be undertaken by algorithms designed to establish the relatedness between vertices in a graph. One such class of algorithm is based on the random walk, whereby a sequence of connected vertices is visited based on an underlying probability distribution and a determination of vertex relatedness is established. A diffusion kernel encodes such a process. This paper demonstrates several diffusion kernel approaches on a graph composed of user-item and item-item relationships. The approach presented in this paper, RecWalk*, consists of a user-item bipartite graph combined with an item-item graph on which several diffusion kernels are applied and evaluated in terms of top-n recommendation. We conduct experiments with the RecWalk* model on several datasets using combinations of different item-item graph models and personalised diffusion kernels. We compare accuracy with some non-item recommender methods. We show that diffusion kernel approaches match or outperform state-of-the-art recommender approaches.


1 Introduction

The recommendation task has conventionally been cast as a matrix completion task: the data consist of a sparse user-item matrix, and the task is to predict the missing elements. Multiple varieties of collaborative filtering (CF) have been proposed for this, including nearest-neighbour approaches (e.g., based on the Pearson Correlation Coefficient) and Matrix Factorisation [10] methods such as PureSVD and SVD++. More recently, He et al. [7] proposed a Neural Collaborative Filtering framework, and Ning et al. [14] proposed the SLIM method, which builds a model using an elastic net regression approach. Item-based approaches convert the user-item matrix into an item-item matrix, typically using a measure of similarity, which can be mined for recommendations of the type ‘if you liked that, you may also like this’. Both user-item and item-item matrices are sparse, and this has a negative impact on recommendation coverage and quality.

However, user-item and item-item data can intuitively be modelled as a graph. With such a model, the task of recommending new items to users, or making new connections between items, can be undertaken by algorithms designed to establish the relatedness between vertices in a graph. The sparsity issues are lessened by the transitive relations between vertices. One such class of algorithm is based on the random walk diffusion process, whereby a sequence of connected vertices is visited by random agents based on the transition probabilities of the links. The diffusion is a time-controlled stochastic process whose traversal is governed by specific equations. As they move from vertex to vertex, the agents record a trace of their walk through the network that reflects the probability of reaching a specific vertex from another vertex. We can identify patterns in the trajectories of multiple agents and use these to estimate the proximity or relatedness of the vertices in the graph. A kernel is a generalisation of such relatedness between vertices in the graph. The visitation decisions of the agents are underpinned by the rules of the diffusion. For instance, some diffusions allow an agent to stop at random, or to restart the diffusion from another randomly selected vertex. Applying a kernel to network data provides a measure of similarity or relatedness between vertices that are not directly connected in the graph. Therefore, we can use kernels to recommend related vertices based on a set of item vertices from a user profile.

The contributions of this paper are as follows. First, we present a graph-based recommender method, RecWalk*. Our method adopts the RecWalk approach of Nikolakopoulos and Karypis [13], which combines a user-item interaction component with an item-item interaction component representing the similarities between items. Our approach uses a different weight-initialising scheme built from the primary database. Second, we apply and evaluate several diffusion-based random walk algorithms on the network to discover user-item or item-item relationships that are not found by traditional item-based CF approaches. For instance, standard item-based approaches only consider directly connected (one-hop) items, whereas our proposed method exploits two-hop or multi-hop neighbours within a network to improve recommendation accuracy. We apply three different diffusion kernels on eight public datasets and compare the performance with state-of-the-art recommender algorithms.

2 Notations and Definitions

In this paper, the following notation is used. Vectors, which are assumed to be column vectors, are represented by bold lower-case letters (e.g., p). Matrices are denoted by bold upper-case letters (e.g., M). The \(i^{th}\) column and the \(j^{th}\) row of the matrix M are denoted \(\boldsymbol{p_i}\) and \(\boldsymbol{{q_j}^T}\), respectively. A boldface 1 represents a column vector in which all values are ones. The diagonal values of a matrix M are denoted Diag(M), and \(\boldsymbol{\Vert *\Vert }\) denotes the Euclidean norm. Sets are represented using calligraphic upper-case letters (e.g., \(\mathcal{U}\), \(\mathcal{I}\)). Finally, we use ‘\(\boldsymbol{=}\)’ to express a definition statement.

A set of users (\(\mathcal{U}\)) and a set of items (\(\mathcal{I}\)) within a dataset are represented by {\(U_1, U_2,..., U_n\)} and {\(I_1, I_2,..., I_m\)} respectively. Let \(\boldsymbol{R^{U \times I}}\) be the user-item matrix. Each entry of the matrix is the value of the user-item rating, provided that user (u) has rated the item (i); otherwise the entry is zero. Each user (u) is modelled by a row-vector \(\boldsymbol{{r_u}^T \in R^I}\), which is obtained from the user-item interaction matrix R; similarly, each item (i) is expressed as a column vector \(\boldsymbol{{r_i} \in R^U}\). Finally, the item model is defined as a matrix \(\boldsymbol{W \in R^{I \times I}}\), which gives a measure of similarity or relatedness between items i and j.

3 Related Works

3.1 Item Models

Item models are one of the most popular and essential components used in collaborative recommender methods (e.g., FISM [8]). Such methods aim to build an item-item interaction matrix (W) to capture the relations between items. An item model may also be represented as a graph in which pairs of items are linked by their relatedness (e.g., similarity scores) in the item-item interaction matrix.
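As an illustration of the item-item interaction matrix described above, the following is a minimal sketch of a cosine-similarity item model built from a small user-item matrix. The function name `cosine_item_model` and the choice to zero the diagonal are assumptions made for this example; a practical NNCosine model would typically also keep only the nearest neighbours of each item, which is omitted here.

```python
import numpy as np

def cosine_item_model(R):
    """Item-item cosine similarity W from a user-item matrix R (users x items)."""
    R = np.asarray(R, dtype=float)
    norms = np.linalg.norm(R, axis=0)   # Euclidean norm of each item column
    norms[norms == 0] = 1.0             # items with no ratings keep zero similarity
    Rn = R / norms                      # unit-length item columns
    W = Rn.T @ Rn                       # pairwise cosine similarities
    np.fill_diagonal(W, 0.0)            # assumption: no self-similarity edges
    return W

# Toy data: 4 users, 3 items (1 = rated, 0 = not rated).
R = np.array([[1, 1, 0],
              [1, 0, 1],
              [0, 1, 1],
              [1, 1, 0]], dtype=float)
W = cosine_item_model(R)
```

Each non-zero entry of `W` can be read as a weighted edge of the item-item graph.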

3.2 Random Walk

Graph-based approaches enable items that are not directly linked in the item-item graph to be considered as relevant recommendation candidates. Nikolakopoulos and Karypis [13] propose a simple random walk (SRW) approach on an item-item graph. A random walk is a graph-based algorithm, defined as a stochastic process that begins a graph traversal at a vertex and, at each time step, moves randomly to another connected vertex with a probability proportional to the edge value in a transition probability matrix (P) [6]. Their approach applies a finite-step random walk on an item-item network starting from the item nodes rated by a user. The terminal state of the SRW scores item nodes (excluding those the user has rated in the past) by their cumulative landing probabilities. Items with the highest landing probabilities are recommended to the user.

Formula (1), \(\boldsymbol{{e_u}^T = {\omega }_u^T P^K}\), gives the linear-algebraic form of the K-step SRW algorithm for a particular user (u). We use a state variable (\(\boldsymbol{{e_u}^T}\)) to record the landing probability of each item in the database after each step, and a row-vector (\(\boldsymbol{{r_u}^T}\)), obtained from the user-item matrix (R), to represent the user’s past behaviour in the database. The start state vector (\(\boldsymbol{{\omega }_u^T}\)) is initialised as the normalised row-vector (\(\boldsymbol{{r_u}^T}\)). After each move, the new state is the product of the current state vector and the transition probability matrix (P). The final state is therefore the product of \(\boldsymbol{{\omega }_u^T}\) and the K-th power of P.
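The K-step update described above can be sketched in a few lines of NumPy. This is an illustrative sketch rather than the authors' implementation: the function name `srw_scores` is hypothetical, and normalising the profile so that it sums to one is an assumption, since the text does not state which norm is used.

```python
import numpy as np

def srw_scores(r_u, P, K):
    """K-step simple random walk scores for one user on an item-item graph."""
    r_u = np.asarray(r_u, dtype=float)
    state = r_u / r_u.sum()         # start state: normalised profile (assumed L1)
    for _ in range(K):
        state = state @ P           # one move: current state times P
    scores = state.copy()
    scores[r_u > 0] = -np.inf       # exclude items the user has already rated
    return scores

# Toy item-item transition matrix (each row sums to 1).
P = np.array([[0.0, 0.5, 0.5],
              [0.5, 0.0, 0.5],
              [0.5, 0.5, 0.0]])
scores = srw_scores([1, 0, 0], P, K=2)
```

Iterating the vector-matrix product avoids forming the matrix power of P explicitly, which matters when P is large.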

3.3 Diffusion Kernels

Diffusion is a concept that refers to the net movement of a substance from an area of higher concentration to an area of lower concentration [9]. In computer science, a diffusion kernel is a matrix used to measure the relatedness or proximity between a pair of nodes within a graph. The relatedness score is based on the probability of information diffusing from one node to another, which is determined by a function known as a kernel function. There are different types of diffusion kernels [2], such as Markov-based kernels (Personalised PageRank, PPR), exponential-based kernels (Communicability, DR), and Laplacian-based kernels (Regularised Laplacian, LAP). Table 1 describes these three diffusion kernels (PPR, DR, and LAP) in detail. The PPR and LAP diffusion kernels are given in both infinite series and linear forms, as shown in Eq. (1.1) and Eq. (1.2) for PPR and in Eq. (1.5) and Eq. (1.6) for LAP. For the PPR kernel, S is a stochastic matrix of an adjacency matrix (W) with the PageRank scores between pairs of nodes, as shown in Eq. (1.3); k indicates the number of steps in a random walk; and p is a damping factor between 0 and 1 that controls the strength and speed of energy propagation. DR is an exponential kernel that cannot be written in a linear form, so only its series form is given, in Eq. (1.4). The Laplacian matrix (L) of an adjacency matrix is the difference between the degree matrix (D) and the adjacency matrix (W), as in Eq. (1.7).
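To make the relationship between the series form and the linear form of a kernel concrete, the sketch below computes a PPR kernel both ways and checks that they agree. It assumes the commonly used form in which the geometric series of powers of S is weighted by p and scaled by (1 - p); the exact expressions in Table 1 are not reproduced in this text and may differ in normalisation. The function names are hypothetical.

```python
import numpy as np

def ppr_series(S, p, K):
    """Truncated series: (1 - p) * sum_{k=0..K} p^k S^k."""
    n = S.shape[0]
    term = np.eye(n)                 # k = 0 term
    total = np.eye(n)
    for _ in range(K):
        term = p * (term @ S)        # next term p^k S^k
        total += term
    return (1 - p) * total

def ppr_linear(S, p):
    """Closed (linear) form: (1 - p) * (I - p S)^{-1}."""
    n = S.shape[0]
    return (1 - p) * np.linalg.inv(np.eye(n) - p * S)

# Row-normalise a toy adjacency matrix W to obtain a stochastic matrix S.
W = np.array([[0, 1, 1],
              [1, 0, 0],
              [1, 0, 0]], dtype=float)
S = W / W.sum(axis=1, keepdims=True)

K_series = ppr_series(S, p=0.85, K=200)
K_linear = ppr_linear(S, p=0.85)
```

With a stochastic S and 0 < p < 1 the series converges, so a sufficiently long truncation matches the linear form, and each row of the kernel sums to one.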

4 Proposed Method

4.1 RecWalk*

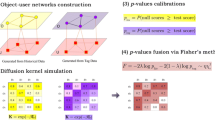

This paper extends the RecWalk approach, a combined-random-walk framework proposed by Nikolakopoulos and Karypis [13], by adding and evaluating two different diffusion kernels on several real datasets. RecWalk* builds a network consisting of two sub-components: a user-item bipartite graph whose weights are initialised from a user-item matrix, denoted as (2), and an item-item interaction graph. Figure 1 illustrates the RecWalk* graph construction from a user-item co-rated table to a user-item combination graph.

The adjacency matrix of the user-item bipartite graph is expressed as \(\boldsymbol{{A_G} \in R^{(U+I) \times (U+I)}}\) and is given by (3)

Fig. 1. RecWalk* model construction. (a) shows an example of a user-item co-rated matrix, and (b) is the corresponding graph representation of the matrix. (c) illustrates an example of the item model built from the user-item matrix in (a) using Cosine Similarity. The combination graph comprises a user-item bipartite component and an item-item component, as shown in (d).

To investigate whether the network properties will influence the recommendation result, RecWalk* adopts a different weight-initializing strategy in the transition probability matrix of the user-item subgraph. RecWalk* defines a single bidirectional transition probability between a user node and an item node. This is the reciprocal value of the number of items rated by the user. This is a simplification of the original RecWalk weighting, which initialises a transition probability from a user node to an item node as well as from an item node to a user node.

There are two preconditions to build up our proposed framework. 1) A random walk always starts at a user node and ends with an item node; 2) The item-item component must be a connected graph.

Algorithm 1 shows the construction of the transition probability matrix in the RecWalk* model. We adopt the same random walk strategy used in RecWalk. Assuming that the walker currently occupies a node c, the next move is determined by a biased coin-toss that yields heads with probability (a) and tails with probability (1 – a). If the current node is a user node (\(c \in \mathcal{U}\)): i) if the coin-toss yields heads, the walker jumps at random to one of the items rated by the user; ii) if the coin-toss yields tails, the walker stays put. If the current node is an item node (\(c \in \mathcal{I}\)): i) if the coin-toss yields heads, the walker jumps at random to one of the users that have rated the item; ii) if the coin-toss yields tails, the walker moves to a connected item node in the inter-item component. The algorithm accepts three inputs: a user-item co-rated matrix R, an item model W, and a parameter ‘a’ that denotes the biased probability. Lines 1–2 declare two adjacency matrices for the item-item component (\(\boldsymbol{M_I}\)) and the user-item component (\(\boldsymbol{A_G}\)), respectively. Lines 3–4 initialise the user-item transition probability matrix H symmetrically, and line 5 constructs the final transition probability matrix P as a linear combination of these two components weighted by the biased probability a.
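The construction described above can be sketched as follows. Algorithm 1 itself is not reproduced in this text, so this is an illustrative sketch under stated assumptions rather than the authors' code: the function name `build_transition_matrix` is hypothetical, the item-item block is obtained by simple row normalisation of W, and the user-to-item weight (the reciprocal of the number of items rated by the user) is reused for the item-to-user direction to give a symmetric H.

```python
import numpy as np

def build_transition_matrix(R, W, a):
    """Combine a user-item block H and an item-item block M into P = a*H + (1-a)*M."""
    R = np.asarray(R, dtype=float)
    W = np.asarray(W, dtype=float)
    n_users, n_items = R.shape
    n = n_users + n_items

    # User-item weights: 1 / (number of items rated by the user).
    B = (R > 0).astype(float)
    deg = B.sum(axis=1, keepdims=True)
    H_ui = np.divide(B, deg, out=np.zeros_like(B), where=deg > 0)

    H = np.zeros((n, n))
    H[:n_users, n_users:] = H_ui        # user -> item
    H[n_users:, :n_users] = H_ui.T      # item -> user, same weight (symmetric H)

    # Item-item block: row-normalised item model (assumption for this sketch).
    row = W.sum(axis=1, keepdims=True)
    M_I = np.divide(W, row, out=np.zeros_like(W), where=row > 0)

    M = np.zeros((n, n))
    M[:n_users, :n_users] = np.eye(n_users)   # tails at a user node: stay put
    M[n_users:, n_users:] = M_I               # tails at an item node: move to an item

    return a * H + (1 - a) * M

R = np.array([[5, 0, 3],
              [0, 4, 0]], dtype=float)
W = np.array([[0, 1, 1],
              [1, 0, 0],
              [1, 0, 0]], dtype=float)
P = build_transition_matrix(R, W, a=0.5)
```

In this sketch the user rows of P sum to one, but the item rows generally do not, because the item-to-user entries reuse the user-side weights; a renormalisation step would be needed wherever a strictly stochastic matrix is required.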

4.2 Communicability Kernel (DR)

The implementation of the communicability kernel (DR) with the RecWalk* model is shown in Algorithm 2. DR is an exponential-based kernel, so only the truncated form is provided. The truncated form, described by Torres [16], means that the diffusion process finishes (truncates) the random walk after the required number of moves. Such an approach is computed by iterative matrix multiplication and is applicable to all diffusion-based random walks. The algorithm accepts three inputs: a user ‘u’ from the existing users in the database, a damping factor \(\beta \) used to control the diffusion intensity, and a constant ‘k’ indicating the number of moves of a random walk. Initially, a state variable \(\boldsymbol{{\lambda }^T}\) is used to record the diffusion scores of all items after each move, and the primary state \(\boldsymbol{{{\lambda }_{(0)}}^T}\) is a row vector \(\boldsymbol{{e_{u}}^T}\) of size \(|\mathcal{U}| + |\mathcal{I}|\), in which the element at the position corresponding to user u is one and all other elements are zero. P is the transition probability matrix constructed by the RecWalk* model. Lines 2–5 give the update procedure of DR in each move, following Eq. (1.4) in Table 1, and the state variable is normalised after each iteration to ensure that all entries sum to 1. \(\boldsymbol{{{\omega }_u}^T}\) is the output vector that contains the final diffusion score of each item, as shown in line 6.
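A truncated exponential diffusion of this kind can be sketched as below. Algorithm 2 and Eq. (1.4) are not reproduced in this text, so the sketch assumes the standard exponential series, in which the k-th term is weighted by \(\beta^k / k!\). The function name `dr_scores` is hypothetical, and for simplicity the accumulated scores are normalised once at the end rather than after every iteration.

```python
import numpy as np

def dr_scores(P, u, beta, K):
    """Truncated exponential diffusion from node u: sum_{k=0..K} beta^k/k! * e_u^T P^k."""
    n = P.shape[0]
    lam = np.zeros(n)
    lam[u] = 1.0                          # initial state e_u^T
    omega = lam.copy()                    # k = 0 term of the series
    for k in range(1, K + 1):
        lam = (beta / k) * (lam @ P)      # builds beta^k / k! * e_u^T P^k incrementally
        omega += lam
    return omega / omega.sum()            # normalise so the scores sum to 1

# Toy transition matrix in which every node moves uniformly at random.
P = np.full((3, 3), 1.0 / 3.0)
omega = dr_scores(P, u=0, beta=1.0, K=30)
```

In the RecWalk* setting the vector covers both user and item nodes, so the item scores would be read from the item positions of the result.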

4.3 Regularised Laplacian Kernel (LAP)

Algorithm 3 gives the details of RecWalk* with the regularised Laplacian diffusion kernel. The initialisation procedure is the same as for the DR kernel. However, the algorithm lists two alternative forms: truncating the infinite series (‘Truncated’) or using the linear system (‘Linear’). A linear solver is a powerful and direct way to reach the convergent state in the shortest time, but it is not effective with a large sparse matrix. A truncated approach works for any type of kernel, but the large matrix computation in each iteration can be memory-consuming. In short, both the ‘Truncated’ and ‘Linear’ forms have their own strengths and weaknesses. Lines 1–2 obtain the Laplacian matrix (L) of the transition probability matrix (P). Lines 3–9 are the truncated implementation based on Eq. (1.5) in Table 1, and lines 10–12 are the linear solver implementation based on Eq. (1.6) in Table 1.
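The two alternatives can be compared on a small example. The sketch below assumes the regularised Laplacian kernel in its usual form \((I + \beta L)^{-1}\), with the truncated variant taken as the Neumann series \(\sum_k (-\beta L)^k\); Eq. (1.5) and Eq. (1.6) are not reproduced in this text, so the paper's exact expressions may differ. The series only converges when \(\beta\) times the spectral radius of L is below one, and the function names are hypothetical.

```python
import numpy as np

def laplacian(P):
    """L = D - P, where D holds the row sums of P on its diagonal."""
    return np.diag(P.sum(axis=1)) - P

def lap_truncated(P, u, beta, K):
    """Truncated series: sum_{k=0..K} e_u^T (-beta L)^k."""
    L = laplacian(P)
    term = np.zeros(P.shape[0])
    term[u] = 1.0                        # e_u^T, the k = 0 term
    omega = term.copy()
    for _ in range(K):
        term = -beta * (term @ L)        # next term of the series
        omega += term
    return omega

def lap_linear(P, u, beta):
    """Linear form: solve omega^T (I + beta L) = e_u^T for omega."""
    L = laplacian(P)
    n = P.shape[0]
    e_u = np.zeros(n)
    e_u[u] = 1.0
    return np.linalg.solve((np.eye(n) + beta * L).T, e_u)

P = np.array([[0.0, 0.5, 0.5],
              [0.5, 0.0, 0.5],
              [0.5, 0.5, 0.0]])
omega_series = lap_truncated(P, u=0, beta=0.2, K=200)
omega_solve = lap_linear(P, u=0, beta=0.2)
```

On this toy matrix the two results agree to numerical precision; for large sparse graphs a sparse solver or an iterative method would replace the dense calls used here.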

When a diffusion process finishes or reaches a convergent state, each item node receives a final diffusion score. All items are sorted by their diffusion scores in descending order, and the top-ranked items that are not already in the user's list of rated items are returned as recommendation candidates.
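The final ranking step can be sketched as follows. The function name `top_n_items` and its arguments are hypothetical; the sketch simply masks the items the user has already rated and returns the indices of the n highest-scoring remaining items.

```python
import numpy as np

def top_n_items(item_scores, rated_mask, n):
    """Indices of the n best-scoring items that the user has not rated."""
    rated_mask = np.asarray(rated_mask, dtype=bool)
    scores = np.where(rated_mask, -np.inf, np.asarray(item_scores, dtype=float))
    n = min(n, int((~rated_mask).sum()))          # never return rated items
    order = np.argsort(-scores, kind="stable")    # descending score order
    return order[:n]

recommended = top_n_items([0.1, 0.4, 0.3, 0.2],
                          [False, True, False, False],
                          n=2)
```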

5 Experiments

5.1 Datasets Preparation

Table 2 provides details of eight well-known rating datasets from different domains that have explicit and implicit feedback. The MovieLens 1M [11] and Yahoo! Movie [17] datasets represent film ratings. The Amazon product datasets provided by McAuley [1] (Baby, Cell Phones and Accessories, Apps for Android, and Health and Care) represent consumer ratings. Book-Crossing (explicit rating version), collected by Ziegler [3], represents user ratings of books. Steam Video Game represents users’ purchasing records from a popular PC gaming hub [15]. We applied some filtering to each dataset (except MovieLens), as the sparsity of some user and item records caused problems when sampling data for training and testing purposes. Our filtering approach ensured that each user had at least three rated items and that each item was rated by at least one user. Table 2 gives the statistics for the unfiltered vs filtered data.
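The filtering rule described above can be sketched on a dense toy matrix. The function name `filter_ratings` and its parameter names are hypothetical, and real datasets of this size would normally be held in a sparse format. With an item threshold of one, a single pass is sufficient, because dropping items that nobody rated cannot change any user's count; higher item thresholds would require repeating the two steps until nothing changes.

```python
import numpy as np

def filter_ratings(R, min_user_items=3, min_item_users=1):
    """Keep users with enough rated items, then items with enough raters."""
    R = np.asarray(R)
    keep_users = (R > 0).sum(axis=1) >= min_user_items
    R = R[keep_users]
    keep_items = (R > 0).sum(axis=0) >= min_item_users
    return R[:, keep_items]

R = np.array([[1, 1, 1, 0],
              [0, 0, 0, 1],    # only one rated item: this user is dropped
              [1, 1, 1, 0]])
R_filtered = filter_ratings(R)
```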

5.2 State-of-Art Algorithms

We apply the RecWalk* model using three diffusion kernels (PPR, DR, and LAP) as functional kernels on two standard item-based models (SLIM and NNCosine). State-of-the-art methods, such as Matrix Factorisation (PureSVD, EigenRec) and random walk (\(\boldsymbol{P^{n}}\)), were evaluated as baselines.

Baseline

- PureSVD: one of the classical Matrix Factorisation recommender methods, aiming to reduce the dimensionality of the matrix [10]
- EigenRec: an extension of PureSVD that adds a scaling component for each item [12]
- \(\boldsymbol{P^{n}}\): an n-step random walk model on the user-item bipartite network, starting at a user node and ending with an item node, where n must be an odd number [4]

Item Model

- NNCosine: a neighbour-based similarity recommender method (ItemKNN) [5]
- SLIM: a recommender method which solves an item model by using ElasticNet Regression [14]

5.3 Evaluation Metrics

To evaluate the performance of our model, Leave-one-out Cross Validation (LOOCV) [13] was adopted in our experiments. For each user, an item rated by the user in the past was sampled and put into a test set, and the remaining data were put into a training set. We repeated this process 3 times and recorded the average. The standard sampling strategy, random sampling, in which one of the items rated by a user is selected at random, was used to partition the dataset. For a particular user, we consider their corresponding test item together with 1000 randomly sampled unrated items. These 1001 items are ranked according to the prediction scores generated by each recommender method. In our experimental setting, we only consider implicit feedback, where the real value of each rating was set to 1, and use Hit Rate (HR@10) [14] to measure the accuracy of the predictions.
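The evaluation protocol above can be sketched as follows. The function names are hypothetical and the sketch makes two assumptions that the text does not specify: negatives are drawn without replacement, and ties are resolved in favour of the test item.

```python
import numpy as np

def sample_negatives(rated_mask, test_item, n_neg, rng):
    """Draw up to n_neg unrated items (never the held-out test item)."""
    unrated = np.flatnonzero(~np.asarray(rated_mask, dtype=bool))
    unrated = unrated[unrated != test_item]
    return rng.choice(unrated, size=min(n_neg, unrated.size), replace=False)

def hit_at_k(scores, test_item, negatives, k=10):
    """1 if the test item ranks in the top k among itself and the negatives."""
    scores = np.asarray(scores, dtype=float)
    higher = int(np.sum(scores[negatives] > scores[test_item]))
    return int(higher < k)

def hit_rate(hits):
    """HR@k: the fraction of users whose test item was a hit."""
    return float(np.mean(hits))

# One user on a toy catalogue of 50 items.
rng = np.random.default_rng(0)
rated_mask = np.zeros(50, dtype=bool)
rated_mask[[1, 2, 3]] = True          # item 3 is the held-out test item
negatives = sample_negatives(rated_mask, test_item=3, n_neg=10, rng=rng)
scores = rng.random(50)
hit = hit_at_k(scores, test_item=3, negatives=negatives, k=10)
```

Averaging the per-user hits with `hit_rate`, and then averaging over the repeated splits, gives the reported HR@10 under these assumptions.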

5.4 Running Environment

All our experiments were implemented using Python 3.8.8 and NumPy 1.21.3, running on the Windows 10 operating system.

6 Results

For the baseline algorithms, experimental results showed that EigenRec performed consistently better than PureSVD, and that \(\boldsymbol{P^{n} (n=3)}\) achieved the best performance of all the n values tested (see Note 1). Therefore, EigenRec and \(\boldsymbol{P^{3}}\) were selected as the representative baseline algorithms for each dataset. For the RecWalk* model, we ran experiments on two item models (NNCosine and SLIM) with three diffusion kernels (+PPR/+DR/+LAP) and report the results.

Table 3 presents the HR@10 results of the baseline algorithms and of the item models with their diffusion-based RecWalk* variants. The EigenRec and \(\boldsymbol{P^{3}}\) columns give the scores of the EigenRec and \(\boldsymbol{P^{3}}\) recommendation algorithms, respectively, as the baseline results. NNCosine and SLIM are two baseline item models, and the results of each are presented in the Base column. For each item-based approach, the results of the three diffusion-based approaches are presented in the +PPR, +DR, and +LAP columns, respectively, and bold font marks the kernel with the best performance.

Table 3 shows that the diffusion-based approaches consistently outperform the baseline item models, NNCosine and SLIM, in terms of accuracy, and this difference is most noticeable for NNCosine. Although the improvement for SLIM is much smaller, a paired t-test conducted across all datasets found the difference to be significant at the 0.05 significance level (the p values for LAP are shown in the table). Therefore, SLIM+RecWalk*+LAP consistently performed better than SLIM. Furthermore, the diffusion-based approaches were more accurate than EigenRec and \(\boldsymbol{P^{3}}\) except on the MovieLens dataset.

In addition, we compared the results (NDCG@10 [13]) of RecWalk* and RecWalk with NNCosine to examine whether the simplified weight-initialising scheme of RecWalk* had any effect. Table 4 shows that RecWalk* and RecWalk performed similarly on PPR and DR, but that RecWalk* was better than RecWalk on LAP.

7 Conclusion and Future Work

Diffusion approaches on networks have recently been used to determine relatedness between vertices [16] and to produce recommendations [13]. The work in this paper evaluates three different diffusion kernels on eight recommender datasets, represented as graphs. For each user in the dataset, the diffusion process produces a ranking of related item nodes that are treated as recommendation candidates. Our experiments have determined that the diffusion kernel approaches are at least as good as state-of-the-art techniques for the top-n recommendation task, with the LAP diffusion kernel outperforming the other diffusion kernels.

Currently, our work focuses only on standard datasets in which the roles of users and items are well defined with one type of action (e.g., purchasing or rating history). For each item in the database, we aim to add its semantic attributes as additional features so that the user can obtain cross-domain recommendation results. To this end, we will link each item to its corresponding entity node in a knowledge graph (e.g., DBpedia), extract a local subgraph, and combine it with a CF-item model (e.g., NNCosine) to form a semantic CF-item model. We will then conduct experiments on the new semantic CF-item model with the RecWalk* and RecWalk models to examine their performance.

Notes

1. The full results are available in the appendix: https://github.com/easternbob2019/.

References

1. Amazon product data (2018). https://jmcauley.ucsd.edu/data/amazon/

2. Avrachenkov, K., Chebotarev, P., Rubanov, D.: Kernels on graphs as proximity measures. In: Bonato, A., Chung Graham, F., Prałat, P. (eds.) WAW 2017. LNCS, vol. 10519, pp. 27–41. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-67810-8_3

3. Book crossing (2022). https://grouplens.org/datasets/book-crossing/

4. Cooper, C., Lee, S.H., Radzik, T., Siantos, Y.: Random walks in recommender systems: exact computation and simulations. In: Proceedings of the 23rd International Conference on World Wide Web, pp. 811–816 (2014)

5. Cremonesi, P., Koren, Y., Turrin, R.: Performance of recommender algorithms on top-n recommendation tasks. In: Proceedings of the Fourth ACM Conference on Recommender Systems, pp. 39–46 (2010)

6. Eksombatchai, C., et al.: Pixie: a system for recommending 3+ billion items to 200+ million users in real-time. In: Proceedings of the 2018 World Wide Web Conference, pp. 1775–1784 (2018)

7. He, X., Liao, L., Zhang, H., Nie, L., Hu, X., Chua, T.S.: Neural collaborative filtering. In: Proceedings of the 26th International Conference on World Wide Web, pp. 173–182 (2017)

8. Kabbur, S., Ning, X., Karypis, G.: FISM: factored item similarity models for top-n recommender systems. In: Proceedings of the 19th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (2013)

9. Kondor, R.I., Lafferty, J.: Diffusion kernels on graphs and other discrete structures. In: Proceedings of the 19th International Conference on Machine Learning, vol. 2002, pp. 315–322 (2002)

10. Koren, Y.: Factorization meets the neighborhood: a multifaceted collaborative filtering model. In: Proceedings of the 14th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 426–434 (2008)

11. MovieLens 1M dataset (2022). https://grouplens.org/datasets/movielens

12. Nikolakopoulos, A.N., Kalantzis, V., Garofalakis, J.D.: EigenRec: an efficient and scalable latent factor family for top-n recommendation, p. 47. arXiv preprint arXiv:1511.06033 (2015)

13. Nikolakopoulos, A.N., Karypis, G.: RecWalk: nearly uncoupled random walks for top-n recommendation. In: Proceedings of the Twelfth ACM International Conference on Web Search and Data Mining, pp. 150–158 (2019)

14. Ning, X., Karypis, G.: SLIM: sparse linear methods for top-n recommender systems. In: 2011 IEEE 11th International Conference on Data Mining, pp. 497–506. IEEE (2011)

15. Steam video games (2022). https://www.kaggle.com/tamber/steam-video-games/data

16. Torres-Tramón, P.: Diffusion-based models for semantic relatedness. Ph.D. thesis, NUI Galway (2020)

17. Yahoo! Movie (2022). https://webscope.sandbox.yahoo.com/

Acknowledgement

This publication describes research supported in part by a grant from Science Foundation Ireland under Grant number SFI/12/RC/2289_P2 and from the Hardiman scholarship provided by the College of Science and Engineering at the University of Galway. For the purpose of Open Access, the author has applied a CC BY public copyright licence to any Author Accepted Manuscript version arising from this submission.


Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this paper

Cite this paper

Zhou, Y., Hayes, C. (2023). Graph-Based Diffusion Method for Top-N Recommendation. In: Longo, L., O’Reilly, R. (eds) Artificial Intelligence and Cognitive Science. AICS 2022. Communications in Computer and Information Science, vol 1662. Springer, Cham. https://doi.org/10.1007/978-3-031-26438-2_23


DOI: https://doi.org/10.1007/978-3-031-26438-2_23


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-26437-5

Online ISBN: 978-3-031-26438-2

eBook Packages: Computer Science, Computer Science (R0)