Abstract

Imbalanced image datasets are common in biomedical image analysis. Biomedical images contain diverse features that are significant for predicting target diseases. Generative Adversarial Networks (GANs) are used to address this data limitation by generating synthetic images. Training challenges such as mode collapse, non-convergence, and instability degrade a GAN's ability to synthesize diverse, high-quality images. In this work, MSG-SAGAN, an attention-guided multi-scale gradient GAN architecture, is proposed to model long-range dependencies between biomedical image features and to improve training performance through a flow of multi-scale gradients at multiple resolutions in the layers of the generator and discriminator models. The intent is to reduce the impact of mode collapse and stabilize GAN training by combining an attention mechanism with multi-scale gradient learning for diversified X-ray image synthesis. The Multi-scale Structural Similarity Index Measure (MS-SSIM) and Fréchet Inception Distance (FID) are used to identify the occurrence of mode collapse and to evaluate the diversity of the generated synthetic images. The proposed architecture is compared with the multi-scale gradient GAN (MSG-GAN) to assess the diversity of the generated images. Results indicate that MSG-SAGAN outperforms MSG-GAN in synthesizing diversified images, as evidenced by the MS-SSIM and FID scores.

This work is supported by the Munster Technological University’s Risam Scholarship Award.

1 Introduction

Generative adversarial networks (GANs) are generative models used for image synthesis in the computer vision domain [1]. A GAN is composed of a generator and a discriminator. The generator maps a random noise vector to a synthetic image, which is passed to the discriminator. The discriminator distinguishes generated images from real images and provides gradient feedback to the generator, which uses this feedback to update its learned approximation of the feature distribution of the real images. GANs thus rely on adversarial training, in which the generator and the discriminator each improve their performance based on the other's feedback [2].
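To make this feedback loop concrete, the following minimal sketch computes the standard GAN losses [2] from the discriminator's raw scores (logits) for a mini-batch, using the common non-saturating form of the generator loss. It is a generic illustration, not the loss used by MSG-SAGAN, and the function names are hypothetical.

```python
import math


def log_sigmoid(z):
    """Numerically stable log(sigmoid(z))."""
    return -math.log1p(math.exp(-z)) if z >= 0 else z - math.log1p(math.exp(z))


def discriminator_loss(real_logits, fake_logits):
    """D is rewarded for scoring real images as real and generated images as fake."""
    real_term = -sum(log_sigmoid(z) for z in real_logits) / len(real_logits)
    # log(1 - sigmoid(z)) == log(sigmoid(-z))
    fake_term = -sum(log_sigmoid(-z) for z in fake_logits) / len(fake_logits)
    return real_term + fake_term


def generator_loss(fake_logits):
    """Non-saturating generator loss: G is rewarded when D scores its images as real."""
    return -sum(log_sigmoid(z) for z in fake_logits) / len(fake_logits)
```

An undecided discriminator (logits of 0) gives a discriminator loss of 2 ln 2 and a generator loss of ln 2; as the discriminator grows confident, its loss falls toward 0 while the generator's loss rises, and the gradient of that loss is the feedback the generator learns from.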

GANs have difficulty synthesizing images with complex and diverse features. This problem arises from technical challenges that occur during GAN training, including mode collapse, non-convergence, and instability [3]. Mode collapse refers to the generator producing identical synthetic images regardless of the diversity of the real images, while non-convergence and instability unbalance the training due to the vanishing gradient problem. These problems limit the utility of GANs for image datasets with a diverse range of salient image features [4]. In general, GANs are built from convolutional neural networks (CNNs), which often fail to capture image features such as the texture, geometry, position, and color of objects. One likely reason is that CNNs rely mostly on local convolutional features to model dependencies across different image regions [5].

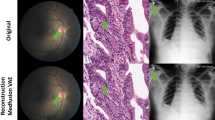

In biomedical imaging, the diverse features of biomedical images are important to consider in disease recognition and computer-aided diagnosis tasks [6], as they carry significant information about the disease being diagnosed and analyzed. GANs have been used for biomedical image synthesis in several imaging modalities, such as X-ray, Computed Tomography (CT), Magnetic Resonance (MR), Ultrasound, and Positron Emission Tomography (PET) [7]. However, generating diverse synthetic images remains a significant barrier that limits the utility of GANs in the biomedical imaging domain.

X-ray images are widely used to diagnose diseases in the human body. They contain a wide spectrum of disease features that help physicians monitor diseases more accurately [8]. However, publicly available X-ray image datasets are limited and imbalanced [9]. Image synthesis is a potential means of augmenting and balancing these datasets: synthetic images are produced by replicating the actual distributions of image features, rather than by producing transformed copies of existing images as in traditional augmentation approaches such as geometric transformations [10]. GANs have demonstrated remarkable advances in image synthesis in the biomedical imaging domain [11].

State-of-the-art GANs such as ProGAN [12], StyleGAN [13], and MSG-GAN [14] have been used for biomedical image synthesis and have demonstrated significant performance in generating diverse images [15]. Minibatch discrimination, pixel normalization (PixNorm), progressive growing of GAN layers, and spectral normalization have also been used to enhance the diversity of synthetic images. The multi-scale gradient technique makes the discriminator more robust in classifying real and synthetic images [16]. Biomedical images contain salient disease features such as the location, size, color, and structure of the disease region of interest. These features are important for predicting and analyzing the disease. However, GANs learn images through convolutional features without explicitly attending to these salient features when generating synthetic images, even though it is important for a GAN to learn them during training.

In image recognition, self-attention is considered one of the most effective approaches for focusing on diverse image features [17]. Self-attention computes the response at each position as a weighted combination of the features at all positions, with the weights given by a scoring function over the feature maps. Consequently, it helps a model focus on the features that matter for a specific application task [5].

To address the training challenges of GANs, several GAN variants based on attention mechanisms have been proposed to improve training performance on natural and biomedical images [17]. Self-attention improves the learning of the generator and discriminator models in generating diversified biomedical images [18].

The loss function also has a great impact on a GAN's ability to balance and stabilize training and to generate realistic synthetic images. Loss functions such as WGAN-GP, hinge, and relativistic hinge losses have shown reasonable improvements in generating diverse synthetic images [19]. In particular, the hinge loss has shown a strong capacity to improve a GAN's ability to generate diverse biomedical images [20].

The occurrence of mode collapse and the diversity of synthetic images are assessed using the Multi-scale Structural Similarity Index Measure (MS-SSIM) and the Fréchet Inception Distance (FID). The MS-SSIM score can detect a lack of diversity among synthetic images using perceptual similarity measures, while the FID score measures the distance between the feature distributions of real and synthetic images [21].

This work contributes a novel GAN architecture for diversified X-ray image synthesis. The generator and discriminator models use multi-scale gradient learning to learn the gradient information at intermediate layers of the generator and discriminator models using multi-scale image resolutions during the training of GAN. A self-attention layer is proposed in the generator and discriminator models to learn the long-range dependencies of X-ray image features during training through a multi-scale gradient approach. The relativistic-hinge loss is used to stabilize the training and generate diverse synthetic images. The MS-SSIM and FID scores are used to evaluate the diversity of generated images.

2 Related Work

Several GAN models with modified architectures and loss functions have been proposed to improve the generation of diverse synthetic images, including novel generators and discriminators tailored to specific application domains. GAN performance has been improved by embedding new convolutional layers, normalization, and regularization techniques in the generator and discriminator models [29,30,31], and several loss functions have been proposed to stabilize GAN training [32]. These advances demonstrate significant improvements but remain limited in their ability to synthesize diverse, high-quality images across different application domains.

In the domain of biomedical imaging, beyond the above contributions, variants of attention mechanisms have been proposed in GAN architectures to enhance their capacity to generate diverse, high-quality images for applications such as image segmentation, image reconstruction, image synthesis, and image-to-image translation, as detailed in Table 1. Attention mechanisms embedded in the generator, the discriminator, or both can improve the diversity and quality of the generated images. These GANs use conditional information for the segmentation and reconstruction of biomedical images with different attention mechanisms. For image synthesis, self-attention with a progressively growing GAN has been proposed to generate diverse dermoscopic images [18]; the authors succeeded in alleviating partial mode collapse. Similarly, a mask-attention mechanism has been proposed to generate high-quality Computed Tomography (CT) images with a conditional GAN [27]. The authors use additional information from attention maps of the targeted diseases to improve the quality of the generated images, but this approach requires additional effort to map the disease attention masks.

Conditional disease masks are generally not publicly available in biomedical imaging, and annotating them requires additional effort from physicians. This limits the scope of such GANs to annotated biomedical image datasets, so unconditional biomedical image synthesis with GANs requires further work. Therefore, this work investigates the use of self-attention feature maps to guide a GAN with multi-scale gradient learning for synthesizing diverse biomedical images.

3 Methodology

The workflow of the proposed approach is depicted in Fig. 1. MSG-SAGAN generates synthetic X-ray images using multi-scale gradient learning between the intermediate layers of the generator and discriminator models. Both models are built from convolutional and self-attention layers so that they can model long-range dependencies among image features, with the aim of stabilizing training and generating diverse X-ray images. Self-attention uses feature attention maps to improve the learning of the generator and discriminator models, as depicted in Fig. 2.
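Multi-scale gradient learning implies that the discriminator sees real images at the same resolutions as the generator's intermediate outputs. A minimal sketch of preparing such a real-image pyramid follows, assuming 2 × 2 average pooling, grayscale images stored as lists of rows, and a coarsest resolution of 4 × 4 (so a 64 × 64 input yields 64, 32, 16, 8, and 4). These choices and the function names are illustrative assumptions, not details taken from the paper.

```python
def avg_pool_2x2(img):
    """Halve an H x W grayscale image (list of rows) by averaging 2 x 2 blocks."""
    return [
        [
            (img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]) / 4.0
            for c in range(0, len(img[0]) - 1, 2)
        ]
        for r in range(0, len(img) - 1, 2)
    ]


def real_image_pyramid(img, min_size=4):
    """Return the real image at every scale the generator emits, finest first."""
    scales = [img]
    while len(scales[-1]) > min_size:
        scales.append(avg_pool_2x2(scales[-1]))
    return scales
```

Each level would be compared with the generator output of the same resolution, which is what lets gradients reach the generator's intermediate layers directly rather than only through its final layer.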

3.1 Dataset

In this work, the publicly available Coronavirus Disease (COVID-19) chest X-ray image dataset is used [33]. The dataset contains 3616 X-ray images, which were resized to \(64 \times 64\) resolution and augmented with horizontal flipping to increase the data size.
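Horizontal flipping can be sketched as mirroring each pixel row. This sketch assumes images are stored as lists of pixel rows and that each flipped copy is added alongside its original, which would double the 3616 images to 7232; the paper does not state whether flips are precomputed or applied randomly per mini-batch, and the function names are hypothetical.

```python
def horizontal_flip(img):
    """Mirror an image (list of pixel rows) from left to right."""
    return [list(reversed(row)) for row in img]


def augment_with_flips(images):
    """Return the originals followed by their mirror copies (dataset size doubles)."""
    return images + [horizontal_flip(img) for img in images]
```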

3.2 GAN Architecture

The Multi-scale Gradient Self-attention GAN (MSG-SAGAN) architecture uses a multi-scale gradient learning approach [16] between the generator and discriminator models. In MSG-SAGAN, the discriminator analyzes the outputs of the intermediate layers of the generator instead of only the final layer output, and sends gradient feedback to multiple scales of the generator, which helps the generator create realistic, diverse images. Training-stabilization techniques, namely PixNorm and mini-batch standard deviation, are implemented within the architecture: PixNorm is embedded in the generator to normalize feature vectors, and a mini-batch standard deviation layer is embedded in the discriminator to improve the diversity of the generated samples. MSG-SAGAN is trained for 500 epochs with a batch size of 16. As a baseline, MSG-GAN [16] is reimplemented and trained on the CelebA dataset using the same parameters as the original work, namely the WGAN-GP loss, the RMSprop optimizer, and a learning rate of 0.003 for both the generator and discriminator models.
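The two stabilization layers named above can be sketched as follows, using their usual definitions from progressive GANs (ProGAN). This is a simplified, hypothetical illustration: `pixel_norm` acts on the channel vector of a single pixel, and `minibatch_stddev` works on flattened feature vectors and appends one scalar, whereas a real layer computes the statistic per spatial position and appends it as an extra feature map.

```python
import math


def pixel_norm(channels, eps=1e-8):
    """PixNorm: rescale the C-dimensional feature vector of one pixel to unit RMS."""
    rms = math.sqrt(sum(a * a for a in channels) / len(channels) + eps)
    return [a / rms for a in channels]


def minibatch_stddev(batch, eps=1e-8):
    """Append the batch-averaged feature standard deviation to every sample.

    `batch` is a list of equally long, flattened feature vectors (simplification).
    """
    n, d = len(batch), len(batch[0])
    stds = []
    for k in range(d):
        mean = sum(sample[k] for sample in batch) / n
        var = sum((sample[k] - mean) ** 2 for sample in batch) / n
        stds.append(math.sqrt(var + eps))
    avg_std = sum(stds) / d  # one scalar summarizing batch diversity
    return [sample + [avg_std] for sample in batch]
```

If a generator's samples are nearly identical, the appended value is close to zero, which gives the discriminator a direct cue for detecting low diversity.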

The proposed architecture of MSG-SAGAN. MSG-SAGAN is trained using multi-scale gradient learning at intermediate layers of the generator and discriminator models to generate X-ray images. Embedding the self-attention mechanism in each block of the generator and discriminator models helps generate more diverse images by learning long-range dependencies of image features.

Hyperparameters: Hyperparameters have a strong impact on the training performance of the MSG-SAGAN architecture; selecting appropriate hyperparameters can improve the stability of GANs and their capacity to generate diverse synthetic images. In this work, MSG-SAGAN is trained with the Adam optimizer. The generator and discriminator models are tuned with learning rates of 0.003, 0.0003, 0.0002, and 0.0001 to evaluate MSG-SAGAN for diverse image synthesis. Equalized learning rates are used for both the generator and discriminator models to balance the training of MSG-SAGAN.

Spectral Normalization: Spectral normalization is applied in the generator and discriminator models of MSG-SAGAN. It helps MSG-SAGAN avoid noisy gradients and allows fewer discriminator updates per generator update, which reduces the computational cost of training and improves the diversity of synthetic images.
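Spectral normalization divides a weight matrix by an estimate of its largest singular value, usually obtained by power iteration [30]. The sketch below assumes a dense weight matrix given as a list of rows, a deterministic all-ones starting vector, and 20 iterations. In practice, a single iteration per training step with a persistent, randomly initialized vector is typical, and convolution kernels are first reshaped into matrices.

```python
import math


def _normalize(vec):
    norm = math.sqrt(sum(a * a for a in vec)) or 1.0
    return [a / norm for a in vec]


def spectral_normalize(W, n_iter=20):
    """Return (W / sigma, sigma), where sigma estimates the largest singular value of W.

    W is an m x n matrix given as a list of rows; n_iter must be at least 1.
    """
    m, n = len(W), len(W[0])
    u = _normalize([1.0] * m)  # deterministic start (random in practice)
    for _ in range(n_iter):
        v = _normalize([sum(W[i][j] * u[i] for i in range(m)) for j in range(n)])  # W^T u
        u = _normalize([sum(W[i][j] * v[j] for j in range(n)) for i in range(m)])  # W v
    sigma = sum(u[i] * sum(W[i][j] * v[j] for j in range(n)) for i in range(m))  # u^T W v
    return [[w / sigma for w in row] for row in W], sigma
```

After normalization the largest singular value is about 1, which bounds how sharply the layer can amplify its input and therefore the size of the gradients it passes back.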

Loss Function: The experiments were conducted using the relativistic hinge loss function defined in Eq. 1 and 2. Relativism in the hinge loss improves the discriminator's learning. Instead of judging each real image as real in isolation, the discriminator estimates how much more realistic real images are than generated images on average, which reflects the prior knowledge that half of the images it sees are fake. This prior information helps the discriminator classify and predict real and fake images more accurately [19].

In Eq. 1 and 2, as reported in [19], the discriminator and generator losses are defined over real and generated images. Real samples are denoted by \(x_r\) and generated samples by \(x_g\), where P and Q refer to the distributions of real and generated data, respectively. \(C\left( x\right) \) denotes the non-transformed discriminator output (before the final activation), while \(D\left( x\right) \) denotes the transformed output.
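Because Eq. 1 and 2 are taken from [19], the relativistic average hinge (RaHinge) loss can be sketched from mini-batch critic outputs \(C(x_r)\) and \(C(x_g)\), with mini-batch means standing in for expectations. In that formulation, the discriminator minimizes \(\mathbb {E}_{x_r \sim P}[\max (0, 1-(C(x_r)-\mathbb {E}_{x_g \sim Q} C(x_g)))] + \mathbb {E}_{x_g \sim Q}[\max (0, 1+(C(x_g)-\mathbb {E}_{x_r \sim P} C(x_r)))]\), and the generator minimizes the same expression with the roles of real and generated samples swapped. That MSG-SAGAN uses exactly this averaged variant is an assumption, and the function names are hypothetical.

```python
def _mean(values):
    return sum(values) / len(values)


def rahinge_d_loss(c_real, c_fake):
    """Relativistic average hinge loss for the discriminator.

    c_real, c_fake: non-transformed outputs C(x_r), C(x_g) for one mini-batch.
    """
    mean_real, mean_fake = _mean(c_real), _mean(c_fake)
    real_term = _mean([max(0.0, 1.0 - (c - mean_fake)) for c in c_real])
    fake_term = _mean([max(0.0, 1.0 + (c - mean_real)) for c in c_fake])
    return real_term + fake_term


def rahinge_g_loss(c_real, c_fake):
    """Generator loss: the same hinge terms with real and generated roles swapped."""
    mean_real, mean_fake = _mean(c_real), _mean(c_fake)
    fake_term = _mean([max(0.0, 1.0 - (c - mean_real)) for c in c_fake])
    real_term = _mean([max(0.0, 1.0 + (c - mean_fake)) for c in c_real])
    return fake_term + real_term
```

Once real outputs exceed the average fake output by the margin of 1 (and vice versa), the discriminator loss reaches 0, while the generator loss stays large. This is why each sample is scored relative to the opposite class instead of against a fixed label.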

Self-attention Mechanism: Self-attention is embedded in the generator and discriminator models of MSG-SAGAN. Self-attention has a significant capacity for modeling relationships between diverse image features, including different spatial regions, channels, and pixels [17]. The features of the previous hidden layer \(x \in \mathbb {R}^{C \times N}\) are first transformed into two feature spaces f and g, where \(f(x)=W_f x\) and \(g(x)=W_g x\), which are used to calculate the attention [5], as shown in Fig. 2:

\(\beta _{j, i}=\frac{\exp \left( s_{i j}\right) }{\sum _{i=1}^{N} \exp \left( s_{i j}\right) }, \quad \text {where } s_{i j}=f\left( x_i\right) ^{\top } g\left( x_j\right) \) (3)

In Eq. 3, \(\beta _{j, i}\) indicates the extent to which the model attends to the \(i^{\text {th}}\) location when synthesizing the \(j^{\text {th}}\) region. C denotes the number of channels and N the number of feature locations in the features from the previous hidden layer. The output of the attention layer is formulated [5] as follows:

\(o_j=v\left( \sum _{i=1}^{N} \beta _{j, i}\, h\left( x_i\right) \right) , \quad h\left( x_i\right) =W_h x_i, \quad v\left( x_i\right) =W_v x_i\) (4)

In Eq. 4, the output is \(o=\left( o_1, o_2, \ldots , o_j, \ldots , o_N\right) \in \mathbb {R}^{C \times N}\), while \(W_f \in \mathbb {R}^{\bar{C} \times C}\), \(W_g \in \mathbb {R}^{\bar{C} \times C}\), \(W_h \in \mathbb {R}^{\bar{C} \times C}\), and \(W_v \in \mathbb {R}^{C \times \bar{C}}\) are learned weight matrices, implemented as \(1 \times 1\) convolutions within the attention mechanism. To improve memory efficiency, the channel number is reduced to \(\bar{C}=C/k\), where k is set to 8 as suggested in [5].

Finally, the output of the attention layer is multiplied by a scale parameter and added back to the input feature map [5], so the final output of the self-attention layer is:

\(y_i=\gamma \, o_i+x_i\) (5)

In Eq. 5, \(\gamma \) is a learnable scalar initialized to 0, which lets the network first rely on cues in the local neighborhood and then gradually learn to assign more weight to non-local evidence [5].
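The attention computation of Eq. 3 to 5 can be traced with a small forward pass. This is a plain-Python sketch under stated assumptions: features are a C × N list of rows, the weight matrices are supplied directly (standing in for the 1 × 1 convolutions), and the tiny example uses C = 2 channels reduced to C/k = 1 rather than the paper's k = 8. It illustrates the technique from [5], not the authors' implementation.

```python
import math


def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A):
    return [list(col) for col in zip(*A)]


def self_attention(x, W_f, W_g, W_h, W_v, gamma):
    """SAGAN-style self-attention over a C x N feature matrix x (channels x locations).

    W_f, W_g, W_h: reduced-channels x C; W_v: C x reduced-channels; gamma: scalar.
    """
    f, g, h = matmul(W_f, x), matmul(W_g, x), matmul(W_h, x)  # each reduced x N
    n_loc = len(x[0])
    s = matmul(transpose(f), g)  # s[i][j] = f(x_i)^T g(x_j), N x N
    beta = []  # beta[j][i]: softmax over i of s[i][j] (Eq. 3)
    for j in range(n_loc):
        scores = [s[i][j] for i in range(n_loc)]
        top = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(v - top) for v in scores]
        total = sum(exps)
        beta.append([e / total for e in exps])
    attended = matmul(h, transpose(beta))  # column j = sum_i beta[j][i] * h(x_i)
    o = matmul(W_v, attended)  # Eq. 4, C x N
    return [[gamma * o[c][j] + x[c][j] for j in range(n_loc)] for c in range(len(x))]  # Eq. 5
```

With gamma = 0, matching its initialization, the layer returns its input unchanged. With W_f set to zero, every location attends uniformly to all locations, so each output column receives the mean of h(x_i) mapped back through W_v.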

3.3 Identification of Mode Collapse Problem

The occurrence of mode collapse is identified using MS-SSIM, which computes a similarity score between two images from their luminance, contrast, and structure. The MS-SSIM score is computed over randomly selected image pairs from a dataset to assess its diversity, and the scores of the real dataset and of the synthetic dataset generated by a GAN are compared. An MS-SSIM score for the synthetic dataset that is higher than that of the real dataset indicates reduced diversity, i.e., the occurrence of mode collapse. MS-SSIM between two image samples a and b is defined in Eq. 6 [34]:

\(\text {MS-SSIM}(a, b)=\left[ l_M(a, b)\right] ^{\alpha _M} \prod _{j=1}^{M}\left[ c_j(a, b)\right] ^{\beta _j}\left[ s_j(a, b)\right] ^{\gamma _j}\) (6)

In Eq. 6, the contrast (c) and structure (s) terms are computed at each scale j, while the luminance (l) term is computed only at the coarsest scale M. The exponents \(\alpha \), \(\beta \), and \(\gamma \) weight the relative importance of each term, as detailed in [35]. In this work, 3616 real and 3616 synthetic X-ray images are used to compute the MS-SSIM scores of the real and synthetic image datasets.
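The structure of Eq. 6 can be illustrated with a simplified MS-SSIM. Unlike the reference method [35], which computes contrast and structure with local Gaussian windows, this sketch uses global image statistics at each scale. It assumes grayscale images in [0, 1] stored as lists of rows (at least 16 × 16 for five scales), 2 × 2 average pooling between scales, the standard SSIM constants, and the standard five-scale weights with \(\alpha _M=\beta _j=\gamma _j\), and it clamps negative contrast-structure products to zero. The pairwise averaging mirrors how dataset-level diversity is assessed in this section; the function names and pair count are hypothetical.

```python
import math
import random

# Per-scale exponents from [35]; here alpha_M = beta_j = gamma_j = WEIGHTS[j].
WEIGHTS = (0.0448, 0.2856, 0.3001, 0.2363, 0.1333)


def _downsample(img):
    """2 x 2 average pooling of a list-of-rows grayscale image."""
    return [[(img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]) / 4.0
             for c in range(0, len(img[0]) - 1, 2)]
            for r in range(0, len(img) - 1, 2)]


def _global_stats(a, b):
    xs = [p for row in a for p in row]
    ys = [q for row in b for q in row]
    n = len(xs)
    mu_a, mu_b = sum(xs) / n, sum(ys) / n
    var_a = sum((p - mu_a) ** 2 for p in xs) / n
    var_b = sum((q - mu_b) ** 2 for q in ys) / n
    cov = sum((p - mu_a) * (q - mu_b) for p, q in zip(xs, ys)) / n
    return mu_a, mu_b, var_a, var_b, cov


def ms_ssim(a, b, data_range=1.0, weights=WEIGHTS):
    """Simplified (global-statistics) MS-SSIM between images a and b."""
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    c3 = c2 / 2
    score = 1.0
    for j, w in enumerate(weights):
        mu_a, mu_b, var_a, var_b, cov = _global_stats(a, b)
        sd_a, sd_b = math.sqrt(var_a), math.sqrt(var_b)
        contrast = (2 * sd_a * sd_b + c2) / (var_a + var_b + c2)
        structure = (cov + c3) / (sd_a * sd_b + c3)
        score *= max(contrast * structure, 0.0) ** w  # clamp: no real power of a negative
        if j == len(weights) - 1:  # luminance only at the coarsest scale M
            luminance = (2 * mu_a * mu_b + c1) / (mu_a ** 2 + mu_b ** 2 + c1)
            score *= max(luminance, 0.0) ** w
        else:
            a, b = _downsample(a), _downsample(b)
    return score


def mean_pairwise_ms_ssim(images, n_pairs=100, seed=0):
    """Average MS-SSIM over random distinct pairs: higher means less diverse."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_pairs):
        i, j = rng.sample(range(len(images)), 2)
        total += ms_ssim(images[i], images[j])
    return total / n_pairs
```

A set of identical images scores 1.0, the extreme case of mode collapse, while a varied set scores lower. Comparing the synthetic set's average with the real set's average follows the mode-collapse test described above.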

3.4 Evaluation of the Diversity and Quality of Synthetic X-ray Images

The diversity and quality of generated images are evaluated using FID scores. FID computes the Wasserstein-2 distance between multivariate Gaussians fitted to the feature activations of real and synthetic images [36]; the mean and covariance of each Gaussian are calculated from the activations of the last pooling layer of an Inception-V3 model. The FID score is calculated as shown in Eq. 7 [34]:

\(\text {FID}(r, s)=\left\| \mu _{r}-\mu _{s}\right\| _2^2+\operatorname {Tr}\left( \varSigma _{r}+\varSigma _{s}-2\left( \varSigma _{r} \varSigma _{s}\right) ^{1/2}\right) \) (7)

In Eq. 7, r and s denote real and synthetic images, while \(\left( \mu _{r}, \varSigma _{r}\right) \) and \(\left( \mu _{s}, \varSigma _{s}\right) \) denote the means and covariances of their feature activations. The FID score ranges from 0.0 to \(+\infty \). A higher FID score indicates a larger distance between the synthetic and real data distributions, which can indicate the occurrence of mode collapse [34]. A lower FID score indicates a smaller distance between the two distributions, which indicates a higher degree of diversity. This work measures FID using 3616 real and 3616 generated images.
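Eq. 7 can be evaluated directly once feature vectors are available. The sketch below assumes the Inception-V3 pool features have already been extracted (one row per image) and uses the unbiased sample covariance. It computes the trace of the matrix square root through the identity \(\operatorname {Tr}((\varSigma _r \varSigma _s)^{1/2}) = \operatorname {Tr}((\varSigma _r^{1/2} \varSigma _s \varSigma _r^{1/2})^{1/2})\), with a small Jacobi eigensolver so that it runs without third-party packages. It is only practical for toy dimensions; for 2048-dimensional Inception features one would use a linear-algebra library instead (for example, `scipy.linalg.sqrtm`).

```python
import math


def _mean(X):
    return [sum(row[k] for row in X) / len(X) for k in range(len(X[0]))]


def _cov(X, mu):
    d = len(mu)
    return [[sum((row[a] - mu[a]) * (row[b] - mu[b]) for row in X) / (len(X) - 1)
             for b in range(d)] for a in range(d)]


def _matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def _eigh(S, sweeps=50, tol=1e-14):
    """Cyclic Jacobi eigen-decomposition of a symmetric matrix: (eigenvalues, V)."""
    n = len(S)
    A = [row[:] for row in S]
    V = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(sweeps):
        if sum(A[i][j] ** 2 for i in range(n) for j in range(n) if i != j) < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if A[p][q] == 0.0:
                    continue
                theta = (A[q][q] - A[p][p]) / (2 * A[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1))
                c = 1 / math.sqrt(t * t + 1)
                s = t * c
                for k in range(n):  # A <- A P
                    A[k][p], A[k][q] = c * A[k][p] - s * A[k][q], s * A[k][p] + c * A[k][q]
                for k in range(n):  # A <- P^T A
                    A[p][k], A[q][k] = c * A[p][k] - s * A[q][k], s * A[p][k] + c * A[q][k]
                for k in range(n):  # V <- V P accumulates the eigenvectors
                    V[k][p], V[k][q] = c * V[k][p] - s * V[k][q], s * V[k][p] + c * V[k][q]
    return [A[i][i] for i in range(n)], V


def _sqrtm_psd(S):
    """Square root of a symmetric PSD matrix (negative round-off eigenvalues clipped)."""
    w, V = _eigh(S)
    n = len(S)
    return [[sum(V[i][k] * math.sqrt(max(w[k], 0.0)) * V[j][k] for k in range(n))
             for j in range(n)] for i in range(n)]


def fid(real_feats, fake_feats):
    """FID between two sets of feature vectors (one row per image), as in Eq. 7."""
    mu_r, mu_s = _mean(real_feats), _mean(fake_feats)
    cov_r, cov_s = _cov(real_feats, mu_r), _cov(fake_feats, mu_s)
    root_r = _sqrtm_psd(cov_r)
    M = _matmul(_matmul(root_r, cov_s), root_r)
    M = [[(M[i][j] + M[j][i]) / 2 for j in range(len(M))] for i in range(len(M))]
    eigvals, _ = _eigh(M)  # same eigenvalues as cov_r @ cov_s
    tr_covmean = sum(math.sqrt(max(e, 0.0)) for e in eigvals)
    mean_term = sum((a - b) ** 2 for a, b in zip(mu_r, mu_s))
    trace_term = sum(cov_r[i][i] + cov_s[i][i] for i in range(len(mu_r)))
    return mean_term + trace_term - 2 * tr_covmean
```

Shifting every feature vector by one unit in each of two dimensions leaves the covariances unchanged, so the FID equals the squared mean difference, 2.0; identical feature sets give an FID of 0.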

4 Results and Discussion

MSG-SAGAN is proposed to alleviate mode collapse in MSG-GAN and to improve the diversity of generated synthetic X-ray images. It is a variant of MSG-GAN that combines an attention mechanism with multi-scale gradient learning to synthesize more diverse X-ray images. The MS-SSIM score is used to identify the occurrence of mode collapse, while the FID score is used to evaluate the diversity of the synthetic images. The resulting MS-SSIM and FID scores of the MSG-GAN and MSG-SAGAN architectures under a range of parameter settings are compared in Table 2.

The reimplementation of MSG-GAN as detailed in [16] resulted in a higher FID score on the CelebA dataset than reported in the original work. This is likely due to the number of real and synthetic images used to calculate FID: these details are omitted from [16], whereas this work used 10,000 real and 10,000 synthetic images.

For X-ray image synthesis, MSG-GAN\(_{2}\) is trained using the same parameter settings, including the loss, optimizer, learning rate, and horizontal-flipping data augmentation. MSG-GAN underperformed in synthesizing diverse X-ray images, as indicated by the degraded MS-SSIM and FID scores.

The WGAN-GP loss is used to stabilize GAN training by avoiding the vanishing gradient problem. However, with the WGAN-GP loss, the RMSprop optimizer did not make training converge on the X-ray images, because RMSprop relies only on the second moment of the gradients, which leads to unstable training. Therefore, this parameter setting of MSG-GAN was not effective at alleviating mode collapse, stabilizing training, or generating diverse X-ray images.

X-ray images contain salient features such as the spine, heart, and lungs, with visual signatures such as the ribs, the aortic arch, and the distinct curvature of the lower lungs. The discriminator must learn all of these features to provide constructive feedback to the generator, so a GAN should focus on them when generating synthetic images. The proposed MSG-SAGAN architecture has the capacity to learn these X-ray features using the attention feature maps depicted in Fig. 2.

Firstly, the effect of data augmentation is analyzed. MSG-GAN\(_{3}\) does not use horizontal flipping; its MS-SSIM and FID scores improve slightly but not significantly, as the MS-SSIM score of its synthetic X-ray images remains higher than that of the real images, which indicates mode collapse. MSG-GAN\(_{7}\), trained with the Adam optimizer and relativistic hinge loss but without horizontal flipping, produced degraded results compared with MSG-GAN\(_{6}\), which uses flipping. Furthermore, MSG-SAGAN\(_{6-8}\), which use horizontal flipping, alleviated mode collapse and improved the diversity of synthetic images compared with MSG-SAGAN\(_{3-5}\), which do not.

Secondly, MSG-GAN\(_{4}\) is trained with the Adam optimizer and the WGAN-GP loss, which degrades the results. In contrast, MSG-GAN\(_{6-11}\) and MSG-SAGAN\(_{1-8}\) are trained with the Adam optimizer and the relativistic hinge loss, which alleviates mode collapse and improves the diversity of generated images; where these instances show degraded results, the degradation is attributable to other parameters, such as spectral normalization and the attention mechanism. Adam outperformed RMSprop because it uses both the first and second moments of the gradients, which helps it stabilize training and converge faster.
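The difference between the two optimizers can be seen in their single-step update rules, sketched below for a list of scalar parameters. The hyperparameters (RMSprop decay 0.9; Adam β1 = 0.9, β2 = 0.999, ε = 1e-8) are common defaults assumed for illustration, not values reported in the paper, and the state dictionaries are hypothetical.

```python
import math


def rmsprop_step(params, grads, state, lr=0.001, rho=0.9, eps=1e-8):
    """One RMSprop update: scale each gradient by a running RMS of past gradients."""
    sq = state.setdefault("sq", [0.0] * len(params))
    for k, g in enumerate(grads):
        sq[k] = rho * sq[k] + (1 - rho) * g * g  # second moment only
        params[k] -= lr * g / (math.sqrt(sq[k]) + eps)
    return params


def adam_step(params, grads, state, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update: momentum (first moment) plus RMS scaling (second moment)."""
    m = state.setdefault("m", [0.0] * len(params))
    v = state.setdefault("v", [0.0] * len(params))
    t = state.get("t", 0) + 1
    state["t"] = t
    for k, g in enumerate(grads):
        m[k] = beta1 * m[k] + (1 - beta1) * g  # running mean of gradients
        v[k] = beta2 * v[k] + (1 - beta2) * g * g  # running mean of squared gradients
        m_hat = m[k] / (1 - beta1 ** t)  # bias correction for the zero initialization
        v_hat = v[k] / (1 - beta2 ** t)
        params[k] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return params
```

On the first step, Adam's bias correction moves each parameter by about lr, whereas RMSprop moves it by lr/√(1 − 0.9) ≈ 3.16·lr. On later steps, Adam's first moment also averages the gradient direction over time, which is the extra information the text credits for its more stable training.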

Thirdly, the relativistic hinge loss is used with the Adam and RMSprop optimizers in MSG-GAN\(_{6-11}\) and MSG-SAGAN\(_{1-8}\). The relativistic hinge loss yields a significant improvement in alleviating mode collapse and improving the diversity of synthetic images, because relativism in the hinge loss helps the discriminator provide constructive feedback to the generator.

The learning rate has a strong impact on the training of the GAN architectures. The most performant learning rate was 0.003 for MSG-GAN and 0.0001 for MSG-SAGAN, as indicated in Table 2. This suggests that multi-scale gradient learning alone trains stably with a learning rate of 0.003, whereas adding the self-attention mechanism requires a learning rate of 0.0001 to keep training balanced.

Results indicate that spectral normalization degrades the training of MSG-GAN while improving the training of MSG-SAGAN, as indicated in Table 2. In MSG-GAN, spectral normalization degrades the significant gradients flowing between the generator and discriminator models (see MSG-GAN\(_{11}\)). In MSG-SAGAN, by contrast, spectral normalization helps suppress the noisy gradients introduced by the attention mechanism during training.

MSG-SAGAN\(_{8}\) outperforms MSG-GAN\(_{6}\) in synthesizing diverse images and stabilizing the training process. Integrating the self-attention mechanism improves the flow of multi-scale gradients between the generator and discriminator models at small learning rates but degrades it at large ones. The multi-scale gradients improve the learning capacity of both the generator and the discriminator by propagating gradients between the intermediate layers of the generator and the discriminator in both directions. In addition, the feature attention maps help the GAN model relationships between long-range dependencies of diverse image features.

The most performant MSG-GAN instance, MSG-GAN\(_{6}\), achieves an improved MS-SSIM of 0.474 for synthetic X-ray images relative to the real images and an FID of 167.1. The most performant MSG-SAGAN instance, MSG-SAGAN\(_{8}\), achieves an improved MS-SSIM of 0.50 for synthetic X-ray images relative to the real images and an improved FID of 139.6. The MS-SSIM and FID scores of MSG-SAGAN\(_{8}\) indicate stable training and a reduced impact of mode collapse, with more diverse synthetic X-ray images than the other instances evaluated.

5 Conclusion

In this work, MSG-SAGAN was proposed to reduce the impact of mode collapse and training instability for generating synthetic X-ray images. The MSG-SAGAN demonstrated an improved capacity for the synthesis of diversified X-ray images using the attention mechanism as compared to the MSG-GAN. The MSG-SAGAN was evaluated under different settings to quantify their impact on the diversity of synthetic images generated. Results were evaluated using the MS-SSIM and FID scores. The most performant MS-SSIM (0.50) and FID (139.6) were produced by MSG-SAGAN.

The MS-SSIM and FID scores indicate that the multi-scale gradient approach in a GAN is most performant with a learning rate of 0.003 for X-ray images, whereas an attention mechanism combined with multi-scale gradient learning is most performant with a learning rate of 0.0001. These results demonstrate the impact of the learning rate on training GANs to synthesize diverse X-ray images. A learning rate of 0.0001 takes small steps when updating the weights at each iteration, which helps MSG-SAGAN converge to balanced and stable training.

Spectral normalization degrades the training stability of MSG-GAN while improving that of MSG-SAGAN. Adam was the most performant optimizer for both MSG-GAN and MSG-SAGAN. The relativistic hinge loss stabilizes training and improves the generation of diverse X-ray images. Horizontal-flipping data augmentation significantly improves the training stability of MSG-SAGAN when synthesizing diverse X-ray images: it provides mirror copies of the X-ray images, giving MSG-SAGAN more examples from which to learn their salient features.

In future work, different variants of attention mechanisms will be investigated with a multi-scale gradient approach in GAN architectures for synthesizing X-ray images. Self-attention will be integrated at different positions in the generator and discriminator models, or only in the generator or only in the discriminator of MSG-SAGAN. Different learning rates will also be investigated to further improve the diversity of the synthesized X-ray images. This work will also be extended by integrating self-attention and its variants into state-of-the-art GANs such as StyleGAN3 and Projected GANs.

References

[1] Wang, Z., She, Q., Ward, T.E.: Generative adversarial networks in computer vision: a survey and taxonomy. ACM Comput. Surv. (CSUR) 54(2), 1–38 (2021)

[2] Goodfellow, I., et al.: Generative adversarial networks. Commun. ACM 63(11), 139–144 (2020)

[3] Jabbar, A., Li, X., Omar, B.: A survey on generative adversarial networks: variants, applications, and training. ACM Comput. Surv. (CSUR) 54(8), 1–49 (2021)

[4] Wu, Z., Wang, Z., Yuan, Y., Zhang, J., Wang, Z., Jin, H.: Black-box diagnosis and calibration on GAN intra-mode collapse: a pilot study. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 17(3s), 1–18 (2021)

[5] Zhang, H., Goodfellow, I., Metaxas, D., Odena, A.: Self-attention generative adversarial networks. In: International Conference on Machine Learning, pp. 7354–7363. PMLR (2019)

[6] Liu, Z., et al.: A survey on applications of deep learning in microscopy image analysis. Comput. Biol. Med. 134, 104523 (2021)

[7] AlAmir, M., AlGhamdi, M.: The role of generative adversarial network in medical image analysis: an in-depth survey. ACM Comput. Surv. (CSUR) 55, 1–36 (2022)

[8] Aggarwal, R., et al.: Diagnostic accuracy of deep learning in medical imaging: a systematic review and meta-analysis. NPJ Digit. Med. 4(1), 1–23 (2021)

[9] Álvarez-Rodríguez, L., de Moura, J., Novo, J., Ortega, M.: Does imbalance in chest X-ray datasets produce biased deep learning approaches for Covid-19 screening? BMC Med. Res. Methodol. 22(1), 1–17 (2022)

[10] Shorten, C., Khoshgoftaar, T.M.: A survey on image data augmentation for deep learning. J. Big Data 6(1), 1–48 (2019)

[11] Ahmad, W., Ali, H., Shah, Z., Azmat, S.: A new generative adversarial network for medical images super resolution. Sci. Rep. 12(1), 1–20 (2022)

[12] Kim, M., Kim, S., Kim, M., Bae, H.J., Park, J.W., Kim, N.: Realistic high-resolution lateral cephalometric radiography generated by progressive growing generative adversarial network and quality evaluations. Sci. Rep. 11(1), 1–10 (2021)

[13] Hong, S., et al.: 3D-StyleGAN: a style-based generative adversarial network for generative modeling of three-dimensional medical images. In: Engelhardt, S., et al. (eds.) DGM4MICCAI/DALI 2021. LNCS, vol. 13003, pp. 24–34. Springer, Cham (2021). https://doi.org/10.1007/978-3-030-88210-5_3

[14] Molahasani Majdabadi, M., Choi, Y., Deivalakshmi, S., Ko, S.: Capsule GAN for prostate MRI super-resolution. Multimed. Tools Appl. 81(3), 4119–4141 (2022)

[15] Park, H.Y., et al.: Realistic high-resolution body computed tomography image synthesis by using progressive growing generative adversarial network: visual turing test. JMIR Med. Inform. 9(3), e23328 (2021)

[16] Karnewar, A., Wang, O.: MSG-GAN: multi-scale gradients for generative adversarial networks. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 7799–7808 (2020)

[17] Guo, M.H., et al.: Attention mechanisms in computer vision: a survey. Comput. Vis. Media 8, 331–368 (2022)

[18] Abdelhalim, I.S.A., Mohamed, M.F., Mahdy, Y.B.: Data augmentation for skin lesion using self-attention based progressive generative adversarial network. Expert Syst. Appl. 165, 113922 (2021)

[19] Jolicoeur-Martineau, A.: The relativistic discriminator: a key element missing from standard GAN. arXiv preprint arXiv:1807.00734 (2018)

[20] Kim, E., Cho, H., Ko, E., Park, H.: Generative adversarial network with local discriminator for synthesizing breast contrast-enhanced MRI. In: 2021 IEEE EMBS International Conference on Biomedical and Health Informatics (BHI), pp. 1–4. IEEE (2021)

[21] Saad, M.M., Rehmani, M.H., O’Reilly, R.: Addressing the intra-class mode collapse problem using adaptive input image normalization in GAN-based X-ray images. In: 2022 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), pp. 2049–2052 (2022). https://doi.org/10.1109/EMBC48229.2022.9871260

[22] Li, H., et al.: Explainable attention guided adversarial deep network for 3D radiotherapy dose distribution prediction. Knowl.-Based Syst. 241, 108324 (2022)

[23] Shang, C., et al.: Short-axis PET image quality improvement by attention CycleGAN using total-body PET. J. Healthcare Eng. 2022 (2022)

[24] Tang, J., Zou, B., Li, C., Feng, S., Peng, H.: Plane-wave image reconstruction via generative adversarial network and attention mechanism. IEEE Trans. Instrum. Meas. 70, 1–15 (2021)

[25] Yin, J., Zhou, Z., Xu, S., Yang, R., Liu, K.: A generative adversarial network fused with dual-attention mechanism and its application in multitarget image fine segmentation. Comput. Intell. Neurosci. 2021 (2021)

[26] Deng, H., Zhang, Y., Li, R., Hu, C., Feng, Z., Li, H.: Combining residual attention mechanisms and generative adversarial networks for hippocampus segmentation. Tsinghua Sci. Technol. 27(1), 68–78 (2021)

[27] Liu, Y., Meng, L., Zhong, J.: MAGAN: mask attention generative adversarial network for liver tumor CT image synthesis. J. Healthcare Eng. 2021 (2021)

[28] Kearney, V., et al.: Attention-aware discrimination for MR-to-CT image translation using cycle-consistent generative adversarial networks. Radiol. Artif. Intell. 2(2) (2020)

[29] Radford, A., Metz, L., Chintala, S.: Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint arXiv:1511.06434 (2015)

[30] Miyato, T., Kataoka, T., Koyama, M., Yoshida, Y.: Spectral normalization for generative adversarial networks. arXiv preprint arXiv:1802.05957 (2018)

[31] Nie, W., Patel, A.B.: Towards a better understanding and regularization of GAN training dynamics. In: Uncertainty in Artificial Intelligence, pp. 281–291. PMLR (2020)

[32] Pan, Z., et al.: Loss functions of generative adversarial networks (GANs): opportunities and challenges. IEEE Trans. Emerg. Top. Computat. Intell. 4(4), 500–522 (2020)

[33] Rahman, T., et al.: Exploring the effect of image enhancement techniques on Covid-19 detection using chest X-ray images. Comput. Biol. Med. 132, 104319 (2021)

[34] Borji, A.: Pros and cons of GAN evaluation measures. Comput. Vis. Image Underst. 179, 41–65 (2019)

[35] Wang, Z., Simoncelli, E.P., Bovik, A.C.: Multiscale structural similarity for image quality assessment. In: The Thirty-Seventh Asilomar Conference on Signals, Systems & Computers, vol. 2, pp. 1398–1402. IEEE (2003)

[36] Miyato, T., Koyama, M.: cGANs with projection discriminator. In: International Conference on Learning Representations (2018). https://openreview.net/forum?id=ByS1VpgRZ

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this paper

Cite this paper

Saad, M.M., Rehmani, M.H., O’Reilly, R. (2023). A Self-attention Guided Multi-scale Gradient GAN for Diversified X-ray Image Synthesis. In: Longo, L., O’Reilly, R. (eds) Artificial Intelligence and Cognitive Science. AICS 2022. Communications in Computer and Information Science, vol 1662. Springer, Cham. https://doi.org/10.1007/978-3-031-26438-2_2

DOI: https://doi.org/10.1007/978-3-031-26438-2_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-26437-5

Online ISBN: 978-3-031-26438-2

eBook Packages: Computer Science, Computer Science (R0)