Abstract

Recently, significant efforts have been made to explore human activity recognition (HAR) techniques that use information gathered by existing indoor wireless infrastructures through WiFi signals, without requiring the monitored subject to carry a dedicated device. The key intuition is that different activities introduce different multi-paths in WiFi signals and generate different patterns in the time series of channel state information (CSI). In this paper, we propose and evaluate a full pipeline for a CSI-based human activity recognition framework for 12 activities in three different spatial environments using two deep learning models: ABiLSTM and CNN-ABiLSTM. Evaluation experiments demonstrate that the proposed models outperform state-of-the-art models. The experiments also show that the proposed models can be applied to other environments with different configurations, albeit with some caveats. The proposed ABiLSTM model achieves an overall accuracy of 94.03%, 91.96%, and 92.59% across the three target environments, while the proposed CNN-ABiLSTM model reaches an accuracy of 98.54%, 94.25%, and 95.09% across those same environments.

Keywords

- WiFi

- Channel State Information (CSI)

- Human Activity Recognition (HAR)

- Deep learning

- Convolutional Neural Network (CNN)

- Long Short Term Memory (LSTM)

1 Introduction

With the rise of the Internet of Things, human sensing techniques have gained prominence, with numerous pervasive applications such as human activity recognition, smart healthcare, safety surveillance, and ubiquitous interaction all gaining popularity. Previously established research approaches to HAR fall into three categories: vision-based, low-cost radar-based, and wearable sensor-based approaches [11]. However, these traditional techniques have many limitations. Vision-based approaches can only operate in a limited number of line-of-sight (LOS) environments. They are vulnerable to lighting conditions, obstacles, and dead angles, and they raise many issues related to human privacy. Low-cost radar-based systems often circumvent the privacy problems but have short operational distances, typically in the tens of centimetres. Although wearable sensor-based solutions achieve fine-grained behaviour awareness, their high cost and limited real-time capability make them unsuitable for some applications (e.g. survival applications).

From a different technology perspective, WiFi technology has opened the way for numerous technological revolutions. It has an impact on almost every aspect of modern life, especially in indoor environments. It does not require wearable sensors, and it has important features that make it a desirable choice when compared to other sensing technologies. WiFi signals travel freely through the air due to the unguided nature of radio signal propagation and may be reflected by walls and/or other objects in an environment. Antennas at the receiver may thus receive signals from two or more paths, a phenomenon known as multi-path propagation. The core idea of human activity recognition using WiFi signals is that moving bodies affect multi-path propagation and that different movements have different discernible effects. WiFi-based technology typically provides two types of wireless signal measurements: RSSI (Received Signal Strength Indicator) and CSI (Channel State Information) [11]. RSSI describes coarse-grained information about the communication link, whereas CSI describes fine-grained information about the state of the communication channels. RSSI is less stable than CSI because it cannot capture dynamic changes in the signal while the activity is being performed. In recent years, CSI has received more attention than RSSI as a more informative description of WiFi signals for different sensing applications such as human activity recognition, human presence detection, fall detection, gesture recognition, and people counting. Such models take advantage of the fact that when a person moves between the transmitter and receiver, the wireless signals reflected from the body create a distinct pattern [12]. CSI is a complex value representing the amplitude and phase information of multiple paths. We can therefore analyze the received signals in terms of amplitude and phase shift to recognize human activities.
In this paper, we propose a WiFi CSI-based human activity recognition approach that uses two deep learning models to recognize a predefined set of human activities.

Despite the numerous advantages of using CSI to recognize human activities, there are some drawbacks. Firstly, the complicated relationship between CSI measurements and human activities is challenging to model precisely using statistical models or traditional machine learning algorithms. Secondly, WiFi network settings may affect performance. Some WiFi sensing applications require a high CSI measurement frequency in order to achieve optimal performance, which can increase the overhead for WiFi communications and, in turn, decrease sensing performance and efficiency.

In light of the above, the contributions of this paper are summarized as follows. First, we propose a human activity recognition framework using Attention-based Bidirectional Long Short Term Memory (ABiLSTM) and a Convolutional Neural Network combined with ABiLSTM (CNN-ABiLSTM). Second, we conduct experiments in three different indoor environments and evaluate the proposed framework across these environments. The performance evaluation shows that our system is robust and generic enough to be used in training for other environments. Third, we investigate the impact of the spatial environment on the trained models and their ability to transfer across environments using the transfer learning approach.

The rest of the paper is organized as follows. Section 2 discusses work related to human activity recognition using WiFi Technology. Section 3 describes the proposed methodology. Later, in Sect. 4 we describe the evaluation and results of the proposed approach. Finally, we conclude the work and explain the future work in Sect. 5.

2 Related Work

WiFi-based human activity recognition has gained tremendous attention recently due to its ubiquitous availability in indoor areas. This section provides a brief literature review of the existing works related to WiFi-based human activity recognition.

The RSSI indicates the power level received at the receiver and can be used to estimate the distance as well as the channel condition between the transmitter and receiver. Most of the previous work proposed using RSSI changes to recognise human activities by analysing a specific space. For example, the authors in [18] captured RSSI values from WiFi signals to recognize four activities (lying down, crawling, standing, and walking) and achieved a recognition accuracy of over 80%. In [7], the authors proposed an approach based on the slow fading component of the received RSSI and the SVM algorithm that achieves an overall accuracy of 94%. However, because RSSI only provides coarse-grained information about channel variations, it is frequently influenced by multi-path effects and noise.

Unlike RSSI, CSI can capture the combined effects of scattering, fading, and power decay with distance, and it can detect fine-grained changes in wireless channels. It is a common channel property of communication links. Since the release of the Linux 802.11n CSI Tool [9], a significant amount of research has used CSI measurements in HAR tasks.

In [22], Wang et al. gathered 1440 CSI samples for six daily activities such as walking, sitting and standing. They proposed a multi-task 1D convolutional neural network (CNN) with a ResNet-based basic architecture [10] and a simple aggregate loss function. This architecture achieved an average accuracy of 88.13%, which is somewhat low given the small number of activities included in the dataset.

A framework called CSITime was proposed by Yadav et al. [23]. CSITime is a generic neural network architecture for CSI-based HAR. The authors treated the HAR problem as a multivariate time series classification problem, using time-series methods as inspiration. They evaluated the model on three different datasets: ARIL [8], StanWiFi [24], and SignFi [14]. CSITime achieved an accuracy of 98.20%, 98%, and 95.42% on the ARIL, StanWiFi, and SignFi datasets, respectively. However, due to the lack of a standard dataset that uses CSI data collected in such settings, they were unable to assess the performance of the proposed model in an environment with high interference.

Memmesheimer et al. [15] used EfficientNet [20] to classify multi-variate signal sequences after encoding them as images. They evaluated the model’s performance on ARIL [8] and reported an accuracy of 94.91%. One limitation of this work is the time-consuming computation required to convert time-series data into image sequences. Additionally, adopting a very complex model such as EfficientNet further increases the computation time. In our own work, we extract useful features directly from the time series data to avoid this expensive encoding of time series sequences.

Damodaran et al. [5] proposed two different learning models for CSI-based HAR in indoor settings. One model uses sophisticated preprocessing and feature extraction methods based on wavelet analysis with a support vector machine (SVM) as a classifier, and the other model uses raw data directly with a long short-term memory (LSTM) network. In that work, the LSTM-based algorithms performed similarly to SVM-based algorithms despite requiring less preprocessing.

The work of Dempster et al. [6] addresses the trade-off between the computational load and the accuracy of data-driven time series models. Their work transforms and classifies time series data using a random convolutional kernel method called ROCKET. They found that using a large number of random kernels was effective for capturing discriminative patterns in time series data, achieving state-of-the-art classification results with a much reduced computational load. Their work does not focus on the domain of WiFi signals, but it is of interest as a mechanism to address the complexity of time-series input.

Based on the previous achievements and limitations, we propose two deep learning models to automatically extract features from CSI measurements collected from multiple sub-carriers simultaneously in three different environments. The first model is built mainly on an attention-based bidirectional LSTM (BiLSTM). In the second model, we extend the first model with a CNN layer before the BiLSTM layer to improve the recognition accuracy. The proposed models overcome some of the limitations of the related work mentioned above, such as the high cost of encoding time series sequences into image sequences and the difficulty of evaluating the proposed model in an environment with high interference.

3 Design and Methodology

In this section, we present the methodology of the proposed system for HAR, including the data collection phase for model training and testing, the methods adopted for data pre-processing, and a description of the architecture of the deep neural network models. An overview of the proposed system is illustrated in Fig. 1. Each phase of the proposed system is explained in detail below.

3.1 Data Collection

A publicly available dataset [2] is used to train our models. As the dataset was collected at the German Jordanian University, we named it “GJWiFi”. The dataset was collected in three separate spatial environments: a laboratory (denoted E1), a hallway (denoted E2), and a hybrid environment (denoted E3) that spans the laboratory and the hallway, which are separated by a barrier 8 cm thick. The settings of the three environments are summarized in Table 1. Figure 2 shows a sketch of the three environments, where Tx stands for the transmitter and Rx stands for the receiver [2]. E1 and E2 are LOS configurations, while E3 is an NLOS configuration. For each environment, 10 subjects voluntarily participated in the data collection process. Each subject performed each activity a total of 20 times. The dataset contains 12 activities with different numbers of samples (i.e. the total number of CSI values for each activity), as illustrated in Table 2. Each environment contains 3000 data files. Each file contains the measurements for 1 trial of 1 activity performed by 1 subject. The number of measurements differs between files. A direct URL to the data, https://data.mendeley.com/datasets/v38wjmz6f6/1, is provided by the original authors.

3.2 Data Preprocessing

The data preprocessing phase consists of 5 stages:

-

Data Restructuring: The WiFi signals are stored in .csv files. We extract the CSI values only. The extracted CSI values are in complex form. So, we convert these complex CSI values to real values. Thus, amplitude measurements can be easily used in the training process.

-

Data Balancing: The number of instances for each activity is unequal as shown in Table 2 because the timing of performing each activity is different [2]. Classification based on an unbalanced dataset performs poorly [13]. To balance our dataset, we used under-sampling [21], balancing class distribution by removing the majority of class samples randomly. This is repeated until the instances of the majority and minority classes are balanced to 200258 samples for each activity.

-

Outliers Removal: Outlier values may result from noise in the environment, incorrect device reading, and an unexpected change in the environment. The Hampel filter [16] is used to eliminate these outliers by removing any reading that is greater than three times the standard deviation by replacing that value with the mean of the values within the sliding window. Since the transmitter is sending packets with a sampling rate of 320 packets/second. we apply the Hampel filter with a window size of 32 data points which is equivalent to 0.1 s.

-

Data Normalization: Because time series data can have a wide range of values, it should be scaled to a similar range of values to speed up the learning process [17]. For this, we used the robust-scalar technique. It scales data by subtracting the median and scales the data according to the Interquartile Range (IQR). The IQR is the range between the 1st quartile and the 3rd quartile as shown in Eq. (1), where X is the value of the feature, \(Q_{1}(X)\) is the 1st quartile, and \(Q_{3}(X)\) is the third quartile [19].

$$\begin{aligned} X_{scaled}=\frac{X-median(X)}{Q_{3}(X)-Q_{1}(X)} \end{aligned}$$(1) -

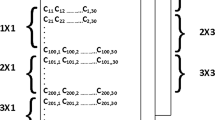

Data Windowing: Classification algorithms cannot be applied directly to raw time-series data. So, the windowing approach [4] was applied to transform the raw time-series data from the shape of the 2-D vector (samples, features) into a 3-D vector (timestamp, samples, features). At each time instance, 1 \(\times \) 3 \(\times \) 30 CSI streams are recorded during the measurements. A sliding window of 1.0 s is applied for all the instances to construct the CSI vector used in the training process. Then, new features were generated by aggregating the raw samples within each window. The activity with the highest number of occurrences (i.e. the most frequent) in that window was used to assign a class label to the transformed features. Moreover, we consider overlapping windows with 10% overlap instead of taking discrete windows. This ensures that each subsequent row in the transformed vector contains information from the previous window.
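The stages above can be illustrated with a minimal, standard-library-only sketch. It is not the authors' implementation: the function names are hypothetical, the Hampel filter follows the common median/MAD formulation (whereas the text describes the threshold in terms of the standard deviation and the mean), and the quartile interpolation method is an assumption. The balancing and feature-aggregation steps are omitted for brevity.

```python
import statistics
from collections import Counter


def amplitude(csi_row):
    """Convert one packet of complex CSI values to real-valued amplitudes."""
    return [abs(c) for c in csi_row]


def hampel(series, window=32, n_sigmas=3.0):
    """Replace outliers with the local median (median/MAD Hampel variant).

    The window is centred on each point, so it spans roughly `window` samples
    (32 samples is 0.1 s at 320 packets/second).
    """
    k = 1.4826  # scales the MAD to a standard-deviation estimate (Gaussian data)
    half = window // 2
    cleaned = list(series)
    for i, value in enumerate(series):
        local = series[max(0, i - half):min(len(series), i + half + 1)]
        med = statistics.median(local)
        mad = k * statistics.median([abs(v - med) for v in local])
        if abs(value - med) > n_sigmas * mad:
            cleaned[i] = med
    return cleaned


def robust_scale(values):
    """Apply Eq. (1): subtract the median and divide by the IQR."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    med = statistics.median(values)
    iqr = q3 - q1
    if iqr == 0:
        return [0.0 for _ in values]
    return [(v - med) / iqr for v in values]


def sliding_windows(samples, labels, window=320, overlap=0.10):
    """Cut overlapping windows and label each one with its most frequent activity."""
    step = max(1, int(round(window * (1.0 - overlap))))
    xs, ys = [], []
    for start in range(0, len(samples) - window + 1, step):
        xs.append(samples[start:start + window])
        ys.append(Counter(labels[start:start + window]).most_common(1)[0][0])
    return xs, ys
```

With the default arguments, a 1.0 s window at 320 packets/second contains 320 samples, and a 10% overlap corresponds to a step of 288 samples between consecutive windows.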

3.3 Deep Learning Models

After preprocessing, the collected activity data may still be very complex. The literature shows that it is difficult to analyze CSI data effectively using traditional methods such as SVM, decision trees, and fuzzy rule-based classifiers. The “GJWiFi” dataset is composed of time series measurements of human activities. To extract hidden patterns and dependencies from this complex data flow, we employ deep learning techniques, which are effective in generating discriminative representations from complex data. We implemented two deep learning models for activity classification. The first model is built mainly on an Attention-based Bidirectional LSTM (BiLSTM). The BiLSTM is a special LSTM that can extract both forward and backward long-term dependencies in the time series sequences to make predictions more accurate. In the second model, a CNN is added to the attention-based BiLSTM. The CNN detects the most important local features without any human supervision, and its weight sharing reduces the cost of computation.

Attention-Based BiLSTM (ABiLSTM): The conventional LSTM can process sequential CSI measurements in the forward direction only. Thus, only past CSI information is considered for the current hidden state. However, future information is extremely important when learning representative features for similar activities. For example, lying down and sitting both require the human body to be lowered first, but the final positions for the two activities are different. As a result, we employ a BiLSTM network to learn effective features from raw CSI measurements. The BiLSTM is a bidirectional variant of LSTM that connects two hidden layers of opposite directions to the same output, so the network can retain information from both the forward and backward directions. This provides more context for recognition than a standard LSTM. An attention layer is added to the BiLSTM model to give a different focus to the information extracted from the forward hidden layer and the backward hidden layer of the BiLSTM. The features learned by the BiLSTM are used as inputs to the attention layer, which generates an attention matrix that indicates the importance of features and time steps. The attention layer is implemented with normalised weights for each feature as input and time step as outputs. Then, the learned features are combined with the attention matrix via element-wise multiplication, producing the modified feature matrix. In this model, the input layer takes the preprocessed CSI sequence as the input vector. The BiLSTM layer is configured with 64 hidden units using tanh as the activation function, and the return-sequences option is set to true so that the complete feature matrix is used as input to the next layer. The attention layer has 64 hidden units and is implemented as a softmax regression layer. The attention layer is followed by a dropout layer to decrease the probability of overfitting. The output layer is a dense layer that consists of 12 neurons and uses softmax as its activation function to calculate the likelihood of the 12 different activities.
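One plausible reading of the attention step described above can be written compactly as follows. The notation is ours and is an assumption rather than the original implementation: \(H\) is the feature matrix returned by the BiLSTM (one row per time step), \(W\) and \(b\) are the learnable weights and bias of the softmax regression layer, \(A\) is the attention matrix, and \(\tilde{H}\) is the modified feature matrix. The axis over which the softmax normalises is also an assumption.

$$\begin{aligned} A=\mathrm{softmax}(HW+b), \qquad \tilde{H}=A\odot H \end{aligned}$$

Here \(\odot \) denotes element-wise multiplication, so \(A\) must have the same shape as \(H\). Because the softmax outputs are non-negative and sum to one along the normalised axis, each entry of \(A\) acts as a relative importance weight for the corresponding entry of \(H\).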

CNN and Attention-Based BiLSTM (CNN-ABiLSTM): This second model is an extended version of the ABiLSTM model. A CNN layer and a max-pooling layer are added to the architecture of the ABiLSTM model to improve the accuracy. The CNN layer is added directly after the input layer. It has 32 filters with a kernel size of 3 and uses the rectified linear unit (ReLU) as its activation function. The CNN layer is followed by a max-pooling layer with a pool size of 2 to reduce the dimensions of the feature maps. The pooling layer summarises the features in a region of the feature map produced by the convolution layer, which makes the model more robust to small variations in the features. It also reduces the number of parameters to learn as well as the amount of computation done in the network.
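To make the effect of these two layers on the sequence length concrete, the following sketch computes the output sizes of a 1-D convolution and a max-pooling layer. Several values are assumptions that the text does not state: unit stride and no padding for the convolution, a pooling stride equal to the pool size, an input window of 320 time steps (1.0 s at 320 packets/second), and 90 input features (3 \(\times \) 30 CSI streams). The function names are hypothetical.

```python
def conv1d_output_length(n_steps, kernel_size=3, stride=1):
    """Length of a 1-D convolution output without padding."""
    return (n_steps - kernel_size) // stride + 1


def maxpool1d_output_length(n_steps, pool_size=2):
    """Length after max pooling, assuming stride == pool_size and no padding."""
    return n_steps // pool_size


def conv1d_param_count(in_features, filters=32, kernel_size=3):
    """Trainable parameters: one kernel plus one bias per filter."""
    return filters * (kernel_size * in_features + 1)


steps_after_conv = conv1d_output_length(320)                  # 318 time steps
steps_after_pool = maxpool1d_output_length(steps_after_conv)  # 159 time steps
conv_params = conv1d_param_count(90)                          # 8672 parameters
```

Under these assumptions, the BiLSTM in the CNN-ABiLSTM model processes roughly half as many time steps as in the ABiLSTM model, which is where the reduction in computation comes from.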

Training of Learning Models: To develop our proposed models, we used the Pro version of Google Colab (a web IDE) to run our Python code. We train the proposed models on the three environments E1, E2 and E3 separately. Because of limited RAM resources in Google Colab, we took 12800 samples for each activity per subject (i.e. the total number of measurements for each activity class is 128000), giving 1536000 samples in total for each environment. We apply stratified splitting to split the samples of each environment into 80% for the training dataset and 20% for the testing dataset. We then took 20% of the training dataset for validation. In each environment, samples from each subject are represented in the training, validation and testing data. We then evaluate the performance of the proposed models for the three environments E1, E2 and E3 independently in terms of precision, recall and f1-score. The models were trained using the Adam optimizer with a batch size of 64 and a learning rate of \( 10^{-3} \) to minimise the loss. We also use early stopping during training to prevent overfitting: the validation loss is monitored, and training is stopped when the validation loss worsens from one epoch to the next, using a patience value equal to 1. After evaluating the proposed models on E1, E2 and E3 separately, we want to discover whether the proposed models are applicable to other environments with different settings. So, we apply the transfer learning approach, using the proposed models trained in one environment and tested in the other two environments.
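The data split and the stopping rule described above can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the authors' training code: the function names and the fixed random seed are hypothetical, and the early-stopping helper only reproduces the decision rule (stop at the first epoch whose validation loss fails to improve on the best value seen so far, for a patience of 1).

```python
import random
from collections import defaultdict


def stratified_split(labels, holdout_fraction, seed=0):
    """Return (kept_idx, holdout_idx) with equal class proportions in both."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    kept, holdout = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        cut = int(round(len(indices) * holdout_fraction))
        holdout.extend(indices[:cut])
        kept.extend(indices[cut:])
    return sorted(kept), sorted(holdout)


def early_stopping_epoch(val_losses, patience=1):
    """Return the 1-based epoch at which training would stop."""
    best = float("inf")
    waited = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best, waited = loss, 0
        else:
            waited += 1
            if waited >= patience:
                return epoch
    return len(val_losses)  # the rule never triggered


# Toy data: 12 activity classes with 100 samples each.
labels = [activity for activity in range(12) for _ in range(100)]
train_val_idx, test_idx = stratified_split(labels, 0.20)
train_val_labels = [labels[i] for i in train_val_idx]
train_pos, val_pos = stratified_split(train_val_labels, 0.20)
```

Applying the same proportions to the 1536000 samples of one environment gives 307200 test samples, 245760 validation samples, and 983040 training samples, assuming the split is applied to individual samples rather than to windows.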

4 Results and Discussion

We assess the performance of the proposed models from three perspectives: first, we evaluate the overall performance of the proposed models in terms of precision, recall, f1-score, and accuracy for each activity; second, we evaluate the proposed models when trained in one environment and tested on the other two environments, before and after applying transfer learning [3]; third, we compare the proposed deep learning models with state-of-the-art hand-crafted models.

4.1 Overall Performance

Because each environment has a distinctive multi-path distribution, the spatial environment in which the experiments were carried out is an important factor for a WiFi-based human activity recognition system. Therefore, we evaluate the activity recognition performance of the ABiLSTM and CNN-ABiLSTM models for the three environments E1, E2 and E3 independently in terms of precision, recall, and f1-score, as shown in Table 3. Table 3 shows that, in all three environments, the CNN-ABiLSTM model performs better than the ABiLSTM model, indicating the positive effect of adding the CNN. A decrease in performance is observed in the E2 and E3 environments when compared to the experiments performed in the E1 environment. This may be due to two factors: (i) the distance between the transmitter and the receiver in the E2 and E3 environments is longer than in the E1 environment, and (ii) the experiments conducted in E2 and E3 involve the hallway, a public area of the university where the signals may be affected by the surroundings and suffer fading effects.
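For reference, the per-activity metrics used in this evaluation can be computed from true and predicted labels as in the minimal sketch below. The function names and the activity labels in the example are illustrative assumptions and are not values from Table 3.

```python
def per_class_metrics(y_true, y_pred):
    """Return {label: (precision, recall, f1)} for every label that occurs."""
    report = {}
    for label in sorted(set(y_true) | set(y_pred)):
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        report[label] = (precision, recall, f1)
    return report


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)


# Hypothetical labels for illustration only.
y_true = ["sit", "sit", "walk", "walk", "fall"]
y_pred = ["sit", "walk", "walk", "walk", "fall"]
report = per_class_metrics(y_true, y_pred)
overall = accuracy(y_true, y_pred)
```

Reporting precision and recall per activity, rather than accuracy alone, makes it visible when one activity is systematically confused with another.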

4.2 Impact of Environment

To verify the effectiveness of the proposed models, we evaluate the performance of each model trained in one environment and tested on the other two environments, before and after applying transfer learning. Before applying transfer learning, the model trained in one environment is tested directly on the other two environments. After applying transfer learning, all the layers of the pre-trained model are frozen, and a fully connected layer and an output layer are added. As shown in Table 4, testing the pre-trained models without transfer learning gives very low accuracy. This is to be expected, as different spatial environments with different configurations affect the WiFi signals in various ways. Moreover, different human subjects with individual characteristics such as height, weight, age, gender, and body shape will affect the signals in different ways, even if they are doing the same activity. When the transfer learning approach is applied, the recognition accuracy increases again after several epochs. Thus, models pre-trained in one environment can adapt to new environments after a few epochs of training. Therefore, the use of transfer learning to minimise retraining of CSI-based HAR models is promising.

4.3 Comparison with State-of-Art Approaches

We compare the proposed models with state-of-the-art approaches using the “GJWiFi” dataset to illustrate that the proposed deep learning-based approaches outperform hand-crafted approaches. Specifically, we compare the proposed models with the support vector machine (SVM) model applied to the same dataset. The authors in [1] apply the SVM model to 6 activities instead of 12, as they combine some activities and consider them as one class. In particular, they combine stationary activities such as lying down, sitting and standing into one class. Moreover, they conducted experiments in the laboratory and hallway environments only. Table 5 summarizes a comparison between the proposed ABiLSTM and CNN-ABiLSTM models and the state-of-the-art SVM model. The results show that the recognition accuracy of the deep learning models is higher than that of the SVM model for the environments E1 and E2, except for ABiLSTM in E1, which has the same accuracy as SVM. However, the ABiLSTM model for E1 achieves 94% accuracy for 12 activities rather than 6, so it compares favourably with the SVM model, which achieves the same accuracy for 6 activities only.

5 Conclusion and Future Work

This paper proposes a complete WiFi-based human activity recognition workflow from data collection to model evaluation. We developed two deep learning models: ABiLSTM and CNN-ABiLSTM. We evaluated the proposed models on different environment configurations separately using different performance metrics. We also evaluated the proposed models when trained in one environment and tested on the other two environments, before and after applying transfer learning. These experiments show a significant increase in recall after applying transfer learning. Also, the recognition accuracy of the proposed deep learning models is higher than that of the state-of-the-art hand-crafted models.

Potential areas for improvement in future work include (i) extending the proposed models to recognize complex human activities, (ii) using transfer learning and fine-tuning approaches to improve the accuracy of the proposed models when tested in different environments, (iii) combining the three environments in one dataset, building a discriminator for the environment, and proposing a deep learning model that recognizes the activity, the human subject, and the environment in which the activity is performed, and (iv) examining approaches for the treatment of time-series data input with a view to reducing computational load and/or increasing model accuracy.

References

1. Alsaify, B.A., Almazari, M., Alazrai, R., Alouneh, S., Daoud, M.I.: A CSI-based multi-environment human activity recognition framework. Appl. Sci. 12(2), 930 (2022)

2. Alsaify, B.A., Almazari, M.M., Alazrai, R., Daoud, M.I.: A dataset for Wi-Fi-based human activity recognition in line-of-sight and non-line-of-sight indoor environments. Data Brief 33, 106534 (2020)

3. Alshalali, T., Josyula, D.: Fine-tuning of pre-trained deep learning models with extreme learning machine. In: Proceedings - 2018 International Conference on Computational Science and Computational Intelligence, CSCI 2018, pp. 469–473 (2018)

4. Banos, O., Galvez, J.M., Damas, M., Pomares, H., Rojas, I.: Window size impact in human activity recognition. Sensors 14(4), 6474 (2014)

5. Damodaran, N., Haruni, E., Kokhkharova, M., Schäfer, J.: Device free human activity and fall recognition using WiFi channel state information (CSI). CCF Trans. Pervasive Comput. Interact. 2(1), 1–17 (2020)

6. Dempster, A., Petitjean, F., Webb, G.I.: ROCKET: exceptionally fast and accurate time series classification using random convolutional kernels. Data Min. Knowl. Discov. 34(5), 1454–1495 (2020)

7. Dib, W., Ghanem, K., Ababou, A., Nedil, M., Eskofier, B.: Receive signal strength-based human activity recognition. In: 2021 IEEE International Symposium on Antennas and Propagation and North American Radio Science Meeting, APS/URSI 2021 - Proceedings, pp. 365–366 (2021)

8. Federico, C., et al.: A public domain dataset for human activity recognition in free-living conditions. In: Proceedings - IEEE SmartWorld, Ubiquitous Intelligence and Computing, Advanced and Trusted Computing, Scalable Computing and Communications, Internet of People and Smart City Innovation, pp. 166–171 (2019)

9. Halperin, D., Hu, W., Sheth, A., Wetherall, D.: Tool release. ACM SIGCOMM Comput. Commun. Rev. 41(1), 53 (2011)

10. He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition 2016-December, pp. 770–778 (2015)

11. Khalili, A., Soliman, A.H., Asaduzzaman, M., Griffiths, A.: Wi-Fi sensing: applications and challenges. J. Eng. 2020(3), 87–97 (2020)

12. Liu, J., Teng, G., Hong, F.: Human activity sensing with wireless signals: a survey. Sensors 20(4), 1210 (2020)

13. Luque, A., Carrasco, A., Martín, A., de las Heras, A.: The impact of class imbalance in classification performance metrics based on the binary confusion matrix. Pattern Recognit. 91, 216–231 (2019)

14. Ma, Y., Zhou, G., Wang, S., Zhao, H., Jung, W.: SignFi. Proc. ACM Interact. Mob. Wearable Ubiquit. Technol. 2(1), 1–21 (2018)

15. Memmesheimer, R., Theisen, N., Paulus, D.: Gimme signals: discriminative signal encoding for multimodal activity recognition. In: IEEE International Conference on Intelligent Robots and Systems, pp. 10394–10401 (2020)

16. Pearson, R.K., Neuvo, Y., Astola, J., Gabbouj, M.: Generalized Hampel filters. EURASIP J. Adv. Signal Process. 2016(1), 1–18 (2016)

17. Raju, V.N., Lakshmi, K.P., Jain, V.M., Kalidindi, A., Padma, V.: Study the influence of normalization/transformation process on the accuracy of supervised classification. In: Proceedings of the 3rd International Conference on Smart Systems and Inventive Technology, ICSSIT 2020, pp. 729–735 (2020)

18. Sigg, S., Shi, S., Buesching, F., Ji, Y., Wolf, L.: Leveraging RF-channel fluctuation for activity recognition: active and passive systems, continuous and RSSI-based signal features. In: ACM International Conference Proceeding Series, pp. 43–52 (2013)

19. Singh, D., Singh, B.: Investigating the impact of data normalization on classification performance. Appl. Soft Comput. 97, 105524 (2020)

20. Tan, M., Le, Q.V.: EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks (2019)

21. Tumrate, S., et al.: Classification of imbalanced data: review of methods and applications. IOP Conf. Ser.: Mater. Sci. Eng. 1099(1), 012077 (2021)

22. Wang, F., Feng, J., Zhao, Y., Zhang, X., Zhang, S., Han, J.: Joint activity recognition and indoor localization with WiFi fingerprints. IEEE Access 7, 80058–80068 (2019)

23. Yadav, S.K., et al.: CSITime: privacy-preserving human activity recognition using WiFi channel state information. Neural Netw.: Official J. Int. Neural Netw. Soc. 146, 11–21 (2022)

24. Yousefi, S., Narui, H., Dayal, S., Ermon, S., Valaee, S.: A survey on behavior recognition using WiFi channel state information. IEEE Commun. Mag. 55(10), 98–104 (2017)

Acknowledgement

This research was conducted with the financial support of Science Foundation Ireland under Grant Agreement No. 13/RC/2106_P2 at the ADAPT SFI Research Centre at Technological University Dublin. ADAPT, the SFI Research Centre for AI-Driven Digital Content Technology, is funded by Science Foundation Ireland through the SFI Research Centres Programme.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this paper

Cite this paper

Elkelany, A., Ross, R., Mckeever, S. (2023). WiFi-Based Human Activity Recognition Using Attention-Based BiLSTM. In: Longo, L., O’Reilly, R. (eds) Artificial Intelligence and Cognitive Science. AICS 2022. Communications in Computer and Information Science, vol 1662. Springer, Cham. https://doi.org/10.1007/978-3-031-26438-2_10

DOI: https://doi.org/10.1007/978-3-031-26438-2_10

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-26437-5

Online ISBN: 978-3-031-26438-2

eBook Packages: Computer Science, Computer Science (R0)