Abstract

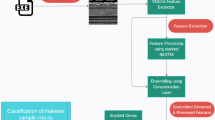

In previous work, “gist descriptor” features extracted from images have been used in malware classification problems and have shown promising results. In this research, we determine whether gist descriptors are robust with respect to malware obfuscation techniques, as compared to Convolutional Neural Networks (CNN) trained directly on malware images. Using the Python Image Library (PIL), we create images from malware executables and from malware that we obfuscate. We conduct experiments to compare classifying these images with a CNN as opposed to extracting the gist descriptor features from these images to use in classification. For the gist descriptors, we consider a variety of classification algorithms including k-nearest neighbors, random forest, support vector machine, and multi-layer perceptron. We find that gist descriptors are more robust than CNNs, with respect to the obfuscation techniques that we consider.


1 Introduction

Malware is software created with the intent to be malicious or have a malicious effect [1]. Malware includes threats like viruses, worms, Trojan horses, and spyware. It can even be used in connection with other kinds of security threats like spam, bugs, and denial-of-service attacks. As a result of these factors, many people fall into the trap of having their devices infected with malware. In 2019, Kaspersky Lab released a threat report that stated that the number of users who encountered malware had tripled to 1.7 million [12].

Due to the recent COVID-19 pandemic, people are spending more time online. An empirical study of the relation between cybercrime and COVID-19 found that the number of malware infections was positively related both to the closure of non-essential businesses and to the number of positive COVID-19 cases [4]. During this period, people have had to stay at home and interact with others online, which increases their online presence. The more people there are online, the more people there are to fall victim to malware attacks. In addition, malware is constantly evolving to bypass the security measures that protect devices. The attackers that create the malware can even use obfuscation techniques to disguise the malware and prevent it from being detected.

Image-based malware analysis is the study of malware that has been converted to images for the purpose of identification. Many machine learning algorithms are useful for classifying images and have helped make this approach successful. For image classification, Convolutional Neural Networks (CNN) are often used. CNNs are fast, extract information easily, and give high accuracies. However, under certain obfuscation techniques, CNNs do not achieve the same high accuracies [14].

This makes it important to also study the results of malware obfuscation with respect to images. In the field of image-based malware analysis, one approach is the use of so-called “gist” descriptors, which are designed to extract general features from images [13]. Using gist descriptors might reduce the effectiveness of some types of obfuscation that are commonly applied to malware. In previous research the robustness of gist descriptors in the malware domain was briefly considered [13].

In this paper, the main objective is to extensively analyze the robustness of gist descriptors as features for malware classification. With the gist descriptors as features, we use four different classifiers to compare to the accuracy that a deep learning algorithm, CNN, can have on an image of malware. This paper is structured in sections as follows. In Sect. 2 we review representative examples of relevant related work. Section 3 introduces a wide range of relevant background topics. Section 4 consists of the experiments done for this research and the results. Section 5 summarizes our experimental findings and discusses future work.

2 Related Work

There are several examples of research in image-based malware analysis. Most of this research is based on converting binary files to grayscale images but color images have recently been studied. There are many different ways to create such color images. In some of the related work, obfuscation was considered.

2.1 Color Images for Malware Analysis

A crucial part of image-based malware analysis is in the images that the analysis is performed on. This means that the way these images are created from the malware is important. Many different ways to create these images have been considered.

A common method for creating malware images focuses on the bytes of the executable. D. Vasan et al. [12] considered the malware file as a binary object, converted the binary to an 8-bit vector, and then organized it into a 2D array, which can be represented as a grayscale image. Applying a color-map to the 2D array results in a color image. In another approach, J. Fu et al. [3] manipulated the bytes in a more complicated manner. Within the malware file, J. Fu et al. focused only on the bits in the PE sections because they contain the crucial information of the executable related to its structure. Since RGB images are made of three channels, the PE format section is split again into three sections, one for each of the channels. After the split, the entropy values, byte values, and relative size values are computed from the bytes of the binary to create the RGB channels. These three values are computed because they can be important features that reflect aspects of malware, and computing them yields malware images that reflect those features. Both methods achieved a high accuracy of more than 90%. While the method from J. Fu et al. obtained higher accuracies, it is computationally expensive when compared to the approach by D. Vasan et al.

Another approach to creating images from malware is based on the instructions of the executable. To get the instructions of a malware executable, the file is first disassembled to obtain assembly code, and mnemonic opcodes are extracted. J. Chen [2] took these instructions and filtered for the ones that are more closely aligned with actions that malware could use to do harm. These instructions are grouped in threes, and each instruction is taken in its machine code form to create the bytes for a pixel of an RGB image. In a slightly different approach, K. Han et al. [5] considered the instructions in a more complicated way. They also filtered for specific instructions, but took the opcode sequences and put them through a hash to obtain an RGB color, along with the coordinates at which that color is placed in an image matrix. The images created from this approach look vastly different from those of the other approaches because, instead of every pixel representing a part of the malware, this approach uses an image matrix and creates specific RGB pixels at specific locations. This means that not all the coordinates of the image are utilized. Creating images based on instructions is reasonable and allows for a more guided approach to filtering unnecessary information. However, disassembling an executable and filtering for instructions is a costly operation.

2.2 Obfuscation

Malware writers use obfuscation as a means to evade detection. There are many different approaches to malware obfuscation. To deal with malware obfuscation, various techniques have been developed and considered in the malware analysis literature.

S. Yajamanam et al. [13] used gist descriptors. From the malware images, gist descriptors are extracted to be used in classifying the malware. Gist descriptors are designed to get the “gist” of the crucial components of an image. Extracting the gist of the malware image means learning the general points of the malware, which might increase the likelihood of ignoring obfuscations. In another approach, H. Yakura et al. [14] proposed an image creation method that extracted sequences from the whole binary data. This method makes the model overcome obfuscation techniques that would be implemented in the data section. This idea is similar to the previous method of dealing with malware obfuscation, namely, they want to look at the “big picture” so that the parts that have been obfuscated do not confuse the model from classifying the malware correctly.

3 Background

In this Section, we first introduce the various classifiers that are used in our experiments. Then, we summarize the computing environment and we provide details on the dataset. We also discuss the various methods that we used to generate images from malware samples, and we introduce the obfuscation techniques that we employ in our robustness experiments. We conclude this Section with a discussion of gist descriptors, which are an integral component of the experiments and results in Sect. 4.

3.1 Classifiers

In this research, several different classifiers were used to classify the malware. To test the robustness of gist descriptors, they were applied as features when classifying with classification algorithms k-Nearest Neighbor (k-NN), Random Forest, Support Vector Machine (SVM), and Multi-Layer Perceptron (MLP). As a comparison, a Convolutional Neural Network (CNN) was used to classify based on the images themselves.

k-Nearest Neighbor. The k-Nearest Neighbor (k-NN) approach is a supervised machine learning algorithm. It requires data from a training dataset that includes the labels. The classification of new points is based on that data. Such classification is based on a voting system. It takes a new point and classifies it based on the k-number of neighbors that are closest to that point. As shown in Fig. 1, the point x is the new point that needs to be classified. Depending on the k value chosen, k-NN calculates the k number of nearest points to x to determine if it belongs to the b-class or the r-class. For example, if k was 3, the x point looks at the three closest points to it. In this case, it is closest to two r-class data points and one b-class data point, thus, the majority rules the x point to be classified as r-class.

Fig. 1. k-Nearest neighbor [11]
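The voting rule described above can be sketched in a few lines of Python. This is a minimal illustration and not the implementation used in the experiments; the function name `knn_classify`, the Euclidean distance, and the toy coordinates are assumptions chosen to mirror the \(k=3\) example, where two r-class points and one b-class point are closest to x.

```python
from collections import Counter
import math

def knn_classify(train, query, k):
    """Classify `query` by majority vote among its k nearest training points.

    `train` is a list of (point, label) pairs; points are tuples of floats.
    """
    # Sort the training points by Euclidean distance to the query point.
    by_distance = sorted(train, key=lambda pair: math.dist(pair[0], query))
    # Count the labels of the k closest points and return the most common one.
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Toy data (hypothetical): two "r" points and one "b" point lie closest to x.
train = [((1.0, 1.0), "r"), ((1.2, 0.8), "r"), ((0.8, 1.3), "b"),
         ((5.0, 5.0), "b"), ((5.5, 4.5), "b")]
x = (1.0, 1.1)
print(knn_classify(train, x, k=3))  # r
```

Choosing an odd k, as here, avoids ties in a two-class problem.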

Random Forest. Random Forest is a classification algorithm based on decision trees. A decision tree takes a dataset and splits it into branches based on different decisions that it makes about the features. The algorithm creates multiple decision trees, as shown in Fig. 2, each representing a different decision tree that can be built from the dataset. The data point to be classified is passed through each of these decision trees. Its classification then depends on the majority prediction made by the decision trees.

Fig. 2. Random forest [7]

Support Vector Machine. Support Vector Machine (SVM) is a supervised machine learning algorithm. Based on a training dataset, the algorithm decides on a hyperplane that best separates the classes from each other. As shown in Fig. 3, the red-class data points and blue-class data points are separated by the yellow hyperplane. The classification of new points depends on which side of the hyperplane they lie. For this example, if it is on the upper right side of the hyperplane, it will classify as red-class.

Fig. 3. Support vector machine [11]
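Once a separating hyperplane \(w \cdot x + b = 0\) has been found, classifying a new point only requires checking the sign of \(w \cdot x + b\). The sketch below shows this decision step for the linear case; it does not show how the hyperplane is learned, and the weights, bias, and class names are hypothetical values chosen for illustration.

```python
def svm_predict(w, b, x, positive="red", negative="blue"):
    """Return the class of x according to its side of the hyperplane w.x + b = 0."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return positive if score >= 0 else negative

# Hypothetical hyperplane x1 + x2 - 4 = 0; points to its upper right are "red".
w, b = (1.0, 1.0), -4.0
print(svm_predict(w, b, (3.0, 3.0)))  # red
print(svm_predict(w, b, (1.0, 1.0)))  # blue
```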

Multi-layer Perceptron. Multi-Layer Perceptron (MLP) is a deep learning algorithm. Deep learning algorithms are made of neural networks, which are a set of algorithms that are created from layers of neurons. As shown in Fig. 4, it is comprised of an input layer, several hidden layers, and an output layer. Between each layer, the edges represent weights that decide the output node that a data point would lead to. To use MLP as a classifier, it is first trained on a training dataset. With this training dataset, the model is trained by setting and adjusting its weights. After the model is trained with the weights adjusted to the specific classification problem, it can then classify inputs by predicting what the classification would be from the output.

Fig. 4. Multi-layer perceptron [11]
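To make the role of the weights concrete, the following sketch runs a forward pass through a tiny MLP with fixed weights. It is an illustration under stated assumptions: the weights are set by hand rather than learned, ReLU is used in the hidden layer, and the predicted class is taken to be the output node with the largest score.

```python
def relu(values):
    """Rectified linear unit applied to each element."""
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """One fully connected layer: weights[j][i] links input i to output j."""
    return [sum(w * a for w, a in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def mlp_forward(x, layers):
    """Apply ReLU hidden layers, then a linear output layer; return the class index."""
    *hidden, (w_out, b_out) = layers
    for w, b in hidden:
        x = relu(dense(x, w, b))
    scores = dense(x, w_out, b_out)
    return max(range(len(scores)), key=scores.__getitem__)

# Hypothetical hand-set weights: 2 inputs -> 2 hidden units -> 2 output scores.
layers = [
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),  # hidden layer
    ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]),    # output layer
]
print(mlp_forward([2.0, 1.0], layers))  # 0
print(mlp_forward([1.0, 3.0], layers))  # 1
```

Training replaces the hand-set values with weights adjusted to reduce the classification error on the training dataset.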

Convolutional Neural Network. Convolutional Neural Network (CNN) is a deep learning algorithm. Like the MLP, it is also comprised of a neural network. However, the CNN includes a convolutional layer and a pooling layer. The convolutional layer of a CNN preprocesses the image and extracts feature information from the images. It creates a convolved feature. The pooling layer then takes this convolved feature and reduces it. The structure of this neural network is different from the MLP. Like the MLP, though, it first trains on a training dataset to set its weights for the specific problem and performs classifications based on the trained model.
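The two operations that distinguish a CNN can be illustrated on a small array. The sketch below computes a convolved feature with a single filter and then reduces it with max pooling. The filter values, the absence of padding, and the \(2\times 2\) pooling window are assumptions for illustration; in a trained CNN the filter values are learned.

```python
def convolve2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

def max_pool(feature, size=2):
    """Non-overlapping max pooling; rows or columns that do not fill a window are dropped."""
    rows = len(feature) // size
    cols = len(feature[0]) // size
    return [[max(feature[r * size + i][c * size + j]
                 for i in range(size) for j in range(size))
             for c in range(cols)]
            for r in range(rows)]

image = [[0, 0, 1, 1, 1],
         [0, 0, 1, 1, 1],
         [0, 0, 1, 1, 1],
         [0, 0, 1, 1, 1],
         [0, 0, 1, 1, 1]]
kernel = [[-1, 1],
          [-1, 1]]  # hypothetical filter that responds to vertical edges

convolved = convolve2d(image, kernel)  # 4x4 convolved feature
pooled = max_pool(convolved)           # 2x2 reduced feature
print(pooled)  # [[2, 0], [2, 0]]
```

The filter responds only where the image changes from 0 to 1, and pooling keeps that response while shrinking the feature from \(4\times 4\) to \(2\times 2\).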

3.2 Computing Environment

The environment used to conduct experiments for this research is shown in Table 1. A Jupyter notebook in Python was used to perform these experiments, while a Jupyter notebook in MATLAB was used to extract the gist descriptor features from the images.

3.3 Dataset

This dataset was from a project called the Malicia Project [8]. The project is a collection of malware binaries that were collected from 500 drive-by download servers over 11 months. Table 2 shows information about the dataset files. Table 3 shows the malware features for each file. Table 4 shows the top five families and the number of files in each.

In the dataset, the malware binaries had to be extracted from the folders. The labels were also extracted. After extracting the labels, the data had to be cleaned and checked for missing labels. Through this process, we were able to find that there were 1,759 files that were missing a family label. There were also 305 files missing the file type label. These files were excluded from the experiments.

We also only focused on the executable files. This means that we exclusively focused on the files with the file type label “EXE.” Besides the executable files, there were also 276 dynamic-link library files. These were not used. These experiments focused only on the top five largest families: Winwebsec, Zbot, Zeroaccess, Securityshield, and Cridex. In these five families, there was only one dynamic-link library file in Zbot, making the number of Zbot family files that we used equal to 2,167.
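The selection steps described above can be sketched as a simple filter over per-file records. The record layout (`name`, `family`, and `filetype` keys) is hypothetical and does not reflect the actual format of the dataset labels. We also assume here that family sizes are counted after the non-executable files are removed; the text does not state the order in which these steps were applied.

```python
from collections import Counter

def select_samples(records, top_n=5):
    """Keep labeled EXE samples that belong to the top_n largest families."""
    # Drop samples with a missing family label or a missing file type label.
    labeled = [r for r in records if r.get("family") and r.get("filetype")]
    # Keep executables only, which excludes dynamic-link library files.
    exes = [r for r in labeled if r["filetype"] == "EXE"]
    # Find the largest families among the remaining samples.
    counts = Counter(r["family"] for r in exes)
    top = {family for family, _ in counts.most_common(top_n)}
    return [r for r in exes if r["family"] in top]

# Hypothetical records for illustration only.
records = [
    {"name": "a", "family": "winwebsec", "filetype": "EXE"},
    {"name": "b", "family": "winwebsec", "filetype": "EXE"},
    {"name": "c", "family": "zbot", "filetype": "EXE"},
    {"name": "d", "family": "zbot", "filetype": "DLL"},
    {"name": "e", "family": None, "filetype": "EXE"},
    {"name": "f", "family": "cridex", "filetype": None},
    {"name": "g", "family": "zbot", "filetype": "EXE"},
    {"name": "h", "family": "cridex", "filetype": "EXE"},
]
selected = select_samples(records, top_n=2)
print([r["name"] for r in selected])  # ['a', 'b', 'c', 'g']
```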

3.4 Images from Malware

In our research, the Python Image Library (PIL) was used to create the images from malware executables. The PIL takes bytes from the executables and creates the images from those bytes. The size of the images created for the experiments is \(60\times 60\). This size was chosen because the smallest file that we consider is 3,677 bytes, and hence the PIL would not be able to make larger images from it. We wanted to keep the image size consistent, so the executables were truncated to only the number of bytes necessary for the image to be created. The PIL offers different modes for creating images, and these modes are explored in the experiments [6]. A mode determines the type and depth of a pixel in an image. The modes used in our experiments are listed in Table 5.
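As a rough sketch of the truncation step, consider the 8-bit grayscale case, where each pixel takes one byte and a \(60\times 60\) image therefore needs 3,600 bytes, which is just under the 3,677 bytes of the smallest file. The helper names below are hypothetical, and the use of `Image.frombytes` with mode `"L"` is an assumption about how such an image could be built with Pillow, not a description of the exact code used in the experiments. Other modes use a different number of bytes per pixel.

```python
SIDE = 60  # image width and height used in the experiments

def truncate_for_image(data, side=SIDE):
    """Return the first side*side bytes, one byte per grayscale pixel."""
    needed = side * side
    if len(data) < needed:
        raise ValueError(f"need at least {needed} bytes, got {len(data)}")
    return data[:needed]

def to_grayscale_image(data, side=SIDE):
    """Build an 8-bit grayscale ("L") Pillow image from the truncated bytes."""
    from PIL import Image  # imported lazily; requires the Pillow package
    return Image.frombytes("L", (side, side), truncate_for_image(data, side))

# Stand-in for the contents of an executable (hypothetical data).
sample = bytes(range(256)) * 15  # 3,840 bytes
pixels = truncate_for_image(sample)
print(len(pixels))  # 3600
```

With Pillow installed, `to_grayscale_image(sample).save("sample.png")` would write the resulting image to disk.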

To perform these experiments, the images were created first. The files of the top five largest families were all converted to images. For each malware file, a version in each image mode was created and saved. Examples of a malware executable converted to image form in some of the different color modes are shown in Fig. 5.

3.5 Obfuscating Malware Images

The obfuscation technique we applied in this research is salting. In each family, we salted all of the files with another family in percentages. These are in pairs since the experiments are all binary classification experiments. We applied two different salting techniques in this research.

The amount of obfuscation is based on percentage of the file. Specifically, we considered 10%, 30%, 50%, 60%, 75%, 80%, and 100% for the Winwebsec versus Zbot experiments, and 10%, 50%, 75%, and 100% with the other family pairs.

The first method of salting is implemented in the second set of experiments. The images are salted by taking random bytes of the other family to salt the original family. We refer to this approach as random salting.

For example, if we are trying to salt the Winwebsec family with Zbot, we take each file in the Winwebsec family and convert a specific percentage of the file to a random section of a Zbot file.

For example, the steps of this process using Winwebsec salted by Zbot by 50% are:

1. Take a file in the Winwebsec family

2. Take a random file in the Zbot family

3. Get the size of the Winwebsec file and calculate the number of bytes of that file that would be 50% of the file

4. With that number, extract that many bytes from the Zbot family file at random positions in that file

5. Those bytes replace the bytes of the Winwebsec file at the same positions that they were taken from in the Zbot file (e.g., if the byte at index 12 was taken from the Zbot file, replace the byte at index 12 in the Winwebsec file by that byte)

6. This results in a Winwebsec family file obfuscated by the Zbot family file and the image is saved

7. Repeat steps from the beginning with the next Winwebsec family file until performed for all files in the Winwebsec family.
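The per-file part of the random salting steps above can be sketched as follows. The function name `salt_random` is hypothetical. Restricting the sampled positions to indices that exist in both files is an assumption, since the text does not say how files of different lengths are handled.

```python
import random

def salt_random(original, donor, fraction, rng=None):
    """Replace a fraction of `original` with bytes of `donor` at matching random positions."""
    rng = rng or random.Random()
    # Number of bytes to replace, as a percentage of the original file size.
    n = int(len(original) * fraction)
    # Only indices that are valid in both files can be copied position for position.
    usable = min(len(original), len(donor))
    positions = rng.sample(range(usable), min(n, usable))
    salted = bytearray(original)
    for i in positions:
        salted[i] = donor[i]  # byte at index i of the donor replaces index i
    return bytes(salted)

# Stand-in byte strings (hypothetical) in place of Winwebsec and Zbot files.
winwebsec = bytes(100)        # 100 zero bytes
zbot = bytes([255]) * 100     # 100 bytes of value 255
salted = salt_random(winwebsec, zbot, 0.5, random.Random(0))
print(salted.count(255))  # 50
```

Passing a seeded `random.Random` instance makes the salted output reproducible across runs.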

The second method of salting is implemented in the third set of experiments. The images are salted by taking a sequential chunk of the other family and replacing the end of the original family file with it. We refer to this as contiguous salting.

For example, the steps of this process using Winwebsec salted by Zbot by 50% are:

1. Take a file in the Winwebsec family

2. Take a random file in the Zbot family

3. Get the size of the Winwebsec file and calculate the number of bytes of that file that would be 50% of the file

4. With that number, we replace the end (lower half of the image) of the Winwebsec family file with the ending of the Zbot family file that is that amount of bytes

5. This results in a Winwebsec family file obfuscated by the Zbot family file and the image is saved

6. Repeat steps from the beginning with the next Winwebsec family file until performed for all files in the Winwebsec family.
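The per-file part of the contiguous salting steps above can be sketched as follows. The function name `salt_contiguous` is hypothetical, and capping the chunk size at the donor length is an assumption. Since the salted region is described as the lower part of the image, we also assume that the function is applied to the byte string that is actually converted to an image.

```python
def salt_contiguous(original, donor, fraction):
    """Replace the end of `original` with the end of `donor`."""
    # Number of trailing bytes to replace, capped at the donor length.
    n = min(int(len(original) * fraction), len(donor))
    if n == 0:
        return bytes(original)
    return bytes(original[:-n]) + bytes(donor[-n:])

# Stand-in byte strings (hypothetical) in place of Winwebsec and Zbot files.
print(salt_contiguous(b"A" * 10, b"B" * 10, 0.5))  # b'AAAAABBBBB'
```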

3.6 Gist Descriptors

“Gist descriptors” are features that were created in an effort to represent the “gist” of an image. They were designed by A. Oliva and A. Torralba, who were trying to create what they called a “Spatial Envelope” [10]. This spatial envelope would represent the shape of a scene and nothing else; this means it should only contain what is essential to the image. Through experimentation, the authors were able to narrow down the properties that represent a spatial envelope to degrees of naturalness, openness, roughness, expansion, and ruggedness. These five properties give a high-level representation of the scene and show the “gist” of it.

While gist descriptors have been used in many different applications, this research focuses on their use in malware classification. L. Nataraj et al. extracted gist descriptors to use as features in multiple experiments to classify malware [9]. They were able to obtain a classification accuracy of 98% on a dataset that contained 25 malware families and a benign set of executables.

Our research is partially inspired by S. Yajamanam et al. [13]. In this previous work, the authors conducted a few experiments testing the robustness of gist descriptors. They obfuscated the malware, extracted the gist descriptors from the image versions of the obfuscated malware, and used a classification algorithm to classify them. However, the research by S. Yajamanam et al. was not focused on the robustness of gist descriptors, and thus there were only a few experiments to test robustness. To test the robustness of gist descriptors, they experimented with salting, an obfuscation technique that takes part of a different file and adds it to the malware in order to add noise and make it less identifiable. Three different experiments with salting were performed, that is, salting one family, salting with two closely related families, and salting all the malware families with benign samples. The experiments showed that the decline in accuracy due to the salting was not particularly evident. S. Yajamanam et al. concluded that, while more experimentation was needed, it seemed that gist descriptors were robust against this obfuscation technique.

In relation to the gist descriptor experiments, we first started with extracting the gist descriptors from the images. The gist descriptor features were then saved. They only need to be extracted once from each image [10].

To be able to compare our results with gist descriptors to the past works from [13] and [9], we used 320 gist descriptors instead of the full 512 gist descriptors that are extracted with the MATLAB code. These works had only used 320 gist descriptors because they wanted dimensions that have the global image properties with some of the local information.

4 Experiments and Results

These experiments are performed using the images that were created with the PIL. The first experiment classifies the images by applying CNN with all the family pairs.

The second and third experiments are the obfuscation experiments: the second experiment tests the first salting method (random salting), while the third experiment tests the second salting method (contiguous salting). In these experiments, we performed binary classification. While the CNN uses the original images directly, the other four classification algorithms require that the gist descriptors be extracted first. With the gist descriptor experiments, a stratified 5-fold cross validation was used. These experiments were performed with a test split ratio of 0.2.
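In stratified 5-fold cross validation, each fold holds out 20% of the samples while preserving the class proportions, which is consistent with a test split ratio of 0.2. The sketch below shows one simple way to build such folds. The function name `stratified_folds`, the round-robin assignment, and the absence of shuffling are assumptions for illustration, not the procedure used in the experiments.

```python
from collections import defaultdict

def stratified_folds(labels, n_folds=5):
    """Assign sample indices to folds so that each fold keeps the class proportions."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    folds = [[] for _ in range(n_folds)]
    for indices in by_class.values():
        # Deal the samples of each class round-robin across the folds.
        for position, index in enumerate(indices):
            folds[position % n_folds].append(index)
    return folds

# Hypothetical labels for a binary classification experiment.
labels = ["winwebsec"] * 10 + ["zbot"] * 5
folds = stratified_folds(labels)
for held_out in folds:
    train = [i for fold in folds if fold is not held_out for i in fold]
    # A classifier would be trained on `train` and evaluated on `held_out` here.
print([len(fold) for fold in folds])  # [3, 3, 3, 3, 3]
```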

Here is the list of the tested ratios for the obfuscation set of experiments:

- 0%: accuracy obtained without any obfuscation

- 10%: accuracy obtained when 10% of the malware is salted by the other family

- 30%: accuracy obtained when 30% of the malware is salted by the other family

- 50%: accuracy obtained when 50% of the malware is salted by the other family

- 60%: accuracy obtained when 60% of the malware is salted by the other family

- 75%: accuracy obtained when 75% of the malware is salted by the other family

- 80%: accuracy obtained when 80% of the malware is salted by the other family

- 100%: accuracy obtained when 100% of the malware is salted by the other family.

In all of these experiments, we show the Winwebsec family. For binary classification experiments, we highlight the Winwebsec family versus the Zbot family.

4.1 Comparing Image Modes with CNN

The first set of experiments are a baseline to check what the classification accuracy is by using the CNN to classify the images that were simply created by the PIL. A binary classification was performed with the malware images created by the PIL. Different PIL image modes were used to experiment and understand how the modes would affect the accuracy.

An initial binary classification test with Winwebsec versus Zbot was performed with different optimizers to check which gave the best result. We looked at a few optimizer algorithms, namely, Root Mean Squared Propagation (RMSprop), Adaptive Moment Estimation (Adam), Stochastic Gradient Descent (SGD), Adadelta, and Adaptive Gradient (Adagrad). This initial test was performed with all the optimizers, a test split size of 0.2, and over five epochs. In Fig. 6, we see a comparison of the loss and accuracy values with the training and test data over the number of epochs. From Fig. 6 we determined that the Adam optimizer with three epochs is the best for this experiment. Thus, the rest of this experiment was performed with the Adam optimizer, a test split of 0.2, and over three epochs.

Table 6 shows the binary classification results between Winwebsec and each of the other four families among the top five largest families of the dataset, using the CNN. This set of experiments was also used to select the top two modes that we then applied for the rest of the experiments.

Figure 7 shows a comparison of the modes in terms of the accuracy rankings that they obtained. The accuracy ranking of a mode is the position of its accuracy among those of all the modes. Rank 1 is the best while rank 5 is the worst, so lower bars are better. From Fig. 7, we determine that mode 1 and mode YCbCr perform the best among the modes.
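The ranking can be computed by sorting the modes by accuracy within each family pair. The sketch below also averages the ranks over the pairs, which is one plausible way to summarize them and an assumption on our part. The accuracy values are hypothetical and are not taken from Table 6, and the mode `L` is used only as an example of a third mode.

```python
def rank_modes(accuracies):
    """Map each mode to its rank (1 = highest accuracy) for one family pair."""
    ordered = sorted(accuracies, key=accuracies.get, reverse=True)
    return {mode: rank for rank, mode in enumerate(ordered, start=1)}

def mean_ranks(results):
    """Average the rank of each mode over all family pairs."""
    totals = {}
    for accuracies in results.values():
        for mode, rank in rank_modes(accuracies).items():
            totals[mode] = totals.get(mode, 0) + rank
    return {mode: total / len(results) for mode, total in totals.items()}

# Hypothetical accuracies for two family pairs and three modes.
results = {
    "pair A": {"1": 0.97, "L": 0.95, "YCbCr": 0.96},
    "pair B": {"1": 0.93, "L": 0.90, "YCbCr": 0.94},
}
print(mean_ranks(results))  # {'1': 1.5, 'L': 3.0, 'YCbCr': 1.5}
```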

4.2 Random Salting Experiments

The second set of experiments introduces the first obfuscation technique. Specifically, we used the random salting method discussed in Sect. 3.5. Recall that for this salting technique, we randomly select bytes of another class to salt the executable before converting it to an image.

We experimented with CNN, k-NN, RF, SVM, and MLP classifiers and image modes 1 and YCbCr. In each case, we consider obfuscation rates of 0%, 10%, 30%, 50%, 60%, 75%, 80%, and 100%, and all experiments are based on an 80-20 training-test split.

For our CNN experiments, three training epochs are performed. We found that this obtains promising results without overfitting.

For k-NN, we needed to choose a value of k that avoids overfitting. Hence, we graphed accuracy versus the k value and looked for an “elbow” in the curve. After determining k, we used a grid search to find the best distance metric for each mode. We tested four distance metrics, namely, Euclidean, Manhattan, Chebyshev, and Minkowski.

For mode 1, we chose \(k=35\) based on Fig. 8(a). For mode YCbCr, we chose \(k=25\) based on Fig. 8(b). Based on a grid search, we found that the best distance metric for mode 1 and mode YCbCr is the Manhattan distance.

We now focus on using Random Forest to classify the malware based on the gist descriptors as features. To choose how many trees to use, we looked at the accuracy versus the number of estimators (number of trees). For mode 1, Fig. 9(a) suggests that 135 yields the best result, while for mode YCbCr, Fig. 9(b) shows that 160 is ideal.

We then focused on using SVM to classify the malware based on the gist descriptors as features. We used a grid search to tune the hyperparameters. We tested regularization values of 0.1, 1, 10, 100, and 1000, and we tested gamma values of 1, 0.1, 0.01, 0.001, and 0.0001. We also tested linear, polynomial, radial basis function (RBF), and sigmoid kernel functions. For mode 1, we found that the best hyperparameters are regularization 10, gamma 0.001, and the RBF kernel. For YCbCr mode, we found regularization 10, gamma 0.01, and again the best kernel function is RBF.
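A grid search evaluates every combination of the candidate hyperparameter values and keeps the best one. The sketch below enumerates the SVM grid listed above, which has \(5\times 5\times 4=100\) combinations. The parameter names `C`, `gamma`, and `kernel` follow common SVM library conventions and are an assumption, and `toy_score` is only a placeholder for the cross-validated accuracy that a real search would compute. In practice, a library routine such as scikit-learn's `GridSearchCV` would typically be used instead of a hand-written loop.

```python
from itertools import product

def grid_search(param_grid, score):
    """Evaluate every combination in param_grid and return (best_params, best_score)."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        current = score(params)
        if current > best_score:
            best_params, best_score = params, current
    return best_params, best_score

# Candidate values taken from the text above.
svm_grid = {
    "C": [0.1, 1, 10, 100, 1000],
    "gamma": [1, 0.1, 0.01, 0.001, 0.0001],
    "kernel": ["linear", "poly", "rbf", "sigmoid"],
}

def toy_score(params):
    """Placeholder score (hypothetical); a real search would return validation accuracy."""
    return (-abs(params["C"] - 10) - abs(params["gamma"] - 0.001)
            + (params["kernel"] == "rbf"))

best, _ = grid_search(svm_grid, toy_score)
print(best)  # {'C': 10, 'gamma': 0.001, 'kernel': 'rbf'}
```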

Then, we implemented MLP to classify the malware samples based on the gist descriptors. We again used a grid search to tune the hyperparameters. We tested a few hidden layer activation functions, that is, identity, logistic, hyperbolic tangent (tanh), and rectified linear unit (ReLU). We tested the weight optimization solvers Limited-memory BFGS (LBFGS), which is a quasi-Newton method, SGD, and Adam. We tested L2 penalty values of 0.1, 0.01, 0.05, 0.001, and 0.0001, and we tested learning rate schedules constant, invscaling, and adaptive. We also tested maximum iterations of 50, 100, 150, and 200.

With mode 1, we found that the best hyperparameters are hidden layer activation function ReLU, weight optimization solver LBFGS, L2 penalty value 0.001, learning rate schedule invscaling, and maximum iteration value 200. With mode YCbCr, the best hyperparameters are hidden layer activation function tanh, weight optimization solver Adam, L2 penalty value 0.1, learning rate schedule adaptive, and maximum iteration value 50.

Using the hyperparameter values as discussed above, the results of all of our experiments are summarized in Fig. 10. We note that mode YCbCr is much more robust to obfuscation, and that CNN is the least robust of the classification techniques considered. Furthermore, RF, SVM, and MLP are the most robust classification strategies, regardless of which image conversion technique is used.

4.3 Contiguous Salting

The third set of experiments uses the contiguous salting method, that is, we salt executables with contiguous bytes from another class. These experiments use the same classifiers and the same hyperparameter tuning approach as discussed in the previous Section. We omit the hyperparameter tuning details and directly describe the results of our experiments.

Our contiguous salting experiments are summarized in Fig. 11. We see that mode YCbCr is extremely robust with respect to this method of salting. As with random salting, we observed that CNN is the least robust of the classification techniques.

4.4 CNN Experiments Without Obfuscation

The first experiment with CNN showed that CNN yields good results in binary classification. In all the binary classification combinations with the top five largest families in the Malicia dataset, nearly all of the accuracies are more than 92%. There are only three pairs from the first experiment that yielded low results.

This set of experiments was performed to decide the best modes to use for the rest of the experiments. While we chose mode 1 and mode YCbCr to use since they yielded the best accuracies, the other modes were often close in accuracy.

5 Conclusion and Future Work

From the results of the experiments performed in this research, we found that using gist descriptors extracted from the images is a more robust approach than directly applying a CNN to images. In fact, the experiments showed that the CNN did not obtain the same high accuracies as the classifiers that instead relied on the gist descriptors.

When experimenting with the different modes, we found that mode YCbCr and mode 1 yielded the best results in combination with the gist descriptors. In particular, with mode YCbCr, we were able to obtain the best accuracy for higher obfuscation percentages, as compared to mode 1.

Future work could focus on testing different obfuscation methods. Regarding the salting technique itself, more experiments could be performed testing different methods of salting. This research focused on bytes, while a different method may obtain different accuracy results with other image modes. Finally, other image modes could also be considered.

References

1. Aycock, J.: Computer Viruses and Malware, 1st edn. Springer Publishing Company, Incorporated, New York (2010)

2. Chen, J.: A malware detection method based on RGB image. In: Proceedings of the 2020 6th International Conference on Computing and Artificial Intelligence, ICCAI 2020, pp. 283–290. Association for Computing Machinery, New York (2020). https://doi.org/10.1145/3404555.3404622

3. Fu, J., Xue, J., Wang, Y., Liu, Z., Shan, C.: Malware visualization for fine-grained classification. IEEE Access 6, 14510–14523 (2018). https://doi.org/10.1109/ACCESS.2018.2805301

4. Gero, S., Back, S., LaPrade, J., Kim, J.: Malware infections in the US during the COVID-19 pandemic: an empirical study. Int. J. Cybersecurity Intell. Cybercrime 4, 25–37 (2021)

5. Han, K., Kang, B., Im, E.G.: Malware analysis using visualized image matrices. Sci. World J. 2014, 132713 (2014). https://doi.org/10.1155/2014/132713

6. Lundh, F., Clark, A.: Concepts (2022). https://pillow.readthedocs.io/en/stable/handbook/concepts.html

7. Mbaabu, O.: Introduction to random forest in machine learning (2020). https://www.section.io/engineering-education/introduction-to-random-forest-in-machine-learning/

8. Nappa, A., Rafique, M.Z., Caballero, J.: The MALICIA dataset: identification and analysis of drive-by download operations. Int. J. Inf. Secur. 14(1), 15–33 (2014). https://doi.org/10.1007/s10207-014-0248-7

9. Nataraj, L., Karthikeyan, S., Jacob, G., Manjunath, B.S.: Malware images: visualization and automatic classification. In: Proceedings of the 8th International Symposium on Visualization for Cyber Security, VizSec 2011. Association for Computing Machinery, New York (2011). https://doi.org/10.1145/2016904.2016908

10. Oliva, A., Torralba, A.: Modeling the shape of the scene: a holistic representation of the spatial envelope. Int. J. Comput. Vision 42, 145–175 (2004). http://people.csail.mit.edu/torralba/code/spatialenvelope/

11. Stamp, M.: Introduction to Machine Learning with Applications in Information Security, 2nd edn. Chapman & Hall/CRC, Boca Raton (2022)

12. Vasan, D., Alazab, M., Wassan, S., Naeem, H., Safaei, B., Zheng, Q.: IMCFN: image-based malware classification using fine-tuned convolutional neural network architecture. Comput. Netw. 171, 107138 (2020). https://doi.org/10.1016/j.comnet.2020.107138. https://www.sciencedirect.com/science/article/pii/S1389128619304736

13. Yajamanam, S., Selvin, V.R.S., Troia, F.D., Stamp, M.: Deep learning versus gist descriptors for image-based malware classification. In: Mori, P., Furnell, S., Camp, O. (eds.) Proceedings of the 4th International Conference on Information Systems Security and Privacy, ICISSP 2018, pp. 553–561. SciTePress (2018)

14. Yakura, H., Shinozaki, S., Nishimura, R., Oyama, Y., Sakuma, J.: Malware analysis of imaged binary samples by convolutional neural network with attention mechanism. In: Proceedings of the Eighth ACM Conference on Data and Application Security and Privacy, CODASPY 2018, pp. 127–134. Association for Computing Machinery, New York (2018). https://doi.org/10.1145/3176258.3176335


Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this paper

Cite this paper

Tran, K., Di Troia, F., Stamp, M. (2022). Robustness of Image-Based Malware Analysis. In: Bathen, L., Saldamli, G., Sun, X., Austin, T.H., Nelson, A.J. (eds) Silicon Valley Cybersecurity Conference. SVCC 2022. Communications in Computer and Information Science, vol 1683. Springer, Cham. https://doi.org/10.1007/978-3-031-24049-2_1


DOI: https://doi.org/10.1007/978-3-031-24049-2_1


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-24048-5

Online ISBN: 978-3-031-24049-2

eBook Packages: Computer Science (R0)