Abstract

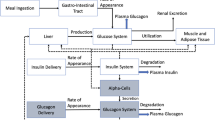

Artificial Pancreas Systems (APS) aim to improve glucose regulation and relieve people with Type 1 Diabetes (T1D) of the cognitive burden of ongoing disease management. They combine continuous glucose monitoring with control algorithms for automatic insulin administration to maintain glucose homeostasis. Estimating an appropriate control action, i.e., the insulin infusion rate, is a complex optimisation problem for which Reinforcement Learning (RL) algorithms are currently being explored because of their performance in complex, uncertain environments. However, insulin requirements vary markedly with sleep patterns, meals and exercise. Hence, a large dynamic range of insulin infusion rates is required, necessitating a large continuous action space, which is challenging for RL algorithms. In this study, we introduced non-linear continuous action spaces as a method for efficiently exploring this large dynamic range of insulin towards learning effective control policies. Three non-linear action space formulations inspired by clinical patterns of insulin delivery were explored and analysed based on their impact on performance and learning efficiency. We implemented a state-of-the-art RL algorithm and evaluated the proposed action spaces in silico using an open-source T1D simulator based on the UVA/Padova 2008 model. The proposed exponential action space achieved a 24% performance improvement over the linear action space commonly used in practice, while exhibiting fast and steady learning. The proposed action space formulation has the potential to enhance the performance of RL algorithms for APS.
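The abstract does not state the action space formulas, so the following is only a minimal sketch of the general idea, not the authors' formulation. It assumes the policy emits a raw action `a` in [-1, 1] and contrasts a linear mapping to an insulin rate with a hypothetical exponential mapping `I_MAX * exp(ETA * (a - 1))`. The names `linear_rate`, `exponential_rate` and `fraction_below`, and the values `I_MAX = 5.0` and `ETA = 4.0`, are all illustrative assumptions.

```python
import math
from typing import Callable

# Hypothetical upper bound on the insulin infusion rate (assumed, not from the paper).
I_MAX = 5.0  # illustrative units, e.g. U/min
# Hypothetical steepness of the exponential mapping (assumed, not from the paper).
ETA = 4.0


def clip_action(a: float) -> float:
    """Clip a raw policy output to the assumed action range [-1, 1]."""
    return max(-1.0, min(1.0, a))


def linear_rate(a: float, i_max: float = I_MAX) -> float:
    """Linear mapping: resolution is spread evenly over [0, i_max]."""
    a = clip_action(a)
    return (a + 1.0) / 2.0 * i_max


def exponential_rate(a: float, i_max: float = I_MAX, eta: float = ETA) -> float:
    """Hypothetical exponential mapping: fine resolution at low rates.

    a = 1 maps to i_max; a = -1 maps to i_max * exp(-2 * eta), a small
    positive rate rather than exactly zero.
    """
    a = clip_action(a)
    return i_max * math.exp(eta * (a - 1.0))


def fraction_below(mapping: Callable[[float], float], threshold: float, n: int = 2001) -> float:
    """Fraction of an evenly spaced grid over [-1, 1] mapped below `threshold`."""
    grid = [-1.0 + 2.0 * i / (n - 1) for i in range(n)]
    hits = sum(1 for a in grid if mapping(a) < threshold)
    return hits / n


if __name__ == "__main__":
    for name, fn in [("linear", linear_rate), ("exponential", exponential_rate)]:
        share = fraction_below(fn, 0.05)
        print(f"{name}: {share:.3f} of the action range maps below 0.05")
```

With these assumed parameters, only about 1% of the action range maps below 0.05 under the linear mapping, versus about 42% under the exponential one. This illustrates why a non-linear mapping can let an RL agent explore small, frequently needed infusion rates more finely while still reaching large rates.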

Acknowledgments

This research was funded in part by the Australian National University and the Our Health in Our Hands initiative.

Ethics declarations

Code Availability

A repository of code used in this study, and further supplementary material, is available at https://github.com/chirathyh/G2P2C.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Hettiarachchi, C., Malagutti, N., Nolan, C.J., Suominen, H., Daskalaki, E. (2022). Non-linear Continuous Action Spaces for Reinforcement Learning in Type 1 Diabetes. In: Aziz, H., Corrêa, D., French, T. (eds) AI 2022: Advances in Artificial Intelligence. AI 2022. Lecture Notes in Computer Science, vol. 13728. Springer, Cham. https://doi.org/10.1007/978-3-031-22695-3_39

DOI: https://doi.org/10.1007/978-3-031-22695-3_39

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-22694-6

Online ISBN: 978-3-031-22695-3

eBook Packages: Computer Science, Computer Science (R0)