Abstract

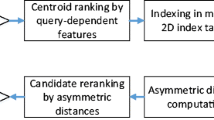

Dense retrieval models represent queries and documents with one or more fixed-width vectors and retrieve relevant documents via nearest neighbor search. These models have recently improved retrieval performance and drawn increasing attention from the IR community. Among the variety of dense retrieval models, those that represent each text with multiple vectors achieve state-of-the-art ranking performance. However, the multi-vector representation scheme imposes a much larger storage overhead than single-vector representation, which may hinder its application in practical scenarios. We therefore intend to apply vector compression methods such as Product Quantization (PQ) to reduce the storage cost and improve retrieval efficiency. However, the gap between the original embeddings and the quantized vectors may degrade retrieval performance. Recently, improved dense retrieval models such as JPQ have been proposed to reduce storage space while maintaining ranking effectiveness by jointly training the encoder and the PQ index, and they have achieved promising improvements in the single-vector dense retrieval scenario. We therefore introduce this joint optimization framework to tackle the storage overhead of multi-vector models. The key idea is to Jointly optimize Multi-vector representations with Product Quantization (JMPQ). JMPQ prevents effectiveness degradation by jointly optimizing the query encoding and index compression processes. We evaluate JMPQ on publicly available ad-hoc retrieval benchmarks. Extensive experimental results show that JMPQ substantially reduces the memory footprint while achieving ranking effectiveness on par with or even better than its uncompressed counterpart.
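To make the storage argument concrete, the following is a minimal sketch of plain Product Quantization applied to a set of token-level vectors, as a multi-vector model might store them. It is not the JMPQ training procedure: the codebooks are learned with ordinary k-means and nothing is optimized jointly with an encoder. The dimensions, the number of sub-vectors `m`, the codebook size `k`, the random input data, and all function names are assumptions chosen for illustration.

```python
import random

def nearest(p, centroids):
    """Index of the centroid with the smallest squared L2 distance to p."""
    return min(range(len(centroids)),
               key=lambda j: sum((a - b) ** 2 for a, b in zip(p, centroids[j])))

def kmeans(points, k, iters=10, seed=0):
    """Plain Lloyd's k-means; returns k centroids as lists of floats."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    for _ in range(iters):
        buckets = [[] for _ in range(k)]
        for p in points:
            buckets[nearest(p, centroids)].append(p)
        for j, bucket in enumerate(buckets):
            if bucket:  # keep the old centroid if a cluster goes empty
                centroids[j] = [sum(col) / len(bucket) for col in zip(*bucket)]
    return centroids

def train_pq(vectors, m, k):
    """Split every vector into m sub-vectors; learn one k-entry codebook per split."""
    sub = len(vectors[0]) // m
    return [kmeans([v[i * sub:(i + 1) * sub] for v in vectors], k, seed=i)
            for i in range(m)]

def encode(v, codebooks):
    """Replace each sub-vector by the index of its nearest codebook entry."""
    sub = len(v) // len(codebooks)
    return [nearest(v[i * sub:(i + 1) * sub], cb) for i, cb in enumerate(codebooks)]

def decode(codes, codebooks):
    """Approximate the original vector by concatenating the selected centroids."""
    out = []
    for c, cb in zip(codes, codebooks):
        out.extend(cb[c])
    return out

# Toy setup (hypothetical sizes): 200 token vectors of dimension 8.
rng = random.Random(42)
dim, m, k = 8, 4, 16
token_vectors = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(200)]

codebooks = train_pq(token_vectors, m, k)
codes = encode(token_vectors[0], codebooks)
approx = decode(codes, codebooks)

raw_bytes = dim * 4  # one float32 per dimension
pq_bytes = m * 1     # one byte per code, valid while k <= 256
```

In this toy configuration each token vector shrinks from `raw_bytes` to `pq_bytes`, and `approx` differs from the original vector. That difference is the quantization gap the abstract refers to. Because a multi-vector index stores one such vector per token rather than one per document, the saving is multiplied by the number of tokens.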


References

Barnes, C.F., Rizvi, S.A., Nasrabadi, N.M.: Advances in residual vector quantization: a review. IEEE Trans. Image Process. 5(2), 226–262 (1996)

Craswell, N., Mitra, B., Yilmaz, E., Campos, D.: Overview of the TREC 2020 deep learning track (2021)

Craswell, N., Mitra, B., Yilmaz, E., Campos, D., Voorhees, E.M.: Overview of the TREC 2019 deep learning track. arXiv preprint arXiv:2003.07820 (2020)

Devlin, J., Chang, M.W., Lee, K., Toutanova, K.: BERT: pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805 (2018)

Gao, L., Dai, Z., Callan, J.: COIL: revisit exact lexical match in information retrieval with contextualized inverted list. arXiv preprint arXiv:2104.07186 (2021)

Ge, T., He, K., Ke, Q., Sun, J.: Optimized product quantization. IEEE Trans. Pattern Anal. Mach. Intell. 36(4), 744–755 (2013)

Guo, J., Cai, Y., Fan, Y., Sun, F., Zhang, R., Cheng, X.: Semantic models for the first-stage retrieval: a comprehensive review. arXiv preprint arXiv:2103.04831 (2021)

Hartigan, J.A., Wong, M.A.: Algorithm AS 136: a k-means clustering algorithm. J. R. Stat. Soc. Ser. C (Appl. Stat.) 28(1), 100–108 (1979)

Indyk, P., Motwani, R.: Approximate nearest neighbors: towards removing the curse of dimensionality. In: Proceedings of the Thirtieth Annual ACM Symposium on Theory of Computing, pp. 604–613 (1998)

Jegou, H., Douze, M., Schmid, C.: Product quantization for nearest neighbor search. IEEE Trans. Pattern Anal. Mach. Intell. 33(1), 117–128 (2010)

Johnson, J., Douze, M., Jégou, H.: Billion-scale similarity search with GPUs. IEEE Trans. Big Data 7(3), 535–547 (2019)

Khattab, O., Zaharia, M.: ColBERT: efficient and effective passage search via contextualized late interaction over BERT. In: Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 39–48 (2020)

Lin, J., Nogueira, R., Yates, A.: Pretrained transformers for text ranking: BERT and beyond. Synth. Lect. Hum. Lang. Technol. 14(4), 1–325 (2021)

Lin, S.C., Yang, J.H., Lin, J.: Distilling dense representations for ranking using tightly-coupled teachers. arXiv preprint arXiv:2010.11386 (2020)

Liu, Y., et al.: RoBERTa: a robustly optimized BERT pretraining approach. arXiv preprint arXiv:1907.11692 (2019)

Luan, Y., Eisenstein, J., Toutanova, K., Collins, M.: Sparse, dense, and attentional representations for text retrieval. Trans. Assoc. Comput. Linguist. 9, 329–345 (2021)

Nguyen, T., et al.: MS MARCO: a human generated machine reading comprehension dataset. In: CoCo@NIPS (2016)

Robertson, S.E., Walker, S.: Some simple effective approximations to the 2-Poisson model for probabilistic weighted retrieval. In: SIGIR 1994, pp. 232–241. Springer (1994). https://doi.org/10.1007/978-1-4471-2099-5_24

Santhanam, K., Khattab, O., Saad-Falcon, J., Potts, C., Zaharia, M.: ColBERTv2: effective and efficient retrieval via lightweight late interaction (2021)

Vaswani, A., et al.: Attention is all you need. Adv. Neural Inf. Process. Syst. 30 (2017)

Xiao, S., Liu, Z., Shao, Y., Lian, D., Xie, X.: Matching-oriented product quantization for ad-hoc retrieval. arXiv preprint arXiv:2104.07858 (2021)

Xiong, L., et al.: Approximate nearest neighbor negative contrastive learning for dense text retrieval. arXiv preprint arXiv:2007.00808 (2020)

Zhan, J., Mao, J., Liu, Y., Guo, J., Zhang, M., Ma, S.: Jointly optimizing query encoder and product quantization to improve retrieval performance. In: Proceedings of the 30th ACM International Conference on Information and Knowledge Management, pp. 2487–2496 (2021)

Zhan, J., Mao, J., Liu, Y., Guo, J., Zhang, M., Ma, S.: Learning discrete representations via constrained clustering for effective and efficient dense retrieval (2021)

Zhan, J., Mao, J., Liu, Y., Guo, J., Zhang, M., Ma, S.: Optimizing dense retrieval model training with hard negatives. In: Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 1503–1512 (2021)


Acknowledgment

This work is supported by the Natural Science Foundation of China (Grant No. 61732008) and Tsinghua University Guoqiang Research Institute.


Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Fang, Y., Zhan, J., Liu, Y., Mao, J., Zhang, M., Ma, S. (2022). Joint Optimization of Multi-vector Representation with Product Quantization. In: Lu, W., Huang, S., Hong, Y., Zhou, X. (eds) Natural Language Processing and Chinese Computing. NLPCC 2022. Lecture Notes in Computer Science, vol 13551. Springer, Cham. https://doi.org/10.1007/978-3-031-17120-8_49


DOI: https://doi.org/10.1007/978-3-031-17120-8_49


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-17119-2

Online ISBN: 978-3-031-17120-8

eBook Packages: Computer Science, Computer Science (R0)