Abstract

Moving around in a virtual world is one of the essential interactions in Virtual Reality (VR) applications. The current standard for moving in VR is a hand-held controller. Recently, VR Head Mounted Displays (HMDs) have begun to integrate new input modalities such as hand tracking, which makes it possible to investigate different techniques for moving in VR. This work explores techniques for bare-handed locomotion, since they could offer a promising alternative to existing freehand techniques. The presented techniques enable continuous movement through an immersive virtual environment. They are compared to each other in terms of efficiency, usability, perceived workload, and user preference.



1 Introduction

Recent advances in the hardware of Head Mounted Displays (HMDs) provide affordable hand tracking out of the box. In the future, hand gestures could replace the hand-held controller as the input modality in many scenarios, so the potential of this technology should be explored. Hand tracking is already widely acknowledged, and research is being conducted in many different areas of human-computer interaction. For example, hand tracking can be used to pick up virtual objects in more natural ways than with a controller, as done by Schäfer et al. [30, 33] or Kang et al. [21]. Moving around in Virtual Reality (VR) is one of the essential interactions within virtual environments, but the potential of hand tracking for moving around in VR is largely unexplored.

Generally, there are two ways to move in virtual environments: teleportation-based locomotion and continuous locomotion. Teleportation instantly changes the position of the user. Continuous locomotion, on the other hand, is more like walking: the user gradually moves in the desired direction. Teleportation-based locomotion is known to cause less motion sickness than continuous locomotion, but the latter is more immersive [2, 14, 25]. Choosing between them is therefore a trade-off between immersion and motion sickness, and if the application scenario permits, users should be allowed to choose between the two methods. Games and other commercial applications that use a controller usually offer an option to choose the desired locomotion method.

In this work, three novel techniques for continuous locomotion using bare hands are proposed and evaluated. The first technique uses index finger pointing as a metaphor; steering is performed by moving the index finger in the desired direction. The second, similar technique uses the palm of the hand for steering. The third technique uses a thumbs-up gesture to indicate movement. Compared to other freehand locomotion techniques, which involve rather demanding body movements, locomotion using hand gestures could be less stressful and demanding. This assumption arises from the fact that only finger and hand movements are required, whereas other techniques require large parts of the body to be moved. The three techniques are compared to each other and to the current standard for moving in VR, the controller. This work aims to provide more insight into hand-gesture-based locomotion and whether it is applicable and easy to use. In particular, it addresses the research gap of continuous locomotion with hand gestures, as most existing techniques use teleportation.
In addition, it is not yet clear which hand gestures are suitable for the locomotion task in VR, and further research should be conducted to find suitable techniques. The contributions of this paper are as follows:

- Introducing three novel locomotion techniques for VR using bare hands
- A comprehensive evaluation of these techniques

2 Related Work

2.1 Locomotion Techniques in VR Without Using Controllers

Several techniques for moving in VR have been proposed by researchers. Some of these techniques involve rather large and demanding body movements, such as the well-established Walking in Place (WIP) technique. To move virtually with this technique, users perform footsteps at a fixed position in the real world. WIP has been widely explored, and a large body of existing work can be found in the literature. Templeman et al. [34] attached sensors to the knees and the soles of the feet to detect leg movements, which are then transmitted to the virtual world. Bruno et al. [8] created a variant of WIP, Speed-Amplitude-Supported WIP, which allows users to control their virtual speed through footstep amplitude and speed metrics.

Another technique for moving without controllers is leaning, in which leaning forward accelerates the virtual avatar. Buttussi and Chittaro [9] compared continuous movement with a controller, teleportation with a controller, and continuous movement with leaning; leaning performed slightly worse than the other techniques. Langbehn et al. [24] combined WIP with leaning, where the movement speed of the WIP technique is controlled by the user's leaning angle. Different techniques for leaning-based and controller-based locomotion were evaluated by Zielasko et al. [37], who suggest that torso-directed leaning performs better than gaze-directed or virtual-body-directed leaning. In another work, Zielasko et al. [36] compared leaning, seated WIP, head shaking, an accelerator pedal, and a gamepad. A major finding of their study is that WIP is not recommended for seated locomotion. A method that pairs well with WIP is redirected walking, in which the virtual space is manipulated so that the user needs as little physical space as possible. Different techniques exist to achieve this, for example manipulating the rotation gains of the VR HMD [16] or the foldable spaces of Han et al. [17]. More redirected walking techniques can be found in the survey by Nilsson et al. [27]. Since a large body of research on locomotion in VR exists, the reader is referred to surveys such as [1, 3, 13, 26, 28, 31] for more information about different locomotion techniques and taxonomies.

Coupling physical movement with virtual movement offers more immersion, but hand-gesture-based locomotion is expected to be less strenuous and demanding than the forms of locomotion mentioned above. Furthermore, it requires minimal physical space and can be used while seated as well as standing.

2.2 Locomotion in VR Using Hand Gestures

Early work on how hand gestures can be used for virtual locomotion was conducted by Kim et al. [22, 23]. The authors presented Finger Walking in Place (FWIP), which enables virtual locomotion through the metaphor of walking, triggered by finger movements. Schäfer et al. [32] compared four locomotion techniques for teleportation using hand gestures: two two-handed and two one-handed techniques. They concluded that palm-based techniques perform better than index-pointing techniques, but that overall the user should decide which technique to use. Huang et al. [20] used finger gestures to control the velocity of moving forward and backward within virtual environments. Ferracani et al. [15] proposed four locomotion techniques: WIP, Arm Swing, Tap, and Push. Tap uses index finger pointing, and Push involves closing and opening the hand. The authors conclude that the bare-handed Tap technique even outperformed the well-established WIP technique. Zhang et al. [35] propose a technique that uses both hands for locomotion: the left hand starts and stops movement, while the right hand uses the thumb to turn left and right. Cardoso [12] also used gestures of both hands for a locomotion task. Movement was controlled by opening and closing both hands, speed was controlled by the number of stretched fingers, and the rotation of the avatar was mapped to the tilt angle of the right hand. Cardoso concluded that the hand-tracking-based technique outperformed a gaze-based technique but was inferior to a gamepad. Hand gestures were also used by Caggianese et al. [11] in combination with a navigation widget. Users had to press a button to move through a virtual environment, whereas with the proposed technique, users can move by performing a hand gesture. In subsequent work, Caggianese et al. [10] compared three freehand locomotion techniques and one controller-based technique. In their experiment, participants had to follow a predefined path. The authors show that freehand steering techniques using hand gestures achieve results comparable to a controller. While Caggianese et al. [10] use hand gestures to start and stop movement, this work compares two techniques with a 3D graphical user interface and one technique with a one-handed gesture to start movement. Moreover, in [10] the direction of movement was tied to the direction of the hand, whereas in this work it is tied to the direction of the user's VR HMD. Bozgeyikli et al. [4, 5] compared Joystick, Point and Teleport, and WIP. The results showed that the hand-gesture-based teleportation technique, Point and Teleport, is intuitive, easy to use, and fun.

3 Proposed Locomotion Techniques

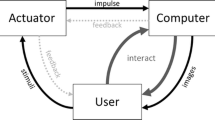

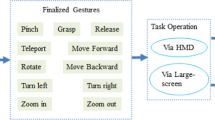

Four different locomotion techniques were developed: Controller, FingerUI, HandUI, and ThumbGesture. The proposed locomotion techniques are depicted in Fig. 1. The implementation of each technique is briefly explained in this section.

Controller. This technique uses the standard implementation for continuous locomotion with the Software Development Kit (SDK) of the chosen VR HMD. The thumbstick on the left controller is used for acceleration and the thumbstick on the right controller can be used to rotate the user. Using the right thumbstick is optional since the user can turn normally by just moving the head.

FingerUI. If the user points the index finger forward, a 3D graphical user interface is shown, consisting of 3D arrows for the four directions Forward, Backward, Left, and Right. While the user maintains the index-finger-forward pose, locomotion is achieved by moving the hand to the arrow of the desired movement direction. The arrows are for visualisation only. The actual movement is triggered when the index finger enters invisible zones placed around the 3D arrows. Requiring users to touch the arrows exactly would be too strict, whereas movement zones leave more room for user error; for this reason, the zones are larger than the arrows shown to the user. This is depicted in Fig. 2. Furthermore, the zones for moving left and right are generally bigger than those for moving forward and backward, because first pilot tests showed that users generally made wide movements to the left and right, and if the hand moves out of a zone, movement unintentionally stops. Moving the hand forward was restricted by arm length, and moving it backwards was restricted because the user's own body was in the way. In addition, with the design shown in Fig. 2, users could move forward by putting the hand forward and then swipe to the left or right to rotate, instead of moving the hand back to the center and then to the left or right. Once the UI is shown, the zones are activated for all movement directions, and the center can be used to indicate that no movement is desired.

Fig. 2. The 3D graphical user interface that becomes visible once a specific hand gesture is detected. The interface is shown around the user's hand. Left: the possible movement actions. Right: the zones, which are invisible to the user; if the hand or index finger enters one of these zones, the respective movement is triggered. FingerUI and HandUI use different zone sizes (smaller zones for FingerUI).

HandUI. This technique is similar to FingerUI; the difference is the hand pose that enables the user interface. A "stop" gesture, i.e., the palm facing away from the face with all fingers pointing up, shows the user interface. Instead of the index finger, the palm center needs to enter a zone to enable movement. The zone sizes are also adjusted (larger zones with more space in the center for no movement).
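The zone logic shared by FingerUI and HandUI can be sketched as a simple classifier over the tracked point (index fingertip or palm center) relative to the center of the spawned UI. This is a minimal sketch under stated assumptions, not the authors' implementation: the axis-aligned box zones, the coordinate convention (x to the user's right, z forward, in metres), and all zone sizes are hypothetical. Only three properties come from the text: the zones are larger than the arrows, the left/right zones are wider than the forward/backward zones, and the center means no movement.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Zone:
    """Axis-aligned rectangle in the UI plane (hypothetical layout), in metres."""
    name: str
    x_min: float
    x_max: float
    z_min: float
    z_max: float

    def contains(self, x: float, z: float) -> bool:
        return self.x_min <= x <= self.x_max and self.z_min <= z <= self.z_max


# Hypothetical zone sizes: left/right zones are much wider than forward/backward,
# they extend alongside the forward zone (so a swipe from Forward reaches them),
# and the square around the origin is a "no movement" center.
FINGER_UI_ZONES = [
    Zone("Forward", -0.04, 0.04, 0.04, 0.25),
    Zone("Backward", -0.04, 0.04, -0.15, -0.04),
    Zone("Left", -0.40, -0.04, -0.15, 0.25),
    Zone("Right", 0.04, 0.40, -0.15, 0.25),
]


def movement_direction(x: float, z: float, zones=FINGER_UI_ZONES) -> Optional[str]:
    """Return the zone containing the tracked point, or None.

    Zones are checked in order and the first match wins. None means the point
    is in the center or outside every zone, in which case movement stops.
    """
    for zone in zones:
        if zone.contains(x, z):
            return zone.name
    return None
```

A HandUI variant would pass a second, hypothetical zone list with larger zones and a wider center.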

ThumbGesture. A thumbs-up gesture is used to activate movement, and the four movement directions are mapped to different thumb orientations: thumb pointing up = Forward; thumb pointing towards the face = Backward; thumb pointing left = Left; thumb pointing right = Right.
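One possible way to turn this thumb mapping into movement commands is to express the thumb's pointing direction in the head's frame and pick the best-aligned axis. This is a hypothetical sketch: the input vector, the head-relative frame (x right, y up, z forward away from the face), and the minimum-alignment threshold are assumptions. Detecting the thumbs-up hand shape itself is left to the hand-tracking SDK.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical unit axes in a head-relative frame
# (x = right, y = up, z = forward, i.e. away from the face).
THUMB_MAPPING = {
    "Forward": (0.0, 1.0, 0.0),    # thumb pointing up
    "Backward": (0.0, 0.0, -1.0),  # thumb pointing towards the face
    "Left": (-1.0, 0.0, 0.0),      # thumb pointing left
    "Right": (1.0, 0.0, 0.0),      # thumb pointing right
}


def thumb_command(thumb_dir: Vec3, min_alignment: float = 0.7) -> Optional[str]:
    """Map a thumb direction to a movement command, or None if no axis fits.

    min_alignment is a hypothetical cosine threshold: the thumb must point
    within roughly 45 degrees of a mapped axis to trigger movement.
    """
    norm = math.sqrt(sum(c * c for c in thumb_dir))
    if norm == 0.0:
        return None
    unit = tuple(c / norm for c in thumb_dir)
    best_name: Optional[str] = None
    best_dot = min_alignment
    for name, axis in THUMB_MAPPING.items():
        dot = sum(u * a for u, a in zip(unit, axis))  # cosine of the angle
        if dot > best_dot:
            best_name, best_dot = name, dot
    return best_name
```

Unmapped orientations, such as the thumb pointing down or away from the face, return None and would stop movement.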

All hand-based locomotion techniques used a static gesture to activate locomotion, and no individual finger movement was necessary. Although both the gesture techniques and the controller had a dedicated option to rotate the virtual avatar, users could also rotate by looking around with the VR HMD. Users could not change the locomotion speed: once the user's hand entered a zone to enable movement, the locomotion speed increased over the first second up to a maximum of 28.8 km/h (8 m/s). Rotating the virtual body by about 90° with hand gestures or the controller took 1.5 s. Movement stopped immediately if the user's hand was no longer in a movement zone.
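The speed and rotation parameters above can be summarised in a small helper. This sketch assumes a linear ramp from standstill to 8 m/s over the first second. The text only states that speed increases over the first second, so the ramp shape is an assumption. The constant turn rate follows from the stated numbers: 90° in 1.5 s is 60°/s.

```python
MAX_SPEED_M_S = 8.0            # 28.8 km/h, as stated in the text
RAMP_DURATION_S = 1.0          # speed increases over the first second
TURN_RATE_DEG_S = 90.0 / 1.5   # 90 degrees in 1.5 s -> 60 degrees per second


def locomotion_speed(time_in_zone_s: float, in_zone: bool) -> float:
    """Forward speed in m/s; the linear ramp shape is an assumption."""
    if not in_zone:
        return 0.0  # movement stops immediately when the hand leaves the zone
    fraction = min(max(time_in_zone_s / RAMP_DURATION_S, 0.0), 1.0)
    return MAX_SPEED_M_S * fraction


def yaw_after(duration_s: float) -> float:
    """Yaw change in degrees after turning continuously for duration_s seconds."""
    return TURN_RATE_DEG_S * duration_s
```

For ThumbGesture, `in_zone` would correspond to the gesture being held.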

3.1 Explanation of Chosen Techniques

Techniques for other input modalities, such as the controller, can be adapted or serve as a metaphor for bare-handed locomotion techniques, and the proposed techniques were implemented with this in mind. ThumbGesture was implemented because it is similar to tilting a thumbstick in the desired direction: the direction of the thumb indicates the movement direction. ThumbGesture can also be seen as a variation of the locomotion technique introduced by Zhang et al. [35], but it uses only one hand instead of two. FingerUI was developed to use the metaphor of pointing forward to enable movement; its 3D graphical user interface resembles the directional pad on common controllers used to move virtual characters. Previous studies suggest that the pointing-forward gesture could be error-prone due to tracking failures, since the index finger is often occluded from the cameras by the rest of the hand [33]. For this reason, HandUI was implemented, which should be easier to track since no finger is occluded. Controller was added as a baseline and represents the current gold standard for locomotion in VR. Only one-handed techniques were implemented, so that the other hand remains free for interaction tasks.

4 Evaluation

4.1 Objectives

The goal of this study was to compare the three bare-handed locomotion techniques. Controller was added as a baseline to compare hand-gesture locomotion with the gold standard in general. It was anticipated that the controller would outperform the bare-handed techniques; the main objective, however, was to find out which of the three bare-handed techniques performs best in terms of efficiency, usability, perceived workload, and subjective user rating. Efficiency was measured by task completion time. The well-known System Usability Scale (SUS) [6, 7] was used to measure usability, and perceived workload was measured with the NASA Task Load Index (NASA-TLX) [18, 19]. Since hand tracking is still a maturing technology and tracking errors were anticipated, the NASA-TLX should give insight into possible frustration and other workload aspects. No further questionnaires were used, to keep the experiment short, because it was expected that some participants would suffer from motion sickness and might abort the experiment if it took too long. A motion sickness questionnaire was also omitted, as the proposed techniques were assumed to be similar in this regard.

4.2 Participants

A total of 16 participants took part in the study, and 12 completed the experiment. Four participants cancelled the experiment due to increasing motion sickness. The participants' ages ranged from 18 to 63 years (mean age 33.38 years). Six participants were female. All participants were VR laypeople and had worn a VR HMD fewer than five times.

4.3 Apparatus

The evaluation was performed on a gaming notebook with an Intel Core i7-7820HK, 32 GB DDR4 RAM, and an NVIDIA GeForce GTX 1080, running 64-bit Windows 10. A Meta Quest 2 was used as the VR HMD, and hand tracking was realized using version 38 of the Oculus Integration plugin for Unity.

4.4 Experimental Task

The participants had to move through a minimalistic, corridor-like virtual environment and touch virtual pillars. The environment is 10 m wide and 110 m long. A total of ten pillars are placed in the environment, about 10 m apart from each other. The pillars are arranged so that users had to move left and right to reach them (see Fig. 3). When a pillar was touched, its color changed to green. A trial was completed once all ten pillars had been touched.
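The trial logic can be sketched as a small tracker that records pillar touches and reports the task completion time. Section 5.1 defines this time as the interval between touching the first and the last pillar. The class name, the integer pillar IDs, and the externally supplied timestamps are hypothetical. Ignoring repeated touches of a pillar that is already green is also an assumption.

```python
from typing import Dict, Optional


class PillarTrial:
    """Tracks one trial of the pillar task (hypothetical helper)."""

    def __init__(self, num_pillars: int = 10) -> None:
        self.num_pillars = num_pillars
        self.touch_times: Dict[int, float] = {}  # pillar id -> first touch time (s)

    def touch(self, pillar_id: int, timestamp_s: float) -> None:
        # A pillar turns green on its first touch; later touches are ignored.
        self.touch_times.setdefault(pillar_id, timestamp_s)

    @property
    def complete(self) -> bool:
        return len(self.touch_times) == self.num_pillars

    def completion_time(self) -> Optional[float]:
        """Time between the first and the last pillar touch, once complete."""
        if not self.complete:
            return None
        times = self.touch_times.values()
        return max(times) - min(times)
```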

4.5 Procedure

The experiment had a within-subject design. Each participant moved through the virtual environment twice with each technique. This allowed participants to understand and learn the technique in the first trial, so that the second trial could serve as a more reliable measure of task completion time. A short video clip showed the participant how to move with the current technique. The experiment was conducted in a seated position, and participants could rotate their body on a swivel chair. The order of locomotion techniques was counterbalanced using a balanced Latin square. After touching all ten pillars twice, the participant was teleported to an area where the questionnaires were answered: first the NASA-TLX and then the SUS. Answers could be given either with the controller or with bare hands in VR. This was repeated for each locomotion technique. After the last technique, a final questionnaire was shown in which participants rated each technique on a scale from 1 (bad) to 10 (good). One session took about 30 min.
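Counterbalancing with a balanced Latin square can be sketched with a common construction. The first row interleaves conditions from the low and high ends (0, 1, n-1, 2, n-2, ...), and each following row shifts every entry by one. For an even number of conditions, such as the four techniques here, every condition then appears once in each position and directly follows every other condition exactly once. The study does not state which row was assigned to which participant, so indexing rows by participant number is an assumption.

```python
from typing import List, Sequence


def balanced_latin_square_row(conditions: Sequence[str], participant: int) -> List[str]:
    """Condition order for one participant (row index = participant mod n)."""
    n = len(conditions)
    order: List[str] = []
    low = 0   # next offset taken from the low end
    high = 0  # next offset taken from the high end
    for i in range(n):
        if i < 2 or i % 2 != 0:
            val = low
            low += 1
        else:
            val = n - high - 1
            high += 1
        order.append(conditions[(val + participant) % n])
    # For an odd number of conditions, odd rows are reversed to keep the balance.
    if n % 2 != 0 and participant % 2 != 0:
        order.reverse()
    return order
```

With the four techniques, participants 0 to 3 receive four distinct orders. Later participants reuse them cyclically.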

5 Results

5.1 Task Completion Time

Task completion time is measured as the time between touching the first and the last pillar. The average time to touch all ten pillars in a trial is depicted in Fig. 4. Levene's test confirmed the homogeneity of variances, and therefore a one-way ANOVA was used. The result, F(3, 47) = 8.817, p < 0.01, showed significant differences between the techniques. Tukey's HSD post-hoc test revealed statistically significant differences for the following pairs: Controller-FingerUI (p < 0.001), Controller-HandUI (p < 0.05), and ThumbGesture-FingerUI (p < 0.01).
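For reference, the F statistic of a one-way ANOVA is the ratio of between-group variance to within-group variance. Its degrees of freedom are (k − 1, N − k) for k groups and N observations in total. The pure-Python sketch below computes it for illustration only, using made-up input values. In practice, a library routine such as SciPy's `scipy.stats.f_oneway` would typically be used.

```python
from typing import List, Sequence, Tuple


def one_way_anova(groups: Sequence[Sequence[float]]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means: List[float] = [sum(g) / len(g) for g in groups]
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of observations around their group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

With the four techniques, k = 4, which gives the first degree of freedom of the reported F(3, 47).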

5.2 NASA Task Load Index (NASA-TLX)

The NASA-TLX questionnaire was answered after performing the experimental task with each technique. A task took about two minutes to complete, and completing the questionnaires provided a break of about two minutes between successive tasks. The raw NASA-TLX data were used without subscale weighting, to further reduce the time participants had to spend in VR (the questionnaires were answered within the virtual environment). Using raw NASA-TLX data without weighting is common in similar literature [10, 33]. The questionnaire measures the participants' perceived mental demand, physical demand, temporal demand, performance, effort, and frustration. The overall workload of each technique is calculated as the mean of the six subscales. From highest to lowest perceived workload, the overall scores were: HandUI (M = 53.72), FingerUI (M = 46.13), ThumbGesture (M = 41.55), and Controller (M = 37.92).
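The unweighted (raw) overall workload described above is simply the mean of the six subscale ratings. The sketch below assumes the usual 0 to 100 rating range. The dictionary keys and the example ratings are hypothetical.

```python
from statistics import mean
from typing import Dict

# Hypothetical keys for the six NASA-TLX subscales.
TLX_SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def raw_tlx(ratings: Dict[str, float]) -> float:
    """Unweighted (raw) NASA-TLX: mean of the six subscale ratings (0-100)."""
    missing = [s for s in TLX_SUBSCALES if s not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    return mean(ratings[s] for s in TLX_SUBSCALES)
```

The per-technique values reported above would then be averages of these per-participant scores.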

5.3 System Usability Scale (SUS)

The SUS gives insight into the subjectively perceived usability of the different techniques. A higher value means better perceived usability, and according to Sauro [29], a value above 69 can be considered above average. Note that the SUS scores of this evaluation are only meaningful within this experiment and should not be compared with SUS scores of techniques in other research work. The following SUS scores were achieved: Controller 66.1; FingerUI 62.9; HandUI 61.4; ThumbGesture 76.8. The scores are depicted in Fig. 6.
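For readers unfamiliar with the SUS: each participant's score is obtained with Brooke's standard scoring rule, and the per-participant scores are then averaged per technique. Under this rule, odd (positively worded) items contribute the response minus 1, even (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5. The sketch below implements that rule. The example responses are made up.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Standard SUS score (0-100) from ten responses on a 1-5 scale."""
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs ten responses between 1 and 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5
```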

5.4 Subjective Ranking of Techniques

Participants were asked to rate each technique on a scale from 1 (bad) to 10 (good). The techniques received the following average ratings: Controller 8.5; FingerUI 6.42; HandUI 5.21; ThumbGesture 7.57. The scores are depicted in Fig. 6.

6 Discussion and Future Work

It was anticipated that the controller would outperform the hand-gesture-based techniques in task completion time. However, no statistically significant difference was found between Controller and ThumbGesture. Another noteworthy observation is that ThumbGesture received a better SUS score than Controller. This could be explained by the fact that all participants were VR laypeople with minimal experience in using a controller, which may have lowered the usability rating of Controller.

No significant differences were found in the overall perceived workload scores of the techniques. However, Fig. 5 shows that Controller required less effort and caused less frustration among participants.

The ratings also favored Controller, but ThumbGesture received similar results. Overall, ThumbGesture was the winner among the three proposed one-handed locomotion techniques, as it achieved the best SUS score, the highest user rating, and the fastest task completion time. This leads to the conclusion that a one-handed technique for continuous locomotion should use a simple gesture for moving, without an additional user interface.

Interestingly, some participants exploited the fact that turning the head also rotated the virtual character. Thus, only the gesture for moving forward was necessary to achieve the goal. A follow-up study could investigate whether gestures to change the direction of movement offer added value or if they are unnecessary.

It was also notable that three of the four participants who stopped the experiment did so during the Controller condition (the fourth stopped during HandUI). This could hint that the controller actually causes more motion sickness than gesture-based locomotion; however, more data are required to support this hypothesis.

7 Limitations

Little research has been performed on how bare hands can be used to move in virtual environments. Therefore, it is not yet clear which bare-handed technique performs well enough to be compared with widely researched and acknowledged freehand techniques such as WIP. Once suitable bare-handed locomotion techniques have been found, they should be compared with such established techniques. Only then can a well-founded insight be gained into whether hand gestures are a valid alternative.

The robustness of the bare-handed techniques is highly dependent on the quality of the hand tracking. Some participants had problems with the gestures, even though they were quite simple. This was particularly noticeable with ThumbGesture, where the virtual hand sometimes showed an index finger pointing outwards even though the physical hand was correctly shaped. Similar incorrect hand configurations occurred when the index finger pointed outwards, because the finger was occluded from the cameras. Furthermore, no motion sickness questionnaire was used; the experiment was designed without one to keep it as short as possible, so that participants would not have to spend much time in VR. However, since some participants dropped out due to motion sickness, an evaluation in this regard would have been useful.

Another limitation is the number of participants. Only a limited number of participants could be recruited due to the COVID-19 pandemic. More participants would be required in order to be able to draw stronger conclusions about the proposed techniques.

8 Conclusion

This work presents three one-handed techniques for continuous locomotion in VR. The techniques are compared with a standard controller implementation and with each other in terms of task completion time, usability, and perceived workload, and they were rated by the participants. Controller was fastest and received the highest rating from participants. In the other measures, however, there is no clear winner between the controller and the presented one-handed techniques; ThumbGesture even received a higher SUS score than Controller. Overall, ThumbGesture was the winner among the three one-handed techniques in this experiment: it received the highest SUS score and participant rating, had the lowest perceived workload, and was the fastest among the bare-handed techniques. This work aims towards using natural hand gestures for moving around in VR. The presented techniques show promising results overall, but further techniques should be evaluated to find suitable hand gestures for the locomotion task. This is especially important if physical controllers are to be replaced by hand tracking in the future or if controllers are not desired for an application.

References

Al Zayer, M., MacNeilage, P., Folmer, E.: Virtual locomotion: a survey. IEEE Trans. Visual. Comput. Graph. 26(6), 2315–2334 (2020). https://doi.org/10.1109/TVCG.2018.2887379. ISSN 1941–0506

Berger, L., Wolf, K.: Wim: Fast locomotion in virtual reality with spatial orientation gain & without motion sickness. In: Proceedings of the 17th International Conference on Mobile and Ubiquitous Multimedia, MUM 2018, pp. 19–24. Association for Computing Machinery, New York (2018). ISBN 9781450365949. https://doi.org/10.1145/3282894.3282932

Bishop, I., Abid, M.R.: Survey of locomotion systems in virtual reality. In: Proceedings of the 2nd International Conference on Information System and Data Mining - ICISDM 2018, pp. 151–154. ACM Press, Lakeland (2018). ISBN 978-1-4503-6354-9. https://doi.org/10.1145/3206098.3206108

Bozgeyikli, E., Raij, A., Katkoori, S., Dubey, R.: Locomotion in virtual reality for individuals with autism spectrum disorder. In: Proceedings of the 2016 Symposium on Spatial User Interaction, SUI 2016, pp. 33–42. Association for Computing Machinery, New York (2016a). ISBN 9781450340687. https://doi.org/10.1145/2983310.2985763

Bozgeyikli, E., Raij, A., Katkoori, S., Dubey, R.: Point & teleport locomotion technique for virtual reality. In: Proceedings of the 2016 Annual Symposium on Computer-Human Interaction in Play, CHI PLAY 2016, pp. 205–216. Association for Computing Machinery, New York (2016b). ISBN 9781450344562. https://doi.org/10.1145/2967934.2968105

Brooke, J.: SUS: a quick and dirty’ usability. Usability Eval. Ind. 189 (1996)

Brooke, J.: Sus: a retrospective. J. Usability Stud. 8(2), 29–40 (2013)

Bruno, L., Pereira, J., Jorge, J.: A new approach to walking in place. In: Kotzé, P., Marsden, G., Lindgaard, G., Wesson, J., Winckler, M. (eds.) INTERACT 2013. LNCS, vol. 8119, pp. 370–387. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-40477-1_23

Buttussi, F., Chittaro, L.: Locomotion in place in virtual reality: a comparative evaluation of joystick, teleport, and leaning. IEEE Trans. Visual. Comput. Graph. 27(1), 125–136 (2021). https://doi.org/10.1109/TVCG.2019.2928304. ISSN 1941–0506

Caggianese, G., Capece, N., Erra, U., Gallo, L., Rinaldi, M.: Freehand-steering locomotion techniques for immersive virtual environments: a comparative evaluation. Int. J. Human-Comput. Inter. 36(18), 1734–1755 (2020). https://doi.org/10.1080/10447318.2020.1785151

Caggianese, G., Gallo, L., Neroni, P.: Design and preliminary evaluation of free-hand travel techniques for wearable immersive virtual reality systems with egocentric sensing. In: De Paolis, L.T., Mongelli, A. (eds.) AVR 2015. LNCS, vol. 9254, pp. 399–408. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-22888-4_29

Cardoso, J.C.S.: Comparison of gesture, gamepad, and gaze-based locomotion for VR worlds. In: Proceedings of the 22nd ACM Conference on Virtual Reality Software and Technology, VRST 2016, pp. 319–320. Association for Computing Machinery, New York (2016). ISBN 9781450344913. https://doi.org/10.1145/2993369.2996327

Cardoso, J.C.S., Perrotta, A.: A survey of real locomotion techniques for immersive virtual reality applications on head-mounted displays. Comput. Graph. 85, 55–73 (2019). https://doi.org/10.1016/j.cag.2019.09.005. ISSN 0097–8493

Clifton, J., Palmisano, S.: Comfortable locomotion in VR: teleportation is not a complete solution. In: 25th ACM Symposium on Virtual Reality Software and Technology, VRST 2019. Association for Computing Machinery, New York (2019). ISBN 9781450370011. https://doi.org/10.1145/3359996.3364722

Ferracani, A., Pezzatini, D., Bianchini, J., Biscini, G., Del Bimbo, A.: Locomotion by natural gestures for immersive virtual environments. In: Proceedings of the 1st International Workshop on Multimedia Alternate Realities, pp. 21–24. ACM, Amsterdam The Netherlands (2016). ISBN 978-1-4503-4521-7. https://doi.org/10.1145/2983298.2983307

Grechkin, T., Thomas, J., Azmandian, M., Bolas, M., Suma, E.: Revisiting detection thresholds for redirected walking: combining translation and curvature gains. In: Proceedings of the ACM Symposium on Applied Perception, SAP 2016, pp. 113–120. Association for Computing Machinery, New York (2016), ISBN 9781450343831. https://doi.org/10.1145/2931002.2931018

Han, J., Moere, A.V., Simeone, A.L.: Foldable spaces: an overt redirection approach for natural walking in virtual reality. In: 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pp. 167–175 (2022). https://doi.org/10.1109/VR51125.2022.00035

Hart, S.G.: Nasa-task load index (NASA-TLX); 20 years later. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 50(9), 904–908 (2006). https://doi.org/10.1177/154193120605000909

Hart, S.G., Staveland, L.E.: Development of NASA-TLX (task load index): results of empirical and theoretical research. In: Hancock, P.A., Meshkati, N. (eds.) Human Mental Workload, Advances in Psychology, North-Holland, vol. 52, pp. 139–183 (1988). https://doi.org/10.1016/S0166-4115(08)62386-9

Huang, R., Harris-adamson, C., Odell, D., Rempel, D.: Design of finger gestures for locomotion in virtual reality. Virtual Reality Intell. Hardware 1(1), 1–9 (2019). https://doi.org/10.3724/SP.J.2096-5796.2018.0007. ISSN 2096–5796

Kang, H.J., Shin, J.h., Ponto, K.: A comparative analysis of 3D user interaction: how to move virtual objects in mixed reality. In: 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pp. 275–284 (2020). ISSN 2642-5254. https://doi.org/10.1109/VR46266.2020.00047

Kim, J.-S., Gračanin, D., Matković, K., Quek, F.: Finger walking in place (FWIP): a traveling technique in virtual environments. In: Butz, A., Fisher, B., Krüger, A., Olivier, P., Christie, M. (eds.) SG 2008. LNCS, vol. 5166, pp. 58–69. Springer, Heidelberg (2008). https://doi.org/10.1007/978-3-540-85412-8_6

Kim, J.-S., Gračanin, D., Matković, K., Quek, F.: The effects of finger-walking in place (FWIP) for spatial knowledge acquisition in virtual environments. In: Taylor, R., Boulanger, P., Krüger, A., Olivier, P. (eds.) SG 2010. LNCS, vol. 6133, pp. 56–67. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-13544-6_6

Langbehn, E., Eichler, T., Ghose, S., Bruder, G., Steinicke, F.: Evaluation of an omnidirectional walking-in-place user interface with virtual locomotion speed scaled by forward leaning angle. In: GI AR/VR Workshop, pp. 149–160 (2015)

Langbehn, E., Lubos, P., Steinicke, F.: Evaluation of locomotion techniques for room-scale VR: joystick, teleportation, and redirected walking. In: Proceedings of the Virtual Reality International Conference - Laval Virtual, VRIC 2018. Association for Computing Machinery, New York (2018). ISBN 9781450353816. https://doi.org/10.1145/3234253.3234291

Marie Prinz, L., Mathew, T., Klüber, S., Weyers, B.: An overview and analysis of publications on locomotion taxonomies. In: 2021 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), pp. 385–388 (2021). https://doi.org/10.1109/VRW52623.2021.00080

Nilsson, N.C., et al.: 15 Years of research on redirected walking in immersive virtual environments. IEEE Comput. Graph. Appl. 38(2), 44–56 (2018). https://doi.org/10.1109/MCG.2018.111125628. ISSN 1558-1756

Nilsson, N.C., Serafin, S., Steinicke, F., Nordahl, R.: Natural walking in virtual reality: a review. Comput. Entertainment 16(2), 1–22 (2018). https://doi.org/10.1145/3180658

Sauro, J.: A practical guide to the system usability scale: background, benchmarks & best practices. Measuring Usability LLC (2011)

Schäfer, A., Reis, G., Stricker, D.: The gesture authoring space: authoring customised hand gestures for grasping virtual objects in immersive virtual environments. In: Proceedings of Mensch und Computer 2022. Association for Computing Machinery, New York (2022). ISBN 978-1-4503-9690-5. https://doi.org/10.1145/3543758.3543766

Schuemie, M.J., van der Straaten, P., Krijn, M., van der Mast, C.A.: Research on presence in virtual reality: a survey. CyberPsychology Behav. 4(2), 183–201 (2001). https://doi.org/10.1089/109493101300117884. ISSN 1094-9313

Schäfer, A., Reis, G., Stricker, D.: Controlling teleportation-based locomotion in virtual reality with hand gestures: a comparative evaluation of two-handed and one-handed techniques. Electronics 10(6), 715 (2021). https://doi.org/10.3390/electronics10060715. ISSN 2079-9292

Schäfer, A., Reis, G., Stricker, D.: Comparing controller with the hand gestures pinch and grab for picking up and placing virtual objects. In: 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), pp. 738–739 (2022). https://doi.org/10.1109/VRW55335.2022.00220

Templeman, J.N., Denbrook, P.S., Sibert, L.E.: Virtual locomotion: walking in place through virtual environments. Presence 8(6), 598–617 (1999). https://doi.org/10.1162/105474699566512

Zhang, F., Chu, S., Pan, R., Ji, N., Xi, L.: Double hand-gesture interaction for walk-through in VR environment. In: 2017 IEEE/ACIS 16th International Conference on Computer and Information Science (ICIS), pp. 539–544 (2017). https://doi.org/10.1109/ICIS.2017.7960051

Zielasko, D., Horn, S., Freitag, S., Weyers, B., Kuhlen, T.W.: Evaluation of hands-free HMD-based navigation techniques for immersive data analysis. In: 2016 IEEE Symposium on 3D User Interfaces (3DUI), pp. 113–119 (2016). https://doi.org/10.1109/3DUI.2016.7460040

Zielasko, D., Law, Y.C., Weyers, B.: Take a look around - the impact of decoupling gaze and travel-direction in seated and ground-based virtual reality utilizing torso-directed steering. In: 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pp. 398–406 (2020). https://doi.org/10.1109/VR46266.2020.00060

Acknowledgement

Part of this work was funded by the Bundesministerium für Bildung und Forschung (BMBF) in the context of ODPfalz under Grant 03IHS075B. This work was also supported by the EU Research and Innovation programme Horizon 2020 (project INFINITY) under the grant agreement ID: 883293.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this paper

Cite this paper

Schäfer, A., Reis, G., Stricker, D. (2022). Controlling Continuous Locomotion in Virtual Reality with Bare Hands Using Hand Gestures. In: Zachmann, G., Alcañiz Raya, M., Bourdot, P., Marchal, M., Stefanucci, J., Yang, X. (eds) Virtual Reality and Mixed Reality. EuroXR 2022. Lecture Notes in Computer Science, vol 13484. Springer, Cham. https://doi.org/10.1007/978-3-031-16234-3_11

DOI: https://doi.org/10.1007/978-3-031-16234-3_11

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-16233-6

Online ISBN: 978-3-031-16234-3

eBook Packages: Computer Science, Computer Science (R0)