Abstract

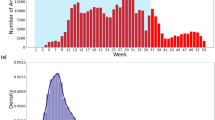

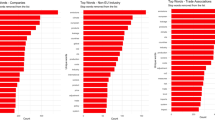

In this paper, we develop an economic policy uncertainty (EPU) index for the USA and Canada using natural language processing (NLP) methods. Our EPU-NLP index is based on an application of several algorithms, including the rapid automatic keyword extraction (RAKE) algorithm, a combination of the RoBERTa and Sentence-BERT algorithms, a PyLucene search engine, and the GrapeNLP local grammar engine. For comparison purposes, we also develop an index based on a strictly Boolean method. We find that the EPU-NLP index captures COVID-19-related uncertainty better than the Boolean index. Using a structural VAR (SVAR) approach, we find that, for both Canada and the USA, a one-standard-deviation (SD) economic policy uncertainty shock measured with the EPU-NLP index leads to larger declines in key macroeconomic variables than a one-SD shock measured with the EPU-Boolean index. In line with the impact of COVID-19, the SVAR model shows an abrupt contraction in economic variables in both Canada and the USA. Moreover, an uncertainty shock measured with the EPU-NLP index causes a much larger contraction for the period including the COVID-19 pandemic than for the pre-COVID-19 period.

Notes

1. RoBERTa is the “robustly optimized” version of BERT (Bidirectional Encoder Representations from Transformers), the seminal neural network-based model of [9]. We describe these algorithms below.

2. For countries whose native language is not English, the BBD-EPU appears to rely on a more cursory verification process and on an entirely Boolean selection of articles (see the online Appendix to [3]).

3. For Canada, we used the Calgary Herald, the Financial Post, the Montreal Gazette, the National Post, the Ottawa Citizen, the Toronto Star, and the Vancouver Sun. For the USA, we used USA Today, the Los Angeles Times, The Wall Street Journal, The Dallas Morning News, the Miami Herald, and The New York Times.

4. To be more precise, of the tokens selected for masking, 80% are replaced with the token [MASK], 10% are replaced with a randomly selected token, and 10% are left unchanged (that is, the true word is kept).

5. The cosine-similarity measure is given by \(\sigma \left (A,B\right )=\frac {A\cdot B}{\Vert A\Vert _{2}\Vert B\Vert _{2}}\), where \(A\) and \(B\) are \(n\)-dimensional vectors and \(\Vert \cdot \Vert _{2}\) is the L2 norm. The measure \(\sigma (A,B)\) takes the value 1 when the two vectors point in the same direction (in particular, when they are identical) and the value −1 when they point in opposite directions. Compared with cross-entropy or mean-squared error (which uses Euclidean distance as its measure of closeness), this measure has the advantage of depending only on the direction of the vectors and not on their magnitudes; hence, it is invariant to positive rescaling of either vector.

6. We use the RoBERTa model to map the tokens in a sentence to contextual word embeddings. The next layer in our model averages (“mean-pools”) all the contextualized word embeddings obtained from RoBERTa. In other words, each sentence is passed first through the word_embedding_model (RoBERTa) and then through the pooling_model, which yields a fixed-size vector. SBERT requires vectors of fixed length.

7. Unitex/GramLab is an open-source, cross-platform, multilingual, lexicon- and grammar-based corpus processing tool. It can be downloaded from https://unitexgramlab.org/.

8. We used the GPU accelerator offered by Google Colab Pro for data cleaning, semantic search, and RAKE (Python); the Nvidia Tesla P100 GPU offered by the Kaggle platform for the grammar; and an Intel Xeon i9-7980Xe 36-core server with an Nvidia Titan V GPU and 126GB DDR4 RAM for fine-tuning the RoBERTa model.

9. For the USA, we use monthly industrial production as a proxy for real GDP, since real GDP is not available at a monthly frequency.

10. Since our primary purpose is not to compare the EPU-NLP\(_{\text{Canada}}\) and EPU-NLP\(_{\text{USA}}\) indices, using different variables is not consequential.
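The masking scheme described in Note 4 can be illustrated with a minimal sketch. It is not the authors' training code: the function name `mask_tokens`, the toy vocabulary, and the 15% selection rate (`select_prob`, the rate used in the original BERT paper) are assumptions introduced here for illustration only.

```python
import random

MASK_TOKEN = "[MASK]"


def mask_tokens(tokens, vocab, select_prob=0.15, seed=None):
    """Corrupt a token list with the 80/10/10 masking rule.

    Returns (corrupted, labels). labels[i] is the original token at each
    position selected for masking and None elsewhere, so a model would
    only be scored on the selected positions.
    select_prob is an assumed selection rate, not a value from the chapter.
    """
    rng = random.Random(seed)
    corrupted, labels = [], []
    for token in tokens:
        if rng.random() >= select_prob:
            # Position not selected: keep the token, no prediction target.
            corrupted.append(token)
            labels.append(None)
            continue
        labels.append(token)
        draw = rng.random()
        if draw < 0.8:
            corrupted.append(MASK_TOKEN)          # 80%: replace with [MASK]
        elif draw < 0.9:
            corrupted.append(rng.choice(vocab))   # 10%: random token
        else:
            corrupted.append(token)               # 10%: leave unchanged
    return corrupted, labels


# Hypothetical toy input, chosen only to show the call.
sentence = "the central bank raised rates amid policy uncertainty".split()
toy_vocab = ["tax", "trade", "budget", "tariff"]
corrupted, labels = mask_tokens(sentence, toy_vocab, seed=0)
```

Leaving some selected tokens unchanged or swapping in random ones means the model cannot rely on the presence of [MASK] to know which positions it will be asked to predict.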
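The scale-invariance property stated in Note 5 can be checked numerically. The sketch below uses only the Python standard library; the function name `cosine_similarity` and the toy vectors are illustrative assumptions, not part of the chapter.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Hypothetical toy vectors.
a = [1.0, 2.0, 3.0]
same_direction = [10.0, 20.0, 30.0]    # a rescaled by 10
opposite = [-1.0, -2.0, -3.0]          # a rescaled by -1

sim_scaled = cosine_similarity(a, same_direction)   # close to 1
sim_opposite = cosine_similarity(a, opposite)       # close to -1


def euclidean_distance(u, v):
    """Euclidean distance, shown for contrast with cosine similarity."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(u, v)))


# Unlike cosine similarity, the distance changes when a vector is rescaled.
dist_scaled = euclidean_distance(a, same_direction)
```

Rescaling a vector by a positive constant leaves the similarity unchanged, whereas the Euclidean distance between the same pair grows with the scale factor.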
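The mean-pooling step described in Note 6 can be sketched as follows. The embeddings below are made-up two-dimensional numbers rather than RoBERTa outputs, and the `attention_mask` argument (used to skip padding positions) is an assumption added for illustration; the chapter does not discuss padding.

```python
def mean_pool(token_embeddings, attention_mask):
    """Average the token vectors whose mask entry is 1.

    token_embeddings: list of equal-length vectors, one per token position.
    attention_mask:   list of 0/1 flags; 0 marks padding to be ignored.
    Returns one vector whose length equals the embedding dimension,
    regardless of how many tokens the sentence has.
    """
    kept = [vec for vec, keep in zip(token_embeddings, attention_mask) if keep]
    if not kept:
        raise ValueError("at least one token must be unmasked")
    dim = len(kept[0])
    return [sum(vec[i] for vec in kept) / len(kept) for i in range(dim)]


# Hypothetical toy "contextual embeddings" for two sentences of different length.
short_sentence = [[1.0, 2.0], [3.0, 4.0]]
long_sentence = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [0.0, 0.0]]

short_vec = mean_pool(short_sentence, [1, 1])
long_vec = mean_pool(long_sentence, [1, 1, 1, 0])  # last position is padding

# Both sentences now map to vectors of the same fixed length.
same_length = len(short_vec) == len(long_vec)
```

Because the pooled vectors share one dimension, any two sentences can be compared directly, for example with the cosine-similarity measure of Note 5.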

References

Altig, D., Baker, S., Barrero, J.M., Bloom, N., Bunn, P., Chen, S., Davis, S.J., Leather, J., Meyer, B., Mihaylov, E., et al.: Economic uncertainty before and during the COVID-19 pandemic. J. Public Econ. 191, 104274 (2020)

Altug, S., Demers, F.S., Demers, M.: The investment tax credit and irreversible investment. J. Macroecon. 31(4), 509–522 (2009)

Baker, S.R., Bloom, N., Davis, S.J.: Measuring economic policy uncertainty. Q. J. Econ. 131(4), 1593–1636 (2016)

Baker, S.R., Bloom, N., Davis, S.J., Terry, S.J.: COVID-induced economic uncertainty. Technical report, National Bureau of Economic Research (2020)

Banbura, M., Giannone, D., Reichlin, L.: Large Bayesian VARs. Technical report, European Central Bank (2008)

Bernanke, B.S.: Irreversibility, uncertainty, and cyclical investment. Q. J. Econ. 98(1), 85–106 (1983)

Caballero, R.J., Engel, E.M.: Explaining investment dynamics in US manufacturing: a generalized (S, s) approach. Econometrica 67(4), 783–826 (1999)

Demers, M.: Investment under uncertainty, irreversibility and the arrival of information over time. Rev. Econ. Stud. 58(2), 333–350 (1991)

Devlin, J., Chang, M.-W., Lee, K., Toutanova, K.: BERT: Pre-training of deep bidirectional transformers for language understanding. Preprint (2018). arXiv:1810.04805

Dixit, A., Pindyck, R.: Investment Under Uncertainty. Princeton University Press, Princeton, NJ (1994)

Gentzkow, M., Kelly, B., Taddy, M.: Text as data. J. Econ. Lit. 57(3), 535–574 (2019)

Gross, M.: The construction of local grammars. In: Roche, E., Schabes, Y. (eds.) Finite-State Language Processing. MIT Press, Cambridge, MA (1997)

Hamilton, J.D.: Why you should never use the Hodrick-Prescott filter. Rev. Econ. Stat. 100(5), 831–843 (2018)

Jurado, K., Ludvigson, S.C., Ng, S.: Measuring uncertainty. Am. Econ. Rev. 105(3), 1177–1216 (2015)

Liu, Y., Ott, M., Goyal, N., Du, J., Joshi, M., Chen, D., Levy, O., Lewis, M., Zettlemoyer, L., Stoyanov, V.: RoBERTa: A robustly optimized BERT pretraining approach. Preprint (2019). arXiv:1907.11692

Moran, K., Stevanović, D., Touré, A.K.: Macroeconomic Uncertainty and the Covid-19 Pandemic: Measure and Impacts on the Canadian Economy. CIRANO (2020)

Paumier, S., Nakamura, T., Voyatzi, S.: Unitex, A Corpus Processing System with Multi-Lingual Linguistic Resources. In: eLEX2009, vol. 173 (2009)

Reimers, N., Gurevych, I.: Sentence-BERT: Sentence embeddings using Siamese BERT-networks. Preprint (2019). arXiv:1908.10084

Rose, S., Engel, D., Cramer, N., Cowley, W.: Automatic keyword extraction from individual documents. Text Mining Appl. Theory 1, 1–20 (2010)

Sastre, J.: Efficient Finite-State Algorithms of Application of Local Grammars. Ph.D. thesis (2011)

Sastre, J.: GrapeNLP grammar engine in a Kaggle notebook (2020). Available at https://www.kaggle.com/javiersastre/grapenlp-grammar-engine-in-a-kaggle-notebook

Sastre, J., Vahid, A.H., McDonagh, C., Walsh, P.: A text mining approach to discovering COVID-19 relevant factors. In: 2020 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), pp. 486–490. IEEE (2020)

Acknowledgements

This research is partially funded by a Carleton University FPA Research Engagement Grant. The authors sincerely thank three anonymous referees for comments, and Javier Sastre, Asma Djaidri, and Raheel Ahmed for their support.

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Qureshi, S., Chu, B., Demers, F.S., Demers, M. (2023). Using Natural Language Processing to Measure COVID-19-Induced Economic Policy Uncertainty for Canada and the USA. In: Valenzuela, O., Rojas, F., Herrera, L.J., Pomares, H., Rojas, I. (eds) Theory and Applications of Time Series Analysis and Forecasting. ITISE 2021. Contributions to Statistics. Springer, Cham. https://doi.org/10.1007/978-3-031-14197-3_8

DOI: https://doi.org/10.1007/978-3-031-14197-3_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-14196-6

Online ISBN: 978-3-031-14197-3

eBook Packages: Mathematics and Statistics (R0)