Abstract

Reinforcement learning has been shown to be an effective strategy for automatically training policies for challenging control problems. Focusing on non-cooperative multi-agent systems, we propose a novel reinforcement learning framework for training joint policies that form a Nash equilibrium. In our approach, rather than providing low-level reward functions, the user provides high-level specifications that encode the objective of each agent. Then, guided by the structure of the specifications, our algorithm searches over policies to identify one that provably forms an \(\epsilon \)-Nash equilibrium (with high probability). Importantly, it prioritizes policies in a way that maximizes social welfare across all agents. Our empirical evaluation demonstrates that our algorithm computes equilibrium policies with high social welfare, whereas state-of-the-art baselines either fail to compute Nash equilibria or compute ones with comparatively lower social welfare.

The extended version of this paper can be found at [3].


1 Introduction

Reinforcement learning (RL) is an effective strategy for automatically synthesizing controllers for challenging control problems. As a consequence, there has been interest in applying RL to multi-agent systems. For example, RL has been used to coordinate agents in cooperative systems to accomplish a shared goal [22]. Our focus is on non-cooperative systems, where the agents are trying to achieve their own goals [17]; for such systems, the goal is typically to learn a policy for each agent such that the joint strategy forms a Nash equilibrium.

A key challenge facing existing approaches is how tasks are specified. First, they typically require that the task for each agent is specified as a reward function. However, reward functions tend to be very low-level, making them difficult to manually design; furthermore, they often obfuscate high-level structure in the problem known to make RL more efficient in the single-agent [14] and cooperative [22] settings. Second, they typically focus on computing an arbitrary Nash equilibrium. However, in many settings, the user is a social planner trying to optimize the overall social welfare of the system, and most existing approaches are not designed to optimize social welfare.

We propose a novel multi-agent RL framework for learning policies from high-level specifications (one specification per agent) such that the resulting joint policy (i) has high social welfare, and (ii) is an \(\epsilon \)-Nash equilibrium (for a given \(\epsilon \)). We formulate this problem as a constrained optimization problem where the goal is to maximize social welfare under the constraint that the joint policy is an \(\epsilon \)-Nash equilibrium.

Our algorithm for solving this optimization problem uses an enumerative search strategy. First, it enumerates candidate policies in decreasing order of social welfare. To ensure a tractable search space, it restricts to policies that conform to the structure of the user-provided specification. Then, for each candidate policy, it uses an explore-then-exploit self-play RL algorithm [4] to compute punishment strategies that are triggered when some agent deviates from the original joint policy. It also computes the maximum benefit each agent derives from deviating, which can be used to determine whether the joint policy augmented with punishment strategies forms an \(\epsilon \)-Nash equilibrium; if so, it returns the joint policy.

Intuitively, the enumerative search tries to optimize social welfare, whereas the self-play RL algorithm checks whether the \(\epsilon \)-Nash equilibrium constraint holds. Since this RL algorithm comes with PAC (Probably Approximately Correct) guarantees, our algorithm is guaranteed to return an \(\epsilon \)-Nash equilibrium with high probability. In summary, our contributions are as follows.

- We study the problem of maximizing social welfare under the constraint that the policies form an \(\epsilon \)-Nash equilibrium. To the best of our knowledge, this problem has not been studied before in the context of learning (beyond single-step games).

- We provide an enumerate-and-verify framework for solving this problem.

- We propose a verification algorithm with a probabilistic soundness guarantee in the RL setting of probabilistic systems with unknown transition probabilities.

Motivating Example. Consider the road intersection scenario in Fig. 1. There are four cars; three are traveling east to west and one is traveling north to south. At any stage, each car can either move forward one step or stay in place. Suppose each car’s specification is as follows:

- Black car: Cross the intersection before the green and orange cars.

- Blue car: Cross the intersection before the black car and stay a car length ahead of the green and orange cars.

- Green car: Cross the intersection before the black car.

- Orange car: Cross the intersection before the black car.

We also require that the cars do not crash into one another.

Clearly, not all agents can achieve their goals. The next highest social welfare is for three agents to achieve their goals. In particular, one possibility is that all cars except the black car achieve their goals. However, the corresponding joint policy requires that the black car does not move, which is not a Nash equilibrium—there is always a gap between the blue car and the other two cars behind, so the black car can deviate by inserting itself into the gap to achieve its own goal. Our algorithm uses self-play RL to optimize the policy for the black car, and finds that the other agents cannot prevent the black car from improving its outcome in this way. Thus, it correctly rejects this joint policy. Eventually, our algorithm computes a Nash equilibrium in which the black and blue cars achieve their goals.

1.1 Related Work

Multi-agent RL. There has been work on learning Nash equilibria in the multi-agent RL setting [1, 12, 13, 21, 23, 24]; however, these approaches focus on learning an arbitrary equilibrium and do not optimize social welfare. There has also been work on studying weaker notions of equilibria in this context [9, 27], as well as work on learning Nash equilibria in two-agent zero-sum games [4, 20, 26].

RL from High-Level Specifications. There has been recent work on using specifications based on temporal logic for specifying RL tasks in the single-agent setting; a comprehensive survey may be found in [2]. There has also been recent work on using temporal logic specifications for multi-agent RL [10, 22], but these approaches focus on cooperative scenarios in which there is a common objective that all agents are trying to achieve.

Equilibrium in Markov Games. There has been work on computing Nash equilibria in Markov games [17, 25], including work on computing \(\epsilon \)-Nash equilibria from logical specifications [6, 7], as well as recent work focusing on computing welfare-optimizing Nash equilibria from temporal specifications [18, 19]; however, all these works focus on the planning setting where the transition probabilities are known. Checking for the existence of a Nash equilibrium, even in deterministic games, has been shown to be NP-complete for reachability objectives [5].

Social Welfare. There has been work on computing welfare maximizing Nash equilibria for bimatrix games, which are two-player one-step Markov games with known transitions [8, 11]; in contrast, we study this problem in the context of general Markov games.

2 Preliminaries

2.1 Markov Game

We consider an n-agent Markov game \(\mathcal {M}= (\mathcal {S}, \mathcal {A}, P, H, s_0)\) with a finite set of states \(\mathcal {S}\), actions \(\mathcal {A}= A_1\times \cdots \times A_n\) where \(A_i\) is a finite set of actions available to agent i, transition probabilities \(P(s'\mid s,a)\) for \(s,s'\in \mathcal {S}\) and \(a\in \mathcal {A}\), finite horizon H, and initial state \(s_0\) [20]. A trajectory \(\zeta \in \mathcal {Z}=(\mathcal {S}\times \mathcal {A})^*\times \mathcal {S}\) is a finite sequence \(\zeta =s_0\xrightarrow {a_0}s_1\xrightarrow {a_1}\cdots \xrightarrow {a_{t-1}}s_t\) where \(s_k \in \mathcal {S}\), \({a_k} \in \mathcal {A}\); we use \(|\zeta | = t\) to denote the length of the trajectory \(\zeta \) and \(a_k^i\in A_i\) to denote the action of agent i in \(a_k\).

For any \(i\in [n]\), let \(\mathcal {D}(A_i)\) denote the set of distributions over \(A_i\), i.e., \(\mathcal {D}(A_i) = \{\varDelta :A_i\rightarrow [0,1]\mid \sum _{a_i\in A_i}\varDelta (a_i) = 1\}\). A policy for agent i is a function \(\pi _i:\mathcal {Z}\rightarrow \mathcal {D}(A_i)\) mapping trajectories to distributions over actions. A policy \(\pi _i\) is deterministic if for every \(\zeta \in \mathcal {Z}\), there is an action \(a_i\in A_i\) such that \(\pi _i(\zeta )(a_i) = 1\); in this case, we also use \(\pi _i(\zeta )\) to denote the action \(a_i\). A joint policy \(\pi : \mathcal {Z}\rightarrow \mathcal {D}(\mathcal {A})\) maps finite trajectories to distributions over joint actions. We use \((\pi _1,\dots , \pi _n)\) to denote the joint policy in which agent i chooses its action in accordance with \(\pi _i\). We denote by \(\mathcal {D}_{\pi }\) the distribution over H-length trajectories in \(\mathcal {M}\) induced by \(\pi \).

We consider the reinforcement learning setting in which we do not know the probabilities P but instead only have access to a simulator of \(\mathcal {M}\). Typically, we can only sample trajectories of \(\mathcal {M}\) starting at \(s_0\). Some parts of our algorithm are based on an assumption which allows us to obtain sample trajectories starting at any state that has been observed before. For example, if taking action \(a_0\) in \(s_0\) leads to a state \(s_1\), we assume we can obtain future samples starting at \(s_1\).

Assumption 1

We can obtain samples from \(P(\cdot \mid s,a)\) for any previously observed state s and any action a.

2.2 Specification Language

We consider the specification language Spectrl to express agent specifications. We choose Spectrl since there is existing work on leveraging the structure of Spectrl specifications for single-agent RL [16]. However, we believe our algorithm can be adapted to other specification languages as well.

Formally, a Spectrl specification is defined over a set of atomic predicates \({\mathcal {P}}_0\), where every \(p \in {\mathcal {P}}_0\) is associated with a function \({\llbracket p \rrbracket }:\mathcal {S}\rightarrow \mathbb {B}=\{{\texttt {true}}, {\texttt {false}}\}\); we say a state s satisfies p (denoted \(s\models p\)) if and only if \({\llbracket p \rrbracket }(s)={\texttt {true}}\). The set of predicates \(\mathcal {P}\) consists of conjunctions and disjunctions of atomic predicates. The syntax of a predicate \(b\in \mathcal {P}\) is given by the grammar \( b ~::=~ p \mid (b_1 \wedge b_2) \mid (b_1 \vee b_2), \) where \(p\in \mathcal {P}_0\). Similar to atomic predicates, each predicate \(b\in \mathcal {P}\) corresponds to a function \({\llbracket b \rrbracket }:\mathcal {S}\rightarrow \mathbb {B}\) defined naturally over Boolean logic. Finally, the syntax of Spectrl is given by \( \phi ~::=~ {\texttt {achieve}}\ b \mid \phi \ {\texttt {ensuring}}\ b \mid \phi _1; \phi _2 \mid \phi _1\ {\texttt {or}}\ \phi _2, \)

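where \(b\in \mathcal {P}\). Each specification \(\phi \) corresponds to a function \({\llbracket \phi \rrbracket }:\mathcal {Z}\rightarrow \mathbb {B}\), and we say \(\zeta \in \mathcal {Z}\) satisfies \(\phi \) (denoted \(\zeta \models \phi \)) if and only if \({\llbracket \phi \rrbracket }(\zeta )={\texttt {true}}\). Letting \(\zeta \) be a finite trajectory of length t, this function is defined by \( \begin{aligned} {\llbracket {\texttt {achieve}}\ b \rrbracket }(\zeta )&= \exists \ i\le t,\ s_i\models b \\ {\llbracket \phi \ {\texttt {ensuring}}\ b \rrbracket }(\zeta )&= {\llbracket \phi \rrbracket }(\zeta ) \wedge (\forall \ i\le t,\ s_i\models b) \\ {\llbracket \phi _1; \phi _2 \rrbracket }(\zeta )&= \exists \ i<t,\ {\llbracket \phi _1 \rrbracket }(\zeta _{0:i}) \wedge {\llbracket \phi _2 \rrbracket }(\zeta _{i+1:t}) \\ {\llbracket \phi _1\ {\texttt {or}}\ \phi _2 \rrbracket }(\zeta )&= {\llbracket \phi _1 \rrbracket }(\zeta ) \vee {\llbracket \phi _2 \rrbracket }(\zeta ). \end{aligned} \)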

Intuitively, the first clause means that the trajectory should eventually reach a state that satisfies the predicate b. The second clause says that the trajectory should satisfy specification \(\phi \) while always staying in states that satisfy b. The third clause says that the trajectory should sequentially satisfy \(\phi _1\) followed by \(\phi _2\). The fourth clause means that the trajectory should satisfy either \(\phi _1\) or \(\phi _2\).
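The four clauses can be read as a recursive evaluator over the state sequence of a trajectory. The following Python sketch illustrates this reading; it is not the Spectrl implementation. The class names `Achieve`, `Ensuring`, `Seq`, and `Choice` are hypothetical, predicates are modeled as Python callables on states, and we assume that sequencing splits the trajectory so that the second part starts one step after the first part ends.

```python
from dataclasses import dataclass
from typing import Any, Callable, Sequence

Pred = Callable[[Any], bool]  # a predicate b, evaluated on a single state


@dataclass(frozen=True)
class Achieve:      # eventually reach a state satisfying b
    b: Pred


@dataclass(frozen=True)
class Ensuring:     # satisfy phi while every state satisfies b
    phi: Any
    b: Pred


@dataclass(frozen=True)
class Seq:          # satisfy phi1, then phi2 on the remainder
    phi1: Any
    phi2: Any


@dataclass(frozen=True)
class Choice:       # satisfy phi1 or phi2
    phi1: Any
    phi2: Any


def satisfies(states: Sequence[Any], phi: Any) -> bool:
    """Evaluate a specification on the state sequence of a finite trajectory."""
    if isinstance(phi, Achieve):
        return any(phi.b(s) for s in states)
    if isinstance(phi, Ensuring):
        return satisfies(states, phi.phi) and all(phi.b(s) for s in states)
    if isinstance(phi, Seq):
        # Assumed split convention: phi1 on states[0..i], phi2 on states[i+1..t].
        return any(
            satisfies(states[: i + 1], phi.phi1) and satisfies(states[i + 1:], phi.phi2)
            for i in range(len(states) - 1)
        )
    if isinstance(phi, Choice):
        return satisfies(states, phi.phi1) or satisfies(states, phi.phi2)
    raise TypeError(f"unknown specification: {phi!r}")


# Toy specification on integer states: reach 2, then reach 5, always staying below 10.
spec = Ensuring(Seq(Achieve(lambda s: s == 2), Achieve(lambda s: s == 5)), lambda s: s < 10)
ok = satisfies([0, 1, 2, 3, 5], spec)          # subgoals visited in order, always safe
wrong_order = satisfies([0, 5, 2], spec)       # 5 is reached before 2
unsafe = satisfies([0, 2, 5, 12], spec)        # the last state violates s < 10
```

Actions are omitted because the semantics only inspects states.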

2.3 Abstract Graphs

Spectrl specifications can be represented by abstract graphs which are DAG-like structures in which each vertex represents a set of states (called subgoal regions) and each edge represents a set of concrete trajectories that can be used to transition from the source vertex to the target vertex without violating safety constraints.

Definition 1

An abstract graph \(\mathcal {G}= (U,E,u_0,F,\beta ,\mathcal {Z}_{\text {safe}})\) is a directed acyclic graph (DAG) with vertices U, (directed) edges \(E\subseteq U\times U\), initial vertex \(u_0\in U\), final vertices \(F\subseteq U\), subgoal region map \(\beta :U\rightarrow 2^{\mathcal {S}}\) such that for each \(u\in U\), \(\beta (u)\) is a subgoal region, and safe trajectories \( \mathcal {Z}_\text {safe}= \bigcup _{e \in E}\mathcal {Z}_\text {safe}^e\cup \bigcup _{f \in F}\mathcal {Z}_\text {safe}^f, \) where \(\mathcal {Z}_\text {safe}^e\subseteq \mathcal {Z}\) denotes the safe trajectories for edge \(e \in E\) and \(\mathcal {Z}_\text {safe}^f\subseteq \mathcal {Z}\) denotes the safe trajectories for final vertex \(f\in F\).

Intuitively, (U, E) is a standard DAG, and \(u_0\) and F define a graph reachability problem for (U, E). Furthermore, \(\beta \) and \(\mathcal {Z}_{\text {safe}}\) connect (U, E) back to the original Markov game \(\mathcal {M}\); in particular, for an edge \(e=u\rightarrow u'\), \(\mathcal {Z}_{\text {safe}}^e\) is the set of safe trajectories in \(\mathcal {M}\) that can be used to transition from \(\beta (u)\) to \(\beta (u')\).

Definition 2

A trajectory \(\zeta =s_0\xrightarrow {a_0}s_1\xrightarrow {a_1}\cdots \xrightarrow {a_{t-1}}s_t\) in \(\mathcal {M}\) satisfies the abstract graph \(\mathcal {G}\) (denoted \(\zeta \models \mathcal {G}\)) if there is a sequence of indices \(0=k_0\le k_1<\cdots <k_\ell \le t\) and a path \(\rho =u_0\rightarrow u_1\rightarrow \cdots \rightarrow u_\ell \) in \(\mathcal {G}\) such that

- \(u_\ell \in F\),

- for all \(z\in \{0,\ldots ,\ell \}\), we have \(s_{k_z}\in \beta (u_z)\),

- for all \(z < \ell \), letting \(e_z=u_z\rightarrow u_{z+1}\), we have \(\zeta _{k_z:k_{z+1}}\in \mathcal {Z}_{\text {safe}}^{e_z}\), and

- \(\zeta _{k_\ell :t}\in \mathcal {Z}_\text {safe}^{u_\ell }\).

The first two conditions state that the trajectory should visit a sequence of subgoal regions corresponding to a path from the initial vertex to some final vertex, and the last two conditions state that the trajectory should be composed of subtrajectories that are safe according to \(\mathcal {Z}_\text {safe}\).

Prior work shows that for every Spectrl specification \(\phi \), we can construct an abstract graph \(\mathcal {G}_\phi \) such that for every trajectory \(\zeta \in \mathcal {Z}\), \(\zeta \models \phi \) if and only if \(\zeta \models \mathcal {G}_\phi \) [16]. Moreover, the number of vertices in the abstract graph is linear in the size of the specification.

2.4 Nash Equilibrium and Social Welfare

Given a Markov game \(\mathcal {M}\) with unknown transitions and Spectrl specifications \(\phi _1,\dots , \phi _n\) for the n agents respectively, the score of agent i from a joint policy \(\pi \) is given by \( J_i(\pi ) = \Pr _{\zeta \sim \mathcal {D}_\pi }[\zeta \models \phi _i]. \)

Our goal is to compute a high-value \(\epsilon \)-Nash equilibrium in \(\mathcal {M}\) w.r.t. these scores. Given a joint policy \(\pi = (\pi _1,\dots , \pi _n)\) and an alternate policy \(\pi '_i\) for agent i, let \((\pi _{-i},\pi '_i)\) denote the joint policy \( (\pi _1, \dots , \pi '_i, \dots , \pi _n)\). Then, a joint policy \(\pi \) is an \(\epsilon \)-Nash equilibrium if for all agents i and all alternate policies \(\pi '_i\), \(J_i(\pi )\ge J_i((\pi _{-i},\pi '_i)) - \epsilon \). Our goal is to compute a joint policy \(\pi \) that maximizes the social welfare given by \( {\texttt {welfare}}(\pi ) = \frac{1}{n}\sum _{i=1}^n J_i(\pi ) \)

subject to the constraint that \(\pi \) is an \(\epsilon \)-Nash equilibrium.
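To make the constrained optimization concrete, the following Python sketch solves it by brute force in the special case of a one-step game with known scores, where a deterministic joint policy is just a joint action. This is only an illustration of the definitions, not our learning algorithm: the score table `J` and the function names are hypothetical, and welfare is taken to be the average of the agents' scores. In a one-step game, checking pure deviations suffices, since the score of a randomized deviation is a convex combination of the scores of pure deviations.

```python
from typing import Dict, Optional, Sequence, Tuple

JointAction = Tuple[int, ...]


def welfare(scores: Sequence[float]) -> float:
    """Average score across agents (assumed welfare measure for this sketch)."""
    return sum(scores) / len(scores)


def is_eps_nash(J: Dict[JointAction, Sequence[float]], profile: JointAction,
                num_actions: Sequence[int], eps: float) -> bool:
    """Check J_i(pi) >= J_i(pi_{-i}, pi'_i) - eps for every agent i and deviation."""
    for i in range(len(profile)):
        for a in range(num_actions[i]):
            deviated = profile[:i] + (a,) + profile[i + 1:]
            if J[deviated][i] > J[profile][i] + eps:
                return False
    return True


def best_eps_nash(J: Dict[JointAction, Sequence[float]],
                  num_actions: Sequence[int], eps: float) -> Optional[JointAction]:
    """Return the eps-Nash joint action with the highest welfare, if any."""
    for profile in sorted(J, key=lambda p: welfare(J[p]), reverse=True):
        if is_eps_nash(J, profile, num_actions, eps):
            return profile
    return None


# Hypothetical two-agent, two-action score table (entries are satisfaction probabilities).
J = {
    (0, 0): (0.8, 0.8),
    (0, 1): (0.1, 0.9),
    (1, 0): (0.9, 0.1),
    (1, 1): (0.3, 0.3),
}
strict = best_eps_nash(J, (2, 2), eps=0.05)
loose = best_eps_nash(J, (2, 2), eps=0.2)
```

With the small tolerance, the high-welfare profile `(0, 0)` is rejected because either agent gains 0.1 by deviating, and the search falls back to `(1, 1)`. With the larger tolerance, `(0, 0)` is accepted.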

3 Overview

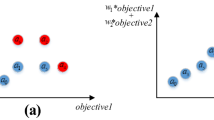

Our framework for computing a high-welfare \(\epsilon \)-Nash equilibrium consists of two phases. The first phase is a prioritized enumeration procedure that learns deterministic joint policies in the environment and ranks them in decreasing order of social welfare. The second phase is a verification phase that checks whether a given joint policy can be extended to an \(\epsilon \)-Nash equilibrium by adding punishment strategies. A policy is returned if it passes the verification check in the second phase. Algorithm 1 summarizes our framework.

For the enumeration phase, it is impractical to enumerate all joint policies even for small environments, since the total number of deterministic joint policies is \(\varOmega (|\mathcal {A}|^{|\mathcal {S}|^{H-1}})\), which is \(\varOmega (2^{n|\mathcal {S}|^{H-1}})\) if each agent has at least two actions. Thus, in the prioritized enumeration phase, we apply a specification-guided heuristic to reduce the number of joint policies considered. The resulting search space is independent of \(|\mathcal {S}|\) and H, depending only on the specifications \(\{\phi _i\}_{i\in [n]}\). Since the transition probabilities are unknown, these joint policies are trained using an efficient compositional RL approach.
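As a rough illustration of this bound (the numbers are hypothetical and not taken from our experiments), consider \(n = 2\) agents with two actions each, so that \(|\mathcal {A}| = 4\), in a game with \(|\mathcal {S}| = 10\) states and horizon \(H = 3\). Then \(|\mathcal {S}|^{H-1} = 100\) and \( |\mathcal {A}|^{|\mathcal {S}|^{H-1}} = 4^{100} = 2^{200} \approx 1.6\times 10^{60}, \) which coincides with \(2^{n|\mathcal {S}|^{H-1}} = 2^{2\cdot 100}\). Exhaustive enumeration is therefore out of reach even for this very small game.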

Since the joint policies are trained cooperatively, they are typically not \(\epsilon \)-Nash equilibria. Hence, in the verification phase, we use a probably approximately correct (PAC) procedure (Algorithm 2) to determine whether a given joint policy can be modified by adding punishment strategies to form an \(\epsilon \)-Nash equilibrium. Our approach is to reduce this problem to solving two-agent zero-sum games. The key insight is that for a given joint policy to be an \(\epsilon \)-Nash equilibrium, unilateral deviations by any agent must be successfully punished by the coalition of all other agents. In such a punishment game, the deviating agent attempts to maximize its score while the coalition of other agents attempts to minimize its score, leading to a competitive min-max game between the agent and the coalition. If the deviating agent can improve its score by a margin \(\ge \epsilon \), then the joint policy cannot be extended to an \(\epsilon \)-Nash equilibrium. Alternatively, if no agent can increase its score by a margin \(\ge \epsilon \), then the joint policy (augmented with punishment strategies) is an \(\epsilon \)-Nash equilibrium. Thus, checking if a joint policy can be converted to an \(\epsilon \)-Nash equilibrium reduces to solving a two-agent zero-sum game for each agent. Each punishment game is solved using a self-play RL algorithm for learning policies in min-max games with unknown transitions [4], after converting specification-based scores to reward-based scores. While the initial joint policy is deterministic, the punishment strategies can be probabilistic.
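The accept/reject rule described above can be sketched as follows. This is a simplified illustration that ignores the estimation error handled by the PAC analysis. The function name and the inputs are hypothetical: `scores[i]` stands for \(J_i(\pi )\) and `punished_values[i]` stands for the value agent i can secure in its punishment game against the coalition.

```python
from typing import Dict, Hashable, Tuple


def passes_verification(scores: Dict[Hashable, float],
                        punished_values: Dict[Hashable, float],
                        eps: float) -> Tuple[bool, Hashable, float]:
    """Accept iff no agent can improve its score by a margin of eps or more.

    Returns (accepted, agent with the largest gain, that gain).
    """
    gains = {i: punished_values[i] - scores[i] for i in scores}
    worst = max(gains, key=gains.get)
    return gains[worst] < eps, worst, gains[worst]


# Illustrative numbers loosely following the motivating example (not measured values).
three_cars = {"black": 0.0, "blue": 1.0, "green": 1.0, "orange": 1.0}
deviation_three = {"black": 1.0, "blue": 1.0, "green": 1.0, "orange": 1.0}
rejected = passes_verification(three_cars, deviation_three, eps=0.1)

two_cars = {"black": 1.0, "blue": 1.0, "green": 0.0, "orange": 0.0}
deviation_two = {"black": 1.0, "blue": 1.0, "green": 0.05, "orange": 0.0}
accepted = passes_verification(two_cars, deviation_two, eps=0.1)
```

In the first case the black car gains 1.0 by deviating, so the candidate is rejected. In the second case the largest gain is 0.05, which is below the tolerance.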

Overall, we provide the guarantee that with high probability, if our algorithm returns a joint policy, it will be an \(\epsilon \)-Nash equilibrium.
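The overall control flow of the two phases can be sketched as a short loop. This sketch does not reproduce Algorithm 1: `estimate_welfare` and `verify` are hypothetical callables standing in for the simulation-based ranking and the verification procedure, and `verify` is assumed to return the policy augmented with punishment strategies on success and `None` otherwise.

```python
from typing import Callable, Iterable, Optional, TypeVar

P = TypeVar("P")  # type of a candidate joint policy


def enumerate_and_verify(candidates: Iterable[P],
                         estimate_welfare: Callable[[P], float],
                         verify: Callable[[P], Optional[P]]) -> Optional[P]:
    """Try candidates in decreasing order of estimated welfare; return the first verified one."""
    ranked = sorted(candidates, key=estimate_welfare, reverse=True)
    for policy in ranked:
        augmented = verify(policy)
        if augmented is not None:
            return augmented
    return None  # no candidate could be extended to an eps-Nash equilibrium


# Toy run with made-up welfare estimates; candidates are just labels here.
welfare_table = {"three_cars": 0.75, "black_and_blue": 0.5, "nobody": 0.0}
verifiable = {"black_and_blue", "nobody"}
result = enumerate_and_verify(
    welfare_table,
    welfare_table.get,
    lambda p: p + "+punish" if p in verifiable else None,
)
```

Because candidates are visited in decreasing welfare order, the first verified candidate is also the one with the highest estimated welfare among those that pass verification.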

4 Prioritized Enumeration

We summarize our specification-guided compositional RL algorithm for learning a finite number of deterministic joint policies in an unknown environment under Assumption 1. These policies are then ranked in decreasing order of their (estimated) social welfare.

Our learning algorithm harnesses the structure of specifications, exposed by their abstract graphs, to curb the number of joint policies to learn. For every set of active agents \(B \subseteq [n]\), we construct a product abstract graph, from the abstract graphs of all active agents’ specifications. A property of this product is that if a trajectory \(\zeta \) in \(\mathcal {M}\) corresponds to a path in the product that ends in a final state then \(\zeta \) satisfies the specification of all active agents. Then, our procedure learns one joint policy for every path in the product graph that reaches a final state. Intuitively, policies learned using the product graph corresponding to a set of active agents B aim to maximize satisfaction probabilities of all agents in B. By learning joint policies for every set of active agents, we are able to learn policies under which some agents may not satisfy their specifications. This enables learning joint policies in non-cooperative settings. Note that the number of paths (and hence the number of policies considered) is independent of \(|\mathcal {S}|\) and H, and depends only on the number of agents and their specifications.

One caveat is that the number of paths may be exponential in the number of states in the product graph. It would be impractical to naïvely learn a joint policy for every path. Instead, we design an efficient compositional RL algorithm that learns a joint policy for each edge in the product graph; these edge policies are then composed together to obtain joint policies for paths in the product graph.

4.1 Product Abstract Graph

Let \(\phi _1,\dots , \phi _n\) be the specifications for the n-agents, respectively, and let \(\mathcal {G}_{i} = (U_i, E_i, u_0^i, F_i, \beta _i, \overline{\mathcal {Z}}_{\text {safe},i})\) be the abstract graph of specification \(\phi _i\) in the environment \(\mathcal {M}\). We construct a product abstract graph for every set of active agents in [n]. The product graph for a set of active agents \(B \subseteq [n]\) is used to learn joint policies which satisfy the specification of all agents in B with high probability.

Definition 3

Given a set of agents \(B = \{i_1, \dots , i_m\} \subseteq [n]\), the product graph \(\mathcal {G}_B = (\overline{U}, \overline{E}, \overline{u}_0, \overline{F}, \overline{\beta }, \overline{\mathcal {Z}}_{\text {safe}}) \) is the asynchronous product of \(\mathcal {G}_{i}\) for all \(i \in B\), where

- \(\overline{U} = \prod _{i\in B}U_{i}\) is the set of product vertices,

- there is an edge \(e = (u_{i_1},\ldots ,u_{i_m})\rightarrow (v_{i_1},\ldots ,v_{i_m})\in \overline{E}\) if \(u_{i}\rightarrow v_{i}\in E_{i}\) for at least one agent \(i\in B\) and \(u_{i} = v_{i}\) for the remaining agents,

- \(\overline{u}_0 = (u_0^{i_1},\ldots ,u_0^{i_m})\) is the initial vertex,

- \(\overline{F}= \prod _{i\in B}F_{i}\) is the set of final vertices,

- \(\overline{\beta } = (\beta _{i_1},\ldots ,\beta _{i_m})\) is the collection of concretization maps, and

- \(\overline{\mathcal {Z}}_{\text {safe}} = (\overline{\mathcal {Z}}_{\text {safe},i_1},\ldots ,\overline{\mathcal {Z}}_{\text {safe},i_m})\) is the collection of safe trajectories.

We denote the i-th component of a product vertex \(\overline{u}\in \overline{U}\) by \(u_i\) for agent \(i\in B\). Similarly, the i-th component in an edge \(e = \overline{u}\rightarrow \overline{v}\) is denoted by \(e_i = u_i\rightarrow v_i\) for \(i \in B\); note that \(e_i\) can be a self loop which is not an edge in \(\mathcal {G}_i\). For an edge \(e\in \overline{E}\), we denote the set of agents \(i\in B\) for which \(e_i \in E_{i}\), and not a self loop, by \(\mathsf {progress}(e)\).
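The vertex and edge structure of the asynchronous product, together with \(\mathsf {progress}(e)\), can be sketched in Python as follows. The sketch covers only the graph part \((\overline{U}, \overline{E})\) and omits subgoal regions and safe trajectories. The function name and the vertex labels are hypothetical, and each per-agent graph is assumed to be a DAG, so it has no self loops.

```python
from itertools import product
from typing import Dict, List, Set, Tuple

Vertex = str
Edge = Tuple[Vertex, Vertex]
ProductVertex = Tuple[Vertex, ...]


def product_edges(vertices: Dict[str, List[Vertex]],
                  edges: Dict[str, Set[Edge]]
                  ) -> Dict[Tuple[ProductVertex, ProductVertex], Set[str]]:
    """Map every product edge to its set of progressing agents."""
    agents = sorted(vertices)  # fixes the component order of product vertices
    out: Dict[Tuple[ProductVertex, ProductVertex], Set[str]] = {}
    for u in product(*(vertices[i] for i in agents)):
        # Each agent either stays at u_i or follows one of its own outgoing edges.
        options = [
            [ui] + sorted(v for (x, v) in edges[i] if x == ui)
            for i, ui in zip(agents, u)
        ]
        for v in product(*options):
            progressing = {i for i, ui, vi in zip(agents, u, v) if ui != vi}
            if progressing:  # at least one agent must move along an edge
                out[(u, v)] = progressing
    return out


# Two single-edge abstract graphs with hypothetical vertex labels.
toy_vertices = {"black": ["b0", "b1"], "blue": ["c0", "c1"]}
toy_edges = {"black": {("b0", "b1")}, "blue": {("c0", "c1")}}
pe = product_edges(toy_vertices, toy_edges)
```

For these two single-edge graphs, the product has four vertices and five edges: each agent may progress alone from the initial vertex, both may progress together, and the remaining agent may progress afterwards.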

Abstract graphs of the black car and the blue car from the motivating example are shown in Figs. 2 and 3 respectively. The vertex \(v_1\) denotes the subgoal region \(\beta _{\text {black}}(v_1)\) consisting of states in which the black car has crossed the intersection but the orange and green cars have not. The subgoal region \(\beta _{\text {blue}}(v_2)\) is the set of states in which the blue car has crossed the intersection but the black car has not. \(\mathcal {Z}_1\) denotes trajectories in which the black car does not collide and \(\mathcal {Z}_2\) denotes trajectories in which the blue car does not collide and stays a car length ahead of the orange and green cars. The product abstract graph for the set of active agents \(B = \{\text {black, blue}\}\) is shown in Fig. 4. The safe trajectories on the edges reflect the notion of achieving a product edge which we discuss below.

A trajectory \(\zeta = s_0\xrightarrow {a_0}s_1\xrightarrow {a_1}\cdots \xrightarrow {a_{t-1}}s_t\) achieves an edge \(e = \overline{u}\rightarrow \overline{v}\) in \(\mathcal {G}_B\) if all progressing agents \(i\in \mathsf {progress}(e)\) reach their target subgoal region \(\beta _i(v_i)\) along the trajectory and the trajectory is safe for all agents in B. For a progressing agent \(i\in \mathsf {progress}(e)\), the initial segment of the rollout until the agent reaches its subgoal region should be safe with respect to the edge \(e_i\). After that, the rollout should be safe with respect to every future possibility for the agent. This is required to ensure continuity of the rollout into adjacent edges in the product graph \(\mathcal {G}_B\). For the same reason, we require that the entire rollout is safe with respect to all future possibilities for non-progressing agents. Note that we are not concerned with non-active agents in \([n]{\setminus } B\). In order to formally define this notion, we need to set up some notation.

For a predicate \(b\in \mathcal {P}\), let the set of safe trajectories w.r.t. b be given by \(\mathcal {Z}_b = \{\zeta = s_0\xrightarrow {a_0}s_1\xrightarrow {a_1}\cdots \xrightarrow {a_{t-1}}s_t\in \mathcal {Z}\mid \forall \ 0\le k \le t, s_k \models b \}\). It is known that the set of safe trajectories along an edge in an abstract graph constructed from a Spectrl specification is either of the form \(\mathcal {Z}_b\) or \(\mathcal {Z}_{b_1} \circ \mathcal {Z}_{b_2}\), where \(b, b_1, b_2\in \mathcal {P}\) and \(\circ \) denotes concatenation [16]. In addition, for every final vertex f, \(\mathcal {Z}_{\text {safe}}^f\) is of the form \(\mathcal {Z}_b\) for some \(b\in \mathcal {P}\). We define \(\mathsf {First}\) as follows: \( \mathsf {First}(\mathcal {Z}') = {\left\{ \begin{array}{ll} \mathcal {Z}_b, &{} \text {if } \mathcal {Z}' = \mathcal {Z}_b \\ \mathcal {Z}_{b_1}, &{} \text {if } \mathcal {Z}' = \mathcal {Z}_{b_1}\circ \mathcal {Z}_{b_2}. \end{array}\right. } \)

We are now ready to define the notion of satisfiability of a product edge.

Definition 4

A rollout \(\zeta = s_0\xrightarrow {a_0}s_1\xrightarrow {a_1}\cdots \xrightarrow {a_{k-1}}s_k\) achieves an edge \(e = \overline{u}\rightarrow \overline{v}\) in \(\mathcal {G}_B\) (denoted \(\zeta \models _B e\)) if

1. for all progressing agents \(i\in \mathsf {progress}(e)\), there exists an index \(k_i\le k\) such that \(s_{k_i}\in \beta _i(v_i)\) and \(\zeta _{0:k_i} \in \mathcal {Z}^{e_i}_{\text {safe}, i}\). If \(v_i \in F_i\) then \(\zeta _{k_i:k} \in \mathcal {Z}^{v_i}_{\text {safe}, i}\). Otherwise, \(\zeta _{k_i:k} \in \mathsf {First}(\mathcal {Z}^{v_i\rightarrow w_i}_{\text {safe},i})\) for all \( w_i \in \mathsf {outgoing}(v_i)\). Furthermore, we require \(k_i > 0\) if \(u_i\ne u_0^i\).

2. for all non-progressing agents \(i \in B{\setminus }\mathsf {progress}(e)\), if \(u_i \notin F_i\), \(\zeta \in \mathsf {First}(\mathcal {Z}_{\text {safe},i}^{u_i\rightarrow w_i})\) for all \(w_i \in \mathsf {outgoing}(u_i)\). Otherwise (if \(u_i \in F_i\)), \(\zeta \in \mathcal {Z}^{u_i}_{\text {safe}, i}\).

We can now define what it means for a trajectory to achieve a path in the product graph \(\mathcal {G}_B\).

Definition 5

Given \(B\subseteq [n]\), a rollout \(\zeta = s_0\rightarrow \cdots \rightarrow s_t \) achieves a path \(\rho = \overline{u}_0 \rightarrow \cdots \rightarrow \overline{u}_{\ell }\) in \(\mathcal {G}_B\) (denoted \(\zeta \models _B \rho \)) if there exist indices \(0=k_0 \le k_1 \le \dots \le k_\ell \le t\) such that (i) \(\overline{u}_{\ell }\in \overline{F}\), (ii) \(\zeta _{k_z:k_{z+1}}\) achieves \(\overline{u}_{z}\rightarrow \overline{u}_{z+1}\) for all \(0\le z< \ell \), and (iii) \(\zeta _{k_\ell :t} \in \mathcal {Z}_{\text {safe},i}^{u_{\ell ,i}}\) for all \(i\in B\).

Theorem 2

Let \(\rho = \overline{u_0} \rightarrow \overline{u_1} \rightarrow \cdots \rightarrow \overline{u_\ell }\) be a path in the product abstract graph \(\mathcal {G}_B\) for \(B \subseteq [n]\). Suppose trajectory \(\zeta \models _B \rho \). Then \(\zeta \models \phi _i\) for all \(i \in B\).

That is, joint policies that maximize the probability of achieving paths in the product abstract graph \(\mathcal {G}_B\) have high social welfare w.r.t. the active agents B.

4.2 Compositional RL Algorithm

Our compositional RL algorithm learns joint policies corresponding to paths in product abstract graphs. For every \(B\subseteq [n]\), it learns a joint policy \(\pi _e\) for each edge e in the product abstract graph \(\mathcal {G}_B\), which is the (deterministic) policy that maximizes the probability of achieving e from a given initial state distribution. We assume all agents are acting cooperatively; thus, we treat the agents as one and use single-agent RL to learn each edge policy. In the verification phase, we check whether any agent's deviation from this cooperative behavior can be punished by the coalition of the other agents. The reward function is designed to capture the reachability objective of progressing agents and the safety objective of all active agents.

The edges are learned in topological order, allowing us to learn an induced state distribution for each product vertex \(\overline{u}\) prior to learning any edge policies from \(\overline{u}\); this distribution is used as the initial state distribution when learning outgoing edge policies from \(\overline{u}\). In more detail, the distribution for the initial vertex of \(\mathcal {G}_B\) is taken to be the initial state distribution of the environment; for every other product vertex, the distribution is the average over distributions induced by executing edge policies for all incoming edges. This is possible because the product graph is a DAG.
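The propagation of initial state distributions through the DAG can be sketched as follows. This is only an illustration: `run_edge` is a hypothetical callback that stands for training the edge policy from the given distribution and estimating the distribution it induces at the target vertex, and distributions are represented as dictionaries from states to probabilities. The sketch uses `graphlib` from the Python standard library (Python 3.9 or later) and assumes the initial vertex has no incoming edges.

```python
from collections import defaultdict
from graphlib import TopologicalSorter  # Python 3.9+


def vertex_distributions(edges, u0, init_dist, run_edge):
    """Propagate state distributions over a DAG in topological order.

    edges: iterable of (u, v) product edges; u0: initial vertex;
    init_dist: dict state -> probability at u0;
    run_edge(edge, dist): hypothetical callback returning the induced dict at the target.
    """
    preds = defaultdict(list)
    nodes = {u0}
    for u, v in edges:
        preds[v].append(u)
        nodes.update((u, v))
    # TopologicalSorter expects a mapping from each node to its predecessors.
    order = TopologicalSorter({v: list(preds[v]) for v in nodes}).static_order()
    dist = {u0: dict(init_dist)}
    for v in order:
        if v == u0:
            continue
        incoming = [run_edge((u, v), dist[u]) for u in preds[v]]
        avg = defaultdict(float)
        for d in incoming:  # average over all incoming edges
            for s, p in d.items():
                avg[s] += p / len(incoming)
        dist[v] = dict(avg)
    return dist


# Toy diamond graph: each edge deterministically shifts an integer state.
shift = {("a", "b"): 1, ("a", "c"): 2, ("b", "d"): 0, ("c", "d"): 0}


def toy_run_edge(edge, d):
    return {s + shift[edge]: p for s, p in d.items()}


dists = vertex_distributions(list(shift), "a", {0: 1.0}, toy_run_edge)
```

In the toy run, vertex `d` has two incoming edges, so its distribution places probability 0.5 on each of the two states reached through `b` and `c`.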

Given edge policies \(\varPi \) along with a path \( \rho =\overline{u}_{0}\rightarrow \overline{u}_{1}\rightarrow \cdots \rightarrow \overline{u}_{\ell } = \overline{u} \in \overline{F} \) in \(\mathcal {G}_B\), we define a path policy \({\pi }_{\rho }\) to navigate from \(\overline{u}_{0}\) to \(\overline{u}\). In particular, \({\pi }_{\rho }\) executes \(\pi _{e[z]}\), where \(e[z] = \overline{u}_{z}\rightarrow \overline{u}_{z+1}\) (starting from \(z=0\)) until the resulting trajectory achieves e[z], after which it increments \(z\leftarrow z+1\) (unless \(z=\ell \)). That is, \({\pi }_{\rho }\) is designed to achieve the sequence of edges in \(\rho \). Note that \(\pi _\rho \) is a finite-state deterministic joint policy in which vertices on the path correspond to the memory states that keep track of the index of the current policy. This way, we obtain finite-state joint policies by learning edge policies only.
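A path policy can be sketched as a small controller whose memory is the index z of the current edge. The class below is a minimal illustration with hypothetical names. Edge policies are modeled as callables from states to joint actions, and the test for achieving an edge is abstracted as a callable on the states observed since the current edge policy started; in the toy run, the joint action is a single integer.

```python
from typing import Any, Callable, List, Sequence


class PathPolicy:
    """Compose edge policies along a path; the memory state is the edge index z."""

    def __init__(self,
                 edge_policies: Sequence[Callable[[Any], Any]],
                 achieved: Sequence[Callable[[List[Any]], bool]]):
        self.edge_policies = edge_policies  # pi_{e[0]}, ..., pi_{e[l-1]}
        self.achieved = achieved            # achieved[z](segment) -> bool (assumed test)
        self.z = 0
        self.segment: List[Any] = []        # states seen since edge z became current

    def act(self, state: Any) -> Any:
        self.segment.append(state)
        # Move to the next edge once the current one is achieved,
        # but keep executing the last edge policy at the end of the path.
        while self.z < len(self.edge_policies) - 1 and self.achieved[self.z](self.segment):
            self.z += 1
            self.segment = [state]
        return self.edge_policies[self.z](state)


# Toy 1-D example: first reach a state >= 2 by moving right, then move left.
policy = PathPolicy(
    edge_policies=[lambda s: +1, lambda s: -1],
    achieved=[lambda seg: seg[-1] >= 2, lambda seg: seg[-1] <= 0],
)
state, actions = 0, []
for _ in range(4):
    a = policy.act(state)
    actions.append(a)
    state += a
```

The controller is deterministic and finite-state, which is the form of joint policy that the verification phase takes as input.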

This process is repeated for all sets of active agents \(B \subseteq [n]\). These finite-state joint policies are then ranked by estimating their social welfare on several simulations.

5 Nash Equilibria Verification

The prioritized enumeration phase produces a list of path policies which are ranked by the total sum of scores. Each path policy is deterministic and also finite state. Since the joint policies are trained cooperatively, they are typically not \(\epsilon \)-Nash equilibria. Thus, our verification algorithm not only tries to prove that a given joint policy is an \(\epsilon \)-Nash equilibrium, but also tries to modify it so it satisfies this property. In particular, our verification algorithm attempts to modify a given joint policy by adding punishment strategies so that the resulting policy is an \(\epsilon \)-Nash equilibrium.

Concretely, it takes as input a finite-state deterministic joint policy \(\pi = (M, \alpha , \sigma , m_0)\) where M is a finite set of memory states, \(\alpha : \mathcal {S}\times \mathcal {A}\times M\rightarrow M\) is the memory update function, \(\sigma :\mathcal {S}\times M \rightarrow \mathcal {A}\) maps a state and a memory state to a (joint) action, and \(m_0\) is the initial memory state. The extended memory update function \(\hat{\alpha }: \mathcal {Z}\rightarrow M\) is given by \(\hat{\alpha }(\epsilon ) = m_0\), where \(\epsilon \) here denotes the empty history, and \(\hat{\alpha }(\zeta s_t a_{t}) = \alpha (s_t, a_t, \hat{\alpha }(\zeta ))\). Then, \(\pi \) is given by \(\pi (\zeta s_t) = \sigma (s_t, \hat{\alpha }(\zeta ))\). The policy \(\pi _i\) of agent i simply chooses the \(i^{\text {th}}\) component of \(\pi (\zeta )\) for any history \(\zeta \).
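The tuple \((M, \alpha , \sigma , m_0)\) can be sketched as a small Python class. The class and method names are hypothetical, histories are represented as lists of (state, joint action) pairs, and M is left implicit as the set of values that `alpha` can return.

```python
from dataclasses import dataclass
from typing import Any, Callable, Hashable, Sequence, Tuple


@dataclass(frozen=True)
class FiniteStatePolicy:
    alpha: Callable[[Any, Tuple, Hashable], Hashable]  # (state, joint action, memory) -> memory
    sigma: Callable[[Any, Hashable], Tuple]            # (state, memory) -> joint action
    m0: Hashable                                       # initial memory state

    def memory_after(self, history: Sequence[Tuple[Any, Tuple]]) -> Hashable:
        """Extended memory update: fold alpha over the (state, joint action) pairs."""
        m = self.m0
        for s, a in history:
            m = self.alpha(s, a, m)
        return m

    def act(self, history: Sequence[Tuple[Any, Tuple]], state: Any) -> Tuple:
        """Joint action pi(history followed by state)."""
        return self.sigma(state, self.memory_after(history))

    def agent_action(self, i: int, history: Sequence[Tuple[Any, Tuple]], state: Any) -> Any:
        """Policy of agent i: the i-th component of the joint action."""
        return self.act(history, state)[i]


# Toy two-agent policy whose memory counts steps modulo 2 and alternates who moves.
toy = FiniteStatePolicy(
    alpha=lambda s, a, m: (m + 1) % 2,
    sigma=lambda s, m: ("go", "stay") if m == 0 else ("stay", "go"),
    m0=0,
)
first = toy.act([], "s0")
second = toy.act([("s0", ("go", "stay"))], "s1")
```

A path policy is an instance of this form in which the memory state is the index of the current edge along the path.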

The verification algorithm learns one punishment strategy \(\tau _{ij}:\mathcal {Z}\rightarrow \mathcal {D}(A_i)\) for each pair (i, j) of agents. As outlined in Fig. 5, the modified policy for agent i uses \(\pi _i\) if every agent j has taken actions according to \(\pi _j\) in the past. In case some agent \(j'\) has taken an action that does not match the output of \(\pi _{j'}\), then agent i uses the punishment strategy \(\tau _{ij}\), where j is the agent that deviated the earliest (ties broken arbitrarily). The goal of verification is to check if there is a set of punishment strategies \(\{\tau _{ij}\mid i\ne j\}\) such that after modifying each agent’s policy to use them, the resulting joint policy is an \(\epsilon \)-Nash equilibrium.

5.1 Problem Formulation

We denote the set of all punishment strategies of agent i by \(\tau _i=\{\tau _{ij}\mid j\ne i\}\). We define the composition of \(\pi _i\) and \(\tau _i\) to be the policy \(\tilde{\pi }_i = \pi _i\bowtie \tau _i\) such that for any trajectory \(\zeta = s_0\xrightarrow {a_0}\cdots \xrightarrow {a_{t-1}}s_t\), we have

- \(\tilde{\pi }_i(\zeta ) = \pi _i(\zeta )\) if for all \(0\le k < t\), \(a_k = \pi (\zeta _{0:k})\), i.e., no agent has deviated so far,
- \(\tilde{\pi }_i(\zeta ) = \tau _{ij}(\zeta )\) if there is a k such that (i) \(a_k^j\ne \pi _j(\zeta _{0:k})\) and (ii) for all \(\ell < k\), \(a_\ell = \pi (\zeta _{0:\ell })\). If there are multiple such j’s, an arbitrary but consistent choice is made (e.g., the smallest such j).
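The two cases above can be sketched as follows. This is an illustrative sketch under stated assumptions: `joint_policy(steps, s)` is a hypothetical callable returning the joint action prescribed by \(\pi \) after the history `steps`, punishment strategies return a single action rather than a distribution, ties are broken by the smallest agent index, and an agent that was itself the earliest deviator simply keeps following \(\pi _i\) (a case the definition leaves open).

```python
def first_deviator(joint_policy, steps):
    """Index of the agent that deviated earliest (smallest index on ties), or None."""
    for k, (s_k, a_k) in enumerate(steps):
        prescribed = joint_policy(steps[:k], s_k)
        deviators = [j for j, (a, p) in enumerate(zip(a_k, prescribed)) if a != p]
        if deviators:
            return min(deviators)
    return None


def composed_action(i, joint_policy, punish, steps, s_t):
    """Action of agent i under the composition of pi_i with its punishment strategies."""
    j = first_deviator(joint_policy, steps)
    if j is None or j == i:
        # No deviation so far (or agent i deviated itself): follow pi_i.
        return joint_policy(steps, s_t)[i]
    # Some other agent j deviated first: punish j using tau_ij.
    return punish[(i, j)](steps, s_t)
```

Here `punish` is a dictionary keyed by pairs (i, j) with \(i\ne j\), mirroring the family \(\{\tau _{ij}\mid i\ne j\}\).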

Given a finite-state deterministic joint policy \(\pi \), the verification problem is to check if there exists a set of punishment strategies \(\tau = \bigcup _i \tau _i\) such that the joint policy \(\tilde{\pi } = \pi \bowtie \tau = ({\pi }_1\bowtie \tau _1,\ldots ,{\pi }_n\bowtie \tau _n)\) is an \(\epsilon \)-Nash equilibrium. In other words, the problem is to check if there exists a policy \(\tilde{\pi }_i\) for each agent i such that (i) \(\tilde{\pi }_i\) follows \(\pi _i\) as long as no other agent j deviates from \(\pi _j\) and (ii) the joint policy \(\tilde{\pi } = (\tilde{\pi }_1,\ldots ,\tilde{\pi }_n)\) is an \(\epsilon \)-Nash equilibrium.

5.2 High-Level Procedure

Our approach is to compute the best set of punishment strategies \(\tau ^*\) w.r.t. \(\pi \) and check if \(\pi \bowtie \tau ^*\) is an \(\epsilon \)-Nash equilibrium. The best punishment strategy against agent j is the one that minimizes its incentive to deviate. To be precise, we define the best response of j with respect to a joint policy \(\pi ' = (\pi _1',\ldots ,\pi _n')\) to be \({\text {br}}_j(\pi ') \in \arg \max _{\pi _j''}J_j(\pi _{-j}', \pi _j'')\). Then, the best set of punishment strategies \(\tau ^*\) w.r.t. \(\pi \) is one that minimizes the value of \({\text {br}}_j(\pi \bowtie \tau )\) for all \(j\in [n]\). Formally, define \(\tau [j] = \{\tau _{ij}\mid i\ne j\}\) to be the set of punishment strategies against agent j. Then, we want to compute \(\tau ^*\) such that for all j,

$$\tau ^* \in \arg \min _{\tau } J_j\big ((\pi \bowtie \tau )_{-j}, {\text {br}}_j(\pi \bowtie \tau )\big ). \tag{1}$$

We observe that for any two sets of punishment strategies \(\tau \), \(\tau '\) with \(\tau [j] = \tau '[j]\) and any policy \(\pi _j'\), we have \(J_j((\pi \bowtie \tau )_{-j}, \pi _j') = J_j((\pi \bowtie \tau ')_{-j}, \pi _j')\). This is because, for any \(\tau \), punishment strategies in \(\tau {\setminus }\tau [j]\) do not affect the behaviour of the joint policy \(((\pi \bowtie \tau )_{-j}, \pi _j')\), since no agent other than agent j will deviate from \(\pi \). Hence, \({\text {br}}_j(\pi \bowtie \tau )\) as well as \(J_j((\pi \bowtie \tau )_{-j},{\text {br}}_j(\pi \bowtie \tau ))\) are independent of \(\tau \setminus \tau [j]\); therefore, we can separately compute \(\tau ^*[j]\) (satisfying Eq. 1) for each j and take \(\tau ^* = \bigcup _j \tau ^*[j]\). The following theorem follows from the definition of \(\tau ^*\).

Theorem 3

Given a finite-state deterministic joint policy \(\pi =(\pi _1,\ldots ,\pi _n)\), if there is a set of punishment strategies \(\tau \) such that \(\pi \bowtie \tau \) is an \(\epsilon \)-Nash equilibrium, then \(\pi \bowtie \tau ^*\) is an \(\epsilon \)-Nash equilibrium, where \(\tau ^*\) is the set of best punishment strategies w.r.t. \(\pi \). Furthermore, \(\pi \bowtie \tau ^*\) is an \(\epsilon \)-Nash equilibrium iff for all j,

$$J_j\big ((\pi \bowtie \tau ^*)_{-j}, {\text {br}}_j(\pi \bowtie \tau ^*)\big ) \le J_j(\pi ) + \epsilon .$$

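Thus, to solve the verification problem, it suffices to compute (or estimate), for all j, the optimal deviation scores

$${\texttt {dev}}_j^{\pi } = \min _{\tau [j]}\max _{\pi _j'}J_j((\pi \bowtie \tau [j])_{-j},\pi _j'). \tag{2}$$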

5.3 Reduction to Min-Max Games

Next, we describe how to reduce the computation of optimal deviation scores to a standard self-play RL setting. We first translate the problem from the specification setting to a reward-based setting using reward machines.

Reward Machines. A reward machine (RM) [14] is a tuple \(\mathcal {R}= (Q, \delta _u, \delta _r, q_0)\) where Q is a finite set of states, \(\delta _u: \mathcal {S}\times \mathcal {A}\times Q\rightarrow Q\) is the state transition function, \(\delta _r: \mathcal {S}\times Q\rightarrow [-1,1]\) is the reward function and \(q_0\) is the initial RM state. Given a trajectory \(\zeta = s_0 \xrightarrow {a_0}\ldots \xrightarrow {a_{t-1}}s_t\), the reward assigned by \(\mathcal {R}\) to \(\zeta \) is \(\mathcal {R}(\zeta ) = \sum _{k=0}^{t-1}\delta _r(s_k, q_k)\), where \(q_{k+1} = \delta _u(s_{k}, a_k, q_k)\) for all k. For any Spectrl specification \(\phi \), we can construct an RM such that the reward assigned to a trajectory \(\zeta \) indicates whether \(\zeta \) satisfies \(\phi \).
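The reward computation \(\mathcal {R}(\zeta )\) can be sketched directly from the definition. The class below and the toy machine in the usage note are hypothetical illustrations; in particular, the toy machine is not the construction used to obtain \(\mathcal {R}_\phi \) from a Spectrl specification.

```python
class RewardMachine:
    """Reward machine (Q, delta_u, delta_r, q0) with Q left implicit."""

    def __init__(self, delta_u, delta_r, q0):
        self.delta_u = delta_u  # (state, joint action, rm state) -> rm state
        self.delta_r = delta_r  # (state, rm state) -> reward in [-1, 1]
        self.q0 = q0

    def reward(self, states, actions):
        """Sum of delta_r(s_k, q_k) for k = 0..t-1 along s_0, a_0, ..., a_{t-1}, s_t."""
        assert len(states) == len(actions) + 1
        q, total = self.q0, 0.0
        for s, a in zip(states, actions):  # zip stops before the final state s_t
            total += self.delta_r(s, q)
            q = self.delta_u(s, a, q)
        return total
```

As a usage example, a two-state machine that pays reward 1 the first time a (hypothetical) goal state 3 is visited can be written with `delta_r = lambda s, q: 1.0 if (q == 0 and s == 3) else 0.0` and `delta_u = lambda s, a, q: 1 if (q == 1 or s == 3) else 0`; the total reward of any trajectory is then 0 or 1.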

Theorem 4

Given any Spectrl specification \(\phi \), we can construct an RM \(\mathcal {R}_\phi \) such that for any trajectory \(\zeta \) of length \(t+1\), \(\mathcal {R}_\phi (\zeta ) = \mathbf {1}(\zeta _{0:t}\models \phi )\).

For an agent j, let \(\mathcal {R}_j\) denote \(\mathcal {R}_{\phi _j} = (Q_j, \delta _u^j, \delta _r^j, q_0^j)\). Letting \(\tilde{\mathcal {D}}_{\pi }\) be the distribution over length \(H+1\) trajectories induced by using \(\pi \), we have \(\mathbb {E}_{\zeta \sim \tilde{\mathcal {D}}_{\pi }}[\mathcal {R}_j(\zeta )] = J_j(\pi ).\) The deviation values defined in Eq. 2 are now min-max values of expected reward. However, this is not a standard min-max setting, since the policy of every non-deviating agent \(i\ne j\) is constrained to be of the form \(\pi _i\bowtie \tau _i\). This issue can be handled by considering a product of \(\mathcal {M}\) with the reward machine \(\mathcal {R}_j\) and the finite-state joint policy \(\pi \). The following theorem follows naturally.

Theorem 5

Given a finite-state deterministic joint policy \(\pi =(M, \alpha , \sigma , m_0)\), for any agent j, we can construct a simulator for an augmented two-player zero-sum Markov game \(\mathcal {M}_j^{\pi }\) (with rewards) which has the following properties.

- The number of states in \(\mathcal {M}_j^{\pi }\) is at most \(2|\mathcal {S}||M||Q_j|\).
- The action set of player 1 is \(A_j\), and the action set of player 2 is \(\mathcal {A}_{-j}=\prod _{i\ne j}A_i\).
- The min-max value of the two-player game corresponds to the deviation cost of j, i.e.,

  $${\texttt {dev}}_j^{\pi } = \min _{\bar{\pi }_{2}}\max _{\bar{\pi }_1}\bar{J}_j^{\pi }(\bar{\pi }_1,\bar{\pi }_2),$$

  where \(\bar{J}_j^{\pi }(\bar{\pi }_1,\bar{\pi }_2) = \mathbb {E}\big [\sum _{k=0}^{H} R_j(\bar{s}_k,a_k)\mid \bar{\pi }_1,\bar{\pi }_2\big ]\) is the expected sum of rewards w.r.t. the distribution over \((H+1)\)-length trajectories generated by using the joint policy \((\bar{\pi }_1,\bar{\pi }_{2})\) in \(\mathcal {M}_j^{\pi }\).
- Given any policy \(\bar{\pi }_2\) for player 2 in \(\mathcal {M}_j^{\pi }\), we can construct a set of punishment strategies \(\tau [j] = \textsc {PunStrat}(\bar{\pi }_2)\) against agent j in \(\mathcal {M}\) such that

  $$\max _{\bar{\pi }_1}\bar{J}_j^{\pi }(\bar{\pi }_1, \bar{\pi }_2) = \max _{\pi _j'}J_j((\pi \bowtie \tau [j])_{-j},\pi _j').$$

Given an estimate \(\tilde{\mathcal {M}}\) of \(\mathcal {M}\), we can also construct an estimate \(\tilde{\mathcal {M}}_j^{\pi }\) of \(\mathcal {M}_j^{\pi }\).

We omit the superscript \(\pi \) from \(\mathcal {M}_j^{\pi }\) when there is no ambiguity. We denote by \(\textsc {ConstructGame}(\tilde{\mathcal {M}},j,\mathcal {R}_j,\pi )\) the product construction procedure that constructs and returns \(\tilde{\mathcal {M}}_j^{\pi }\).

5.4 Solving Min-Max Games

The min-max game \(\mathcal {M}_j\) can be solved using self-play RL algorithms. Many of these algorithms provide probabilistic approximation guarantees for computing the min-max value of the game. We use a model-based algorithm, similar to the one proposed in [4], that first estimates the model \(\mathcal {M}_j\) and then solves the game in the estimated model.

One approach is to use existing algorithms for reward-free exploration to estimate the model [15], but this approach requires estimating each \(\mathcal {M}_j\) separately. Under Assumption 1, we provide a simpler and more sample-efficient algorithm, called BFS-Estimate, for estimating \(\mathcal {M}\). BFS-Estimate performs a search over the transition graph of \(\mathcal {M}\) by exploring previously seen states in a breadth-first manner. When exploring a state s, multiple samples are collected by taking all possible actions in s several times, and the corresponding transition probabilities are estimated. After obtaining an estimate of \(\mathcal {M}\), we can directly construct an estimate of \(\mathcal {M}_j^{\pi }\) for any \(\pi \) and j when required. Letting \(|Q| = \max _{j}|Q_j|\) and |M| denote the size of the largest finite-state policy output by our enumeration algorithm, we get the following guarantee.
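The exploration scheme described above can be sketched as follows. This is a simplified illustration, not the BFS-Estimate procedure itself: it assumes a hypothetical sampler `sample_next(s, a)` that can draw a successor from any previously seen state, and it takes the number of samples per state-action pair as a plain parameter instead of deriving it from \(\delta \) and p.

```python
from collections import Counter, deque


def bfs_estimate(init_states, actions, sample_next, samples_per_pair):
    """Estimate transition probabilities by breadth-first exploration.

    Returns a dict mapping (state, action) to {next_state: estimated probability}.
    """
    model = {}
    seen = set(init_states)
    queue = deque(init_states)
    while queue:
        s = queue.popleft()
        for a in actions:
            # Take action a in state s several times and count the outcomes.
            counts = Counter(sample_next(s, a) for _ in range(samples_per_pair))
            model[(s, a)] = {s2: c / samples_per_pair for s2, c in counts.items()}
            # Newly discovered states are explored later, in breadth-first order.
            for s2 in counts:
                if s2 not in seen:
                    seen.add(s2)
                    queue.append(s2)
    return model
```

Because the estimate is of \(\mathcal {M}\) itself, it can be reused for every candidate policy and every agent.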

Theorem 6

For any \(\delta >0\) and \(p\in (0,1]\), BFS-Estimate\((\mathcal {M},\delta ,p)\) computes an estimate \(\tilde{\mathcal {M}}\) of \(\mathcal {M}\) using \(O\left( \frac{|\mathcal {S}|^3|M|^2|Q|^4|\mathcal {A}|H^4}{\delta ^2}\log \left( \frac{|\mathcal {S}||\mathcal {A}|}{p}\right) \right) \) sample steps such that with probability at least \(1-p\), for any finite-state deterministic joint policy \(\pi \) and any agent j,

$$\Big |\min _{\bar{\pi }_2}\max _{\bar{\pi }_1}\bar{J}^{\tilde{\mathcal {M}}_j^{\pi }}(\bar{\pi }_1,\bar{\pi }_2) - {\texttt {dev}}_j^{\pi }\Big | \le \delta ,$$

where \(\bar{J}^{\tilde{\mathcal {M}}_j^{\pi }}(\bar{\pi }_1,\bar{\pi }_2)\) is the expected reward over length \(H+1\) trajectories generated by \((\bar{\pi }_1,\bar{\pi }_2)\) in \(\tilde{\mathcal {M}}_j^{\pi }\). Furthermore, letting \(\bar{\pi }_2^* \in \arg \min _{\bar{\pi }_{2}}\max _{\bar{\pi }_1}\bar{J}^{\tilde{\mathcal {M}}_j}(\bar{\pi }_1, \bar{\pi }_{2})\) and \(\tau [j] = \textsc {PunStrat}(\bar{\pi }_2^*)\), we have

$$\Big |\min _{\bar{\pi }_2}\max _{\bar{\pi }_1}\bar{J}^{\tilde{\mathcal {M}}_j^{\pi }}(\bar{\pi }_1,\bar{\pi }_2) - \max _{\pi _j'}J_j((\pi \bowtie \tau [j])_{-j},\pi _j')\Big | \le \delta .$$

The min-max value of \(\tilde{\mathcal {M}}_j^{\pi }\) as well as \(\bar{\pi }_2^*\) can be computed using value iteration. Our full verification algorithm is summarized in Algorithm 2. It checks if \(\tilde{{\texttt {dev}}}_j \le J_j(\pi ) + \epsilon -\delta \) for all j, and returns True if so and False otherwise. It also simultaneously computes the punishment strategies \(\tau \) using the optimal policies for player 2 in the punishment games. Note that BFS-Estimate is called only once (i.e., the first time VerifyNash is called) and the obtained estimate \(\tilde{\mathcal {M}}\) is stored and used for verification of every candidate policy \(\pi \). The following soundness guarantee follows from Theorem 6.
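The final check performed by the verification algorithm can be sketched as below. The function name `passes_nash_check` and the list-based inputs are assumptions of this example; the estimated deviation values and the values \(J_j(\pi )\) are taken as given. The slack \(\delta \) is subtracted so that, if each estimate is within \(\delta \) of the true deviation value, passing the check implies that the true value is at most \(J_j(\pi ) + \epsilon \).

```python
def passes_nash_check(dev_estimates, values, epsilon, delta):
    """True iff dev_j <= J_j(pi) + epsilon - delta holds for every agent j.

    dev_estimates[j] is the estimated deviation value of agent j and
    values[j] is J_j(pi); both lists are indexed by agent.
    """
    assert len(dev_estimates) == len(values)
    assert 0 < delta < epsilon
    return all(dev <= J + epsilon - delta
               for dev, J in zip(dev_estimates, values))
```

A candidate policy is accepted only if the check passes for all agents; otherwise the search moves on to the next candidate.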

Corollary 1 (Soundness)

For any \(p\in (0,1]\), \(\epsilon >0\) and \(\delta \in (0,\epsilon )\), with probability at least \(1-p\), if HighNashSearch returns a joint policy \(\tilde{\pi }\) then \(\tilde{\pi }\) is an \(\epsilon \)-Nash equilibrium.

6 Complexity

In this section, we analyze the time and sample complexity of our algorithm in terms of the number of agents n, size of the specification \(|\phi | = \max _{i\in [n]}|\phi _i|\), number of states in the environment \(|\mathcal {S}|\), number of joint actions \(|\mathcal {A}|\), time horizon H, precision \(\delta \) and the failure probability p.

Sample Complexity. It is known [16] that the number of edges in the abstract graph \(\mathcal {G}_i\) corresponding to specification \(\phi _i\) is \(O(|\phi _i|^2)\). Hence, for any set of active agents B, the number of edges in the product abstract graph \(\mathcal {G}_B\) is \(O(|\phi |^{2|B|})\). Therefore, the total number of edge policies learned by our compositional RL algorithm is \(\sum _{B\subseteq [n]}O((|\phi |^2)^{|B|}) = O((|\phi |^{2}+1)^n)\), by the binomial theorem. We learn each edge using a fixed number of sample steps C, which is a hyperparameter.
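The closed form for the number of edge policies follows from the binomial theorem, which the short check below illustrates. It is only a sanity check under a simplifying assumption: every agent's abstract graph is taken to have exactly `edges_per_agent` edges (standing in for the \(O(|\phi |^2)\) bound), and the sum ranges over all subsets B, including the empty one.

```python
from math import comb


def total_edge_policies(n, edges_per_agent):
    """Sum over all subsets B of [n] of edges_per_agent ** |B|.

    There are comb(n, k) subsets of size k, so the sum equals
    (edges_per_agent + 1) ** n by the binomial theorem.
    """
    return sum(comb(n, k) * edges_per_agent ** k for k in range(n + 1))
```

For example, with n = 3 agents and 4 edges per agent, the sum is 1 + 12 + 48 + 64 = 125 = 5^3.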

The number of samples used in the verification phase is the same as the number used by BFS-Estimate. The maximum size of a candidate policy output by the enumeration algorithm |M| is at most the length of the longest path in a product abstract graph. Since the maximum path length in a single abstract graph \(\mathcal {G}_i\) is bounded by \(|\phi _i|\) and at least one agent must progress along every edge in a product graph, the maximum length of a path in any product graph is at most \(n|\phi |\). Also, the number of states in the reward machine \(\mathcal {R}_j\) corresponding to \(\phi _j\) is \(O(2^{|\phi _j|})\). Hence, substituting \(|M| \le n|\phi |\) and \(|Q| = O(2^{|\phi |})\) in Theorem 6, we get that the total number of sample steps used by our algorithm is \(O\big ((|\phi |^{2}+1)^nC + \frac{2^{4|\phi |}|\mathcal {S}|^3n^2|\phi |^2|\mathcal {A}|H^4}{\delta ^2}\log \big (\frac{|\mathcal {S}||\mathcal {A}|}{p}\big )\big )\).

Time Complexity. As with sample complexity, the time required to learn all edge policies is \(O((|\phi |^{2}+1)^n(C+|\mathcal {A}|))\), where the term \(|\mathcal {A}|\) accounts for the time taken to select an action from \(\mathcal {A}\) during exploration (we use Q-learning with \(\varepsilon \)-greedy exploration for learning edge policies). Similarly, the time taken for constructing the reward machines and running BFS-Estimate is \(O\big (\frac{2^{4|\phi |}|\mathcal {S}|^3n^2|\phi |^2|\mathcal {A}|H^4}{\delta ^2}\log \big (\frac{|\mathcal {S}||\mathcal {A}|}{p}\big )\big )\).

The total number of path policies considered for a given set of active agents B is bounded by the number of paths in the product abstract graph \(\mathcal {G}_B\) that terminate in a final product state. First, let us consider paths in which exactly one agent progresses in each edge. The number of such paths is bounded by \((|B||\phi |)^{|B||\phi |}\) since the length of such paths is bounded by \(|B||\phi |\) and there are at most \(|B||\phi |\) choices at each step, i.e., the progressing agent j and the next vertex of the abstract graph \(\mathcal {G}_{\phi _j}\). Now, any path in \(\mathcal {G}_B\) can be constructed by merging adjacent edges along such a path (in which exactly one agent progresses at each step). The number of ways to merge edges along such a path is bounded by the number of groupings of edges along the path into at most \(|B||\phi |\) groups, which is bounded by \((|B||\phi |)^{|B||\phi |}\). Therefore, the total number of paths in \(\mathcal {G}_B\) is at most \((|B||\phi |)^{2|B||\phi |} \le 2^{2|B||\phi |\log (n|\phi |)}\), using \(|B|\le n\). Finally, the total number of path policies considered is at most \(\sum _{B\subseteq [n]}2^{2|B||\phi |\log (n|\phi |)} \le ((n|\phi |)^{2|\phi |}+1)^n = O(2^{2n|\phi |\log (2n|\phi |)})\).

Now, for each path policy \(\pi \), the verification algorithm solves \(\tilde{\mathcal {M}}_j^{\pi }\) using value iteration, which takes \(O(|\tilde{\mathcal {S}}||\mathcal {A}|Hf(|\mathcal {A}|)) = O(2^{|\phi |}n|\phi ||\mathcal {S}||\mathcal {A}|Hf(|\mathcal {A}|))\) time, where \(|\tilde{\mathcal {S}}|\) is the number of states of \(\tilde{\mathcal {M}}_j^{\pi }\) and \(f(|\mathcal {A}|)\) is the time required to solve a linear program of size \(|\mathcal {A}|\). Also accounting for the time taken to sort the path policies, we arrive at a time complexity bound of \(2^{O(n|\phi |\log (n|\phi |))}\text {poly}(|\mathcal {S}|,|\mathcal {A}|,H,\frac{1}{p}, \frac{1}{\delta })\).

It is worth noting that the procedure halts as soon as our verification procedure successfully verifies a policy; this leads to early termination in cases where there is a high-value \(\epsilon \)-Nash equilibrium among the policies considered. Furthermore, since our verification algorithm runs in polynomial time, one could potentially improve the overall time complexity by reducing the search space in the prioritized enumeration phase, e.g., by using domain-specific insights.

7 Experiments

We evaluate our algorithm on finite state environments and a variety of specifications, aiming to answer the following:

- Can our approach be used to learn \(\epsilon \)-Nash equilibria?
- Can our approach learn policies with high social welfare?

We compare our approach to two baselines described below, using two metrics: (i) the social welfare \({\texttt {welfare}}(\pi )\) of the learned joint policy \(\pi \), and (ii) an estimate of the minimum value of \(\epsilon \) for which \(\pi \) forms an \(\epsilon \)-Nash equilibrium:

$$\epsilon _{\min }(\pi ) = \max _{i\in [n]}\big (J_i(\pi _{-i}, {\text {br}}_i(\pi )) - J_i(\pi )\big ).$$

Here, \(\epsilon _{\min }(\pi )\) is computed using single agent RL (specifically, Q-learning) to compute \({\text {br}}_i(\pi )\) for each agent i.
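The metric can be sketched as follows, assuming that \(\epsilon _{\min }(\pi )\) is the largest gain any single agent obtains by switching to its best response. The function name and list-based inputs are hypothetical; in our evaluation the best-response values are themselves estimates obtained with Q-learning, so the result is an estimate as well.

```python
def epsilon_min(values, best_response_values):
    """Largest unilateral gain over all agents.

    values[i] is agent i's value under the joint policy, and
    best_response_values[i] is its value when only agent i switches
    to a best response against the other agents' policies.
    """
    assert values and len(values) == len(best_response_values)
    return max(br - v for v, br in zip(values, best_response_values))
```

A value close to 0 indicates that no agent can gain much by deviating unilaterally.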

Environments and Specifications. We show results on the Intersection environment illustrated in Fig. 1, which consists of k cars (agents) at a 2-way intersection, of which \(k_1\) and \(k_2\) cars are placed along the N-S and E-W axes, respectively. The state consists of the locations of all cars, where the location of a single car is a non-negative integer: 1 corresponds to the intersection, 0 corresponds to the location one step towards the south or west of the intersection (depending on the car), and locations greater than 1 are to the east or north of the intersection. Each agent has two actions: STAY stays at the current position, and MOVE decreases the position value by 1 with probability 0.95 and stays with probability 0.05. We consider specifications similar to the ones in the motivating example.
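The dynamics described above can be sketched as a single transition function. This is an illustrative reconstruction, not the environment code used in our experiments: the function name `step` is hypothetical, and clamping the position at 0 is an assumption, since the behaviour of MOVE at location 0 is not specified here.

```python
import random


def step(positions, actions, rng, p_move=0.95):
    """Sample the next joint state of the Intersection environment.

    positions: tuple of non-negative integers, one per car
    actions:   tuple of "STAY" / "MOVE", one per car
    rng:       a random.Random instance used for the stochastic moves
    """
    next_positions = []
    for pos, act in zip(positions, actions):
        if act == "MOVE" and rng.random() < p_move:
            pos = max(pos - 1, 0)  # assumption: cars cannot go below location 0
        next_positions.append(pos)
    return tuple(next_positions)
```

For example, `step((3, 2), ("MOVE", "STAY"), random.Random(0))` leaves the second car in place and moves the first car one step closer to the intersection with probability 0.95.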

Baselines. We compare our NE computation method (HighNashSearch) to two approaches for learning in non-cooperative games. The first, maqrm, is an adaptation of the reward machine based learning algorithm proposed in [22]. maqrm was originally proposed for cooperative multi-agent RL where there is a single specification for all the agents. It proceeds by first decomposing the specification into individual ones for all the agents and then running a Q-learning-style algorithm (qrm) in parallel for all the agents. We use the second part of their algorithm directly since we are given a separate specification for each agent. The second baseline, nvi, is a model-based approach that first estimates transition probabilities, and then computes a Nash equilibrium in the estimated game using value iteration for stochastic games [17]. To promote high social welfare, we select the highest value Nash solution for the matrix game at each stage of value iteration. Note that this greedy strategy may not maximize social welfare. Both maqrm and nvi learn from rewards as opposed to specifications; thus, we supply rewards in the form of reward machines constructed from the specifications. \(\textsc {nvi} \) is guaranteed to return an \(\epsilon \)-Nash equilibrium with high probability, but \(\textsc {maqrm} \) is not guaranteed to do so.

Results. Our results are summarized in Table 1. For each specification, we ran all algorithms 10 times with a timeout of 24 h. Along with the average social welfare and \(\epsilon _{\min }\), we also report the average number of sample steps taken in the environment as well as the number of runs that terminated before timeout. For a fair comparison, all approaches were given a similar number of samples from the environment.

Nash Equilibrium. Our approach learns policies that have low values of \(\epsilon _{\min }\), indicating that it can be used to learn \(\epsilon \)-Nash equilibria for small values of \(\epsilon \). nvi also has similar values of \(\epsilon _{\min }\), which is expected since nvi provides guarantees similar to our approach w.r.t. Nash equilibria computation. On the other hand, maqrm learns policies with large values of \(\epsilon _{\min }\), implying that it fails to converge to a Nash equilibrium in most cases.

Social Welfare. Our experiments show that our approach consistently learns policies with high social welfare compared to the baselines. For instance, \(\phi ^3\) corresponds to the specifications in the motivating example, for which our approach learns a joint policy that causes both blue and black cars to achieve their goals. Although nvi succeeds in learning policies with high social welfare for some specifications (\(\phi ^1\), \(\phi ^3\), \(\phi ^4\)), it fails to do so for others (\(\phi ^2\), \(\phi ^5\)). Additional experiments (see extended version [3]) indicate that nvi achieves social welfare similar to that of our approach for specifications in which all agents can successfully achieve their goals (cooperative scenarios). However, in many other scenarios in which only some of the agents can fulfill their objectives, our approach achieves higher social welfare.

8 Conclusions

We have proposed a framework for maximizing social welfare under the constraint that the joint policy should form an \(\epsilon \)-Nash equilibrium. Our approach involves learning and enumerating a small set of finite-state deterministic policies in decreasing order of social welfare and then using a self-play RL algorithm to check if they can be extended with punishment strategies to form an \(\epsilon \)-Nash equilibrium. Our experiments demonstrate that our approach is effective in learning Nash equilibria with high social welfare.

One limitation of our approach is that our algorithm does not have any guarantee regarding optimality with respect to social welfare. The policies considered by our algorithm are chosen heuristically based on the specifications, which may lead to scenarios where we miss high welfare solutions. For example, \(\phi ^2\) corresponds to specifications in the motivating example except that the blue car is not required to stay a car length ahead of the other two cars. In this scenario, it is possible for three cars to achieve their goals in an equilibrium solution if the blue car helps the cars behind by staying in the middle of the intersection until they catch up. Such a joint policy is not among the set of policies considered; therefore, our approach learns a solution in which only two cars achieve their goals. We believe that such limitations can be overcome in future work by modifying the various components within our enumerate-and-verify framework.

Notes

1. Here, achieve and ensuring correspond to the “eventually” and “always” operators in temporal logic.
2. We do not require that the subgoal regions partition the state space or that they be non-overlapping.

References

1. Akchurina, N.: Multi-agent reinforcement learning algorithm with variable optimistic-pessimistic criterion. In: ECAI, vol. 178, pp. 433–437 (2008)
2. Alur, R., Bansal, S., Bastani, O., Jothimurugan, K.: A framework for transforming specifications in reinforcement learning. arXiv preprint arXiv:2111.00272 (2021)
3. Alur, R., Bansal, S., Bastani, O., Jothimurugan, K.: Specification-guided learning of Nash equilibria with high social welfare (2022). https://arxiv.org/abs/2206.03348
4. Bai, Y., Jin, C.: Provable self-play algorithms for competitive reinforcement learning. In: Proceedings of the 37th International Conference on Machine Learning (2020)
5. Bouyer, P., Brenguier, R., Markey, N.: Nash equilibria for reachability objectives in multi-player timed games. In: Gastin, P., Laroussinie, F. (eds.) CONCUR 2010. LNCS, vol. 6269, pp. 192–206. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-15375-4_14
6. Chatterjee, K.: Two-player nonzero-sum \(\omega \)-regular games. In: Abadi, M., de Alfaro, L. (eds.) CONCUR 2005. LNCS, vol. 3653, pp. 413–427. Springer, Heidelberg (2005). https://doi.org/10.1007/11539452_32
7. Chatterjee, K., Majumdar, R., Jurdziński, M.: On Nash equilibria in stochastic games. In: Marcinkowski, J., Tarlecki, A. (eds.) CSL 2004. LNCS, vol. 3210, pp. 26–40. Springer, Heidelberg (2004). https://doi.org/10.1007/978-3-540-30124-0_6
8. Czumaj, A., Fasoulakis, M., Jurdzinski, M.: Approximate Nash equilibria with near optimal social welfare. In: Twenty-Fourth International Joint Conference on Artificial Intelligence (2015)
9. Greenwald, A., Hall, K., Serrano, R.: Correlated Q-learning. In: ICML, vol. 3, pp. 242–249 (2003)
10. Hammond, L., Abate, A., Gutierrez, J., Wooldridge, M.: Multi-agent reinforcement learning with temporal logic specifications. In: International Conference on Autonomous Agents and MultiAgent Systems, pp. 583–592 (2021)
11. Hazan, E., Krauthgamer, R.: How hard is it to approximate the best Nash equilibrium? In: Proceedings of the Twentieth Annual ACM-SIAM Symposium on Discrete Algorithms, SODA 2009, pp. 720–727. Society for Industrial and Applied Mathematics (2009)
12. Hu, J., Wellman, M.P.: Nash Q-learning for general-sum stochastic games. J. Mach. Learn. Res. 4(Nov), 1039–1069 (2003)
13. Hu, J., Wellman, M.P., et al.: Multiagent reinforcement learning: theoretical framework and an algorithm. In: ICML, vol. 98, pp. 242–250. Citeseer (1998)
14. Icarte, R.T., Klassen, T., Valenzano, R., McIlraith, S.: Using reward machines for high-level task specification and decomposition in reinforcement learning. In: International Conference on Machine Learning, pp. 2107–2116. PMLR (2018)
15. Jin, C., Krishnamurthy, A., Simchowitz, M., Yu, T.: Reward-free exploration for reinforcement learning. In: International Conference on Machine Learning, pp. 4870–4879. PMLR (2020)
16. Jothimurugan, K., Bansal, S., Bastani, O., Alur, R.: Compositional reinforcement learning from logical specifications. Adv. Neural Inf. Proc. Syst. 34, 10026–10039 (2021)
17. Kearns, M., Mansour, Y., Singh, S.: Fast planning in stochastic games. In: Proceedings of the Sixteenth Conference on Uncertainty in Artificial Intelligence, pp. 309–316 (2000)
18. Kwiatkowska, M., Norman, G., Parker, D., Santos, G.: Equilibria-based probabilistic model checking for concurrent stochastic games. In: ter Beek, M.H., McIver, A., Oliveira, J.N. (eds.) FM 2019. LNCS, vol. 11800, pp. 298–315. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-30942-8_19
19. Kwiatkowska, M., Norman, G., Parker, D., Santos, G.: PRISM-games 3.0: stochastic game verification with concurrency, equilibria and time. In: Lahiri, S.K., Wang, C. (eds.) CAV 2020. LNCS, vol. 12225, pp. 475–487. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-53291-8_25
20. Littman, M.L.: Markov games as a framework for multi-agent reinforcement learning. In: Machine Learning Proceedings 1994, pp. 157–163. Elsevier (1994)
21. Littman, M.L.: Friend-or-foe Q-learning in general-sum games. In: ICML, vol. 1, pp. 322–328 (2001)
22. Neary, C., Xu, Z., Wu, B., Topcu, U.: Reward machines for cooperative multi-agent reinforcement learning (2021)
23. Perolat, J., Strub, F., Piot, B., Pietquin, O.: Learning Nash equilibrium for general-sum Markov games from batch data. In: Proceedings of the 20th International Conference on Artificial Intelligence and Statistics (2017)
24. Prasad, H., LA, P., Bhatnagar, S.: Two-timescale algorithms for learning Nash equilibria in general-sum stochastic games. In: Proceedings of the 2015 International Conference on Autonomous Agents and Multiagent Systems, pp. 1371–1379 (2015)
25. Shapley, L.S.: Stochastic games. Proc. Nat. Acad. Sci. 39(10), 1095–1100 (1953)
26. Wei, C.Y., Hong, Y.T., Lu, C.J.: Online reinforcement learning in stochastic games. In: Proceedings of the 31st International Conference on Neural Information Processing Systems, pp. 4994–5004 (2017)
27. Zinkevich, M., Greenwald, A., Littman, M.: Cyclic equilibria in Markov games. Adv. Neural Inf. Proc. Syst. 18, 1641 (2006)

Acknowledgements

We thank the anonymous reviewers for their helpful comments. This work is supported in part by NSF grant 2030859 to the CRA for the CIFellows Project, ONR award N00014-20-1-2115, DARPA Assured Autonomy award, NSF award CCF 1723567 and ARO award W911NF-20-1-0080.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this paper

Cite this paper

Jothimurugan, K., Bansal, S., Bastani, O., Alur, R. (2022). Specification-Guided Learning of Nash Equilibria with High Social Welfare. In: Shoham, S., Vizel, Y. (eds) Computer Aided Verification. CAV 2022. Lecture Notes in Computer Science, vol 13372. Springer, Cham. https://doi.org/10.1007/978-3-031-13188-2_17

DOI: https://doi.org/10.1007/978-3-031-13188-2_17

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-13187-5

Online ISBN: 978-3-031-13188-2

eBook Packages: Computer Science, Computer Science (R0)