Abstract

The core of this book are deep learning methods and neural networks. This chapter considers deep feed-forward neural (FN) networks. We introduce the generic architecture of deep FN networks, and we discuss universality theorems of FN networks. We present network fitting, back-propagation, embedding layers for categorical variables and insurance-specific issues such as the balance property in network fitting, as well as network ensembling to reduce model uncertainty. This chapter is complemented by many examples on non-life insurance pricing, but also on mortality modeling, as well as tools that help to explain deep FN network regression results.

You have full access to this open access chapter, Download chapter PDF

In the sequel, we introduce deep learning models. In this chapter these deep learning models will be based on fully-connected feed-forward neural networks. We present these networks as an extension of GLMs. These networks perform feature engineering themselves. We discuss how networks achieve this, and we explain how networks are used for predictive modeling. There is a vastly growing literature on deep learning with networks, the classical reference is the book of Goodfellow et al. [166], but also the numerous tutorials around the open-source deep learning libraries TensorFlow [2], Keras [77] or PyTorch [296] give an excellent overview of the state-of-the-art in this field.

7.1 Deep Learning and Representation Learning

In Chap. 5 on GLMs, we have been modeling the mean structure of the responses Y , given features x, by the following regression function, see (5.6),

The crucial assumption has been that the regression function (7.1) provides a reasonable functional description of the expected value \({\mathbb E}_{\theta (\boldsymbol {x})}[Y]\) of datum (Y, x). As described in Sect. 5.2.2, this typically requires manual feature engineering of x, bringing feature information into the right structural form.

In contrast to manual feature engineering, deep learning aims at performing an automated feature engineering within the statistical model by massaging information through different transformations. Deep learning uses a finite sequence of functions (z (m))1≤m≤d, called layers,

of (fixed) dimensions \(q_{m} \in {\mathbb N}\), 1 ≤ m ≤ d, and initialization q 0 = q being the dimension of the (raw) feature information \(\boldsymbol {x}\in \mathcal {X} \subset \{1\} \times {\mathbb R}^q\). Each of these layers presents a new representation of the features, that is, after layer m we have a q m-dimensional representation of the raw feature \(\boldsymbol {x} \in \mathcal {X}\)

Note that the first component is always identically equal to 1. For this reason we call the representation \(\boldsymbol {z}^{(m:1)}(\boldsymbol {x}) \in \{1\}\times {\mathbb R}^{q_m}\) of x to be q m-dimensional.

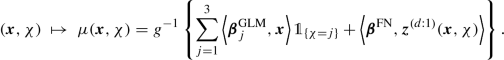

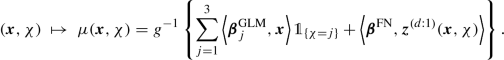

Deep learning now assumes that we have \(d \in {\mathbb N}\) appropriate transformations (layers) z (m), 1 ≤ m ≤ d, such that z (d:1)(x) provides a suitable q d-dimensional representation of the raw feature \(\boldsymbol {x} \in \mathcal {X}\), that then enters a GLM

with link function \(g:\mathcal {M}\to {\mathbb R}\) and regression parameter \(\boldsymbol {\beta } \in {\mathbb R}^{q_d+1}\). This regression architecture is called a feed-forward network of depth \(d \in {\mathbb N}\) because information x is processed in a directed acyclic (feed-forward) path through the d layers z (1), …, z (d) before entering the final GLM.

Each layer z (m) involves parameters. Successful deep learning simultaneously fits these parameters as well as the regression parameter β to the available learning data \(\mathcal {L}\) so that we obtain an optimal predictive model on the test data \(\mathcal {T}\). That is, the learned model should optimally generalize to unseen data, we refer to Chap. 4 on predictive modeling. Thus, the process of optimal representation learning is also part of the model fitting procedure. In contrast to GLMs, the resulting log-likelihood functions are non-concave in their parameters because, typically, each layer involves non-linear transformations. This makes model fitting a challenge. State-of-the-art model fitting in deep learning uses variants of the gradient descent algorithm which we have already met in Sect. 6.2.4.

Remark 7.1. Representation learning x↦z (d:1)(x) is closely related to Mercer’s kernel [272]. If we have a portfolio with features x 1, …, x n, we obtain a Mercer’s kernel by considering the matrix

In many regression problems it can be shown that one can equivalently work with the design matrix  or with Mercer’s kernel \(\mathbf {K} \in {\mathbb R}^{n\times n}\). Mercer’s kernel does not require the full knowledge of the learned representations z

(d:1)(x

i), but it suffices to know the discrepancies between z

(d:1)(x

i) and z

(d:1)(x

j) measured by the scalar products K(x

i, x

j). This is also closely related to the cosine similarity in word embeddings, see (10.11). This approach then results in replacing the search for an optimal representation learning by a search of the optimal Mercer’s kernel for the given data; this is called the kernel trick in machine learning.

or with Mercer’s kernel \(\mathbf {K} \in {\mathbb R}^{n\times n}\). Mercer’s kernel does not require the full knowledge of the learned representations z

(d:1)(x

i), but it suffices to know the discrepancies between z

(d:1)(x

i) and z

(d:1)(x

j) measured by the scalar products K(x

i, x

j). This is also closely related to the cosine similarity in word embeddings, see (10.11). This approach then results in replacing the search for an optimal representation learning by a search of the optimal Mercer’s kernel for the given data; this is called the kernel trick in machine learning.

7.2 Generic Feed-Forward Neural Networks

Feed-forward neural (FN) networks use special layers z (m) in (7.2)–(7.3), whose components are called neurons. This is discussed and studied in detail in this section.

7.2.1 Construction of Feed-Forward Neural Networks

FN networks are regression functions of type (7.3) where each neuron \(z_j^{(m)}\), 1 ≤ j ≤ q

m, of the layers  , 1 ≤ m ≤ d, has the structure of a GLM; the first component \(z_0^{(m)}=1\) always plays the role of the intercept and does not need any modeling.

, 1 ≤ m ≤ d, has the structure of a GLM; the first component \(z_0^{(m)}=1\) always plays the role of the intercept and does not need any modeling.

A first important choice is the activation function \(\phi : {\mathbb R} \to {\mathbb R}\) which plays the role of the inverse link function g −1. To perform non-linear representation learning, this activation function should be non-linear, too. The most popular choices of activation functions are listed in Table 7.1.

The first three examples in Table 7.1 are smooth functions with simple derivatives, see the last column of Table 7.1. Having simple derivatives is an advantage in gradient descent algorithms for model fitting. The derivative of the ReLU activation function for x ≠ 0 is given by the step function activation, and in 0 one typically considers a sub-gradient. We briefly comment on these activation functions.

-

We are mainly going to use the hyperbolic tangent activation function

$$\displaystyle \begin{aligned} x~\mapsto~\tanh (x)~=~\frac{e^{x}-e^{-x}}{e^{x}+e^{-x}} ~=~ 2\left(1+e^{-2x}\right)^{-1}-1 ~\in~ (-1,1). \end{aligned}$$Figure 7.1 illustrates the hyperbolic tangent activation function.

Fig. 7.1 The hyperbolic tangent activation function is anti-symmetric w.r.t. the origin with range (−1, 1). This anti-symmetry and boundedness is an advantage in fitting deep FN network architectures. For this reason we usually prefer the hyperbolic tangent over other activation functions.

-

The sigmoid activation function corresponds to the logistic function that was used in the Bernoulli and the categorical EFs, see Sects. 2.1.2 and 5.7. The sigmoid activation function can be obtained from the hyperbolic tangent activation function by setting \(\phi (x)=(\tanh (x/2)+1)/2\).

-

The step function activation is not really used in applications. However, it allows for nice interpretations, and it links FN networks to the theory of regression and classification trees (CARTs); see Breiman et al. [54] for CARTs.

-

The exponential activation function is a nice differentiable choice whenever the range should be one-sided bounded.

-

The ReLU activation function is also called hinge function or ramp function. This is the preferred choice in the machine learning community. However, typically, we will not use it because in our experience it is less robust in fitting compared to the hyperbolic tangent activation function. This may be for two reasons, firstly, the ReLU activation is unbounded, and secondly, it is identically equal to zero for x < 0, which implies that there is no sensitivity in negative choices of x.

A FN layer with activation function ϕ is a mapping

having neurons for 1 ≤ j ≤ q m

with given network weights \(\boldsymbol {w}^{(m)}_j=(w^{(m)}_{l,j})_{0\le l \le q_{m-1}} \in {\mathbb R}^{q_{m-1}+1}\).

Interpretation

Every neuron \(\boldsymbol {z}\mapsto z_j^{(m)}(\boldsymbol {z})\) describes a GLM regression function with link function ϕ −1 and regression parameter \(\boldsymbol {w}^{(m)}_j \in {\mathbb R}^{q_{m-1}+1}\) for features \(\boldsymbol {z} \in \{1\}\times {\mathbb R}^{q_{m-1}}\). These GLM regression functions can be interpreted as data compression, i.e., in each neuron the q m−1-dimensional feature z is projected to a real number \(\langle \boldsymbol {w}^{(m)}_j, \boldsymbol {z} \rangle \in {\mathbb R}\) which is then (non-linearly) activated by ϕ. Since this leads to a substantial loss of information, we perform this procedure of data compression q m times in FN layer z (m), so that each neuron in \((z_j^{(m)}(\boldsymbol {z}))_{1\le j \le q_m}\) represents a different projection of input z. Choosing suitable weights \(\boldsymbol {w}^{(m)}_j\) will allow us to extract the crucial feature information from z to receive good explanatory variables for the regression task at hand.

A FN network of depth \(d \in {\mathbb N}\) is obtained by composing d FN layers z (1), …, z (d) to receive the mapping

Choosing a strictly monotone and smooth link function g and a regression parameter \(\boldsymbol {\beta } \in {\mathbb R}^{q_d+1}\) we receive the FN network regression function

This FN network regression function (7.8) has a network parameter

of dimension

of dimension

In Fig. 7.2 we illustrate a FN network of depth d = 3, FN layers of dimensions (q 1, q 2, q 3) = (20, 15, 10) and input dimension q 0 = 40.Footnote 1 This gives us a network parameter \(\boldsymbol {\vartheta } \in {\mathbb R}^r\) of dimension r = 1′306. On the left-hand side we have the raw features \(\boldsymbol {x} \in \mathcal {X}\subset \{1\}\times {\mathbb R}^{q_0}\), these are processed through the three FN layers, where the black circles illustrate the neurons \(z_j^{(m)}\). The third FN layer z (3) has dimension q 3 = 10 providing the learned representation \(\boldsymbol {z}^{(3:1)}(\boldsymbol {x})\in \{1\}\times {\mathbb R}^{q_3}\) of x. This is used in the final GLM step (7.8) in the green box of Fig. 7.2.

Remarks 7.2.

-

One distinguishes between FN networks of depth d = 1, called shallow networks, and FN networks of depth d > 1, called deep networks. In this sense, deep learning means that we learn suitable feature representations through multiple FN layers d > 1. We come back to this in Sect. 7.2.2, below. Remark that some people would only call a network deep if d ≫ 1, here d > 1 will be chosen for the definition of deep (which is also a precise definition).

-

There are two ways of receiving a GLM. If we have a (trivial) FN network of depth d = 0, this naturally corresponds to a GLM, see Fig. 7.2. In that case, one works with the original features \(\boldsymbol {x} \in \mathcal {X}\) in (7.8). The second way of receiving a GLM is given by choosing the identity function as activation function ϕ(x) = x. This implies that x↦z (d:1)(x) = A x is a linear function for some matrix \(A \in {\mathbb R}^{(q_d+1) \times (q+1)}\) and, henceforth, we receive a GLM.

-

Under the above interpretation of the representation learning structure (7.7), we may also give a different intuition for the FN layers. Typically, we expect that the first FN layers decompose feature information x into bits and pieces, which are then recomposed in a suitable way for the prediction task. In this sense, we typically choose a larger dimension for the early FN layers otherwise we may lose too much information already from the very beginning.

-

The neural network introduced in (7.7) is called FN network because the signals propagate from one layer to the next (directed acyclic graph). If the network has loops it is called a recurrent neural (RN) network. RN networks have been applied very successfully in image and speech recognition, for instance, long short-term memory (LSTM) networks are very useful for time-series analysis. We study RN networks in Chap. 8, below. A third type of neural networks are convolutional neural (CN) networks which are very successfully applied to image recognition because they are capable to detect similar structures at different places in images, i.e., CN networks learn local representations. We will discuss CN network architectures in Chap. 9, below.

-

The generic FN network architecture (7.8) can be complemented by drop-out layers, normalization layers, skip connections, embedding layers, etc. Such layers are special purpose layers, for instance, taking care of over-fitting. We introduce and discuss these below.

-

The regression function (7.8) has a one-dimensional output for regression modeling. Of course, categorical classification can be done completely analogously by choosing a link function g suitable for classification, see Sect. 5.7. A similar approach also works if, for instance, we want to model simultaneously the mean and the dispersion of the data with a two-dimensional output function g −1.

7.2.2 Universality Theorems

The use of FN networks for representation learning is motivated by the so-called universality theorems which say that any compactly supported continuous (regression) function can be approximated arbitrarily well by a suitably large FN network. As such, we can understand the FN network framework as an approximation tool which, of course, is useful far beyond statistical modeling. In Chapter 12 we give some proofs of selected universality statements to illustrate the flavor of such results. In particular, Cybenko [86], Hornik et al. [192], Hornik [191], Leshno et al. [247], Park–Sandberg [293, 294], Petrushev [302] and Isenbeck–Rüschendorf [198] have shown (under mild conditions on the activation function) that shallow FN networks can approximate any compactly supported continuous function arbitrarily well (in supremum norm or in L 2-norm), if we allow for an arbitrary number of neurons \(q_1\in {\mathbb N}\) in the single FN layer. Roughly speaking, such a result for shallow FN networks holds true if and only if the chosen activation function is non-polynomial, see Leshno et al. [247]. Such results are proved either by algebraic methods of Stone–Weierstrass type or by Wiener–Tauberian denseness type arguments. Moreover, approximation results are studied in Barron [25, 26], Yukich et al. [399], Makavoz [262], Pinkus [303] and Döhler–Rüschendorf [108].

The above stated universality theorems say that shallow FN networks are sufficient from an approximation point of view. Nevertheless, we will mainly use deep (multiple layers) FN networks, below. These have better convergence properties to given function classes because they more easily promote interactions in feature components compared to shallow ones. Such questions have been studied, e.g., by Elbrächter et al. [120], Kidger–Lyons [215], Lu et al. [260] or Cheridito et al. [75]. For instance, Elbrächter et al. [120] compare finite-depth wide networks to finite-width deep networks (under the choice of the ReLU activation function), and they conclude that for many function classes deep networks lead to exponential approximation rates, whereas shallow networks only provide polynomial approximation rates at the same number of network parameters. This motivates to consider sufficiently deep FN networks for representation learning because these typically have a better approximation capacity compared to shallow ones.

We motivate this by two simple examples. For this motivation we use the step function activation  . If we have the step function activation, each neuron partitions \({\mathbb R}^{q_{m-1}}\) along a hyperplane, i.e.,

. If we have the step function activation, each neuron partitions \({\mathbb R}^{q_{m-1}}\) along a hyperplane, i.e.,

For a shallow FN network we can study the question of the maximal complexity of the resulting partition of the feature space \(\mathcal {X} \subset \{1\}\times {\mathbb R}^{q_0}\) when considering q 1 neurons (7.9) in the single FN layer z (1). Zaslavsky [400] proved that q 1 hyperplanes can partition the Euclidean space \({\mathbb R}^{q_0}\) in at most

This number (7.10) can be seen as a maximal upper complexity bound for shallow FN networks with step function activation. It grows exponentially for q 1 ≤ q 0, and it slows down to a polynomial growth for q 1 > q 0. Thus, the complexity of shallow FN networks grows comparably slow as the width q 1 of the network exceeds q 0, and therefore we often need a huge network to receive a good approximation.

This result (7.10) should be contrasted to Theorem 4 in Montúfar et al. [280] who give a lower bound on the complexity of regression functions of deep FN networks (under the ReLU activation function). Assume q m ≥ q 0 for all 1 ≤ m ≤ d. The maximal complexity is bounded below by

If we choose as an example a FN network with fixed width q m = 4 for all m ≥ 1 and an input of dimension q 0 = 2, we receive from (7.11) a lower bound of

Thus, we have an exponential growth in depth d →∞. This contrasts the polynomial complexity growth (7.10) of shallow FN networks.

Example 7.3 (Shallow vs. Deep Networks: Partitions). We give a second more explicit example that compares shallow and deep FN networks. Choose q 0 = 2 and assume we want to describe a regression function

If we think of a tool box of basis functions to build regression function μ we may want to choose indicator functions x↦χ A(x) ∈{0, 1} for arbitrary rectangles \(A=[x^-_{1},x^+_{1})\times [x^-_{2},x^+_{2}) \subset {\mathbb R}^2\). We show that we can easily construct such indicator functions χ A(x) for given rectangles \(A \subset {\mathbb R}^2\) with FN networks of depth d = 2, but not with shallow FN networks.

For illustrative purposes, we fix a square \(A=[-1/2,1/2)\times [-1/2,1/2) \subset {\mathbb R}^2\), and we want to construct χ A(x) with a network of depth d = 2. This indicator function χ A is illustrated in Fig. 7.3.

We choose the step function activation for ϕ and a first FN layer with q 1 = 4 neurons

This FN layer has a network parameter, see also (7.9),

having dimension q 1(q 0 + 1) = 12. For the second FN layer with q 2 = 4 neurons we choose the step function activation and

This FN layer has a network parameter

having dimension q

2(q

1 + 1) = 20. For the output layer we choose the identity link g(x) = x, and the regression parameter  . As a result, we obtain

. As a result, we obtain

That is, this network of depth d = 2, number of neurons (q 1, q 2) = (4, 4), step function activation and identity link can perfectly replicate the indicator function for the square A = [−1∕2, 1∕2) × [−1∕2, 1∕2), see Fig. 7.3. This network has r = 37 parameters.

We now consider a shallow FN network with q 1 neurons. The resulting regression function with identity link is given by

where we have used the step function activation  . As in (7.9), each of these neurons leads to a partition of the space \({\mathbb R}^{2}\) with a straight line. Importantly these straight lines go across the entire feature space, and, therefore, we cannot exactly construct the indicator function of Fig. 7.3 with a shallow FN network. This can nicely be seen in Fig. 7.4 (lhs), where we consider a shallow FN network with q

1 = 4 neurons, weights (7.12), and

. As in (7.9), each of these neurons leads to a partition of the space \({\mathbb R}^{2}\) with a straight line. Importantly these straight lines go across the entire feature space, and, therefore, we cannot exactly construct the indicator function of Fig. 7.3 with a shallow FN network. This can nicely be seen in Fig. 7.4 (lhs), where we consider a shallow FN network with q

1 = 4 neurons, weights (7.12), and  .

.

However, from the universality theorems we know that shallow FN networks can approximate any compactly supported (continuous) function arbitrarily well for sufficiently large q 1. In this example we can introduce additional neurons and let the resulting hyperplanes rotate around the origin. In Fig. 7.4 (middle, rhs) we show this for q 1 = 8 and q 1 = 64 neurons. We observe that this allows us to approximate a circle, see Fig. 7.4 (rhs), and having circles of different sizes at different locations will allow us to approximate the square A considered above. However, of course, this is a much less efficient way compared to the deep FN network (7.13).

Intuitively speaking, shallow FN networks act like additions where we add more and more separating hyperplanes for q 1 →∞ (superposition of basis functions). In contrast to that, going deep allows us to not only use additions but to also use multiplications (composition of basis functions). This is the reason, why we can easily construct the indicator function χ A in the deep case (where we multiply zero’s along the boundary of A), but not in the shallow case. \(\blacksquare \)

7.2.3 Gradient Descent Methods

We describe gradient descent methods in this section. These are used to fit FN networks. Gradient descent algorithms have already been used in Sect. 6.2.4 for fitting LASSO regularized regression models. We will give the full methodological part here, without relying on Sect. 6.2.4.

7.2.3.1 Plain Vanilla Gradient Descent Algorithm

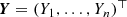

Assume we have independent instances (Y i, x i), 1 ≤ i ≤ n, that follow the same member of the EDF. We choose a regression function

for a strictly monotone and smooth link function g, and a FN network z

(d:1) with network parameter \(\boldsymbol {\vartheta } \in {\mathbb R}^r\). We assume that the chosen activation function ϕ is differentiable. We highlight in the notation that the mean functional μ

𝜗(⋅) depends on the network parameter 𝜗. The canonical parameter of the response Y

i is given by θ(x

i) = h(μ

𝜗(x

i)) ∈ Θ, where h = (κ′)−1 is the canonical link and κ the cumulant function of the chosen member of the EDF. This gives us (under constant dispersion φ) the log-likelihood function, for given data  ,

,

The deviance loss function in this model is given by, see (4.9) and (4.8),

The MLE of 𝜗 is found by either maximizing the log-likelihood function or by minimizing the deviance loss function in 𝜗. This problem cannot be solved in general because of complexity. Typically, the deviance loss function is non-convex in 𝜗 and it may have many local minimums. This is one of the reasons, why we are less ambitious here, and why we just try to find a network parameter \(\widehat {\boldsymbol {\vartheta }}\) which provides a “small” deviance loss \({\mathfrak D}(\boldsymbol {Y}, \widehat {\boldsymbol {\vartheta }})\) for the given data Y . We discuss this further, below, in fact, this is a crucial point in FN network fitting that is related to in-sample over-fitting and, therefore, this point will require a broader discussion.

For the moment, we just try to find a network parameter \(\widehat {\boldsymbol {\vartheta }}\) that provides a small deviance loss \({\mathfrak D}(\boldsymbol {Y}, \widehat {\boldsymbol {\vartheta }})\) for the given data Y . Gradient descent algorithms suggest that we try to step-wise locally improve our current position by changing the network parameter into the direction of the maximal local decrease of the deviance loss function. By assumption, our deviance loss function is differentiable in 𝜗. This allows us to consider the following first order Taylor expansion in 𝜗

This shows that the locally optimal change \(\boldsymbol {\vartheta } \mapsto \widetilde {\boldsymbol {\vartheta }}\) points into the opposite direction of the gradient of the deviance loss function. This motivates the following gradient descent step.

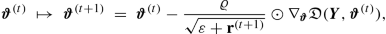

Assume that at algorithmic time \(t \in {\mathbb N}\) we have a network parameter \(\boldsymbol {\vartheta }^{(t)} \in {\mathbb R}^r\). Choose a suitable learning rate ϱ t+1 > 0, and consider the gradient descent update

This gradient descent update gives us the new (smaller) deviance loss at algorithmic time t + 1

Under suitably tempered learning rates (ϱ t)t≥1, this algorithm will converge to a local minimum of the deviance loss function as t →∞ (supposed that we do not get trapped in a saddlepoint).

Remarks 7.4. We give a couple of (preliminary) remarks on the gradient descent algorithm (7.15), more explanation, further derivations, and variants of the gradient descent algorithm will be discussed below.

-

In the applications we will early stop the gradient descent algorithm before reaching a local minimum (to prevent from over-fitting). This is going to be discussed in the next paragraphs.

-

Fine-tuning the learning rate (ϱ t)t is important, in particular, there is a trade-off between smaller and bigger learning rates: they need to be sufficiently small so that the first order Taylor expansion is still a valid approximation, and they should be sufficiently big otherwise the convergence of the algorithm will be very slow because it needs many iterations.

-

The gradient descent algorithm is a first order algorithm, and one is tempted to study higher order approximations, e.g., leading to the Newton–Raphson algorithm. Unfortunately, higher order derivatives are computationally not feasible if the size n of the data

and the dimension r of the network parameter 𝜗 are large. In fact, even the calculation of the first order derivatives may be challenging and, therefore, stochastic gradient descent methods are considered below. Nevertheless, it is beneficial to have a notion of a second order term. Momentum-based methods originate from approximating the second order terms, these will be studied in (7.19)–(7.20), below.

and the dimension r of the network parameter 𝜗 are large. In fact, even the calculation of the first order derivatives may be challenging and, therefore, stochastic gradient descent methods are considered below. Nevertheless, it is beneficial to have a notion of a second order term. Momentum-based methods originate from approximating the second order terms, these will be studied in (7.19)–(7.20), below. -

The gradient descent step (7.15) solves an unconstraint local optimization. Similarly to (6.15)–(6.16) we could change the gradient descent algorithm to a constraint optimization problem, e.g., involving a LASSO constraint that can be solved with the generalized projection operator (6.17).

7.2.3.2 Gradient Calculation via Back-Propagation

Fast gradient descent algorithms essentially rely on fast gradient calculations of the deviance loss function. Under the EDF setup we have gradient w.r.t. 𝜗

where the last step uses the variance function V (⋅) of the chosen EDF, we also refer to (5.9). The main difficulty is the calculation of the gradient

w.r.t. the network parameter  , and where each FN layer z

(m) involves the weights \(\mathcal {W}^{(m)}=(\boldsymbol {w}_1^{(m)}, \ldots , \boldsymbol {w}_{q_m}^{(m)}) \in {\mathbb R}^{(q_{m-1}+1)\times q_m}\). The workhorse for these gradient calculations is the back-propagation method of Rumelhart et al. [324]. Basically, the back-propagation method is a clever reparametrization of the problem so that the gradients can be calculated more easily. We therefore modify the weight matrices \(\mathcal {W}^{(m)}\) by dropping the first row containing the intercept parameters \(w_{0,j}^{(m)}\), 1 ≤ j ≤ q

m. Define for 1 ≤ m ≤ d + 1

, and where each FN layer z

(m) involves the weights \(\mathcal {W}^{(m)}=(\boldsymbol {w}_1^{(m)}, \ldots , \boldsymbol {w}_{q_m}^{(m)}) \in {\mathbb R}^{(q_{m-1}+1)\times q_m}\). The workhorse for these gradient calculations is the back-propagation method of Rumelhart et al. [324]. Basically, the back-propagation method is a clever reparametrization of the problem so that the gradients can be calculated more easily. We therefore modify the weight matrices \(\mathcal {W}^{(m)}\) by dropping the first row containing the intercept parameters \(w_{0,j}^{(m)}\), 1 ≤ j ≤ q

m. Define for 1 ≤ m ≤ d + 1

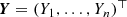

where \(w_{j_{m-1},j_{m}}^{(m)}\) denotes component j m−1 of \(\boldsymbol {w}_{j_m}^{(m)}\), and where we set q d+1 = 1 (output dimension) and \(w_{j_d,1}^{(d+1)}=\beta _{j_d}\) for 0 ≤ j d ≤ q d.

Proposition 7.5 (Back-Propagation for the Hyperbolic Tangent Activation). Choose a FN network of depth \(d\in {\mathbb N}\) and with hyperbolic tangent activation function \(\phi (x)=\tanh (x)\).

-

Define recursively

-

initialize q d+1 = 1 and \(\boldsymbol {\delta }^{(d+1)}(\boldsymbol {x})=\mathbf {1}\in {\mathbb R}^{q_{d+1}}\);

-

iterate for d ≥ m ≥ 1

$$\displaystyle \begin{aligned} \boldsymbol{\delta}^{(m)}(\boldsymbol{x})~=~ \mbox{diag}\left(1-\left(z^{(m:1)}_{j_m}(\boldsymbol{x})\right)^2\right)_{1\le j_m\le q_m} \mathcal{W}^{(m+1)}_{(-0)}\, \boldsymbol{\delta}^{(m+1)}(\boldsymbol{x}) ~\in ~{\mathbb R}^{q_m}. \end{aligned}$$

-

-

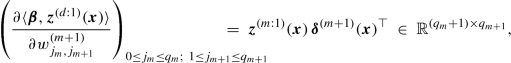

We obtain for 0 ≤ m ≤ d

where \(\boldsymbol {z}^{(0:1)}(\boldsymbol {x})=\boldsymbol {x} \in {\mathbb R}^{q_0+1}\) and \(\boldsymbol {w}_1^{(d+1)}=\boldsymbol {\beta } \in {\mathbb R}^{q_d+1}\).

Proof of Proposition 7.5. Choose 1 ≤ m ≤ d and define for the neurons 1 ≤ j m ≤ q m the variables

The learned representation in the m-th FN layer is obtained by activating these variables

For the output we define

The main idea is to calculate the derivatives of 〈β, z (d:1)(x)〉 w.r.t. these new variables \(\zeta _j^{(m)}(\boldsymbol {x})\).

Initialization for m = d + 1

This provides for m = d + 1 and 1 ≤ j d+1 ≤ q d+1 = 1

Recursion for m < d + 1

Next, we calculate the derivatives w.r.t. \(\zeta _{j_d}^{(d)}(\boldsymbol {x})\), for m = d and 1 ≤ j d ≤ q d. They are given by (note q d+1 = 1)

where we have used \(w_{j_d,1}^{(d+1)}=\beta _{j_d}\) and for the hyperbolic tangent activation function ϕ′ = 1 − ϕ 2. Continuing recursively for d > m ≥ 1 and 1 ≤ j m ≤ q m we obtain

Thus, the vectors  are calculated recursively in d ≥ m ≥ 1 with initialization δ

(d+1)(x) = 1 and the recursion

are calculated recursively in d ≥ m ≥ 1 with initialization δ

(d+1)(x) = 1 and the recursion

Finally, we need to show how these derivatives are related to the original derivatives in the gradient descent method. We have for 0 ≤ j d ≤ q d and j d+1 = 1

For 1 ≤ m < d, and 0 ≤ j m ≤ q m and 1 ≤ j m+1 ≤ q m+1 we have

For m = 0, and 0 ≤ l ≤ q 0 and 1 ≤ j 1 ≤ q 1 we have

This completes the proof of Proposition 7.5. □

Remark 7.6. Proposition 7.5 gives the back-propagation method for the hyperbolic tangent activation function which has derivative ϕ′ = 1 − ϕ 2. This becomes visible in the definition of δ (m)(x) where we consider the diagonal matrix

For a general differentiable activation function ϕ this needs to be replaced by, see (7.17),

In the case of the sigmoid activation function this gives us, see also Table 7.1,

_______________________________________________________________

Plain vanilla gradient descent algorithm for FN networks

________________________________________________________________________________________________

-

1.

Choose an initial network parameter \(\boldsymbol {\vartheta }^{(0)} \in {\mathbb R}^r\).

-

2.

Iterate for t ≥ 0 until a stopping criterion is met:

-

(a)

Calculate the gradient \(\nabla _{\boldsymbol {\vartheta }}{\mathfrak D}(\boldsymbol {Y}, \boldsymbol {\vartheta })\) in network parameter 𝜗 = 𝜗 (t) using (7.16) and the back-propagation method of Proposition 7.5 (for the hyperbolic tangent activation function).

-

(b)

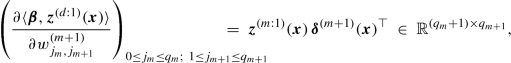

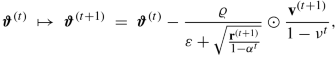

Make the gradient descent step for a suitable learning rate ϱ t+1 > 0

$$\displaystyle \begin{aligned} \boldsymbol{\vartheta}^{(t)} ~\mapsto ~\boldsymbol{\vartheta}^{(t+1)} =\boldsymbol{\vartheta}^{(t)}-\varrho_{t+1} \nabla_{\boldsymbol{\vartheta}}{\mathfrak D}(\boldsymbol{Y}, \boldsymbol{\vartheta}^{(t)}). \end{aligned}$$

-

(a)

__________________________________________________________________

Remark 7.7. The initialization \(\boldsymbol {\vartheta }^{(0)} \in {\mathbb R}^r\) of the gradient descent algorithm needs some care. A FN network has many symmetries, for instance, we can permute neurons within a FN layer and we receive the same predictive model. For this reason, the initial network weights \(\mathcal {W}^{(m)}=(\boldsymbol {w}_1^{(m)}, \ldots , \boldsymbol {w}_{q_m}^{(m)}) \in {\mathbb R}^{(q_{m-1}+1)\times q_m}\), 1 ≤ m ≤ d, should not be chosen with identical components because this will result in a saddlepoint of the corresponding objective function, and gradient descent will not work. For this reason, these weights are initialized randomly either using a uniform or a Gaussian distribution. The former is related to the glorot_uniform initializer in keras,Footnote 2 see (16) in Glorot–Bengio [160]. This initializer scales the support of the uniform distribution with the sizes of the FN layers that are connected by the corresponding weights \(\boldsymbol {w}_j^{(m)}\).

For the output parameter we usually set as initial value  , where \(\widehat {\beta }_0^{(0)}\) is the MLE in the corresponding null model (not considering any features) and transformed to the chosen link g. This choice implies that the gradient descent algorithm starts in the null model, and any decrease in deviance loss can be seen as an improved in-sample loss of using the FN network regression structure over the null model.

, where \(\widehat {\beta }_0^{(0)}\) is the MLE in the corresponding null model (not considering any features) and transformed to the chosen link g. This choice implies that the gradient descent algorithm starts in the null model, and any decrease in deviance loss can be seen as an improved in-sample loss of using the FN network regression structure over the null model.

7.2.3.3 Stochastic Gradient Descent

The gradient in (7.16) has two parts. We have a vector

and we have a matrix

The gradient of the deviance loss function is obtained by the matrix multiplication

Matrix multiplication can be very slow in numerical implementations if the sample size n is large. For this reason, one typically uses the stochastic gradient descent (SGD) method that does not consider the entire data  simultaneously.

simultaneously.

For the SGD method one chooses a fixed batch size \(b \in {\mathbb N}\), and one randomly partitions the entire data Y into (mini-)batches Y 1, …, Y ⌊n∕b⌋ of approximately the same size b (up to cardinality). Each gradient descent update

is then only based on the observations Y s in the corresponding batch 1 ≤ s ≤⌊n∕b⌋. Typically, one sequentially visits all batches, and screening each batch once is called an epoch. Thus, if we run the SGD algorithm over K epochs on batches of size b ≤ n, then we perform K⌊n∕b⌋ gradient descent steps.

Choosing batches of size b reduces the complexity of the matrix multiplication from n to b, and, henceforth, leads to much faster run times in one gradient descent step. On the other hand, batches should have a minimal size so that the gradient descent updates are not too erratic, i.e., if the batches are too small, the randomness in the data may point too often into a (completely) wrong direction for the optimal gradient descent step. For this reason, optimal batch sizes should be chosen carefully. For instance, if we study a low frequency claims count problem, say, with an expected frequency of λ = 10%, we can determine confidence bounds for parameter estimation. This will provide an estimate of a minimal batch size b for a reliable parameter estimate.

To have a few erratic steps in SGD, however, can also be beneficial, as long as there are not too many of those. Sometimes, the algorithm gets trapped in saddlepoints or in flat areas of the objective function (vanishing gradient problem). If this is the case, an erratic step may be beneficial because it may perturb the algorithm out of its bottleneck. In fact, often SGD has a better performance than the plain vanilla gradient descent algorithm that is based on the entire data Y because of these noisy contributions.

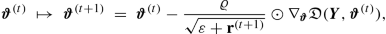

7.2.3.4 Momentum-Based Gradient Descent Methods

The gradient descent method only considers a first order Taylor expansion and one is tempted to consider higher order terms to improve the approximation. For instance, Newton’s method uses a second order Taylor term by updating

In many practical applications this calculation is not feasible as the Hessian \(\nabla ^2_{\boldsymbol {\vartheta }}{\mathfrak D}(\boldsymbol {Y}, \boldsymbol {\vartheta }^{(t)})\) cannot be calculated in a reasonable amount of time. Another (simple) way of considering the changes in the gradients is the momentum-based gradient descent method of Rumelhart et al. [324]. This is inspired by mechanics in physics and it is achieved by considering the gradients over several iterations of the algorithm (with exponentially decaying weights). Choose a momentum coefficient ν ∈ [0, 1) and define the initial speed v (0) = 0 \(\in {\mathbb R}^r\).

Replace the gradient descent update (7.15) by

For ν = 0 we have the plain vanilla gradient descent method, for ν > 0 we also memorize the previous gradients (with exponentially decaying weights). Typically this leads to better convergence properties.

Nesterov [284] has noticed that for convex functions the gradient descent updates may have a zig-zag behavior. Therefore, he proposed the so-called Nesterov-accelerated version

Thus, the calculation of the momentum v (t+1) uses a look-ahead 𝜗 (t) + ν v (t) in the gradient calculation (anticipating part of the next step). This provides for the update (7.21) the following equivalent versions, under reparametrization \(\widetilde {\boldsymbol {\vartheta }}^{(t)}= \boldsymbol {\vartheta }^{(t)}+\nu {\mathbf {v}}^{(t)}\),

For the Nesterov accelerated update we can also study, we use the last line of (7.22),

Compared to (7.19)–(7.20), we just shift the index by 1 in the momentum v (t) in the round brackets of (7.23). The typical way how the Nesterov-acceleration is formulated is, yet, another equivalent formulation, namely, only in terms of 𝜗 (t) and \(\widetilde {\boldsymbol {\vartheta }}^{(t)}\). From the second line of (7.22) and (7.21) we have the updates

Typically, one chooses the momentum coefficient ν in (7.24) time-dependent by setting ν t = t∕(t + 3).

In our applications we will use the R interface to the keras library [77]. This library has a couple of standard momentum-based gradient descent methods implemented which use pre-defined learning rates and momentum coefficients. In our analysis we are mainly relying on the variants rmsprop and the Nesterov-accelerated version of adam, called nadam. Therefore, we briefly describe these three variants, and for more information we refer to Sections 8.3 and 8.5 in Goodfellow et al. [166].

Predefined Gradient Descent Methods

-

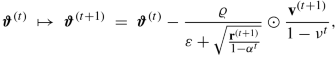

rmsprop stands for ‘root mean square propagation’, and its origin can be found in a lecture of Hinton et al. [187]. Denote by ⊙ the Hadamard product that computes the component-wise products of two matrices. Choose a weight α ∈ (0, 1) and calculate the accumulated squared gradients, set \({\mathbf r}^{(0)}=0 \in {\mathbb R}^r\),

$$\displaystyle \begin{aligned} {\mathbf r}^{(t)} ~\mapsto ~{\mathbf r}^{(t+1)}~=~\alpha {\mathbf r}^{(t)} + (1-\alpha)\left( \nabla_{\boldsymbol{\vartheta}}{\mathfrak D}(\boldsymbol{Y}, \boldsymbol{\vartheta}^{(t)}) \odot \nabla_{\boldsymbol{\vartheta}}{\mathfrak D}(\boldsymbol{Y}, \boldsymbol{\vartheta}^{(t)})\right) ~\in ~{\mathbb R}^r. \end{aligned} $$The sequence (r (t))t≥1 memorizes the (squared) magnitudes of the components of the gradients \(\nabla _{\boldsymbol {\vartheta }}{\mathfrak D}(\boldsymbol {Y}, \boldsymbol {\vartheta }^{(t)})\), t ≥ 1. This is done individually for each component because we may have directional differences in magnitudes (and momentum). In contrast to (7.19), r (t) does not model the speed, but rather an inverse weight. This then motivates the gradient descent update

where the square-root is taken component-wise, for a global decay rate ϱ > 0, and for a small positive constant ε > 0 to ensure that everything is well-defined.

-

adam stands for ‘adaptive moment’ estimation, and it has been proposed by Kingma–Ba [216]. The momentum is determined by the first two moments in adam, namely, we set \({\mathbf v}^{(0)}={\mathbf r}^{(0)}=0\in {\mathbb R}^r \) and we consider

$$\displaystyle \begin{aligned} \begin{array}{rcl}{} {\mathbf v}^{(t)} & \mapsto &\displaystyle {\mathbf v}^{(t+1)}~=~\nu {\mathbf v}^{(t)} + (1-\nu) \nabla_{\boldsymbol{\vartheta}}{\mathfrak D}(\boldsymbol{Y}, \boldsymbol{\vartheta}^{(t)}), \end{array} \end{aligned} $$(7.25)$$\displaystyle \begin{aligned} \begin{array}{rcl} {\mathbf r}^{(t)} & \mapsto &\displaystyle {\mathbf r}^{(t+1)}~=~\alpha {\mathbf r}^{(t)} + (1-\alpha)\left( \nabla_{\boldsymbol{\vartheta}}{\mathfrak D}(\boldsymbol{Y}, \boldsymbol{\vartheta}^{(t)}) \odot \nabla_{\boldsymbol{\vartheta}}{\mathfrak D}(\boldsymbol{Y}, \boldsymbol{\vartheta}^{(t)})\right),\qquad {} \end{array} \end{aligned} $$(7.26)for given weights ν, α ∈ (0, 1). Similar to Bayesian credibility theory, v (t) and r (t) are biased because these two processes have been initialized in zero. Therefore, they are rescaled by 1∕(1 − ν t) and 1∕(1 − α t), respectively. This gives us the gradient descent update

where the square-root is taken component-wise, for a global decay rate ϱ > 0, and for a small positive constant ε > 0 to ensure that everything is well-defined.

-

nadam is the Nesterov-accelerated [284] version of adam. Similarly as when going from (7.19)–(7.20) to (7.23), the acceleration is obtained by a shift of 1 in the velocity parameter, thus, consider the Nesterov-accelerated adam update

7.2.3.5 Maximum Likelihood Estimation and Over-fitting

As explained above, we model the mean of the datum (Y, x) by a deep FN network

for a network parameter \(\boldsymbol {\vartheta } \in {\mathbb R}^r\). MLE of this network parameter requires solving for given data Y

In Fig. 7.5 we give a schematic figure of a loss surface \(\boldsymbol {\vartheta } \mapsto {\mathfrak D}(\boldsymbol {Y}, \boldsymbol {\vartheta })\) for a (low-dimensional) example \(\boldsymbol {\vartheta } \in {\mathbb R}^2\). The two plots show the same loss surface from two different angles. This loss surface has three (local) minimums (red color), and the smallest one (global minimum) gives the MLE \(\widehat {\boldsymbol {\vartheta }}^{\text{MLE}}\).

In general, this global minimum cannot be found for more complex network architectures because the loss surface typically has a complicated structure for high-dimensional parameter spaces. Is this a problem in FN network fitting? Not really! We are going to explain why. The universality theorems in Sect. 7.2.2 state that more complex FN networks have an excellent approximation capacity. If we translate this to our statistical modeling problem it means that the observations Y can be approximated arbitrarily well by sufficiently complex FN networks. In particular, for a given complex network architecture, the MLE \(\widehat {\boldsymbol {\vartheta }}^{\text{MLE}}\) will provide the optimal fit of this architecture to the data Y , and, as a result, this network does not only reflect the systematic effects in the data but also the noisy part. This behavior is called (in-sample) over-fitting to the learning data \(\mathcal {L}\). It implies that such statistical models typically have a poor generalization to unseen (out-of-sample) test data \(\mathcal {T}\); this is illustrated by the red color in Fig. 7.6. For this reason, in general, we are not interested in finding the MLE \(\widehat {\boldsymbol {\vartheta }}^{\text{MLE}}\) of 𝜗 in FN network regression modeling, but we would like to find a parameter estimate \(\widehat {\boldsymbol {\vartheta }}\) that (only) extracts the systematic effects from the learning data \(\mathcal {L}\). This is illustrated by the different colors in Figs. 7.5 and 7.6, where we assume: (a) red color provides models with a poor generalization power due to over-fitting, (b) blue color provides models with a poor generalization power, too, because these parametrizations do not explain the systematic effects in the data at all (called under-fitting), and (c) green color gives good parametrizations that explain the systematic effects in the data and generalize well to unseen data. Thus, the aim is to find parametrizations that are in the green area of Fig. 7.5. This green area emphasizes that we lose the notion of uniqueness because there are infinitely many models in the green area that have a comparable generalization power. Next we explain how we can exploit the gradient descent algorithm to make it useful for finding parametrizations in the green area. Remark 7.8. The loss surface considerations in Fig. 7.5 are based on a fixed network architecture. Recent research promotes the so-called Graph HyperNetwork (GHN) that is a (hyper-)network which tries to find the optimal network architecture and its parametrization by an additional network, we refer to Zhang et al. [402] and Knyazev et al. [219].

7.2.3.6 Regularization Through Early Stopping

As stated above, if we run the gradient descent algorithm with properly tempered learning rates it will converge to a local minimum of the loss function, which means that the resulting FN network over-fits to the learning data. For this reason we need to early stop the gradient descent algorithm beforehand. Coming back to Fig. 7.5, typically, we start the gradient descent algorithm somewhere in the blue area of the loss surface (supposed that the red area is a sparse set on the loss surface). Visually speaking, the gradient descent algorithm then walks down the valley (green, yellow and red area) by exploiting locally optimal steps. Since at the early stage of the algorithm the systematic effects play a dominant role over the noisy part, the gradient descent algorithm learns these systematic effects at this first stage (blue area in Fig. 7.5). When the algorithm arrives at the green area the noisy part in the data starts to increasingly influence the model calibration (gradient descent steps), and, henceforth, at this stage the algorithm should be stopped, and the learned parameter should be selected for predictive modeling. This early stopping is an implicit way of regularization, because it implies that we stop the parameter fitting before the parameters start to learn very individual features of the (noisy) data (and take extreme values).

This early stopping point is determined by doing an out-of-sample analysis. This requires the learning data \(\mathcal {L}\) to be further split into training data \(\mathcal {U}\) and validation data \(\mathcal {V}\). The training data \(\mathcal {U}\) is used for gradient descent parameter learning, and the validation data \(\mathcal {V}\) is used for tracking the over-fitting by an instantaneous (out-of-sample) validation analysis. This partition is illustrated in Fig. 7.7, which also highlights that the validation data \(\mathcal {V}\) is disjoint from the test data \(\mathcal {T}\), the latter only being used in the final step for comparing different statistical models (e.g., a GLM vs. a FN network). That is, model comparison is done in a proper out-of-sample manner on \(\mathcal {T}\), and each of these models is only fit on \(\mathcal {U}\) and \(\mathcal {V}\). Thus, for FN network fitting with early stopping we need a reasonable amount of data that can be split into 3 sufficiently large data sets so that each is suitable for its purpose.

For early stopping we partition the learning data \(\mathcal {L}\) into training data \(\mathcal {U}\) and validation data \(\mathcal {V}\). The plain vanilla gradient descent algorithm can then be changed as follows.

________________________________________________________________________________________________

Plain vanilla gradient descent algorithm with early stopping

________________________________________________________________________________________________

-

1.

Choose an initial network parameter \(\boldsymbol {\vartheta }^{(0)} \in {\mathbb R}^r\).

-

2.

Iterate for t ≥ 0 until the early stopping criterion is met:

-

(a)

Calculate the gradient \(\nabla _{\boldsymbol {\vartheta }}{\mathfrak D}(\mathcal {U}, \boldsymbol {\vartheta })\) in network parameter 𝜗 = 𝜗 (t) on the training data \(\mathcal {U}\) using (7.16) and the back-propagation method of Proposition 7.5 (for the hyperbolic tangent activation function).

-

(b)

Make the gradient descent step for a suitable learning rate ϱ t+1 > 0

$$\displaystyle \begin{aligned} \boldsymbol{\vartheta}^{(t)} ~\mapsto ~\boldsymbol{\vartheta}^{(t+1)} =\boldsymbol{\vartheta}^{(t)}-\varrho_{t+1} \nabla_{\boldsymbol{\vartheta}}{\mathfrak D}(\mathcal{U}, \boldsymbol{\vartheta}^{(t)}). \end{aligned}$$ -

(c)

Calculate the validation loss \({\mathfrak D}(\mathcal {V}, \boldsymbol {\vartheta }^{(t)})\) on the validation data \(\mathcal {V}\).

-

(d)

Stop the algorithm if the validation loss increases, i.e., if

$$\displaystyle \begin{aligned} {\mathfrak D}(\mathcal{V}, \boldsymbol{\vartheta}^{(t)})~>~{\mathfrak D}(\mathcal{V}, \boldsymbol{\vartheta}^{(t-1)}),{} \end{aligned} $$(7.27)and return the learned parameter (estimate) \(\widehat {\boldsymbol {\vartheta }}=\boldsymbol {\vartheta }^{(t-1)}\).

-

(a)

__________________________________________________________________

In applications we use the SGD algorithm that can also have erratic steps because not all random (mini-)batches are necessarily typical representations of the data. In such cases we should use more sophisticated stopping criteria than (7.27), for instance, early stop if the validation loss increases five times in a row.

Figure 7.8 provides an example of the application of the SGD algorithm on training data \(\mathcal {U}\) and validation data \(\mathcal {V}\). The training loss is in blue color and the validation loss in green color. We observe that the validation loss has its minimum after 52 epochs (orange vertical line), and hence the fitting algorithm should be stopped at this point. We give a couple of remarks concerning Fig. 7.8:

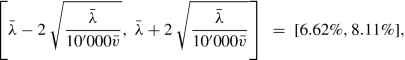

-

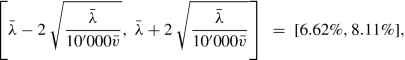

The learning data \(\mathcal {L}\) exactly corresponds to the claims frequency data of Sect. 5.2.4, see also Table 5.2. We take 10% as validation data which gives \(|\mathcal {U}|=549'185\) and \(|\mathcal {V}|=61'021\). For the SGD algorithm we use batches of size 10′000 which implies that one epoch corresponds to ⌊549′185∕10′000⌋ = 54 gradient descent steps. For batches of size 10′000 we expect an approximate estimation precision on an average frequency of \(\bar {\lambda }=7.36\%\) in the Poisson model of

Fig. 7.9 with an average exposure \(\bar {v}=0.5283\) on our learning data, we also refer to Example 3.22.

-

The FN network architecture used in Fig. 7.8 is the one shown in Fig. 7.2 using one-hot encoding for categorical variables, see Sect. 7.3.1, below, and the responses are modeled by a Poisson distribution.

-

The training loss \({\mathfrak D}(\mathcal {U}, \boldsymbol {\vartheta }^{(t)})\), blue curve in Fig. 7.8, is a bit wiggly which comes from the fact that we use a SGD where not every batch leads to the optimal decrease in loss. Remark that the loss figures in the graph correspond to average losses over an entire epoch, i.e., in our case an average over 54 SGD steps. Also remark that the y-scale does not show the Poisson deviance loss: we use the loss figures provided by keras [77] and these figures drop all terms of the deviance loss that are not relevant for parameter estimation.

We close this section with remarks.

Remarks 7.9.

-

We perform early stopping because otherwise a complex FN network would in-sample over-fit to the learning data. At this stage, one could be tempted to choose a smaller network to prevent from over-fitting. In general, this is not a sensible thing to do because the network needs sufficient flexibility to be able to be fitted to the data. That is, we need some redundancy in the model to be able to successfully apply the SGD algorithm, otherwise the algorithm may get trapped in saddlepoints or bottlenecks. Thus, the chosen network architecture should be above the bound of a necessary minimal complexity, and different architectures above this bound will provide similar accuracy (without a clear winner).

-

The chosen network will contain certain elements of randomness, and different runs of the SGD algorithm will provide different solutions. Firstly, the initialization \(\boldsymbol {\vartheta }^{(0)} \in {\mathbb R}^r\) of the algorithm is chosen at random, and since we early stop the algorithm and because we do not have a unique optimal point, the chosen solution will depend on this random initialization. Secondly, the split between training and validation data is done at random, and thirdly the partitioning of the training data into mini-batches is done at random. All these random elements make the early stopped SGD solution non-unique.

-

Early stopping implies that the chosen network parameter estimate \(\widehat {\boldsymbol {\vartheta }}\) does not correspond to a solution of the score equations and, henceforth, asymptotic results about MLEs do not apply, see Theorem 3.28.

7.3 Feed-Forward Neural Network Examples

7.3.1 Feature Pre-processing

Similarly to GLMs, we also need to pre-process the feature components in FN network regression modeling. The former Sect. 5.2.2 for GLMs has been called ‘feature engineering’ because we need to bring the feature components into an appropriate functional form w.r.t. the given regression task. The present section is called ‘feature pre-processing’ because we do not need to engineer the features for FN networks. We only need to bring them into a suitable (tabular) form to enter the network, and the network will then do an automated feature engineering through representation learning.

7.3.1.1 Categorical Feature Components: One-Hot Encoding

The categorical features have been treated by dummy coding within GLMs. Dummy coding provides full rank design matrices. For FN network regression modeling the full rank property is not important because, anyway, we neither have a single (local) minimum in the objective function, nor do we want to calculate the MLE of the network parameter. Typically, in FN network regression modeling one uses one-hot encoding for the categorical variables that encodes every level by a unit vector. Assume the raw feature component \(\widetilde {x}_j\) is a categorical variable taking K different levels {a 1, …, a K}. One-hot encoding is obtained by the embedding map

An explicit example is given in Table 7.2 which should be compared to Table 5.1.

as row vectors

as row vectors7.3.1.2 Continuous Feature Components

The continuous feature components do not need any pre-processing but they can directly enter the FN network which will take care of representation learning. However, an efficient use of gradient descent methods typically requires that all feature components live on a similar scale and that they are roughly uniformly spread across their domains. This makes gradient descent steps more efficient in exploiting the relevant directions.

One possibility is to use the MinMaxScaler. Let \(x_j^-\) and \(x_j^+\) be the minimal and maximal possible feature values of the continuous feature component x j, i.e., \(x_j \in [x_j^-,x_j^+]\). We transform this continuous feature component to unit scale for all data 1 ≤ i ≤ n by

The resulting feature values \((x_{i,j}^{\text{MM}})_{1\le i \le n}\) should roughly be uniformly spread across the interval [−1, 1]. If this is not the case, for instance, because we have outliers in the feature values, we may first transform them non-linearly to get more uniformly spread values. For example, we consider the Density of the car frequency example on the log scale.

An alternative to the MinMaxScaler is to consider normalization with the empirical mean \(\bar {x}_j\) and the empirical standard deviation \(\hat {\sigma }_j\) over all data x i,j. That is,

It depends on the application whether the MinMaxScaler or normalization with the empirical mean and standard deviation works better. Important in applications is that we use exactly the same values for the normalization of training data \(\mathcal {U}\), validation data \(\mathcal {V}\) and test data \(\mathcal {T}\), to make the same network applicable to all these data sets. For notational convenience we will drop the upper index in \(x_{i,j}^{\text{MM}}\) or \(x_{i,j}^{\text{sd}}\), respectively, and we throughout assume that all feature components are appropriately pre-processed.

7.3.2 Lab: Poisson FN Network for Car Insurance Frequencies

We present a first FN network example applied to the French MTPL claim frequency data studied in Sect. 5.2.4. We assume that the claim counts N i are independent and Poisson distributed with claim count density (5.26), where we replace the GLM regression function \(\boldsymbol {x} \mapsto \exp \langle \boldsymbol {\beta }, \boldsymbol {x} \rangle \) by a FN network regression function

We use a FN network of depth d = 3 having number of neurons (q 1, q 2, q 3) = (20, 15, 10) and using the hyperbolic tangent activation function. We pre-process the categorical variables VehBrand and Region by one-hot encoding providing input dimensions 11 and 22, respectively. The binary variable VehGas is encoded as 0–1. Because of scarcity of data we right-censor the continuous variables VehAge at 20, DrivAge at 90 and BonusMalus at 150, and we transform Density to the log scale. We then apply to each of these (modified) continuous variables Area, VehPower, VehAge, DrivAge, BonusMalus and log(Density) a MinMaxScaler. This provides us with an input dimension q 0 = 11 + 22 + 1 + 6 = 40. The resulting FN network is illustrated in Fig. 7.2, with the one-hot encoded variables VehBrand in orange color and Region in magenta color. It has a network parameter \(\boldsymbol {\vartheta } \in {\mathbb R}^r\) of dimension r = 1′306.

Listing 7.1 FN network of depth d = 3 using the R library keras [77]

Listing 7.2 FN network illustrated in Fig. 7.2

Listing 7.3 Fitting a FN network using the R library keras [77]

This network is implemented in R using the library keras [77]. The code is provided in Listing 7.1 and the resulting network architecture is summarized in Listing 7.2. This network is now fitted to the data. We use a batch size of 10’000, we use the nadam version of SGD, we use 10% of the learning data \(\mathcal {L}\) as validation data \(\mathcal {V}\) and the remaining 90% as training data \(\mathcal {U}\). We then run the corresponding SGD algorithm and we retrieve the network with the lowest validation loss using a callback. This is illustrated in Listing 7.3. The fitting performance on the training and validation data is illustrated in Fig. 7.8, and we retrieve the network calibration after the 52th epoch because it has the lowest validation loss. The results are presented in Table 7.3.

From the results of Table 7.3 we conclude that the FN network outperforms model Poisson GLM3 (out-of-sample) since it has a (clearly) lower out-of-sample deviance loss on the test data \(\mathcal {T}\). This may indicate that there is an interaction between the feature components that has not been captured in the GLM. The run time of 51s corresponds to the run time until the minimal validation loss is reached, of course, in practice we need to continue beyond this minimal validation loss to ensure that we have really found the minimum. Finally, and importantly, we observe that this early stopped FN network calibration does not meet the balance property because the resulting average frequency of this fitted model of 6.96% is below the empirical frequency of 7.36%. This is a major deficiency of this FN network fitting approach, and this is going to be discussed further in Sect. 7.4.2, below.

We can perform a detailed analysis about different batch sizes, variants of SGD methods, run times, etc. We briefly summarize our findings; this summary is also based on the findings in Ferrario et al. [127]. We have fitted this model on batches of sizes 2’000, 5’000, 10’000 and 20’000, and it seems that a batch size around 5’000 has the best performance, both concerning out-of-sample performance and run time to reach the minimal validation loss. Comparing the different optimizers rmsprop, adam and nadam, a clear preference can be given to nadam: the resulting prediction accuracy is similar in all three optimizers (they all reach the green area in Fig. 7.5), but nadam reaches this optimal point in half of the time compared to rmsprop and adam.

We conclude by highlighting that different initial points 𝜗 (0) of the SGD algorithm will give different network calibrations, and differences can be considerable. This is discussed in Sect. 7.4.4, below. Moreover, we could explore different network architectures, more simple ones, more complex ones, different activation functions, etc. The results of these different architectures will not be essentially different from our results, as long as the networks are above a minimal complexity bound. This closes our first example on FN networks and this example is the benchmark for refined versions that are presented in the subsequent sections.

7.4 Special Features in Networks

7.4.1 Special Purpose Layers

So far, our networks consist of stacked FN layers, and information is passed in a directed acyclic feed-forward path from one to the next FN layer. In this section we discuss special purpose layers that perform a specific task in a FN network. These include embedding layers, drop-out layers and normalization layers. These modules should be seen as add-ons to the FN layers. Besides these add-ons, there are also recurrent layers and convolutional layers. These two types of layers are going to be discussed in own chapters, below, because their importance goes beyond just being add-ons to the FN layers.

7.4.1.1 Embedding Layers for Categorical Feature Components

The categorical feature components have been treated either by dummy coding or by one-hot encoding, and this has resulted in numerous network parameters in the first FN layer, see Fig. 7.2. Natural language processing (NLP) treats categorical feature components differently, namely, it embeds categorical feature components (or words in NLP) into a Euclidean space \({\mathbb R}^b\) of a small dimension b. This small dimension b is a hyper-parameter that has to be selected by the modeler, and which, typically, is selected much smaller than the total number of levels of the categorical feature. This embedding technique is quite common in NLP, see Bengio et al. [27,28,29], but it goes beyond NLP applications, see Guo–Berkhahn [176], and it has been introduced to the actuarial community by Richman [312, 313] and the tutorial of Schelldorfer–Wüthrich [329].

We assume the same set-up as in dummy coding (5.21) and in one-hot encoding (7.28), namely, that we have a raw categorical feature component \(\widetilde {x}_j\) taking K different levels {a 1, …, a K}. In one-hot encoding these K levels are mapped to the K unit vectors of the Euclidean space \({\mathbb R}^K\), and consequently all levels have the same mutual Euclidean distance. This does not seem to be the best way of comparing the different levels because in our regression analysis we would like to identify the levels that are more similar w.r.t. the regression task and, thus, these should cluster. For an embedding layer one chooses a Euclidean space \({\mathbb R}^b\) of a dimension b < K, typically being (much) smaller than K. One then considers the embedding map

That is, every level a k receives a vector representation \(\boldsymbol {e}^{(k)} \in {\mathbb R}^b\) which is lower dimensional than its one-hot encoding counterpart in \({\mathbb R}^K\). Proximity of the representations e (k) and \(\boldsymbol {e}^{(k')}\) in \({\mathbb R}^b\), i.e., of two levels a k and \(a_{k'}\), should be related to similarity w.r.t. the regression task at hand. Such an embedding involves K vectors \(\boldsymbol {e}^{(k)}\in {\mathbb R}^b\) of dimension b, thus, it involves Kb parameters, called embedding weights.

In network modeling, these embedding weights e (1), …, e (K) can also be learned during gradient descent training. Basically, it just means that for the categorical variables we add an additional embedding layer before the first FN layer z (1), i.e., we increase the depth of the network by 1 for the categorical feature components (by a layer that is not fully connected). This is illustrated in Fig. 7.9 (rhs) for the French MTPL insurance example of Sect. 7.3.2. The graph on the left-hand side shows the network if we apply one-hot encoding to the categorical variables VehBrand and Region; this results in a network parameter of dimension r = 1′306. The graph on the right-hand side first embeds VehBrand and Region into two 2-dimensional spaces, illustrated by the orange and magenta circles. These embeddings are concatenated with the remaining feature components, which then provides a new dimension q 0 = 7 + 2 + 2 = 11 in that example. This results in a network parameter of dimension r = 726 + 22 + 44 = 792, where 22 + 44 = 66 stands for the 2-dimensional embedding weights of the 11 VehBrands and the 22 French Regions, see Listing 7.5. Example 7.10 (Embedding Layers for Categorical Features). We revisit the example of Sect. 7.3.2, but we replace one-hot encoding of the categorical variables by embedding layers of dimension b = 2. The corresponding R code is given in Listing 7.4 and the resulting model is illustrated in Listing 7.5 and Fig. 7.9 (rhs).

Listing 7.4 FN network of depth d = 3 using embedding layers

Listing 7.5 Summary of FN network of Fig. 7.9 (rhs) using embedding layers of dimension b = 2

Apart from replacing one-hot encoding by embedding layers, we use exactly the same FN network architecture as in Sect. 7.3.2 and we apply the same fitting strategy in terms of batch sizes, optimizer and early stopping strategy. The results are presented in Table 7.4.

A first remark is that the model calibration takes longer using embedding layers compared to one-hot encoding. The main reason for this is that having an embedding layer increases the depth of the network by one layer, as can be seen from Fig. 7.9. Therefore, the back-propagation takes more time, and the convergence is slower requiring more gradient descent steps. We have less over-fitting as can be seen from Fig. 7.10. The final fitted model has a slightly better out-of-sample performance compared to the one-hot encoding one. However, this slight improvement in the performance should not be overstated because, as explained in Remarks 7.9, there are a couple of elements of randomness involved in SGD fitting, and choosing a different seed may change the results. We remark that the balance property is not fulfilled because the average frequency of the fitted model does not meet the empirical frequency, see the last column of Table 7.4; we come back to this in Sect. 7.4.2, below.

A major advantage of using embedding layers for the categorical variables is that we receive a continuous representation of nominal variables, where proximity can be interpreted as similarity for the regression task at hand. This is nicely illustrated in Fig. 7.11 which shows the resulting 2-dimensional embeddings \(\boldsymbol {e}^{\mathtt {VehBrand}}\in {\mathbb R}^2 \) and \(\boldsymbol {e}^{\mathtt {Region}} \in {\mathbb R}^2\) of the categorical variables VehBrand and Region. The Region embedding \(\boldsymbol {e}^{\mathtt {Region}} \in {\mathbb R}^2\) shows surprising similarities with the French map, for instance, Paris region R11 is adjacent to R23, R22, R21, R26, R24 (which is also the case in the French map), the Isle of Corsica R94 and the South of France R93, R91 and R73 are well separated from other regions, etc. Similar observations can be made for the embedding of VehBrand, Japanese cars B12 are far apart from the other cars, cars B1, B2, B3 and B6 (Renault, Nissan, Citroen, Volkswagen, Audi, Skoda, Seat and Fiat) cluster, etc. \(\blacksquare \)

7.4.1.2 Drop-Out Layers and Regularization

Above, over-fitting to the learning data has been taken care of by early stopping. In view of Sect. 6.2 one could also use regularization. This can easily be obtained by replacing (7.14), for instance, by the following L p-regularized counterpart

for some p ≥ 1, regularization parameter λ > 0 and where the reduced network parameter \(\boldsymbol {\vartheta }_-\in {\mathbb R}^{r-1}\) excludes the intercept parameter β 0 of the output layer, we also refer to (6.4) in the context of GLMs. For grouped penalty terms we refer to (6.21). The difficulty with this approach is the tuning of the regularization parameter(s) λ: run time is one issue, suitable grouping is another issue, and non-uniqueness of the optimal network a further one that can substantially distort the selection of reasonable regularization parameters.

A more popular method to prevent from over-fitting individual neurons in a FN layer to a certain task are so-called drop-out layers. A drop-out layer is an additional layer between FN layers that removes at random during gradient descent training neurons from the network, i.e., in each gradient descent step, any of the earmarked neurons is offset independently from the others with a fixed probability δ ∈ (0, 1). This random removal will imply that the composite of the remaining neurons needs to be sufficiently well balanced to take over the role of the dropped-out neurons. Therefore, a single neuron cannot be over-trained to a certain task because it needs to be able play several different roles. Drop-out has been introduced by Srivastava et al. [345] and Wager et al. [373].

Listing 7.6 FN network of depth d = 3 using a drop-out layer, ridge regularization and a normalization layer

Listing 7.6 gives an example, where we add a drop-out layer with a drop-out probability of δ = 0.01 after the first FN layer, and in the second FN layer we apply ridge regularization to the weights \((w^{(2)}_{1,1}, \ldots , w^{(2)}_{q_1,q_2})\), i.e., excluding the intercepts \(w^{(2)}_{0,j}\), 1 ≤ j ≤ q 2. Both the drop-out layer and regularization are only used during the gradient descent fitting, and these network features are disabled during the prediction.

Drop-out is closely related to ridge regularization as the following linear Gaussian regression example shows; this consideration is taken from Section 18.6 of Efron–Hastie [117]. Assume we have a linear regression problem with square loss function

We assume in this Gaussian case that the observations and the features are standardized, see Sect. 6.2.4. This means that \(\sum _{i=1}^n Y_i=0\), \(\sum _{i=1}^n x_{i,j}=0\) and \(n^{-1}\sum _{i=1}^n x^2_{i,j}=1\), for all 1 ≤ j ≤ q. This standardization implies that we can omit the intercept parameter β 0 because its MLE is equal to 0.

We introduce i.i.d. drop-out random variables I i,j for 1 ≤ i ≤ n and 1 ≤ j ≤ q with (1 − δ)I i,j being Bernoulli distributed with probability 1 − δ ∈ (0, 1). This scaling implies \({\mathbb E}[I_{i,j}]=1\). Using these Bernoulli random variables we modify the above square loss function to

i.e., every individual component x i,j can drop out independently of the others. Gaussian MLE requires to set the gradient of \({\mathfrak D}_I(\boldsymbol {Y}, \boldsymbol {\beta })\) w.r.t. \(\boldsymbol {\beta } \in {\mathbb R}^{q}\) equal to zero. The average score equation is given by (we average over the drop-out random variables I i,j)

where we have used the normalization of the columns of the design matrix \(\mathfrak {X} \in {\mathbb R}^{n\times {q}}\) (we drop the intercept column). This is ridge regression in the linear Gaussian case with a regularization parameter λ = δ∕(2(1 − δ)) > 0 for δ ∈ (0, 1), see (6.9).

7.4.1.3 Normalization Layers

In (7.29) and (7.30) we have discussed that the continuous feature components should be pre-processed so that all components live on the same scale, otherwise the gradient descent fitting may not be efficient. A similar phenomenon may occur with the learned representations z (m:1)(x i) in the FN layers 1 ≤ m ≤ d. In particular, this is the case if we choose an unbounded activation function ϕ. For this reason, it can be advantageous to rescale the components \(z_j^{(m:1)}(\boldsymbol {x}_i)\), 1 ≤ j ≤ q m, in a given FN layer back to the same scale. To achieve this, a normalization step (7.30) is applied to every neuron \(z_j^{(m:1)}(\boldsymbol {x}_i)\) over the given cases i in the considered (mini-)batch. This involves two more parameters (for the empirical mean and the empirical standard deviation) in each neuron of the corresponding FN layer. Note, however, that all these operations are of a linear nature. Therefore, they do not affect the predictive model (i.e., these operations cancel in the scalar products in (7.6)), but they may improve the performance of the gradient descent algorithm.

The code in Listing 7.6 uses a normalization layer on line 6. In our applications, it has not been necessary to use these normalization layers, as it has not led to better run times in SGD algorithms; note that our networks are not very deep and they use the symmetric and bounded hyperbolic tangent activation function.

7.4.2 The Balance Property in Neural Networks

We have seen in Table 7.4 that our FN network outperforms the GLM for claim frequency prediction in terms of a lower out-of-sample loss. We interpret this as follows. Feature engineering has not been done in the most optimal way for the GLM because the FN network finds modeling structure that is not present in the selected GLM. As a consequence, the FN network provides a better generalization to unseen data, i.e., we can better predict new data on a granular level with the FN network. However, having a more precise model on an individual policy level does not necessarily imply that the model also performs better on a global portfolio level. In our example we see that we may have smaller errors on an individual policy level, but these smaller errors do not aggregate to a more precise model in the average portfolio frequency. In our case, we have a misspecification of the average portfolio frequency, see the last column of Table 7.4. This is a major deficiency in insurance pricing because it may result in a misspecification of the overall price level, and this requires a correction. We call this correction bias regularization.

7.4.2.1 Simple Bias Regularization

The straightforward correction is to adjust the intercept parameter \(\beta _0 \in {\mathbb R}\) accordingly. That is, compare the empirical mean

to the model average of the fitted FN network

where  is the learned network parameter from the (early stopped) SGD algorithm. The output of this fitted model reads as

is the learned network parameter from the (early stopped) SGD algorithm. The output of this fitted model reads as

where the hat in \( \widehat {\boldsymbol {z}}^{(d:1)}\) indicates that we use the estimated weights \(\widehat {\boldsymbol {w}}^{(m)}_l\), 1 ≤ l ≤ q m, 1 ≤ m ≤ d, in the FN layers. The balance property can be rectified by replacing \(\widehat {\beta }_0\) by the solution \(\widehat {\widehat {\beta }}_0\) of the following identity

Since g −1 is continuous and strictly monotone, there is a unique solution to this requirement supposed that the range of g −1 covers the support of the Y i’s. If we work with the log-link g(⋅) = log(⋅), this can easily be solved and we obtain

7.4.2.2 Sophisticated Bias Regularization Under the Canonical Link Choice

If we work with the canonical link g = h = (κ′)−1, we can do better because the MLE of such a GLM automatically provides the balance property, see Corollary 5.7. Choose the SGD learned network parameter  . Denote by \( \widehat {\boldsymbol {z}}^{(d:1)}\) the fitted network architecture that is based on the estimated weights \(\widehat {\boldsymbol {w}}^{(1)}_1, \ldots , \widehat {\boldsymbol {w}}^{(d)}_{q_d}\). This allows us to study the learned representations of the raw features x

1, …, x

n in the last FN layer. We denote these learned representations by

. Denote by \( \widehat {\boldsymbol {z}}^{(d:1)}\) the fitted network architecture that is based on the estimated weights \(\widehat {\boldsymbol {w}}^{(1)}_1, \ldots , \widehat {\boldsymbol {w}}^{(d)}_{q_d}\). This allows us to study the learned representations of the raw features x

1, …, x

n in the last FN layer. We denote these learned representations by

These learned representations can be used as new features to explain the response Y . We define the feature engineered design matrix by

Based on this new design matrix \(\widehat {\mathfrak {X}}\) we can run a classical GLM receiving a unique MLE \(\widehat {\boldsymbol {\beta }}^{\text{MLE}} \in {\mathbb R}^{q_d+1}\) supposed that this design matrix has a full rank q d + 1 ≤ n, see Proposition 5.1. Since we work with the canonical link, this re-calibrated FN network will automatically satisfy the balance property, and the resulting regression function reads as

This is the proposal of Wüthrich [390]. We give some remarks.

Remarks 7.11.

-