Abstract

This chapter presents a selection of different topics. We discuss forecasting under model uncertainty, deep quantile regression, deep composite regression and the LocalGLMnet which is an interpretable FN network architecture. Moreover, we provide a bootstrap example to assess prediction uncertainty, we discuss mixture density networks, and we give an outlook to studying variational inference.


11.1 Deep Learning Under Model Uncertainty

We revisit claim size modeling in this section. Claim size modeling is challenging because often there is no (simple) off-the-shelf distribution that allows one to appropriately describe all claim size observations. E.g., the main body of the claim size data may look like gamma distributed, and, at the same time, large claims seem to be more heavy-tailed (contradicting a gamma model assumption). Moreover, different product and claim types may lead to multi-modality in the claim size densities. In Sects. 5.3.7 and 5.3.8 we have explored a gamma and an inverse Gaussian GLM to model a motorcycle claims data set. In that example, the results have been satisfactory because this motorcycle data is neither multi-modal nor does it have heavy tails. These two GLM approaches have been based on the EDF (2.14), modeling the mean x↦μ(x) with a regression function and assuming a constant dispersion parameter φ > 0. There are two natural ways to extend this approach. One considers a double GLM with a dispersion submodel x↦φ(x), see Sect. 5.5, the other explores multi-parameter extensions like the generalized inverse Gaussian model, which is a k = 3 vector-valued EF, see (2.10), or the GB2 family that involves 4 parameters, see (5.79). These extensions provide more complexity, also in MLE. In this section, we are not going to consider multi-parameter extensions, but in a first step we aim at robustifying (mean) parameter estimation within the EDF. In a second step we are going to analyze the resulting dispersion φ(x). For these steps, we perform representation learning and parameter estimation under model uncertainty by simultaneously considering multiple models from Tweedie’s family. These considerations are closely related to Tweedie’s forecast dominance given in Definition 4.22.

We emphasize that we remain within a single distribution function choice in this section, i.e., we neither consider mixture distributions nor composite models in this section. Mixture density networks are going to be considered in Sect. 11.6, below, and a composite model approach is studied in Sect. 11.3, below. These mixture density networks and composite models allow us to model the body and the tail of the data with different distribution functions by either mixing or concatenating suitable distributions.

11.1.1 Recap: Tweedie’s Family

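Tweedie’s family with power variance function \(V(\mu)=\mu^p\), p ≥ 2, provides us with a rich model class for claim size modeling if the claim sizes are strictly positive, a.s., and extending to p ∈ (1, 2) allows us to model claims with a positive point mass in 0. This class of distribution functions contains the gamma case (p = 2) and the inverse Gaussian case (p = 3). In general, p > 2 provides us with positive stable generated distributions and p ∈ (1, 2) gives Tweedie’s CP models, see Table 2.1. Tweedie’s family has cumulant function for p > 1

$$\displaystyle \begin{aligned} \kappa_p(\theta) = \begin{cases} -\log(-\theta) & \text{for } p=2,\\ \frac{1}{2-p}\left((1-p)\theta\right)^{\frac{2-p}{1-p}} & \text{for } p>1,\ p\neq 2, \end{cases} \end{aligned} $$

on the effective domain θ ∈ Θ = (−∞, 0) for p ∈ (1, 2], and θ ∈ Θ = (−∞, 0] for p > 2. The mean and the power variance function are for p > 1 given by

$$\displaystyle \begin{aligned} \mu = \kappa_p^{\prime}(\theta) = \left((1-p)\theta\right)^{\frac{1}{1-p}} \qquad \text{and} \qquad V(\mu)=\mu^p. \end{aligned} $$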

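The unit deviance takes the following form for p > 1 and p ≠ 2, see (4.18),

$$\displaystyle \begin{aligned} {\mathfrak d}_p(y,\mu) = 2\left(y\,\frac{y^{1-p}-\mu^{1-p}}{1-p} - \frac{y^{2-p}-\mu^{2-p}}{2-p}\right), \end{aligned} $$ (11.2)

and in the gamma case p = 2 we have, see Table 4.1,

$$\displaystyle \begin{aligned} {\mathfrak d}_2(y,\mu) = 2\left(\frac{y}{\mu}-1-\log\left(\frac{y}{\mu}\right)\right). \end{aligned} $$ (11.3)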

Figure 11.1 (lhs) shows the unit deviances \(y\mapsto {\mathfrak d}_p(y,\mu )\) for fixed mean parameter μ = 2 and power variance parameters p ∈{0, 2, 2.5, 3, 3.5}; the case p = 0 corresponds to the symmetric Gaussian case \({\mathfrak d}_0(y, \mu )=(y-\mu )^2\). We observe that with an increasing power variance parameter p large claims Y = y receive a smaller loss punishment (if we interpret the unit deviance as a loss function). This is the situation where we have a fixed mean μ and where we assess claim sizes Y = y relative to this mean. For estimation purposes we have fixed observations Y = y and we study the sensitivities in μ. Note that, in general, the unit deviances \({\mathfrak d}_p(y,\mu )\) are not symmetric in y and μ. This second case is shown in Fig. 11.1 (rhs), and the general behavior in p is similar. As a result, by selecting different hyper-parameters p > 1, we can control the influence of large (and small) claims on parameter estimation, because the unit deviances \({\mathfrak d}_p(y, \cdot )\) have different slopes for different p’s. Basically, the choice of the loss function (unit deviance) determines the choice of the underlying distributional model, which then assesses the claim observations Y = y according to their sizes and how these sizes match the model assumptions made.
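The behavior described for Fig. 11.1 (lhs) can be checked numerically. The following is a minimal sketch in Python, assuming the standard closed form of Tweedie's unit deviance for strictly positive y and μ; the function name `tweedie_unit_deviance` and the evaluation points are illustrative choices, not part of the original text.

```python
import math

def tweedie_unit_deviance(y, mu, p):
    """Unit deviance d_p(y, mu) for y, mu > 0 and p != 1 (sketch)."""
    if y <= 0 or mu <= 0:
        raise ValueError("this sketch assumes strictly positive y and mu")
    if p == 1:
        raise ValueError("the Poisson case p = 1 is not covered here")
    if p == 2:  # gamma case
        return 2.0 * (y / mu - 1.0 - math.log(y / mu))
    # general power variance case; p = 0 reduces to the square loss (y - mu)^2
    return 2.0 * (
        y * (y ** (1 - p) - mu ** (1 - p)) / (1 - p)
        - (y ** (2 - p) - mu ** (2 - p)) / (2 - p)
    )

mu = 2.0  # fixed mean, as in Fig. 11.1 (lhs)
for p in (0, 2, 2.5, 3, 3.5):
    row = [round(tweedie_unit_deviance(y, mu, p), 4) for y in (0.5, 2.0, 10.0)]
    print(f"p = {p}: d_p(y, 2) at y = 0.5, 2, 10 -> {row}")
```

For the large claim y = 10 the printed deviance decreases as p grows, which is the smaller loss punishment of large claims mentioned above.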

In Lemma 2.22 we have seen that the unit deviances \({\mathfrak d}_p\left (y, \mu \right )\ge 0\) are zero if and only if y = μ. The second derivatives given in Lemma 2.22 allow us to consider a second order Taylor expansion around a minimum \(\mu_0 = y_0\)

$$\displaystyle \begin{aligned} {\mathfrak d}_p(y_0+\epsilon y, \mu_0+\epsilon \mu) = \frac{\epsilon^2}{V(\mu_0)}\,(y-\mu)^2 + o(\epsilon^2) \qquad \text{as } \epsilon \to 0. \end{aligned} $$

Thus, locally around the minimum the unit deviances behave symmetrically and like Gaussian squares, but this is only a local approximation around a minimum \(\mu_0 = y_0\), as can be seen from Fig. 11.1. I.e., in general, model fitting turns out to be rather different from the Gaussian square loss if we have small and large claim sizes under choices p > 1.

Remarks 11.1

- Since unit deviances are Bregman divergences, we know that every unit deviance gives us a strictly consistent scoring function for the mean functional, see Theorem 4.19. Therefore, the specific choice of the power variance parameter p seems less relevant. However, strict consistency is an asymptotic statement, and choosing a unit deviance that matches the property of the data has better finite sample properties, i.e., a smaller variance in asymptotic normality; we come back to this in Sect. 11.1.4, below.

- A function (y, μ)↦ψ(y, μ) is called b-homogeneous if there exists \(b\in {\mathbb R}\) such that for all (y, μ) and all λ > 0 we have \(\psi(\lambda y, \lambda \mu) = \lambda^b\, \psi(y, \mu)\). Unit deviances \({\mathfrak d}_p\) are b-homogeneous with b = 2 − p. This b-homogeneity has the nice consequence that the decisions taken are independent of the scale, i.e., we have an invariance under changes of currencies. On the other hand, such a scaling influences the estimation of the dispersion parameter, i.e., if we scale the observation and the mean with λ we have unit deviance

$$\displaystyle \begin{aligned} {\mathfrak d}_p (\lambda y,\lambda \mu)=\lambda^{2-p}\,{\mathfrak d}_p(y,\mu). \end{aligned} $$ (11.4)

This influences the dispersion estimation for the cases different from the gamma case p = 2, see, e.g., saddlepoint approximation (5.60)–(5.62). This also relates to the different parametrizations in Sect. 5.3.8 where we study the inverse Gaussian model p = 3, which has a dispersion \(\varphi_i = 1/\alpha_i\) in the reproductive form and \(\varphi _i=1/\alpha ^2_i\) in parametrization (5.51).

- We only consider power variance parameters p > 1 in this section for non-negative claim size modeling. Technically, this analysis could be extended to p ∈{0, 1}. We do not consider the Gaussian case p = 0 to exclude negative claims, and we do not consider the Poisson case p = 1 because this is used for claim counts modeling.
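The b-homogeneity (11.4) from the second remark can be verified numerically. The sketch below assumes the closed-form unit deviance for p > 1; the claim amount, the mean and the scaling factor `lam` (standing in for a hypothetical exchange rate) are made-up values.

```python
import math

def unit_deviance(y, mu, p):
    """Tweedie unit deviance for y, mu > 0 and p > 1 (sketch)."""
    if p == 2:
        return 2.0 * (y / mu - 1.0 - math.log(y / mu))
    return 2.0 * (
        y * (y ** (1 - p) - mu ** (1 - p)) / (1 - p)
        - (y ** (2 - p) - mu ** (2 - p)) / (2 - p)
    )

y, mu, lam = 1500.0, 1000.0, 0.9  # lam: hypothetical change of currency
checks = {}
for p in (2, 2.5, 3):
    lhs = unit_deviance(lam * y, lam * mu, p)         # deviance after rescaling
    rhs = lam ** (2 - p) * unit_deviance(y, mu, p)    # lam^(2-p) times original deviance
    checks[p] = (lhs, rhs)
    print(f"p = {p}: {lhs:.10g} vs {rhs:.10g}")
```

Only for p = 2 is the factor λ^{2−p} equal to one, which is why the scale of the observations matters for dispersion estimation in all other cases.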

We recall that unit deviances of the EDF are equal to twice the corresponding KL divergences, which in turn are special cases of Bregman divergences. From Theorem 4.19 we know that Bregman divergences \(D_\psi\) are the only strictly consistent loss/scoring functions for mean estimation.

Lemma 11.2

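Choose p > 1. The scaled unit deviance \({\mathfrak d}_p(y,\mu )/2\) is a Bregman divergence \(D_{\psi _p}(y,\mu )\) on \({\mathbb R}_+\times {\mathbb R}_+\) with strictly decreasing and strictly convex function on \({\mathbb R}_+\)

$$\displaystyle \begin{aligned} \psi_p(y) = y\,h_p(y) - \kappa_p\left(h_p(y)\right) = \begin{cases} -1-\log(y) & \text{for } p=2,\\ \frac{1}{(2-p)(1-p)}\,y^{2-p} & \text{for } p>1,\ p\neq 2, \end{cases} \end{aligned} $$

for canonical link \(h_p(y)=(\kappa ^{\prime }_p)^{-1}(y)=y^{1-p}/(1-p)\).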

Proof of Lemma 11.2

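The Bregman divergence property follows from (2.29). For p > 1 and y > 0 we have the strictly decreasing property

$$\displaystyle \begin{aligned} \psi_p^{\prime}(y) = h_p(y) = \frac{y^{1-p}}{1-p} < 0. \end{aligned} $$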

The second derivative is \(\psi ^{\prime \prime }_p(y) =h^{\prime }_p(y)=y^{-p}=1/V(y)>0\) which provides the strict convexity. □

In the Gaussian case we have \(\psi_0(y)=y^2/2\), and \(\psi _0^{\prime }(y)>0\) on \({\mathbb R}_+\) implies that this is a strictly increasing convex function for positive claims y > 0. This is different to Lemma 11.2.
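Lemma 11.2 can be checked numerically. The sketch below assumes ψ_p(y) = y^{2−p}/((2−p)(1−p)) for p ≠ 2 and ψ_2(y) = −1 − log(y), which is what y h_p(y) − κ_p(h_p(y)) evaluates to for the canonical link h_p stated in the lemma; the function names are illustrative.

```python
import math

def psi(y, p):
    """Convex function psi_p generating the Bregman divergence (p > 1)."""
    if p == 2:
        return -1.0 - math.log(y)
    return y ** (2 - p) / ((2 - p) * (1 - p))

def psi_prime(y, p):
    """Derivative of psi_p, equal to the canonical link h_p(y)."""
    return y ** (1 - p) / (1 - p)

def bregman(y, mu, p):
    """D_psi(y, mu) = psi(y) - psi(mu) - psi'(mu) * (y - mu)."""
    return psi(y, p) - psi(mu, p) - psi_prime(mu, p) * (y - mu)

def unit_deviance(y, mu, p):
    """Tweedie unit deviance for y, mu > 0 and p > 1."""
    if p == 2:
        return 2.0 * (y / mu - 1.0 - math.log(y / mu))
    return 2.0 * (
        y * (y ** (1 - p) - mu ** (1 - p)) / (1 - p)
        - (y ** (2 - p) - mu ** (2 - p)) / (2 - p)
    )

for p in (2, 2.5, 3):
    for y, mu in ((0.5, 2.0), (3.0, 2.0), (10.0, 2.0)):
        print(p, y, mu, bregman(y, mu, p), unit_deviance(y, mu, p) / 2.0)
```

Both printed columns agree, and ψ_p is decreasing because its derivative h_p is negative for p > 1.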

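Assume we have independent observations \((Y_i, \boldsymbol{x}_i)\) following the same Tweedie’s distribution, and with means given by \(\mu_{\boldsymbol{\vartheta}}(\boldsymbol{x}_i)\) for some parameter \(\boldsymbol{\vartheta}\). The M-estimator of \(\boldsymbol{\vartheta}\) using this Bregman divergence is given by

$$\displaystyle \begin{aligned} \widehat{\boldsymbol{\vartheta}} = \underset{\boldsymbol{\vartheta}}{\arg\min}\ \frac{1}{n}\sum_{i=1}^n {\mathfrak d}_p\left(Y_i, \mu_{\boldsymbol{\vartheta}}(\boldsymbol{x}_i)\right). \end{aligned} $$

If we turn this M-estimator into a Z-estimator (provided we have differentiability), the parameter estimate \(\widehat {\boldsymbol {\vartheta }}\) is found as a solution of the score equations

$$\displaystyle \begin{aligned} \frac{1}{n}\sum_{i=1}^n \nabla_{\boldsymbol{\vartheta}}\, {\mathfrak d}_p\left(Y_i, \mu_{\boldsymbol{\vartheta}}(\boldsymbol{x}_i)\right) = -\frac{2}{n}\sum_{i=1}^n \frac{Y_i-\mu_{\boldsymbol{\vartheta}}(\boldsymbol{x}_i)}{V(\mu_{\boldsymbol{\vartheta}}(\boldsymbol{x}_i))}\,\nabla_{\boldsymbol{\vartheta}}\,\mu_{\boldsymbol{\vartheta}}(\boldsymbol{x}_i) = \boldsymbol{0}. \end{aligned} $$ (11.5)

In the GLM case this exactly corresponds to (5.9). To determine the Z-estimator from (11.5), we scale the residuals \(Y_i - \mu_i\) inversely proportional to the variances \(V(\mu _i)=\mu _i^p\) of the chosen Tweedie’s distribution. It is a well-known result that if we scale individual unbiased estimators inversely proportional to their variances, we receive the unbiased estimator with minimal variance; we come back to this in (11.16), below. This gives us the intuition behind a specific choice of the power variance parameter for mean estimation, as the sizes of the variances \(\mu _i^p\) scale (weight) the observed residuals \(Y_i - \mu_i\), and balance potential outliers in the observations correspondingly.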
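The variance-scaled residual structure of the score can be illustrated on a toy regression. The sketch below assumes a hypothetical two-parameter log-link mean μ_ϑ(x) = exp(ϑ_0 + ϑ_1 x) and made-up data, and compares the analytic gradient −(2/n) Σ (Y_i − μ_i)/V(μ_i) ∇μ_i with a finite-difference gradient of the average deviance.

```python
import math

def unit_deviance(y, mu, p):
    """Tweedie unit deviance for y, mu > 0 and p > 1."""
    if p == 2:
        return 2.0 * (y / mu - 1.0 - math.log(y / mu))
    return 2.0 * (
        y * (y ** (1 - p) - mu ** (1 - p)) / (1 - p)
        - (y ** (2 - p) - mu ** (2 - p)) / (2 - p)
    )

def mean_fn(theta, x):
    """Hypothetical regression function with log-link."""
    return math.exp(theta[0] + theta[1] * x)

def avg_deviance(theta, data, p):
    return sum(unit_deviance(y, mean_fn(theta, x), p) for y, x in data) / len(data)

def score(theta, data, p):
    """Analytic gradient of the average deviance w.r.t. theta."""
    g0 = g1 = 0.0
    for y, x in data:
        mu = mean_fn(theta, x)
        w = (y - mu) / mu ** p * mu  # residual / V(mu), times d mu / d eta = mu
        g0 += w
        g1 += w * x
    n = len(data)
    return (-2.0 * g0 / n, -2.0 * g1 / n)

def finite_difference(theta, data, p, h=1e-6):
    grads = []
    for j in range(2):
        up = list(theta); up[j] += h
        dn = list(theta); dn[j] -= h
        grads.append((avg_deviance(up, data, p) - avg_deviance(dn, data, p)) / (2 * h))
    return tuple(grads)

data = [(1.2, 0.0), (2.9, 0.5), (3.1, 1.0), (7.5, 1.5)]  # made-up (y, x) pairs
theta = (0.3, 0.8)
for p in (2, 2.5, 3):
    print(p, score(theta, data, p), finite_difference(theta, data, p))
```

With a larger p the factor 1/μ^p down-weights residuals at large fitted means more strongly, which is the balancing effect described above.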

11.1.2 Lab: Claim Size Modeling Under Model Uncertainty

We present a proposal for deep learning under model uncertainty in this section. We explain this on an explicit example within Tweedie’s distributions. We emphasize that this methodology can be applied in more generality, but it is beneficial here to have an explicit example in mind to illustrate the different phenomena.

11.1.2.1 Generalized Linear Models

We analyze a Swiss accident insurance claims data set. This data is illustrated in Sect. 13.4, and an excerpt of the data is given in Listing 13.7. In total we have 339’500 claims with positive payments. We choose this data set because it ranges from very small claims of 1 CHF to very large claims, the biggest one exceeding 1’300’000 CHF. These claims are supported by feature information such as the labor sector, the injury type or the injured body part, see Listing 13.7 and Fig. 13.25. For our analysis, we partition the data into a learning data set \(\mathcal {L}\) and a test data set \(\mathcal {T}\). We do this partition stratified w.r.t. the claim sizes and in a ratio of 9 : 1. This results in a learning data set \(\mathcal {L}\) of size n = 305’550 and in a test data set \(\mathcal {T}\) of size T = 33’950.

We consider three Tweedie’s distributions with power variance parameters p ∈{2, 2.5, 3}: the first one is the gamma model, the last one the inverse Gaussian model, and the power variance parameter p = 2.5 gives a model in between. In a first step we consider GLMs; this requires feature engineering. We have three categorical features, one binary feature and two continuous ones. For the categorical and binary features we use dummy coding, and the continuous features Age and AccQuart are just included in their raw form. As link function g we choose the log-link which respects the positivity of the dual mean parameter space \(\mathcal {M}\), see Table 2.1, but this is not the canonical link of the selected models. In the gamma GLM this leads to a convex minimization problem, but in Tweedie’s GLM with p = 2.5 and in the inverse Gaussian GLM we have non-convex minimization problems, see Example 5.6. Therefore, we initialize Fisher’s scoring method (5.12) in the latter two GLMs with the solution of the gamma GLM. The gamma and the inverse Gaussian cases can directly be fitted with the R command glm [307]; for the power variance parameter case p = 2.5 we have coded our own MLE routine using Fisher’s scoring method.
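The fitting strategy above (Fisher's scoring under the log-link, initialized with the gamma solution) can be sketched as follows. This is not the authors' routine: it is a pure Python illustration for a hypothetical model with an intercept and one covariate, fitted to synthetic data, and it assumes a constant dispersion so that φ cancels in the score equations.

```python
import math

def score(data, p, beta):
    """Score of the Tweedie log-link GLM, up to the constant factor 1/phi."""
    s0 = s1 = 0.0
    for y, x in data:
        mu = math.exp(beta[0] + beta[1] * x)
        r = (y - mu) * mu ** (1 - p)  # (y - mu) / V(mu) * d mu / d eta
        s0 += r
        s1 += r * x
    return s0, s1

def fisher_scoring(data, p, beta, n_iter=100, tol=1e-12):
    """Fisher scoring updates beta <- beta + I(beta)^{-1} s(beta)."""
    b0, b1 = beta
    for _ in range(n_iter):
        s0, s1 = score(data, p, (b0, b1))
        i00 = i01 = i11 = 0.0
        for _, x in data:
            w = math.exp(b0 + b1 * x) ** (2 - p)  # (d mu / d eta)^2 / V(mu)
            i00 += w
            i01 += w * x
            i11 += w * x * x
        det = i00 * i11 - i01 * i01
        d0 = (i11 * s0 - i01 * s1) / det  # solve the 2x2 system I * delta = s
        d1 = (i00 * s1 - i01 * s0) / det
        b0, b1 = b0 + d0, b1 + d1
        if abs(d0) + abs(d1) < tol:
            break
    return b0, b1

# synthetic data: log-linear mean with a deterministic multiplicative disturbance
noise = [0.8, 1.0, 1.25]
xs = [i / 29 for i in range(30)]
data = [(math.exp(1.0 + 0.5 * x) * noise[i % 3], x) for i, x in enumerate(xs)]

start = (math.log(sum(y for y, _ in data) / len(data)), 0.0)
fits = {2: fisher_scoring(data, 2, start)}        # gamma GLM first
for p in (2.5, 3):
    fits[p] = fisher_scoring(data, p, fits[2])    # warm start from the gamma solution
for p, b in fits.items():
    print(f"p = {p}: beta = ({b[0]:.4f}, {b[1]:.4f})")
```

The warm start matters in practice because the problems for p = 2.5 and p = 3 are non-convex under the log-link; on this small well-behaved example the iteration would likely converge from other starting values as well.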

Table 11.1 shows the in-sample losses on the learning data \(\mathcal {L}\) and the corresponding out-of-sample losses on the test data \(\mathcal {T}\). The fitted GLMs (gamma, power variance parameter p = 2.5 and inverse Gaussian) are always evaluated on all three unit deviances \({\mathfrak d}_{p=2}(y,\mu )\), \({\mathfrak d}_{p=2.5}(y,\mu )\) and \({\mathfrak d}_{p=3}(y,\mu )\), respectively. We give some remarks. First, we observe that the in-sample loss is always minimized for the GLM with the same power variance parameter p as the loss \({\mathfrak d}_{p}\) studied (2.0695, 7.6971 and 3.9398 in bold face). This result simply states that the parameter estimates are obtained by minimizing the in-sample loss (or maximizing the corresponding in-sample log-likelihood). Second, the minimal out-of-sample losses are also highlighted in bold face. From these results we cannot give any preference to a single model w.r.t. Tweedie’s forecast dominance, see Definition 4.20. Third, we calculate the AIC values for all models. The gamma and the inverse Gaussian cases have a closed-form solution for the normalizing term a(y;v∕φ) in the EDF density, and we can directly calculate AIC. The case p = 2.5 is more difficult and we use the saddlepoint approximation of Sect. 5.5.2. Considering AIC we give preference to Tweedie’s GLM with p = 2.5. Note that the AIC values use the MLE for φ which is obtained from a general purpose optimizer, and which uses the saddlepoint approximation in the power variance case p = 2.5. Fourth, under a constant dispersion parameter φ, the mean estimation \(\widehat {\mu }_i\) can be done without explicitly specifying φ because it cancels in the score equations. In fact, we perform this mean estimation in the additive form and not in the reproductive form, see (2.13) and the discussions in Sects. 5.3.7–5.3.8.

Figure 11.2 plots the deviance residuals (for unit dispersion) against the logged fitted means \(\widehat {\mu }(\boldsymbol {x}_i)\) for p ∈{2, 2.5, 3} for 2’000 randomly selected claims; this is the Tukey–Anscombe plot. The green line has been obtained by a spline fit to the deviance residuals as a function of the fitted means \(\widehat {\mu }(\boldsymbol {x}_i)\), and the cyan lines give twice the estimated standard deviation of the deviance residuals as a function of the fitted means (also obtained from spline fits). This estimated standard deviation corresponds to the square-rooted deviance dispersion estimate \(\widehat {\varphi }^{\mathrm {D}}\), see (5.30), however, in the additive form because we work with unscaled claim size observations. A constant dispersion assumption is supported by cyan lines of roughly constant size. In the gamma case the dispersion seems to be increasing in the mean estimate, and in the inverse Gaussian case it is decreasing; thus, the power variance parameters p = 2 and p = 3 do not support a constant dispersion in this example. Only the choice p = 2.5 may support a constant dispersion assumption (because it does not have an obvious trend). This says that the variance should scale as \(V(\mu)=\mu^{2.5}\) as a function of the mean μ, see also (11.5).

Fig. 11.2 Tukey–Anscombe plots showing the deviance residuals against the logged GLM fitted means \(\widehat {\mu }(\boldsymbol {x}_i)\): (lhs) gamma GLM p = 2, (middle) power variance case p = 2.5, (rhs) inverse Gaussian GLM p = 3; the cyan lines show twice the estimated standard deviation of the deviance residuals as a function of the size of the logged estimated means \(\widehat {\mu }\)

11.1.2.2 Deep FN Networks

We compare the above GLMs to FN networks of depth d = 3 with \((q_1, q_2, q_3) = (20, 15, 10)\) neurons. The categorical features are modeled with embedding layers of dimension b = 2. We fit this network architecture with Tweedie’s deviance losses having power variance parameters p ∈{2, 2.5, 3}. Moreover, we use 20% of the learning data \(\mathcal {L}\) as validation data \(\mathcal {V}\) to explore the early stopping rule. To reduce the randomness coming from early stopping with different seeds, we average the deviance losses over 20 runs (this is not the nagging predictor: we only average the deviance losses to have stable conclusions concerning forecast dominance). The results are presented in Table 11.2.

First, we observe that the networks outperform the GLMs, saying that the feature engineering has not been done optimally for GLMs. Second, in-sample we no longer receive the lowest deviance loss in the model with the same p. This comes from the fact that we exercise early stopping, and, for instance, the gamma in-sample loss of the gamma network (p = 2) 1.9738 is bigger than the corresponding gamma loss of 1.9712 from the network with p = 2.5. Third, considering forecast dominance, preference is given either to the gamma network or to the power variance parameter p = 2.5. In general, it seems that fitting with higher power variance parameters leads to less stable results, but this statement needs more analysis. The disadvantage of this fitting approach is that we independently fit the models with the different power variance parameters to the observations, and, thus, the learned representations \(\boldsymbol{z}^{(d:1)}(\boldsymbol{x}_i)\) are rather different for different p’s. This makes it difficult to compare these models. This is exactly the point that we address next.

11.1.2.3 Robustified Representation Learning

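To deal with the drawback of missing comparability of the network approaches with different power variance parameters, we can try to learn a representation that simultaneously fits different models. The implementation of this idea is rather straightforward in network modeling. We choose the above network of depth d = 3, which gives us the new (learned) representation \(\boldsymbol{z}_i = \boldsymbol{z}^{(d:1)}(\boldsymbol{x}_i)\) in the last FN layer. The general idea now is that we design multiple outputs for this learned representation to fit the different distributional models. That is, in the case of three Tweedie’s loss functions with power variance parameters p ∈{2, 2.5, 3} we consider a three-dimensional output mapping

$$\displaystyle \begin{aligned} \boldsymbol{x} \mapsto \left(\mu_2(\boldsymbol{x}), \mu_{2.5}(\boldsymbol{x}), \mu_3(\boldsymbol{x})\right)^\top = \left(g^{-1}\langle \boldsymbol{\beta}_2, \boldsymbol{z}^{(d:1)}(\boldsymbol{x})\rangle,\ g^{-1}\langle \boldsymbol{\beta}_{2.5}, \boldsymbol{z}^{(d:1)}(\boldsymbol{x})\rangle,\ g^{-1}\langle \boldsymbol{\beta}_3, \boldsymbol{z}^{(d:1)}(\boldsymbol{x})\rangle\right)^\top, \end{aligned} $$ (11.6)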

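for different output parameters \(\boldsymbol {\beta }_{2}, \boldsymbol {\beta }_{2.5}, \boldsymbol {\beta }_{3} \in {\mathbb R}^{q_d+1}\). These three expected responses (11.6) share the network parameters \(\boldsymbol {w}=(\boldsymbol {w}_1^{(1)}, \ldots ,\boldsymbol {w}_{q_d}^{(d)})\) in the FN layers, and the network fitting should learn these parameters such that \(\boldsymbol{z}_i = \boldsymbol{z}^{(d:1)}(\boldsymbol{x}_i)\) gives a good representation for all considered loss functions. Choose positive weights \(\eta_p > 0\), and define the combined deviance loss function

$$\displaystyle \begin{aligned} \mathcal{L}_{\boldsymbol{Y}}(\boldsymbol{w}, \boldsymbol{\beta}_2, \boldsymbol{\beta}_{2.5}, \boldsymbol{\beta}_3) = \frac{1}{n}\sum_{i=1}^n \sum_{p\in\{2,\,2.5,\,3\}} \frac{\eta_p v_i}{\varphi_p}\, {\mathfrak d}_p\left(Y_i, \mu_p(\boldsymbol{x}_i)\right), \end{aligned} $$ (11.7)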

for the given observations \((Y_i, \boldsymbol{x}_i, v_i)\), 1 ≤ i ≤ n. Note that the unit deviances \({\mathfrak d}_p\) live on different scales for different p’s. We use the (constant) weights \(\eta_p > 0\) to balance these scales so that all power variance parameters p roughly equally contribute to the total loss, while setting \(\varphi_p \equiv 1\) (which can be done for a constant dispersion). This approach is now fitted to the available learning data \(\mathcal {L}\). The corresponding R code is given in Listing 11.1. Note that the fitting also requires that we triplicate the observations \((Y_i, Y_i, Y_i)\) so that we can simultaneously evaluate the three chosen power variance deviance losses, see lines 18–21 of Listing 11.1. We fit this model to the Swiss accident insurance data, and the results are presented in Table 11.3 on the lines called ‘multi-out’.

Listing 11.1 FN network with multiple output

This simultaneous representation learning across different loss functions leads to more stability in the results between the different loss function choices, i.e., there is less variability between the losses of the different outputs compared to fitting the three different models independently. The predictive performance seems slightly better in this robustified vs. the independent case (see bold face out-of-sample figures). The similarity of the results across the different loss functions (using the jointly learned representation \(\boldsymbol{z}_i\)) allows us to directly compare the corresponding predictors \(\widehat {\mu }_p(\boldsymbol {x}_i)\) for the different p’s.
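The role of the weights η_p in the combined loss (11.7) can be sketched in plain Python, independently of any network library. The text does not state how the weights were chosen; the rule in `balancing_weights` below (inverse average deviance of the homogeneous null model) is only one hypothetical way to bring the three deviances onto comparable scales, and the claim amounts are made up. Unit weights v_i = 1 and φ_p ≡ 1 are assumed.

```python
import math

def unit_deviance(y, mu, p):
    """Tweedie unit deviance for y, mu > 0 and p > 1."""
    if p == 2:
        return 2.0 * (y / mu - 1.0 - math.log(y / mu))
    return 2.0 * (
        y * (y ** (1 - p) - mu ** (1 - p)) / (1 - p)
        - (y ** (2 - p) - mu ** (2 - p)) / (2 - p)
    )

def combined_loss(ys, mus_by_p, eta):
    """(1/n) sum_i sum_p eta_p * d_p(y_i, mu_p(x_i)), with v_i = 1 and phi_p = 1."""
    n = len(ys)
    total = 0.0
    for p, mus in mus_by_p.items():
        total += eta[p] * sum(unit_deviance(y, m, p) for y, m in zip(ys, mus)) / n
    return total

def balancing_weights(ys, powers):
    """Hypothetical choice: eta_p = 1 / (average deviance of the null model)."""
    ybar = sum(ys) / len(ys)
    return {p: len(ys) / sum(unit_deviance(y, ybar, p) for y in ys) for p in powers}

ys = [120.0, 800.0, 2500.0, 40000.0]  # made-up claim amounts
powers = (2, 2.5, 3)
eta = balancing_weights(ys, powers)
ybar = sum(ys) / len(ys)
null_predictions = {p: [ybar] * len(ys) for p in powers}
null_loss = combined_loss(ys, null_predictions, eta)
print(eta, null_loss)
```

Under this choice every power variance parameter contributes exactly 1 to the loss of the null model, so that no single deviance dominates the gradient at the start of the fitting.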

Figure 11.3 compares the three predictors by considering the ratios \(\widehat {\mu }_{p=2}(\boldsymbol {x}_i)/\widehat {\mu }_{p=2.5}(\boldsymbol {x}_i)\) in black color and \(\widehat {\mu }_{p=3}(\boldsymbol {x}_i)/\widehat {\mu }_{p=2.5}(\boldsymbol {x}_i)\) in blue color, i.e., we divide by the (middle) predictor with power variance parameter p = 2.5. The figure on the left-hand side shows these ratios in-sample and ordered on the x-axis w.r.t. the observed claim sizes \(Y_i\), and the darkgray and cyan lines give spline fits to these ratios. The figure on the right-hand side shows these ratios out-of-sample and ordered on the x-axis w.r.t. the average predictors \(\bar {\mu }_i=(\widehat {\mu }_{p=2}(\boldsymbol {x}_i)+\widehat {\mu }_{p=2.5}(\boldsymbol {x}_i)+\widehat {\mu }_{p=3}(\boldsymbol {x}_i))/3\). In view of (11.5) we expect that the models with a smaller power variance parameter p over-fit more to large claims. From Fig. 11.3 (lhs) we can observe that, indeed, this is the case (see darkgray and cyan spline fits which bifurcate for large claims). That is, models with a smaller power variance parameter react more sensitively to large observations \(Y_i\). The ratios in Fig. 11.3 provide differences of up to 7% for large claims.

Fig. 11.3 Ratios \(\widehat {\mu }_{p=2}(\boldsymbol {x}_i)/\widehat {\mu }_{p=2.5}(\boldsymbol {x}_i)\) (black color) and \(\widehat {\mu }_{p=3}(\boldsymbol {x}_i)/\widehat {\mu }_{p=2.5}(\boldsymbol {x}_i)\) (blue color) of the three predictors: (lhs) in-sample figures ordered on the x-axis w.r.t. the logged observed claims \(Y_i\), darkgray and cyan lines give spline fits, (rhs) out-of-sample figures ordered on the x-axis w.r.t. the logged average size of the three predictors

Remark 11.3

The loss function (11.7) can also be interpreted as regularization. For instance, if we choose \(\eta_2 = 1\), and if we assume that this is our preferred model, then we can regularize this model with further models, and their weights \(\eta_p > 0\) determine the degree of regularization. Thus, in contrast to ridge and LASSO regularization of Sect. 6.2, regularization does not directly act on the model parameters, here, but rather on what we learn in terms of the representation \(\boldsymbol{z}_i = \boldsymbol{z}^{(d:1)}(\boldsymbol{x}_i)\).

11.1.2.4 Using Forecast Dominance to Deal with Model Uncertainty

In GLMs, the power variance parameter p typically acts as a hyper-parameter, i.e., one fits different GLMs for different choices of p. Model selection is then done, e.g., by analyzing the Tukey–Anscombe plot, AIC, cross-validation or by studying out-of-sample forecast dominance. In networks we should not use AIC as we neither have a parsimonious network parameter nor do we use the MLE. Here, we focus on forecast dominance for the network predictors (based on the different chosen power variance parameters). If we are mainly interested in receiving a model that provides optimal forecast dominance, we should not consider three different outputs as in (11.7), but rather fit the same output to different loss functions; the required changes are minimal, see Listing 11.2. Namely, consider one FN network with one output \(\mu(\boldsymbol{x}_i)\), but evaluate this output simultaneously on the different chosen loss functions

$$\displaystyle \begin{aligned} \mathcal{L}_{\boldsymbol{Y}}(\boldsymbol{w}, \boldsymbol{\beta}) = \frac{1}{n}\sum_{i=1}^n \sum_{p\in\{2,\,2.5,\,3\}} \frac{\eta_p v_i}{\varphi_p}\, {\mathfrak d}_p\left(Y_i, \mu(\boldsymbol{x}_i)\right). \end{aligned} $$ (11.8)

In contrast to (11.7), we only have one FN network regression function \(\boldsymbol{x}_i \mapsto \mu(\boldsymbol{x}_i)\), here.

Listing 11.2 FN network with a single output for multiple losses

We present the results on the last line of Table 11.3, called ‘multi-loss’. In our case, this approach is slightly less competitive (out-of-sample), however, it is less sensitive to outliers since we need to have a good regression function simultaneously for multiple loss functions. Of course, this multiple loss fitting approach is not restricted to different power variance parameters. As stated in Theorem 4.19, Bregman divergences are the only consistent loss functions for mean estimation, and the unit deviances are examples of Bregman divergences. Forecast dominance now suggests that we may choose any Bregman divergence as a loss function in Listing 11.2 as long as it reflects the expected properties of the model (and of the observed data), otherwise we will receive bad convergence properties, see also Sect. 11.1.4, below. For instance, we can robustify the Poisson claim counts model by additionally considering the deviance loss of the negative binomial model that also assesses over-dispersion.
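The consistency statement can be made concrete in the simplest possible setting. The sketch below assumes a homogeneous portfolio with one constant predictor μ and made-up claim amounts: the derivative of the average deviance in μ is proportional to (ȳ − μ)/μ^p, so every unit deviance, and hence any positively weighted combination as in (11.8), is minimized at the sample mean.

```python
import math

def unit_deviance(y, mu, p):
    """Tweedie unit deviance for y, mu > 0 and p > 1."""
    if p == 2:
        return 2.0 * (y / mu - 1.0 - math.log(y / mu))
    return 2.0 * (
        y * (y ** (1 - p) - mu ** (1 - p)) / (1 - p)
        - (y ** (2 - p) - mu ** (2 - p)) / (2 - p)
    )

def multi_loss(ys, mu, eta):
    """Single predictor mu evaluated on several deviance losses with weights eta_p."""
    n = len(ys)
    return sum(w * sum(unit_deviance(y, mu, p) for y in ys) / n for p, w in eta.items())

ys = [120.0, 800.0, 2500.0, 40000.0]  # made-up claim amounts
ybar = sum(ys) / len(ys)
eta = {2: 1.0, 2.5: 50.0, 3: 1000.0}  # arbitrary positive weights
for factor in (0.8, 0.99, 1.0, 1.01, 1.2):
    print(f"mu = {factor:.2f} * ybar: loss = {multi_loss(ys, factor * ybar, eta):.8f}")
```

In a regression model the different losses no longer share one minimizer in finite samples, which is why fitting them jointly acts as a compromise between the models.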

11.1.2.5 Nagging Predictor

The loss figures in Table 11.3 are averaged deviance losses over 20 different runs of the gradient descent algorithm with different seeds (to receive stable results). Rather than averaging over the losses, we should improve the models by averaging over the predictors and, then, calculate the losses on these averaged predictors; this is exactly the proposal of the nagging predictor (7.44). We calculate the nagging predictor of the models that are simultaneously fit to the different loss functions (lines ‘multi-output’ and ‘multi-loss’ of Table 11.3). The resulting nagging predictors are reported in Table 11.4. This table shows that we give a clear preference to the nagging predictors. The simultaneous loss fitting (11.8) gives the best out-of-sample results for the nagging predictor, see the last line of Table 11.4.
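The difference between averaging losses and averaging predictors can be sketched as follows. The observations and the three runs of predictors are made-up numbers standing in for networks fitted with different seeds; `nagging_predictor` simply averages the predictors policy by policy.

```python
import math

def unit_deviance(y, mu, p):
    """Tweedie unit deviance for y, mu > 0 and p > 1."""
    if p == 2:
        return 2.0 * (y / mu - 1.0 - math.log(y / mu))
    return 2.0 * (
        y * (y ** (1 - p) - mu ** (1 - p)) / (1 - p)
        - (y ** (2 - p) - mu ** (2 - p)) / (2 - p)
    )

def avg_deviance(ys, mus, p):
    return sum(unit_deviance(y, m, p) for y, m in zip(ys, mus)) / len(ys)

def nagging_predictor(preds_by_run):
    """Average the predictors over the runs, separately for every observation."""
    n_runs = len(preds_by_run)
    return [sum(col) / n_runs for col in zip(*preds_by_run)]

ys = [300.0, 1200.0, 5000.0]          # made-up observations
runs = [                              # made-up predictors of three runs
    [400.0, 1000.0, 4000.0],
    [350.0, 1500.0, 6500.0],
    [450.0, 1100.0, 4500.0],
]
nagging = nagging_predictor(runs)
for p in (2, 2.5, 3):
    loss_of_average = avg_deviance(ys, nagging, p)
    average_of_losses = sum(avg_deviance(ys, r, p) for r in runs) / len(runs)
    print(f"p = {p}: loss of nagging predictor {loss_of_average:.6g}, "
          f"average loss over runs {average_of_losses:.6g}")
```

The two printed quantities answer different questions: the first evaluates one improved predictor, the second only stabilizes the reported loss of a single run.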

Figure 11.4 shows the Tukey–Anscombe plot of the multi-loss nagging predictor for the different deviance losses (for unit dispersion). Again, the case p = 2.5 is closest to having a constant dispersion, and the other cases will require dispersion modeling φ(x).

Fig. 11.4 Tukey–Anscombe plots giving the deviance residuals of the multi-loss nagging predictor of Table 11.4 for different power variance parameters: (lhs) gamma deviances p = 2, (middle) power variance deviances p = 2.5, (rhs) inverse Gaussian deviances p = 3; the cyan lines show twice the estimated standard deviation of the deviance residuals as a function of the size of the logged estimated means \(\widehat {\mu }\)

Figure 11.5 shows the empirical auto-calibration property of the multi-loss nagging predictor. This auto-calibration property is calculated as in Listing 7.8. We observe that the auto-calibration property holds rather accurately. Only for claim predictors \(\widehat {\mu }(\boldsymbol {x}_i)\) above 10’000 CHF (vertical dotted line in Fig. 11.5) the fitted means under-estimate the observed average claim sizes. This affects (only) 1.7% of all claims and it could be corrected as described in Example 7.19.
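An empirical auto-calibration check compares, for policies with similar predictors, the average predictor with the average observation. The sketch below is not Listing 7.8: it uses simple bins of sorted predictors as a stand-in for a smoother, and the data are made up so that the highest predictors under-estimate the observations.

```python
def binned_calibration(preds, ys, n_bins):
    """Average predictor and average observation per bin of sorted predictors."""
    pairs = sorted(zip(preds, ys))
    n = len(pairs)
    out = []
    for b in range(n_bins):
        chunk = pairs[b * n // n_bins:(b + 1) * n // n_bins]
        if not chunk:
            continue  # can only happen if n_bins exceeds the sample size
        avg_pred = sum(m for m, _ in chunk) / len(chunk)
        avg_obs = sum(y for _, y in chunk) / len(chunk)
        out.append((avg_pred, avg_obs))
    return out

# made-up predictors and observations
preds = [500.0, 700.0, 900.0, 1200.0, 3000.0, 5000.0, 12000.0, 15000.0]
ys = [450.0, 750.0, 950.0, 1150.0, 2800.0, 5200.0, 16000.0, 20000.0]
table = binned_calibration(preds, ys, n_bins=4)
for avg_pred, avg_obs in table:
    print(f"average predictor {avg_pred:10.1f}   average observation {avg_obs:10.1f}")
```

Auto-calibration holds empirically where the two columns agree; a systematic gap in the top bin corresponds to the under-estimation above the vertical dotted line in Fig. 11.5.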

11.1.3 Lab: Deep Dispersion Modeling

From the Tukey–Anscombe plots in Fig. 11.4 we conclude that the dispersion requires regression modeling, too, as the dispersion does not seem to be constant over the whole range of the expected claim sizes. We therefore explore a double FN network model, which is similar in spirit to the double GLM of Sect. 5.5. We work within Tweedie’s family with power variance parameters p ≥ 2, and with unit deviances given by (11.2)–(11.3). The saddlepoint approximation (5.59) gives us

$$\displaystyle \begin{aligned} f(y; \theta, v/\varphi) \approx \left(\frac{2\pi\varphi}{v}\,V(y)\right)^{-1/2} \exp\left\{-\frac{1}{2\varphi/v}\,{\mathfrak d}_p(y,\mu)\right\}, \end{aligned} $$

with power variance function \(V(y)=y^p\). This saddlepoint approximation is formulated in the reproductive form for Y = X∕ω = Xφ∕v. This requires scaling of the observations X with the unknown φ to receive Y. In Sect. 5.5.4 we have shown how this problem can be solved. In this section we give a different proposal which is more robust in network fitting, and which benefits from the b-homogeneity of \({\mathfrak d}_p\), see (11.4).

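We consider the variable transformation y↦x = yω = yv∕φ. In the absolutely continuous case p ≥ 2 this gives us the approximation

$$\displaystyle \begin{aligned} f(x; \theta, v/\varphi) \approx \left(\frac{2\pi\varphi^{p-1}}{v^{p-1}}\,V(x)\right)^{-1/2} \exp\left\{-\frac{1}{2\varphi^{p-1}/v^{p-1}}\,{\mathfrak d}_p(x,\mu_p)\right\}, \end{aligned} $$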

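with mean \(\mu_p = \mu v/\varphi\) of X = Y v∕φ. We set \(\phi = -1/\varphi^{p-1} < 0\). This gives us the approximation

$$\displaystyle \begin{aligned} \ell_X(\mu_p, \phi) \approx \frac{v^{p-1}{\mathfrak d}_p(X,\mu_p)\,\phi - \left(-\log(-\phi)\right)}{2} - \frac{1}{2}\log\left(\frac{2\pi}{v^{p-1}}\,V(X)\right). \end{aligned} $$ (11.9)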

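For given mean \(\mu_p\) we again have a gamma approximation on the right-hand side, but we scale the dispersion differently. This gives us the approximate first moment

$$\displaystyle \begin{aligned} {\mathbb E}\left[v^{p-1}{\mathfrak d}_p(X,\mu_p)\right] \approx \kappa_2^{\prime}(\phi) = -1/\phi = \varphi^{p-1}. \end{aligned} $$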

The remainder of this modeling is similar to the residual MLE approach in Sect. 5.5.3. Namely, we set up two FN network regression functions

Parameter fitting is achieved by alternating the network parameter fitting of \(\mu_p(\boldsymbol{x})\) and \(\varphi_p(\boldsymbol{x})\), see also Sect. 5.5.4. We start the iteration by setting the dispersion constant to \(\widehat {\varphi }_p^{(0)}(\boldsymbol {x})\equiv \mathrm {const}\). In this case, the dispersion cancels in the score equations and the mean \(\widehat {\mu }_p^{(1)}(\boldsymbol {x})\) can be estimated without the explicit knowledge of the (constant) dispersion parameter \(\widehat {\varphi }^{(0)}_p\); this exactly provides the results of the previous Sect. 11.1.2. Then, we iterate this procedure for t ≥ 1. For given mean estimate \(\widehat {\mu }_p^{(t)}(\boldsymbol {x})\) we receive deviances \(v^{p-1}{\mathfrak d}_p(X, \widehat {\mu }_p^{(t)}(\boldsymbol {x}))\), and this allows us to estimate \(\widehat {\varphi }_p^{(t)}(\boldsymbol {x})\) from the approximate gamma model (11.9), and for given dispersion parameters \(\widehat {\varphi }_p^{(t)}(\boldsymbol {x})\) we estimate \(\widehat {\mu }_p^{(t+1)}(\boldsymbol {x})\) from the corresponding Tweedie’s model for the observation X.

Example 11.4

We revisit the Swiss accident insurance data example of Sect. 11.1.2, and we use the robustified representation learning approach (11.7) that simultaneously fits Tweedie’s models for the power variance parameters p = 2, 2.5, 3. The initial calibration step is done for constant dispersions \(\widehat {\varphi }_p^{(0)}(\boldsymbol {x})\equiv \mathrm {const}\), and it provides us with the estimated means \(\widehat {\mu }_p^{(1)}(\boldsymbol {x})\) as illustrated in Fig. 11.3. For stability reasons we choose the nagging predictor averaging over 20 different SGD runs with 20 different seeds. These estimated means \(\widehat {\mu }_p^{(1)}(\boldsymbol {x})\) give us the deviances \(v^{p-1}{\mathfrak d}_p(X, \widehat {\mu }_p^{(1)}(\boldsymbol {x}))\).

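Using these deviances allows us to alternate the dispersion and mean estimation for t ≥ 1. For given means \(\widehat {\mu }_p^{(t)}(\boldsymbol {x})\), p = 2, 2.5, 3, we set up a deep FN network \(\boldsymbol{x}\mapsto \boldsymbol{z}^{(d:1)}(\boldsymbol{x})\) that allows for a robustified deep dispersion learning \(\varphi_p(\boldsymbol{x})\), for p = 2, 2.5, 3. Under the log-link choice we consider the regression function with multiple outputs

$$\displaystyle \begin{aligned} \boldsymbol{x} \mapsto \left(\varphi_2(\boldsymbol{x}), \varphi_{2.5}(\boldsymbol{x}), \varphi_3(\boldsymbol{x})\right)^\top = \left(\exp\langle \boldsymbol{\alpha}_2, \boldsymbol{z}^{(d:1)}(\boldsymbol{x})\rangle,\ \exp\langle \boldsymbol{\alpha}_{2.5}, \boldsymbol{z}^{(d:1)}(\boldsymbol{x})\rangle,\ \exp\langle \boldsymbol{\alpha}_3, \boldsymbol{z}^{(d:1)}(\boldsymbol{x})\rangle\right)^\top, \end{aligned} $$ (11.10)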

for different output parameters \(\boldsymbol {\alpha }_{2}, \boldsymbol {\alpha }_{2.5}, \boldsymbol {\alpha }_{3} \in {\mathbb R}^{q_d+1}\). These three dispersion responses (11.10) share the common network parameter \(\widetilde {\boldsymbol {w}}=(\widetilde {\boldsymbol {w}}_1^{(1)}, \ldots ,\widetilde {\boldsymbol {w}}_{q_d}^{(d)})\) in the FN layers of \(\boldsymbol{z}^{(d:1)}\). The network fitting learns these parameters simultaneously for the different power variance parameters. Choose positive weights \(\widetilde {\eta }_p>0\), and define the combined deviance loss function (based on the gamma model \(\kappa_2\) and having dispersion parameter 2)

where \(\boldsymbol{X} = (X_1, \ldots, X_n)\) collects the unscaled observations \(X_i = Y_i v_i/\varphi_i\). Thus, for all power variance parameters p = 2, 2.5, 3 we fit a gamma model \({\mathfrak d}_2(\cdot ,\cdot )/2\) to the observed deviances (observations) \(v^{p-1}_i{\mathfrak d}_p(X_i, \widehat {\mu }_p^{(t)}(\boldsymbol {x}_i))\) providing us with the estimated dispersions \(\widehat {\varphi }_p^{(t)}(\boldsymbol {x}_i)\). This fitting step is received by the R code of Listing 11.1, where the losses on line 20 are all given by gamma deviance losses (11.11) and the deviances \(v_i^{p-1}{\mathfrak d}_p(X_i, \widehat {\mu }_p^{(t)}(\boldsymbol {x}_i))\) play the role of the responses (observations).

In the next step we update the mean estimates \(\widehat {\mu }_p^{(t+1)}(\boldsymbol {x}_i)\), given the estimated dispersions \(\widehat {\varphi }_p^{(t)}(\boldsymbol {x}_i)\) from the previous step. This requires that we optimize the expected responses (11.6) for given heterogeneous dispersion parameters. We therefore consider the loss function for positive weights \(\eta_p > 0\), see (11.7),

We fit this model by iterating this approach for t ≥ 1: we start from the predictors of Sect. 11.1.2 providing us with the first mean estimates \(\widehat {\mu }_p^{(1)}(\boldsymbol {x}_i)\). Based on these mean estimates we iterate this robustified estimation of \(\widehat {\varphi }_p^{(t)}(\boldsymbol {x}_i)\) and \(\widehat {\mu }_p^{(t)}(\boldsymbol {x}_i)\). We give some remarks:

1. We use the robustified versions (11.11) and (11.12), respectively, where we simultaneously fit all power variance parameters p = 2, 2.5, 3 on the commonly learned representations \(\boldsymbol{z}_i = \boldsymbol{z}^{(d:1)}(\boldsymbol{x}_i)\) in the last FN layer of the mean and the dispersion network, respectively.

2. For both FN networks of mean μ and dispersion φ modeling we use the same network architecture of depth d = 3 having \((q_1, q_2, q_3) = (20, 15, 10)\) neurons in the FN layers, the hyperbolic tangent activation function, and the log-link for the output. These two networks only differ in their network parameters \((\boldsymbol{w}, \boldsymbol{\beta}_2, \boldsymbol{\beta}_{2.5}, \boldsymbol{\beta}_3)\) and \((\widetilde{\boldsymbol{w}}, \boldsymbol{\alpha}_{2}, \boldsymbol{\alpha}_{2.5}, \boldsymbol{\alpha}_{3})\), respectively.

3. For fitting we use the nadam version of SGD. For the early stopping we use a training data \(\mathcal{U}\) to validation data \(\mathcal{V}\) split of 8 : 2.

4. To ensure consistency within the individual SGD runs across t ≥ 1, we use the learned network parameter of loop t as initial value for loop t + 1. This ensures monotonicity across the iterations in the log-likelihood and the loss function, respectively, up to the fact that the random mini-batches in SGD may distort this monotonicity.

5. To reduce the elements of randomness in SGD fitting we run this iteration procedure 20 times with different seeds, and we output the nagging predictors for \(\widehat{\mu}_p^{(t)}(\boldsymbol{x}_i)\) and \(\widehat{\varphi}_p^{(t)}(\boldsymbol{x}_i)\) averaged over the 20 runs for every t in Table 11.5.

Table 11.5 Iteration of mean \(\widehat {\mu }_p^{(t)}\) and dispersion \(\widehat {\varphi }_p^{(t)}\) estimation for the gamma model p = 2, the power variance parameter p = 2.5 model and the inverse Gaussian model p = 3: the numbers correspond to \(-2 \ell _{\boldsymbol {X}}(\widehat {\mu }_p^{(t)}, \widehat {\varphi }_p^{(t)})\); the last line corrects \(-2 \ell _{\boldsymbol {X}}(\widehat {\mu }_p^{(t)}, \widehat {\varphi }_p^{(t)})\) by 2 ⋅ 2 ⋅ 812 = 3′248 (twice the number of parameters used in the mean and dispersion FN networks)

We iterate this algorithm over two loops, and the results are presented in Table 11.5. We observe a decrease of \(-2 \ell _{\boldsymbol {X}}(\widehat {\mu }_p^{(t)}, \widehat {\varphi }_p^{(t)})\) by iterating the fitting algorithm for t ≥ 1. For AIC, we would have to correct twice the negative log-likelihood by twice the number of MLE estimated parameters. We also adjust here correspondingly, though the correction is not justified by any theory, because we do not work with the MLE nor do we have a parsimonious model for mean and dispersion estimation. Nevertheless, we receive smaller values than in Table 11.1 which supports the use of this more complex double FN network model.

Comparing the three power variance parameter models, we now give preference to the inverse Gaussian model, as it has the biggest log-likelihood. Note that we directly compare all power variance models as the complexity is equal in all models (they only differ in the chosen power variance parameter) and the joint robustified fitting applies the same stopping rule to all power variance parameter models. The same result is obtained by comparing the out-of-sample log-likelihoods. Note that we do not compare the deviance losses, here, because the unit deviances are not designed to estimate parameters in vector-valued parameter families; we model dispersion as a second parameter.

Next, we study the estimated dispersions \(\widehat {\varphi }_p(\boldsymbol {x}_i)\) as a function of the estimated means \(\widehat {\mu }_p(\boldsymbol {x}_i)\). We fit a spline to \(\widehat {\varphi }_p(\boldsymbol {x}_i)\) as a function of \(\widehat {\mu }_p(\boldsymbol {x}_i)\), and we receive estimates that almost perfectly match the cyan lines in Fig. 11.4. This provides a proof of concept that the dispersion regression model finds the right level of dispersion as a function of the expected means.

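Using the mean and dispersion estimates, we can calculate the dispersion scaled deviance residuals

$$\displaystyle \begin{aligned} r_i^{\mathrm{D}} ~=~ \mathrm{sign}\left(X_i - \widehat{\mu}_p(\boldsymbol{x}_i)\right) \sqrt{\frac{v_i^{p-1}\,{\mathfrak d}_p\left(X_i, \widehat{\mu}_p(\boldsymbol{x}_i)\right)}{\widehat{\varphi}_p(\boldsymbol{x}_i)}}. \end{aligned} \tag{11.13}$$

This then allows us to give the Tukey–Anscombe plots for the three considered power variance parameters.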

The corresponding plots are given in Fig. 11.6; the difference to Fig. 11.4 is that the latter considers unit dispersion whereas the former scales the residuals with the rooted dispersion \(\widehat{\varphi}_p(\boldsymbol{x}_i)^{1/2}\); note that \(v_i \equiv 1\) in this example. By scaling with the rooted dispersion the resulting deviance residuals \(r_i^{\mathrm{D}}\) should roughly have unit standard deviation. From Fig. 11.6 we observe that this is indeed the case: the cyan lines show spline fits of twice the standard deviation of the deviance residuals \(r_i^{\mathrm{D}}\). These splines are of magnitude 2, which verifies the unit standard deviation property. Moreover, the cyan lines are roughly horizontal, which indicates that the dispersion estimation and the scaling work across all expected claim sizes \(\widehat{\mu}_p(\boldsymbol{x}_i)\). The three different power variance parameters p = 2, 2.5, 3 show different behaviors in the lower and upper tails of the residuals (centering around the orange horizontal zero line in Fig. 11.6), which corresponds to the different distributional properties of the chosen models.

Tukey–Anscombe plots giving the dispersion scaled deviance residuals \(r_i^{\mathrm {D}}\) (11.13) of the models jointly fitting the mean parameters \(\widehat {\mu }_p(\boldsymbol {x}_i)\) and the dispersion parameters \(\widehat {\varphi }_p(\boldsymbol {x}_i)\): (lhs) gamma model, (middle) power variance parameter p = 2.5 model, and (rhs) inverse Gaussian models; the cyan lines correspond to 2 standard deviations
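The unit standard deviation property of the dispersion scaled deviance residuals can be checked on simulated data. The following Python sketch assumes an inverse Gaussian model (p = 3) with unit exposures and hypothetical, feature-free draws for the means and dispersions; it does not use the accident insurance data. In the inverse Gaussian case the scaled unit deviance is exactly chi-squared distributed with one degree of freedom, so the second moment of the residuals should be close to 1.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 200_000

# Hypothetical heterogeneous means and dispersions (stand-ins for network outputs)
mu = np.exp(rng.normal(0.0, 0.5, size=n))
phi = np.exp(rng.normal(-1.0, 0.3, size=n))

# Inverse Gaussian claims with mean mu and shape 1 / phi (exposure v_i = 1)
x = rng.wald(mean=mu, scale=1.0 / phi)

# Inverse Gaussian unit deviance (p = 3) and dispersion scaled deviance residuals
d3 = (x - mu) ** 2 / (mu ** 2 * x)
r = np.sign(x - mu) * np.sqrt(d3 / phi)

second_moment = float(np.mean(r ** 2))  # should be close to 1
```

A spline fit of the residual standard deviation against the estimated means, as in the Tukey–Anscombe plots, would then be roughly horizontal if the dispersion model is adequate.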

We further analyze the gamma and the inverse Gaussian models. Note that the analysis of the power variance models for general power variance parameters p ≠ 0, 1, 2, 3 is more difficult because neither the EDF density nor the EDF distribution function have a closed form. To analyze the gamma and the inverse Gaussian models we simulate observations \(X^{\mathrm {sim}}_t\), t = 1, …, T, from the estimated models (using the out-of-sample features \(\boldsymbol {x}^\dagger _t\) of the test data \(\mathcal {T}\)), and we compare them against the true out-of-sample observations \(X^\dagger _t\). Figure 11.7 shows the results for the gamma model (lhs) and the inverse Gaussian model (rhs) on the log-scale. A good fit has been achieved if the black dots lie on the red diagonal line (in the colored version), because then the simulated data shares similar features as the observed data. The fit of the inverse Gaussian model seems reasonably good.

On the other hand, we see that the gamma model gives a poor fit, especially in the lower tail. This supports the AIC values of Table 11.5. The problem with the gamma model is that the data is more heavy-tailed than the gamma model can accommodate. As a consequence, the dispersion parameter estimates \(\widehat{\varphi}_2(\boldsymbol{x}^\dagger_t)\) in the gamma model compensate for this by taking values bigger than 1. A dispersion parameter bigger than 1 implies a shape parameter in the gamma model of \(\widehat{\alpha}^\dagger_t=1/\widehat{\varphi}_2(\boldsymbol{x}^\dagger_t)<1\), and the resulting gamma density is strictly decreasing, see Fig. 2.1. If we simulate from this model we obtain many observations \(X^{\mathrm{sim}}_t\) close to zero (from the strictly decreasing density). This can be seen from the lower-left part of the graph in Fig. 11.7 (lhs): the fitted model suggests that we should have many observations with \(X^\dagger_t \in (0,1)\), or on the log-scale \(\mathrm{log}(X^\dagger_t)<0\). However, the graph shows that this is not the case in the real data. Figure 11.7 (middle) shows the boxplot of the estimated shape parameters \(\widehat{\alpha}^\dagger_t\) on the test data, 1 ≤ t ≤ T, verifying that most insurance policies of the test data \(\mathcal{T}\) receive a shape parameter \(\widehat{\alpha}^\dagger_t\) less than 1.
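The effect of a gamma shape parameter below 1 is easy to reproduce in a small simulation. The following Python sketch uses hypothetical values (mean 1, dispersions 2 and 0.5, threshold 0.1) and compares the share of simulated observations close to zero.

```python
import numpy as np

rng = np.random.default_rng(2)
mu, n = 1.0, 100_000

def share_near_zero(phi, threshold=0.1):
    """Share of gamma simulations below `threshold`, for mean mu and dispersion phi."""
    shape = 1.0 / phi                                   # gamma shape alpha = 1 / phi
    sims = rng.gamma(shape, scale=mu / shape, size=n)   # mean = shape * scale = mu
    return float(np.mean(sims < threshold))

share_phi_2 = share_near_zero(phi=2.0)     # shape 0.5: strictly decreasing density
share_phi_half = share_near_zero(phi=0.5)  # shape 2: density vanishes at zero
```

With these choices roughly a quarter of the simulations fall below the threshold for shape 0.5, against about 2% for shape 2, which is the mechanism behind the excess of small simulated claims in the gamma model.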

We conclude that the inverse Gaussian double FN network model seems to work well for this data, and we give preference to this model. \(\blacksquare \)

11.1.4 Pseudo Maximum Likelihood Estimator

This short section gives a mathematical foundation to parameter estimation under model uncertainty and model misspecification. We summarize the results of Gourieroux et al. [168], and we refrain from giving any proofs in this section. Assume that the real-valued observations \(Y_i\), 1 ≤ i ≤ n, have been generated by the model

$$\displaystyle \begin{aligned} Y_i ~=~ \mu_{\zeta_0}(\boldsymbol{x}_i) + \varepsilon_i, \end{aligned} \tag{11.14}$$

with (true) parameter \(\zeta_0 \in \Lambda \subset {\mathbb R}^r\), feature \(\boldsymbol{x}_i \in \mathcal{X} \subseteq \{1\}\times {\mathbb R}^q\), and where the conditional distribution of the noise random variables \((\varepsilon_i)_{1\le i \le n}\) satisfies the conditional independence property \(p_\varepsilon (\varepsilon_1,\ldots , \varepsilon_n|\boldsymbol{x}_1,\ldots , \boldsymbol{x}_n) = \prod_{i=1}^n p_\varepsilon (\varepsilon_i|\boldsymbol{x}_i)\). Denote by \(p_{\boldsymbol{x}}(\boldsymbol{x})\) the portfolio distribution of the features \(\boldsymbol{x}\). Thus, under (11.14), the claim Y of a randomly selected policy is generated by the joint probability measure \(p_{\varepsilon,\boldsymbol{x}}(\varepsilon, \boldsymbol{x}) = p_\varepsilon(\varepsilon|\boldsymbol{x})\,p_{\boldsymbol{x}}(\boldsymbol{x})\). The technical assumptions under which the following statements hold are given in Assumption 11.9 at the end of this section.

Let \(F_0(\cdot|\boldsymbol{x}_i)\) denote the true conditional distribution of \(Y_i\), given \(\boldsymbol{x}_i\). Typically, this (true) conditional distribution is unknown. It is assumed to provide the first two conditional moments

$$\displaystyle \begin{aligned} {\mathbb E}\left[Y_i \middle| \boldsymbol{x}_i\right] = \mu_{\zeta_0}(\boldsymbol{x}_i) \qquad \text{and} \qquad \mathrm{Var}\left(Y_i \middle| \boldsymbol{x}_i\right) = \sigma^2_0(\boldsymbol{x}_i). \end{aligned}$$

Thus, \(\varepsilon_i|{ }_{\boldsymbol{x}_i}\) is assumed to be centered with conditional variance \(\sigma^2_0(\boldsymbol{x}_i)\), see (11.14). Our goal is to estimate the (true) parameter \(\zeta_0 \in \Lambda\), given that the conditional distribution \(F_0(\cdot|\boldsymbol{x})\) of the observations is unknown. Throughout we assume parameter identifiability, i.e., if \(\mu_{\zeta_1}(\boldsymbol{x})=\mu_{\zeta_2}(\boldsymbol{x})\), \(p_{\boldsymbol{x}}\)-a.s., then \(\zeta_1 = \zeta_2\). The following estimator is called pseudo maximum likelihood estimator (PMLE)

$$\displaystyle \begin{aligned} \widehat{\zeta}^{\mathrm{PMLE}}_n ~=~ \underset{\zeta \in \Lambda}{\arg\min}~ \frac{1}{n} \sum_{i=1}^n {\mathfrak d}\left(Y_i, \mu_{\zeta}(\boldsymbol{x}_i)\right), \end{aligned} \tag{11.15}$$

where \({\mathfrak d}(y,\mu )\) is the unit deviance of a (pre-chosen) single-parameter linear EDF being parametrized by the same parameter space \(\Lambda \subset {\mathbb R}^r\) as the original random variables (11.14); note that Λ is not the effective domain Θ of the chosen EDF. \(\widehat{\zeta}^{\mathrm{PMLE}}_n\) is called PMLE because it is an MLE for \(\zeta_0 \in \Lambda\), but not in the right model, because the pre-chosen EDF in (11.15) typically differs from the (unknown) true conditional distribution \(F_0(\cdot|\boldsymbol{x})\). Nevertheless, we may hope to find the true parameter \(\zeta_0\), but possibly at a slower asymptotic rate. This is exactly what is going to be stated in the next theorems.

Theorem 11.5 (Theorem 1 of Gourieroux et al. [168])

Denote by \(\mathcal{M} = \kappa'(\mathring{\boldsymbol{\Theta}})\) the dual mean parameter space of the pre-chosen EDF (having cumulant function κ), and assume that \(\mu_{\zeta}(\boldsymbol{x})\in \mathcal{M}\) for all \(\boldsymbol{x} \in \mathcal{X}\) and ζ ∈ Λ. Let Assumption 11.9, below, hold. The PMLE \(\widehat{\zeta}^{\mathrm{PMLE}}_n\) is strongly consistent for \(\zeta_0\), i.e., it converges a.s. as n →∞.

This theorem tells us that we can perform MLE in a pre-chosen EDF (which may differ from the true data model), and asymptotically we find the true parameter ζ 0 of the data model F 0(⋅|x). Of course, this uses the fact that any unit deviance \({\mathfrak d}\) is a strictly consistent loss function for mean estimation, see Theorem 4.19. We do not only receive consistency, but the following theorem also gives us the rate of convergence.

Theorem 11.6 (Theorem 3 of Gourieroux et al. [168])

Assume the same conditions as in Theorem 11.5. The PMLE \(\widehat{\zeta}^{\mathrm{PMLE}}_n\) has the following asymptotic behavior

with the following matrices evaluated in ζ = ζ 0

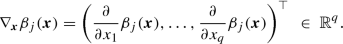

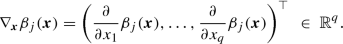

where \(h = (\kappa')^{-1}\) is the canonical link of the pre-chosen EDF, and with the change of variable \(\zeta \mapsto \theta = \theta(\zeta) = h(\mu_\zeta(\boldsymbol{x})) \in \boldsymbol{\Theta}\), for given feature \(\boldsymbol{x}\), having Jacobian

Remark that \(\mathcal {I}^\ast (\zeta )\) averages Fisher’s information \(\mathcal {I}^\ast (\zeta ;\boldsymbol {x})\) (of the chosen EDF) over the feature distribution p x. This theorem can be seen as a modification of (3.36) to the regression case. Theorem 11.6 gives us the asymptotic normality of the PMLE, and the resulting asymptotic variance depends on how well the pre-chosen EDF matches the true data distribution F 0(⋅|x). The following lemma corresponds to Property 5 in Gourieroux et al. [168].

Lemma 11.7

The asymptotic variance in Theorem 11.6 has the lower bound, set ζ = ζ 0 and \(\sigma ^2(\boldsymbol {x})=\sigma _0^2(\boldsymbol {x})\),

Proof

We set \(\tau^2(\boldsymbol{x}) = \kappa''(h(\mu_\zeta(\boldsymbol{x})))\). We have  . The following matrix is positive semi-definite and it satisfies

This proves the claim. □

Theorem 11.6 and Lemma 11.7 tell us that if we estimate the parameter ζ 0 of the unknown model F 0(⋅|x) with PMLE based on a single-parameter linear EDF, we receive minimal asymptotic variance if we can match the variance \(V(\mu _{\zeta _0}(\boldsymbol {x}))=\kappa ''(h(\mu _{\zeta _0}(\boldsymbol {x})))\) of the chosen EDF with the variance \(\sigma ^2_0(\boldsymbol {x})\) of the true data model. E.g., if we know that the variance in the true model behaves as \(\sigma ^2_0(\boldsymbol {x}) = \mu ^3_{\zeta _0}(\boldsymbol {x})\) we should select the inverse Gaussian model with variance function V (μ) = μ 3 for PMLE.
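The consistency statement can be illustrated numerically. The following Python sketch is an illustration under stated assumptions, not part of Gourieroux et al. [168]: the true model is log-normal with a log-linear mean function (hypothetical parameter values), whereas the PMLE is computed from the gamma unit deviance by Newton's method.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 50_000
zeta0 = np.array([0.5, 0.3])                            # hypothetical true parameter
X = np.column_stack([np.ones(n), rng.normal(size=n)])   # features in {1} x R
mu0 = np.exp(X @ zeta0)                                 # true mean function (log-link)
sigma = 0.5
# True data model: log-normal responses with conditional mean mu0 (not a gamma model)
Y = mu0 * np.exp(sigma * rng.normal(size=n) - 0.5 * sigma ** 2)

def gamma_pmle(X, Y, n_iter=25):
    """Minimize the average gamma unit deviance over zeta by Newton's method."""
    zeta = np.zeros(X.shape[1])
    zeta[0] = np.log(Y.mean())                          # start from the homogeneous model
    for _ in range(n_iter):
        ratio = Y / np.exp(X @ zeta)
        grad = X.T @ (1.0 - ratio) / len(Y)             # gradient of half the deviance
        hess = (X * ratio[:, None]).T @ X / len(Y)      # Hessian of half the deviance
        zeta = zeta - np.linalg.solve(hess, grad)
    return zeta

zeta_hat = gamma_pmle(X, Y)   # close to zeta0 although the gamma model is misspecified
```

In this example the gamma variance function V(μ) = μ² happens to match the log-normal variance structure up to a constant, so the gamma choice is also the efficient one in the sense of Lemma 11.7; replacing it by another unit deviance would keep consistency but change the asymptotic variance.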

If the members of the single-parameter linear EDF do not fully match the variance structure of the true data, we can turn our attention to a dispersion submodel as in Sect. 5.5.1. Assume for the variance structure of the true data

for a regression function \(\boldsymbol {x} \mapsto s^2_{\alpha _0}(\boldsymbol {x})\) involving the (true) regression parameter α 0 and exposures v i > 0. If we choose a fixed EDF, we have the log-likelihood function

Equating the variance structure of the true data model with the variance in this pre-specified EDF, we obtain the feature-dependent dispersion parameter

with variance function V (μ) = (κ″ ∘ h)(μ). The following theorem proposes a two-step procedure for this estimation problem.

Theorem 11.8 (Theorem 4 of Gourieroux et al. [168])

Assume \(\widetilde{\zeta}_n\) and \(\widetilde{\alpha}_n\) are strongly consistent estimators for \(\zeta_0\) and \(\alpha_0\), as n →∞, such that \(\sqrt{n}(\widetilde{\zeta}_n - \zeta_0)\) and \(\sqrt{n}(\widetilde{\alpha}_n - \alpha_0)\) are bounded in probability. The quasi-generalized pseudo maximum likelihood estimator (QPMLE) of \(\zeta_0\) is obtained by

Under Assumption 11.9, below, \(\widehat{\zeta}^{\mathrm{QPMLE}}_n\) is strongly consistent and best asymptotically normal, i.e.,

This justifies the approach(es) in the previous chapters and sections, though not fully, because we neither work with the MLE in FN networks nor do we care about identifiability of parameters. Nevertheless, this short section suggests finding strongly consistent estimators \(\widetilde{\zeta}_n\) and \(\widetilde{\alpha}_n\) for \(\zeta_0\) and \(\alpha_0\). This gives us a first model calibration step that allows us to specify the dispersion structure \(\boldsymbol{x} \mapsto \varphi(\boldsymbol{x})\) via (11.16). Using this dispersion structure and the deviance loss function (4.9) for a variable dispersion parameter \(\varphi(\boldsymbol{x})\), the QPMLE is obtained in the second step by replacing the likelihood maximization by the deviance loss minimization,

This QPMLE is best asymptotically normal, thus, asymptotically optimal within the EDF. There might still be better estimators for ζ 0, but these are outside the EDF.

If we turn M-estimation into Z-estimation we have the requirement for ζ, see also (11.5),

Thus, it all boils down to finding the right variance structure to obtain the optimal asymptotic behavior.

The previous statements hold true under the following technical assumptions. These are taken from Appendix 1 of Gourieroux et al. [167], and they are an adapted version of the ones in Burguete et al. [61].

Assumption 11.9

(i) \(\mu_\zeta(\boldsymbol{x})\) and \({\mathfrak d}(y,\mu_{\zeta}(\boldsymbol{x}))\) are continuous w.r.t. all variables and twice continuously differentiable in ζ;

(ii) \(\Lambda \subset {\mathbb R}^r\) is a compact set and the true parameter \(\zeta_0\) is in the interior of Λ;

(iii) almost every realization of \((\varepsilon_i, \boldsymbol{x}_i)\) is a Cesàro sum generator w.r.t. the probability measure \(p_{\varepsilon,\boldsymbol{x}}(\varepsilon, \boldsymbol{x}) = p_\varepsilon(\varepsilon|\boldsymbol{x})\,p_{\boldsymbol{x}}(\boldsymbol{x})\) and to a dominating function \(b(\varepsilon, \boldsymbol{x})\);

(iv) the sequence \((\boldsymbol{x}_i)_i\) is a Cesàro sum generator w.r.t. \(p_{\boldsymbol{x}}\) and \(b(\boldsymbol{x})=\int_{{\mathbb R}} b(\varepsilon , \boldsymbol{x}) dp_{\varepsilon}(\varepsilon |\boldsymbol{x})\);

(v) for each \(\boldsymbol{x} \in \{1\}\times {\mathbb R}^q\), there exists a neighborhood \(N_{\boldsymbol{x}} \subset \{1\}\times {\mathbb R}^q\) such that

$$\displaystyle \begin{aligned} \int_{{\mathbb R}} \sup_{\boldsymbol{x}' \in N_{\boldsymbol{x}}} b(\varepsilon, \boldsymbol{x}')\, dp_\varepsilon(\varepsilon|\boldsymbol{x})<\infty; \end{aligned}$$

(vi) the functions \({\mathfrak d}(Y,\mu_{\zeta}(\boldsymbol{x}))\), \(\partial {\mathfrak d}(Y,\mu_{\zeta}(\boldsymbol{x}))/\partial \zeta_k\), \(\partial^2{\mathfrak d}(Y,\mu_{\zeta}(\boldsymbol{x}))/\partial \zeta_k \partial \zeta_l\) are dominated by \(b(\varepsilon, \boldsymbol{x})\).

11.2 Deep Quantile Regression

So far, in network regression modeling, we have not addressed the question of prediction uncertainty. As mentioned in Remarks 4.2 on forecast evaluation, there are different sources that contribute to prediction uncertainty. There is the model and parameter estimation uncertainty, which may result in an inappropriate model choice, and there is the irreducible risk which comes from the fact that we forecast random variables which inherit a natural randomness that cannot be controlled.

We have discussed methods of evaluating model and parameter estimation error, such as the asymptotic normality of MLEs within GLMs, and we have discussed forecast dominance, the bootstrap method or the nagging predictor that allow one to assess the different sources of prediction uncertainty. However, we have not explicitly quantified these sources of uncertainty within the class of network regression models. We make an attempt in Sect. 11.4, below, by considering the fluctuations generated by bootstrap simulations. The irreducible risk can be assessed once we have a suitable statistical model; in Example 11.4 we have studied a gamma and an inverse Gaussian model on an explicit data set, and these models can be used, e.g., to calculate quantiles. In this section we consider a distribution-free approach that directly estimates these quantiles. Recall from Section 5.8.3 that quantiles are elicitable with the pinball loss as a strictly consistent loss function, see Theorem 5.33. This allows us to directly estimate the quantiles from the data.

11.2.1 Deep Quantile Regression: Single Quantile

In this section we present a way of assessing the irreducible risk which does not require a sophisticated model evaluation of distributional assumptions. Quantile regression is increasingly used in the machine learning community because it is a robust way of quantifying the irreducible risk; we refer to Meinshausen [270], Takeuchi et al. [350] and Richman [314]. We recall that quantiles are elicitable having the pinball loss as a strictly consistent loss function, see Theorem 5.33. We define a FN network regression model that allows us to directly estimate the quantiles based on the pinball loss. We therefore use an adapted version of the R code of Listing 9 in Richman [314]; this adapted version has been proposed in Fissler et al. [130] to ensure that different quantiles respect monotonicity. For any two quantile levels \(0 < \tau_1 < \tau_2 < 1\) we have

$$\displaystyle \begin{aligned} F^{-1}(\tau_1) ~\le~ F^{-1}(\tau_2), \end{aligned} \tag{11.17}$$

where \(F^{-1}\) denotes the generalized inverse of distribution function F, see (5.80). If we simultaneously learn these quantiles for different quantile levels \(\tau_1 < \tau_2\), we need to force the network to respect this monotonicity (11.17). This can be achieved by a special network architecture in the output layer, which is presented in the next section.

We start by considering a single deep τ-quantile regression for a quantile level τ ∈ (0, 1). For datum \((Y, \boldsymbol{x})\) we consider the regression function

$$\displaystyle \begin{aligned} \boldsymbol{x} ~\mapsto~ g\left(F_{Y|\boldsymbol{x}}^{-1}(\tau)\right) = \left\langle \boldsymbol{\beta}_\tau, \boldsymbol{z}^{(d:1)}(\boldsymbol{x}) \right\rangle, \end{aligned} \tag{11.18}$$

for a strictly monotone and smooth link function g, output parameter \(\boldsymbol{\beta}_\tau \in {\mathbb R}^{q_d+1}\), and where \(\boldsymbol{x} \mapsto \boldsymbol{z}^{(d:1)}(\boldsymbol{x})\) is a deep network. We add a lower index Y |x to the generalized inverse \(F_{Y|\boldsymbol{x}}^{-1}\) to highlight that we consider the conditional distribution of Y, given feature \(\boldsymbol{x} \in \mathcal{X}\). In the case of a deep FN network, (11.18) involves a network parameter \(\boldsymbol{\vartheta}\) that needs to be estimated. Of course, the deep network architecture \(\boldsymbol{x} \mapsto \boldsymbol{z}^{(d:1)}(\boldsymbol{x})\) could also involve any other feature, such as CN or LSTM layers, embedding layers or a NLP text recognition feature. This would change the network architecture, but it would not change anything from a methodological viewpoint.

To estimate this regression parameter \(\boldsymbol{\vartheta}\) from independent data \((Y_i, \boldsymbol{x}_i)\), 1 ≤ i ≤ n, we consider the objective function

$$\displaystyle \begin{aligned} \widehat{\boldsymbol{\vartheta}} ~=~ \underset{\boldsymbol{\vartheta}}{\arg\min}~ \frac{1}{n}\sum_{i=1}^n L_\tau\left(Y_i, g^{-1}\left\langle \boldsymbol{\beta}_\tau, \boldsymbol{z}^{(d:1)}(\boldsymbol{x}_i) \right\rangle\right), \end{aligned}$$

with the strictly consistent pinball loss function \(L_\tau\) for the τ-quantile. Alternatively, we could choose any other loss function satisfying Theorem 5.33, and we may try to find the asymptotically optimal one (similarly to Theorem 11.8). We refrain from doing so, but we mention Komunjer–Vuong [222]. Fitting the network parameter \(\boldsymbol{\vartheta}\) is then done in complete analogy to finding an optimal network parameter for network mean modeling. The only change is that we replace the deviance loss function by the pinball loss, e.g., in Listing 7.3 we have to exchange the loss function on line 5 correspondingly.
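The strict consistency of the pinball loss can be seen in the simplest case of a constant forecast (null model). The following Python sketch uses hypothetical log-normal data and a grid search; it is not the loss implementation of the book's listings.

```python
import numpy as np

def pinball_loss(y, q, tau):
    """Average pinball loss of quantile forecasts q for observations y at level tau."""
    y, q = np.asarray(y, dtype=float), np.asarray(q, dtype=float)
    return float(np.mean(np.where(y >= q, tau * (y - q), (1.0 - tau) * (q - y))))

rng = np.random.default_rng(4)
y = rng.lognormal(mean=0.0, sigma=1.0, size=20_000)   # hypothetical claim sizes
tau = 0.9

grid = np.linspace(0.1, 10.0, 991)                    # candidate constant forecasts
losses = [pinball_loss(y, c, tau) for c in grid]
best = float(grid[int(np.argmin(losses))])            # minimizer of the pinball loss
empirical = float(np.quantile(y, tau))                # empirical 90% quantile
```

The grid minimizer agrees with the empirical quantile up to the grid resolution; in a network the same loss is minimized over the network parameter instead of over a single constant.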

11.2.2 Deep Quantile Regression: Multiple Quantiles

We now turn our attention to the multiple quantile case that should satisfy the monotonicity requirement (11.17) for any quantile levels 0 < τ 1 < τ 2 < 1. A separate deep quantile estimation for both quantile levels, as described in the previous section, may violate the monotonicity property, at least, in some part of the feature space \(\mathcal {X}\), especially if the two quantile levels are close. Therefore, we enforce the monotonicity by a special choice of the network architecture.

For simplicity, in the remainder of this section, we assume that the response Y is positive, a.s. This implies for the quantiles \(\tau \mapsto F_{Y|\boldsymbol{x}}^{-1}(\tau ) \ge 0\), and we should choose a link function with \(g^{-1} \ge 0\) in (11.18). To ensure the monotonicity (11.17) for the quantile levels \(0 < \tau_1 < \tau_2 < 1\), we choose a second positive link function with \(g^{-1}_+\ge 0\), and we set for multi-task forecasting

$$\displaystyle \begin{aligned} \boldsymbol{x} ~\mapsto~ \left(F_{Y|\boldsymbol{x}}^{-1}(\tau_1), F_{Y|\boldsymbol{x}}^{-1}(\tau_2)\right)^\top = \left(g^{-1}\left\langle \boldsymbol{\beta}_{\tau_1}, \boldsymbol{z}^{(d:1)}(\boldsymbol{x})\right\rangle,~ g^{-1}\left\langle \boldsymbol{\beta}_{\tau_1}, \boldsymbol{z}^{(d:1)}(\boldsymbol{x})\right\rangle + g_+^{-1}\left\langle \boldsymbol{\beta}_{\tau_2}, \boldsymbol{z}^{(d:1)}(\boldsymbol{x})\right\rangle\right)^\top, \end{aligned} \tag{11.19}$$

for a regression parameter \(\boldsymbol{\vartheta}\) that now collects both output parameters \(\boldsymbol{\beta}_{\tau_1}\) and \(\boldsymbol{\beta}_{\tau_2}\) together with the network weights. The positivity \(g_+^{-1}\ge 0\) enforces the monotonicity in the two quantiles. We call (11.19) an additive approach as we start from a base level characterized by the smaller quantile \(F_{Y|\boldsymbol{x}}^{-1}(\tau_1)\), and any bigger quantile is modeled by an additive increment. To ensure monotonicity for multiple quantiles we proceed recursively by choosing the lowest quantile as the initial base level.

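We can also consider the upper quantile as the base level by multiplicatively lowering this upper quantile. Choose the (sigmoid) function \(g_\sigma^{-1} \in (0,1)\) and set for the multiplicative approach

$$\displaystyle \begin{aligned} \boldsymbol{x} ~\mapsto~ \left(F_{Y|\boldsymbol{x}}^{-1}(\tau_1), F_{Y|\boldsymbol{x}}^{-1}(\tau_2)\right)^\top = \left(g_\sigma^{-1}\left\langle \boldsymbol{\beta}_{\tau_1}, \boldsymbol{z}^{(d:1)}(\boldsymbol{x})\right\rangle\, g^{-1}\left\langle \boldsymbol{\beta}_{\tau_2}, \boldsymbol{z}^{(d:1)}(\boldsymbol{x})\right\rangle,~ g^{-1}\left\langle \boldsymbol{\beta}_{\tau_2}, \boldsymbol{z}^{(d:1)}(\boldsymbol{x})\right\rangle\right)^\top. \end{aligned} \tag{11.20}$$

Remark 11.10

In (11.19) and (11.20) we directly enforce the monotonicity by a corresponding regression function choice. Alternatively, we can also design a (plain-vanilla) multi-output network

$$\displaystyle \begin{aligned} \boldsymbol{x} ~\mapsto~ \left(F_{Y|\boldsymbol{x}}^{-1}(\tau_1), F_{Y|\boldsymbol{x}}^{-1}(\tau_2)\right)^\top = \left(g^{-1}\left\langle \boldsymbol{\beta}_{\tau_1}, \boldsymbol{z}^{(d:1)}(\boldsymbol{x})\right\rangle,~ g^{-1}\left\langle \boldsymbol{\beta}_{\tau_2}, \boldsymbol{z}^{(d:1)}(\boldsymbol{x})\right\rangle\right)^\top. \end{aligned} \tag{11.21}$$

If we just use a classical SGD fitting algorithm, we will likely end up in a situation where the monotonicity is violated in some part of the feature space. Kellner et al. [211] consider this problem. They add a penalization (regularization term) that, during SGD training, punishes network parameters that violate the monotonicity. Such a penalization can be constructed, e.g., with the ReLU function.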
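One possible form of such a ReLU penalization is sketched below in Python. This is a hypothetical illustration of the idea and not the specific regularization term of Kellner et al. [211]; the function name and the weight are assumptions.

```python
import numpy as np

def crossing_penalty(q_low, q_high, weight=1.0):
    """ReLU penalty that is positive only where the lower quantile exceeds the upper one."""
    q_low, q_high = np.asarray(q_low, dtype=float), np.asarray(q_high, dtype=float)
    violation = np.maximum(q_low - q_high, 0.0)   # ReLU(q_low - q_high)
    return float(weight * np.mean(violation))

# Forecasts for levels tau_1 < tau_2 on three policies (made-up numbers)
q_tau1 = np.array([1.0, 2.0, 3.0])
q_tau2 = np.array([1.5, 1.8, 3.0])                # the second policy violates monotonicity
penalty = crossing_penalty(q_tau1, q_tau2)
```

Such a term would be added to the sum of the pinball losses during training; in contrast to (11.19) and (11.20), it discourages quantile crossing but does not rule it out.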

11.2.3 Lab: Deep Quantile Regression

We revisit the Swiss accident insurance data of Sect. 11.1.2, and we provide an example of a deep quantile regression using both the additive approach (11.19) and the multiplicative approach (11.20).

Listing 11.3 Multiple FN quantile regression: additive approach

We select 5 different quantile levels \(\mathcal{Q}=(\tau_1,\tau_2,\tau_3,\tau_4,\tau_5)=(10\%, 25\%, 50\%, 75\%, 90\%)\). We start with the additive approach (11.19). It requires setting \(\tau_1 = 10\%\) as the base level, and the remaining quantile levels are modeled additively in a recursive way for \(\tau_j < \tau_{j+1}\), 1 ≤ j ≤ 4. The corresponding R code is given on lines 8–20 of Listing 11.3, and this compiles to the 5-dimensional output on line 22.

Listing 11.4 Multiple FN quantile regression: multiplicative approach

For the multiplicative approach (11.20) we set \(\tau_5 = 90\%\) as the base level, and the remaining quantile levels are obtained multiplicatively in a recursive way for \(\tau_{j+1} > \tau_j\), 4 ≥ j ≥ 1, see Listing 11.4. The additive and the multiplicative approaches take the extreme quantiles as initialization. One may also be interested in initializing the model in the median \(\tau_3 = 50\%\); the smaller quantiles can then be obtained by the multiplicative approach and the bigger quantiles by the additive approach. We also explore this case and we call it the mixed approach.
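The three output constructions can be sketched in a few lines of Python. This is not the keras code of Listings 11.3 and 11.4; it only shows how 5 linear outputs of the last FN layer (called `scores` here, a hypothetical name) can be turned into monotone quantiles, assuming the log-link for g and \(g_+\) and the sigmoid for \(g_\sigma\).

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def additive_head(scores):
    """Column 0 is the base level tau_1; bigger quantiles add positive increments."""
    return np.cumsum(np.exp(scores), axis=1)

def multiplicative_head(scores):
    """Column 4 is the base level tau_5; smaller quantiles shrink it by sigmoid factors."""
    base = np.exp(scores[:, 4:5])
    factors = sigmoid(scores[:, :4])
    # q_j = base * factor_j * ... * factor_4 for j = 1, ..., 4
    lower = base * np.cumprod(factors[:, ::-1], axis=1)[:, ::-1]
    return np.hstack([lower, base])

def mixed_head(scores):
    """Column 2 is the median; smaller quantiles multiplicative, bigger ones additive."""
    median = np.exp(scores[:, 2:3])
    lower = median * np.cumprod(sigmoid(scores[:, 1::-1]), axis=1)[:, ::-1]
    upper = median + np.cumsum(np.exp(scores[:, 3:]), axis=1)
    return np.hstack([lower, median, upper])

# Arbitrary scores for 4 policies; every row of every output is increasing
scores = np.random.default_rng(0).normal(size=(4, 5))
quantiles = {"additive": additive_head(scores),
             "multiplicative": multiplicative_head(scores),
             "mixed": mixed_head(scores)}
```

Whatever values the network produces in `scores`, the resulting quantiles are positive and ordered, so monotonicity holds on the entire feature space and not only on the training data.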

Listing 11.5 Fitting a multiple FN quantile regression

These network architectures are fitted to the data using the pinball loss (5.81) for the quantile levels of \(\mathcal{Q}\); note that the pinball loss requires the assumption of having a finite first moment. Listing 11.5 shows the choice of the pinball loss functions. We then fit the three architectures (additive, multiplicative and mixed) to our learning data \(\mathcal{L}\), and we apply early stopping to prevent over-fitting. Moreover, we consider the nagging predictor over 20 runs with different seeds to reduce the randomness coming from SGD fitting.

In Table 11.6 we give the out-of-sample pinball losses on the test data \(\mathcal{T}\) of the three considered approaches for the 5 quantile levels of \(\mathcal{Q}\). The losses of the three approaches are rather close, giving a slight preference to the mixed approach, but the other two approaches seem to be competitive, too. We further analyze these quantile regression models by considering the empirical coverage ratios defined by

$$\displaystyle \begin{aligned} \widehat{\tau}_j ~=~ \frac{1}{T} \sum_{t=1}^T \mathbb{1}_{\left\{Y_t^\dagger \,\le\, \widehat{F}_{Y|\boldsymbol{x}^\dagger_t}^{-1}(\tau_j)\right\}}, \end{aligned} \tag{11.22}$$

where \(\widehat{F}_{Y|\boldsymbol{x}^\dagger_t}^{-1}(\tau_j)\) is the estimated quantile for level \(\tau_j\) and feature \(\boldsymbol{x}_t^\dagger\). Remark that the coverage ratios (11.22) correspond to the identification functions that are essentially the derivatives of the pinball losses; we refer to Dimitriadis et al. [106]. Table 11.7 reports these out-of-sample coverage ratios on the test data \(\mathcal{T}\). From these results we conclude that on the portfolio level the quantiles are matched rather well.
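The coverage ratios (11.22) are simple to compute. The following Python sketch uses hypothetical exponential claims whose true conditional quantiles are known, in place of fitted network quantiles, so that the coverage ratios should reproduce the quantile levels.

```python
import numpy as np

def coverage_ratio(y, q_hat):
    """Share of observations that do not exceed their estimated quantile."""
    return float(np.mean(np.asarray(y) <= np.asarray(q_hat)))

rng = np.random.default_rng(5)
T = 50_000
scale = np.exp(rng.normal(size=T))               # hypothetical feature-dependent scale
y_test = scale * rng.exponential(size=T)         # hypothetical out-of-sample claims

taus = [0.10, 0.25, 0.50, 0.75, 0.90]
# True conditional tau-quantiles of an exponential distribution with the given scale
coverage = {tau: coverage_ratio(y_test, -scale * np.log(1.0 - tau)) for tau in taus}
```

A coverage ratio close to the quantile level only verifies the quantiles on the portfolio level; it does not show that the quantiles are correct for each individual feature.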

In Fig. 11.8 we illustrate the estimated out-of-sample quantiles \(\widehat {F}_{Y|\boldsymbol {x}^\dagger _t}^{-1}(\tau _j)\) for individual claims on the quantile levels τ j ∈{10%, 25%, 50%, 75%, 90%} (cyan, blue, black, blue, cyan colors) using the mixed approach. The x-axis considers the logged estimated medians \(\widehat {F}_{Y|\boldsymbol {x}^\dagger _t}^{-1}(50\%)\). We observe heteroskedasticity resulting in quantiles that are not ordered w.r.t. the median (black line). This supports the multiple deep quantile regression model because we cannot (simply) extrapolate the median to receive the other quantiles.

Estimated out-of-sample quantiles \(\widehat {F}_{Y|\boldsymbol {x}^\dagger _t}^{-1}(\tau _j)\) of 2’000 randomly selected individual claims on the quantile levels τ j ∈{10%, 25%, 50%, 75%, 90%} (cyan, blue, black, blue, cyan colors) using the mixed approach, the red dots are the out-of-sample observations \(Y^\dagger _t\); the x-axis gives \(\mathrm {log} \widehat {F}_{Y|\boldsymbol {x}^\dagger _t}^{-1}(50\%)\) (also corresponding to the black diagonal line)

In the final step we compare the estimated quantiles \(\widehat {F}_{Y|\boldsymbol {x}}^{-1}(\tau _j)\) from the mixed deep quantile regression approach to the ones that can be calculated from the fitted inverse Gaussian model using the double FN network approach of Example 11.4. In the latter model we estimate the mean \(\widehat {\mu }(\boldsymbol {x})\) and the dispersion \(\widehat {\varphi }(\boldsymbol {x})\) with two FN networks, which then allow us to calculate the quantiles using the inverse Gaussian distributional assumption. Note that we cannot calculate the quantiles in Tweedie’s family with power variance parameter p = 2.5 because there is no closed form of the distribution function. Figure 11.9 compares the two approaches on the quantile levels of \(\mathcal {Q}\). Overall we observe a reasonably good match though it is not perfect. The small quantiles for level τ 1 = 10% seem slightly under-estimated by the inverse Gaussian approach (see Fig. 11.9 (top-left)), whereas big quantiles τ 4 = 75% and τ 5 = 90% seem more conservative in the inverse Gaussian approach (see Fig. 11.9 (bottom)). This may indicate that the inverse Gaussian distribution does not fully fit the data, i.e., that one cannot fully recover the true quantiles from the mean \(\widehat {\mu }(\boldsymbol {x})\), the dispersion \(\widehat {\varphi }(\boldsymbol {x})\) and an inverse Gaussian assumption. There are two ways to further explore these issues. One can either choose other distributional assumptions which may better match the properties of the data, this further explores the distributional approach. Alternatively, Theorem 5.33 allows us to choose loss functions different from the pinball loss, i.e., one could consider different increasing functions G in that theorem to further explore the distribution-free approach. 
In general, any strictly increasing choice of the function G leads to a strictly consistent quantile estimation (this is an asymptotic statement), but these choices may have different finite sample properties. Following Komunjer–Vuong [222], we can determine asymptotically efficient choices for G. This would require feature dependent choices \(G_{\boldsymbol {x}_i}(y)=F_{Y|\boldsymbol {x}_i}(y)\), where \(F_{Y|\boldsymbol {x}_i}\) is the (true) distribution of \(Y_i\), conditionally given \(\boldsymbol {x}_i\). This requires knowledge of the true distribution. Komunjer–Vuong [222] derive asymptotic efficiency when this true distribution is replaced by a non-parametric estimator, which is similar in spirit to Theorem 11.8. We refrain from giving more details but refer to the corresponding paper.
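The distributional route described above, i.e., turning an estimated mean and dispersion into inverse Gaussian quantiles, can be sketched as follows. This is a minimal illustration and not the code used for Fig. 11.9: it assumes the parametrization \(\mathrm{Var}(Y)=\varphi \mu^3\), uses the standard closed form of the inverse Gaussian distribution function, and inverts it by bisection. The function names and the numerical values of `mu_hat` and `phi_hat` are hypothetical.

```python
import math


def std_normal_cdf(x):
    """Standard Gaussian distribution function, accurate in both tails."""
    return 0.5 * math.erfc(-x / math.sqrt(2.0))


def inverse_gaussian_cdf(y, mean, dispersion):
    """Distribution function of an inverse Gaussian variable with the given
    mean and dispersion phi, i.e. Var(Y) = phi * mean**3 (shape 1 / phi)."""
    if y <= 0.0:
        return 0.0
    lam = 1.0 / dispersion
    root = math.sqrt(lam / y)
    first = std_normal_cdf(root * (y / mean - 1.0))
    tail = std_normal_cdf(-root * (y / mean + 1.0))
    # exp(2 * lam / mean) * tail, evaluated on the log scale to avoid overflow
    second = math.exp(2.0 * lam / mean + math.log(tail)) if tail > 0.0 else 0.0
    return min(first + second, 1.0)


def inverse_gaussian_quantile(tau, mean, dispersion, rel_tol=1e-12):
    """tau-quantile obtained by bisection on the distribution function."""
    lo, hi = 0.0, mean
    while inverse_gaussian_cdf(hi, mean, dispersion) < tau:
        hi *= 2.0  # enlarge the bracket until it contains the quantile
    while hi - lo > rel_tol * hi:
        mid = 0.5 * (lo + hi)
        if inverse_gaussian_cdf(mid, mean, dispersion) < tau:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Hypothetical network outputs for one claim: estimated mean and dispersion
mu_hat, phi_hat = 2.0, 0.5
levels = (0.10, 0.25, 0.50, 0.75, 0.90)
quantiles = [inverse_gaussian_quantile(t, mu_hat, phi_hat) for t in levels]
```

In an application one would evaluate `inverse_gaussian_quantile` for every claim using the two network outputs \(\widehat {\mu }(\boldsymbol {x})\) and \(\widehat {\varphi }(\boldsymbol {x})\), and then compare the result to the distribution-free quantile estimates.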

11.3 Deep Composite Model Regression

We have established a deep quantile regression in the previous section. Next we jointly estimate quantiles and conditional tail expectations (CTEs), leading to a composite regression model that has a splicing point determined by a quantile level; for composite models we refer to Sect. 6.4.4. This is exactly the proposal of Fissler et al. [130] which we are going to present in this section. Note that having a composite model allows us to have different distributions and regression structures below and above the splicing point, e.g., we can have a more heavy-tailed model in the upper tail using a different feature engineering from the main body of the data.

11.3.1 Joint Elicitability of Quantiles and Expected Shortfalls

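In the previous examples we have seen that the distributional models may misestimate the true tail of the data because model fitting often pays more attention to an accurate model fit in the main body of the data. An idea is to directly estimate this tail in a distribution-free way by considering the (upper) CTE

\[\mathrm {CTE}^+_{\tau }(Y|\boldsymbol {x}) = {\mathbb E}\left[ Y \,\middle |\, Y > F_{Y|\boldsymbol {x}}^{-1}(\tau ),\, \boldsymbol {x}\right], \tag{11.23}\]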

for a given quantile level τ ∈ (0, 1). The problem with (11.23) is that this is not an elicitable quantity, i.e., there is no loss/scoring function that is strictly consistent for the CTE functional.

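If the distribution function \(F_{Y|\boldsymbol {x}}\) is continuous, we can rewrite the upper CTE as follows, see Lemma 2.16 in McNeil et al. [268] and (11.35) below,

\[\mathrm {CTE}^+_{\tau }(Y|\boldsymbol {x}) = \mathrm {ES}^+_{\tau }(Y|\boldsymbol {x}) = \frac {1}{1-\tau } \int _\tau ^1 F_{Y|\boldsymbol {x}}^{-1}(p)\, dp.\]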

This second object \(\mathrm {ES}^+_{\tau }(Y|\boldsymbol {x})\) is called the upper expected shortfall (ES) of Y , given x, on the security level τ. Fissler–Ziegel [131] and Fissler et al. [132] have proved that \(\mathrm {ES}^+_\tau (Y|\boldsymbol {x})\) is jointly elicitable with the τ-quantile \({F}_{Y|\boldsymbol {x}}^{-1}(\tau )\). That is, there is a strictly consistent bivariate loss function that allows one to jointly estimate the τ-quantile and the corresponding ES. In fact, Corollary 5.5 of Fissler–Ziegel [131] gives the full characterization of the strictly consistent bivariate loss functions for the joint elicitability of the τ-quantile and the ES; note that Fissler–Ziegel [131] use a different sign convention. This result is used in Guillén et al. [175] for the joint estimation of the quantile and the ES within a GLM, where a two-step approach is used to fit the quantile and the ES.

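Fissler et al. [130] extend the results of Fissler–Ziegel [131], allowing for the joint estimation of the composite triplet consisting of the lower ES, the τ-quantile and the upper ES. This gives us a composite model that has the τ-quantile as splicing point. The beauty of this approach is that we can fit (in one step) a deep learning model to the upper and the lower ES, and perform a (potentially different) regression in both parts of the distribution. The lower CTE and the lower ES are defined by, respectively,

\[\mathrm {CTE}^-_{\tau }(Y|\boldsymbol {x}) = {\mathbb E}\left[ Y \,\middle |\, Y \le F_{Y|\boldsymbol {x}}^{-1}(\tau ),\, \boldsymbol {x}\right]\]

and

\[\mathrm {ES}^-_{\tau }(Y|\boldsymbol {x}) = \frac {1}{\tau } \int _0^\tau F_{Y|\boldsymbol {x}}^{-1}(p)\, dp.\]

Again, in case of a continuous distribution function \(F_{Y|\boldsymbol {x}}\) we have the identity \(\mathrm {CTE}^-_{\tau }(Y|\boldsymbol {x})=\mathrm {ES}^-_{\tau }(Y|\boldsymbol {x})\). From the lower and upper CTEs we receive the mean of Y , given x, by

\[\mu (\boldsymbol {x}) = {\mathbb E}[Y|\boldsymbol {x}] = \tau \, \mathrm {CTE}^-_{\tau }(Y|\boldsymbol {x}) + (1-\tau )\, \mathrm {CTE}^+_{\tau }(Y|\boldsymbol {x}).\]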

We introduce the auxiliary scoring functions

for \(y,a\in {\mathbb R}\) and for τ ∈ (0, 1). These auxiliary functions consider only the part of the pinball loss (5.81) that depends on action a, and we get the pinball loss as follows

Therefore, all three functions provide strictly consistent scoring functions for the τ-quantile, but only the pinball loss satisfies the calibration property (L0) on page 92.
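The objects of this subsection can be illustrated on a sample. The following minimal sketch uses the pinball loss in the form \((y-a)(\tau - \mathbb{1}_{\{y\le a\}})\) and empirical versions of the lower CTE, the τ-quantile and the upper CTE. It assumes that τ times the sample size is an integer and that there are no ties at the quantile, so that CTE and ES coincide on the sample. The data and all function names are hypothetical.

```python
def pinball_loss(y, a, tau):
    """Pinball loss of action a for observation y at quantile level tau."""
    return (y - a) * (tau - (1.0 if y <= a else 0.0))


def mean_pinball_loss(sample, a, tau):
    """Average pinball loss of a single action a over the whole sample."""
    return sum(pinball_loss(y, a, tau) for y in sample) / len(sample)


def empirical_triplet(sample, tau):
    """Empirical (lower CTE, tau-quantile, upper CTE) of a sample.

    Assumes tau * len(sample) is an integer and no ties at the quantile.
    """
    ys = sorted(sample)
    k = round(tau * len(ys))  # number of observations at or below the quantile
    lower, upper = ys[:k], ys[k:]
    return sum(lower) / len(lower), lower[-1], sum(upper) / len(upper)


tau = 0.9
sample = [0.5 * i ** 1.5 for i in range(1, 201)]  # hypothetical positive claims
cte_lower, quantile, cte_upper = empirical_triplet(sample, tau)
sample_mean = sum(sample) / len(sample)

# The mean is recovered from the two tail means, weighted by tau and 1 - tau
mean_from_ctes = tau * cte_lower + (1.0 - tau) * cte_upper
```

On this sample the empirical quantile gives a smaller average pinball loss than other candidate actions, and `mean_from_ctes` agrees with `sample_mean` up to rounding.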

For the following theorem we recall the general definition of the τ-quantile \(Q_\tau (F_{Y|\boldsymbol {x}})\) of a distribution function \(F_{Y|\boldsymbol {x}}\), see (5.82).

Theorem 11.11 (Theorem 2.8 of Fissler et al. [130], Without Proof)

Choose τ ∈ (0, 1) and let \(\mathcal F\) contain only distributions with a finite first moment, and being supported in the interval \({\mathfrak C} \subseteq {\mathbb R}\). The loss function \(L: {\mathfrak C}\times {\mathfrak C}^3\to {\mathbb R}_+\) of the form

is strictly consistent for the composite triplet \((\mathrm {ES}^-_\tau , Q_\tau , \mathrm {ES}^+_\tau )\) relative to the class \(\mathcal {F}\), if Ψ is strictly convex with (sub-)gradient ∇Ψ such that for all \((e^-,e^+)\in {\mathfrak C}^2\) the function

is strictly increasing, and if \({\mathbb E}_F[|G(Y)|]<\infty \), \({\mathbb E}_F[|\Psi (Y,Y)|]<\infty \) for all \(Y\sim F\in \mathcal {F}\).

This opens the door for regression modeling of CTEs for continuous distribution functions \(F_{Y|\boldsymbol {x}}\), \(\boldsymbol {x} \in \mathcal {X}\). Namely, we can choose a regression function \(\xi _{\vartheta }\) with a three-dimensional output

depending on a regression parameter \(\vartheta \). This regression function is now used to describe the composite triplet \((\mathrm {ES}^-_{\tau }(Y|\boldsymbol {x}),F^{-1}_{Y|\boldsymbol {x}}(\tau ),\mathrm {ES}^+_{\tau }(Y|\boldsymbol {x}))\). Having i.i.d. data \((Y_i, \boldsymbol {x}_i)\), 1 ≤ i ≤ n, it can be fitted by solving

with loss function L given by (11.26). This then provides us with the estimates for the composite triplet

There remains the choice of the functions G and Ψ, such that Ψ is strictly convex and \(G_{e^-, e^+}\), defined in (11.27), is strictly increasing. Section 2.3 in Fissler et al. [130] discusses possible choices. A simple choice is to select the identity function G(y) = y (which gives the pinball loss on the first line of (11.26)) and

with \(\psi _1\) and \(\psi _2\) strictly convex and with (sub-)gradients \(\psi ^{\prime }_1>0\) and \(\psi ^{\prime }_2<0\). Inserting this choice into (11.26) provides the loss function

where \(L_\tau (y, q)\) is the pinball loss (5.81) and \(D_{\psi _1}\) and \(D_{\psi _2}\) are Bregman divergences (2.28). There remain the choices of \(\psi _1\) and \(\psi _2\), which should be strictly convex, the first one being strictly increasing and the second one being strictly decreasing.

We restrict ourselves to strictly convex functions ψ on the positive real line \({\mathbb R}_+\), i.e., for positive claims Y > 0, a.s. For \(b \in {\mathbb R}\), we consider the following functions on \({\mathbb R}_+\)

We compute the first and second derivatives. These are for y > 0 given by

Thus, for any \(b \in {\mathbb R}\) we have a convex function, and this convex function is decreasing on \({\mathbb R}_+\) for b < 1 and increasing for b > 1. Therefore, we have to select b > 1 for ψ 1 and b < 1 for ψ 2 to get suitable choices in (11.29). Interestingly, these choices correspond to Lemma 11.2 with power variance parameters p = 2 − b, i.e., they provide us with Bregman divergences from Tweedie’s distributions. However, (11.30) is more general, because it allows us to select any \(b \in {\mathbb R}\), whereas for power variance parameters p ∈ (0, 1) there do not exist any Tweedie’s distributions, see Theorem 2.18.
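The stated correspondence with Tweedie's family can be checked numerically. Since (11.30) is not reproduced here, the sketch below assumes the family \(\psi_b(y)=y^b/(b(b-1))\) for \(b \notin \{0,1\}\), \(\psi_0(y)=-\log y\) and \(\psi_1(y)=y\log y - y\), which is chosen to be consistent with the convexity and monotonicity properties stated above (second derivative \(y^{b-2}>0\)). Under this assumption, twice the Bregman divergence of \(\psi_b\) equals Tweedie's unit deviance with \(p=2-b\). Function names are hypothetical.

```python
import math


def psi(y, b):
    """Assumed convex function psi_b on the positive real line."""
    if b == 0:
        return -math.log(y)
    if b == 1:
        return y * math.log(y) - y
    return y ** b / (b * (b - 1.0))


def psi_prime(y, b):
    """First derivative: negative for b < 1 and positive for b > 1."""
    if b == 1:
        return math.log(y)
    return y ** (b - 1.0) / (b - 1.0)


def bregman_divergence(y, m, b):
    """Bregman divergence D_psi(y, m) = psi(y) - psi(m) - psi'(m) (y - m)."""
    return psi(y, b) - psi(m, b) - psi_prime(m, b) * (y - m)


def tweedie_unit_deviance(y, m, p):
    """Tweedie's unit deviance for y, m > 0 and power variance parameter p."""
    if p == 1:
        return 2.0 * (y * math.log(y / m) - y + m)
    if p == 2:
        return 2.0 * (y / m - 1.0 - math.log(y / m))
    return 2.0 * (y * (y ** (1.0 - p) - m ** (1.0 - p)) / (1.0 - p)
                  - (y ** (2.0 - p) - m ** (2.0 - p)) / (2.0 - p))


# b = 2 gives the Gaussian case p = 0, b = -1 the inverse Gaussian case p = 3
y, m = 1.3, 2.1
gaussian_gap = 2.0 * bregman_divergence(y, m, 2) - tweedie_unit_deviance(y, m, 0)
inv_gauss_gap = 2.0 * bregman_divergence(y, m, -1) - tweedie_unit_deviance(y, m, 3)
```

Both gaps vanish up to rounding. The same holds for values such as b = 1.5, where p = 0.5 does not correspond to a Tweedie distribution but the Bregman divergence is still well defined.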

In view of Lemma 11.2 and using the fact that unit deviances \({\mathfrak d}_p\) are Bregman divergences, we select a power variance parameter p = 2 − b > 1 for \(\psi _2\) and we select the Gaussian model p = 2 − b = 0 for \(\psi _1\). This gives us the special choice for the loss function (11.29) for strictly positive claims Y > 0, a.s.,

with the Gaussian unit deviance \({\mathfrak d}_0(y,e^-)=(y-e^-)^2\) and Tweedie’s unit deviance \({\mathfrak d}_p\) with power variance parameter p > 1, see Sect. 11.1.1. The additional constants \(\eta _1, \eta _2 > 0\) are used to balance the contributions of the individual terms to the total loss. Typically, we choose p ≥ 2 for the upper ES reflecting claim size models. This choice for \(\psi _2\) implies that the residuals are weighted inversely proportional to the corresponding variance functions \(V(\mu )=\mu ^p\) within Tweedie’s family, see (11.5). Using this loss function (11.31) in (11.28) allows us to estimate the composite triplet \((\mathrm {ES}^-_{\tau }(Y|\boldsymbol {x}),F^{-1}_{Y|\boldsymbol {x}}(\tau ),\mathrm {ES}^+_{\tau }(Y|\boldsymbol {x}))\) with a strictly consistent loss function.
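A loss of the type just described can be sketched in a few lines. Because (11.31) is not reproduced here, the following is an assumption-laden reconstruction rather than the book's formula: it takes \(\psi_1(y)=\eta_1 y^2/2\) and \(\psi_2(y)=\eta_2\, y^{2-p}/((2-p)(1-p))\) (with \(\psi_2(y)=-\eta_2\log y\) for p = 2), and combines a weighted pinball loss with the two Bregman divergences, the weight being \(1+\psi_1'(e^-)/\tau-\psi_2'(e^+)/(1-\tau)\). The function names and default values are hypothetical and should be checked against (11.29) and (11.31).

```python
import math


def pinball_loss(y, q, tau):
    """Pinball loss of the quantile action q at level tau."""
    return (y - q) * (tau - (1.0 if y <= q else 0.0))


def tweedie_unit_deviance(y, m, p):
    """Tweedie's unit deviance for y, m > 0 and p > 1."""
    if p == 2:
        return 2.0 * (y / m - 1.0 - math.log(y / m))
    return 2.0 * (y * (y ** (1.0 - p) - m ** (1.0 - p)) / (1.0 - p)
                  - (y ** (2.0 - p) - m ** (2.0 - p)) / (2.0 - p))


def composite_loss(y, e_minus, q, e_plus, tau, p=3.0, eta1=1.0, eta2=1.0):
    """Assumed form of the composite triplet loss for positive claims.

    Gaussian deviance (p = 0) for the lower ES, Tweedie deviance with p > 1
    for the upper ES, and a pinball loss whose weight depends on both ES.
    """
    weight = (1.0 + eta1 * e_minus / tau
              + eta2 * e_plus ** (1.0 - p) / ((p - 1.0) * (1.0 - tau)))
    return (weight * pinball_loss(y, q, tau)
            + 0.5 * eta1 * (y - e_minus) ** 2
            + 0.5 * eta2 * tweedie_unit_deviance(y, e_plus, p))


def mean_composite_loss(sample, e_minus, q, e_plus, tau, **kwargs):
    """Average composite loss of one fixed triplet over a sample."""
    return sum(composite_loss(y, e_minus, q, e_plus, tau, **kwargs)
               for y in sample) / len(sample)


# Hypothetical sample 0.01, 0.02, ..., 10.00 with tau = 90%
tau = 0.9
sample = [i / 100.0 for i in range(1, 1001)]
ys = sorted(sample)
triplet = (sum(ys[:900]) / 900, 0.5 * (ys[899] + ys[900]), sum(ys[900:]) / 100)
loss_at_triplet = mean_composite_loss(sample, *triplet, tau)
```

On this sample the average loss at the empirical triplet is smaller than at perturbed triplets, which is the behavior one expects from a strictly consistent loss. In a network implementation the same expression would be evaluated on tensors, with `e_minus`, `q` and `e_plus` being the three network outputs.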

11.3.2 Lab: Deep Composite Model Regression

The joint elicitability of Theorem 11.11 allows us to directly estimate these functionals for a fixed quantile level τ ∈ (0, 1). In a similar way to quantile regression we set up a FN network that respects the monotonicity \(\mathrm {ES}^-_{\tau }(Y|\boldsymbol {x})\le F^{-1}_{Y|\boldsymbol {x}}(\tau )\le \mathrm {ES}^+_{\tau }(Y|\boldsymbol {x})\). We set for the regression function in the additive approach for multi-task learning

for link functions \(g\) and \(g_+\) with \(g_+^{-1}\ge 0\), deep FN network \(\boldsymbol {z}^{(d:1)}:{\mathbb R}^{q_0+1} \to {\mathbb R}^{q_d+1}\), regression parameters \(\boldsymbol {\beta }_1,\boldsymbol {\beta }_2,\boldsymbol {\beta }_3 \in {\mathbb R}^{q_d+1}\), and with the action space \({\mathbb A}=\{(e^-,q,e^+)\in {\mathbb R}_+^3;e^-\le q \le e^+\}\) for positive claims. We also recall Remark 11.10 for a different way of modeling the monotonicity.
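The additive construction can be sketched for a single claim as follows. Since (11.32) is not reproduced here, the sketch assumes that the lower ES is \(g^{-1}\langle \boldsymbol{\beta}_1, \boldsymbol{z}\rangle\) and that the quantile and the upper ES are obtained by successively adding the non-negative increments \(g_+^{-1}\langle \boldsymbol{\beta}_2, \boldsymbol{z}\rangle\) and \(g_+^{-1}\langle \boldsymbol{\beta}_3, \boldsymbol{z}\rangle\), with exponential functions for both inverse links. The vector `z` stands for the output of the last hidden layer including the bias component; all names and numbers are hypothetical.

```python
import math


def composite_head(z, beta1, beta2, beta3):
    """Map last-layer neurons z to an ordered triplet (e_minus, q, e_plus).

    Exponential inverse links keep all three components positive, and the
    additive structure enforces e_minus <= q <= e_plus by construction.
    """
    def linear(beta):
        return sum(b * v for b, v in zip(beta, z))

    e_minus = math.exp(linear(beta1))      # lower ES
    q = e_minus + math.exp(linear(beta2))  # quantile = lower ES + increment
    e_plus = q + math.exp(linear(beta3))   # upper ES = quantile + increment
    return e_minus, q, e_plus


# Hypothetical last-layer activations (first entry is the bias component)
z = [1.0, 0.3, -0.8, 0.5]
beta1 = [0.2, 0.1, -0.4, 0.3]
beta2 = [-0.5, 0.7, 0.2, -0.1]
beta3 = [1.0, -0.3, 0.6, 0.4]
e_minus, q, e_plus = composite_head(z, beta1, beta2, beta3)
```

Whatever the values of the regression parameters, the output lies in the action space for positive claims, so no constraint needs to be imposed during gradient descent fitting.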

Fitting this model is similar to the multiple deep quantile regression presented in Listings 11.3 and 11.5. There is one important difference though. Namely, we do not have multiple outputs and multiple loss functions, but we have a three-dimensional output with a single loss function (11.31) simultaneously evaluating all three components of the output (11.32). Listing 11.6 gives this loss for the inverse Gaussian case p = 3 in (11.31).

Listing 11.6 Loss function (11.31) for p = 3

We revisit the Swiss accident insurance data of Sect. 11.2.3. We again use a FN network of depth d = 3 with \((q_1, q_2, q_3) = (20, 15, 10)\) neurons, hyperbolic tangent activation, two-dimensional embedding layers for the categorical features, exponential output activations for \(g^{-1}\) and \(g_+^{-1}\), and the additive structure (11.32). We implement the loss function (11.31) for quantile level τ = 90% and with power variance parameter p = 3, see Listing 11.6. This implies that for the upper ES estimation we scale residuals with \(V(\mu ) = \mu ^3\), see (11.5). We then run an initial calibration of this FN network. Based on this initial calibration we can calculate the three loss contributions in (11.31) coming from the composite triplet. Based on these figures we choose the constants \(\eta _1, \eta _2 > 0\) in (11.31) so that all three terms of the composite triplet contribute equally to the total loss. For the remainder of our calibration we hold on to these choices of \(\eta _1\) and \(\eta _2\).

We calibrate this deep FN architecture to the learning data \(\mathcal {L}\), using the strictly consistent loss function (11.31) for the composite triplet \((\mathrm {ES}^-_{90\%}(Y|\boldsymbol {x}), F_{Y|\boldsymbol {x}}^{-1}(90\%), \mathrm { ES}^+_{90\%}(Y|\boldsymbol {x}))\), and to reduce the randomness in prediction we average over 20 early stopped SGD calibrations with different seeds (nagging predictor).

Figure 11.10 shows the estimated lower and upper ES against the corresponding 90%-quantile estimates for 2’000 randomly selected insurance claims \(\boldsymbol {x}^\dagger _t\). The diagonal orange line shows the estimated 90%-quantiles \(\widehat {F}^{-1}_{Y|\boldsymbol {x}^\dagger _t}(90\%)\), and the cyan lines give spline fits to the estimated lower and upper ES. It is clearly visible that these respect the ordering

\[\widehat {\mathrm {ES}}^{-}_{90\%}(Y|\boldsymbol {x}^\dagger _t) \le \widehat {F}^{-1}_{Y|\boldsymbol {x}^\dagger _t}(90\%) \le \widehat {\mathrm {ES}}^{+}_{90\%}(Y|\boldsymbol {x}^\dagger _t)\]

for fixed features \(\boldsymbol {x}^\dagger _t \in \mathcal {X}\).

Comparison of the estimated lower \(\widehat {\mathrm {ES}}^{-}_{90\%}(Y|\boldsymbol {x}^\dagger _t)\) and the estimated upper \(\widehat {\mathrm {ES}}^{+}_{90\%}(Y|\boldsymbol {x}^\dagger _t)\) against the estimated 90%-quantile \(\widehat {F}^{-1}_{Y|\boldsymbol {x}^\dagger _t}(90\%)\) in the deep composite regression

The deep quantile regression has been back-tested using the coverage ratios (11.22). Back-testing the ES is more difficult because the standalone ES is not elicitable; the ES can only be back-tested jointly with the corresponding quantile. The part of the joint identification function that corresponds to the ES is given by, see (4.2)–(4.3) in Fissler et al. [130],

and

These (empirical) identifications should be close to zero if the model fits the data.

Note that the latter terms in (11.33)–(11.34) describe the lower and upper ES also in the case of non-continuous distribution functions because we have the identity

the second term being zero for a continuous distribution \(F_{Y|\boldsymbol {x}}\), but it is needed for non-continuous distribution functions.
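An empirical back-test of this kind can be sketched as follows. As (11.33)–(11.35) are not reproduced here, the sketch relies on the assumption that the realized lower and upper parts are \(\big(Y\mathbb{1}_{\{Y\le q\}} + q(\tau-\mathbb{1}_{\{Y\le q\}})\big)/\tau\) and \(\big(Y\mathbb{1}_{\{Y> q\}} + q(\mathbb{1}_{\{Y\le q\}}-\tau)\big)/(1-\tau)\), and that the identifications are the averages of estimate minus realized part; the sign convention of the original formulas may differ. All names and data are hypothetical.

```python
def es_identifications(y, e_minus, q, e_plus, tau):
    """Average identification values for the lower and the upper ES.

    y, e_minus, q, e_plus are equally long sequences of observations and of
    the corresponding estimated lower ES, quantiles and upper ES.
    """
    v_minus = v_plus = 0.0
    for y_t, em_t, q_t, ep_t in zip(y, e_minus, q, e_plus):
        below = 1.0 if y_t <= q_t else 0.0
        # The second summands correct for probability mass at the quantile
        lower_part = (y_t * below + q_t * (tau - below)) / tau
        upper_part = (y_t * (1.0 - below) + q_t * (below - tau)) / (1.0 - tau)
        v_minus += em_t - lower_part
        v_plus += ep_t - upper_part
    n = len(y)
    return v_minus / n, v_plus / n


# Hypothetical homogeneous portfolio: observations 1, ..., 1000 and tau = 90%
tau = 0.9
y = [float(i) for i in range(1, 1001)]
n = len(y)
e_minus = [sum(y[:900]) / 900] * n  # empirical lower tail mean
q = [900.5] * n                     # a 90%-quantile of the sample
e_plus = [sum(y[900:]) / 100] * n   # empirical upper tail mean
v_minus, v_plus = es_identifications(y, e_minus, q, e_plus, tau)
```

With these well-calibrated inputs both identifications are zero up to rounding. Shifting all upper ES estimates by one unit shifts `v_plus` by one unit, which illustrates how a biased ES estimate shows up in the back-test.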

We compare the deep composite regression results of this section to the deep gamma and inverse Gaussian models using a double FN network for dispersion modeling, see Sect. 11.1.3. This requires calculating the ES in the gamma and the inverse Gaussian models, which can be done within the EDF, see Landsman–Valdez [233]. The upper ES in the gamma model Y ∼ Γ(α, β) is given by, see (6.47),

where \(\mathcal {G}\) is the scaled incomplete gamma function (6.48) and \(F^{-1}_{Y}(\tau )\) is the τ-quantile of Γ(α, β).
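For illustration, the gamma case can be evaluated with the standard library only. The sketch below does not reproduce (6.47) literally; it uses the standard identity \({\mathbb E}[Y\mathbb{1}_{\{Y>q\}}] = (\alpha/\beta)\,(1-\mathcal{G}(\alpha+1,\beta q))\) for Y ∼ Γ(α, β) with rate β, a series expansion for the scaled incomplete gamma function (adequate for moderate arguments only) and bisection for the quantile. All function names are hypothetical.

```python
import math


def scaled_incomplete_gamma(a, x, tol=1e-15, max_terms=10_000):
    """Scaled (regularized) lower incomplete gamma function G(a, x).

    Series x^a e^{-x} sum_n x^n / Gamma(a + n + 1); for moderate x only.
    """
    if x <= 0.0:
        return 0.0
    term = math.exp(a * math.log(x) - x - math.lgamma(a + 1.0))
    total, n = term, 0
    while term > tol * total and n < max_terms:
        n += 1
        term *= x / (a + n)
        total += term
    return min(total, 1.0)


def gamma_quantile(tau, alpha, beta, rel_tol=1e-12):
    """tau-quantile of Gamma(alpha, beta) with rate beta, by bisection."""
    lo, hi = 0.0, alpha / beta
    while scaled_incomplete_gamma(alpha, beta * hi) < tau:
        hi *= 2.0  # enlarge the bracket until it contains the quantile
    while hi - lo > rel_tol * hi:
        mid = 0.5 * (lo + hi)
        if scaled_incomplete_gamma(alpha, beta * mid) < tau:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def gamma_upper_es(tau, alpha, beta):
    """Upper expected shortfall of Gamma(alpha, beta) at level tau."""
    q = gamma_quantile(tau, alpha, beta)
    tail_mean_part = (alpha / beta) * (1.0 - scaled_incomplete_gamma(alpha + 1.0, beta * q))
    return tail_mean_part / (1.0 - tau)


# Exponential special case alpha = 1: the upper ES equals quantile + 1 / beta
es_exponential = gamma_upper_es(0.9, 1.0, 2.0)
```

In the double FN network setting one would plug in claim-specific parameters derived from the estimated mean and dispersion, e.g., α = 1/φ and β = 1/(φμ) under the usual gamma EDF parametrization, which is stated here as an assumption.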

Example 4.3 of Landsman–Valdez [233] gives the inverse Gaussian case (2.8) with α, β > 0

where φ and Φ are the standard Gaussian density and distribution, respectively, \(F^{-1}_{Y}(\tau )\) is the τ-quantile of the inverse Gaussian distribution and

This now allows us to calculate the identifications (11.33)–(11.34) in the fitted deep double networks using the gamma and the inverse Gaussian distributions of Sect. 11.1.3.