Abstract

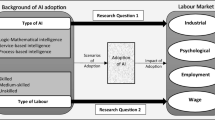

The chapter examines whether people must fear for their jobs due to artificial intelligence (AI). The Delphi survey included a “competitive scenario” designed to test for this. Based on expert opinions, the chapter shows how realistic this scenario is and what its sociological implications would be. In addition, the chapter sheds light on how much people see AI affecting their own jobs, their future standard of living, and their quality of life. The results in these respects paint a much more positive picture than the public discussion of AI would lead us to expect. The chapter also deals with ethical concerns that AI could lead to discrimination in the labor market and with the perceived need for public policy interventions to ensure that AI develops ethically. An aggregate data analysis reveals substantial variations across EU countries and significant correlations with a country’s prosperity, risk of poverty, multi-ethnicity, and inherent trust in institutions and fellow men. For Germany, we examine the odds of such concerns as a function of socio-structural variables.

2.1 Introduction

Artificial intelligence, robotics, and their joint potential to influence the future labor market have been under labor-market researchers’ scrutiny for some time. These and closely related technologies, such as machine learning, have already shown great potential as drivers of economic growth (Graetz & Michaels, 2015; Aghion et al., 2019), and their influence is likely to accelerate in the future. Since the beginning of industrialization, automation has continuously influenced work, igniting both beneficial economic growth and structural changes in the labor market. Critical views of its influence on human labor conditions have been voiced for just as long. As early as 1930, the economist John M. Keynes put his economic pessimism about technological advancement into words, proposing:

We are being afflicted with a new disease of which some readers may not yet have heard the name, but of which they will hear a great deal in the years to come—namely, technological unemployment. This means unemployment due to our discovery of means of economising the use of labour outrunning the pace at which we can find new uses for labour. (Keynes, 1930/2010)

AI will change the working and professional world and, thus, a central pillar of life in society. In the past, human–robot collaboration and the use of AI were primarily limited to repetitive tasks in industrial workplace settings, but they have increasingly entered new fields, for example, customer service and health care (Decker et al., 2017; Huang & Rust, 2018). Jobs formerly deemed not substitutable by robots are no longer so to the same extent, raising fears of mass unemployment (Frank et al., 2019; Webb, 2019). However, research differs in its assessment of how much AI-driven automation threatens jobs, because the labor market is complex and the speed of technological progress is difficult to estimate.

Expectations include major changes in company business models: the automation of routine processes, and AI enhancement and support for a large part of the tasks awaiting solution. Thus, AI will become a constant companion of people in the workplace. According to Eurobarometer data for 2017 (European Commission & European Parliament, 2017), working people attribute to AI, to a remarkable extent, the potential to take over their jobs in the future. Only 59% completely rule this out for themselves, women at a higher proportion than men (66% vs. 52%). However, AI will not only cost jobs; it will also create new ones. In addition, AI may simply change employee qualification requirements (Lane & Saint-Martin, 2021).

Still, 3 years ago, the expectation was that one in four employees in Germany would have to anticipate AI replacing their job, and that trend is rising (Footnote 1). According to this IAB study, manufacturing professions, company-related service professions, professions in corporate management and organization, transport and logistics professions, as well as commercial professions would be among the most affected. Meanwhile, a more recent IAB study assumes that 34% of jobs in Germany are subject to high substitution potential. In addition, the study indicates a substantial increase in this figure, from 15% in 2013 to 34% in 2019 (Wrobel & Althoff, 2021).

Workers must adapt and develop new skills to stay competitive in changing areas of work. At present, the consensus in labor-market research states that human–machine coexistence will be the future norm (Hamid et al., 2017; Bankins & Formosa, 2020).

AI touches not only simple routine activities. It is also advancing into areas of highly qualified academic work. Predictably, recommendations and decisions will become more automated in the future. “The McKinsey Global Institute estimates that through highly developed algorithms and thinking machines alone, worldwide 140 million knowledge workers will be replaced by technology in the coming years” (Goffart, 2019, p. 58). In fact, white-collar jobs are at risk even in the most creative areas, like music and painting (Ford, 2016, pp. 113–114).

Similarly, Baldwin (2019, pp. 4–5) refers to skills that computers never had before—“things like reading, writing, speaking, and recognizing subtle patterns”—making “white-collar robots” fierce competitors for office jobs. He even talks of “globotics,” a combination of a new form of globalization and a new form of robotics, which he sees as different from the “century-old stories” of automation and globalization, “coming inhumanly fast, and it will seem unbelievably unfair.”

If robots take over automatable activities in the future, the associated job losses will threaten the professional existence of many people and families. Even if, in the next few years and decades, AI creates new jobs to the same extent (perhaps even more jobs than will be lost), the question remains whether these jobs will also offer the same economic and social security. Quite conceivably, AI will act as a catalyst for the erosion of standard employment. Digital crowd-working platforms, for example, may well be harbingers of a reality in which the model of “permanent employment with social security” as the livelihood of the middle class will have substantially lost its importance. Already in the platform economy, companies with gigantic sales and value consist of only a few permanent employees. At the same time, attention is turning to the fact that the design of such business models aims at shifting the business risk from the platform to the respective users (Adams-Prassl, 2019).

As a consequence of technological advancement in artificial intelligence and robotics, new challenges and possibilities concerning the structure and organization of work are continuously emerging and may well lead to societal changes on a larger scale. With artificial intelligence evolving rapidly, measuring and understanding the impact of such changes is of particular importance for social science research. Although the topic is discussed extensively, empirical evidence on the extent of expected human job replacement is comparatively scarce, and the available studies yield differing outcomes (Felten et al., 2018).

Research that aims at predicting future events as complex as labor-market changes is a challenging endeavor. An important pillar in assessing the future characteristics of a labor market that automation shapes ever more strongly is the gathering of expert knowledge. As Chap. 3 details, for such an assessment we used a real-time Delphi design that confronts the experts with a series of scenarios of different complexity. One of these scenarios is the “competitive scenario” (see Sect. 2.2.1), with a related population view (see Sect. 2.2.2). Following this, we devote Sect. 2.2.3 to ethical concerns that AI could lead to discrimination in the labor market and beyond. No doubt, biases in human perception can and do lead to discriminatory practices (e.g., toward job applicants), but letting algorithms aid in the process raises concerns that may not be unfounded, given the evidence of previous occurrences of systematic discrimination in automated decision-making (Kleinberg et al., 2020; Ferrer et al., 2021). In this regard as well, findings from the Delphi appear below. However, the major focus of Sect. 2.2.3 is the analysis of European countries, using data from Eurostat and aggregated survey data from Eurobarometer 92.3 and the European Social Survey. Section 2.2.3 also presents an analysis of the social structure of the ethical concern that AI could lead to discrimination.

2.2 AI and the Labor Market

2.2.1 The Competitive Delphi Scenario: Expert Views

The competitive scenario is one of five scenarios that the Delphi survey poses. The Delphi reveals destructive competition for permanent appointments as an unlikely worst-case scenario (Fig. 2.1), one that depicts a situation affecting even the highly skilled middle class. It describes a job market in which AI handles a steadily increasing share of even highly skilled routine jobs. This trend is accompanied by declining workforce demand, forcing people into precarious employment on digital crowd-working platforms and threatening even the stability of democracy.

Engel and Dahlhaus (2022, p. 345) state that

though AI is expected to shape the job market in general, highly qualified staff is regarded as not that concerned, at least not for the near future. While 38 percent of the Delphi respondents anticipate a clear reduction of permanent appointments due to AI in Germany in 2030, the survey’s reference year, only 20 percent believe in corresponding job losses for highly skilled academic personnel. In this respect, the prevailing expert opinion (78 percent) anticipates primarily changing job specifications due to AI.

The fact that the substitution potential of jobs for highly qualified people is comparatively lower aligns with the recent IAB study cited above. However, a residual uncertainty may still exist. Even if AI cannot easily replace jobs that require demanding academic qualifications, the question remains whether the current assumption that these qualifications will protect them effectively against substitution is valid, especially against the background that the technology is still developing. In any case, the Delphi participants currently assume a rather low risk, partly concerning their own job profile. University education is an apt example. Using the standard response scale that appears throughout the surveys of the Bremen AI Delphi study (Q1 = 1.6; Q2 = 2.1; Q3 = 2.8), the respondents rate the following situation as unlikely to occur: “AI has changed academic teaching in the country. In many bachelor-degree programs, robot assistants now take over the lecture-accompanying exercises and exam preparation for students.”

The Delphi reveals the scenario of a destructive human–robot competition for permanent appointments as an unlikely worst-case scenario. But what if it did occur? That competitive scenario would hit the well-educated middle class particularly hard. Suppose the intensified competition in the labor market turned out as this scenario assumes: what would the expected consequences be for the reference year 2030? Figure 2.2 displays the subjective expectations of some such consequences, four of which appear likely: an increased importance of education as a key to professional success; the demand for lifelong and comprehensive tax-financed protection of an acceptable livelihood, accompanied by a controversial public discussion of a corresponding restructuring of the system of taxes and social security contributions; a weakening of the liberal center in favor of the fringes of the political spectrum; and greater demand for psychosocial counseling.

The scales in Fig. 2.2 support the following observations. For the reference year 2030, the “attitude to life” scale describes a situation that appears unlikely rather than likely to the Delphi respondents: “People’s attitude towards life will not have changed significantly.” The middle 50% of responses include “probably not” and exclude “quite probable,” with the median located slightly below “possibly,” the scale point that expresses maximum uncertainty.

Three further consequences appear essentially uncertain, exhibiting a narrow dispersion of responses around “possibly” while the middle 50% of responses exclude both bounds, “probably not” and “quite probable.” They are: “Since the state has guaranteed every citizen a basic income since 2025, AI has been viewed largely positively by the population—despite all competition” (Basic_income); “People would have to spend much more time than before to secure their living standard; time that is missing for a meaningful way of life” (Leisure); “An attitude towards life characterized by deep insecurity and fears of social decline will have spread across the population” (Decline).

Finally, a group of statements describes consequences that appear likely rather than unlikely to the Delphi respondents. Here, the middle 50% of responses exclude “probably not” and include “quite probable.” The first is the statement that, in the meantime, the population has massively asked the state for a comprehensive and lifelong tax-financed safeguarding of an acceptable standard of living (Livelihood). The second concerns the expected public debate on this topic. We phrased the corresponding statement as: “The decline in the factors of production of labor and human capital in value added, which was forecast in the decade before last, has meanwhile come true. Since then, a fundamental restructuring of the system of taxes and social security taxes has been the subject of very controversial public debate” (Security). The third concerns the central role of education, which the responses to two opposing statements indicate. On the one hand, we observe a clear rejection of the statement that for many, education is no longer the key to professional success, with people asking themselves: “Why invest in education if it doesn’t pay off for me?” (Education (−)). On the other hand, the Delphi respondents found it much more likely that many consider education to be “the” key to professional success. Again for the reference year 2030, they expect that in recent years, Germany will have seen a real run on the qualification offers of universities and institutions of further education and training (Education (+)). Furthermore, it is more likely than unlikely that confidence in the free market would decline; the liberal center would have lost much popularity, while the fringes of the political spectrum would have gained significantly (Market). And people would have to pay a price for the burdens of increased competition: in the year 2030, the more likely consequences would also include a sharp rise in recent years in the demand for psychological and pastoral advice (Advice).
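The reading rule applied to these scales (whether the middle 50% of responses covers “probably not” or “quite probable”) can be written down as a small sketch. The numeric codes 2 for “probably not” and 4 for “quite probable,” the function name `classify_rating`, and the label "dispersed" are assumptions made for illustration; the chapter itself reports only quartiles and verbal labels.

```python
# Assumed numeric codes of the response scale (hypothetical; the text gives labels only).
PROBABLY_NOT = 2.0
QUITE_PROBABLE = 4.0


def classify_rating(q1: float, q3: float) -> str:
    """Classify a statement by what the middle 50% of responses (Q1..Q3) covers."""
    if q1 > q3:
        raise ValueError("q1 must not exceed q3")
    reaches_low = q1 <= PROBABLY_NOT       # range extends down to "probably not"
    reaches_high = q3 >= QUITE_PROBABLE    # range extends up to "quite probable"
    if reaches_low and not reaches_high:
        return "rather unlikely"
    if reaches_high and not reaches_low:
        return "rather likely"
    if not reaches_low and not reaches_high:
        return "uncertain"                 # narrow dispersion around "possibly"
    return "dispersed"                     # spans both bounds; not discussed in the text


# Quartiles (Q1, Q3) reported in the chapter for two Delphi items.
teaching = classify_rating(1.6, 2.8)   # robot assistants in academic teaching
conflict = classify_rating(2.6, 4.2)   # "Conflict" scenario
```

Under these assumed codes, the two reported quartile pairs fall into the categories the text assigns to them, which suggests the coding is at least consistent with the chapter's reading.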

2.2.2 AI and the Anticipated Standard of Living and Quality of Life: Population Views

We balanced the wording of our survey question as well as possible:

It is widely expected that the introduction of artificial intelligence techniques will have an impact on the labor market. Three possibilities are foreseen: Downsizing: companies will reduce the number of their permanent employees; Compensation: job losses will be offset by job gains; Gain: More new jobs will be created than old ones will be eliminated. What do you suspect: What will the labor market likely to come to in the next ten years?

Although only 7% expect a gain and 56% expect downsizing (compensation: 35%; don’t know: 3%), the answers to the following question indicate only limited personal concern, as just 6% expect substantial replacement: “If you are currently employed, do you think that your current job could be taken over by a robot or artificial intelligence in the future?” “Yes, completely” [1%]; “yes, for the most part” [5%]; “yes, but only partly” [41%]; “no, not at all” [51%]; “don’t know” [2%]. Personal concern appears limited even though only around half of the respondents assume that robots cannot replace them at all on the job, at least if one also considers the perceived impact on one’s living standard and quality of life. In this regard, significantly greater proportions of people expect positive rather than negative effects for themselves. The survey question was: “What would you guess: Will artificial intelligence change the economy and the labor market in the country in such a way that these changes will have a positive or negative effect on your future standard of living? Or do you not expect any impact in this regard?” Thirty-five percent expected a positive impact, 27% no impact, and 19% a negative impact (don’t know: 19%). The imbalance is even more pronounced for one’s quality of life. “Just as artificial intelligence may affect jobs and a person’s standard of living; this technology may also affect a person’s quality of life in a broader sense. (…) What would you guess? Could robots and artificial intelligence be something that has a positive or negative impact on your quality of life? Or would you not expect any effects in this regard?” Here, 46% expected a positive impact, 26% no impact, and 13% a negative impact (don’t know: 16%). A case study in Italy reports a similarly positive expectation (Operto, 2019, p. 291).

2.2.3 Ethical Concerns

For AI applications to be considered trustworthy in society, it is not enough that they are error-free and safe for humans. These properties are certainly necessary but not sufficient. The same applies to the usefulness of AI applications, since usefulness or functionality alone cannot guarantee sufficient social acceptance. Trustworthiness additionally requires adherence to ethical standards, and “trustworthy AI” must convince both the population and social stakeholders in this respect to gain sufficient social acceptance.

The labor market and job search easily demonstrate that this ethical dimension can quickly gain personal relevance in everyday life. In Chap. 1, we raised the question of the integrity of an AI-supported preselection of applicants for vacancies in large companies. While the analysis there is based on the population survey that we carried out for Bremen at the end of 2019, this section aims to extend the perspective to the European level. With Eurobarometer 92.3 (European Commission, 2019), a population survey is available that was fielded at the same time that we conducted the survey in Bremen (Footnote 2). The Eurobarometer includes a few AI-related survey questions, two of which the present section takes up.

2.2.3.1 Regulation Mode and Trust in Institutions

As part of our Delphi, we asked the respondents from science and politics to assess a scenario for the reference year 2030 that Chap. 5 of this volume also addresses:

Since its introduction in 2019, the implementation of the EU Commission’s ethics guideline for trustworthy AI has preoccupied the political discussion and the courts in the country. The focus is on the dispute about the guarantee of people’s right to self-determination and the protection against discrimination, stigmatization, and violation of personal rights through AI systems. Also in focus: questions of liability for self-learning, autonomously acting AI systems, their ethically acceptable programming and the question of what rights robots should be granted who live with people in common households. What would your expectation be: will this scenario become a reality?

Using the standard response scale implemented throughout the surveys of the present study, respondents rated this scenario as likely rather than unlikely (Q1 = 2.6; Q2 = 3.6; Q3 = 4.2). This is the scenario labeled “Conflict” in Fig. 2.1. In the present context, three single follow-up ratings are worth mentioning. Viewed from the reference year 2030 again, the first of these ratings addressed the premise that the EU ethics guideline had been a legal requirement for universal compliance since 2025: “The AI assistance systems developed for recruitment of job seekers have since then to be officially approved to guarantee protection against discrimination, stigmatization, and violation of personal rights.” The respondents rated this scenario as possible to quite probable.

In the present context, both the assumed need for state regulation and the problem of discrimination itself are important. For instance, Eurobarometer 92.3 asked the European population what is required to ensure that the development of artificial intelligence applications occurs in an ethical manner: public policy intervention, industry providers of artificial intelligence dealing with these issues themselves, or no specific action (survey question QF4). Figure 2.3 graphs the weighted country-specific frequency distributions, and Table 2.3 in the appendix to this chapter documents them.

The graph locates each country using its three proportions for “public policy intervention is needed” (axis runs from bottom left [0] to top [1.0]); “industry providers of Artificial Intelligence can deal with these issues themselves” (axis runs from top [0] to bottom right [1.0]); and “no specific action is needed” (axis runs from bottom right [0] to bottom left [1.0]). By and large, the resulting spread indicates the following: (1) There is a clear tendency toward preferring public policy intervention over self-regulation by the relevant industry. (2) There is a considerable spread between the poles of “public policy” and “industry,” with, at the same time, (3) significantly less between-country variation in the preference for “no specific action is needed.” Thus, for the most part, respondents see a need for regulation by either the state or the industry. These tendencies hold only by and large, however, because the scatter plot in Fig. 2.3 and the frequency distributions in Table 2.3 also show pronounced differences between the countries.
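To make the geometry of such a three-axis graph concrete, the following minimal sketch maps a country's three proportions to a point in an equilateral triangle. It assumes the corner layout described in the text (top = public policy, bottom right = industry, bottom left = no specific action); the function name `ternary_to_xy` and the example values are hypothetical and not taken from Table 2.3.

```python
from math import sqrt


def ternary_to_xy(policy: float, industry: float, none: float) -> tuple[float, float]:
    """Map three proportions to Cartesian coordinates in a unit-side triangle.

    Corners: (0, 0) = "no specific action", (1, 0) = "industry",
    (0.5, sqrt(3)/2) = "public policy".
    """
    total = policy + industry + none
    if total <= 0:
        raise ValueError("proportions must sum to a positive value")
    p = policy / total      # renormalize so the three shares sum to 1
    i = industry / total
    x = i + 0.5 * p         # horizontal position
    y = (sqrt(3) / 2) * p   # height grows with the "public policy" share
    return x, y


# Hypothetical country: 50% policy, 30% industry, 20% no specific action.
example_point = ternary_to_xy(0.5, 0.3, 0.2)
```

A country whose respondents all favor public policy intervention lands on the top corner, and countries with similar answer profiles land close to each other, which is what makes the between-country spread visible.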

Table 2.1 (first outcome row) also indicates this spread in the preference for public policy intervention. If we compute for each country the percentage of respondents who express this preference (using the weighted frequency distributions), we obtain one such value for each country. Thus, 28 countries yield 28 corresponding percentages, and Table 2.1 displays the summary statistics of the aggregate variable that these 28 values constitute. Such variables now characterize the countries, not the people living in them (aggregate data analysis). For instance, Table 2.1 shows that the lowest observed percentage of people expressing said preference is 19.0, and Table 2.3 in the appendix to this chapter reveals that this value pertains to Romania. At the other pole, Table 2.1 indicates a maximum value of 77.5%, while Table 2.3 reveals that this value pertains to the Netherlands. All other countries range between these min/max boundaries and constitute a frequency distribution whose summary statistics (first, second, and third quartile) appear in the present Table 2.1.
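The aggregation step described here (weighted share per country, then summary statistics over the country values) can be sketched as follows. The record layout, the toy data, and the function names are hypothetical, and the quartile rule used by `statistics.quantiles` is an assumption; the chapter does not state which quartile definition underlies Table 2.1.

```python
from collections import defaultdict
from statistics import quantiles


def weighted_share(records, category):
    """Return {country: weighted percentage of respondents choosing `category`}."""
    chosen = defaultdict(float)
    total = defaultdict(float)
    for country, answer, weight in records:
        total[country] += weight
        if answer == category:
            chosen[country] += weight
    return {c: 100.0 * chosen[c] / total[c] for c in total}


def summarize(values):
    """Min, quartiles, and max of the country-level aggregate variable."""
    q1, q2, q3 = quantiles(values, n=4, method="inclusive")
    return {"min": min(values), "q1": q1, "median": q2, "q3": q3, "max": max(values)}


# Hypothetical toy records: (country, answer, survey weight).
records = [
    ("A", "policy", 1.0), ("A", "industry", 1.0),
    ("B", "policy", 3.0), ("B", "none", 1.0),
    ("C", "industry", 2.0), ("C", "policy", 2.0), ("C", "none", 4.0),
]
shares = weighted_share(records, "policy")   # one percentage per country
summary = summarize(list(shares.values()))   # statistics across countries
```

The unit of analysis changes between the two functions: `weighted_share` works on respondents, while `summarize` works on countries, which is the defining feature of an aggregate data analysis.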

The same logic applies to the covariates in Table 2.1, for instance, to the five scales that indicate the trust people have in their country’s institutions. Eurobarometer 92.3 asked respondents to indicate if they tend to trust or not trust some institutions (survey question “QA6a”). Each referring to the respondent’s country, the selection involved the “political parties,” “justice/legal system,” “public administration,” “government,” and the “parliament.” Again using weighted frequency distributions, the respective percentage of those who tend to trust an institution (vs. tend not to trust and don’t know) characterizes each country, and Table 2.1 displays the summary statistics for each of the resulting five country-level variables on trust in institutions. They also reveal substantial variation across EU countries.

Trust in the institutions of one’s country turns out to be a relevant covariate of the preference for public policy intervention. In principle, this applies to all five institutions but particularly to the legal system and the parliament. The higher a country’s percentage of trust in these institutions, the higher its percentage in favor of public policy intervention (r = 0.64 and r = 0.57, respectively). In essence, this result likely means that advocating state regulation to ensure the ethical development of AI applications requires sufficient trust in the legal system and the state. Accordingly, the population shares correspond at the country level.
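The coefficients reported here are correlations between two country-level percentages. As a minimal sketch, assuming they are ordinary Pearson correlations (the chapter does not name the coefficient explicitly), the computation looks like this; the function name and the four toy countries are hypothetical.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of country-level values."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least two values")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / sqrt(sxx * syy)


# Hypothetical toy data: percent trusting the legal system and percent
# favoring public policy intervention, for four made-up countries.
trust_legal = [20.0, 40.0, 60.0, 80.0]
favor_policy = [30.0, 35.0, 60.0, 65.0]
r = pearson_r(trust_legal, favor_policy)
```

Because each country contributes exactly one pair of values, the coefficient describes how countries covary, not how individual respondents do.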

2.2.3.2 Concerns that AI Could Lead to Discrimination

Following the above Delphi scenario, we asked the respondents to rate a series of ethical aspects. From the perspective of the reference year 2030, one of these ratings addressed possible discrimination against job seekers in the labor market: “Since AI made decisions about recruiting, discrimination, stigmatization, and violation of personal rights when looking for a job have decreased significantly.” We asked this because ethically acceptable programming could well ensure that when looking for a job, for example, only the applicant’s qualifications count. However, the respondents considered this unlikely. In contrast, they regarded the following scenario as being possible to quite probable: “Since becoming AI-driven, the classification of creditworthiness not only includes characteristics of the loan seeker himself decisions on loans have been increasingly challenged in courts in recent years.”

European Countries Differ Substantially in the Percentage of Concerned People

Discrimination and creditworthiness are two topics that Eurobarometer 92.3 also addresses. The survey asked respondents if they were “concerned that the use of artificial intelligence could lead to discrimination in terms of age, gender, race, or nationality, for example in taking decisions on recruitment, creditworthiness, etc.” (survey question QF3_1). We pursue the same aggregate-data measurement strategy and describe each country by its percentage of people concerned in this respect. Table 2.1 displays the summary statistics of the pertinent frequency distribution, and Table 2.3 in the appendix to this chapter reports the percentages for each involved country. We see that the smallest percentage of concerned people is 16.6 (Estonia) and the largest is 57.1 (Netherlands). We also see that the middle 50% of the values of this frequency distribution are in the range of 27.4 to 38.6, with a median of 34.2% concerned people. Figure 2.4 graphs the EU countries in terms of this concern that the use of AI could lead to discrimination.

What explains the considerable differences among the 28 European countries? We remain at the country level and include three additional groups of aggregate variables: the country’s level of prosperity and its inherent risk of poverty, the degree of multi-ethnicity, and trust in fellow men.

Covariate Measures Underlying the Correlation Analysis

Following Eurostat, we measured living standards in three ways. The first calculates living standards by measuring the price of certain goods and services in each country, relative to income in that country. Eurostat (Footnote 3) explains this measure as follows: “This is done using a common notional currency called the purchasing power standard (PPS). Comparing gross domestic product (GDP) per inhabitant in PPS provides an overview of living standards across the EU.” We adopted the index values that Eurostat reports and show the associated summary statistics in Table 2.1. The same goes for another measure, the poverty risk, i.e., the percentage of people at risk of poverty or social exclusion (Footnote 4). A third official measure is the material deprivation (Footnote 5) rate for 2020 (Footnote 6), with information from the EU-SILC survey. Eurostat explains this measure: “The indicator is defined as the percentage of population with an enforced lack of at least three out of nine material deprivation items in the ‘economic strain and durables’ dimension.” (Footnote 7)

In addition, we calculated a fourth measure using information from the European Social Survey (ESS), Round 9, for 2018. It reflects the respondent’s rating of household income in terms of possible feelings of deprivation (ESS Round 9 Source Questionnaire, p. 60, survey question F42). The ESS asked which of four descriptions “comes closest to how you feel about your household’s income nowadays? Living comfortably on present income (1), coping on present income (2), finding it difficult on present income (3), and finding it very difficult on present income (4).” We recoded (3) and (4) into one category and computed its percentage per country, each based on the weighted frequency distribution. For that, we used the recommended post-stratification weight (Footnote 8).

Trust in fellow men is another relevant covariate of the concern that AI could lead to discrimination. We use three 11-point rating scales from Round 9 of the European Social Survey (ESS Round 9 Source Questionnaire, p. 5, survey questions A4 to A6). Respondents were asked to indicate if “most people can be trusted” (scale score: 10) or if “you can’t be too careful in dealing with people” (scale score: 0). Also, respondents were asked if they think “that most people would try to take advantage of you if they got the chance” (score: 0), or if they would “try to be fair?” (score: 10). Finally, respondents were to indicate if they “would say that most of the time people try to be helpful” (score: 10) or “they are mostly looking out for themselves” (score: 0). We stayed with the original scale directions toward “can be trusted,” “try to be fair,” and “try to be helpful,” computing the mean value of each pertinent country-related distribution (again, using the “pspwght” survey weight).
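A weighted country mean of such a 0 to 10 scale can be sketched in a few lines. The function name and the toy values are hypothetical; the only element taken from the text is that each response is weighted by the respondent's post-stratification weight (“pspwght”).

```python
def weighted_mean(scores, weights):
    """Weighted mean of scale scores, one weight per respondent (e.g., pspwght)."""
    if len(scores) != len(weights):
        raise ValueError("scores and weights must have the same length")
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(s * w for s, w in zip(scores, weights)) / total_weight


# Hypothetical respondents of one country: trust scores and survey weights.
trust_scores = [2, 5, 8]
pspwght = [1.0, 2.0, 1.0]
country_mean = weighted_mean(trust_scores, pspwght)
```

Repeating this per country and per scale would yield the three country-level trust variables that enter the correlation analysis.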

Finally, we approached ethnicity with a survey question from Round 9 of the European Social Survey, asking respondents how they would describe their “ancestry,” in terms of descent or family origins (ESS Round 9 Source Questionnaire, p. 72, survey question F61). Following recommended practice, we recoded all responses to the European Standard Classification of Cultural and Ethnic Groups available in ESS9 Appendix A6 (Footnote 9), pp. 28–38, computed the pertinent “pspwght”-weighted country-related distributions, and used their relative frequencies to construct a multi-ethnicity index. We applied two statistical formulas for this purpose, entropy and the Herfindahl index. Both formulas measure concentration. They produce their smallest index value when all cases concentrate on just one of the categories of a frequency distribution, and they attain their maximum value when the cases are evenly distributed. We compute both measures in their standardized variant as relative entropy

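$$H_{\mathrm{rel}} = -\frac{\sum_{i=1}^{I} p_i \ln p_i}{\ln I}$$

and as normalized “Herfindahl”

$$D_{\mathrm{norm}} = \frac{1 - \sum_{i=1}^{I} p_i^{2}}{1 - 1/I}$$

where $p_i$ denotes the relative frequency of category $i$ (with $0 \ln 0 := 0$) and $I$ indicates the number of categories, so that the resulting index values range between min = 0 (one-point distribution) and max = 1 (uniform distribution), respectively.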
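The two indices can be illustrated with a short sketch. It assumes that relative entropy is Shannon entropy divided by ln I and that the normalized Herfindahl index is used in its diversity form (1 − Σp²)/(1 − 1/I); both forms are assumptions chosen because they match the bounds stated in the text (0 for a one-point distribution, 1 for a uniform one). The function names are hypothetical.

```python
from math import log


def relative_entropy(p):
    """Shannon entropy of relative frequencies p, divided by ln(I)."""
    categories = len(p)
    if categories < 2:
        raise ValueError("need at least two categories")
    # Terms with p_i = 0 contribute nothing (convention: 0 * ln 0 = 0).
    entropy = -sum(q * log(q) for q in p if q > 0)
    return entropy / log(categories)


def normalized_herfindahl(p):
    """Diversity form of the Herfindahl index, rescaled to the range 0..1."""
    categories = len(p)
    if categories < 2:
        raise ValueError("need at least two categories")
    concentration = sum(q * q for q in p)   # classic Herfindahl sum of squares
    return (1.0 - concentration) / (1.0 - 1.0 / categories)


one_point = [1.0, 0.0, 0.0]        # everyone in a single ancestry category
uniform = [0.25, 0.25, 0.25, 0.25]  # cases evenly spread over four categories
```

Both functions return 0 for `one_point` and 1 for `uniform` (up to floating-point rounding), so higher values indicate a more heterogeneous ancestry distribution under either index.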

Correlations at the Country Level

Table 2.1 reports a consistent finding in line with Inglehart’s post-materialism hypothesis of sociological value research: higher (post-material) needs only arise when elementary (material) basic needs are satisfied. In psychological terms, the hypothesis builds on Maslow’s hierarchy of needs. For instance, for someone who struggles daily for economic existence, ethical concerns about AI-related discrimination would accordingly be an expression of such a “higher” and, thus, likely deferred need. At the aggregate level, this hypothesis should by and large imply just the observation made in the present analysis, namely, the higher a country’s living standard, the higher its percentage of people concerned that AI could lead to discrimination (r = 0.5). Correspondingly, a country’s risk of poverty and deprivation correlates negatively with its share of concerned people. Dealing with ethical questions of AI leading to discrimination thus evidently tends to presuppose a life of prosperity and economic security. To put it pointedly, anyone struggling for their daily economic existence probably has other concerns than possible discrimination through AI. Paradoxically, however, a possible substitution due to AI could affect just this part of the population more than others in the labor market.

In this connection, the strong positive correlation of a country’s living standard with mean trust in fellow men is especially worth mentioning. As documented in the repository at github.com, referenced above, the correlations are r = 0.65 (trusted), 0.68 (fair), and 0.74 (helpful). Furthermore, mean trust in fellow men correlates consistently negatively, and just as substantially, with the deprivation measures, and slightly more weakly with a country’s poverty risk. Stated briefly, the higher a country’s deprivation and poverty-risk shares, the lower its mean trust in fellow men. This is noteworthy because it indicates a relevant source of lacking or underdeveloped trust: unfavorable experiences in the daily competition for status and resources, in both economic and social respects. This becomes more understandable by attending to the opposite poles, namely, that “one can’t be too careful in dealing with people,” “most people would try to take advantage of others,” and “people are mostly looking out for themselves”; in short, a typical competitive experience. And, as the correlations in Table 2.1 indicate, this experience goes along with less concern about AI as a technology that could lead to discrimination.

Finally, structural differentiation, in terms of ethnicity (family origins), turns out to be a relevant covariate of the concern about potentially discriminatory AI applications. As Table 2.1 shows, both indices correlate positively with this concern. Structurally more heterogeneous countries thus also tend to have larger shares of people with concerns about AI as a possible discriminatory instrument. This may mean that populations in structurally more heterogeneous countries are more sensitive to the issue of discrimination.
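The coefficients discussed in this section relate two aggregate indicators across countries. A minimal sketch of such a computation follows, assuming the reported r values are Pearson product-moment correlations (the text does not name the coefficient). The function name and the toy country values are hypothetical and do not reproduce Table 2.1.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation of two equally long sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length of at least 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of cross-products and squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical toy values for five countries (not survey data):
# a living-standard score and the percentage of concerned respondents.
living_standard = [1.0, 2.0, 3.0, 4.0, 5.0]
percent_concerned = [30.0, 28.0, 40.0, 35.0, 45.0]

r = pearson_r(living_standard, percent_concerned)
print(f"r = {r:.2f}")
```

With only a few dozen countries, single outlying cases can shift such coefficients noticeably, so aggregate correlations of this kind are best read as descriptive tendencies.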

2.2.3.3 Concerns that AI Could Lead to Discrimination: the German Case

Looking inside Germany, Fig. 2.5 reveals some variation across its federal states in the concern that AI could lead to discrimination. At first glance, the spread appears considerable, ranging from 14% in Saxony-Anhalt to 67% in Bremen. However, only part of this variation around the overall mean of all federal states is statistically significant. The baseline odds \( {e}^{b_0} \) of model 1 (Table 2.2) estimate this overall mean, and the federal state in which a respondent lives modifies these odds multiplicatively. Each such factor represents an odds ratio that modifies the baseline more strongly the more it deviates from 1.0 (= no impact).

Table 2.2 reports these odds ratios along with the pertinent significance information. Using the usual two-tailed criterion \( |b/\text{s.e.}| \ge 1.96 \), only four federal states deviate significantly from the baseline odds, \( {e}^{b_0} \), namely, Bremen, Berlin, Thuringia, and Saxony-Anhalt.
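The multiplicative reading of odds ratios and the significance criterion can be sketched as follows. This is an illustration only: the coefficient values, standard errors, and function names are hypothetical and are not the estimates of Table 2.2.

```python
import math


def modified_odds(baseline_odds, *odds_ratios):
    """Multiply the baseline odds by each odds ratio in turn."""
    result = baseline_odds
    for ratio in odds_ratios:
        result *= ratio
    return result


def odds_to_probability(odds):
    """Convert odds p / (1 - p) back to the probability p."""
    return odds / (1.0 + odds)


def is_significant(b, se, critical=1.96):
    """Two-tailed test at the 5% level: |b / s.e.| >= 1.96."""
    return abs(b / se) >= critical


# Hypothetical logit coefficients: intercept b0 and one federal-state effect.
b0 = math.log(0.6)            # baseline odds e^{b0} = 0.6
b_state = math.log(2.0)       # odds ratio e^{b} = 2.0 for a hypothetical state

baseline_odds = math.exp(b0)
state_odds = modified_odds(baseline_odds, math.exp(b_state))

print(round(odds_to_probability(baseline_odds), 3))  # share implied by the baseline
print(round(odds_to_probability(state_odds), 3))     # share implied for the state
print(is_significant(b_state, se=0.30))              # hypothetical standard error
```

An odds ratio of exactly 1.0 leaves the baseline unchanged, which is why 1.0 serves as the “no impact” benchmark.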

We deal with the social structure of said “concern” in the enlarged logistic regression equation of model 2. It extends the set of explanatory variables with information about gender, age, social class, and education. In this equation, too, a subset of federal states deviates significantly from the overall mean. Net of the effects of gender, age, social class, and education, significant deviations remain for Bremen, Berlin, and Saxony-Anhalt. While Thuringia loses statistical significance in this enlarged equation, Mecklenburg–Western Pomerania and Lower Saxony now join the list. While such significant variation involves federal states of the former East and West Germany alike, we observe a clear East/West difference in the preference for public policy intervention to ensure that AI develops in an ethical manner. In this regard, the percentages are 65% for West and 52% for East Germany.

According to model 2, several factors, in addition to federal state, significantly and multiplicatively modify the baseline odds of having concerns that AI could lead to discrimination. This set of factors includes gender (women), age in years, and social class, whereas higher education (measured by the age at which the respondent stopped full-time education, 20+ vs. rest) contributes only insignificantly to the equation.

Using 1.0 as a benchmark indicating “no impact,” the odds ratios in Table 2.2 show how these factors modify the odds of having concerns. For example, the odds among women are 0.68 times the odds among men, and the odds in the middle class are 1.5 times the odds in the working class. In this connection, “concern” and age relate nonlinearly. The coefficients for age and age-squared indicate this, and it becomes evident when plotting the percentage of concern against six age groups, each covering a 10-year span. The percentage rises from 29.8 in the youngest age group (15+) through 37.0 and 37.8 to 48.6 in the group aged 45+, and then falls to 40.9 and 35.3 in the group aged 65+.
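The curvilinear age pattern can be checked directly from the six reported percentages. The sketch below converts them to odds and verifies the rise-then-fall shape. The lower bounds 25, 35, and 55 are an assumption inferred from the stated 10-year span (the text names only 15+, 45+, and 65+), and the helper name is hypothetical.

```python
# Percentages of concerned respondents per age group, as reported in the text.
# Assumption: groups start at 15, 25, 35, 45, 55, and 65 (10-year spans).
age_lower_bounds = [15, 25, 35, 45, 55, 65]
percent_concerned = [29.8, 37.0, 37.8, 48.6, 40.9, 35.3]


def odds_from_percent(percent):
    """Convert a percentage into odds p / (1 - p)."""
    p = percent / 100.0
    return p / (1.0 - p)


odds = [odds_from_percent(pct) for pct in percent_concerned]

# Locate the age group with the highest odds of concern.
peak_index = max(range(len(odds)), key=odds.__getitem__)
peak_group = age_lower_bounds[peak_index]

# An inverted U-shape means strictly rising odds up to the peak, falling after.
rising = all(odds[i] < odds[i + 1] for i in range(peak_index))
falling = all(odds[i] > odds[i + 1] for i in range(peak_index, len(odds) - 1))

for bound, value in zip(age_lower_bounds, odds):
    print(f"{bound}+: odds = {value:.2f}")
print("peak group:", peak_group, "| inverted U-shape:", rising and falling)
```

In a logistic regression, such a shape typically shows up as a positive coefficient for age combined with a negative coefficient for age-squared.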

2.3 Trust in the State and Ethical Concerns of the Secured and Wealthy

The competitive scenario of a destructive human–robot competition for permanent appointments would hit the well-educated middle class particularly hard. According to the expert opinions, such cutthroat competition appears to be an unlikely worst-case scenario. But what if, contrary to expectations, it did happen in the future? In that case, the Delphi respondents consider four sociological implications likely: increased importance of education as the key to professional success, demand for lifelong tax-financed protection of an acceptable livelihood, political weakening of the liberal center in favor of the fringes of the spectrum, and increased demand for psychosocial counseling.

But how does the population see it? In short, only a minority considers itself replaceable in the job, and relatively more people expect positive, not negative AI-related effects on their standard of living and quality of life. All in all, the personal concern appears limited.

AI trustworthiness is a big issue, including its ethically acceptable programming. Therefore, finding out how much AI encounters ethical concerns in the population is of vital interest. Based on data from EU statistics and European social research, an aggregate data analysis at the country level shows that ethical concerns do exist, and the more prosperous a country or the more economically secure its population, the more widespread they are. The analysis suggests that dealing with the ethical question of whether AI could lead to discrimination tends to presuppose a life of prosperity and economic security. It also suggests a negative correlation between the personal experience of having to sustain one’s position in the daily competition for status and resources and concerns about AI as a technology that could lead to discrimination. The struggle for daily economic existence will trigger concerns other than possible discrimination through AI.

A survey data analysis for Germany reveals a social-class effect on the odds of ethical concerns that also indicates the relevance of post-materialism. The analysis shows particularly that belonging to the middle class increases these odds. Compared to the working class, the odds ratios are all significantly greater than 1.0 for the lower-middle, middle, upper-middle, and higher classes. In addition, the curvilinear age effect may indirectly indicate a sensitivity to discrimination, reflecting typical biographical experiences in occupational life courses.

The analysis shows a preference for regulation. The European population was asked whether ensuring that Artificial Intelligence applications are developed ethically requires public policy intervention, whether industry providers of Artificial Intelligence can deal with these issues themselves, or whether no specific action is required. The figures reveal a clearly expressed need for regulation, to be met by either state or industry, with a clear preference for state regulation. However, the EU countries differ substantially in this respect, and trust in the institutions of one’s country, particularly in the legal system and the parliament, turns out to be a significant covariate of the preference for public policy intervention.

Notes

- 1.

Along with a selection of its findings, a report about this IAB study appears in an article published on 16 February 2018 at https://www.welt.de/wirtschaft/article173642209/Jobverlust-Diese-Jobs-werden-als-erstes-durch-Roboter-ersetzt.html

- 2.

Eurobarometer 92.3 (GESIS Study number ZA7601), fieldwork from mid-November to mid-December 2019.

- 3.

- 4.

- 5.

- 6.

Figures are for 2020. For Italy, the 2019 figure is substituted for the missing 2020 value.

- 7.

- 8.

The so-called “pspwght” weight.

- 9.

References

Adams-Prassl, J. (2019). What if your boss was an algorithm? Economic incentives, legal challenges, and the rise of artificial intelligence at work. Comparative Labor Law and Policy Journal, 41, 123. Retrieved December 29, 2021, from https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3661151

Aghion, P., Jones, B. F., & Jones, C. I. (2019). Artificial intelligence and economic growth (pp. 237–290). University of Chicago Press.

Baldwin, R. (2019). The globotics upheaval. Globalisation, robotics, and the future of work. Weidenfeld & Nicolson.

Bankins, S., & Formosa, P. (2020). When AI meets PC: Exploring the implications of workplace social robots and a human-robot psychological contract. European Journal of Work and Organizational Psychology, 29(2), 215–229.

Decker, M., Fischer, M., & Ott, I. (2017). Service robotics and human labor: A first technology assessment of substitution and cooperation. Robotics and Autonomous Systems, 87, 348–354.

Engel, U., & Dahlhaus, L. (2022). Data quality and privacy concerns in digital trace data. In U. Engel, A. Quan-Haase, S. Liu, & L. Lyberg (Eds.), Handbook of computational social science, Vol. 1 - Theory, case studies and ethics (pp. 343–362). Routledge. https://doi.org/10.4324/9781003024583-23

European Commission. (2019, November–December). Brussels: Eurobarometer 92.3, 2019. Kantar Public, Brussels [Producer]. GESIS, Cologne [Publisher]: ZA7601, dataset version 1.0.0. Retrieved from https://doi.org/10.4232/1.13564

European Commission & European Parliament. (2017, March). Brussels: Eurobarometer 87.1, March 2017. TNS opinion, Brussels [Producer]. GESIS, Cologne [Publisher]: ZA6861, data set version 1.2.0. Retrieved from https://doi.org/10.4232/1.12922

European Social Survey. (2018). ESS round 9 source questionnaire. ERIC Headquarters c/o City, University of London. Retrieved from https://www.europeansocialsurvey.org/

Felten, E., Raj, M., & Seamans, R. (2018). A method to link advances in artificial intelligence to occupational abilities. AEA Papers and Proceedings, 108, 54–57.

Ferrer, X., van Nuenen, T., Such, J. M., Coté, M., & Criado, N. (2021). Bias and discrimination in AI: A cross-disciplinary perspective. IEEE Technology and Society Magazine, 40(2), 72–80.

Ford, M. (2016). The rise of the robots. Technology and the threat of mass unemployment. Oneworld Publications.

Frank, M. R., Autor, D., Bessen, J. E., Brynjolfsson, E., Cebrian, M., Deming, D. J., Feldman, M., Groh, M., Lobo, J., Moro, E., Wang, D., Youn, H., & Rahwan, I. (2019). Toward understanding the impact of artificial intelligence on labor. Proceedings of the National Academy of Sciences, 116(14), 6531–6539.

Goffart, D. (2019). Das Ende der Mittelschicht, wie wir sie kennen. Focus 12/19, 16, 52–59.

Graetz, G., & Michaels, G. (2015). Robots at work. CEP Discussion Papers, Centre for Economic Performance, LSE. Retrieved December 30, 2021, from https://EconPapers.repec.org/RePEc:cep:cepdps:dp1335

Hamid, O. H., Smith, N. L., & Barzanji, A. (2017, July). Automation, per se, is not job elimination: How artificial intelligence forwards cooperative human-machine coexistence. In 2017 IEEE 15th International Conference on Industrial Informatics (INDIN) (pp. 899–904). IEEE.

Huang, M. H., & Rust, R. T. (2018). Artificial intelligence in service. Journal of Service Research, 21(2), 155–172.

Keynes, J. M. (2010 [1930]). Economic possibilities for our grandchildren. In Essays in persuasion (pp. 321–332). Palgrave Macmillan.

Kleinberg, J., Ludwig, J., Mullainathan, S., & Sunstein, C. R. (2020). Algorithms as discrimination detectors. Proceedings of the National Academy of Sciences, 117(48), 30096–30100.

Lane, M., & Saint-Martin, A. (2021). The impact of Artificial Intelligence on the labour market: What do we know so far? In OECD Social, Employment and Migration Working Papers, No. 256. OECD. https://doi.org/10.1787/7c895724-en

Operto, S. (2019). Evaluating public opinion towards robots: A mixed-method approach. Paladyn, Journal of Behavioral Robotics, 10, 286–297. https://doi.org/10.1515/pjbr-2019-0023

Webb, M. (2019). The impact of artificial intelligence on the labor market. Economics of Innovation eJournal. https://doi.org/10.2139/ssrn.3482150

Wrobel, M., & Althoff, J. (2021). Entwicklung der Substituierbarkeitspotenziale auf dem Arbeitsmarkt in Niedersachsen und Bremen von 2013 bis 2019. IAB-Regional 1|2021. Institut für Arbeitsmarkt- und Berufsforschung [Institute for Employment Research, Nuremberg, Germany]. Retrieved from https://doku.iab.de/regional/NSB/2021/regional_nsb_0121.pdf

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Cite this chapter

Engel, U., Dahlhaus, L. (2023). Artificial Intelligence and the Labor Market: Expected Development and Ethical Concerns in the German and European Context. In: Engel, U. (eds) Robots in Care and Everyday Life. SpringerBriefs in Sociology. Springer, Cham. https://doi.org/10.1007/978-3-031-11447-2_2

DOI: https://doi.org/10.1007/978-3-031-11447-2_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-11446-5

Online ISBN: 978-3-031-11447-2

eBook Packages: Social Sciences (R0)