Abstract

As “scientists in the crib,” children learn through curiosity, tirelessly seeking novelty and information as they interact—really, play—with both physical objects and the people around them. This flexible capacity to learn about the world through intrinsically motivated interaction continues throughout life. How would we engineer an artificial, autonomous agent that learns in this way – one that flexibly interacts with its environment, and others within it, in order to learn as humans do? In this chapter, I will first motivate this question by describing important advances in artificial intelligence in the last decade, noting ways in which artificial learning within these methods is and is not like human learning. Next, I will give an overview of recent results in artificial intelligence aimed at replicating curiosity-driven interactive learning. I will close by speculating on how AI that learns in this fashion could be used as fine-grained computational models of human learning.

1 Introduction

If we were to distill the learning that we see in a child’s playroom into a computer program, how would we go about it? We might start by describing its essential properties – the “engineering specifications” of childhood learning. Early childhood learning is highly interactive (Fantz 1964; Gopnik et al. 1999; Begus et al. 2014; Goupil et al. 2016; Twomey and Westermann 2018). Children play, grabbing and manipulating objects, learning about the properties and affordances of their worlds. Their learning is both autonomous and social. They engage in remarkably complex self-play, yet they also learn from demonstration and imitation (Tomasello et al. 1993; Tomasello 2016). Further, their behavior is curiosity-driven, satisfying not only instrumental needs but also intrinsic motivations to understand and control (Kidd et al. 2012; Dweck 2017). Through these activities, they build powerful, general representations of their worlds, including those that give them a sense of intuitive physics (Spelke 1985) and intuitive psychology (Colle et al. 2007; Woodward 2009).

While we know a great deal about childhood learning, our knowledge falls far short of what we would need to engineer this sort of learning in an artificial system. Artificial Intelligence (AI) has advanced dramatically in recent years, yet how it learns differs in many ways from how children learn. Most artificial systems do not learn through this messy, interactive, social process, but rather from carefully curated datasets or from large amounts of experience in simple, limited environments. Most AI learning behaviors are driven by handcrafted motivations.

Yet in recent years, artificial intelligence has grown increasingly inspired by the flexible, robust learning seen in childhood, and developmental psychology and AI have become interwoven through attempts to replicate these learning processes (Smith and Slone 2017). This interweaving is hoped to benefit both fields. Advances in our understanding of human learning should help us build these sorts of artificial systems. In turn, the enterprise of trying to build curious, interactively learning artificial systems helps refine the questions we ask about our own cognition. Further, if this engineering endeavor succeeds, AI systems may serve as precise computational models of our learning.

In this chapter, I endeavor to describe recent works in the artificial intelligence of curiosity and interactive learning, as well as potential payoffs, to a broad audience within education and the learning sciences. I will begin by outlining two exemplar AI successes of the early 2010s: what they accomplished, ways that they reflect human learning, and ways in which they differ. I will then describe several recent results aimed at closing this gap. Lastly, I will speculate on how these efforts might benefit psychology, education, and the learning sciences, with a focus on their potential for modeling our learning in early childhood.

2 AI Successes of the Past Decade

In what follows, I will describe in broad strokes two large steps forward that AI has taken within the last decade, focusing on (1) deep learning for computer vision and (2) deep reinforcement learning applied to single-player and competitive games. I will describe what it means for these artificial systems to succeed, note ways in which they resemble human learning, and highlight several differences between the ways these systems learn and the ways humans learn. To be very clear, this is not a representative survey of important AI advances of the past decade. However, it should help motivate more recent work in curiosity-driven, interactive artificial learning.

If you were shown a picture of a set dinner table, you could likely name just about every object (cups, bowls, plates, napkins, ...) and describe relations between various objects (“the plates are on top of the table,” “the chair is pulled under the table”). Likewise, if you were shown a video of a group of your friends, you could identify each of them immediately. You could make judgments about their internal states (“Kate is happy”), name a wide range of activities they are performing (“Rachel is walking,” “Pedro is waving”), and even infer goals and intentions (“Ruth is trying to get the others to walk over there.”). Computer vision is the domain of engineering artificial systems that can make these sorts of high-level judgments from image and video data. The capabilities of computer vision systems have steadily increased over the past several decades, and the so-called deep learning revolution has brought about dramatic improvements since the early 2010s.

How do we judge improvement? After all, these visual capacities could be interpreted and tested in different ways – assessing intelligence is inherently subjective. The field of AI grapples with this constantly, and much effort is spent on finding good benchmarks with which we can measure success. Benchmarks typically consist of a dataset or a virtual environment upon which an artificial system is supposed to perform a task, as well as a set of performance metrics with which to judge success at this task. In a typical cycle of AI research, a group of researchers propose a new benchmark (usually meant to reflect some challenging cognitive ability that humans possess), they and others show whether or not existing methods perform well on this benchmark (useful benchmarks are those which existing or obvious approaches fail on), and the community engineers new systems aimed at high performance (while often modifying the data/environment, instructions for use, and performance metrics along the way).

To give a concrete example benchmark for computer vision, we will examine ImageNet (Deng et al. 2009; Russakovsky et al. 2015), perhaps the prototypical success story coming out of the deep learning revolution. ImageNet contains millions of images of objects, each one labeled as one of a thousand categories (“centipede,” “street sign,” “balloon”). The task here is to build an artificial system which takes, as input, an image, and outputs the correct object category name. The data are divided into a training set (containing many images of each of the 1000 categories), with which an artificial system is meant to “learn” the pattern between the images and labels, and a separate test set (consisting of new images) upon which the trained artificial system is evaluated. It turns out that one can create an artificial system that solves this task with high accuracy (Krizhevsky et al. 2012) – in some cases, perhaps superhuman accuracy (He et al. 2016; Russakovsky et al. 2015).Footnote 1

In what ways does this resemble human learning?Footnote 2 First, we do describe the model as learning from training data and being tested on test data. The model consists of a large number (usually in such applications, many millions) of parameters, and these parameters define a mathematical function that takes, as input, an image, and outputs a probability for each object name. For each example image and category label, we can associate a loss that measures how bad the model’s output currently is. If the model thinks the correct object name is unlikely, the loss is high, whereas if it thinks it is likely, the loss is low. At the beginning of training, the parameters are assigned random values (there is some art to choosing good initializations), and they are then optimized to produce low loss on the training data. At the end of training, the trained model should be able to make reasonable predictions on the training data.Footnote 3 Performance is then measured on test data by holding fixed the parameters learned during training and checking whether the trained model generalizes to the new data – intuitively, this ensures that the model gets no credit for simply “memorizing” the training data.
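As a minimal sketch of this train-then-test procedure, the code below fits a linear softmax classifier by stochastic gradient descent on the cross-entropy loss, one common way of making "the loss is high when the correct name is unlikely" precise. Everything here is an illustrative assumption rather than an ImageNet model: the "images" are hypothetical 2-D points clustered by category, the model is linear rather than deep, and the learning rate and epoch count are arbitrary.

```python
import math
import random

def softmax(scores):
    # Subtract the largest score before exponentiating, for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_probs(weights, biases, x):
    # One linear score per category, converted into a probability per category.
    scores = [sum(wi * xi for wi, xi in zip(w, x)) + b for w, b in zip(weights, biases)]
    return softmax(scores)

def mean_loss(weights, biases, data):
    # Cross-entropy: large when the correct category gets a low probability.
    return sum(-math.log(predict_probs(weights, biases, x)[y]) for x, y in data) / len(data)

def accuracy(weights, biases, data):
    hits = 0
    for x, y in data:
        probs = predict_probs(weights, biases, x)
        # The predicted category is the one with the highest probability.
        hits += max(range(len(probs)), key=probs.__getitem__) == y
    return hits / len(data)

def train(data, num_classes, dim, lr=0.1, epochs=30, seed=0):
    rng = random.Random(seed)
    # Parameters start at small random values.
    weights = [[rng.gauss(0.0, 0.01) for _ in range(dim)] for _ in range(num_classes)]
    biases = [0.0] * num_classes
    for _ in range(epochs):
        for x, y in data:
            probs = predict_probs(weights, biases, x)
            for k in range(num_classes):
                # d(loss)/d(score_k) is p_k - 1 for the correct category, p_k otherwise.
                grad = probs[k] - (1.0 if k == y else 0.0)
                for i in range(dim):
                    weights[k][i] -= lr * grad * x[i]
                biases[k] -= lr * grad
    return weights, biases

def make_data(n, seed):
    # Hypothetical toy data: 2-D points scattered around one center per category.
    rng = random.Random(seed)
    centers = [(2.0, 0.0), (-2.0, 0.0), (0.0, 2.0)]
    data = []
    for _ in range(n):
        y = rng.randrange(len(centers))
        cx, cy = centers[y]
        data.append(((cx + rng.gauss(0.0, 0.5), cy + rng.gauss(0.0, 0.5)), y))
    return data

train_set = make_data(300, seed=1)
test_set = make_data(100, seed=2)  # held-out points, never used for training
w, b = train(train_set, num_classes=3, dim=2)
print("train loss:", mean_loss(w, b, train_set))
print("test accuracy:", accuracy(w, b, test_set))
```

On data this easy, held-out accuracy should be high; the point is the structure rather than the numbers: random initialization, loss minimized on the training set only, and evaluation on new data with the parameters frozen.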

This artificial learning process has a number of properties that bear some analogy to human learning. For example, we only really expect a trained model to perform well on data that looks sufficiently like the training data. If a model has only been trained on photographs taken during the day, we do not expect it to work well at night,Footnote 4 and if the training data has few examples of a particular object, the model will likely struggle to recognize new instances of that object. The model can get confused by correlates: for example, if all German shepherds are depicted in the grass, and all Dobermans are in the snow, then a German shepherd in the snow could easily be mislabeled. And a model can “overfit” to training data: it is possible to produce models that perform very well during training but very poorly on new examples.Footnote 5
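Overfitting can be illustrated with a deliberately simple model swapped in for a deep network: a k-nearest-neighbor classifier on hypothetical 1-D data whose labels are 30% noise. With k = 1 the model effectively memorizes the training set, so it is perfect on training data but noticeably worse on new data; voting over more neighbors (k = 15) typically narrows that gap. The data, noise rate, and values of k are all illustrative assumptions.

```python
import random

def make_data(n, seed, flip=0.3):
    # Hypothetical toy task: the true label is 1 when x > 0, but 30% of labels are flipped.
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        y = int(x > 0)
        if rng.random() < flip:
            y = 1 - y
        data.append((x, y))
    return data

def knn_predict(train, x, k):
    # Majority vote among the k training points closest to x (k odd, so no ties).
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    return int(sum(y for _, y in nearest) * 2 > k)

def accuracy(train, data, k):
    return sum(knn_predict(train, x, k) == y for x, y in data) / len(data)

train_set = make_data(200, seed=0)
test_set = make_data(200, seed=1)
for k in (1, 15):
    print(f"k={k}: train {accuracy(train_set, train_set, k):.2f}, "
          f"test {accuracy(train_set, test_set, k):.2f}")
```

With k = 1, every training point is its own nearest neighbor, so training accuracy is exactly 1.0 no matter how noisy the labels are; that perfect score says nothing about performance on new examples.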

Strikingly, this analogy extends to the neural level. It turns out that such trained systems yield the best-known predictive models of neural activity in the primate ventral visual system (Yamins et al. 2014). This represents a dramatic full-circle success story of the interplay between the study of human cognition and AI: these models were inspired by our ventral visual stream, and a model trained to perform well at ImageNet, a challenging task we are good at, yields a useful computational model of our biology. There is a sense in which training a model on ImageNet yields a general visual representation. These artificial systems consist of a sequence of layers of “neurons” that feed into each other. The later layers provide a representation useful for predicting object names and, it turns out, also useful for performing many other visual tasks. We say that these visual representations are general in that they support transfer learning – they can be used to learn a new, related task with a limited amount of data.

In what ways is this not like human learning? While we can point to countless discrepancies, let me point out two motivating differences. First, this success is one of strong supervision. The ImageNet task provides a prime example of supervised learning: our model is attempting to learn to associate an output (the object name) to each input (the image). In particular, there is a sense in which this supervision is particularly strong: in order to provide the model with these data, humans must carefully curate a labeled dataset. Contrast this with human learning: while humans do sometimes learn this in a similarly supervised way, a great deal of human learning has little explicit, cleanly labeled supervision from others (e.g., a child learning how to manipulate toys), and when there are labels, the label-learning process is much less carefully curated (e.g., first language learning). Second, this artificial learning is passive, not interactive. The dataset it uses to learn is determined ahead of time. The system need not make decisions about what to do in order to learn. Rather, it counts on humans to curate a dataset that it can fit to.

Our second exemplar success, that of reinforcement learning applied to games, addresses some of these limitations, but has its own critical issues. Our example benchmark here is Atari (the “Arcade Learning Environment” (Bellemare et al. 2013)), which consists of a suite of games from the Atari video game console. The objective in each game is to maximize the score.

The framework in which we think about this taskFootnote 6 consists of a back-and-forth process between an environment and an agent which can act within it (Sutton and Barto 2018). At each timestep, the environment provides an observation (e.g., the current game image, or some more explicit state such as where all of the relevant objects are) and reward (e.g., additional score) to the agent, which can then choose from one of a set of actions (e.g., up, down, left, right). Execution of this action leads to the next observation. The goal of a reinforcement learning algorithm is to come up with an action-choosing decision mechanism (called the policy) for the agent that maximizes reward. There are many deep reinforcement learning methods for this – these treat the agent’s experience (observations, actions, and rewards) as training data, upon which a model is optimized.
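The agent-environment loop can be written down in a few lines. This sketch assumes a hypothetical five-cell corridor environment with a reward only at the right end; the `reset`/`step` method names are illustrative (loosely echoing common reinforcement learning toolkits), not the Arcade Learning Environment's own interface. The recorded `(observation, action, reward)` tuples are the kind of experience a reinforcement learning method would treat as training data.

```python
import random

class Corridor:
    """Hypothetical toy environment: positions 0..length-1, with a reward at the right end."""

    def __init__(self, length=5):
        self.length = length
        self.pos = 0

    def reset(self):
        self.pos = 0
        return self.pos  # the observation is simply the agent's position

    def step(self, action):
        # action is -1 (left) or +1 (right); walls keep the agent inside the corridor.
        self.pos = min(max(self.pos + action, 0), self.length - 1)
        done = self.pos == self.length - 1
        reward = 1.0 if done else 0.0
        return self.pos, reward, done

def run_episode(env, policy, max_steps=100):
    """Alternate observation -> action -> (next observation, reward), recording experience."""
    experience = []
    obs = env.reset()
    for _ in range(max_steps):
        action = policy(obs)
        next_obs, reward, done = env.step(action)
        experience.append((obs, action, reward))
        obs = next_obs
        if done:
            break
    return experience

rng = random.Random(0)

def random_policy(obs):
    return rng.choice((-1, +1))

def always_right(obs):
    return +1

print(sum(r for _, _, r in run_episode(Corridor(), always_right)))  # 1.0, after 4 steps
```

A reinforcement learning algorithm's job is to replace a fixed policy such as `random_policy` with one that, learned from this experience, collects as much reward as possible.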

In what ways do these artificial reinforcement learners, trained on Atari, reflect human learning?Footnote 7 It turns out that one can train artificial reinforcement learners whose performance is comparable to that of humans (Mnih et al. 2015), better in some ways and worse in others. Further, unlike in our computer vision example, learning happens through an interactive process. In reinforcement learning, the agent gathers experience by interacting with its environment. Hence, in order to maximize reward, the agent must explore sufficiently to get a sense of how its actions affect the environment and what leads to reward, so that it can then seek that reward. As a result, interesting behaviors arise in the agent’s learning process. At first, agent behavior tends to appear random, and as it discovers sources of reward, its behavior looks more regular and deliberate. These “learning trajectories” are often quite interpretable and seem almost human in their successive improvements.

In what ways are these Atari-playing reinforcement learners different from human learners? Again, the differences are manifold, but here are some motivating ones. First, as noted, the performance obtained is superhuman in some ways but lags in others. Artificial systems trained specifically on this task can learn to react very quickly and efficiently, leading to scores on some games that simply dwarf those of humans. On the other hand, they lag behind on several games, for instance, games with infrequent rewards.

These systems, while not falling explicitly into the category of supervised learning, are in a sense very strongly supervised. In Atari and related environments, for all except the most challenging tasks, the agent gets regular, explicit feedback in the form of reward. For example, the agent gets positive feedback for collecting a coin or breaking a block, which leads it on its way towards an end goal (e.g., finishing a level). Contrast this with many human behaviors: our explicit, external rewards are often much less frequent – even in everyday tasks such as preparing food, we must take several reward-free steps (assembling the ingredients, putting them together) before we obtain something clearly rewarding. As we will describe in more detail shortly, standard reinforcement learning techniques can fail dramatically when reward functions are not engineered just so.

Further, these high-performance systems require a great deal of experience within the training environment—sometimes the equivalent of a human playing for many years—in order to obtain performance comparable to a casual human player. This is not to say that we should expect artificial systems, trained only on these games, to reach human-level performance in the time a human takes to learn them. A reinforcement learning algorithm started de novo is far from a human trying a game for the first time. Humans can recruit representations gained from experiences throughout their lives. As a result, they likely have strong guesses about how their actions affect the environment and what leads to reward — for example, how a body affects its surroundings, and that the gold coins probably mean reward.

This points to an important difference in what we are asking artificial systems to do when we train them solely on Atari games. Because their experience is confined to the game, artificial learners are not asked to learn general-purpose representations about the world and then recruit those in order to quickly become proficient at the game. While models need to “know” something about the physical dynamics of game environments (e.g., if I move forward now, I fall off this cliff), this knowledge is very specific to the task. We, on the other hand, display a remarkable ability to recruit flexible, general representations in order to do well in new environments. For instance, if you were to enter a new, fully stocked apartment for the first time, you could, after perhaps a few minutes of looking around, make a cup of coffee. A flexible coffee maker is, sadly, beyond the capacities of AI to this day.

3 Artificial Curiosity and Interactive Learning

Thus far, I have presented two exemplar AI successes from the early 2010s: deep learning for computer vision, and reinforcement learning as applied to Atari games. I emphasized both the ways in which these reflect aspects of human learning and the ways in which this artificial learning falls short. At a high level, there are two critical limitations:

- Reliance on supervision – for computer vision, dependence on human-provided image category labels, and for reinforcement learning, dependence on carefully crafted reward functions
- The extent to which learning is not interactive – for computer vision, the AI system learns passively on a curated dataset, and for reinforcement learning, the agent interacts but does not learn general representations useful for many settings

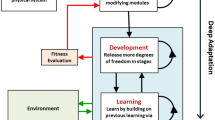

The above limitations argue for the development of artificial systems that (1) learn flexible representations that can be recruited for a wide range of tasks, (2) do so through interaction with their environments and others within them, and (3) do these things not through explicit, strong supervision signals, but rather in a more self-supervised manner, using more generic, flexible motivations. Here, I describe these desired traits in further detail; following that, I outline several example successes along these lines.

Robust, flexible representation learning. The AI system learns general-purpose representations that are useful for accomplishing a wide range of tasks. To make this more concrete, let us look at several examples.

1. Sensory representations: From the raw inputs of our visual system, humans must be able to perceive and identify objects, understand how objects might be used, and so on. Human visual systems process raw visual information in order to make these sorts of judgments.
2. Physical representations: As described in the introduction, humans have models of intuitive physics (Spelke 1985) that allow them to anticipate the world around them and perhaps facilitate planning. Some general-purpose ability to understand how the physical world evolves, in particular in response to actions, seems critical.
3. Representations of others: Humans possess intuitive psychology (Woodward 2009; Colle et al. 2007), allowing them to assess the goals, affect, and internal states of others. We possess theory of mind and a flexible ability to mentalize about others.

Learning through interaction. The AI system acts upon its environment, and it observes the result of this. How the AI system behaves shapes what it learns.

Learning through self-supervision, with generic, flexible motivations. The AI system should not have access to explicit supervision signals, such as category labels (except when these are provided through interaction with the environment) or handcrafted reward signals. Instead, its learning depends only on what it observes through interaction. Further, its behavior should be shaped by generic, intrinsic motivations. These may include instrumental need satisfaction (for a human, food, water, warmth, etc.) and more generic motivations: information- or novelty-seeking (“curiosity”), control of the environment, and social belonging, to name a few. As in the theory of Dweck (2017), such agents should be able to satisfy certain fundamental needs, and to do so, they must be able to choose and pursue a wide range of intermediate goals.

4 Examples of Artificial Curiosity and Interactive Learning

Now that I have described desired properties for more human-like artificial learning, I will dive into several works in this direction. I will begin with curiosity used as an exploratory aid, move to representation building for planning, and end with developmentally inspired curiosity-driven learning. This field has been incredibly active over the past several years, supported by decades of critical foundational work, and what is concretely described here represents only a sliver of these efforts. Further, it should be emphasized that the efforts described here build on the successes described in the previous section and are not the first attempts to bring curiosity to AI. For a survey of earlier attempts, see Schmidhuber (2010), and see Oudeyer et al. (2007) for a particularly relevant developmentally inspired work.

Imagine an exceedingly simple experiment: you are in a room with a button, and that button opens a door to another room, in which, at the other end, sits a cookie. After you eat the cookie, the environment resets, and you have the opportunity to start again and find the cookie. If you were in this environment but were not explicitly told about the cookie, you would probably find it quite quickly: you wonder what the button does, you find that it opens the door, you look around the other room, and, upon seeing the cookie, you recognize it as something you would like to eat. After the reset, if you would like to eat another cookie, you can go right to it, easily.

Yet imagine being in this environment with limited background knowledge (no knowledge of what buttons do, or how cookies taste) and with no sense of curiosity about the unknown. Your exploratory behavior is then completely unmotivated, and lacking any particular motivation, it might look essentially random. Unless you manage, by chance, to push the button, walk through the door, go to the other end of the room, and put the cookie in your mouth, you get absolutely no positive reinforcement for this chain of behaviors, and you never discover that eating the cookie is a good thing to do. As a result, it takes you an extraordinarily long time to begin to eat cookies.

This is an illustration of the sparse reward problem, an issue that plagues the standard reinforcement learning techniques used to solve Atari. Such systems typically need many more handcrafted rewards (e.g., for pushing buttons, going through doors, moving towards cookies) in order to reach high performance efficiently.
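The sparse reward problem can be quantified with a toy model of the cookie room. This is a sketch under loud assumptions: the chain of required behaviors is abstracted into `chain_length` stages, each stage has one correct action out of four, and any wrong action sends the agent back to the start, a crude stand-in for wandering off. We count how many random actions pass before the first reward.

```python
import random

def steps_to_first_cookie(chain_length, num_actions=4, rng=None, max_steps=1_000_000):
    """Count the steps a uniformly random agent takes before its first (and only) reward.

    Hypothetical model of the button-door-cookie room: the task is a chain of
    `chain_length` required actions (push button, go through door, cross room,
    eat cookie). At each stage exactly one of `num_actions` actions makes progress;
    any other action resets the agent to the start (an assumption, not the text's).
    """
    if rng is None:
        rng = random.Random()
    stage = 0
    for step in range(1, max_steps + 1):
        # To a uniformly random agent it does not matter which action is the right
        # one, so we simply call it action 0.
        if rng.randrange(num_actions) == 0:
            stage += 1
            if stage == chain_length:
                return step  # the cookie: the first nonzero reward the agent ever sees
        else:
            stage = 0  # no reward, and no hint that it was getting close
    return max_steps

rng = random.Random(0)
for k in (1, 2, 3, 4):
    average = sum(steps_to_first_cookie(k, rng=rng) for _ in range(1000)) / 1000
    print(f"chain of {k} steps: about {average:.0f} random actions before the first reward")
```

Under these assumptions the averages should land near 4, 20, 84, and 340: the expected waiting time for k consecutive successes with per-step probability p = 1/4 is (1 - p^k) / ((1 - p) p^k), which grows roughly like 4^k. Every one of those steps gives the learner a reward of zero, and hence nothing to learn from.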

But what if we give the AI intrinsic motivation, or curiosity? The agent should be rewarded not only by the cookie, but also by finding situations that are somehow interesting. This leads the agent to try new things: to press the button, to go through the door, to explore the room behind the door. How exactly this should be done is a matter of active research, with many proposed techniques. Several techniques (Pathak et al. 2017; Burda et al. 2018a; Pathak et al. 2019) involve a world model (Schmidhuber 2010; Ha and Schmidhuber 2018), a predictive model of the environment (think of this as a potential instantiation of a representation – an example would be a forward model, which predicts what happens if the agent chooses a particular action). The world model is self-supervised: it learns from experience. The agent’s intrinsic motivation, then, relates to how the world model responds to new experience. For instance, it might be rewarded by experience it finds difficult to model,Footnote 8 or experience that leads it to make learning progress.Footnote 9
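Here is a minimal sketch of the two kinds of intrinsic reward mentioned above, assuming a hypothetical tabular forward model that predicts a single number (the next state) for each state-action pair and nudges that prediction toward what it observes. The prediction-error reward is the model's error on a transition; the learning-progress reward is, in this simplification, how much one update reduced that error. Neither is the specific method of the papers cited; the class, function names, and learning rate are illustrative.

```python
from collections import defaultdict

class TabularForwardModel:
    """Hypothetical minimal world model: one predicted (scalar) next state per (state, action)."""

    def __init__(self, lr=0.5):
        self.lr = lr
        self.pred = defaultdict(float)  # never-seen transitions are predicted as 0.0

    def error(self, state, action, next_state):
        return (self.pred[(state, action)] - next_state) ** 2

    def update(self, state, action, next_state):
        # Self-supervised: the target is simply what the agent went on to observe.
        self.pred[(state, action)] += self.lr * (next_state - self.pred[(state, action)])

def curiosity_rewards(model, state, action, next_state):
    """Return (prediction-error reward, learning-progress reward) for one transition."""
    before = model.error(state, action, next_state)
    model.update(state, action, next_state)
    after = model.error(state, action, next_state)
    return before, before - after

model = TabularForwardModel()
for _ in range(3):
    print(curiosity_rewards(model, "room 1", "press button", 1.0))
# (1.0, 0.75), then (0.25, 0.1875), then (0.0625, 0.046875)
```

Repeating the same transition makes both rewards shrink, so an agent maximizing either one is pushed toward transitions its world model has not yet mastered, such as what lies behind the door.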

Aside from encouraging useful exploratory behaviors, world models are, in theory, useful for planning. If the agent knows what states of the environment provide reward, and it knows, given the current state of the environment and an action it chooses, how the state changes, it can “imagine” the results of successive action choices and choose ones that lead to reward. This is the essential idea behind model-based reinforcement learning: the agent somehow uses a world model to plan.
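The planning idea can be sketched as exhaustive search through imagined futures. In this illustration, which is an assumption rather than any particular published method, the "world model" is an exact function for a hypothetical five-cell corridor (standing in for a learned model), the reward is 1 whenever the imagined state is the goal cell, and the agent scores every action sequence of a fixed length before committing to the first action of the best one.

```python
from itertools import product

def plan(world_model, reward_fn, state, actions, horizon):
    """Imagine every action sequence of length `horizon`; return the first action of the best one."""
    best_return, best_first = float("-inf"), None
    for sequence in product(actions, repeat=horizon):
        s, total = state, 0.0
        for a in sequence:
            s = world_model(s, a)  # imagined transition; nothing is executed
            total += reward_fn(s)
        if total > best_return:
            best_return, best_first = total, sequence[0]
    return best_first

# Hypothetical corridor with positions 0..4; this exact model stands in for a learned one.
def corridor_model(s, a):
    return min(max(s + a, 0), 4)

def goal_reward(s):
    return 1.0 if s == 4 else 0.0

print(plan(corridor_model, goal_reward, state=0, actions=(-1, +1), horizon=5))  # prints 1
```

Exhaustive enumeration grows exponentially with the horizon, so practical model-based methods replace it with sampling or tree search. The sketch also makes the failure mode plain: if `world_model` were wrong, the agent would confidently pursue rewards that exist only in its imagination.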

For years, researchers struggled to turn this intuition into a high-performance technique. The first techniques successful on Atari, for instance, were model-free. In deep Q-learning (Mnih et al. 2015), the agent learns a function Q that takes as input the environment’s current state s and a proposed action a. Q(s, a) is then meant to estimate the total of all future rewardsFootnote 10 if the agent chooses action a in state s and then follows a policy for the rest of its actions. Q, if learned properly, tells the agent how to act – pick a so that Q is biggest! Note that this does not explicitly require a world model; rather, its predictions are entirely in terms of rewards. Intuitively, this seems limited – if the agent is given a different task with a different reward, it is unclear how to transfer that knowledge. Further, the method at least seems inefficient: much information appears to be discarded when predictions are all in terms of rewards.
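To make Q concrete, here is tabular Q-learning, the table-based ancestor of deep Q-learning (which replaces the table with a neural network). The environment is again a hypothetical five-cell corridor, and the discount factor `gamma`, learning rate `alpha`, and exploration rate `epsilon` are illustrative assumptions; discounting is one common way of defining the total of future rewards. Note that nothing here predicts states: every estimate is expressed purely in terms of reward.

```python
import random

def q_learning(length=5, episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical corridor with a reward of 1 at the right end."""
    rng = random.Random(seed)
    actions = (-1, +1)
    goal = length - 1
    q = {(s, a): 0.0 for s in range(length) for a in actions}

    def greedy(s):
        best = max(q[(s, a)] for a in actions)
        # Break ties randomly so the untrained agent does not get stuck.
        return rng.choice([a for a in actions if q[(s, a)] == best])

    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap on episode length
            # Epsilon-greedy: mostly exploit current estimates, sometimes explore.
            a = rng.choice(actions) if rng.random() < epsilon else greedy(s)
            s_next = min(max(s + a, 0), goal)
            done = s_next == goal
            r = 1.0 if done else 0.0
            # Target: immediate reward plus the discounted value of the best next action
            # (nothing is bootstrapped from the terminal state).
            target = r if done else r + gamma * max(q[(s_next, b)] for b in actions)
            q[(s, a)] += alpha * (target - q[(s, a)])
            s = s_next
            if done:
                break
    return q

q = q_learning()
# "Pick a so that Q is biggest" in every non-goal cell.
greedy_policy = [max((-1, +1), key=lambda a: q[(s, a)]) for s in range(4)]
print(greedy_policy)  # [1, 1, 1, 1]: always move toward the reward
```

If the reward were moved to the left end, this table would be of no direct use and would have to be relearned, which is the transfer limitation described above.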

Model-based reinforcement learning seems an obvious alternative. World models capture information about the environment independently of reward, and if the agent’s task changes, they can be repurposed in a straightforward way. Yet model-based approaches lagged behind. One intuitive difficulty is simple: if the agent’s predictive model is wrong, planning can go horribly wrong. Only recently have model-based approaches been shown to be competitive with, and in some ways superior to, model-free approaches (Hafner et al. 2019; Schrittwieser et al. 2020).

With this has come intriguing new advances that have brought us closer to the framework of the previous section. For example, in Sekar et al. (2020), an agent learns a world model independently of any objective – it is simply intrinsically motivated to improve its world model. It then can use this world model to accomplish a variety of tasks. This is tested in the DeepMind Control Suite environment (Tassa et al. 2018), in which an agent learns how to control its body, and is tested on its ability to walk forward, backward, and perform other physical feats. They demonstrate the agent’s ability to explore, build a world model, and then quickly perform these physical tasks when asked to do so. It is, at least in a sense, able to learn a general representation that it can recruit for performing a variety of specific tasks.

This sort of curiosity-based learning, then, moves us a step closer to the sort of learning we see in human development, so it is natural to ask: what does artificial curiosity achieve when placed in developmentally inspired environments? In the remainder of this section, I will describe two efforts in this direction: the first, in the domain of learning sensory and physical representations, the second, in the domain of representations of others.

In our first work (Haber et al. 2018), we designed a simple “playroom”: a 3D virtual environment in which an agent can move about a room and interact with a set of blocks (“toys” – see Fig. 1). For simplicity, the agent lacks a complex embodiment and instead can simply choose to move forward, backward, or turn. It has a limited field of view, and if it has a toy in view and that toy is sufficiently close, it can apply force and torque to the object.

Fig. 1 The “toys” used in the 3D virtual environment of Haber et al. (2018)

The environment provides no extrinsic reward. We sought to understand if intrinsic motivation enables the agent to develop “play” behaviors, and if, in doing so, it develops useful sensory and physical representations. To build these representations, the agent trains a simple inverse dynamics world model: from a sequence of raw images, could it tell what action was taken? We could then test the capacity of these representations by evaluating their usefulness in performing related visual tasks.Footnote 11
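The self-supervised character of an inverse dynamics objective can be shown with a hypothetical tabular stand-in for the image-based model described here: the agent tries every action in every cell of a 1-D world and records which action produced each observed change. The training labels are the agent's own actions, which it always knows, so no human annotation is required. Positions, action names, and the counting model are illustrative assumptions.

```python
from collections import Counter, defaultdict

class InverseDynamicsModel:
    """Hypothetical tabular inverse model: which action most often turned obs into next_obs?"""

    def __init__(self):
        self.counts = defaultdict(Counter)

    def update(self, obs, action, next_obs):
        # The training label is the agent's own action, so it comes for free.
        self.counts[(obs, next_obs)][action] += 1

    def predict(self, obs, next_obs):
        seen = self.counts.get((obs, next_obs))
        return seen.most_common(1)[0][0] if seen else None

# Hypothetical 1-D world: positions 0..9; actions move left, stay put, or move right.
moves = {"left": -1, "stay": 0, "right": +1}
model = InverseDynamicsModel()
for pos in range(10):
    for action, delta in moves.items():
        next_pos = min(max(pos + delta, 0), 9)  # walls clip the movement
        model.update(pos, action, next_pos)

print(model.predict(3, 4), model.predict(5, 4))  # right left
```

At a wall, "left" and "stay" (or "right" and "stay") produce the same observation, so even a perfect inverse model cannot tell them apart there; what such a model can learn is bounded by what the observations reveal.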

Without any intrinsic motivation, the agent interacts in an essentially random way, and the agent interacts with toys in less than 1% of its experience. As a result, while its world model becomes good at understanding the motion of its body, it takes a very long time to understand object interaction, and its visual representations are not useful for tasks related to these objects.

Yet if the agent is rewarded for finding examples that are difficult for its world model, complex behaviors arise. In a room with one toy, we found that the agent moves about its environment somewhat randomly before suddenly taking an interest in objects: it consistently approaches and interacts with its toys. Correspondingly, its world model first becomes proficient at understanding actions that involve only its body, and then, after gaining more experience with toys, its understanding of toy dynamics improves. Interestingly, if the environment contains two toys, the agent starts in much the same way, but after a period of time, it engages in a qualitatively different behavior: it gathers the toys together and interacts with them simultaneously. We found that the more sophisticated the agent’s behavior, the better its physical and sensory representations performed.

We next sought to extend this work on curiosity-driven learning from the physical to the social (Kim et al. 2020). We designed a simple environment meant to reflect aspects of social experience very early in life. Here the “baby” agent is surrounded by a variety of stimuli and can only “interact” with its environment by deciding what to look at.

The stimuli we are surrounded with early in life—and throughout life—are wildly diverse. Some stimuli are static, like blocks: they only really do much when we physically interact with them. Others are dynamic, but very regular: ceiling fans, mobiles, car wheels (and, really, quite a lot of audio stimuli). At the opposite extreme, some stimuli are random, or noisy: they exhibit dynamics that are immensely challenging, if not impossible, to fully predict. The fluttering of leaves, the babble of a far-off crowd, the shimmering of light reflected off water: while we pay little attention to most of these most of the time, the noisy, random, and confusing surround us! Yet amid this confusion is a particularly interesting class: animate stimuli. They exhibit incredibly complex behaviors that are very much unlike those of static or dynamic-but-regular inanimate objects (they exhibit self-starting motion, for instance, and are impossible to fully predict), yet they are in some ways very regular — they act according to goals, affect, beliefs, and personality.

How do we design agents that can decide what to look at, in order to learn about these sorts of surroundings? What if we want this agent to learn as much as possible, as quickly as possible? Further, how do people make these sorts of visual attention decisions, when presented with novel stimuli? In answering these questions, we were faced with a problem: all of these different types of stimuli tend to look quite different. This would complicate the design of machines that can learn from all of them, and confound any human subject experiment. We hence designed environments that took these classes of stimuli—static, regular, noise, and animate—and stripped them down to basic informational essentials (Fig. 2). We designed spherical avatars that executed these sorts of behaviors with simple motion patterns. For instance, the regular stimuli simply rolled around in circles, or back and forth in a straight line. The noise stimuli performed a sort of random walk: randomly lurching in one direction, followed by another. We designed a wide range of stimuli meant to be animate. One chases another. One navigates towards a succession of objects. Another plays a sort of “peekaboo” with the viewer: if the viewing agent looks at the stimulus, it darts behind an object, and when the viewing agent looks away, it peeks out again.

Fig. 2. “Social” virtual environment. The 3D virtual environment from Kim et al. (2020). The curious agent (white robot) is centered in a room, surrounded by various colored spheres contained in different quadrants, each with dynamics that correspond to a realistic inanimate or animate behavior (right box). The curious agent can rotate to attend to different behaviors, as shown by the first-person view images at the top. See https://bit.ly/31vg7v1 for videos.

We then experimented with different intrinsic motivation rewards and found that they led to drastically different behaviors. For instance, if an agent is motivated to find difficult examples for its world model, as in the previous study, it becomes fixated on the noise stimuli, which it can never learn to predict precisely (an example of the white noise problem). If an agent is motivated to find easy examples, it spends most of its time on the simplest stimuli. Yet if an agent is motivated to make progress in modeling its world, it finds a balance. Because noise stimuli are impossible to fully predict, the agent stops making progress on them and gets “bored” of them. This frees it to spend more time on the challenging but learnable regularities of animate stimuli. Precisely how progress should be estimated is an intensely challenging problem: it is closely related to computing expected information gain, a key computational challenge in the active learning and optimal experimental design literatures (Cox and Reid 2000; Settles 2009). We tried several methods and found one that exhibits a characteristic “animate attention” bump (see Fig. 3).
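The contrast between these rewards can be illustrated with a deliberately tiny Python sketch. Its assumptions are all hypothetical. Each stimulus gets its own one-parameter “world model” (`TrailingModel`) that predicts the next observation as a single number. “Progress” is estimated by comparing the model's loss with that of a slowly trailing copy of itself (an exponential moving average of its parameter); this is in the spirit of the progress-based rewards in Fig. 3 but is not claimed to be their exact definition. The agent's policy for choosing where to look is omitted. On a learnable stimulus the difficulty reward fades to zero while the progress reward rises and then fades. On white noise the difficulty reward stays high, but the progress reward hovers at or slightly below zero: the “boredom” that lets a progress-driven agent move on.

```python
import numpy as np


class TrailingModel:
    """Hypothetical per-stimulus world model: predicts each observation with one
    learned number. `new` learns quickly; `old` is an exponential moving average
    of `new`, so it lags behind whenever `new` is improving."""

    def __init__(self, lr=0.1, gamma=0.9):
        self.new, self.old = 0.0, 0.0
        self.lr, self.gamma = lr, gamma

    def observe(self, y):
        loss_new = (y - self.new) ** 2
        loss_old = (y - self.old) ** 2
        self.new += self.lr * (y - self.new)  # move the prediction a fraction lr toward y
        self.old = self.gamma * self.old + (1 - self.gamma) * self.new  # slow trailing copy
        return {
            "difficulty": loss_new,            # seek what the model currently gets wrong
            "easy": -loss_new,                 # seek what the model already gets right
            "progress": loss_old - loss_new,   # seek what the model is getting better at
        }


def reward_trace(stimulus, n_steps, seed=0):
    rng = np.random.default_rng(seed)
    model = TrailingModel()
    return [model.observe(stimulus(rng)) for _ in range(n_steps)]


def mean_reward(trace, key, start=0, stop=None):
    return float(np.mean([r[key] for r in trace[start:stop]]))


learnable = reward_trace(lambda rng: 3.0, 2000)       # a fixed pattern the model can learn
noise = reward_trace(lambda rng: rng.normal(), 2000)  # unlearnable white noise

for name, trace in [("learnable", learnable), ("noise", noise)]:
    print(name,
          "difficulty(late)=%.3f" % mean_reward(trace, "difficulty", 1000),
          "progress(early)=%.3f" % mean_reward(trace, "progress", 0, 50),
          "progress(late)=%.3f" % mean_reward(trace, "progress", 1000))
```

A full agent would pair one of these rewards with a policy that attends to whichever stimulus currently promises the most reward. The `gamma` parameter controls how far the trailing copy lags behind, and hence the timescale over which progress is measured.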

Fig. 3. Emergence of animate attention. The bar plot shows the total animate attention: the ratio between the number of time steps an animate stimulus was visible to the curious agent and the number of time steps a noise stimulus was visible. The time series plots in the zoom-in box show the difference between mean attention to the animate external agents and mean attention to the other agents in a 500-step window, with periods of animate preference highlighted in purple. Results are averaged across five runs. γ-Progress and δ-Progress are progress-based intrinsic rewards, Adversarial equates reward with loss, Random chooses actions randomly, RND (random network distillation) is a novelty-based intrinsic reward, and Disagreement rewards based on the variance of predictions among several independently initialized world models.
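The two attention statistics in the caption can be computed from a per-step visibility log. The following is a minimal sketch, assuming a boolean array `visible[t, k]` that records whether stimulus `k` was in the agent's view at step `t`, plus a category label per stimulus. Both names and both functions are hypothetical, and taking the 500-step windows as non-overlapping is an assumption here.

```python
import numpy as np


def animate_attention_ratio(visible, labels):
    """Steps with any animate stimulus in view, divided by steps with any noise stimulus in view."""
    labels = np.asarray(labels)
    animate_steps = visible[:, labels == "animate"].any(axis=1).sum()
    noise_steps = visible[:, labels == "noise"].any(axis=1).sum()
    return animate_steps / max(noise_steps, 1)  # guard against division by zero


def windowed_animate_preference(visible, labels, window=500):
    """Per non-overlapping window: mean attention to animate stimuli minus mean attention to the rest."""
    labels = np.asarray(labels)
    is_animate = labels == "animate"
    diffs = []
    for start in range(0, visible.shape[0] - window + 1, window):
        frac = visible[start:start + window].mean(axis=0)  # fraction of the window each stimulus was in view
        diffs.append(frac[is_animate].mean() - frac[~is_animate].mean())
    return np.array(diffs)


# Toy log: 1000 steps, three stimuli; the agent watches noise, then switches to the animate stimulus.
labels = ["animate", "noise", "static"]
visible = np.zeros((1000, 3), dtype=bool)
visible[:500, 1] = True
visible[500:, 0] = True
ratio = animate_attention_ratio(visible, labels)       # equal time on each, so a ratio of 1.0
diffs = windowed_animate_preference(visible, labels)   # -0.5 in the first window, then 1.0
print(ratio, diffs)
```

Positive window values correspond to the periods of animate preference that the caption describes as highlighted.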

We were able to track not only a variety of different learning behaviors, but also a variety of learning outcomes. The progress-based method that exhibited animate attention was able to learn the learnable (static, regular, animate) behaviors the best, and it was able to learn them fastest (see Note 12). Agents that fixated on noise stimuli, or divided their attention more evenly between all stimuli, lagged behind in learning animate behaviors.

In ongoing work, we seek to understand what sort of intrinsic motivation best corresponds to human behavior. To answer this, we designed a physical version of the above environment. For the stimuli, we used Spheros (Sphero 2021), simple spherical robots with a gyroscopic motor that can be programmed or controlled remotely (see Note 13). We recruited adults and tracked their gaze while they simply viewed these robotic scenes. Ongoing analyses will allow us to compare human attention behavior to artificial attention behavior, giving us a sense of what sorts of motivations humans have in these simple curiosity-driven learning environments.

5 Artificial Interactive Learning as Models of Human Learning

Thus far, we have examined gaps between learning in human development and learning in artificial systems, and we have discussed recent advances in artificial intelligence that are filling aspects of this gap. To be sure, the gap remains incredibly wide, but continuing advances in our understanding of human learning should help us close it. Not only can a fine-grained understanding of learning processes tell us how to engineer new artificial systems, but it can also tell us the right sorts of benchmarks and “specs” we should be engineering for. One of the most important things learning science can teach artificial intelligence is the answer to a simple question: precisely what sorts of learning capacities should we try to engineer? ImageNet came out of this sort of thinking. It represents a difficult task that we know is important and doable for humans, and this combination helped bring about great success in the last decade.

Yet let us turn to an important speculative question: how might this AI enterprise help us better understand human learning? Of course, the enterprise of trying to build artificial systems that learn more like we do is broadly thought to be useful for better understanding how we learn. At the coarsest level, attempting to build these sorts of systems directs our energies towards understanding critical aspects of how humans learn. Engineering refines the questions we ask of cognition. In short, we expect a virtuous cycle of advances between the cognitive and learning sciences and artificial intelligence, and the very act of doing this sort of research should yield better models of human learning.

But what would it mean for AI to actually model human learning? We engineer an agent architecture; placed in an environment, it yields behaviors, representations, and learning. Different agent architectures, exposed to different environments, yield different behaviors, abilities, and representations. This association between agent architecture, environment, behaviors, learning outcomes, and representations becomes useful if it can yield predictive models of corresponding features of human learning. For instance, we might like to understand, in the human realm, what environments and/or behaviors tend to lead to what learning. Or, perhaps more impactfully, we would like to know, given an individual's past environment (perhaps coupled with knowledge of past behavior and/or learning outcomes), what sort of environment should lead to desired learning outcomes.

But how might we get from artificial learning to human learning in this way? Success here seems to hinge on a sort of task-driven modeling hypothesis (Yamins et al. 2014). That is, we must be able to identify human capacities and behaviors such that (1) we are able to come up with architectures that reflect these identified behaviors and capacities sufficiently accurately, and (2) these capacities and behaviors are sufficiently strong constraints that only a limited collection of architectures satisfies them. This allows us to “triangulate” agent architectures that produce predictive models of human behavior. In essence, we hope that we can reduce this modeling problem to an engineering problem: create an artificial system that has the right sorts of capacities and behaviors, and, since not many systems satisfy all of these properties, the result is human-like learning.

Early developmental learning, we hope, will be tractable for this sort of approach. Early developmental learning is critically important for the entire life course, and hence it is reasonable to hypothesize that humans are in a sense optimized to do this very well (though, surely, there is not just one concrete objective, or one “optimal” way of doing this). Hence, it is thought that an “ImageNet of developmental learning” can be found – some benchmark that allows us to refine artificial systems that then are able to model the developmental process in a fine-grained way. To do this, it seems likely that we will need extensive fine-grained data collection of developmental learning environments, behaviors, and learning outcomes.

One particularly exciting aspect of this modeling effort lies in its potential to model not just the typical learner (who, surely, does not truly exist!), but the full diversity of human learners. As a case study of this sort of thinking, consider autism spectrum disorder (ASD). ASD has historically been characterized by differences in high-level social behaviors and skills (Hus and Lord 2014). Yet over the past two decades, an intriguing new picture has emerged. Children with ASD exhibit differences in play behavior as well as sensory sensitivities (Robertson and Baron-Cohen 2017). Further, children with ASD exhibit differences in social attention, observed as early as 2–6 months of age (Jones and Klin 2013; Shic et al. 2014; Moriuchi et al. 2017). In short, evidence strongly supports the claim that the sort of early interactive learning we are attempting to engineer in artificial systems is somehow different in children with ASD relative to the general population. Understanding this difference at a computational level may help us reconceptualize learning differences like these, replace coarse diagnostic criteria with a much finer-grained picture, and develop more empowering learning tools.

Notes

- 1.

The extent to which this is “superhuman” is worth a caveat. Russakovsky et al. (2015) benchmark humans and point out the challenge of doing so. To perform well at ImageNet, a human must become familiar with the 1000 categories – there is a difference between intuitively having a good sense of what is in an image, and being able to select the right category. It takes considerable time to learn how to do this well, and only a limited sample of human “experts” was used for comparison.

- 2.

To keep this discussion simple, I am describing early deep learning for computer vision results (e.g. Krizhevsky et al. (2012)). More recent results certainly add many caveats to these statements.

- 3.

For those of you who are familiar with training statistical models, this simply uses standard statistical modeling techniques, but deep learning models tend to involve far more parameters than a linear regression.

- 4.

One might protest that this is decidedly not like how humans learn: when we are shown an object in sunlight, we can usually recognize it in the dark! But the question of what counts as a fair comparison arises – this might be more akin to the extreme deprivation of never seeing night. Arguments for the unique capacity for humans to generalize should consider the sorts of experience upon which we are training machines.

- 5.

Indeed, much of the art of choosing good model architectures – the particular ways parameters are used – amounts to finding ones that not only fit well to training data but also generalize well to test data.

- 6.

I should emphasize: I am simplifying the formalism here; see Markov Decision Processes, or Partially Observable Markov Decision Processes (Sutton and Barto 2018).

- 7.

Again, to keep this discussion simple, this really applies to early deep reinforcement learning results applied in this domain (e.g., Mnih et al. (2015)). Many nuances apply as we approach more recent work.

- 8.

This equates the interesting with the difficult. This is potentially problematic! If the agent encounters something it cannot model, it can get stuck on it. This is sometimes called the white noise problem (Schmidhuber 2010; Pathak et al. 2019). Considerable attention has been paid to resolving this (Pathak et al. 2017; Burda et al. 2018a, b; Kim et al. 2020).

- 9.

Not all techniques involve world models; e.g., some involve exploration through arbitrary goal-setting (Florensa et al. 2018; Nair et al. 2018; Campero et al. 2020). Perhaps, though, this dichotomy is fairly artificial. If one has a fairly inclusive definition of what “world model” means (e.g., to include a wide range of representation learning techniques), many of these techniques can be lumped under this banner.

- 10.

Really, a discounted sum: the sum over time steps t of γ^t r_t, with a discount factor 0 ≤ γ < 1, which weights rewards farther into the future less. As long as the reward stays bounded, this keeps the sum from being infinite.

- 11.

We used transfer learning: with these visual representations as inputs, we trained simple (linear) models for the positions and names of the objects, as a sort of “visual acuity test.”

- 12.

Of course, we are making subjective choices when deciding how to “test” these agents. We presented them with various situations in which they view the various stimuli and had them predict the future evolution of these stimuli – e.g., we had them “play peekaboo” with the peekaboo agent, and then examined world model predictions. This might be thought of as a sort of dynamical and social acuity test.

- 13.

These are marketed not as research robots but as educational toys.

References

Begus, K., Gliga, T., & Southgate, V. (2014). Infants learn what they want to learn: Responding to infant pointing leads to superior learning. PLoS ONE, 9(10), e108817.

Bellemare, M. G., Naddaf, Y., Veness, J., & Bowling, M. (2013). The Arcade Learning Environment: An evaluation platform for general agents. Journal of Artificial Intelligence Research, 47, 253-279.

Burda, Y., Edwards, H., Storkey, A., & Klimov, O. (2018a). Exploration by random network distillation. arXiv preprint arXiv:1810.12894.

Burda, Y., Edwards, H., Pathak, D., Storkey, A., Darrell, T., & Efros, A. A. (2018b). Large-scale study of curiosity-driven learning. arXiv preprint arXiv:1808.04355.

Campero, A., Raileanu, R., Küttler, H., Tenenbaum, J. B., Rocktäschel, T., & Grefenstette, E. (2020). Learning with AMIGo: Adversarially motivated intrinsic goals. arXiv preprint arXiv:2006.12122.

Colle, L., Baron-Cohen, S., & Hill, J. (2007). Do children with autism have a theory of mind? A non-verbal test of autism vs. specific language impairment. Journal of Autism and Developmental Disorders, 37(4), 716-723.

Cox, D. R., & Reid, N. (2000). The theory of the design of experiments. CRC Press.

Deng, J., Dong, W., Socher, R., Li, L. J., Li, K., & Fei-Fei, L. (2009, June). ImageNet: A large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition (pp. 248-255). IEEE.

Dweck, C. S. (2017). From needs to goals and representations: Foundations for a unified theory of motivation, personality, and development. Psychological Review, 124(6), 689.

Fantz, R. L. (1964). Visual experience in infants: Decreased attention to familiar patterns relative to novel ones. Science, 146(3644), 668-670.

Florensa, C., Held, D., Geng, X., & Abbeel, P. (2018, July). Automatic goal generation for reinforcement learning agents. In International Conference on Machine Learning (pp. 1515-1528). PMLR.

Gopnik, A., Meltzoff, A. N., & Kuhl, P. K. (1999). The scientist in the crib: Minds, brains, and how children learn. William Morrow & Co.

Goupil, L., Romand-Monnier, M., & Kouider, S. (2016). Infants ask for help when they know they don’t know. Proceedings of the National Academy of Sciences, 113(13), 3492-3496.

Ha, D., & Schmidhuber, J. (2018). Recurrent world models facilitate policy evolution. arXiv preprint arXiv:1809.01999.

Haber, N., Mrowca, D., Wang, S., Fei-Fei, L., & Yamins, D. L. (2018, December). Learning to play with intrinsically-motivated, self-aware agents. In Proceedings of the 32nd International Conference on Neural Information Processing Systems (pp. 8398-8409).

Hafner, D., Lillicrap, T., Ba, J., & Norouzi, M. (2019). Dream to control: Learning behaviors by latent imagination. arXiv preprint arXiv:1912.01603.

He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 770-778).

Hus, V., & Lord, C. (2014). The Autism Diagnostic Observation Schedule, Module 4: Revised algorithm and standardized severity scores. Journal of Autism and Developmental Disorders, 44(8), 1996-2012.

Jones, W., & Klin, A. (2013). Attention to eyes is present but in decline in 2–6-month-old infants later diagnosed with autism. Nature, 504(7480), 427-431.

Kidd, C., Piantadosi, S. T., & Aslin, R. N. (2012). The Goldilocks effect: Human infants allocate attention to visual sequences that are neither too simple nor too complex. PLoS ONE, 7(5), e36399.

Kim, K., Sano, M., De Freitas, J., Haber, N., & Yamins, D. (2020, November). Active world model learning with progress curiosity. In International Conference on Machine Learning (pp. 5306-5315). PMLR.

Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). ImageNet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems, 25, 1097-1105.

Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A. A., Veness, J., Bellemare, M. G., et al. (2015). Human-level control through deep reinforcement learning. Nature, 518(7540), 529-533.

Moriuchi, J. M., Klin, A., & Jones, W. (2017). Mechanisms of diminished attention to eyes in autism. American Journal of Psychiatry, 174(1), 26-35.

Nair, A., Pong, V., Dalal, M., Bahl, S., Lin, S., & Levine, S. (2018). Visual reinforcement learning with imagined goals. arXiv preprint arXiv:1807.04742.

Oudeyer, P. Y., Kaplan, F., & Hafner, V. V. (2007). Intrinsic motivation systems for autonomous mental development. IEEE Transactions on Evolutionary Computation, 11(2), 265-286.

Pathak, D., Agrawal, P., Efros, A. A., & Darrell, T. (2017, July). Curiosity-driven exploration by self-supervised prediction. In International Conference on Machine Learning (pp. 2778-2787). PMLR.

Pathak, D., Gandhi, D., & Gupta, A. (2019, May). Self-supervised exploration via disagreement. In International Conference on Machine Learning (pp. 5062-5071). PMLR.

Robertson, C. E., & Baron-Cohen, S. (2017). Sensory perception in autism. Nature Reviews Neuroscience, 18(11), 671-684.

Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., et al. (2015). ImageNet Large Scale Visual Recognition Challenge. International Journal of Computer Vision, 115(3), 211-252.

Schmidhuber, J. (2010). Formal theory of creativity, fun, and intrinsic motivation (1990–2010). IEEE Transactions on Autonomous Mental Development, 2(3), 230-247.

Schrittwieser, J., Antonoglou, I., Hubert, T., Simonyan, K., Sifre, L., Schmitt, S., et al. (2020). Mastering Atari, Go, chess and shogi by planning with a learned model. Nature, 588(7839), 604-609.

Sekar, R., Rybkin, O., Daniilidis, K., Abbeel, P., Hafner, D., & Pathak, D. (2020, November). Planning to explore via self-supervised world models. In International Conference on Machine Learning (pp. 8583-8592). PMLR.

Settles, B. (2009). Active learning literature survey.

Shic, F., Macari, S., & Chawarska, K. (2014). Speech disturbs face scanning in 6-month-old infants who develop autism spectrum disorder. Biological Psychiatry, 75(3), 231-237.

Smith, L. B., & Slone, L. K. (2017). A developmental approach to machine learning? Frontiers in Psychology, 8, 2124.

Spelke, E. S. (1985). Object permanence in five-month-old infants. In Cognition.

Sphero. (2021). https://sphero.com/. Accessed: 2021-10-10.

Sutton, R. S., & Barto, A. G. (2018). Reinforcement learning: An introduction. MIT Press.

Tassa, Y., Doron, Y., Muldal, A., Erez, T., Li, Y., Casas, D. D. L., et al. (2018). DeepMind Control Suite. arXiv preprint arXiv:1801.00690.

Tomasello, M. (2016). The ontogeny of cultural learning. Current Opinion in Psychology, 8, 1-4.

Tomasello, M., Kruger, A. C., & Ratner, H. H. (1993). Cultural learning. Behavioral and Brain Sciences, 16(3), 495-511.

Twomey, K. E., & Westermann, G. (2018). Curiosity-based learning in infants: A neurocomputational approach. Developmental Science, 21(4), e12629.

Woodward, A. L. (2009). Infants' grasp of others' intentions. Current Directions in Psychological Science, 18(1), 53-57.

Yamins, D. L., Hong, H., Cadieu, C. F., Solomon, E. A., Seibert, D., & DiCarlo, J. J. (2014). Performance-optimized hierarchical models predict neural responses in higher visual cortex. Proceedings of the National Academy of Sciences, 111(23), 8619-8624.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Cite this chapter

Haber, N. (2023). Curiosity and Interactive Learning in Artificial Systems. In: Niemi, H., Pea, R.D., Lu, Y. (eds) AI in Learning: Designing the Future. Springer, Cham. https://doi.org/10.1007/978-3-031-09687-7_3

DOI: https://doi.org/10.1007/978-3-031-09687-7_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-09686-0

Online ISBN: 978-3-031-09687-7

eBook Packages: Behavioral Science and Psychology (R0)