Abstract

This chapter reflects on the contributions of the book's chapters from various perspectives. Seven categories frame these reflections. Four relate to different levels of the educational system, two open scenarios for research on education and learning with AI, and the last is devoted to the ethical challenges of AI in education and learning.

1 Where Are We Now with AI?

In our concluding chapter, after briefly considering AI’s immense presence in the emerging information infrastructure of global societies and its importance for both education and education research, we reflect on the contributions to research, technology, and theory made by the chapters of our volume. We characterize the vectors of development and the critical issues identified as priorities for future research. This chapter also offers scenarios of future developments and changes as AI is applied in learning and education.

Artificial intelligence, or simply AI, has become one of the most pervasively adopted technologies in history. It is now integrated into billions of smartphones for services as diverse as speech recognition agents like Siri and Alexa and recommendation services for music, movies, books, retail purchasing, and route mapping for driving. AI and its related technologies could become highly consequential for the future of learning, teaching, and educational systems more broadly. Our scholarly community in education research, and all of education’s stakeholders, should critically consider how best to develop and use AI in education so that it is equitable, ethical, and effective while guarding against data and design risks and harms.

In 1956, John McCarthy, later of Stanford, offered one of the first definitions of AI: “The study [of artificial intelligence] is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it” (Russell and Norvig 2010).

Given AI’s advances over the 65 years since, we find Accenture’s contemporary definition useful: “AI is a constellation of many different technologies working together to enable machines to sense, comprehend, act, and learn with human-like levels of intelligence.”

Integral to this AI terrain are machine learning and natural language processing. Machine learning is a type of AI that enables systems to learn patterns from data, make predictions, and then improve their future performance by applying the discovered patterns to situations not anticipated in their initial design (Popenici and Kerr 2017). When you get product recommendations on an online retail site, these suggestions are driven by machine learning, as the AI continuously improves at predicting what you might buy. Useful as they are, these forms of AI are called Narrow AI, designed to perform a single task or a set of closely related tasks.
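The following minimal sketch makes the idea of learning patterns from data concrete. It shows item-based collaborative filtering, which is one common way product recommendations can be computed. It is not a description of any particular retailer's system.

The purchase data, user names and function names are hypothetical. Items count as similar when the same users tend to buy them. Items a user has not yet bought are ranked by their similarity to what the user already owns.

```python
import math

# Hypothetical co-purchase data: each user maps to the set of items bought.
PURCHASES = {
    "ana": {"book_a", "book_b", "book_c"},
    "ben": {"book_a", "book_b"},
    "chen": {"book_b", "book_c", "book_d"},
    "dana": {"book_a", "book_c"},
}


def item_buyers(purchases):
    """Invert user -> items into item -> set of users who bought it."""
    buyers = {}
    for user, items in purchases.items():
        for item in items:
            buyers.setdefault(item, set()).add(user)
    return buyers


def cosine(a, b):
    """Cosine similarity of two binary vectors represented as sets."""
    if not a or not b:
        return 0.0
    return len(a & b) / math.sqrt(len(a) * len(b))


def recommend(user, purchases, k=2):
    """Rank items the user has not bought by similarity to items they own."""
    buyers = item_buyers(purchases)
    owned = purchases[user]
    scores = {
        item: sum(cosine(who, buyers[o]) for o in owned)
        for item, who in buyers.items()
        if item not in owned
    }
    # Highest score first; ties broken alphabetically so output is deterministic.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, _ in ranked[:k]]
```

Here `recommend("ben", PURCHASES)` returns `["book_c", "book_d"]`. The similarities are recomputed from the purchase data, so adding new purchases changes the rankings. This is the sense in which such a system keeps improving as data accumulates.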

General AI, the sentient machines of sci-fi films that emulate human intelligence, think strategically, and handle a broad range of complex tasks, is not yet a reality. Although AI computing works at exceptional speed and scale, human-machine collaboration remains crucial, as humans provide guidance by labelling the data from which AI machines learn. Thus far, then, AI augments human capabilities rather than replacing them.

We anticipate that the possibilities and limits of AI technologies will become more of an everyday topic of conversation as people seek to make sense of the stunning digital transformations they are experiencing, in their quest to participate fully in, benefit from, and adapt to the new occupations and skill needs that will emerge.

In the spirit of supporting that quest, we now ask: How does AI relate to educational systems, teaching and learning processes, and educational research? Our essential aim is to explore how AI can serve human purposes in promoting learning and enhancing education research.

To begin, we note that in 2020 in Silicon Valley, the US non-profit organization Digital Promise convened a panel of 22 experts in AI and in learning for several days to consider these broad questions (Roschelle et al. 2020):

- What do educational leaders need to know about AI in support of student learning?
- What do researchers need to tackle beyond the ordinary to generate knowledge for shaping AI in learning for the good?

Their synthesis report suggests three layers for framing AI’s meaning for educators. First, AI can be viewed as a “computational intelligence” that contributes an additional resource to an educator’s abilities and strengths in tackling educational challenges. Second, AI brings specific and exciting new capabilities to computing, including sensing, recognizing patterns, representing knowledge, making and acting on plans, and supporting naturalistic interactions with people. These capabilities can be engineered into solutions to support learners with varied strengths and needs, such as allowing students to use handwriting, gestures, or speech as input modalities in addition to keyboard and mouse. Third, AI may be used as a tool kit to enable imagining, studying, and discussing future learning scenarios that don’t exist today. We find our authors making contributions to the AI in education literature in each of these layers.

Now let us consider ways to frame the full panoply of contributions from the chapters of our volume. Seven categories frame these reflections. Four relate to different levels of the educational system, two open scenarios for research on education and learning with AI, and the last is devoted to the ethical challenges of AI in education and learning. These reflections will help us find our way through the forest of new work represented in this volume.

2 AI Contributions to Different Levels of Education Systems

2.1 K-12 Tutoring Systems and Other Adaptive Learning Technologies

As we indicate below, one preliminary and central idea we wish to communicate is that AI in education is about much more than “ed tech” applications such as intelligent tutoring systems (ITS) and adaptive learning technologies, although developments in AI are still contributing to this vision (see chapters by Niu et al. “Multiple Users’ Experiences of an AI-Aided Educational Platform for Teaching and Learning” and Chen et al. “Learning Career Knowledge: Can AI Simulation and Machine Learning Improve Career Plans and Educational Expectations?”). Niu et al.’s chapter examines how their AI-aided educational smart learning partner platform provides intelligent services to support students’ learning. It offers an account of the experiences of multiple users (students, teachers, and school managers) working with a system in which learners continually receive individualized learning assessments and recommended improvements, teachers can attune their pedagogical strategies and actions to students’ needs, and school management can support teachers’ teaching and students’ learning in a better-informed way.

One chapter provides a bridge between K-12 education and career development. Chen et al.’s chapter “Learning Career Knowledge: Can AI Simulation and Machine Learning Improve Career Plans and Educational Expectations?” details their approach to using AI capabilities to support young people’s career choices as they face uncertain job futures amid advancing automation. They investigate how machine learning applications can help youth align their individual career goals with specific employment opportunities and learn which capabilities and certifications specific jobs require. These applications use simulated game-based scenarios whose tasks and goals test players’ capacities, skills, and interests in selecting future occupations and yield computer-generated profiles of each player’s characteristics. The authors share the machine learning decision tree algorithms they derived to map out all possible outcomes of job selections and then narrow individual players’ opportunity choices given their current gameplay status. It is impressive how such gameplay can help minimize risks and provide strategic advantages for young people with limited occupational knowledge.
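Chen et al.'s specific algorithms are not reproduced here. As a hedged illustration, the sketch below shows how a decision tree can partition players by characteristics derived from gameplay. It grows a small CART-style tree using Gini impurity.

- The two features are invented for illustration: hypothetical analytic-skill and social-interest scores from 0 to 10.
- The occupation labels are also invented.
- Each root-to-leaf path corresponds to one mapped-out outcome.
- A player's current scores select a single path through the tree.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: chance that two random draws carry different labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows, labels):
    """Return (impurity, feature, threshold) minimizing weighted Gini, or None."""
    best = None
    for f in range(len(rows[0])):
        for t in sorted({r[f] for r in rows}):
            left = [lab for r, lab in zip(rows, labels) if r[f] <= t]
            right = [lab for r, lab in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[0]:
                best = (score, f, t)
    return best


def build_tree(rows, labels, depth=0, max_depth=3):
    """Recursively grow a tree; leaves hold the majority label."""
    majority = Counter(labels).most_common(1)[0][0]
    if len(set(labels)) == 1 or depth == max_depth:
        return majority
    split = best_split(rows, labels)
    if split is None:
        return majority
    _, f, t = split
    left = [(r, lab) for r, lab in zip(rows, labels) if r[f] <= t]
    right = [(r, lab) for r, lab in zip(rows, labels) if r[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([r for r, _ in left], [lab for _, lab in left], depth + 1, max_depth),
        "right": build_tree([r for r, _ in right], [lab for _, lab in right], depth + 1, max_depth),
    }


def predict(tree, row):
    """Follow the learned thresholds from the root down to a leaf label."""
    while isinstance(tree, dict):
        tree = tree["left"] if row[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree


# Hypothetical gameplay profiles: [analytic_skill, social_interest].
ROWS = [[8, 2], [9, 3], [7, 1], [2, 8], [3, 9], [1, 7], [8, 8], [9, 9]]
LABELS = ["engineering"] * 3 + ["care"] * 3 + ["teaching"] * 2
TREE = build_tree(ROWS, LABELS)
```

On this toy data the tree first splits on analytic skill, sending scores ≤ 3 to "care". It then splits on social interest, so `predict(TREE, [8, 1])` returns `"engineering"`. Depth is capped with `max_depth` to keep paths short and interpretable. This matters when a tree's branches are meant to be explained to young players.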

The examples described signal that AI will change future curricula, assessment methods, student counseling, and teachers’ work. Expanding learning environments with AI demands radical changes in the whole educational ecosystem, including support for teachers as they move toward new kinds of pedagogical orchestration in classrooms and beyond.

2.2 Beyond K-12 Disciplinary Curriculum: Whole Child AI Technologies

AI is also being applied to what we might call “whole child education”, which extends beyond the standard curriculum and its learning standards. Increasingly, educational systems are taking a whole child development approach in which a key goal is creating safe and supportive learning environments that equitably prepare each student to reach their full potential. Such environments aim to promote wellness and resilience for everyone in the school community, emphasizing not only academic but also social-emotional outcomes, such as self-regulation, stress management, and a sense of belonging, since these affect productive engagement in learning.

Two chapters address, respectively, students’ well-being, broadly considered, and, more narrowly, their problem behaviors. Students’ well-being is critical, as it marks their positive development in school life and supports their future growth. Tang et al.’s chapter “Assessing and Tracking Students’ Wellbeing Through an Automated Scoring System: School Day Wellbeing Model” introduces an automated well-being scoring system, the School Day Well-Being Model, which operates dynamically and in real time to give immediate feedback at multiple organizational levels (person, class, school). Task performance and emotion regulation were the skills that most consistently promoted psychological well-being, academic well-being, and health-related outcomes. Penghe et al.’s chapter “An AI-Powered Teacher Assistant for Student Problem Behavior Diagnosis” proposes an AI-powered assistant for addressing student problem behaviors in school, defined as behaviors that are undesirable relative to social norms. Interventions are based on students’ automatically diagnosed unmet needs. The authors build a domain knowledge graph summarizing all relevant factors behind diagnosed unmet needs to guide the system, and they adopt reinforcement learning to learn a dialogue policy for the dialogue system that addresses student behavioral problems.
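The chapter's knowledge graph and reinforcement-learned dialogue policy are far richer than anything shown here. This is a minimal sketch that assumes a hypothetical graph whose weighted edges link observable behaviors to candidate unmet needs. Under that assumption, diagnosis can be framed as summing edge weights over the observed behaviors and ranking the needs.

All behavior names, needs, weights and strategies below are invented. The reinforcement learning component is not illustrated.

```python
# Hypothetical knowledge-graph edges: behavior -> [(unmet need, weight)].
GRAPH = {
    "disrupts_class": [("attention", 0.6), ("belonging", 0.4)],
    "skips_homework": [("competence", 0.7), ("autonomy", 0.3)],
    "withdraws_socially": [("belonging", 0.8)],
    "argues_with_teacher": [("autonomy", 0.6), ("attention", 0.4)],
}

# Hypothetical mapping from an unmet need to a suggested teacher strategy.
INTERVENTIONS = {
    "attention": "give positive attention for on-task behavior",
    "belonging": "pair the student with a supportive peer",
    "competence": "break tasks into achievable steps",
    "autonomy": "offer structured choices",
}


def diagnose(observed):
    """Rank candidate unmet needs by summed edge weights from observed behaviors."""
    scores = {}
    for behavior in observed:
        for need, weight in GRAPH.get(behavior, []):
            scores[need] = scores.get(need, 0.0) + weight
    # Highest score first; ties broken alphabetically.
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))


def suggest(observed):
    """Return (top-ranked need, suggested strategy), or None if nothing matched."""
    ranked = diagnose(observed)
    if not ranked:
        return None
    need = ranked[0][0]
    return need, INTERVENTIONS[need]
```

Consider a student who disrupts class and withdraws socially. For that student, `suggest(["disrupts_class", "withdraws_socially"])` ranks `"belonging"` first (0.4 + 0.8) ahead of `"attention"` (0.6). A teacher would still need to judge whether the suggestion fits the individual child.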

Socio-emotional factors are decisive for students’ successful learning (e.g., Durlak 2015). AI, with its capacity to bring multimodal data into learning environment designs and interventions, will open entirely new opportunities to understand students’ behaviors and their needs for learning and well-being. However, data alone, and even effective interactive systems built on it, do not necessarily help without human scaffolding and interaction (Pea 2004). Human behavior has pervasive social foundations, so AI-based information must be integrated with the judgment and interaction of human users.

2.3 Higher Education and Lifelong Learning

Four chapters tackle the uses of AI in learning environments for college-age students and beyond, encompassing nursing education, VR training of hard procedural skills in industry, stress during simulation-based learning, and self-learning and emotional support through cognitive mirroring with intelligent social agents (ISA). Koivisto et al.’s chapter “Learning Clinical Reasoning Through Gaming in Nursing Education: Future Scenarios of Game Metrics and Artificial Intelligence” reports studies of nursing students using computer-based simulation games to learn clinical reasoning (CR) skills in an authentic 3-D hospital environment with nine scenarios based on different clinical situations in nursing care. Students practice skills essential for ensuring patient safety and high-quality care, assessing patients’ clinical condition systematically by interviewing, observing, and measuring vital signs. Game metrics calculated during gameplay are used to evaluate nursing students’ CR skills and to target needs for improvement.
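How the game metrics are computed is not specified here. The sketch below makes two assumptions:

- a hypothetical event log of timestamped player actions; and
- a hypothetical checklist of assessment actions for one scenario (interviewing, observing, and measuring vital signs, as described above).

From these it derives a few simple metrics that could flag missed assessments.

```python
from dataclasses import dataclass


@dataclass
class Event:
    t: float     # seconds since the scenario started
    action: str  # hypothetical action identifier, e.g. "measure_pulse"


# Hypothetical checklist of assessment actions expected in one scenario.
REQUIRED = {
    "interview_patient",
    "observe_skin",
    "measure_pulse",
    "measure_blood_pressure",
    "measure_oxygen_saturation",
}


def cr_metrics(events):
    """Compute simple, illustrative game metrics from one player's event log."""
    done = {e.action for e in events}
    first = min((e.t for e in events if e.action in REQUIRED), default=None)
    return {
        "coverage": len(done & REQUIRED) / len(REQUIRED),
        "missed": sorted(REQUIRED - done),
        "time_to_first_assessment": first,
        "extraneous_actions": sum(1 for e in events if e.action not in REQUIRED),
    }
```

For a log in which a student reads the chart, interviews the patient, and measures pulse and blood pressure, the metrics are as follows:

- coverage is 0.6;
- oxygen saturation and skin observation are listed as missed; and
- reading the chart counts as one extraneous action.

These are the kinds of signals that could target feedback for improvement.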

Korhonen et al.’s chapter “Training Hard Skills in Virtual Reality: Developing a Theoretical Framework for AI-Based Immersive Learning” explores immersive virtual reality-based training of hard skills guided by an AI tutor software agent. They observe how such environments, supported by sufficiently advanced tutoring software, may facilitate asynchronous, embodied approaches for learning hard, procedural skills in industrial settings. They unpack a mismatch between the philosophy of cognition underpinning intelligent tutoring system (ITS) software and the epistemological issues that emerge for learners in a virtual world, along with the resulting shortcomings in learners’ experiences of the VR environments where they are learning. To counteract this mismatch between the philosophy of cognition and the design of technology-augmented learning environments, they propose employing the philosophies of embodied, embedded, enacted, and extended (4e) cognition as the basis for VR-native pedagogical principles. Ruokamo et al.’s chapter “AI-Supported Simulation-Based Learning: Learners’ Emotional Experiences and Self-Regulation in Challenging Situations” explores professionals’ learning experiences and stress levels during simulation-based learning, considered from physiological, emotional, motivational, and cognitive perspectives, to identify key factors that increase and inhibit their learning. In “Learning from Intelligent Social Agents as Social and Intellectual Mirrors”, Maples et al. report on a mixed-method study exploring relationships between user loneliness, use motivations, use patterns, and user outcomes for 27 adult users of Replika, a best-in-class “intelligent social agent” (ISA) sufficiently anthropomorphized to pass Turing tests in short exchanges.
Their data indicate these users were lonely or experiencing a time of change and distress and they used Replika for its availability, friendship, therapy, and personal learning. For many, Replika provided critical emotional support; for some, belief in Replika’s intelligence led to a deeper cognitive proximity and increasingly profound engagement as they identified Replika as a human, a friend, and even an “extension of themselves”.

AI will change the landscape of lifelong learning. The boundaries between formal and informal learning will break down. AI will be an essential tool for learning skills and competences in working life as well as in personal learning environments and contexts. So far, games and simulations have been the main tools, but in the future much training will happen in virtual reality environments, increasingly discussed under the label of the metaverse (Sparkes 2021). These environments also make collaboration and social elements possible in skills and competence learning. As a future scenario, we may expect radical changes in adult education and job reskilling.

2.4 Enabling Media for the Learning Ecosystem

Two chapters explicate how AI can advance the core functionalities of media for the learning ecosystem: one is devoted to intelligent e-textbooks and one to deep learning in automatic math word problem solvers (MWPs). Jiang et al.’s chapter “Recent Advances in Intelligent Textbooks for Better Learning” investigates the history of e-textbook platforms and the vital question of how they could promote learning. If we understood how people interact with and read e-textbooks, we would have better guidance for designing e-textbooks that provide intelligent learning support. The authors review the key technologies used in intelligent textbooks: student modeling and domain modeling. Student modeling has three aspects: modeling the learner’s knowledge state, learning behavior, and psychological characteristics. They also introduce popular authoring platforms used for creating intelligent textbooks. Zhang’s chapter “Deep Learning in Automatic Math Word Problem Solvers” provides a synoptic account of developments in automatic MWPs, from the 1960s to today’s deep learning algorithms, which seek to solve the challenging problem of parsing human-readable word problems into machine-understandable logical expressions. As these systems raise the intelligence level of AI agents in natural language understanding and automatic reasoning, they promise intelligent support in educational environments for developing learners’ mathematical word problem-solving competencies.
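Learner knowledge state modeling can be illustrated with Bayesian Knowledge Tracing (BKT), a classic student-modeling technique. Whether and how the platforms reviewed by Jiang et al. use it is not stated here. BKT treats mastery of a skill as a hidden binary state. It updates the probability of mastery after each answer, allowing for guesses and slips. The parameter values below are illustrative assumptions, not fitted estimates.

```python
from dataclasses import dataclass


@dataclass
class BKTParams:
    p_init: float = 0.2    # P(L0): skill already known before practice
    p_learn: float = 0.15  # P(T): chance of learning at each opportunity
    p_guess: float = 0.2   # P(G): correct answer without knowing the skill
    p_slip: float = 0.1    # P(S): wrong answer despite knowing the skill


def bkt_update(p_known, correct, prm):
    """One BKT step: Bayes-condition on the answer, then allow for learning."""
    if correct:
        num = p_known * (1 - prm.p_slip)
        den = num + (1 - p_known) * prm.p_guess
    else:
        num = p_known * prm.p_slip
        den = num + (1 - p_known) * (1 - prm.p_guess)
    posterior = num / den
    return posterior + (1 - posterior) * prm.p_learn


def trace(answers, prm=None):
    """Return the estimated P(known) after each answer in sequence."""
    prm = prm if prm is not None else BKTParams()
    p = prm.p_init
    history = []
    for correct in answers:
        p = bkt_update(p, correct, prm)
        history.append(p)
    return history
```

Starting from P(L0) = 0.2, one correct answer raises the estimate to exactly 0.6 with these parameters. One incorrect answer lowers it to about 0.18. An intelligent textbook could use such estimates to decide when to offer review material, though that policy is itself a design assumption.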

Technological advances have made it possible to overcome many earlier barriers to supporting human learning. Future progress requires that we understand more about the relationship between human and machine learning. With AI, we have two learners: a human and a machine. Their interaction calls for new understanding of how this relationship can support different kinds of human learners across extraordinarily diverse learning situations. Success in this enterprise requires continuous collaboration between experts in the computing sciences and the learning sciences.

3 Roles of AI for Enhancing the Processes and Practices of Educational Research

Three chapters report how AI is contributing to educational research. Marcelo Worsley characterizes different facets of how multimodal learning analytics employs AI to measure student performance during complex learning tasks. He highlights how contemporary authentic and engaging learning environments transcend the traditional teacher-centric classroom context, incorporating learning experiences that are embodied, project-based, inquiry-driven, collaborative, and open-ended. He examines AI-based tools and sensing technologies that can help researchers and practitioners navigate and enact these novel approaches to learning, offering new analytic techniques and interfaces that help researchers collect and analyze different types of multimodal data across contexts while also providing a meaningful lens for student reflection and inquiry.

Vivitsou’s chapter “Perspectives and Metaphors of Learning: A Commentary on James Lester’s Narrative-Centered AI-Based Environments” centers on James Lester’s AI in education keynote address and associated interview, to discuss perspectives on narrative-centered learning and metaphors of AI-based learning environments, such as Crystal Island, an AI-based game for K-12 students learning science. She employs Ricoeur’s narrative theory and metaphor theory to examine the role of characters and the narrative plot in relation to Lester’s visualization of the future of learning with AI-based technologies, revealing new roles in AI-rich game-based learning such as drama manager. She also examines the importance of dynamic agency metaphors in AI for advancing learning environment design. With the intention of supporting the improvement of classroom teaching quality, Yu & Sun’s chapter “Analysis and Improvement of Classroom Teaching Based on Artificial Intelligence” depicts research and technology which seeks to transcend traditional labor-intensive classroom teaching event analysis methods by using their teaching event sampling analysis framework (TESTII), which employs computer vision, natural language processing, and other emerging AI technologies to perform classroom teaching event analysis for improving educational practices.
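TESTII's internals are not described here. The sketch below assumes only that some upstream computer-vision or speech model (hypothetical, not shown) has already labeled each second of a lesson with a teaching event type. It illustrates the time-sampling step: take a label at a fixed interval and tally the share of each event type. The category names and the 30-second interval are illustrative.

```python
from collections import Counter


def sample_events(labels, interval):
    """Keep every `interval`-th per-second label (fixed-interval time sampling)."""
    if interval <= 0:
        raise ValueError("interval must be positive")
    return labels[::interval]


def event_profile(labels, interval=30):
    """Share of sampled moments falling into each teaching event category."""
    samples = sample_events(labels, interval)
    counts = Counter(samples)
    total = len(samples)
    return {event: counts[event] / total for event in sorted(counts)}
```

Consider a 300-second lesson made up of 120 s of lecture, 60 s of questioning and 120 s of group work. Sampling every 30 s gives a profile of 0.4 lecture, 0.2 questioning and 0.4 group work. Sampling trades temporal detail for a compact summary that teachers could compare across lessons.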

As AI enters education and learning settings, the conventionally designed structures of lessons and learning environments will change. We need new concepts for understanding our life-long, life-wide, and life-deep learning environments (Bell and Banks 2012), and we must reconceive and redesign analytic techniques and research methods for AI-based tools and learning environments.

4 Advancing the Learning of AI

Two chapters are devoted to the basic research problem of engineering AI to learn more productively, in hopes that such advances could improve human learning in educational systems as well. Haber considers how to build AI that learns via curiosity and interactions like humans, and Zhang asks how advances in deep learning with automatic math word problem solvers can represent progress toward the automatic reasoning of general AI. Haber’s chapter “Curiosity and Interactive Learning in Artificial Systems” introduces readers to results from AI’s deep reinforcement learning that aspire to replicate the processes and outcomes of human interactive learning, sparked by curiosity, seeking novelty and information, and social engagement. He asks how we might engineer an artificial, autonomous agent that can flexibly interact with its environment, and with other agents within it, to learn as humans do. He argues that if this AI engineering program makes progress, it may shape the future of education by providing fine-grained computational models of learning and even enabling in silico testing of learning interventions, from early childhood through K-12 education. Zhang’s chapter “Deep Learning in Automatic Math Word Problem Solvers” provides a synoptic account of the technical history of automatic math word problem solvers (MWPs), from the 1960s to today’s deep learning algorithms, which shrink the semantic gap between what humans can read and what machines can understand. MWPs seek to solve the challenging problem of parsing human-readable word problems into machine-understandable logical expressions. Different MWP architectures have been good test beds for appraising the intelligence level of agents in terms of natural language understanding and automatic reasoning, and their comparative performances on public benchmark datasets illuminate advances toward the automatic reasoning of general AI.
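Haber's chapter concerns deep reinforcement learning. The toy below is far simpler and substitutes a count-based novelty bonus, one common way to formalize curiosity as intrinsic reward. An agent on a hypothetical one-dimensional corridor greedily moves toward the neighboring cell it has visited least. Novelty alone, with no external reward, drives it to explore.

```python
import math


def novelty_bonus(visits, state):
    """Count-based intrinsic reward: rarely visited states score higher."""
    return 1.0 / math.sqrt(1 + visits.get(state, 0))


def explore(n_states, steps, start=0):
    """Greedy novelty-seeking walk on a corridor of n_states cells."""
    visits = {start: 1}
    state = start
    path = [state]
    for _ in range(steps):
        moves = [s for s in (state - 1, state + 1) if 0 <= s < n_states]
        # Largest bonus wins; on ties, prefer the lower-numbered cell.
        state = max(moves, key=lambda s: (novelty_bonus(visits, s), -s))
        visits[state] = visits.get(state, 0) + 1
        path.append(state)
    return path, visits
```

With five cells and four steps the agent visits every cell once (`[0, 1, 2, 3, 4]`). Richer curiosity signals replace the visit count with a measure of how surprising an outcome is. One example is the prediction error of a learned world model.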

While even the latest AI techniques still find it challenging to simulate human learning and fully understand the semantics of human language, significant progress has been made in the fields of machine learning and natural language processing in recent years (Deng and Liu 2018). We believe that the learning capabilities of AI will be more powerful and effective in the near future, by leveraging the advancements of neuroscience that reveal how our human brain thinks, remembers, and learns (Savage 2019; Ullman 2019).

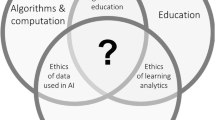

5 Ethical Dimensions of AI Integration into Human Learning Environments and Socio-Technical Systems for Education

Two chapters delve into national policy (comparing Finland and China) and stakeholder perspectives on AI in education (education technology industry and its educational system clients). Wei & Niemi’s chapter “Ethical Guidelines for Artificial Intelligence-Based Learning: A Transnational Study Between China and Finland” provides an AI policy analysis comparing programmatic policy documents developed by the Finnish and Chinese governments for promoting the development of AI-based learning in society. Five themes emerged: (1) the potential of AI for reshaping basic education and school quality; (2) emphasizing the importance of AI in the workforce and employment; (3) connecting AI with human development and students’ wellbeing; (4) promoting teachers’ AI literacy in digitalized times; and (5) AI for lifelong learning reform in a civil society. Yet promoting ethical guidelines for AI in learning is barely discussed at the policy level. Instead, policy documents discuss general ethical themes, not specifying ethical challenges for educational environments. Their chapter further analyzes detailed ethical challenges within the five themes when AI-based tools are used in educational environments and critically reflects on needed ethical guidelines when AI is applied in education.

Kousa & Niemi’s chapter “Artificial Intelligence Ethics from the Perspective of Educational Technology Companies and Schools” analyzes and reflects on the perspectives of multiple parties, namely companies producing AI-based tools and services and their users in schools and workplaces, concerning the ethical opportunities and challenges that AI is creating for learning in schools and working life. From the corporate perspective, ethical challenges relate to regulations, equality and accessibility, machine learning, and society. From the school users’ perspective, the critical questions are: Who has the power to decide which educational services the school can use? Who is responsible for ethical issues (such as student privacy) of those services? Who will ensure that AI-based services and tools are equally accessible to and effective for all in supporting teaching and learning? The authors argue that continuing dialogue between producers and consumers is essential and that national and international guidance is needed on how to engage in ethically sustainable action. The aim is to increase shared knowledge of AI through education, so that people understand its opportunities and challenges and can keep up with our rapidly evolving society.

Despite increasing attention to privacy and ethics in educational technology, there remains a “widespread lack of transparency and inconsistent privacy and security practices for products intended for children and students” (Henein et al. 2020, p. 3). This is an important shortcoming. To advance educational research at scale, it is crucial to provide methods and processes for implementing privacy-preserving learning analytics globally (Every Learner Everywhere 2020; Joksimović et al. 2022).
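The cited sources do not prescribe a specific technique, but differential privacy is one widely used basis for privacy-preserving analytics. The minimal sketch below is a swapped-in illustration, not a method from the chapters. It releases a class-level count (for example, how many students passed) with Laplace noise scaled to 1/epsilon. Smaller epsilon means stronger privacy and noisier answers.

The function names and data are hypothetical. Python's `random` module is not a cryptographically secure noise source, so production systems should rely on a vetted differential privacy library.

```python
import random


def laplace_noise(scale, rng):
    """Draw Laplace(0, scale) noise as the difference of two exponential draws."""
    # expovariate takes a rate; a rate of 1/scale gives mean `scale`.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon, rng=None):
    """Release a count under epsilon-differential privacy.

    Adding or removing one student changes a count by at most 1
    (sensitivity 1), so Laplace noise with scale 1/epsilon suffices.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)
```

Averaged over many releases the noise cancels out, but any single release reveals little about whether one particular student is in the data.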

Meta-level concerns about the ethics of AI in education are raised by Cowley et al.’s chapter “Artificial Intelligence in Education as a Rawlsian Massively Multiplayer Game: A Thought Experiment on AI Ethics”. They offer a thought experiment for conceptualizing the possible benefits and risks that may be revealed as AI is integrated into education. Actors with different stakes (humans, institutions, AI agents, and algorithms) all conform to the definition of a player: a role designed to maximize protection and benefit for human players. AI models that understand the game space provide an API through which typical algorithms, e.g., deep learning neural nets or reinforcement learning agents, interact with the game space. The thought experiment surfaces socio-cognitive-technological questions that must be discussed, such as the benefits of AI-based tools for supporting different learners, set against possible risks of algorithmic manipulation or hidden algorithmic discrimination. The more we reflect on it, the clearer it becomes that the ethics of AI in education is a keystone issue that will ramify throughout future inquiries into AI-augmented learning.
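Cowley et al.'s formal game is not reproduced here. This is a hedged sketch of the Rawlsian intuition behind it. Suppose players must choose a policy for AI in education without knowing which role they will occupy (a veil of ignorance). Rawls's maximin rule then picks the policy under which the worst-off player fares best. The policies, roles and payoffs below are invented. A utilitarian rule is included for contrast.

```python
# Hypothetical payoffs (0-10) for each player role under each candidate policy.
PAYOFFS = {
    "full_automation": {"high_achiever": 10, "struggling_learner": 2, "teacher": 9},
    "teacher_in_loop": {"high_achiever": 7, "struggling_learner": 6, "teacher": 7},
    "no_ai": {"high_achiever": 6, "struggling_learner": 4, "teacher": 6},
}


def maximin_choice(payoffs):
    """Rawls-style rule: choose the policy whose worst-off role fares best."""
    return max(payoffs, key=lambda policy: min(payoffs[policy].values()))


def utilitarian_choice(payoffs):
    """Contrast rule: choose the policy with the highest total payoff."""
    return max(payoffs, key=lambda policy: sum(payoffs[policy].values()))
```

With these payoffs the utilitarian rule prefers `"full_automation"` (total 21). Maximin prefers `"teacher_in_loop"`, because it raises the struggling learner's payoff from 2 to 6. The divergence illustrates how the choice of decision rule, not only the algorithms, shapes who benefits.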

Finally, Pea et al.’s “Four Surveillance Technologies Creating Challenges for Education” introduces the capabilities of four core surveillance technologies now being embraced by universities and preK-12 schools: location tracking, facial identification, automated speech recognition, and social media mining. The chapter articulates challenges in how these technologies may be reshaping human development, risks of algorithmic bias and access inequities, and the need for learners’ critical consciousness concerning their data privacy. The chapter expresses hope that collaboration among government, industry, and the public sector on these issues can make it more likely that continued advances in artificial intelligence will become a powerful aid to more equitable and just educational systems and an ingredient of engaging, innovative learning environments that serve the needs of all our diverse learners and educators.

Ethical questions are pressing when AI is applied in education and learning. Ethical demands concern the whole of society, including developers and providers of new tools, environments, and services, as well as all users. Although many national and international ethical guidelines are appearing, many issues remain open and new problems continue to be discovered. Perhaps the biggest question is how users can trust that their privacy is not violated. AI has become ubiquitous; it is part of everyday life, and it will be a common tool in education and learning. Understanding what AI means in our lives requires a new civic skill. Support for developing it should be part of school curricula and easily available in society. AI users need basic knowledge about AI, its features and applications, and the ethical regulations needed for its safe use. All people should also know their rights and the procedures to follow if AI is used to violate their privacy. Users will need this knowledge during their school years and throughout their lives. AI will be a powerful tool in our future, but we must remember that human beings bear ultimate responsibility for developing and using AI.

References

Bell, P., & Banks, J. (2012). Life-long, life-wide, and life-deep learning. Encyclopedia of Diversity in Education, 4, 1395–1396.

Deng, L., & Liu, Y. (Eds.). (2018). Deep learning in natural language processing. Springer.

Durlak, J. A. (Ed.). (2015). Handbook of social and emotional learning: Research and practice. Guilford Publications.

Every Learner Everywhere. (2020). Guiding principles and strategies for learning analytics implementation. Tyton Partners.

Henein, N., Willemsen, B., & Woo, B. (2020). The state of privacy and personal data protection, 2020–2022. Gartner Report.

Joksimović, S., Marshall, R., Rakotoarivelo, T., Ladjal, D., Zhan, C., & Pardo, A. (2022). Privacy-Driven Learning Analytics. In Manage Your Own Learning Analytics (pp. 1–22). Springer, Cham.

Pea, R. D. (2004). The social and technological dimensions of scaffolding and related theoretical concepts for learning, education, and human activity. The Journal of the Learning Sciences, 13(3), 423–451.

Popenici, S., & Kerr, S. (2017). Exploring the impact of artificial intelligence on teaching and learning in higher education. Research and Practice in Technology Enhanced Learning, 12(1), 1–13.

Roschelle, J., Lester, J., & Fusco, J. (Eds.). (2020). AI and the future of learning: Expert panel report. Digital Promise. https://circls.org/reports/ai-report.

Russell, S., & Norvig, P. (2010). Artificial intelligence: A modern approach. Pearson Education.

Savage, N. (2019). How AI and neuroscience drive each other forwards. Nature, 571(7766), S15.

Sparkes, M. (2021, August 21). What is a metaverse? New Scientist, 251(3348), 18.

Tomasello, M. (1999). The human adaptation for culture. Annual Review of Anthropology, 28(1), 509–529.

Ullman, S. (2019). Using neuroscience to develop artificial intelligence. Science, 363(6428), 692–693.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Cite this chapter

Pea, R.D., Lu, Y., Niemi, H. (2023). Reflections on the Contributions and Future Scenarios in AI-Based Learning. In: Niemi, H., Pea, R.D., Lu, Y. (eds) AI in Learning: Designing the Future. Springer, Cham. https://doi.org/10.1007/978-3-031-09687-7_20

DOI: https://doi.org/10.1007/978-3-031-09687-7_20

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-09686-0

Online ISBN: 978-3-031-09687-7

eBook Packages: Behavioral Science and Psychology, Behavioral Science and Psychology (R0)