Abstract

“Ubiquitous AI”—embodied in cloud computing web services, coupled with sensors in phones and the physical world—is becoming infrastructural to cultural practices. It creates a surveillance society. We review the capabilities of four core surveillance technologies, all making headway into universities and PreK-12 schools: (1) location tracking, (2) facial identification, (3) automated speech recognition, and (4) social media mining. We pose primary issues educational research should investigate on cultural practices with these technologies. We interweave three priority themes: (1) how these technologies are shaping human development and learning; (2) current algorithmic biases and access inequities; and (3) the need for learners’ critical consciousness concerning their data privacy. We close with calls to action—research, policy and law, and practice.

Keywords

- Ubiquitous AI

- Surveillance society

- Location tracking

- Facial identification

- Automated speech recognition

- Social media mining

- Algorithmic biases

- Privacy policy

1 Introduction

The accelerating embeddedness of information and communication technologies in our social and physical worlds requires reflection on the future of learning environments and educational research. Ubiquitous AI, embodied in cloud computing web services that detect empirical patterns in accruing data and coupled with sensors in phones and the physical world, is becoming infrastructural to society’s cultural practices. We first sketch the surveillance state, enabled by pervasive sensors, cloud computing, and ubiquitous AI for pattern recognition and behavior prediction. We briefly characterize four surveillance technologies, all making headway into PreK-12 schools, universities, educational research, and technology design: (1) location tracking, (2) facial identification, (3) automated speech recognition, and (4) social media mining. We then pose primary issues educational research should investigate on cultural practices with these technologies for education and learning. We interweave three prioritized themes in our questioning: (1) how these technologies are shaping human development and learning; (2) current algorithmic biases and access inequities; and (3) the need for learners’ critical consciousness concerning their data privacy rights under threat and their agency in dealing with them efficaciously. We close with calls to action essential for guiding an educational future for our children and youth that is attuned to the risks of unreflective uses of these technologies and focused on demanding their transparent, accountable uses for furthering our nation’s democratic society.

2 Surveillance State

In our networked society, people exchange personal details about themselves and what they’re doing in return for services and products on the web or in apps (Ip 2018). Many accept the deals they’re offered in return for sharing insights about their behaviors, interests, and social lives. Yet Pew Research Center research reveals that a majority of Americans worry about these data being collected and used (Auxier et al. 2019). Zuboff (2019, 2020) calls this “surveillance capitalism,” a market-driven process that commodifies personal data for profit and depends on capturing and producing these data through mass Internet surveillance. The concept arose after advertising companies foresaw using personal data to target consumers more specifically, and technology companies Facebook, Google, and Amazon exploited the insights for great financial reward. “Analyzing massive data sets began as a way to reduce uncertainty by discovering the probabilities of future patterns in the behavior of people and systems” (Möllers et al. 2019). Turning humans into objects (data for monetization), not recognizing their agency as subjects, evokes warnings (Castells 1996: 371) of future networked inequalities in which two profiles define humanity: the “interactive” (“using the Web’s full capacities”) and the “interacted” (limited to a “restricted number of prepackaged choices”).

2.1 Location Tracking

Location tracking refers to the use of technologies that physically locate people or objects and electronically record and track their movements. This technology is used in GPS navigation, in the locations attached to digital snapshots, and when people search for nearby businesses or more general information using common apps. Where you are, where you have been, and what information you are seeking at specific locations are among the most personal of facts. Technologies that enable location tracking are thus among the most privacy sensitive of all. Yet, “every minute of every day, everywhere on the planet, dozens of companies—largely unregulated, little scrutinized—are logging the movements of tens of millions of people with mobile phones and storing the information in gigantic data files” (Thompson and Warzel 2019). The Times Privacy Project obtained from a concerned source the largest location-sensitive data file ever reviewed by journalists, containing over 50 billion precise location pings from over 12 million Americans’ phones as they moved through several major cities (Washington, New York, San Francisco, and Los Angeles) during several months in 2016–2017. Even so, they note, “this file represents just a small slice of what’s collected and sold every day by the location tracking industry—surveillance so omnipresent in our digital lives that it now seems impossible for anyone to avoid.” They also note that there is no federal law limiting the collection or sale of these data.

On American campuses, college students are being watched, tracked, and managed by an accelerating nexus of technologies whose data are mined for colleges’ purposes (Belkin 2020). Beyond all the activity logging attendant to their uses of student IDs, video surveillance cameras record students’ faces, GPS tracks their movements, and their messages and photos are monitored on social media and email. Online courses and digital textbooks log their study habits minutely, and their pathways through campus buildings are recorded whether in class, dorm, cafe, library, or sporting events. Colleges say they’re using these surveillance data to keep students safe, engaged, and making progress, but we should ask how the reduced freedom to act without surveillance is shaping student agency and responsibility, since surveillance is a means of control and suppression. How commonplace is such surveillance on college campuses, and, whether or not students can opt out, does it affect their sense of belonging and trust in higher education spaces (Jones et al. 2020)? Members of minoritized and racialized groups such as first-gen low-income (FLI) and underrepresented minority (URM) students may be especially vulnerable to such threats. Are surveillance-data-informed nudges to join study groups or visit teaching assistants effective when a student is struggling? How are universities promoting on-campus critical literacies regarding ongoing surveillance of both students and faculty?

Similar questions extend to K-12 learners, as we ask how tracking technologies are used in K-12 settings to ensure the safety and progress of students but also potentially to violate students’ rights. First, given the increasing prevalence of computer-based learning in schools, education stakeholders should know what location data are collected when students use required computing hardware like Chromebooks, websites, and apps. To participate in schooling, students must access disparate technology subsystems that are part of their education, such as Kahoot!, Edmodo, and Classrooms. How are their information and learning profiles being protected, or tracked and used to advance capitalistic rather than student-centered interests? To what extent are K-12 students aware of and critically conscious about these tracking technologies and location data privacy? The default setting on many apps is “track” rather than “not track”; many apps thus track people without disclosure. Students’ sense of what these technologies imply about their learning environment may influence their personal agency, free movement, free expression of ideas and social affiliations, and feeling of belonging and trust in their school. Furthermore, deportation risks may lurk for undocumented students.

2.2 Facial Identification Technologies (FITs)

Facial recognition uses computer vision systems to identify specific human faces in photos/videos. Amazon, Microsoft, and smaller start-ups aggressively market FIT products to governments, law enforcement agencies, and private buyers (casinos and schools). Federal agencies ICE and FBI use face surveillance. Facebook and Google have their own proprietary algorithms. Apple and Google employ FIT for biometrically unlocking smartphones. The broader project of recognizing a person from photographs taken from live cams in public places like parks or streets was technically challenging for decades (Raviv 2020) but is now so advanced that it monitors millions of individuals in China (Economist 2018) and in US and UK urban settings (EFF 2020).

Facial recognition technology learns how to identify people by analyzing as many digital pictures as possible using “neural networks,” complex mathematical systems requiring vast amounts of data to build pattern recognition capabilities (Metz 2019). The New York Times has profiled the company Clearview AI, which sells access to facial recognition databases and tools to law enforcement agencies for presumed greater societal safety. Clearview violated service terms on diverse social media platforms to amass an enormous database of billions of images for facial recognition. The American Civil Liberties Union (ACLU) sued Clearview AI for violating state laws that forbid companies from using residents’ face scans without consent. Beyond civil liberties issues, most commercial facial recognition systems exhibit biases, with false positives of African American and Asian faces 10–100 times more frequent than those of Caucasian faces (Buolamwini and Gebru 2018; Grother et al. 2019).

Governments use face surveillance technology to automatically identify an individual from a photo they have by scanning vast databases of labeled images (e.g., driver’s licenses) to find the faceprint matching the photo. For tracking, they use the technology once they know a person’s identity but want to track that person in real time and retroactively. Authorities use networks of surveillance cameras for tracking, and automation software builds records of everyone’s movements, habits, and associations. This is how China surveils ethnic minorities (Andersen 2020; Mitchell and Diamond 2018) and Russia monitors protests (Dixon 2021). Finally, “emotion detection” technology claims to read emotions based on a person’s facial expression in photos and videos. Amazon and Microsoft advertise “emotion analysis” as one of their facial recognition products.

There is an increasing normalization of K-12 schools’ FIT use as thousands now employ video surveillance justified by the promise of protecting young people and checking attendance (Andrejevic and Selwyn 2019; Simonite and Barber 2019). Schools serving primarily students of color are more likely to rely on more intense surveillance measures than other schools (Nance 2016). The Electronic Frontier Foundation argues that schools must stop using these invasive technologies (Wang and Gebhart 2020).

Empirical research is needed on how PreK-12 learners experience FITs. Do algorithmic biases lead to inaccuracies when FITs are used to recognize youth, and do these inaccuracies vary by gender, race, and ethnicity at different ages? We should examine what K-12 learners understand about FITs and how their parents engage with the presence of FITs in their child’s learning environments. How are decisions made to embed them in school environments, and with what accountability to parents and to local, state, and federal data privacy laws? Such information would help inform researchers and policymakers of what learners do and don’t know about FIT and privacy and how parents and educators might deal with their uses in education.

What are the generational differences in normalized acceptance of facial recognition technology as students adopt more social media platforms—Facebook, Instagram, Snapchat, and TikTok? Many parents upload their children’s pictures to social media since birth—how does growing up with social media affect the new generation’s perception of FITs and attitudes toward digital privacy?

We have many urgent questions: How can we center children and their rights in this reality? What legal protections exist for PreK-12 learners regarding video surveillance FITs? How can educators ensure student data security? What do teachers, parents, school administrators, and learners understand about these safeguards? How often is PreK-12 FIT used illicitly, how often is it challenged by teachers, parents, and children, and with what consequences?

What curricula are needed to advance informed action by parents, teachers, and school leaders concerning FITs in children’s everyday lives? We need to learn what they understand about two risks of FIT: “false positives,” when the technology reports it has identified a specific person but in fact has not, and “false negatives,” when the technology fails to find a person who is in fact present in the video scene. How do stakeholders weigh data privacy risks and the greater error-prone nature of FIT algorithms for Black and Asian faces against their purported security benefits? What concepts and models should students be learning to understand their technologically rich and privacy-poor world of FITs?
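To make the two error types concrete, the following is a minimal Python sketch of how false positive and false negative rates could be tallied separately for each demographic group. The group labels, the record layout, and every value in `records` are invented for illustration; they are assumptions, not data from any study cited in this chapter, and the function name `error_rates` is hypothetical.

```python
from collections import defaultdict

# Hypothetical audit records: (group, person_truly_present, system_flagged_match).
# All values are invented for illustration and are not real measurements.
records = [
    ("group_a", False, False), ("group_a", False, False),
    ("group_a", False, False), ("group_a", False, True),
    ("group_a", True, True), ("group_a", True, True),
    ("group_a", True, True), ("group_a", True, False),
    ("group_b", False, True), ("group_b", False, True),
    ("group_b", False, False), ("group_b", False, False),
    ("group_b", True, True), ("group_b", True, True),
    ("group_b", True, True), ("group_b", True, True),
]


def error_rates(records):
    """Return false positive and false negative rates for each group."""
    counts = defaultdict(lambda: {"pos": 0, "neg": 0, "fp": 0, "fn": 0})
    for group, present, flagged in records:
        c = counts[group]
        if present:
            c["pos"] += 1
            if not flagged:
                c["fn"] += 1  # person was present but the system missed them
        else:
            c["neg"] += 1
            if flagged:
                c["fp"] += 1  # system reported a match that was not there
    rates = {}
    for group, c in counts.items():
        rates[group] = {
            "false_positive_rate": c["fp"] / c["neg"] if c["neg"] else None,
            "false_negative_rate": c["fn"] / c["pos"] if c["pos"] else None,
        }
    return rates


rates = error_rates(records)
for group, r in sorted(rates.items()):
    print(group, r)
```

In this invented data set the two groups have the same overall number of errors, yet the errors are of different kinds. Reporting the rates per group, rather than a single overall accuracy figure, is what makes this kind of disparity visible to parents, educators, and students.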

2.3 Automated Speech Recognition

Automated speech recognition is the capability of natural language processing (NLP) software to “understand” human language. Millions use voice recognition systems like Alexa, Siri, Assistant, or Cortana, which hear their voices, process their language, and act based on its query content—finding information online, playing music, making purchases, or controlling lighting/heating. NLP capabilities expand accessibility for people with visual impairments, but as always-on components of home and mobile communication infrastructure, they raise serious data privacy questions for their influences on human development and society.

How are children/adults using virtual personal assistants explicitly for learning purposes and to what effects? Are youth as automated-speech-recognition natives learning differently than youth in the past and with what consequences? There are two competing developmental hypotheses on prospects for and effects of conversational AI. The first, advanced by child psychologists, holds that interactions with smart speakers are too superficial to teach children complex interactions like speech (Hirsh-Pasek, quoted in Kelly, 2018). The second hypothesis is more optimistic: Siri’s co-creator Tom Gruber (Markoff and Gruber 2019) suggests conversational AI has potential to teach students skills like reading, as computers may outperform humans because of their ability to learn exponentially with pattern recognition. Which skills and topics AI conversation systems will be good at “teaching” students remains unexamined. What are their benefits and limitations? Will children be more likely to share their goals, feelings, or learning progress with an AI humanoid tutor than with human teachers? What roles might such tutors positively play in education for K-12 learners?

Smart AI speakers transform how people access and interact with information. Children are becoming accustomed to receiving answers immediately when asking Siri or Alexa questions. Greenfield (2017) argues making search frictionless could “short-circuit the process of reflection that stands between one’s recognition of a desire and its fulfillment via the market.” What will be the developmental consequences of the bots’ displacement of unmediated processes of trial and error and reflection for children to learn to solve problems on their own?

Biased algorithms are concerning: Koenecke et al. (2020) found racial disparities in automated speech recognition for Black and White speakers. Given such algorithmic biases in speech recognition, what inequities in access to technology for supporting human activities will be perpetuated or even amplified, especially for people with intersectional identities, such as people of color who rely on speech recognition technology for learning accommodations in educational settings?

Children’s speech is hard to recognize accurately. Speech recognition systems struggle with the sentences young learners produce, and a youngster’s breaks in speech, pauses, and filler words may decrease accuracy further. A child’s frustration when an agent doesn’t recognize their questions may well increase their cognitive load. Children’s frequent use of agents may also affect their language development, including semantics, syntax, pragmatics, and prosody. It is also worth investigating how hybrid language learning environments, which mix adult speech to children with adult speech to agents, influence children’s language learning. For adult learners, we need to study how well speech recognition systems perform on different accents, speech styles from different cultures, and colloquial speech. For people of all ages, biases in recognition over time may condition learners to modify their speech style, accent, and behavior to match what makes the recognition system work. If true, this adaptation could create a stereotype threat-like effect where learners are forced to modify behavior to fit into a “normal” defined by the dominance of Western White speaker data used in these speech recognition systems.

Since today’s conversational AI does not allow for the creative and flexible dialogues normally practiced by children and adults, youth may become less likely to question and explore ideas outside what these systems have been programmed to handle. As students more frequently use built-in speech recognition features of Google Docs to write their assignments by speaking, we wonder how their writing may be transformed. Literacy scholarship by Ong and McLuhan centers the ways “technologizing of the word” leads to interior transformations of consciousness, not only serving as exterior aids. It is worth asking how oppressive societies will control what kinds of answers are provided to questions doubting national authority. Differential access to such technologies by citizens of different nations may affect society and democracy at large.

Future conversational agents will include intelligent robotic assistants that perform physical tasks to improve quality of life and increase accessibility for many populations (e.g., the aged, students with special learning needs). The hope is that equity in access to and utility of such tools can be promoted, and algorithmic biases avoided, as these assistants become ubiquitous in schools and homes with diverse language practices.

Everyone needs to know about the safeguards that exist for data privacy when using these systems. Yet we know too little about how their users think about trade-offs between the convenient “frictionless” interactions which Weiser (1991) called “calm computing” and privacy-related drawbacks like ads, government surveillance, and hackers. Woven so effectively into the social fabric, the processes and effects of oppression become normalized, thus making it difficult to step outside of the system to discern how it operates (Adams et al. 2016). As speech recognition systems become embedded in smart toys for kids, research is needed into how children and parents navigate the ethical, trust, and safety issues in monitoring and recording interactions (McStay and Rosner 2021).

2.4 Social Media Mining

Social media are Internet-based apps for creating and exchanging user-generated content (Kaplan and Haenlein 2010 p. 61)—social networking, blogging, news aggregation, photo and video sharing, livecasting, social gaming, and instant messaging. Social media mining represents, analyzes, and extracts actionable patterns from social media data (Zafarani et al. 2014). Social media data mining analyzes user-generated content with rich social relationship information. Social media dissolve boundaries between physical and digital worlds when social media mining researchers integrate social theories with computational methods to study how individuals (“social atoms”) interact and how communities (“social molecules”) form.

We begin by asking about critical consciousness: What do adults of different demographic profiles know about the powers corporations and governments have in making possible and regulating the conditions of their social media usage and associated data mining? What is the relationship between their social media behaviors and their beliefs about epistemic inequality, i.e., “unequal access to learning imposed by hidden mechanisms of information capture, production, analysis, and control” (Zuboff 2020b, p. 175)? How does this vary depending on sociocultural contexts and norms? With the emergence of legislation and regulations such as Europe’s (EU, 2018) GDPR and California’s Consumer Privacy Act (2018: CCPA), we need to know if adults are aware of their newly granted extensive data privacy rights (the rights to know, delete, opt out, and nondiscrimination). How are they appropriately informed of these rights in ways supporting their agency—are learning resources available not requiring reading impenetrable legalese?

Social media is now a huge part of adolescent students’ culture. How could the varied ways they learn, interact, and do things participating in online communities be leveraged for meeting the educational needs of all students? It is important to study how youth are weighing the pros of making social connections, expressing themselves and developing their online identity against the cons of being surveilled, profiled, and controlled. We ask what types of sense-making discussions youth have around ads or “news” on social media they are presented with based on their data-aggregated profiles and how many modify their privacy settings.

We know too little about the consequences arising for children’s social life and learning ecologies as social platforms connect preadolescent children from 4 to 13 years old to other children and families. Facebook’s Messenger Kids is a parent-controlled kids’ version for those under 13 who cannot have Facebook accounts but want to chat with friends and family. In 2019, the FTC fined TikTok, which is popular with children, $5.7 million for violating a children’s privacy law by allowing children under 13 to sign up without parental consent. TikTok made compliance changes allowing parents to set time limits, filter mature content, and disable direct messaging for kids’ accounts.

It is important that educational researchers and learning technology designers leverage these social media tools to further personalized learning while continuing to press the important questions about surveillance and privacy. It remains to be determined what parent education is needed for protecting children’s personal data and supporting their critically informed social media uses. The California Consumer Privacy Act of 2018 requires opt-in consent for the sale of personal information of children under 16, with consent given by a parent or guardian for children under 13. The policy presumes that parents will prioritize the child’s privacy, but it is also frequently the case that parents themselves are uploading the child’s personal information to social media.

Learning researchers should examine what is being learned from experiences crafting and implementing K-12 curricula on social media use, Internet economics, and data privacy rights, whether these are deployed in computer science education, civics, or humanities. We wonder how these lessons transform youth learning ecology and social media practices and influence their civic engagement and democratic participation. Questions of social media mining and the future of data use are intertwined with economic system design and regulation. Congress (thus, we the people) could play a role in regulating the social media industry and its deployment of AI technologies following ethical guidelines. Policy research and development needs to define the best options for sustainable, equitable, and democratic economic models for the Internet’s social media moving forward and the associated legislation needed to achieve those models.

3 Call to Action

Free speech and assembly are rights guaranteed to US citizens under the First Amendment but are likely compromised when our networked world makes it difficult for people to avoid broadcasting spatiotemporal histories of where in the world they are with their faces, voices, spatial locations, and social media postings. We must ask about what consequences these constraints will have on human development and learning and what technology choices and political actions people should be making today to protect their privacy. All these questions indicate the need for greater attention, among educational researchers, policymakers, and education stakeholders, to vigilant enactment of the guidelines for ethical AI use in education, as discussed by Kousa and Niemi (this volume).

3.1 Research

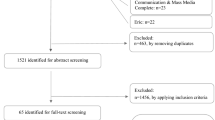

We need an agenda of research priorities for educational research and learning technology design which addresses these vital issues. A first step is to investigate systematically and empirically how pervasive these four surveillance technologies have become in school buildings and on campuses.

We must ask what effective strategies exist for overcoming the widespread sense of disempowerment and the willingness to compromise by surrendering one’s online data. We conjecture that adults and adolescents may be less complicit in the surveillance industry capturing so much personal information if they could see all the personal inferences that can be made from data captured from their behaviors. By analogy to the arguments for social distancing during the COVID-19 pandemic, might community-based action pushing back on surveillance capitalism be motivated because in doing so we would be caring for the most vulnerable in our communities? We need productive ways for adults and K-12 learners to acquire effective strategies to combat digital surveillance and to maintain their Internet privacy.

3.2 Policy and Law

Federal laws and guidelines protect pre-college students’ data privacy rights. The Federal Trade Commission (FTC), the federal agency that enforces antitrust laws and protects consumers, enforces COPPA (Children’s Online Privacy Protection Act), which requires companies collecting online personal information from children under 13 to provide notice of their data collection and use practices and obtain verifiable parental consent. Schools can consent on behalf of parents to collection of student personal information, but only if such information is used for a school-authorized educational purpose and for no other commercial purpose. The FTC advises that edtech services should review the Family Educational Rights and Privacy Act (FERPA) and the Protection of Pupil Rights Amendment (PPRA), both administered by the US Department of Education’s Student Privacy Policy Office (SPPO), as well as any state laws protecting preK-12 students’ privacy. The US Department of Education has provided new information on FERPA and virtual learning. In these regulations, we see the intersection of legal, commercial, and schooling issues.

Momentous impacts on society seem inevitable with the increasing embeddedness of facial recognition, voice recognition, location tracking, and social media mining. Society should establish and enforce ethical guidelines for AI systems that collect and analyze digital records of human faces, voices, spatial locations, and social relations, given the advent of AI-enhanced systems which identify us by those media and sell ads based on inferences predicting our behaviors. Arguably, these technical achievements have created benefits for consumers and citizens. But they’ve also raised difficult questions about personal rights and discriminatory algorithmic biases. Protecting individual freedoms and maintaining a healthy democracy are priorities.

The United States has no federal laws or regulations governing the sale, acquisition, use, or misuse of face surveillance technology by the government. At the local level, as of mid-2021, there were municipal bans in San Francisco and Oakland, California; in Boston, Somerville, Brookline, and Cambridge, Massachusetts; and in Portland, Oregon. In 2020, Facebook agreed to pay $550 million to settle an Illinois class action lawsuit over its FIT use. In 2019, as part of a $5 billion FTC settlement over privacy violations, Facebook agreed to provide “clear and conspicuous notice” about its face matching software and to get additional permission from people before using it for new purposes. We must also seek legal protections against discriminatory uses of inaccurate FIT and speech recognition technologies for minoritized groups.

3.3 Practice

The practice of education by teachers, school leaders, and parents must seek to protect the digital privacy rights of children as they participate in the learning environments of their daily lives. Schools need to prepare school personnel, so they learn about the data sharing that they are (probably unknowingly) asking students and parents to participate in. Perhaps digital privacy health checkups should be a regular educational service for both adults and children.

Another issue is how academic and industrial researchers deal with the ethics of developing face recognition technologies, location tracking, automated speech recognition, and social media mining that can have widespread detrimental effects. Ethics and privacy considerations are commonly afterthoughts in technology development and, even then, described as a nuisance and as stifling innovation. However difficult to develop, foresight on future consequences of a technology capability should be built into training and R&D processes with transparency and accountability.

4 Conclusion

In this chapter, we introduced the capabilities of four core surveillance technologies, each becoming interwoven into the fabric of universities and preK-12 schools: location tracking, facial identification, automated speech recognition, and social media mining. Such ubiquitous AI, embodied in cloud computing web services and in sensors in phones and the physical world, is becoming infrastructural to cultural practices and is creating a surveillance society. It is therefore essential for education stakeholders, from policymakers to school leaders, teachers, parents, legislators, regulators, and industry itself, to tackle together the ethical issues of AI in education which these surveillance technologies foreground. We sketched challenges around how these technologies may be reshaping human development, risks of algorithmic biases and access inequities, and the need for learners’ critical consciousness concerning their data privacy.

Although ethical guidelines for education as a context of AI application are mainly lacking (Holmes et al. 2021), we may find utility for education’s issues with these four AI-enabled surveillance technologies in the five principles for ethical use of AI synthesized by Morley et al. (2020) and discussed in Kousa and Niemi’s chapter (this volume). Recall that these five complementary aspirational principles are beneficence, non-maleficence, autonomy, justice, and explicability.

AI beneficence means useful, reliable technology generously supporting the diversity of human well-being. AI non-maleficence would guarantee data security, accuracy, reliability, reproducibility, quality, and integrity. AI with human autonomy has humans free to make decisions and choices regarding AI use. AI with justice operates in a fair and transparent manner, not obstructing democracy or harming society. Explicable AI enables clear explanation and interpretation of system functioning for humans and corresponding accountability and responsibility.

We are hopeful that, with concerted collaboration of government, industry, and the public sector on these issues, continued advances in artificial intelligence will come to be a powerful aid to more equitable and just educational systems and an ingredient of engaging, innovative learning environments that serve the needs of all our diverse learners and educators.

References

Adams, M., Bell, L. A., Goodman, D. J., & Joshi, K. (Eds.). (2016). Teaching for diversity and social justice (3rd ed.). New York: Routledge.

Andersen, R. (2020, September). The Panopticon is already here. The Atlantic Monthly.

Andrejevic, M., & Selwyn, N. (2019). Facial recognition technology in schools: critical questions and concerns. Learning, Media and Technology, 1-14.

Auxier, B., Rainie, L., Anderson, M., Perrin, A., Kumar, M., & Turner, E. (2019, November). Americans and privacy: Concerned, confused and feeling lack of control over their personal information. Pew Research Center.

Belkin, D. (2020, March 5). No place to hide: Colleges track students, everywhere. Wall Street Journal.

Buolamwini, J., & Gebru, T. (2018, January). Gender shades: Intersectional accuracy disparities in commercial gender classification. In Proceedings of Machine Learning Research, 81: 1-15.

California Consumer Privacy Act of 2018 (CCPA): Fact Sheet.

Castells, M. (1996). The information age (Vol. 98). Oxford: Blackwell Publishers.

Dixon, R. (2021, April 17). Russia’s surveillance state still doesn’t match China. But Putin is racing to catch up. The Washington Post.

Economist (2018, October 24). China: Facial recognition and state control.

EFF (2020). Face recognition: Street level surveillance.

EU (2018, May 25). EU GDPR (General Data Protection Regulation).

Greenfield, A. (2017). Radical technologies: The design of everyday life. Verso Books.

Grother, P., Ngan, M., & Hanaoka, K. (2019). Face Recognition Vendor Test (FRVT) Part 3: Demographic Effects. Technical Report NISTIR 8280, NIST.

Holmes, W., Porayska-Pomsta, K., Holstein, K., Sutherland, E., Baker, T., Shum, S.B., Santos, O.C., Rodrigo, M.T., Cukurova, M., Bittencourt, I.I. and Koedinger, K.R., (2021). Ethics of AI in education: Towards a community-wide framework. International Journal of Artificial Intelligence in Education, pp.1–23.

Ip, G. (2018, November). The unintended consequences of the ‘free’ Internet. Wall Street Journal.

Jones, K. M., Asher, A., Goben, A., Perry, M. R., Salo, D., Briney, K. A., & Robertshaw, M. B. (2020). “We’re being tracked at all times”: Student perspectives of their privacy in relation to learning analytics in higher education. Journal of the Association for Information Science and Technology, 71(9), 1044-1059.

Kaplan, A. M., & Haenlein, M. (2010). Users of the world, unite! The challenges and opportunities of social media. Business Horizons, 53(1), 59–68.

Kelly, S.M. (2018, October 17). Growing up with Alexa: A child's relationship with Amazon's voice assistant. CNN Business. (https://edition.cnn.com/2018/10/16/tech/alexa-child-development/index.html)

Koenecke, A., Nam, A., Lake, E., Nudell, J., Quartey, M., Mengesha, Z., Toups, C., Rickford, J. R., Jurafsky, D., & Goel, S. (2020). Racial disparities in automated speech recognition. Proceedings of the National Academy of Sciences, 117(14), 7684–7689.

Markoff, J., & Gruber, T. (2019, January 17). A conversation about conversational AI | Tom Gruber.

McStay, A., & Rosner, G. (2021). Emotional artificial intelligence in children’s toys and devices: Ethics, governance and practical remedies. Big Data & Society, 8(1), 2053951721994877.

Metz, C. (2019, July 13). Facial recognition tech is growing stronger, thanks to your face. New York Times.

Mitchell, A., & Diamond, L. (2018, Feb 2). China’s surveillance state should scare everyone. The Atlantic Monthly.

Morley, J., Floridi, L., Kinsey, L., & Elhalal, A. (2020). From what to how: An initial review of publicly available AI ethics tools, methods, and research to translate principles into practices. Science and Engineering Ethics, 26, 2141–2168. https://doi.org/10.1007/s11948-019-00165-5

Möllers, N., Wood, D. M., & Lyon, D. (2019, March 31). Surveillance capitalism: An interview with Shoshana Zuboff. Surveillance & Society, 17(1/2), 257–266.

Nance, J. P. (2016). Student surveillance, racial inequalities, and implicit racial bias. Emory Law Journal, 66, 765.

Raviv, S. (2020, January 21). The secret history of facial recognition. Wired.

Simonite, T., & Barber, G. (2019, October 17). The delicate ethics of using facial recognition in schools. Wired.

Thompson, S. A., & Warzel, C. (2019, December 19). Twelve million phones, one dataset, zero privacy. New York Times, Privacy Project.

Wang, M., & Gebhart, G. (2020, February 27). Schools are pushing the boundaries of surveillance technologies. Electronic Frontier Foundation.

Weiser, M. (1991). The computer for the 21st Century. Scientific American, 265(3), 94-105.

Zafarani, R., Abbasi, M. A., & Liu, H. (2014). Social media mining: an introduction. Cambridge University Press.

Zuboff, S. (2019). The age of surveillance capitalism: The fight for a human future at the new frontier of power. New York: Public Affairs.

Zuboff, S. (2020, January 24). The known unknown: Surveillance capitalists control the science and the scientists, the secrets and the truth. New York Times, Sunday Review, pp. 1, 6, 7.

Zuboff, S. (2020b). Caveat usor: Surveillance capitalism as epistemic inequality. In K. Werbach (Ed.), After the digital tornado. Cambridge: Cambridge University Press.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Cite this chapter

Pea, R.D. et al. (2023). Four Surveillance Technologies Creating Challenges for Education. In: Niemi, H., Pea, R.D., Lu, Y. (eds) AI in Learning: Designing the Future. Springer, Cham. https://doi.org/10.1007/978-3-031-09687-7_19

DOI: https://doi.org/10.1007/978-3-031-09687-7_19

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-09686-0

Online ISBN: 978-3-031-09687-7

eBook Packages: Behavioral Science and Psychology (R0)