Abstract

The rapid advancement of the Internet of Things (IoT) has reshaped industrial, agricultural, and healthcare systems, and even our daily lives, as the number of IoT applications in these fields surges. Still, deploying this technology at a large scale raises numerous challenges. In a system of millions of connected devices, operating each one manually is impossible, which makes IoT platforms unmaintainable. In this study, we present our attempt to achieve autonomy of IoT infrastructure by building a platform that targets dynamic and quick Plug and Play (PnP) deployment of the system at any given location, using predefined pipelines. The platform also supports real-time data processing, which gives users reliable, real-time data visualization in a dynamic dashboard.



1 Introduction

Enterprise architectures with a large number of Internet of Things (IoT) devices impose challenging operational issues. These issues are manageable for a limited number of IoT devices, yet almost impossible to handle for many devices. We argue that adopting the Plug and Play (PnP) concept in IoT architecture offers a faster way to tackle the operational issues of IoT. IoT is a concept that connects devices to the internet and enables them to communicate with each other over it; as of recently, over 10 billion IoT devices are wirelessly connected [1, 2]. In today's IoT technology, the major requirement is an architecture capable of supporting a large number of devices while still providing reliable performance. As the number of devices grows, adding a new one to an existing platform becomes exceedingly complex; to shorten the integration phase of such systems, the concept of PnP in a smart environment was first proposed in 2006 [2]. The main objectives of a PnP architecture in IoT systems are to reduce user intervention during system integration and to obtain real-time service from the system. As an example, Helal et al. proposed a platform based on the PnP principle that enables seamless integration of devices with back-end systems without external intervention from integrators or engineers [3]. Although the concept of PnP is very appealing, the reliability of PnP-based IoT systems is the next big challenge. The reliability of a system can be evaluated by its ability to perform a required task, to perform without failures, to perform under stated conditions, and to perform for a specified period [4].

The rest of the paper is organized as follows. Section 2 reviews existing architectures, implementations of IoT platforms, and visualization techniques. In Sect. 3, we present our AMI IoT platform, giving an overview of its architecture and detailing the implementation of the different components of the PnP-based IoT architecture. Section 4 describes the pipelines that automate its deployment, and Sect. 5 presents the results and validation of our platform. Section 6 discusses the lessons learned, and we finally conclude with a summary of our platform and its uniqueness compared to other existing solutions.

2 Literature Review

The reliability of PnP in IoT systems is linked to the diverse components of the IoT architecture. Therefore, there is a need to understand the different architectural implementations for IoT platforms that cover the existing domains. In the following, we discuss the existing systems and their architectural implementations.

IoT Platforms and Architectures

Existing IoT architectures follow an evolutionary pattern in which each new architecture overcomes the major challenges of its predecessor. The initial approach was the three-layered architecture (Fig. 1.a) [5], whose limitation was that data processing happened only in the cloud layer. Then came the Fog layer architecture, which resolved the limitations of cloud computing by extending cloud-based services and bringing them closer to the IoT end-user environment. However, it still had issues on every layer, each layer being prone to specific faults and errors that lead to an overall ineffective IoT system:

* First, at the end-user layer, the sensors and/or actuators recording environmental data can have reading errors [6].
* Second, at the fog layer, the gateways face a lack of memory as well as processor overloading and overheating, which lead to serious issues in IoT systems [6, 7].
* Third, at the network layer, communication links are frequently prone to breaking, malfunctioning (e.g., an incorrect state), or unavailability of the medium [7].
* Fourth, at the cloud layer, the challenge is sustaining all the different connected gateways, at both the computation and maintenance levels.

Fig. 1. IoT (a) three-layered, (b) five-layered, and (c) Fog/IoT architectures [5]

We ran a general analysis of existing IoT platforms based on the following metrics: deployment duration, real-time capability, system reliability, the business layer (mainly the data visualization aspect), and cost. The results are as follows. Existing platforms can be classified into two major groups:

* Consumer IoT (e.g., OpenHAB [8], SmartThings [9], HomeKit [10]), and
* Enterprise IoT (e.g., AWS IoT [11], Watson IoT [12]).

This classification depends on functionality and contextual information, interoperability, scalability, security, and costs [13]. We can also categorize existing platforms into three sets:

* The first set involves a centralized hub (e.g., OpenHAB or SmartThings). Its advantage is that data processing and storage happen locally, which means fast data processing and real-time visualization along with offline capabilities. Its disadvantages include higher resource requirements and a higher cost for the entire setup. This implementation also requires a lot of user intervention to configure the system, which hinders its scalability and, in turn, does not support the PnP aspect of IoT systems.
* The second set, cloud implementations (e.g., AWS IoT, Watson IoT), largely avoids initial user involvement and requires no specific hub, which reduces cost. The major drawback is real-time performance and reliability, since the system depends on connectivity.
* The third set is the hybrid approach, which combines hub implementations with cloud services. Watson IoT, AWS IoT, and SmartThings have tried optimizing the entire platform pipeline by splitting it into local edge processing and cloud processing, but we still find issues with deployments and installations due to the complexity of configuring the environmental settings.

The other major issue is the cost of these systems, which are often not affordable for all users.

Plug and Play in IoT Platforms

To overcome the abovementioned issues, several studies have sought to bring the idea of PnP into IoT platforms. Kim et al. [14] proposed using web semantics to manage IoT devices. In their approach, all sensors and actuators are connected to Arduino boards acting as embedded IoT devices. Each Arduino can accept a configuration from a central server and adapt its functionality accordingly. They also developed a Knowledge Base that stores the configuration of all supported devices; once a new device is connected, the appropriate configuration is shared with it so that it can start collecting data or triggering actions to control the environment. As a result, they were able to reduce the complexity of deployments and make IoT environments more dynamic. However, they focused only on the perception layer and did not address the business and application layers, nor how to visualize the results dynamically.

Kim et al. [15] target a framework dynamic enough to integrate new sensors, devices, or services into an environment. They proposed a device discovery service that (1) automatically detects a device and generates a description of it and its functionalities, and (2) integrates that device seamlessly into the reasoning process without interrupting the platform or the framework.

Kesavan et al. [16] highlight scalability and a dynamic approach as essential aspects of remote patient monitoring with real-time analysis. Their proposed method focuses on the scalability of the system in accordance with the PnP approach: the gateway can handle the dynamic addition of sensors and transmits data seamlessly. Another aspect of this method is edge analytics, which informs users of the sensors' battery levels so that necessary actions can be taken when required.
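The knowledge-base idea of Kim et al. [14] can be illustrated with a minimal lookup sketch. It is not their implementation: the class names, the configuration fields (`sampling_period_s`, `pin`, `role`), and the device-type keys are hypothetical, chosen only to show how a newly connected device could receive its configuration from a central store.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceConfig:
    """Configuration pushed to an embedded node (hypothetical fields)."""
    sampling_period_s: float
    pin: int
    role: str  # "sensor" or "actuator"


class KnowledgeBase:
    """Central store mapping a device type to the configuration a node should adopt."""

    def __init__(self) -> None:
        self._configs: dict[str, DeviceConfig] = {}

    def register(self, device_type: str, config: DeviceConfig) -> None:
        self._configs[device_type] = config

    def on_device_connected(self, device_type: str) -> DeviceConfig:
        """Return the configuration to share with a newly connected device."""
        try:
            return self._configs[device_type]
        except KeyError:
            raise LookupError(f"unsupported device type: {device_type!r}") from None


if __name__ == "__main__":
    kb = KnowledgeBase()
    # Hypothetical entry: a temperature/humidity sensor wired to pin 4.
    kb.register("dht22", DeviceConfig(sampling_period_s=5.0, pin=4, role="sensor"))
    print(kb.on_device_connected("dht22"))
```

Raising on unknown types, rather than returning a default, reflects the PnP premise that only supported devices are configured automatically.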

3 AMI Platform

To address the drawbacks of the implementations described above, we built the AMI platform based on the fog-layered architecture (Fig. 1.c). The AMI platform architecture has five main layers (Fig. 2). The edge layer includes all the sensing devices, which use different communication protocols. The fog layer preprocesses the acquired data before sending it to the upper layers. The network layer handles the communication protocols and the security and privacy of the data transmitted to the cloud. The cloud layer decodes the received data, processes it into specific models, and stores it in storage hubs. Finally, the business layer visualizes the real-time data dynamically through dashboards built according to user specifications. Our platform aims to support PnP features in all of these layers, as well as in the links between them.

3.1 Architecture Overview

The AMI Platform involves a set of physical (i.e., sensing devices and computing hardware) and logical (i.e., software applications and virtual computing resources) components that collect, process, transmit, store, and analyze data. These components are layered following the Fog Computing architecture.

The physical gateway is located in the end-user environment. It represents the fog layer and is thus the bridge connecting the sensor devices in the end-user layer with the network layer. Additionally, a virtual gateway is located inside the cloud infrastructure as an extension of the network layer; it receives the data transmitted through the network layer and pushes it to cloud storage. The benefit of this separation is that the computing resources on the gateways can be used for pre-processing and for ensuring the quality of service. A common challenge with physical gateways is deploying and updating the numerous services running on them that handle communication with sensors and data acquisition. Our focus while designing and building the physical gateway was therefore to ensure the easy PnP integration of a “Pipeline” that automatically or semi-automatically handles the deployment of these services.

The data flows through the platform as follows. First, at the end-user layer, a cluster of heterogeneous sensors collects both environmental (e.g., temperature, motion, location) and biomedical (e.g., heart rate, breathing rate, sleep pattern) data. Next, the data is sent to the physical gateway for pre-processing and pushed to the cloud layer via a secure channel created by the network layer. Upon arriving at the cloud layer, the data is decoded, processed into specific formats, and stored in databases. The business layer provides the user interface and includes models for creating dynamic graphs and charts, which help adapt the data visualization to any kind of user need. We also propose a microservices-based approach to resolve the existing challenges of scalability and maintainability, in which the different functional components of the system are split into independent applications [17].

3.2 Edge Layer

In the context of IoT, the end-user layer is located in the monitored person's living environment and is considered the entry point of the platform. It consists of a set of heterogeneous sensing devices that observe key parameters of both the living environment and the person in multiple dimensions:

* Wearable sensors (e.g., Apple Watch, Garmin Watch, Fitbit Watch, Mi Bands, pulse oximeters) are worn on participants' bodies (e.g., wrist, fingers) to monitor their everyday activities. They provide various measurements, including motion (e.g., acceleration, step count, walking distance), location (e.g., latitude, longitude, altitude), and biomedical information (e.g., heart rate, heart rate variability, breathing rate, and oxygen saturation). One major advantage of wearable sensors is their portability: participants can easily carry the devices at home, during outdoor activities, or during clinical assessments. However, this type of sensor also has limitations under specific circumstances. Because the patient's expertise in advanced technologies cannot be guaranteed, ensuring proper charging, usage, and maintenance of the devices is challenging.
* To overcome these limitations, a second type of sensing device, environmental sensors (e.g., door/window, motion, temperature, illuminance, and humidity sensors), is designed to track indoor environmental parameters and patients' activities of daily living (ADL) continuously while preserving their autonomy and independence [18]. These sensors are often installed in fixed positions (e.g., on walls, doors, and windows) inside the subject's room.
* Additionally, other sensors can be placed on or connected to furniture, such as the smart mat, a highly sensitive micro-bend fiber optic sensor (MFOS) often placed on the bed (under the mattress or bed sheet) or on a chair to non-intrusively monitor location information and vital signs (e.g., heart rate (HR), breathing rate (BR), and the ballistocardiogram (BCG)). This enables the detection of sleep cycles and sleep disorders, such as apnea [19].

3.3 Fog Layer

Our implementation of the fog layer is a physical gateway that acts as a hub for multiple devices using different communication protocols. The physical gateway has the following major functionalities:

* Data acquisition: the gateway supports diverse communication protocols, e.g., Z-Wave, Insteon, Bluetooth, serial line communication, and other similar protocols. Each protocol adapter is an abstraction layer over the hardware and constantly listens to its corresponding sensor. Adapters also enable easy and reliable integration of new devices that use the same set of protocols (the PnP concept in sensor installation [20]).
* Data preprocessing: the raw data obtained from heterogeneous sensors suffers from poor interoperability, since the data format varies between sensor types, which makes data interpretation and transmission difficult. We therefore developed a data model library that enables the gateway to pre-process the data by adding semantic information and populating its values into one of the compatible formats.
* Data transfer: the processed data is then transferred from the gateway to the network layer through network protocols, for example, the Message Queuing Telemetry Transport (MQTT) messaging protocol or the Hypertext Transfer Protocol (HTTP). Our current implementation uses MQTT because of its publish/subscribe architecture, in which client devices and applications publish and subscribe to topics handled by a broker.
* Status updates: the gateway also provides system status updates. All the above-mentioned services consume system resources, so to avoid failures, system check services provide live status updates on resources such as CPU, memory, and internet connectivity.
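As an illustration of what a data model library does, the sketch below normalizes two differently shaped raw payloads into one common message and pairs it with an MQTT topic. It is a minimal sketch under stated assumptions: the raw payload shapes, the parser names, the output field names (apart from `phenomenonTime.instant` and `resultTime`, which the platform's data model uses, as described in Sect. 5.3), and the `ami/<gateway>/<sensor>/<property>` topic layout are all hypothetical.

```python
from __future__ import annotations

import json
from datetime import datetime, timezone
from typing import Any, Callable


# Hypothetical raw payload shapes, one parser per protocol adapter.
def parse_zwave(raw: dict[str, Any]) -> tuple[str, float, str]:
    # e.g. {"type": "temperature", "val": "21.5", "scale": "C"}
    return raw["type"], float(raw["val"]), raw["scale"]


def parse_ble_hr(raw: dict[str, Any]) -> tuple[str, float, str]:
    # e.g. {"hr": 72}
    return "heart_rate", float(raw["hr"]), "bpm"


PARSERS: dict[str, Callable[[dict[str, Any]], tuple[str, float, str]]] = {
    "zwave": parse_zwave,
    "ble": parse_ble_hr,
}


def normalize(protocol: str, sensor_id: str, raw: dict[str, Any],
              received_at: datetime) -> dict[str, Any]:
    """Add semantic fields and map a raw payload onto the common message format."""
    prop, value, unit = PARSERS[protocol](raw)
    return {
        "sensorId": sensor_id,
        "protocol": protocol,
        "observedProperty": prop,
        "result": value,
        "unit": unit,
        # Time the reading arrived from the sensor.
        "phenomenonTime": {"instant": received_at.isoformat()},
        # Time the message is ready to leave the gateway.
        "resultTime": datetime.now(timezone.utc).isoformat(),
    }


def to_mqtt(message: dict[str, Any], gateway_id: str) -> tuple[str, bytes]:
    """Return (topic, payload) ready to hand to an MQTT client's publish call."""
    topic = f"ami/{gateway_id}/{message['sensorId']}/{message['observedProperty']}"
    return topic, json.dumps(message).encode("utf-8")
```

Adding support for a new sensor type then means registering one more parser, which is the PnP property the adapters aim for.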

3.4 Network Layer

Sending confidential data and information over the internet is vulnerable to attacks, so it is critical to create a secure channel for connections. This is the role of the network layer, the segment between the physical gateway (at the edge of the end-user layer) and the virtual gateway (at the edge of the cloud layer). Considerable effort is put into securing the network connection:

* First, a virtual network is created to establish a secure peer-to-peer channel between the two gateways, adding an extra layer of security to our communication protocol. The advantages of this software-based network are efficiency and quick adaptability, since it reuses the existing internet connection instead of setting up a new one. In our platform, we use OpenVPN to implement this secure channel. OpenVPN is an open-source virtual private network (VPN) system that creates routed or bridged connections between entities. An OpenVPN server automatically issues certificates for our physical and virtual gateways, triggered by their respective deployment pipelines, and stores them in a secure storage hub. This approach limits the risks of transporting certificates and keys from one entity to another.
* Second, firewall rules are enforced that allow only a small number of essential connections and block all others.

All communication between the physical gateways and the virtual gateway goes through this channel, securing the confidential data transmitted between them.
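The default-deny policy of the firewall measure can be expressed as a small allowlist check. This Python sketch only models the decision logic; the real rules would be enforced by the host firewall. The VPN subnet `10.8.0.0/24` (the subnet in OpenVPN's sample server configuration) and the allowed ports (UDP 1194, OpenVPN's default, and TCP 1883, MQTT's registered port) are assumptions, not the platform's documented values.

```python
from __future__ import annotations

from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class AllowRule:
    source: str  # CIDR block allowed to connect
    port: int
    proto: str   # "tcp" or "udp"


# Hypothetical allowlist: everything not listed here is dropped.
ALLOWLIST = (
    AllowRule("0.0.0.0/0", 1194, "udp"),    # OpenVPN tunnel endpoint
    AllowRule("10.8.0.0/24", 1883, "tcp"),  # MQTT, reachable only from VPN peers
)


def is_allowed(src: str, port: int, proto: str,
               rules: tuple[AllowRule, ...] = ALLOWLIST) -> bool:
    """Default deny: accept a connection only if some rule matches it exactly."""
    addr = ip_address(src)
    return any(
        addr in ip_network(rule.source) and rule.port == port and rule.proto == proto
        for rule in rules
    )
```

Restricting the data port to the VPN subnet means a device outside the tunnel cannot reach the broker even if it knows the server's address.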

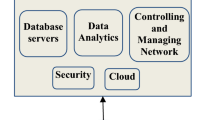

3.5 Cloud Layer

The cloud layer is responsible for the management and storage of the collected data. Given the essential need for real-time monitoring, the cloud infrastructure must have minimal delay and downtime to allow reliable, real-time visualization and analysis. Hence, an agent-based microservices approach is adopted on the cloud layer. Each component of the cloud layer is containerized with Docker, which allows components to be deployed anywhere without having to worry about the specifics of the underlying platform.

Virtual Gateway:

As the contact point of the cloud layer, the virtual gateway is created from a pre-built Docker image and plays a crucial part in the platform. It connects with its peer physical gateway through the VPN in the network layer, receives data over the configured communication protocols (e.g., MQTT, REST), and reconstructs each data message using a mapping function to meet the requirements of the data storage. However, keeping up with the rapid evolution of sensors' data models and with numerous physical gateway deployments would require manual configuration and intervention before every deployment and after every update. This challenge led us to build the gateway so that it integrates seamlessly, in a PnP manner, with a pipeline that automates its deployments and updates.
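A mapping function of the kind described above might look like the following minimal sketch. The incoming field names, the per-property, per-day index naming scheme, and the output document layout are hypothetical; the platform's actual storage model is not specified here.

```python
from __future__ import annotations

from datetime import datetime
from typing import Any


def map_to_storage(msg: dict[str, Any], gateway_id: str) -> tuple[str, dict[str, Any]]:
    """Reshape one gateway message into (target index, storage document).

    Assumes (hypothetically) that messages carry sensorId, observedProperty,
    result, unit, phenomenonTime.instant and resultTime, with ISO 8601 times.
    """
    observed_at = datetime.fromisoformat(msg["phenomenonTime"]["instant"])
    # One index per property and day; Elasticsearch index names must be lowercase.
    index = f"ami-{msg['observedProperty']}-{observed_at:%Y.%m.%d}".lower()
    document = {
        "@timestamp": observed_at.isoformat(),
        "gateway": gateway_id,
        "sensor": msg["sensorId"],
        "property": msg["observedProperty"],
        "value": msg["result"],
        "unit": msg["unit"],
        "resultTime": msg["resultTime"],
    }
    return index, document
```

Keeping this reshaping in one pure function means a change in a sensor's data model touches only the mapping, not the transport or the storage client.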

Data Storage:

IoT data representation and storage is an ever-changing and expanding field. With this in mind, we opted for a non-relational database, more specifically a document-based one. The advantages are: 1) the dynamicity and flexibility of document-based databases, which is particularly useful for our platform because the data generated by IoT devices evolves over time, and which eliminates the time required to adapt or update the structure of tables or other entities to insert new fields or data models; and 2) the horizontal scalability of non-relational databases, as opposed to the vertical scalability of relational databases [21]. As a consequence, keeping up with an increase in either data volume or velocity is only a matter of adding more nodes to the database cluster. This architecture also ensures higher availability because, if any node fails, its functions are delegated to other nodes in the cluster. Our choice of data storage is Elasticsearch, an open-source distributed search and analytics engine at the center of the Elastic Stack [22]. It supports various commonly used data types, including numerical, textual, keyword, state, and multi-dimensional data.
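Document-oriented storage lets observations with different fields be written side by side; with Elasticsearch's default dynamic mapping, new fields need no schema migration. The sketch below builds a request body for Elasticsearch's Bulk API, which expects newline-delimited JSON: an action line followed by a source line for each document, with a trailing newline. It only builds the body; sending it (an HTTP POST to the cluster's `_bulk` endpoint with `Content-Type: application/x-ndjson`) is not shown, and the index name and documents are hypothetical.

```python
from __future__ import annotations

import json
from typing import Any, Iterable


def bulk_body(index: str, docs: Iterable[dict[str, Any]]) -> str:
    """Serialize documents as an NDJSON body for the Elasticsearch Bulk API."""
    lines: list[str] = []
    for doc in docs:
        lines.append(json.dumps({"index": {"_index": index}}))  # action metadata
        lines.append(json.dumps(doc))                            # document source
    # The Bulk API requires the body to end with a newline.
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Two observations with different fields share one index.
    print(bulk_body("ami-observations", [
        {"sensor": "mat-1", "heart_rate": 64, "breathing_rate": 14},
        {"sensor": "door-3", "state": "open"},
    ]), end="")
```

Batching many observations per request is also what makes high ingest rates practical, since each HTTP round trip carries a whole batch.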

3.6 Business Layer

Data Visualization: Environmental and wearable sensors acquire a massive amount of data, e.g., on average 1.2 to 1.8 million entries per day during our first deployment. With such an amount of data constantly generated, it is crucial to develop an appropriate visualization tool that presents the collected information in real time to better assist end-users. However, the variety of needs, roles, and metrics across applications, along with changes in needs over time, makes it difficult for a single dashboard to fit all needs. Our adaptive solution enables even users without technical knowledge to create a dashboard adapted to their needs in minimal time.
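For scale, the daily volume reported above corresponds to the following average ingest rate, assuming entries are spread evenly over the 86,400 s of a day (real traffic is burstier):

```latex
\[
\frac{1.2\times 10^{6}\ \text{entries}}{86\,400\ \text{s}} \approx 13.9\ \text{entries/s},
\qquad
\frac{1.8\times 10^{6}\ \text{entries}}{86\,400\ \text{s}} \approx 20.8\ \text{entries/s}.
\]
```

A dashboard refreshed once per second would therefore receive roughly 14 to 21 new entries per refresh on average, summed across all sensors.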

In our architecture, a unique approach is taken to ensure PnP by visualizing data adaptively (Fig. 3). We chose web-based technologies to build our system because of their accessibility: unlike traditional software tied to a specific system, web applications are reachable from multiple platforms, including desktops, mobile phones, tablets, and smart TVs. Through these web-based solutions, we enable users to dynamically define the environment and add sensors to it. Once the environment model is defined, users can design the dashboard based on their needs, and the dashboard is adapted to the environment automatically. This system consists of three major components:


1) Chart Template Designer: empowers users to dynamically design charts and save them as templates specific to each sensor type. The first step in designing a chart is to retrieve the required data from the data source: users choose the data source and, with a query builder tool, visually define criteria to filter the fetched data. Once the user finalizes the chart design, its configuration is stored in our data storage, and the defined query is parsed to accept parameters so that the designed chart can be linked to external parameters. Thanks to this chart template concept, when a request is sent to view a chart, the chart configuration is loaded along with the other required information. The system thus gives users the flexibility to reuse designed charts in many places by only passing the required parameters (e.g., a device id).
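Parameterizing a saved query so that one chart template can be reused for any device might be sketched as follows. The `${device_id}` placeholder syntax (borrowed from Python's `string.Template`), the template fields, and the Elasticsearch-style query are assumptions made for illustration; the designer's actual storage format is not described here.

```python
from __future__ import annotations

from string import Template
from typing import Any

# Hypothetical stored chart template: the filter references a ${device_id} placeholder.
TEMPERATURE_CHART = {
    "title": "Temperature (last 24 h)",
    "type": "line",
    "query": {
        "bool": {
            "filter": [
                {"term": {"sensor": "${device_id}"}},
                {"range": {"@timestamp": {"gte": "now-24h"}}},
            ]
        }
    },
}


def bind(node: Any, params: dict[str, str]) -> Any:
    """Return a copy of a query tree with ${name} placeholders substituted.

    Raises KeyError if a placeholder has no value, so a chart is never
    rendered with an unbound filter.
    """
    if isinstance(node, str):
        return Template(node).substitute(params)
    if isinstance(node, dict):
        return {key: bind(value, params) for key, value in node.items()}
    if isinstance(node, list):
        return [bind(item, params) for item in node]
    return node


def render_chart(template: dict[str, Any], **params: str) -> dict[str, Any]:
    """Bind a stored template to concrete parameters (e.g. a device id)."""
    return bind(template, params)
```

Because `bind` builds a new structure, the stored template stays untouched and can be rendered again for another device.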


2) Environment Configuration Tool: enables non-technical users to create a 3D model of the deployment environment, input the address and occupants, and place items, furniture, and predefined sensors using simple drag-and-drop actions (Fig. 4). The output of this component is used by the Dashboard Designer and the Reasoner Engine to generate the intended design and knowledge for each space in the environment. We define a “Space” in our platform as a group of sensors associated with a place. The tool outputs a number of “Spaces,” each of which includes several sensors and descriptive information about the area. This form of output enables all other consuming components to adapt themselves to the different Spaces.
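The “Space” concept can be pictured as a small data model. The sketch below is hypothetical: the class and field names are illustrative, not the tool's actual output schema, which is not given here.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class Sensor:
    sensor_id: str
    kind: str  # e.g. "motion", "door", "temperature"


@dataclass
class Space:
    """A group of sensors associated with a place."""
    name: str
    description: str = ""
    sensors: list[Sensor] = field(default_factory=list)

    def sensors_of_kind(self, kind: str) -> list[Sensor]:
        return [s for s in self.sensors if s.kind == kind]


@dataclass
class Environment:
    """Hypothetical output of the configuration tool for one deployment."""
    address: str
    occupants: list[str]
    spaces: list[Space]

    def to_json(self) -> str:
        # asdict() recurses into the nested Space and Sensor dataclasses.
        return json.dumps(asdict(self))
```

Consumers such as a dashboard engine only need `Space.sensors_of_kind` to find what a given chart can display in each area.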


3) Dashboard Designer: allows users to dynamically design their desired pages according to their needs. The designer combines the configuration tool's data with the designed chart templates to visualize sensor information on the pages automatically. Users can drag and drop the necessary components onto a page and easily move and resize elements to achieve the desired design. A rich set of components is available in the designer, enabling users to add and customize pages and link them to environmental sensors. Once the design is finished, it is applied to all the “Spaces” received from the configuration tool, and the data sources of the elements on each page are adapted to the related environment automatically. For example, a user may design a chart visualizing room temperature for one room; the dashboard engine then adapts the chart to the other rooms provided by the configuration tool, replacing all the required parameters to visualize the data related to each room. Another layer of flexibility is provided by allowing users to customize elements for one specific environment, so that they can fully adjust the design and deliver the data collected from sensors instantly to the intended users. To manage access to designed pages, we built an integrated authentication system based on JSON Web Tokens (JWTs); each token contains information about the user and their assigned roles. Once a user logs into the system, the token is automatically added to their requests, which allows the server to authenticate them. This authentication service is integrated with our designer so that specific groups of users can be granted access to certain pages.
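The adaptation step, in which one page design is applied to every Space and its chart parameters are rebound per room, might be sketched as follows. Spaces are plain dictionaries here so the block stands alone; the widget fields, the matching-by-sensor-kind rule, and the override mechanism are assumptions for illustration.

```python
from __future__ import annotations

from typing import Any, Optional

# Hypothetical page design: each widget names a chart template and the sensor kind it needs.
ROOM_PAGE = {
    "title": "Room overview",
    "widgets": [
        {"template": "temperature-24h", "sensor_kind": "temperature"},
        {"template": "motion-events", "sensor_kind": "motion"},
    ],
}


def adapt_page(page: dict[str, Any], spaces: list[dict[str, Any]],
               overrides: Optional[dict[str, dict[str, Any]]] = None) -> dict[str, dict[str, Any]]:
    """Instantiate one page per space, binding each widget to that space's sensors.

    Widgets whose sensor kind is absent from a space are skipped; per-space
    overrides (e.g. a custom title) are applied last.
    """
    overrides = overrides or {}
    pages: dict[str, dict[str, Any]] = {}
    for space in spaces:
        widgets = [
            {"template": w["template"], "params": {"device_id": s["id"]}}
            for w in page["widgets"]
            for s in space["sensors"]
            if s["kind"] == w["sensor_kind"]
        ]
        pages[space["name"]] = {
            "title": f"{page['title']}: {space['name']}",
            "widgets": widgets,
            **overrides.get(space["name"], {}),
        }
    return pages
```

The override dictionary corresponds to the per-environment customization layer: the generic design is generated first, then selectively replaced.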

4 Pipelines

To speed up the PnP deployment and operation of the system, we have created a set of pipelines that automate the deployment of all services on every layer of the Fog Computing architecture. It is a three-step process that starts with a sensor-gateway connection and ends with a running, real-time dashboard.

4.1 Gateway

The gateway, acting as the hub, can handle multiple sensors simultaneously. To install the services that provide the functionalities described in the previous section, we use Ansible to automate the process. The Ansible scripts are divided into three sections. The first group of scripts installs all the dependencies that our services need to run properly on the system; these scripts also set up the firewall rules and take care of other security aspects. The second section contains the installation scripts for all the services provided by our platform, covering everything from cloning the repositories to granting them the execution rights to run as system services. The third section is the self-healing installation phase: it installs services that monitor the system resources and report back any abnormalities. This approach significantly reduces user complexity and errors in the installation phase. Once the installation is done, the gateway nodes are ready to plug and play for any deployment. This installation phase of the deployment process takes less than thirty minutes. The gateway nodes also have OpenVPN installed during this process, which gives them a secure connection to the servers they communicate with.

4.2 Server

The server pipeline consists of two main steps, both also automated with a script that requires only the identifier of the gateway being deployed, for an easy PnP solution. Each step corresponds to a layer of the Fog Computing architecture. The first step configures the network layer: it registers the gateway certificates in the VPN server and then sets up firewall rules that only accommodate the ports used by our services, protecting the connection between the server and the gateway by filtering and blocking all other traffic to prevent unauthorized access. The second step configures the node microservice responsible for receiving data and pushing it into our storage hub. Because we support multiple communication protocols, the microservice must be configured for a specific protocol before its deployment. Once the deployment script finishes, the gateway can connect to our VPN server and start sending the data collected from the sensors and actuators to our node microservice, which then pushes it to our data store.
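Because the script needs only the gateway identifier, every setting the two steps consume can be derived deterministically from it. The following sketch shows that derivation; the naming conventions, the identifier format, and the port map (1883 is MQTT's registered port, 8080 merely a common choice for HTTP services) are hypothetical, and the actual certificate registration and container start-up are not shown.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Hypothetical protocol-to-port map for the node microservice.
PROTOCOL_PORTS = {"mqtt": 1883, "http": 8080}
# Hypothetical identifier format: lowercase letters, digits and dashes.
GATEWAY_ID = re.compile(r"[a-z0-9][a-z0-9-]{1,62}")


@dataclass(frozen=True)
class ServerPlan:
    gateway_id: str
    vpn_common_name: str             # step 1: name the gateway certificate is registered under
    firewall_ports: tuple[int, ...]  # step 1: the only ports opened for this gateway
    protocol: str                    # step 2: protocol the node microservice is configured for
    service_name: str                # step 2: name of the node microservice instance


def plan_server_deployment(gateway_id: str, protocol: str = "mqtt") -> ServerPlan:
    """Derive every server-side setting from the gateway identifier alone."""
    if not GATEWAY_ID.fullmatch(gateway_id):
        raise ValueError(f"invalid gateway identifier: {gateway_id!r}")
    if protocol not in PROTOCOL_PORTS:
        raise ValueError(f"unsupported protocol: {protocol!r}")
    return ServerPlan(
        gateway_id=gateway_id,
        vpn_common_name=f"gateway-{gateway_id}",
        firewall_ports=(PROTOCOL_PORTS[protocol],),
        protocol=protocol,
        service_name=f"node-{gateway_id}-{protocol}",
    )
```

Validating the identifier and protocol before any step runs means a typo fails fast instead of leaving a half-configured VPN entry or firewall rule behind.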

4.3 Dashboard

This step completes the IoT cycle by offering a plug-and-play solution for designing a dashboard that monitors the collected data in real time. The first step is to design the environment in our 3D configuration tool: we create a 3D model of the space where the gateway has been deployed, and the tool communicates with the gateway to collect the list of sensors and actuators deployed in the environment. Next, we feed the generated environment model to our Dashboard Designer, which automatically generates a view for all the spaces in the environment and the devices deployed in each space. The user retains full flexibility to design the required pages.

5 Results and Analysis/Validation

We validated these architectural metrics by deploying our platform for a project conducted over six months at an apartment in AMI. The main goal of the project was to monitor behavior changes in a person based on the type of medication received at different stages of a medical intervention. For the platform analysis, the metrics we targeted in this deployment are as follows:

5.1 Fast Deployment

We deployed the platform in an apartment to monitor the behavior of a person and to record changes as the medication provided to that person changed. In this deployment, we installed two nodes (Raspberry Pis), five door sensors, and seven motion sensors, along with a sleep mat. The deployment followed these steps: we first scouted the area to better understand the environment; we then connected our nodes to the power outlets and configured them with the network available in the apartment; once the nodes were connected to the network, we attached the sensors at different places in the apartment, such as walls and doors; finally, with the setup complete, we checked the system performance and the data being transmitted to our servers. The entire deployment, from attaching the sensors to the walls and doors of every bedroom, the kitchen, and the living room to setting up the nodes on the network, took us less than two hours.

5.2 System Reliability

The key challenge in any IoT system is the reliability of the platform. Reliability in this context refers to system failures and to how effectively the platform delivers the agreed-upon services despite various technical and non-technical challenges. Technical challenges concern system resources: CPU and memory capacity may be exhausted or overflow over time, resulting in an unreliable system. Non-technical challenges include internet failures; offline capability remains a major challenge in the development of any IoT system today. During our deployment, we received status reports on our system (Fig. 5) highlighting warnings related to CPU usage, physical memory usage, internet connectivity, and service resource consumption.
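The kind of check behind such warnings can be sketched as a pure function over a resource snapshot. The thresholds and snapshot fields are hypothetical (the platform's actual limits are not stated); on a gateway, the numbers could come from a library such as psutil, which is left out so the sketch stays self-contained.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical warning thresholds.
CPU_WARN_PERCENT = 85.0
MEMORY_WARN_PERCENT = 80.0
SERVICE_MEMORY_WARN_MB = 150.0


@dataclass(frozen=True)
class ResourceSnapshot:
    cpu_percent: float
    memory_percent: float
    internet_up: bool
    service_memory_mb: dict[str, float] = field(default_factory=dict)


def status_warnings(snap: ResourceSnapshot) -> list[str]:
    """Return human-readable warnings for a gateway status report."""
    warnings: list[str] = []
    if snap.cpu_percent >= CPU_WARN_PERCENT:
        warnings.append(f"CPU usage high: {snap.cpu_percent:.0f}%")
    if snap.memory_percent >= MEMORY_WARN_PERCENT:
        warnings.append(f"physical memory usage high: {snap.memory_percent:.0f}%")
    if not snap.internet_up:
        warnings.append("internet connectivity lost")
    # Per-service consumption, reported in a stable (alphabetical) order.
    for name, mb in sorted(snap.service_memory_mb.items()):
        if mb >= SERVICE_MEMORY_WARN_MB:
            warnings.append(f"service {name} uses {mb:.0f} MB")
    return warnings
```

Keeping the evaluation separate from metric collection makes the thresholds easy to test and to tune per device class.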

5.3 Real-Time Data Processing

In healthcare systems, a real-time platform is essential because any single data point may be critical. For example, heart rate monitoring systems cannot tolerate large delays for patients with cardiac issues. In IoT, very few systems on the market provide a real-time data processing platform. Our platform was deployed in an apartment in Sherbrooke, Canada, around 10 km (about 6.2 mi) from our servers. Our architecture proved able to provide real-time data processing and visualization with an average delay of less than one second (Fig. 6). Our data encapsulation model carries two timestamps: the first (phenomenonTime.instant in Fig. 6) is recorded when the data is received from the sensors, and the second (resultTime in Fig. 6) is recorded after the data has been processed in the gateway and is ready to be transmitted. Based on these two timestamps, we observe that data processing adds little to no delay.
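The delay computation from the two timestamps can be sketched as follows. The field names mirror the two timestamps named above, but the nesting and the ISO 8601 string format are assumptions. The interval they bound covers the gateway-side processing step only.

```python
from __future__ import annotations

from datetime import datetime
from statistics import mean
from typing import Any, Iterable


def processing_delay_s(observation: dict[str, Any]) -> float:
    """Seconds between receipt from the sensor and readiness for transmission."""
    # datetime.fromisoformat() before Python 3.11 rejects a trailing "Z"; use "+00:00".
    received = datetime.fromisoformat(observation["phenomenonTime"]["instant"])
    ready = datetime.fromisoformat(observation["resultTime"])
    return (ready - received).total_seconds()


def mean_delay_s(observations: Iterable[dict[str, Any]]) -> float:
    """Average gateway processing delay over a batch of observations."""
    return mean(processing_delay_s(o) for o in observations)
```

Both timestamps are taken on the same gateway clock, so their difference does not depend on clock synchronization with the servers.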

6 Discussion: Lessons Learned

The results of our deployment demonstrate the platform's real-time performance; however, we faced a few major challenges throughout the deployment period. The first was sensor and device malfunction. Our platform currently depends on third-party manufacturers for devices and sensors, and their failures can hinder the performance of our system. One of the deployed sensors stopped working because of a manufacturing defect in the very first week of deployment. Because our system continuously monitors its devices, we were informed of the failure within a short time and quickly resolved it by replacing the sensor. Using trusted manufacturers may lead to higher prices but offers greater reliability.

The second challenge was memory leaks. The physical gateways (in our case, Raspberry Pis) have limited memory, and because of the large number of services running on them, a memory leak related to issue logging occurred. We quickly resolved this by introducing an additional self-monitoring service that tracks the overall memory usage of the system as well as the usage of each service. If usage exceeds a specific threshold, the logs are rotated and unwanted system logs are deleted.

In addition, our visualization platform provided a high degree of flexibility and enabled us to design the desired content for each deployment. However, there is still room for improvement, especially when users add a large number of visualizations and charts. The high-frequency data generated by some devices (namely, smart mats) was another visualization challenge, which we have overcome in newer versions of our platform. Looking ahead, we have already made progress on new self-healing concepts [23] and on a distributed architecture to provide better performance while maintaining the quality of service (QoS) of our IoT platform.
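The self-monitoring service's cleanup rule for the memory leak (rotate and delete logs once memory usage passes a threshold) can be sketched as a selection function. This is a hypothetical illustration, not the platform's implementation: the `LogFile` record, the 80% threshold, and the policy of keeping the two newest rotated files per service are all assumptions; performing the rotation and deleting the files are left to the caller.

```python
from dataclasses import dataclass

MEMORY_THRESHOLD = 0.80  # hypothetical: start pruning above 80% memory usage
KEEP_NEWEST = 2          # hypothetical: rotated logs kept per service


@dataclass
class LogFile:
    service: str     # service that wrote the log
    name: str        # file name, e.g. "mqtt.log.3"
    modified: float  # POSIX timestamp of last modification
    active: bool     # True for the file a service is currently writing


def logs_to_delete(logs, memory_usage, threshold=MEMORY_THRESHOLD, keep=KEEP_NEWEST):
    """Pick rotated log files to delete once memory usage passes the threshold.

    Active files are never selected. For each service, the `keep` newest
    rotated files survive; older ones are returned, oldest first.
    """
    if memory_usage <= threshold:
        return []
    rotated_by_service = {}
    for log in logs:
        if not log.active:
            rotated_by_service.setdefault(log.service, []).append(log)
    to_delete = []
    for rotated in rotated_by_service.values():
        rotated.sort(key=lambda log: log.modified, reverse=True)  # newest first
        to_delete.extend(rotated[keep:])
    return [log.name for log in sorted(to_delete, key=lambda log: log.modified)]
```

Separating the decision from the deletion keeps the rule testable and lets the thresholds be tuned per gateway without touching file-handling code.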

7 Conclusion

Fast PnP deployment of IoT platforms with reliable performance, especially for real-time data processing, remains a challenge that hinders wider adoption of most IoT solutions. In this paper, we therefore focused on addressing these key issues. We began by reviewing research on IoT architectures and the platforms available on the market. After analyzing existing solutions and identifying their main drawbacks, we presented our architecture and IoT platform, which address these drawbacks. We also detailed each component of the architecture and described how the components are connected to overcome these drawbacks. The logic in our business layer offers a distinctive approach to handling user specifications by creating dynamic dashboards. We highlighted the successes and challenges of a six-month deployment of our system in a residence, which serves as a proof of concept for our main objectives and opens the way for future developments and improvements. In future work, we will focus on the quality of service (QoS) of the platform and on a distributed architecture to improve scalability.

References

1. Ali, R.F., Muneer, A., Dominic, P.D.D., Taib, S.M., Ghaleb, E.A.A.: Internet of Things (IoT) Security Challenges and Solutions: A Systematic Literature Review. In: Abdullah, N., Manickam, S., Anbar, M. (eds.) ACeS 2021. CCIS, vol. 1487, pp. 128–154. Springer, Singapore (2021). https://doi.org/10.1007/978-981-16-8059-5_9

2. Abdulrazak, B., Helal, A.: Enabling a Plug-and-play integration of smart environments, pp. 820–825 (Oct 2006). https://doi.org/10.1109/ictta.2006.1684479

3. Helal, A., Cook, D.J., Schmalz, M.: Smart Home-Based Health Platform for Behavioral Monitoring and Alteration of Diabetes Patients (2009). www.journalofdst.org

4. Moore, S.J., Nugent, C.D., Zhang, S., Cleland, I.: IoT reliability: a review leading to 5 key research directions. CCF Trans. Pervasive Comp. Intera. 2(3), 147–163 (2020). https://doi.org/10.1007/s42486-020-00037-z

5. Sethi, P., Sarangi, S.R.: Internet of things: architectures, protocols, and applications. J. Elec. Comp. Eng., vol. 2017. Hindawi Publishing Corporation (2017). https://doi.org/10.1155/2017/9324035

6. Raghunath, K.K.: Investigation of Faults, Errors and Failures in Wireless Sensor Network: A Systematical Survey.

7. Gupta, G., Younis, M.: Fault-tolerant clustering of wireless sensor networks. IEEE Wireless Comm. Netw. Conf. WCNC 3, 1579–1584 (2003). https://doi.org/10.1109/WCNC.2003.1200622

8. openHAB Community: openHAB documentation (2017). http://docs.openhab.org/index.html

9. Samsung: SmartThings developer documentation (2017). http://docs.smartthings.com/en/latest/getting-started/overview.html

10. Apple: Apple HomeKit documentation (2017). https://developer.apple.com/homekit/

11. Amazon: Amazon Web Services privacy (2017). https://aws.amazon.com/privacy/

12. IBM: Watson IoT platform (2017). https://console.bluemix.net/docs/services/IoT/feature_overview.html

13. Babun, L., Denney, K., Celik, Z.B., McDaniel, P., Uluagac, A.S.: A survey on IoT platforms: Communication, security, and privacy perspectives. Computer Networks, vol. 192. Elsevier B.V. (Jun 19 2021). https://doi.org/10.1016/j.comnet.2021.108040

14. Kim, W., Ko, H., Yun, H., Sung, J., Kim, S., Nam, J.: A generic Internet of things (IoT) platform supporting plug-and-play device management based on the semantic web. J. Ambient Intell. Huma. Comp. (2019). https://doi.org/10.1007/s12652-019-01464-2

15. Aloulou, H., Mokhtari, M., Tiberghien, T., Biswas, J., Kenneth, L.J.H.: A Semantic Plug&Play Based Framework for Ambient Assisted Living. In: Donnelly, M., Paggetti, C., Nugent, C., Mokhtari, M. (eds.) ICOST 2012. LNCS, vol. 7251, pp. 165–172. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-30779-9_21

16. Kesavan, S., Kalambettu, G.K.: IoT enabled comprehensive, plug and play gateway framework for smart health

17. Sun, L., Li, Y., Memon, R.A.: An open IoT framework based on microservices architecture. China Communications 14(2), 154–162 (Feb 2017). https://doi.org/10.1109/CC.2017.7868163

18. Marques, G., Pitarma, R.: An indoor monitoring system for ambient assisted living based on internet of things architecture. Int. J. Environ. Res. Public Health (2016). https://doi.org/10.3390/ijerph13111152

19. Sadek, I., Seet, E., Biswas, J., Abdulrazak, B., Mokhtari, M.: Nonintrusive vital signs monitoring for sleep apnea patients: a preliminary study. IEEE Access 6, 2506–2514 (Dec 2017). https://doi.org/10.1109/ACCESS.2017.2783939

20. Ruiz-Rosero, J., Ramirez-Gonzalez, G.: Firmware architecture to support Plug and Play sensors for IoT environment (2015). https://books.google.com.co/books?id=JaPAAgAAQBAJ

21. Khasawneh, T.N., Al-Sahlee, M.H., Safia, A.A.: SQL, NewSQL, and NOSQL Databases: A Comparative Survey. In: 2020 11th International Conference on Information and Communication Systems, ICICS 2020, pp. 13–21 (Apr 2020). https://doi.org/10.1109/ICICS49469.2020.239513

22. Bajer, M.: Building an IoT Data Hub with Elasticsearch, Logstash and Kibana (2017). https://doi.org/10.1109/W-FiCloud.2017.40

23. Abdulrazak, B., Codjo, J.A., Paul, S.: Self-Healing approach for IoT Architecture: AMI Platform. In: International Conference On Smart Living and Public Health (2022)

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this paper

Cite this paper

Abdulrazak, B., Paul, S., Maraoui, S., Rezaei, A., Xiao, T. (2022). IoT Architecture with Plug and Play for Fast Deployment and System Reliability: AMI Platform. In: Aloulou, H., Abdulrazak, B., de Marassé-Enouf, A., Mokhtari, M. (eds) Participative Urban Health and Healthy Aging in the Age of AI. ICOST 2022. Lecture Notes in Computer Science, vol 13287. Springer, Cham. https://doi.org/10.1007/978-3-031-09593-1_4

DOI: https://doi.org/10.1007/978-3-031-09593-1_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-09592-4

Online ISBN: 978-3-031-09593-1

eBook Packages: Computer Science, Computer Science (R0)