Abstract

The revolution in systems autonomy is underway. We are at the dawn of a radical change in how we use the equipment available to us, as its progressive autonomy allows it to adapt to the situation. The danger and complexity of the military world make it difficult to anticipate the major issues linked to the adoption of these machines, in particular the responsibility of the leaders who will allocate their tasks or missions, and of those who design and test these systems. This chapter lists some of the safeguards necessary for their use: the control of these systems by leaders, the necessary confidence in their use, and the need to integrate into their embedded software rules to be respected when tasks are executed (rules of engagement linked to the circumstances, or standards not to be violated). It emphasises the absolute role of leaders, whose choices must at all times prevail over those of AIs, especially in complex situations, and who must ensure the conduct of the manoeuvre because they are the guarantors who give meaning to military action.

2.1 Introduction

We are on the cusp of a major but inevitable evolution in the delegation of tasks to machines, which is already underway in the civilian world. Technological evolutions represented by robots, artificial intelligence, remote storage and remote processing of information also have strong impacts on military organisations. Battlefield equipment and vehicles will be increasingly digitised and military actions more and more displaced. New tools imply new usages, and new usages imply new doctrines [6]. The way war is conducted is thus undergoing major changes.

2.2 Foreseen Changes in the Way of Conducting War

With the volume of information constantly increasing, the need for data processing capable of collecting, receiving and disseminating this information is a key evolution for the armies.

The number of combat functions performed remotely by robots will gradually increase, eventually including the remote use of lethal effectors. As an example, France can no longer do without drones in military operations, especially in Mali, where it must cover a very large territory with 5000 soldiers. Recently, jihadist leaders were killed thanks to the contribution of armed drones.

2.2.1 Displacement of the Combatant’s Action

Military robots, new tactical tools made available to the armed forces and the combatant, offer several advantages: permanent reporting by sensors, remote effectors, omnipresence of the machine on the battlefield; delegating repetitive or specific tasks usually performed by humans on the battlefield to the machine; as well as a lower exposure to danger for the soldier which meets the demands of nations to preserve and protect their military personnel.

Furthermore, with the development of artificial intelligence, machines will progressively acquire capacities to analyse the situation, ultimately allowing them to handle the unexpected and adapt to the environment and to the unknown. This is a move towards autonomy, although it must remain under supervision. Once the success rate of the algorithm becomes indisputably better than that of the best human operators, it will be difficult to refute the fact that algorithms are more efficient than humans.

The autonomy of these systems will also be favoured by the fact that robotic autonomous systems are faster and more reactive than humans, especially in emergencies requiring very fast reaction times; able to defend themselves in response to aggression; more precise than humans; and capable of operating around the clock, subject to energy autonomy.

A new concept of Hyperwar was presented by General John R. Allen, former commander of the NATO International Security Assistance Force and U.S. Forces—Afghanistan (USFOR-A), in 2019. It is based on the time reduction of the decision process [1].

Migration from remote control of machines to automation is inevitable for these reasons, as well as to reduce operator cognitive load and free their movement.

2.2.2 A New Threat: Access to Innovation by Enemies

One major expected change is that robots on the battlefield will level the asymmetry and equalise the balance of power between states or governmental organisations.

To give some examples, a drone (Unmanned Aerial Vehicle) can scout and spot any weaknesses in the protection of a site from the air, using image identification algorithms that make it possible to find targets such as vehicles or people. Swarms of drones can enter a building without the assistance of a global navigation satellite system (GNSS). Drones also can be used as weapons with an explosive charge that can be remotely activated.

The fact is that today the technology needed to assemble components into such robots is freely available on the Internet. Information is available on the full range of technologies, from manually piloted to fully autonomous robots and from unarmed to armed systems. Most of these new technologies are available as open source, which raises the question of their dissemination and use by many countries and by terrorist groups.

2.2.3 How to Avoid Dissemination?

Faced with such threats, ways must be found to prevent dissemination. One option is to make it compulsory to include a “secure element” inside the hardware of robots and use it to sign any embedded software, then to require robot manufacturers to accept software updates only from a verified server, as is done for smartphone applications distributed through app stores such as Google Play.
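The update check described above can be sketched as follows. This is only a minimal illustration under stated assumptions: an HMAC-SHA256 tag with a shared key stands in for the asymmetric signature a real scheme would more likely use, and the names `SECURE_ELEMENT_KEY`, `sign_firmware` and `accept_update` are hypothetical.

```python
import hashlib
import hmac

# Hypothetical key; in a real robot it would never leave the secure element.
SECURE_ELEMENT_KEY = b"demo-key-not-for-real-use"


def sign_firmware(image: bytes, key: bytes = SECURE_ELEMENT_KEY) -> bytes:
    """Tag computed by the verified update server (HMAC-SHA256 stand-in)."""
    return hmac.new(key, image, hashlib.sha256).digest()


def accept_update(image: bytes, tag: bytes, key: bytes = SECURE_ELEMENT_KEY) -> bool:
    """Accept the update only if the tag matches the received image."""
    expected = hmac.new(key, image, hashlib.sha256).digest()
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(expected, tag)


firmware = b"navigation-module v1.2"
tag = sign_firmware(firmware)
print(accept_update(firmware, tag))                 # genuine image
print(accept_update(firmware + b" tampered", tag))  # modified image
```

The design point is that the robot refuses any image whose tag it cannot verify, so software that did not come from the verified server is never installed.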

Drones coming from suppliers and countries not accepting these regulations could be prohibited from sale in democratic countries.

2.3 Impact on Responsibilities

New tools imply new uses, new uses imply new doctrines, and robots are no exception to this rule. One of the challenges will therefore consist in defining a doctrine for use of these robotic systems, starting from existing military doctrines and adapting them to the characteristics of the combat of the future.

2.3.1 Changes at the Organisational Level

Considering any robot as a partner implies changes at the organisational level, namely a reorganisation of military units to better adapt them to the use of these robots. This could include hiring extra personnel to pilot or supervise robots, with the corresponding training, or transforming existing ways of conducting a military operation, for example by gradually automating military convoys.

2.3.2 Still the Need for Human Responsibility When Using the Machine

Leaders are the human keys of any military action. They give meaning to it, remain responsible for the manoeuvring and the conduct of the war, and adapt “en conduite” depending on events. For that, they must “feel” the battlefield, doing everything possible to avoid being overwhelmed by the fog of war, uncertainty and threats.

With robotic weapons systems that have an autonomous decision-making capacity, leaders are able to delegate tasks or missions to these systems, according to the tactical situation.

For that reason, leaders should remain masters of the action: unlike machines, they can give meaning to the action and take responsibility for it.

2.3.3 Responsibility is also Common to All Stakeholders

Laws are made in such a way that for any use of autonomous machinery, we need to be able to clearly identify a responsible natural or legal person. In 2012, Keith Miller initiated and led a collective effort to develop a set of rules for “moral responsibility for computer artefacts” [3].

In our study, we will list three levels of responsibility.

2.3.3.1 Responsibility During the Design Phase

- Designers and developers should apply a precautionary principle when developing the algorithms of autonomous systems;
- designers should build humanisation into the designed system when they elaborate its doctrine of use, which means enabling the possibility for the operator to be in the loop;
- they should limit the use of the machine to what it is made for, and the environment in which it is made to operate;
- any autonomy for a system must be limited to a defined space and time for its use;
- designers must ensure it is possible to remotely neutralise a machine in the event of loss of control, or internally if its embedded software “recognises” that a situation is unexpected and potentially dangerous.
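The last two design rules (autonomy bounded in space and time, with neutralisation when the situation is not recognised) can be illustrated with a short sketch. The rectangular area, the time window in seconds and the names `AutonomyEnvelope` and `next_mode` are assumptions made for this example, not part of any fielded system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutonomyEnvelope:
    """Hypothetical bounds inside which autonomous mode is authorised."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    t_start: float  # seconds since mission start
    t_end: float

    def contains(self, x: float, y: float, t: float) -> bool:
        in_area = self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max
        in_window = self.t_start <= t <= self.t_end
        return in_area and in_window


def next_mode(envelope: AutonomyEnvelope, x: float, y: float, t: float,
              situation_recognised: bool = True) -> str:
    """Stay autonomous only inside the envelope and in a recognised situation."""
    if not envelope.contains(x, y, t) or not situation_recognised:
        return "NEUTRALISED"  # safe stop, awaiting the operator
    return "AUTONOMOUS"


envelope = AutonomyEnvelope(0.0, 100.0, 0.0, 100.0, t_start=0.0, t_end=600.0)
print(next_mode(envelope, 50.0, 50.0, 300.0))
print(next_mode(envelope, 150.0, 50.0, 300.0))
```

Checking the envelope on every control cycle makes the limit a property of the machine itself rather than something that depends on the operator noticing a drift.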

2.3.3.2 Responsibility of the Leaders, the People Using Their Judgement to Determine the Best Option for Using It and When

- The human and the machine have to train together to form a single military entity;
- the leaders should prepare the mission in advance with a tactical reasoning that includes these machines from the start of the mission until its end;
- the leaders will precisely define the rules of engagement of the machine, namely the conditions of activation and the constraints to be respected by these systems, while limiting their activation in time and space.

2.3.3.3 Responsibility of the Operators

- Operators must ask themselves whether the use of the system is appropriate before its activation on the battlefield, and, if it is weaponised, about the proportionality of an armed response;
- the operators have the closest situational awareness, and, although the machine can make probability calculations, probability does not take into account the complexity of military situations on the battlefield, which require human analysis.

2.4 The Place of Leaders in Complex Systems

The delegation of tasks to increasingly autonomous machines raises the question of the place of the humans who interface with these systems and should remain in control of them.

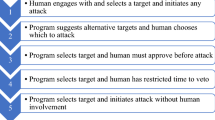

2.4.1 Leaders Must Always Control the Use of an Autonomous System

It is a fact that the military will not use equipment or tools that they do not control, and this applies to all armies around the world. Military leaders must be in control of the military action, and to this end, must be able to control the units and the means at their disposal. Military leaders place their confidence in these units and means to carry out the mission, which is the basis of the principle of subsidiarity.

For this reason, it is not in their interest to have a robotic system that governs itself with its own rules and objectives, nor one that is disobedient or can break out of the framework that has been set for it. Similarly, such a system must respect military orders and instructions. The consequence is that at any time leaders must be able to regain control of a robotic system and potentially cause it to leave the “autonomous mode” in which they themselves had authorised it to enter.

Therefore, machines with a certain degree of autonomy must be subordinate to the chain of command and subject to orders, counter orders and reporting.
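A minimal sketch of this subordination, assuming a two-mode system and hypothetical names (`RoboticSystem`, `authorise_autonomy`, `regain_control`): autonomous mode is entered only on an order, a counter-order is always honoured, and every transition is reported.

```python
from enum import Enum


class Mode(Enum):
    SUPERVISED = "supervised"
    AUTONOMOUS = "autonomous"


class RoboticSystem:
    def __init__(self) -> None:
        self.mode = Mode.SUPERVISED
        self.reports: list[str] = []  # reporting channel to the chain of command

    def _report(self, message: str) -> None:
        self.reports.append(message)

    def authorise_autonomy(self, issued_by: str) -> None:
        # Order: the system never enters autonomous mode on its own.
        self.mode = Mode.AUTONOMOUS
        self._report(f"autonomous mode authorised by {issued_by}")

    def regain_control(self, issued_by: str) -> None:
        # Counter-order: honoured whatever the current mode.
        self.mode = Mode.SUPERVISED
        self._report(f"control regained by {issued_by}")

    def execute(self, task: str) -> bool:
        if self.mode is Mode.AUTONOMOUS:
            self._report(f"executing '{task}' autonomously")
            return True
        self._report(f"'{task}' held, awaiting order")
        return False


robot = RoboticSystem()
robot.authorise_autonomy("platoon leader")
robot.execute("reconnoitre route")
robot.regain_control("platoon leader")
print(robot.mode, robot.reports)
```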

2.4.2 Operators Must Have Confidence When Delegating Tasks to an Autonomous System

The military will never use equipment or tools that they do not trust. This is why leaders must have confidence in the way a machine behaves or could behave. Consequently, military engineers should develop autonomous systems capable of explaining their decisions.

Automatic systems are predictable, and we can easily anticipate how they will perform the task entrusted to them. However, it becomes more complex with autonomous systems, especially self-learning systems where the objective of the task to be accomplished by the machine is known but not how it will operate. This poses a serious question of confidence in this system. When I ask an autonomous robot lawn mower to mow my lawn, I know my lawn will be mown, but I don’t know how the robot will proceed exactly.

The best examples to focus on are the soldier’s expectations about artificial intelligence (AI) embedded in autonomous systems.

- AI should be trustworthy. This means that adaptive and self-learning systems must be able to explain their reasoning and decisions to human operators in a transparent and understandable manner;
- AI should be explainable and predictable: you must understand the different steps of reasoning carried out by a machine that solves a problem for you or provides an answer to a complex question. For that, we need a human–machine interface (HMI) explaining its decision-making mechanism.

We therefore have to focus on more transparent and personalised human–machine interfaces at the service of users and leaders.

2.4.3 Cognitive Overload: The Need for a Digital Assistant

The digitisation of the battlefield began several years ago with weapon systems, vehicles and military equipment. One of its consequences is that it may generate information overload for leaders who are already very busy and focused on their tasks of commanding and managing. There is consensus within the military community that leaders can manage a maximum of seven different information sources at the same time, and fewer when under fire.

Delegating is one way to avoid cognitive overload, and one possible solution is to create a “digital assistant” who can assist leaders in the stages of information processing.

This assistant can initially be a person, a deputy who will manage the data generated by the battlefield. Then in some decades, the assistant may become digital, i.e. an autonomous machine that will assist leaders in filtering and processing information, and be a decision-making aid for them.
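As a purely illustrative sketch of the filtering role such an assistant could play, the snippet below keeps only the highest-priority feeds, using the ceiling of seven sources cited above. The priority scores and the names `MAX_SOURCES` and `select_sources` are assumptions; how priorities would actually be assigned is outside the scope of this example.

```python
import heapq
from typing import List, Tuple

MAX_SOURCES = 7  # ceiling cited in the text above


def select_sources(feeds: List[Tuple[str, float]],
                   limit: int = MAX_SOURCES) -> List[str]:
    """Keep the `limit` feeds with the highest priority score, best first."""
    top = heapq.nlargest(limit, feeds, key=lambda feed: feed[1])
    return [name for name, _ in top]


# Hypothetical feeds as (name, priority) pairs.
feeds = [(f"feed-{i}", float(i)) for i in range(10)]
print(select_sources(feeds))
```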

2.4.4 AI Will Influence the Decisions Made by the Leader

Stress is an inherent component of taking responsibility. It is common for military leaders to feel overwhelmed in a complex military situation. In such contexts, leaders will most often be inclined to trust a source of artificial intelligence because they will consider it, provided they have confidence in it, to be a serious decision-making aid that is not influenced by any stress, with superior processing capabilities and the ability to test multiple combinations for a particular effect.

The fact remains that any self-learning system must be taught. Military leaders will also be responsible for the proper learning and use of self-learning machinery in the field. To do so, they will have to supervise the learning process prior to its regular use and ensure its control over time.

2.5 New Rules for Autonomous Systems

Considering the major potential effects of systems with a certain form of autonomy on the battlefield, they should be constrained by new rules.

2.5.1 Machines Have to Respect Legal Rules

Any military action must respect the different rules and constraints linked to the environment in which it takes place. This requires supervision of its deontological, ethical and legal aspects.

For the military, these rules and constraints are manifold [2]:

- the legal constraint linked to international regulations, in particular respect for the rules of international humanitarian law (principle of humanity, discrimination, precaution, proportionality and necessity). All military leaders are responsible for the means they engage on the battlefield and their use. Article 36 of Additional Protocol I to the Geneva Conventions, which is binding, requires that the lawfulness of any new weapon system be determined;
- the rules of engagement (ROE) formulated by military leaders depending on the context (see Note 1), in particular for any critical action such as opening fire, and other such decisions which involve personal responsibility;
- professional ethical constraints, namely an ethical imperative that military leaders apply according to the situation on the battlefield. These constraints emanate from the authority employing the autonomous systems.

2.5.2 Hardware and Components Should Be Protected by Design

To ensure that the above rules are respected, it is essential to limit the framework of execution of the embedded software of autonomous systems. One possible solution is to integrate constraints “by design” in the initial design of the machine, such as execution safeguards in the hardware or the software, anticipating worst-case scenarios and ensuring they cannot occur.

2.5.2.1 Conception: Safeguards Within the Software Development Process

The following recommendations for designing systems with some form of autonomy are inspired by [6]:

- follow design methodologies that clearly define responsibilities;
- ensure the control of the various possible reactions of the machine. To do that, when a formal approach is too difficult, limit it to a corpus of known situations where a decision can be modelled (see Note 2);
- frame the execution of the algorithms by defining red lines that must not be crossed relating to:
  - technological constraints;
  - rules to follow such as ethics or legal rules;
  - unwanted or unpredictable behaviours;
- restrict the autonomy of these systems by implementing embedded software safeguards, designed to ensure that these red lines are never crossed.
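One way to picture such an embedded safeguard is a filter placed between the planner and the actuators. The sketch below is an assumption-laden illustration: the `Action` fields, the thresholds and the three example red lines (one per category listed above) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Action:
    """Hypothetical action proposed by the autonomous planner."""
    speed_mps: float
    inside_authorised_zone: bool
    confidence: float  # planner's own confidence in its situation assessment


# Each red line returns True when the proposed action would cross it.
RED_LINES: Dict[str, Callable[[Action], bool]] = {
    "technological constraint (speed limit)": lambda a: a.speed_mps > 10.0,
    "rule to follow (authorised zone)": lambda a: not a.inside_authorised_zone,
    "unpredictable behaviour (low confidence)": lambda a: a.confidence < 0.8,
}

SAFE_STOP = Action(speed_mps=0.0, inside_authorised_zone=True, confidence=1.0)


def crossed_red_lines(action: Action) -> List[str]:
    """Names of every red line the action would cross."""
    return [name for name, crosses in RED_LINES.items() if crosses(action)]


def safeguard(action: Action) -> Action:
    """Let the action through unchanged, or substitute a safe stop."""
    return SAFE_STOP if crossed_red_lines(action) else action


proposed = Action(speed_mps=15.0, inside_authorised_zone=False, confidence=0.95)
print(crossed_red_lines(proposed))
print(safeguard(proposed))
```

Keeping the red lines in a separate, small table makes them easier to review and certify than constraints scattered through the planning code.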

2.5.2.2 Certification

In the case of autonomous systems, the complexity of their algorithms complicates the traditional approach to simulation testing. It is necessary to verify whether the autonomy of the robot can be diverted from its original purpose, voluntarily or involuntarily.

Moreover, it is rarely possible to carry out tests by controlling the entire information-processing chain within the device. Many evaluations are conducted in “black boxes”, which means that the evaluator can only control the test environment, i.e. the stimuli to be sent to the system, and observe the output behaviours.

As there is a general wish for transparency and control over the behaviour certification of the systems, there is a clearly identified need to set a formal and rigorous framework for the evaluation of these devices, and it is thus preferable to develop white box algorithms to facilitate their certification.
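The black-box evaluation described above, where the evaluator controls only the stimuli and observes the outputs, can be sketched as a small test harness. The controller, the 5 m stopping distance and the 10 m/s speed cap are invented for illustration; only the structure (stimulus in, output observed, requirement checked) reflects the text.

```python
from typing import Callable, Iterable, List, Tuple


def system_under_test(distance_m: float) -> float:
    """Stand-in for an opaque controller; the evaluator never reads this body."""
    return 0.0 if distance_m < 5.0 else min(distance_m / 2.0, 10.0)


def must_stop_when_close(distance_m: float, speed_mps: float) -> bool:
    """Hypothetical requirement: stop within 5 m, never exceed 10 m/s."""
    if distance_m < 5.0:
        return speed_mps == 0.0
    return 0.0 <= speed_mps <= 10.0


def evaluate_black_box(
    system: Callable[[float], float],
    stimuli: Iterable[float],
    requirement: Callable[[float, float], bool],
) -> List[Tuple[float, float]]:
    """Send each stimulus, observe the output, collect requirement failures."""
    failures = []
    for stimulus in stimuli:
        output = system(stimulus)
        if not requirement(stimulus, output):
            failures.append((stimulus, output))
    return failures


print(evaluate_black_box(system_under_test, [0.0, 4.9, 5.0, 50.0],
                         must_stop_when_close))
```

Such a harness can only show that the tested stimuli produce acceptable outputs. It says nothing about untested situations, which is one reason the text prefers white-box algorithms for certification.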

2.5.2.3 Ethics

The consideration of ethical issues in the development, maintenance and execution of software becomes an imperative in the context of the autonomy of robotics systems.

A machine is by nature amoral. The human being remains the one and only moral agent, and therefore the only one responsible.

That being so, can ethical rules be coded? This seems impossible, because ethical reasoning requires situational awareness and consciousness, which are uniquely human characteristics [5]. Nevertheless, the integration of partial ethical reasoning into a machine can be considered. This would amount to designing an artificial agent, belonging to a human–robot system, whose computer reasoning takes into account the legal rules to be respected (see above) and which is able to make a decision and explain it to an operator. Catherine Tessier describes three different approaches in [6]:

- a consequentialist framework which focuses on the effect and therefore the finality;
- an ethical framework that focuses on the means for obtaining the effects;
- a deontological approach (knowing that military deontology is to respect the adversary and minimise casualties).

Notwithstanding the extreme difficulty in developing these approaches, this raises the question of how to organise the hierarchy of values.

2.6 Towards an International Standardisation

In a scenario where human beings move away from the decision-making process, in favour of machines, it is necessary to decide how to define a common base of ethical rules applicable at the international level, which in turn consists of two levels: the civilian and the military.

2.6.1 Civilian Level

At the civilian level, the Institute of Electrical and Electronics Engineers (IEEE) Ethically Aligned Design Document elevates the importance of ethics in the development of artificial intelligence (AI) and autonomous systems (AS) [4].

The possibility of digitally encoding ethics, so that the algorithm of an autonomous system reacts in accordance with ethics from the human point of view, is a very difficult subject of research. Indeed, it requires ethicists to translate ethical reasoning into algorithmic thought. This still seems very difficult, except, as explained above, by framing and safeguarding the possible autonomous actions of the machine.

Moreover, different peoples have different ethics. Who has the legitimacy to determine the best criteria of ethical behaviour? And can we consider as acceptable a personalised ethics knowing that abuses are always possible?

2.6.2 Military Level

At the military level, ethical approaches are different as the objective of the armed forces is to be efficient on the battlefield, perform the missions entrusted to them, while respecting rules.

Looking at the international level, we are now witnessing a race for autonomous technologies. States will want to make sure that they take advantage of these systems, but regarding ethics, answers will vary. Liberal states will have to deal with the temptation of efficiency versus ethical constraints, while some totalitarian states will have a different response to these challenges, particularly for countries with a collective and not an individual ethic.

2.6.3 Lethal Autonomous Weapon Systems

While armed drones can limit collateral damage thanks to their precision, delegating the opening of fire to lethal autonomous weapon systems (LAWS) has particular resonance in the international debate and raises the question of the moral acceptance of autonomous weapons.

The author of this chapter thinks that LAWS are justifiable in the case of saturated threats, air or (sub)marine combat, in areas of total prohibition of human presence while limited in time and space. The author believes there is a need for operators to control LAWS, thus rendering such systems semi-autonomous (LSAWS). The ethical question of their use shifts to the level of the military decision-maker who will decide on the delegation of the activation of the “semi-autonomous fire” mode to the system [6].

2.7 Conclusion

Today, we are witnessing the first elements of a real revolution in the art of war. New robotic military equipment will progressively become autonomous and adapt to the terrain to accomplish their assigned missions.

This implies changes at the doctrinal and organisational levels and will challenge the role of leaders on the battlefield. Their credibility will depend on their ability to exercise authority over the human resources entrusted to them and on the control of the autonomous robots placed at their disposal, their conscience allowing them to humanise war, where the machine has no soul.

The development of new autonomous systems raises the question of controlling their proliferation for military uses, which may take the form of a binding international legal framework to restrict potential uses that do not comply with international rules.

But technological competition is repeating itself, and it is inevitable that several states will try to impose their technological sovereignty through the development of these autonomous robots.

Notes

1. ROE are military directives that delineate when, where, how and against whom military force may be used, and they have implications for what actions soldiers may take on their own authority and what directives may be issued by a commanding officer.

2. Formal methods are mathematically rigorous techniques that contribute to the reliability and robustness of a design.

References

1. G.J. Allen, Hyperwar Is Coming (USFOR-A, 2019). Retrieved from https://www.youtube.com/watch?v=ofYWf2SKd_c

2. D. Danet, G. de Boisboissel, R. Doaré, Drones et Killer robots: faut-il les interdire? (Presses Universitaires de Rennes, 2015)

3. F. Grodzinsky, K. Miller, M. Wolf, Moral responsibility for computing artifacts: “the rules” and issues of trust. ACM SIGCAS Comput. Soc. 42(2), 15–25 (2012). https://doi.org/10.1145/2422509.2422511

4. IEEE, Ethically Aligned Design, 2nd edn. (Institute of Electrical and Electronics Engineers, 2017). Retrieved from https://standards.ieee.org/content/dam/ieee-standards/standards/web/documents/other/ead_v2.pdf

5. D. Lambert, Que penser de…? La robotique et l’Intelligence artificielle (Fidélité, 2020)

6. RDN, Autonomie et létalité en robotique militaire. (CREC, Ed.) Cahiers de la Revue Défense Nationale (2018). Retrieved from https://www.defnat.com/e-RDN/sommaire_cahier.php?cidcahier=1166

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this chapter

Cite this chapter

de Boisboissel, G. (2022). Evolution in the Way of Waging War for Combatants and Military Leaders. In: Laroche, H., Bieder, C., Villena-López, J. (eds) Managing Future Challenges for Safety. SpringerBriefs in Applied Sciences and Technology. Springer, Cham. https://doi.org/10.1007/978-3-031-07805-7_2

DOI: https://doi.org/10.1007/978-3-031-07805-7_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-07804-0

Online ISBN: 978-3-031-07805-7

eBook Packages: Engineering, Engineering (R0)