Abstract

Measurement in the social sciences is typically characterized by a multitude of instruments that are assumed to measure the same concept but lack comparability. Underdeveloped conceptual theories that fail to expose a measurement mechanism are one reason for these incommensurable measurements. Without such a mechanism, measurements cannot be linked to a fundamental reference as required by metrological traceability. However, traditional metrological concepts can be extended by allowing for direct links between different instruments, so-called crosswalks. In this regard, Rasch Measurement Theory proves particularly useful, as it facilitates the co-calibration of different instruments onto a common metric. The measurement of nicotine dependence through self-report instruments serves as a showcase of the problems in social measurement and of how they can be overcome, thereby contributing to metrological traceability in the social sciences.

9.1 Introduction

Proper measurement is a sine qua non for quantitative research in any field of scientific enquiry [14]. The social sciences, encompassing a range of academic disciplines such as health, education, psychology, and business, are no exception. As diverse as these fields are, when it comes to measurement they share one common characteristic: many properties of interest reside in, or are attributes of, human beings. In education, such a concept of interest might be some form of proficiency; in business research, corporate image as perceived by consumers; in health science, quality of life; and in psychology, self-esteem.

In some instances, third persons (e.g., teachers, parents, clinicians) act as raters and thereby produce the data. However, the majority of measurements in the social sciences, or social measurements for short, are based on self-report data: items are presented to respondents (students, patients, consumers, etc.), who select the most appropriate answer from a set of ordered response categories, usually between two and seven. Typically, a number of items form a set, referred to as a scale or a measurement instrument, intended to indicate values of the same variable of interest, or construct. While the responses to items are observable, or manifest, the concept of interest is represented by an unobservable, hence latent, variable. Inferring constructs that are never observed directly is what happens whenever instruments are read, and so it applies in all sciences.

Measurement is concerned with inferring a magnitude of the latent variable from observable responses to manifest items. To enable this inference, measurement requires a measurement model that links observed responses to a latent variable. In social measurement, such models are also called psychometric models. Psychometric models are purely formal, statistical, and “technical”. Their purpose is not to describe data; rather, they need to embody the requirements of quantitative inference. Thus, not every arbitrary model can serve the purpose of quantification. For measurement to be valid, the items also need to adequately represent the concept of interest. The assessment of validity is concerned with whether an instrument actually measures what it is supposed to measure. Consequently, tackling the problem of validity requires a conceptual theory that explains the concept of interest. Qualitative as well as quantitative evidence will then show whether the instrument indeed measures the variable of interest.

As social sciences mostly subscribe to a realist view [50, 57], their concepts of interest are, in the end, considered objectively given. They do not emerge from, or in any way depend on, our measurements. Rather, their existence gives rise to their measurement. Therefore, the same concept of interest should be measurable by different instruments, provided the concept exists as a quantitative construct. A range of instruments measuring the same concept can be beneficial to science, as instruments vary in terms of their range of operation, applicability under different circumstances or acceptability by respondent groups. From this it follows that if different instruments are supposed to measure the same concept, they need to refer to, or rather be inferred from, the same conceptual theory.

In practice, the situation is far from straightforward. Many concepts lack universal agreement on exact definitions. Different instruments claiming to address the same concept may therefore measure somewhat different concepts. Conversely, instruments that do measure the same concept may bear different labels suggesting they are measuring different concepts (for example, to lend a unique selling point to a new scale). To complicate matters further, conceptual theories are sometimes rather vaguely defined. In some instances, they represent a post-hoc summary of the content of the items rather than a guiding principle for generating items. This problem can be illustrated by the difference between content validity and face validity (Footnote 1), in the sense in which the latter is used nowadays. Content validity means that all aspects present in the conceptual theory are adequately captured by items in the instrument. In contrast, face validity only asks whether what is included in the instrument “on the face of it” seems reasonable given the stated concept of interest.

The intricacies of concept definition are paralleled by a multiplicity of measurement models, some of which fail to incorporate fundamental principles of quantification and, thus, fail to provide generalizable measurements that would be meaningful and interpretable beyond a particular study, or even within one study. The conceptual and modelling problems have led to a very unsatisfactory state of affairs in social measurement. Measurement is not always properly justified, and measurements of the same concept lack comparability. The reason for the latter is that most measurements, even when theoretically and empirically supported, are expressed in a metric that is unique to the instrument they are based on. The lack of comparability prevents the social sciences from capitalizing on multiple instruments that measure the same concept and are applicable under various conditions. Even worse, incomparable measurements are a major obstacle to the synthesis of research findings.

Currently, only very few research initiatives in the social sciences have addressed this problem, which deserves and requires much broader attention. Lifting social measurement to a higher level by raising both its conceptual and its psychometric rigor, and providing comparable measurements by establishing common metrics, ought to be the core objective of social measurement science in the twenty-first century. This agenda also serves the recent rapprochement of the social and natural sciences in terms of measurement. The social sciences have realized that fundamental principles of metrology, the science of measurement, specifically metrological traceability and measurement uncertainty, apply to all sciences. Thus, these principles need to be understood properly and addressed in the context of social measurement.

In the following, a brief history of measurement in the social sciences sheds light on the roots of the problems social measurement is currently facing. As a measurement model, the Rasch model [67] lends itself as a theoretical framework with the potential to lead the social sciences out of the impasse they have reached regarding measurement. Next, we discuss the challenges of metrology, notably metrological traceability and measurement uncertainty, and how the social sciences can address them. One way of addressing traceability is to link various instruments by equating, thereby establishing a common metric. The example of measuring self-reported nicotine dependence illustrates this approach.

9.2 Recourse to Literature

9.2.1 The Significance of Scientific Measurement

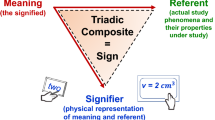

Scientific measurement is a clearly defined concept: it is the inference of a magnitude, an amount of a property supposed to be quantitative [59]. The natural sciences have made unprecedented progress based on quantitative theories and models [60]. Without measurement, none of these theories could have been tested empirically. Today, a world without measurement has become inconceivable. In a sense, measurement is both a very simple and a very complex matter. It is complex inasmuch as quantity is probably one of the boldest propositions about an object’s attribute one can think of. However, once measurement has become a successful technology with readily available instruments, it summarizes the variable in a very simple manner: by one number.

9.2.2 Measurement in the Social Sciences

9.2.2.1 The Challenge of Social Measurement

The social sciences have tried to follow in the footsteps of the natural sciences by becoming quantitative, as this seemed to be the sine qua non of science in the twentieth century [60]. However, the path towards quantitative science taken in the social sciences was anything but straightforward. How should psychological concepts, or constructs, be measured, and how should their being quantitative be demonstrated? Michell [59] disentangled the problems of inferring measurement by distinguishing two tasks to be fulfilled: the scientific task of measurement and the instrumental task. The scientific task is concerned with whether the variable to be assessed is actually quantitative. The instrumental task pertains to the procedures for estimating the magnitude, that is, the amount of the property to be measured. The scientific task is more fundamental, providing the basis for technologies that perform the instrumental task.

While these tasks arise regardless of the scientific discipline, the challenges involved vary, and very different procedures are called for depending on the field of research. As an illustration from the physical sciences, the measurement of temperature [22] has to explicate what exactly temperature is and demonstrate that it is quantitative. Evidence of the latter allows for a range of instruments to be designed for its measurement. Today, physical measurement seems to be largely a matter of technology, and for the most part it arguably is. But it should not be overlooked that understanding many physical attributes often took centuries, with temperature being a good case in point. At the same time, the fundamental underpinnings of measurement continue to be advanced, as demonstrated by the redefinition of fundamental units in physics [19]. Likewise, new problems and applications, such as self-driving cars, arise that require new measurement technologies [41].

9.2.2.2 The Path Toward Quantitative Social Sciences

At the beginning of the twentieth century, demonstrating the quantitative nature of psychological concepts such as attitudes, perceptions, feelings, or emotions (that is, demonstrating that the fundamental requirement for any measurement to be meaningful can be satisfied) appeared to be too enormous a challenge [60]. Though the theoretical terms predominating today were proposed much later, in retrospect it is plain that the social sciences struggled from the very beginning with the scientific task of measurement. The path chosen in the social sciences to resolve the difficulties of meaningful quantification has appeared to be very effective. Stevens’ [79] suggestion that measurement is the assignment of a numeral to an observation according to a rule has been widely embraced. It essentially means that a numerical score is assigned to observed behaviors, such as the response to an item by ticking a box, and that the score is interpreted as a measure of some quantity. Today, social scientists for the most part subscribe to this definition without hesitation or reservation, and arguably with little awareness of its limitations. Measurement based on Stevens’ definition has become ubiquitous in the social sciences.

9.2.2.3 Measurement by Assigning Numerals

But are all these measurements justifiable? Can measurement be achieved by simply assigning numerals and claiming they are measures? One could argue that even the natural sciences measure by assigning values. For example, an analog bathroom scale comes with a kilogram scale that assigns values to the deflection of the pointer – values which we then use as measurements. However, constructing a bathroom scale is merely an instrumental task that builds upon established evidence of mass being quantitative and links the pointer’s deflection to a known metric of mass. In social measurement, the equivalent of such a bathroom scale would be a device built from scratch that somehow produces a deflection of the pointer which “defines” measurement by assigning numerals. Stevens’ definition essentially invokes the instrumental task and implies that the scientific task has been addressed if the instrumental task is carried out properly (“according to a rule”).

Stevens, though, fails to provide stringent guidelines as to how rules of assignment ought to be designed to yield measures of a quantity that are on a linear interval scale. Rather, he proposed different scale levels (nominal, ordinal, interval, ratio, and log-interval) that are supposed to reflect the true properties of what is to be assessed. The scheme is internally consistent but it is descriptive and not instructional. Without a clear rationale of whether numerals are interval-scaled or merely ordered, it is of limited use. Stevens arguably would be very skeptical of many social measurements explicitly or implicitly invoking his definition. But as a matter of fact, measurement by mere numerical assignments has opened the door to questionable practices in the social sciences. It definitely has contributed to a divergence of the social from the natural sciences in terms of a fundamental concept of science.

The following considerations apply to measurement of constructs in the social sciences inferred from data that are based on responses of subjects to stimuli, referred to as items, which collectively represent the construct to be measured. The items work as a set, a scale, and are proposed to be a measurement instrument. Instruments are included in questionnaires and surveys; they are administered on paper or online; they are self-administered or implemented by an interviewer. In any case, the observed responses are coded numerically. Thus, the administration of such an instrument necessarily provides numbers such as item scores and total scores across items. Traditionally, such scores are considered measures. However, observed scores can only be the input to measurement. Measurements are inferred from observed scores – provided they meaningfully represent a quantitative property. Automatically and uncritically equating observed scores with measurements means neglecting the scientific task of measurement.

9.2.2.4 The Quest for a Measurement Model for the Social Sciences

Addressing the scientific task of measurement in the social sciences is a matter of defining an appropriate measurement model that links inferred measurements and observed scores in such a way as to require the property to be quantitative. The model has to specify restrictions imposed on observed responses that ensure the inferred measures are magnitudes of a quantitative property. While the model is statistical in nature, the goal is not to account for the data in the best possible way but to challenge the data with respect to the requirements of quantity.

Since linking magnitudes and observed scores is a purely formal, statistical problem, the model is not specific to a particular variable. Rather, it pertains to a specific type of data as input. The data collection can be seen as a consequence of the instrumental task. The scientific task and the instrumental task are therefore intertwined in the social sciences, at least initially. The fact that the measurement model is blind to the content of the property to be assessed implies that social measurement is both qualitative (are we measuring the right quality?) and quantitative (are the measures truly quantitative?). Thus, social measurement necessarily calls for mixed methods combining qualitative and quantitative research. Currently, these methods complement one another. In the future, they should be more closely integrated, as will be pointed out later.

It should also be noted that any proper measurement embodies a theory stating that the proposed property is quantitative (it can be measured; scientific task) and that the suggested instrument is capable of measurement (the property is measured; instrumental task). Scientific theories can, of course, be right or wrong. Measurement is no exception. Also, we can never prove that a theory is correct. Rather, failing to reject a theory increases our confidence in the theory and measurement. When we say measurement is valid, it is always a tentative statement confined to the context (population of subjects, method of data collection, etc.) and subject to further investigation.

9.2.3 The Rasch Model for Measurement in the Social Sciences

9.2.3.1 From Population-Based Score Statistics to Invariant Measurement

In terms of measurement models, the social sciences have long relied on classical test theory (CTT; [52]), which essentially states that an observed score is composed of a true score and an error score. As such, CTT cannot be disproved, as explaining one observable score by two unobservable ones is tautological. CTT focuses heavily on group-based statistics, such as correlations of item scores or reliability, defined as the ratio of true score variance to total variance. Reliability can be estimated, under some assumptions, from repeated measurements. In the end, though, CTT is a theory of error that presumes measurement. Later in the twentieth century, a range of innovative measurement models, especially item response theory (IRT; [29]), were developed. IRT comprises probabilistic models that link the probability of a person choosing a particular response to an item (for instance, agree or disagree on a dichotomous response scale) to item and person parameters by means of a logistic function. The function relates theoretically unbounded quantitative measures of the item and the person to a response probability between 0 and 1. IRT seems to have shifted the focus from groups, or samples, to individual measurement.
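To make the CTT decomposition concrete, the following minimal Python sketch simulates observed scores X = T + E with normally distributed true scores and independent errors. The distributions, standard deviations, and the helper name `simulate_ctt_reliability` are illustrative assumptions. Because the true scores are known by construction in a simulation, reliability can be computed directly as var(T)/var(X); with real data T is unobservable, which is why reliability has to be estimated indirectly, for example from repeated measurements.

```python
import random
import statistics


def simulate_ctt_reliability(n, true_sd, error_sd, seed=1):
    """Simulate observed scores X = T + E and return var(T) / var(X)."""
    rng = random.Random(seed)
    true_scores = [rng.gauss(0.0, true_sd) for _ in range(n)]
    # Errors are drawn independently of the true scores, as CTT assumes.
    observed = [t + rng.gauss(0.0, error_sd) for t in true_scores]
    return statistics.pvariance(true_scores) / statistics.pvariance(observed)


# Theoretical reliability here: 2.0**2 / (2.0**2 + 1.0**2) = 0.8
reliability = simulate_ctt_reliability(n=20_000, true_sd=2.0, error_sd=1.0)
print(round(reliability, 3))
```

The estimate lands close to the theoretical ratio. Note that nothing in this computation tests whether the scores measure a quantity at all; it presumes measurement, in line with the characterization of CTT as a theory of error.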

However, most IRT models include a parameter describing item discrimination that can only be estimated under the assumption of a person distribution (typically a normal distribution). Consequently, the estimation of an item discrimination parameter makes IRT models multivariate group models. They are statistical models that “have little credibility as scientific models” [27, p. 23]. One type of model – the Rasch model [67] – named after the Danish statistician Georg Rasch, stands out as it requires item discrimination to be equal across items. Item discrimination is implicitly set to unity and not empirically estimated. As a result, item and person parameters can be separated; that is, the estimation of the item parameters does not depend on the estimation of the person parameters and vice versa.
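The separation of item and person parameters can be checked numerically. The minimal Python sketch below uses hypothetical item difficulties and person locations in logits and computes the log-odds of endorsing two items for several persons. Under the dichotomous Rasch model the log-odds equal theta - delta, so their difference for any person is delta_2 - delta_1: the person parameter cancels, which is what allows item parameters to be estimated independently of person parameters.

```python
import math


def rasch_probability(theta, delta):
    """Dichotomous Rasch model: P(X = 1) = exp(theta - delta) / (1 + exp(theta - delta))."""
    return 1.0 / (1.0 + math.exp(-(theta - delta)))


def log_odds(p):
    """Logit of a probability."""
    return math.log(p / (1.0 - p))


# Hypothetical item difficulties and person locations (logits).
delta_1, delta_2 = -0.5, 1.0
persons = [-2.0, 0.0, 1.5, 3.0]

# Log-odds for item 1 minus log-odds for item 2 equals delta_2 - delta_1 = 1.5
# for every person: theta drops out of the item comparison.
differences = [
    log_odds(rasch_probability(theta, delta_1)) - log_odds(rasch_probability(theta, delta_2))
    for theta in persons
]
print([round(d, 6) for d in differences])
```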

9.2.3.2 Accounting for Measurement Requirements

The property of parameter invariance is the defining characteristic of a Rasch model for measurement. Rasch himself referred to it as specific objectivity [68]. However, requiring item discrimination to be equal across items is not just a convenience, a statistical trick for parameter estimation. Rather, it ensures that the ordering of all item response categories in terms of their probability is the same across the entire continuum of the latent variable and, hence, for all respondents. A change in the order would imply that one item is relatively harder to endorse than another item for one person but relatively easier to endorse for another person. The property of equal item discrimination in the Rasch model therefore reflects a fundamental requirement of meaningful measurement. Models that estimate item discrimination, or, to be specific, differences in item discrimination, mask counterintuitive and implausible relationships between item and person properties, which render the observed responses unsuitable for inferring measurements. This is reminiscent of Rogosa’s [66, p. 193] conclusion that “models for relations among variables [are to be seen as] statistical models without a substantive soul.” Of course, the Rasch model is also a statistical model, but it retains a substantive interpretation of all parameters, combining statistical and scientific elements in psychometrics [87].
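The reversal of item order can be illustrated with a two-parameter logistic (2PL) response function, in which a discrimination parameter `a` multiplies theta - delta; setting `a = 1` for all items recovers the Rasch model. In the sketch below the item parameters are hypothetical. With unequal discriminations the item curves cross, so which item is easier to endorse depends on the person's location; with equal discriminations the ordering is the same for everyone.

```python
import math


def two_pl_probability(theta, delta, a):
    """Two-parameter logistic model; a = 1 for every item gives the Rasch model."""
    return 1.0 / (1.0 + math.exp(-a * (theta - delta)))


def easier_item(theta, items):
    """Return the name of the item a person at theta is more likely to endorse."""
    return max(items, key=lambda name: two_pl_probability(theta, *items[name]))


# Hypothetical items as (difficulty, discrimination).
unequal = {"item_A": (0.0, 0.5), "item_B": (0.5, 2.0)}
equal = {"item_A": (0.0, 1.0), "item_B": (0.5, 1.0)}

for theta in (-2.0, 2.0):
    # Unequal discriminations: the easier item changes with theta.
    # Equal discriminations: item_A is easier for every theta.
    print(theta, easier_item(theta, unequal), easier_item(theta, equal))
```

For the person at -2 logits, item_A is the easier item under unequal discriminations; for the person at +2 logits it is item_B, which is exactly the kind of person-dependent item ordering the Rasch requirement rules out.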

9.2.3.3 Rasch Measurement Theory as a Framework for Quantification in the Social Sciences

The unique properties of the Rasch model ultimately led to suggesting the term Rasch measurement theory (RMT; [7]). RMT is a formal theory of inferring measures, which are linear and interval-scaled, from observed scores, which code manifest observations. Proposing the label of a measurement theory can only be justified if the theory has properties distinguishing it from other theories in this regard. Therefore, it is worth briefly delineating the relationships of RMT on the one hand, and CTT and IRT on the other. Both RMT and CTT make use of the simple unweighted raw score across items. In CTT, the raw score is a priori considered a measure, the behavior of which is investigated by means of group-based statistics such as variances, correlations and factor loadings.

In contrast, in RMT, the raw score is a sufficient statistic for the person measures, with its meaningfulness being subject to the data fitting the model. In a sense, RMT provides the justification for the raw score that CTT relies on. RMT and IRT are obviously related by virtue of sharing the same type of link function, namely the logistic function. However, the statistical resemblance blurs far-reaching differences in the substantive underpinnings of RMT versus IRT. As pointed out above, the group dependency of discrimination parameter estimation in IRT implies that neither parameter invariance nor raw score sufficiency holds, since equal item discrimination and raw score sufficiency are mutually dependent properties of the Rasch model [34]. Non-Rasch IRT models therefore do not allow for a simple raw-score-to-measure conversion. While this might be seen as merely a pragmatic flaw, the lack of a unique ordering of items seriously compromises the interpretation of measures.
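Raw score sufficiency can be illustrated by enumerating response patterns. In the minimal sketch below (three dichotomous items with hypothetical difficulties), the probability of a particular pattern, conditional on its raw score, is computed for several person locations. Under the Rasch model this conditional probability depends only on the item difficulties, so it is identical for every theta: once the raw score is known, the pattern carries no further information about the person.

```python
import itertools
import math


def pattern_probability(pattern, theta, deltas):
    """Joint probability of a 0/1 response pattern under the dichotomous Rasch model."""
    prob = 1.0
    for x, delta in zip(pattern, deltas):
        p = 1.0 / (1.0 + math.exp(-(theta - delta)))
        prob *= p if x == 1 else 1.0 - p
    return prob


def conditional_on_raw_score(pattern, theta, deltas):
    """P(pattern | raw score, theta): the pattern probability divided by the
    total probability of all patterns with the same raw score."""
    r = sum(pattern)
    same_score = [q for q in itertools.product((0, 1), repeat=len(deltas)) if sum(q) == r]
    total = sum(pattern_probability(q, theta, deltas) for q in same_score)
    return pattern_probability(pattern, theta, deltas) / total


deltas = [-1.0, 0.0, 1.5]  # hypothetical item difficulties (logits)
pattern = (1, 1, 0)        # raw score 2
for theta in (-1.0, 0.5, 2.5):
    print(theta, round(conditional_on_raw_score(pattern, theta, deltas), 6))
```

With item-specific discriminations, as in a 2PL model, these conditional probabilities would in general vary with theta, which is the formal counterpart of the missing raw-score-to-measure conversion.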

In this context, it is once again important to stress the difference between assumptions and requirements. RMT is a theoretical framework that embodies scientific requirements in a statistical model. These requirements are subject to tests of fit of the data to the model, hence they are falsifiable. They are not untested assumptions. Specific objectivity as a property of the model does not mean that empirical item parameter estimates are necessarily the same in the Rasch model, not even up to random error, regardless of who the respondents are. Rather, objectivity is confined to a frame of reference, for which invariance holds – hence the expression specific rather than general objectivity. Within the frame of reference, though, item parameter estimates are, in principle, independent of the sample composition.

The significance of the properties of the Rasch model is further demonstrated by their relationship to independence axioms as the bedrock of quantification and a cornerstone of the scientific task of measurement. The weak respondent independence axiom states that if a person A has a higher probability of success (or endorsement) with one item compared to person B, then person A has a higher probability on all items. The weak item independence axiom requires that if an item X implies a higher probability of success (or endorsement) than item Y for one respondent, then this has to be true for all respondents. Together these independence axioms are summarized as the single cancellation condition [47] establishing order relationships. IRT models only comply with weak respondent independence but generally do not meet weak item independence. Even more important are higher order cancellation conditions as they substantiate metric scales by enforcing transitivity of differences. Double cancellation involves sets of three items and three persons and requires one implication to hold true based on two premises. Details can be found in Karabatsos [47] and Salzberger [71].
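Double cancellation can be checked mechanically on a matrix of response probabilities, with persons as rows and items as columns. The sketch below assumes the standard conjoint-measurement statement of the condition: for any persons a, b, c and items x, y, z, if P(a, y) ≥ P(b, x) and P(b, z) ≥ P(c, y), then P(a, z) ≥ P(c, x). The Rasch probabilities are generated from hypothetical parameters, and the second matrix is an arbitrary hand-made counterexample. Checking model-implied probabilities is only an illustration; with empirical data the condition has to be evaluated on estimated probabilities, allowing for sampling error.

```python
import itertools
import math


def satisfies_double_cancellation(matrix):
    """Return True if a persons x items probability matrix meets double cancellation.

    For all rows a, b, c and columns x, y, z:
    if P[a][y] >= P[b][x] and P[b][z] >= P[c][y], then P[a][z] >= P[c][x].
    """
    rows = range(len(matrix))
    cols = range(len(matrix[0]))
    for a, b, c in itertools.product(rows, repeat=3):
        for x, y, z in itertools.product(cols, repeat=3):
            premises_hold = matrix[a][y] >= matrix[b][x] and matrix[b][z] >= matrix[c][y]
            if premises_hold and matrix[a][z] < matrix[c][x]:
                return False
    return True


def rasch_matrix(thetas, deltas):
    """Model-implied endorsement probabilities under the dichotomous Rasch model."""
    return [[1.0 / (1.0 + math.exp(-(t - d))) for d in deltas] for t in thetas]


# Hypothetical person locations and item difficulties (logits).
rasch = rasch_matrix([-1.0, 0.3, 1.7], [-0.8, 0.2, 1.1])

# Hand-made matrix: rows 0, 1, 2 with columns 0, 1, 2 satisfy both premises
# (0.6 >= 0.5 and 0.7 >= 0.6) but violate the conclusion (0.1 < 0.9).
violating = [
    [0.5, 0.6, 0.1],
    [0.5, 0.5, 0.7],
    [0.9, 0.6, 0.5],
]

print(satisfies_double_cancellation(rasch), satisfies_double_cancellation(violating))
```

Because Rasch probabilities are a monotone function of theta - delta, adding the two premises yields the conclusion, so any Rasch matrix passes the check.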

Notwithstanding the special properties of the Rasch model, it has to be noted that RMT cannot, and indeed should not, imply or ensure measurement where the data do not allow for the inference of measurements. The model is the benchmark, an ideal against which empirical data are checked. The fit of the data to the model determines whether the attempt at measurement can be deemed successful or not. As fit will never be perfect, fit should be interpreted in a relative fashion. Fit, or the degree of fit, determines our confidence in measurement. This situation mirrors scientific practice in general. A single study neither confirms nor disconfirms a theory once and for all. Rather, it strengthens or weakens our confidence in the theory. The same is true for measurement. RMT equips social science with an appropriate tool to improve measurement by strengthening our confidence in well-performing instruments and exposing the weaknesses of less well-functioning scales.
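As one example of how the degree of fit can be quantified, the sketch below computes an unweighted (outfit) mean-square, a commonly used Rasch fit statistic: the average squared standardized residual z = (x - P)/sqrt(P(1 - P)) between observed dichotomous responses x and model probabilities P. Its expected value under the model is 1; values well above 1 flag unexpected responses, values well below 1 responses that are more predictable than the model implies. The chapter does not prescribe this particular statistic, and the probabilities below are hypothetical rather than estimated from data.

```python
def outfit_mean_square(responses, probabilities):
    """Outfit mean-square: mean squared standardized residual for dichotomous responses.

    Each residual is z = (x - p) / sqrt(p * (1 - p)), so z**2 = (x - p)**2 / (p * (1 - p)).
    """
    squared = [(x - p) ** 2 / (p * (1.0 - p)) for x, p in zip(responses, probabilities)]
    return sum(squared) / len(squared)


# Hypothetical model probabilities for one person on four items, easiest first.
probabilities = [0.9, 0.8, 0.3, 0.2]

expected_pattern = [1, 1, 0, 0]  # endorses the easy items, not the hard ones
reversed_pattern = [0, 0, 1, 1]  # the opposite, highly unexpected pattern

print(round(outfit_mean_square(expected_pattern, probabilities), 2))
print(round(outfit_mean_square(reversed_pattern, probabilities), 2))
```

Here the expected pattern yields about 0.26 and the reversed pattern about 4.83, the latter signalling responses that the model cannot account for.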

RMT lends itself as the most suitable theoretical framework for measurement in the social sciences. Nevertheless, the intertwining of the scientific task and the instrumental task of measurement carries the risk that social measurements will not transcend the instrument used. The application of the Rasch model to data based on one instrument may provide evidence that the hypothesis of measurement can tentatively be confirmed. However, under different circumstances, different instruments measuring the same property may be better suited. In fact, it is the rule rather than the exception that multiple instruments exist to measure some property. The same, of course, is true in the natural sciences, where, for example, a plethora of instruments measure temperature. But all these instruments express measurements in the same unit despite the very different mechanisms they use. Consequently, temperature measurements are comparable regardless of the instrument, provided they are all calibrated properly. Today, such measurement systems are extremely rare in the social sciences. Dealing with measurements of unknown quality and incomparable metrics is a major obstacle to progress in the social sciences. While linking different instruments supposed to measure the same property is a major challenge in social measurement, there are different avenues to pursue this goal. One calls for a stronger connection between the qualitative theory of the construct and the quantitative property; another exploits the invariance property of the Rasch model.

9.3 Metrology in the Social Sciences

9.3.1 Metrological Traceability

Comparable measurement lies at the core of metrology, the science of measurement in any field of science, with the focus on metrological traceability [12]. Traceability means that the result of measurement can be related to a reference through an unbroken chain of calibrations. A reference can be a definition of a measurement unit, a procedure, or some standard. Metrological traceability ensures that measurements of the same property can be expressed in the same, hence comparable, metric. The measurement of mass nicely illustrates the role of the reference. Recently, the kilogram has been redefined based on Planck’s constant [80], linking the unit to a natural constant in the same way as the meter has been linked to the speed of light in vacuum [37]. This definition allows for the realization of the unit of mass through defined procedures. However, before 2019, the unit of mass was defined by an international prototype, a platinum alloy cylinder, stored in Paris [61]. This prototype was a standard in the sense that every measurement of mass had to be related to it, and thereby to all other measurements, through a chain of calibrations. Inevitably, every calibration (for example, a national prototype against the international prototype, an intra-national prototype against the national one, etc.) contributes to measurement uncertainty, which will be discussed later. The same is true for the new definition, even though it entails unique advantages, such as a stable reference (by contrast, the prototype itself has not remained completely stable over time) and realizations of fractions of a kilogram for different purposes of measurement.
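How each calibration step adds to uncertainty can be sketched with the root-sum-of-squares rule for combining standard uncertainties. This rule applies under simplifying assumptions: the contributions are uncorrelated and each enters the result with a sensitivity coefficient of 1; correlated contributions would require covariance terms. The step names and values below are hypothetical and are not actual kilogram calibration figures.

```python
import math


def combined_standard_uncertainty(step_uncertainties):
    """Root-sum-of-squares of uncorrelated standard uncertainties, one per calibration step."""
    return math.sqrt(sum(u ** 2 for u in step_uncertainties))


# Hypothetical chain: reference -> national standard -> working standard -> instrument.
# Standard uncertainty contributed by each step (arbitrary units).
chain = {"national": 3.0, "working": 4.0, "instrument": 12.0}

running = []
for step, u in chain.items():
    running.append(u)
    # Combined uncertainty after including this step of the chain.
    print(step, combined_standard_uncertainty(running))
```

The combined uncertainty can only grow as steps are added (here 3.0, then 5.0, then 13.0), which is why every link in a chain of calibrations matters.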

Metrological traceability in the physical sciences can serve as a template for social measurement. It implies that measurements based on various instruments are traceable to a common reference. To make this possible, we first need to develop a common reference to which different instruments can be related. One candidate for the common reference is the conceptual theory of the construct to be measured. It defines the variable and sets out its structure. However, today, conceptual theories are typically qualitative. At best, the theories suggest an order of items. As such, instruments cannot be quantitatively linked to conceptual theories. To overcome this problem, the conceptual theory needs to specify a quantitative link between a fundamental principle and properties of the measurement instrument. Specifically, that means revealing a measurement mechanism that explains item properties. Currently, examples of such advanced conceptual theories are still scarce [1, 35, 58, 76, 77, 78, 82].

A measurement mechanism in the social sciences corresponds to the most current definitions of units in the physical sciences, for example one meter as the unit of length defined as the distance light travels in a given interval of time in perfect vacuum. However, prior to this definition, an international prototype was used and referred to as a sort of “gold” standard of length. In a similar vein, the best available measurement instrument in social sciences could be seen as a gold standard [86]. Indeed, it is not uncommon in the social sciences to perceive one instrument as the undisputed benchmark of measurement. Thus, a reference would be established essentially by a consensus of scholars who agree on the gold standard similar to agreeing on a prototype of one kilo or one meter, the original definitions of the units of mass and length. Of course, the analogy is not perfect as the prototypes of one kilo and one meter demonstrably are magnitudes of quantitative properties. In the social sciences, the selection of one instrument as the gold standard is consensus-based. However, current psychometric models offer much stronger support for measurement instruments in the social sciences than ever before.

In terms of metrological traceability [12] in the social sciences, measurement mechanisms represent one possible realization of a fundamental reference to which various measurement instruments can be linked. As each instrument would be directly related to a reference that transcends all instruments and represents their common principle, we might call this type of linking vertical linking. Different instruments would then be related indirectly via their linkages to the common principle. Alternatively, instruments can be linked to one another directly. Here, the instruments involved would be on the same level. Hence, we might refer to this as horizontal linking. It has to be noted that in the physical sciences no such differentiation (vertical versus horizontal) is made, as traceability always implies an unbroken chain of calibrations against the fundamental reference as the root. From this it follows that addressing traceability in the social sciences by linking instruments with one another directly is an approach sui generis towards traceability. In the remainder of this chapter, the potential of linking instruments to one another will be demonstrated.

9.3.2 Creating Measurement Systems

Providing for metrological traceability by linking different instruments measuring the same construct, that is, the same measurand, means that measurements derived from different instruments are expressed in the same, hence comparable, metric. First, such a metric needs to be defined. The Rasch measurement model is particularly well suited to achieve this goal. The measurement instrument used in this regard corresponds to the gold standard mentioned earlier. For this task, it is therefore best to use the instrument with the most solid foundation in terms of its development.

Second, all instruments supposed to measure the same measurand need to be related to this metric. This can be done by co-calibrating the instruments with the gold standard instrument. In this regard, the invariance property of the Rasch model is particularly advantageous. As any set of items implies the same person measurement up to measurement error, scores on one instrument (one set of items) can be equated to scores on another instrument (another set of items) by aligning the respective estimates of person measurements. Particularly in the health sciences, the conversion of scores on one instrument to scores on another, mediated by an underlying common metric of the measurements, is referred to as a crosswalk [9, 85]. Metrological traceability among several instruments can then be established by a series of crosswalks. The instruments then form a measurement system.

Metrological traceability is by no means an end in itself. Rather, it is crucial for the scientific synthesis of research findings. Crosswalks allow for linking existing findings based on different instruments that would otherwise be hard to compare. In the case of a newly developed scale, crosswalks with existing instruments may also contribute to the acceptability and popularity of the new instrument by providing a smoother transition. Notwithstanding the practical potential of crosswalks, it has to be kept in mind that they do not shed light on the measurement mechanism. Crosswalks should be considered a transitory technology until conceptual theories are advanced enough to reveal the measurement mechanism.

9.3.3 Uncertainty

Apart from metrological traceability, a stated range of uncertainty [13] is a key feature of measurement. Every measurement, however carefully it may have been made, comes with some range of doubt as to its true value [10]. The range of uncertainty is a hallmark of quality in measurement. Uncertainty needs to be differentiated from error, which is the actual difference between the true value and the measured value, as the term is used in CTT. Thus, CTT is effectively a theory of error, where error is the difference between the unknown true score and the observed score as the measurement. In every instance, the error score is a given but unknown value; if the sources of error were known, the measured value could be corrected. In contrast, uncertainty is concerned with our doubt as to how close the true value is to the measured value. Hence, uncertainty requires stating a range around the measured value within which the true value lies with some specified probability. The more sources of error affect a measurement, the larger the discrepancy between true and measured values will be and, consequently, the wider the range of uncertainty. Knowing the range of measurement uncertainty is crucial, as it determines whether the measurements are fit for purpose.

The sources of error in physical measurement are manifold [10], and they can be mapped onto social measurement, too. First, the measurement instrument can lead to error because of inadequate or outdated item wording or an unsuitable shape and appearance. This underlines not only the importance of careful instrument development but also the necessity of continuously revisiting instruments over time. Second, the human beings whose properties we want to measure may vary over time with respect to the manifestations of the measurand or the measurand itself. Changes in the measurand itself are particularly relevant when transient states rather than stable traits are to be measured. However, even if the true value of the measurand stays the same, inevitable fluctuations in the human mind, memory, and information processing in general imply variation in human responses to measurement instruments. Third, the measurement process may affect error, which emphasizes the significance of the context in which measurements are taken. For example, administering a questionnaire in a loud environment or under other inconvenient conditions arguably increases error. Fourth, uncertainties in the calibration of the instrument itself contribute to error, since the inference of measurements of person properties ultimately depends on accurate item calibrations.

The various sources of error show that careful instrument development and a suitable administration of the instrument are fundamental requirements of social measurement. That said, fluctuations in the respondents imply random variation in the responses of individuals that cannot be completely avoided. This variation is accounted for by the probabilistic element in psychometric models. The role of item calibration highlights the mutual relationship between uncertainty in item calibration and uncertainty in person calibration. Uncertainty of person measurements is reduced by item calibrations with low uncertainty. Item calibration uncertainty, in turn, is a function of the sample size (the more subjects the better) and the sample composition. The closer the match of items and respondents in terms of their measurement values, in other words the better the targeting of the instrument, the more trustworthy the item calibrations are. Proper targeting is therefore crucial for uncertainty. What is more, proper targeting also increases the power of tests of fit, ensuring a more trustworthy assessment of the instrument’s suitability. The role of targeting also shows that uncertainty necessarily varies among individual respondents, as the targeting of the instrument to the individual respondent depends on the respondent’s true value.

With all other factors held constant, uncertainty is only a function of how many measurements are taken. In the social sciences, this implies that uncertainty decreases with an increasing number of items, as error cancels out to some extent. In practice, this holds only up to a point, though: an extremely long instrument induces response burden, which may diminish concentration and increase rather than decrease error. In CTT, measurement uncertainty is derived from an estimate of reliability, which is defined as the true variance divided by the total observed variance, or equivalently as 1 minus the error variance over the total variance. Combining reliability and the standard deviation of the person scores yields the standard error of measurement [84]. With perfect reliability, measurement uncertainty, that is the standard error of measurement, would be zero. With zero reliability, the standard error of measurement would equal the standard deviation of respondent scores. It follows that the standard error of measurement in CTT is both sample dependent and constant for all respondents irrespective of their true value.
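The CTT relationship described above can be sketched in a few lines, using the standard formula SEM = SD × √(1 − reliability). The function name and example values are hypothetical; the point is that the result depends only on sample-level quantities and is therefore the same for every respondent.

```python
import math


def ctt_sem(sd_observed: float, reliability: float) -> float:
    """Classical test theory SEM: SD of observed scores times sqrt(1 - reliability)."""
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must lie in [0, 1]")
    return sd_observed * math.sqrt(1.0 - reliability)


# Boundary cases discussed in the text (hypothetical SD of 10 score points)
print(ctt_sem(10.0, 1.0))  # perfect reliability: SEM of zero
print(ctt_sem(10.0, 0.0))  # zero reliability: SEM equals the observed SD
print(round(ctt_sem(10.0, 0.91), 2))  # one value applied to every respondent
```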

By contrast, in the Rasch model the estimation of uncertainty with respect to the person measurement is based on the information the observed responses contain specifically for a person with a given total score across all items in the scale. For each item, information (INF) is a function of the probability of each response category. In the case of dichotomous items, information simplifies to the product of the two response probabilities (see Eq. 9.1; Fischer [33, p. 294]). In the polytomous case, information is a function of all item thresholds (see Stone [81], for a formula, or Dodd and Koch [26] and Muraki [62], for an alternative but equivalent formulation).
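As a minimal sketch of the dichotomous case (Eq. 9.1), the functions below compute the probability of a positive response under the dichotomous Rasch model, P = exp(θ − δ)/(1 + exp(θ − δ)), and item information as P(1 − P). The symbols θ (person location) and δ (item location) and the function names are our own notation, assumed for illustration rather than taken from the chapter's equation.

```python
import math


def rasch_probability(theta: float, delta: float) -> float:
    """Probability of a positive response to a dichotomous Rasch item."""
    return 1.0 / (1.0 + math.exp(-(theta - delta)))


def dichotomous_information(theta: float, delta: float) -> float:
    """Item information as the product of the two response probabilities."""
    p = rasch_probability(theta, delta)
    return p * (1.0 - p)


print(dichotomous_information(0.0, 0.0))  # 0.25, the maximum, reached at theta == delta
print(round(dichotomous_information(2.0, 0.0), 4))  # information drops away from the item
```

Information is symmetric around the item location and is largest when person and item locations coincide.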

Information provided by each item adds up across items, yielding total information (INFscale) for the entire scale. The standard error of measurement (SEM) is then simply the inverse of the square root of INFscale (Eq. 9.2).
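A minimal sketch of Eq. 9.2 for the simplest case, assuming dichotomous Rasch items with hypothetical item locations (not the ABOUT–Dependence calibrations): item information P(1 − P) is summed across items, and the SEM is one over the square root of the total. Polytomous items would contribute their own item information instead.

```python
import math
from typing import Sequence


def scale_information(theta: float, deltas: Sequence[float]) -> float:
    """Total information: sum of P * (1 - P) over dichotomous Rasch items."""
    total = 0.0
    for delta in deltas:
        p = 1.0 / (1.0 + math.exp(-(theta - delta)))
        total += p * (1.0 - p)
    return total


def standard_error(theta: float, deltas: Sequence[float]) -> float:
    """SEM as the inverse of the square root of total scale information."""
    return 1.0 / math.sqrt(scale_information(theta, deltas))


# Hypothetical item locations in logits
deltas = [-1.5, -0.5, 0.0, 0.5, 1.5]
for theta in (-3.0, 0.0, 3.0):
    print(theta, round(standard_error(theta, deltas), 2))
```

Evaluating the SEM at several locations shows that it is smallest where the items are concentrated and grows towards the extremes of the scale.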

Assuming that the estimation error is approximately normally distributed, an interval of the estimated person measurement ± SEM provides a range of measurement uncertainty with a confidence level of about 68%. A confidence level of 95% can be achieved by multiplying the SEM by 1.96, or by 2 as an approximation. It should be noted that a symmetrical error distribution becomes rather implausible towards the extremes. Strictly speaking, no finite SEM can be estimated for extreme response patterns based on Eq. 9.2, as information would be zero. Therefore, the SEM for extreme scores is usually based on an extrapolation from the SEMs for all other scores.
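The interval construction can be expressed as a small helper. The estimate and SEM in the example are hypothetical logit values; the function assumes the approximate normality discussed above and is not meaningful for extreme scores, whose SEM is extrapolated.

```python
def uncertainty_interval(measure: float, sem: float, z: float = 1.0) -> tuple[float, float]:
    """Symmetric interval measure +/- z * SEM (z = 1 for about 68%, z = 1.96 for about 95%)."""
    half_width = z * sem
    return (measure - half_width, measure + half_width)


estimate, sem = 0.40, 0.55  # hypothetical person measure and SEM in logits
print(uncertainty_interval(estimate, sem))           # about 68% confidence
print(uncertainty_interval(estimate, sem, z=1.96))   # about 95% confidence
```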

The way the Rasch model estimates the SEM essentially means that the range of uncertainty for each respondent not only takes the number of items into account but also their position relative to the respondent. As a consequence, information usually peaks close to the center of the scale, where the average distance to item thresholds is minimal, whereas uncertainty increases notably when approaching the extreme ends of the scale. The exact shape of the information curve depends on all thresholds, though.

9.4 Illustrative Example: Measurement of Self-Reported Nicotine Dependence

9.4.1 Purpose

As an illustration, the example of measuring nicotine dependence based on self-report instruments demonstrates the creation of a crosswalk, its usefulness, caveats and limitations.

9.4.2 Background and Literature

Nicotine dependence is one of several constructs that are important when it comes to understanding tobacco use [54]. The use of tobacco products, most notably cigarettes, is still the largest preventable cause of disease and premature death worldwide [46]. Tobacco-related health threats can best be prevented, or reduced, by cessation [83]. However, some tobacco users continue to use these products for various reasons. These users might benefit from less harmful products, also known as reduced-risk products, which also provide nicotine but otherwise reduce the amount of harmful chemicals [69]. Monitoring nicotine dependence in the transition phase may show how dependence is transferred from one product to another and how it develops over time. As tobacco and/or other nicotine-containing products (TNPs) are subject to regulatory approval, authorities such as the Food and Drug Administration (FDA) in the USA also benefit from properly developed measurement instruments and traceable, comparable measurements of variables of interest.

The concept of nicotine dependence goes beyond physical addiction. It is a relatively complex construct consisting of behavioral and perceptual aspects. Apart from nicotine addiction as the key driver of dependence on TNPs, environmental and situational cues also have an impact [11]. The intricacy of measuring nicotine dependence is further exacerbated by the multiplicity of different TNPs available today. The first self-report instruments measuring nicotine dependence were developed for cigarettes, which historically were the predominant source of nicotine. Published in 1978, the eight-item Fagerström Tolerance Questionnaire (FTQ; [31]) was one of the first attempts in this regard. The FTQ was substantiated based on correlations with indicators of physical dependence (heart rate, body temperature) in experiments. Subsequently, the Fagerström Test for Nicotine Dependence (FTND; [42]) was developed as an improvement of the FTQ. The FTND is a six-item questionnaire related to smoking behavior. Due to its brevity and the meaningfulness of its content, the FTND has become one of the most widely used legacy measurement instruments in the field. Accordingly, it is often considered a gold standard for self-reported assessment in the field of nicotine dependence to the present day [65].

The context of measuring nicotine dependence through self-report instruments has changed, though, since the FTND was introduced. A range of alternative TNPs, such as smokeless tobacco, cigars or, more recently, electronic cigarettes, have become available [20, 21], and consumption of multiple TNPs in parallel can no longer be neglected [8]. In view of this development, the FTND was recently renamed the Fagerström Test for Cigarette Dependence [32]. For alternative TNPs gaining in popularity, new self-report instruments for measuring dependence on these products have been suggested (e.g., the Fagerström Test for Nicotine Dependence–Smokeless Tobacco questionnaire, FTND-ST [28], or the Penn-State Electronic Cigarette Dependence Index [36]). To complicate things further, product-specific instruments are not suitable for concurrent users of multiple TNPs. The multiplicity of instruments raises problems of comparability of measurements based on different instruments, as each instrument has its own metric. From a metrology point of view, the incommensurability of measurements is a major shortcoming.

In order to overcome the limitations in comparable measurement of nicotine dependence, a new instrument, the ABOUT–Dependence self-report instrument, was developed [23]. The self-report instrument was intended to capture the individual perspective of dependence on a range of TNPs as well as the concurrent use of multiple TNPs. From the outset, the new scale was aimed not at adding another self-report instrument to the existing inventory of dependence scales but at providing the basis for comparable measurements of nicotine dependence irrespective of TNP usage patterns. The ABOUT–Dependence was to establish a metric of nicotine dependence to which existing legacy instruments can be referenced through “crosswalks”.

9.4.3 Development of the ABOUT–Dependence Instrument

Based on a literature review, concept elicitation interviews with TNP users (n = 40), and input from experts [23, 24], a conceptual model of nicotine dependence was generated. It comprised seven aspects of dependence experienced by TNP users (urgency to use upon waking up, compulsion to use, difficulty to cease using, need to use to function normally, automaticity of using, priority of using over social responsibilities, and self-awareness of dependence), thus covering perceptual phenomena as well as self-reported behavioral aspects. The common theme behind these aspects is loss of control on the part of the product user. At this stage, a unidimensional structure was expected, but no order relationships among the aspects of dependence were hypothesized.

A first draft of the instrument, comprising 19 potential items that best represented the aspects of the concept of interest, was then evaluated in cognitive debriefing interviews (n = 40) to ensure proper understanding of instructions, items, and response options. Although six items were identified as conceptually redundant and one item appeared to be relevant only to some of the participants, all 19 items were included in a quantitative study and tested psychometrically to identify the best-working items. Three different response scales adapted to the item content were administered. Two items capturing the urgency and pervasiveness of product use over the past 7 days (see Table 9.1) were presented with a six-category scale (0–5 min, 6–15 min, 16–30 min, 31–60 min, more than 1–3 h, more than 3 h). Twelve items (eight in the final set) assessing the frequency of aspects of dependence over the past 7 days were administered with a five-category response scale (never, rarely, sometimes, most of the time, all the time). The remaining five items (two in the final set) referring to the intensity of current perceptions had a different five-category response scale (not at all, a little, moderately, very much, extremely). All studies were conducted in the United States.

9.4.4 Study Design, Data Sources and Sampling

The quantitative study, approved by the New England IRB (NEIRB#:120180022), consisted of a cross-sectional, two-wave, internet-based survey with purposive stratified sampling of adults legally authorized to purchase TNPs in the United States. Participants were identified via the proprietary GfK (Growth from Knowledge) consumer online panel KnowledgePanel®, which is representative of the US population. To ensure adequate coverage of the intended target population, quotas were set so that exclusive users of one TNP (n = 1181) and users of multiple TNPs (polyusers; n = 1253) were roughly equally represented, for a total sample size of 2434 respondents. Among exclusive users, approximately equal numbers of users of cigarettes (n = 250), smokeless tobacco (n = 250), e-cigarettes (n = 252), and cigars/cigarillos (n = 250) were included, while the remaining 179 participants were exclusive users of pipes, waterpipes, or nicotine replacement therapy (NRT) products. Additional quotas on age, sex, and education were applied. Wave 2, which allowed assessment of stability over time (test–retest reliability), included 678 polyusers and 743 exclusive users. Table 9.2 provides descriptive summary statistics of the sample of the quantitative study.

9.4.5 Psychometric Methods

The psychometric analysis was based on the unrestricted Rasch model for polytomous responses (partial credit model; [2, 4, 55]; see Eq. 9.3) using the computer software RUMM2030 [6], which applies the pairwise estimation algorithm [91] for item calibration and weighted maximum-likelihood estimation [51] for person calibration.
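Eq. 9.3 itself is not reproduced here. As a sketch under stated assumptions, the partial credit model in one common parameterization gives the probability of a response in category x of an item with thresholds τ1, …, τm as exp(Σk≤x(θ − τk)) divided by the sum of the same expression over all categories 0, …, m, with an empty sum for category 0. The notation, function names, and example thresholds are ours and hypothetical; RUMM2030 reports thresholds relative to an item location, which to our understanding is a reparameterization of the same model. The expected item score is the quantity that underlies conversions from raw scores to linear measures.

```python
import math
from typing import Sequence


def pcm_probabilities(theta: float, thresholds: Sequence[float]) -> list[float]:
    """Category probabilities P(X = 0..m) under the partial credit model.

    The numerator for category x is exp(sum_{k=1..x} (theta - tau_k));
    category 0 has an empty sum, i.e. a numerator of exp(0) = 1.
    """
    cumulative = [0.0]
    for tau in thresholds:
        cumulative.append(cumulative[-1] + (theta - tau))
    top = max(cumulative)  # subtract the maximum to avoid overflow in exp
    numerators = [math.exp(c - top) for c in cumulative]
    denominator = sum(numerators)
    return [n / denominator for n in numerators]


def expected_score(theta: float, thresholds: Sequence[float]) -> float:
    """Expected item score: sum over categories of x * P(X = x)."""
    return sum(x * p for x, p in enumerate(pcm_probabilities(theta, thresholds)))


# Hypothetical five-category item (four thresholds, in logits)
taus = [-1.2, -0.3, 0.4, 1.5]
print([round(p, 3) for p in pcm_probabilities(0.0, taus)])
print(round(expected_score(0.0, taus), 3))
```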


The justification of measures was based on empirical evidence of data meeting the requirements of measurement as set out by RMT [43]. This included:

1. Assessment of fit of observed item responses to expected responses by means of tests of fit that provide approximately chi-square-distributed statistics [64, 74]; these fit statistics were applied at an adjusted sample size of 500 to avoid excessive power of the test of fit and deviations from the chi-square distribution;

2. Assessment of local independence of items by means of residual correlations, which should be close to zero, ensuring that all items contribute equally and independently to the total score [25, 53, 89];

3. Assessment of unidimensionality by means of principal component analysis of item residuals, expecting that correlations of residuals are all random and no common underlying component exists [38, 73];

4. Assessment of item invariance by tests for differential item functioning for user type, TNPs, and various sociodemographic variables, which examine whether the observed responses depend only on the person location and not on third variables [18, 39];

5. Assessment of reliability, defined as the proportion of true variance in the total variance of person measures, which is captured by the person-separation index in RMT [3]; and

6. Assessment of targeting by means of a graphical matching of item and person locations (targeting plot), including the information curve relevant for uncertainty [15, 40].
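Local independence can be screened by correlating standardized item residuals across persons. The sketch below, using NumPy, flags item pairs whose residual correlation exceeds the average residual correlation by a margin. The margin of 0.2 is an assumed rule of thumb rather than the criterion used in the study, and the residuals are simulated, with one deliberately redundant item pair mimicking the redundancies expected from the qualitative work.

```python
import numpy as np


def flag_local_dependence(residuals: np.ndarray, margin: float = 0.2) -> list[tuple[int, int, float]]:
    """Flag item pairs whose residual correlation exceeds the mean off-diagonal value by `margin`.

    `residuals` is a persons x items array of standardized residuals
    (observed minus expected score, divided by the model standard deviation).
    """
    corr = np.corrcoef(residuals, rowvar=False)  # columns (items) are the variables
    upper = np.triu_indices(corr.shape[0], k=1)  # each item pair once
    mean_off_diagonal = corr[upper].mean()
    flagged = []
    for i, j in zip(*upper):
        if corr[i, j] > mean_off_diagonal + margin:
            flagged.append((int(i), int(j), float(corr[i, j])))
    return flagged


# Simulated residuals for 500 persons and 5 items; item 1 largely mirrors item 0
rng = np.random.default_rng(1)
base = rng.standard_normal((500, 5))
base[:, 1] = 0.7 * base[:, 0] + 0.3 * base[:, 1]
print(flag_local_dependence(base))
```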

Based on the qualitative studies, six items were suspected to be redundant; that is, their content was mirrored in six other items. For these pairs of items, local independence was not expected to hold. It was further assumed that one item was problematic, potentially resulting in misfit. For the final item-reduced scale(s), a minimum level of reliability (person-separation index) of 0.7 was deemed acceptable, while 0.8 or more was considered desirable.
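The person-separation index can be sketched as the proportion of the observed variance of person measures that is not error variance, with error variance taken as the mean squared SEM. The measures and SEMs below are hypothetical, and RUMM2030's exact computation may differ in detail; the classification uses the 0.7 and 0.8 thresholds stated above.

```python
import statistics
from typing import Sequence


def person_separation_index(measures: Sequence[float], sems: Sequence[float]) -> float:
    """PSI = (observed variance of measures - mean error variance) / observed variance."""
    if len(measures) != len(sems) or len(measures) < 2:
        raise ValueError("need at least two persons, each with one SEM")
    observed_var = statistics.variance(measures)
    error_var = statistics.fmean(se ** 2 for se in sems)
    return (observed_var - error_var) / observed_var


measures = [-1.8, -0.9, -0.2, 0.4, 1.1, 2.0]  # hypothetical logit measures
sems = [0.62, 0.55, 0.52, 0.52, 0.56, 0.66]    # hypothetical SEMs
psi = person_separation_index(measures, sems)
verdict = "desirable" if psi >= 0.8 else "acceptable" if psi >= 0.7 else "below minimum"
print(round(psi, 2), verdict)
```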

9.4.6 Results of Psychometric Analyses

Since the initial analysis of all 19 items showed some indication of a lack of strict unidimensionality, the conceptualization was reconsidered, and, eventually, three domains of self-reported dependence were proposed:

- Extent of use, covering the timing, urgency, or pervasiveness with which the product[s] is/are used;

- Behavioral impact, comprising the behavioral aspects of dependence and its impact on daily activities; and

- Signs and symptoms, related to the perception of symptoms of dependence experienced by TNP users.

Analyses within each domain confirmed redundancy where it had been expected and also identified the item suspected to misfit as inadequate. The item-reduced version consisted of 12 items (see Table 9.3 for item locations and fit statistics, and Table 9.5 for descriptive item statistics), still providing full content coverage from a qualitative point of view, as only redundant items had been eliminated. Reliability was acceptable (0.73) for the two-item extent-of-use scale and higher than 0.80 for the five-item behavioral-impact scale (0.81) and the five-item signs-and-symptoms scale (0.89). Table 9.3 summarizes the psychometric properties of the ABOUT–Dependence self-report instrument; the order in which items are listed reflects their hierarchy. For signs and symptoms, low dependence is represented by a strong desire, followed by the feeling that completely quitting is difficult, while high dependence is associated with the impression that it is hard to control the need or urge to use the TNP. In the behavioral-impact domain, automaticity (using more than intended) marks the lower end of dependence, while avoiding activities altogether where one could not use the TNP is at the upper end.

A noteworthy observation was that the three domain scores correlated between 0.5 and 0.8 in the entire sample, raising the question whether a higher-order, essentially unidimensional variable could be established by combining the three domain scores. To this end, items within each domain were added up to sum scores, which were treated as super-items or subtests. Adequate fit of the data to the model supported a common, essentially unidimensional variable of self-reported dependence (Table 9.4). As a higher-order variable, the composite measure, named the ABOUT–Dependence Index, captures the common variance shared by the three domain scores. Any domain-specific variance is attributed to measurement error in this approach. Therefore, it is important to compare the reliability estimate with the reliability of the individual scales at the domain level. Since reliability remained high (PSI = 0.82), the composite measure was justifiable from a statistical point of view. In terms of invariance, the extent-of-use domain required adjustment for differential item functioning by the type of TNP used. This was accomplished by estimating TNP-specific parameters for this subtest. In contrast, the domains of behavioral impact and signs and symptoms demonstrated invariance with respect to respondents using different TNPs. Thus, a unified metric for self-reported dependence could be defined, allowing for comparisons across user types and different TNPs. In the combined unidimensional measure, signs-and-symptoms items are easier to endorse than items in the behavioral-impact domain, implying that perceived symptoms set in first, whereas behavioral consequences signal higher dependence. The composite measure thus also appeared to be conceptually meaningful.
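The construction of super-items can be sketched as summing item scores within each domain, so that each domain enters the higher-order analysis as one polytomous item whose maximum score is the sum of its items' maxima. The item identifiers, score ranges, and responses below are hypothetical and cover only two of the three domains for brevity.

```python
from typing import Mapping, Sequence


def build_super_items(responses: Mapping[str, int], domains: Mapping[str, Sequence[str]]) -> dict[str, int]:
    """Sum a respondent's item scores within each domain (one super-item per domain)."""
    return {domain: sum(responses[item] for item in items) for domain, items in domains.items()}


def super_item_max(item_max: Mapping[str, int], domains: Mapping[str, Sequence[str]]) -> dict[str, int]:
    """Maximum score of each super-item: the sum of its items' maximum scores."""
    return {domain: sum(item_max[item] for item in items) for domain, items in domains.items()}


# Hypothetical item identifiers, maximum scores, and one respondent's answers
domains = {"extent_of_use": ["EU1", "EU2"], "signs_symptoms": ["SS1", "SS2", "SS3"]}
item_max = {"EU1": 5, "EU2": 5, "SS1": 4, "SS2": 4, "SS3": 4}
responses = {"EU1": 3, "EU2": 2, "SS1": 4, "SS2": 1, "SS3": 0}
print(build_super_items(responses, domains))  # {'extent_of_use': 5, 'signs_symptoms': 5}
print(super_item_max(item_max, domains))      # {'extent_of_use': 10, 'signs_symptoms': 12}
```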

It should be noted that the deviation from strict unidimensionality in the analysis of all items could also be accounted for by applying a multidimensional Rasch model [17, 49, 70]. In the interest of a parsimonious representation of the measurand and the correspondence of the newly developed instrument and the legacy instruments required for establishing crosswalks, we primarily, and successfully, pursued the higher-order variable approach.

The degree to which items match respondents (cigarette users) with regard to the measurand is visualized in the targeting plots for each of the three domains and the ABOUT–Dependence Index (Fig. 9.1). While the distributions of item thresholds (lower part of each plot) and person measurements (upper part) match well for the extent-of-use domain and the signs-and-symptoms domain, there is some mismatch for the behavioral-impact domain, suggesting that these items generally indicate higher levels of dependence than those observed among respondents in the sample. This finding is not necessarily a disadvantage, as it implies that the behavioral-impact domain captures the higher end of self-reported dependence, ensuring that the ABOUT–Dependence Index, which combines all three domains, covers the underlying latent variable very broadly. In fact, the targeting of the ABOUT–Dependence Index is very good, implying reliable estimation of item thresholds, robust fit assessment, and reasonable ranges of uncertainty for the vast majority of respondents.

Targeting plots of the three ABOUT–Dependence domains and the ABOUT–Dependence Index for cigarette users. (a) Targeting plot of the extent-of-use domain. (b) Targeting plot of the signs-and-symptoms domain. (c) Targeting plot of the behavioral-impact domain. (d) Targeting plot of the ABOUT–Dependence Index

The validity of measurements by the ABOUT–Dependence instrument has been further substantiated by addressing several aspects of validity. Expert agreement that the item content is highly relevant and matches the conceptual definition of the variable provided evidence of content validity. Internal construct validity has been supported by fit of the data to the Rasch model (see Tables 9.3 and 9.4) and a meaningful hierarchy of items in terms of the amount of dependence the items represent [23, 24]. Correlations between measurements by the ABOUT–Dependence instrument and measurements from product-specific instruments (ranging between 0.83 and 1.00 when corrected for attenuation due to measurement error) demonstrated convergent validity, that is, the correspondence of measurements of the same variable from different self-report instruments. These findings also support construct validity and justify the establishment of crosswalks.
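The correction for attenuation mentioned above is Spearman's classical formula, which divides the observed correlation by the square root of the product of the two reliabilities. A minimal sketch with hypothetical values; corrected values above 1 can occur through sampling error and need to be interpreted with care.

```python
import math


def disattenuated_correlation(r_xy: float, rel_x: float, rel_y: float) -> float:
    """Spearman's correction for attenuation: r_xy / sqrt(rel_x * rel_y)."""
    if not (0.0 < rel_x <= 1.0 and 0.0 < rel_y <= 1.0):
        raise ValueError("reliabilities must lie in (0, 1]")
    return r_xy / math.sqrt(rel_x * rel_y)


# Hypothetical: observed r = 0.62 between two instruments with reliabilities 0.82 and 0.65
print(round(disattenuated_correlation(0.62, 0.82, 0.65), 2))
```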

The person location measures, obtained by non-linear transformations of the sum score across all items (transformations that differ by user type and TNP because of differential item functioning), are expressed in the same metric and are, thus, comparable. However, this metric is rather technical, as it refers to natural logarithms of odds (logits). Therefore, the linear measures on the logit scale were linearly transformed into a more intuitively interpretable and accessible metric ranging from 0 to 100 (Table 9.5).
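The linear transformation to a 0-to-100 metric can be sketched as follows. The exact anchors used for the ABOUT–Dependence metric are not stated in this section, so the logit endpoints below are hypothetical. Because the transformation is linear, an SEM in logits is rescaled by the slope alone, which is how uncertainty carries over to the new metric.

```python
def logit_to_0_100(measure: float, logit_min: float, logit_max: float) -> float:
    """Linear map with logit_min -> 0 and logit_max -> 100."""
    if logit_max <= logit_min:
        raise ValueError("logit_max must be greater than logit_min")
    return 100.0 * (measure - logit_min) / (logit_max - logit_min)


def sem_to_0_100(sem_logit: float, logit_min: float, logit_max: float) -> float:
    """An SEM is multiplied by the slope of the transformation only (no shift)."""
    if logit_max <= logit_min:
        raise ValueError("logit_max must be greater than logit_min")
    return 100.0 * sem_logit / (logit_max - logit_min)


# Hypothetical endpoints, e.g. the measures assigned to the minimum and maximum raw scores
LOW, HIGH = -5.0, 5.0
print(logit_to_0_100(0.0, LOW, HIGH))  # 50.0
print(sem_to_0_100(0.5, LOW, HIGH))    # 5.0
```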

9.4.7 Addressing Traceability: Method

The new self-report instrument and existing self-report instruments measuring dependence are based on the same holistic concept of dependence, comprising behavioral aspects (self-reported use of TNPs), impact of the experience of dependence on daily activities, and perceptions of signs and symptoms of dependence experienced by TNP users. The conceptual correspondence was also supported by convergent validity. It is therefore desirable to establish a common metric of dependence and make measurements based on different instruments comparable.

The alignment of multiple instruments measuring the same measurand, or their equating, has a long research tradition, with the Rasch model lending itself to this task because of its invariance property [75, 88]. The Rasch model allows for the construction of test networks with comparable measurements [30]. Specifically in health measurement, linking instruments by equating is often referred to as a “crosswalk” between instruments. While equating generally means that linear person measures based on parameter estimation in the Rasch model are used, crosswalks typically accommodate the still widespread use of raw scores: a crosswalk converts the raw score on one instrument into an equivalent raw score on another instrument. While this is arguably useful, moving from raw scores to linear measures is a step long overdue. Hence, the illustration of a crosswalk for self-reported dependence will not only provide a conversion of different raw scores but, more importantly, establish a common linear metric for different instruments by means of equating.

Two types of equating can be distinguished with either items or persons forming the link in the test design that ensures comparability of the metric [90]. In vertical equating, or common item equating, measures for different persons are placed onto the same metric by administering a common set of items to all persons while adding different items for different groups of persons [48, 63]. Essentially, this means that different instruments partially overlap. Common-item equating is widely used in education. In contrast, horizontal equating, or common-person equating, uses the same persons but mutually exclusive items forming different instruments [56]. Crosswalks are an example of common-person equating. While common-item equating sometimes suffers from targeting problems (the common items are relatively easy for one group of respondents but relatively hard for another), no such difficulties occur in common-person equating provided the instruments to be equated are properly targeted towards the sample. Related to equating but focusing on the unit of measurement, Humphry and Andrich [45] developed a framework for aligning measurements with a different unit. The approach has recently been applied to consumer data in marketing based on differently directed response scales [72].
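Common-person equating can be sketched in its simplest form, assuming the two instruments have been calibrated separately on the same persons and share the same unit: the linking constant is the difference between the mean person measures on the two metrics, and adding it to instrument B's locations expresses them on instrument A's metric. This mean-shift approach is a generic technique named here for illustration; the crosswalk in this chapter instead anchors the ABOUT–Dependence subtests in a joint calibration. All values are hypothetical.

```python
import statistics
from typing import Sequence


def common_person_shift(on_a: Sequence[float], on_b: Sequence[float]) -> float:
    """Constant that moves measures from instrument B's metric onto instrument A's."""
    if len(on_a) != len(on_b) or not on_a:
        raise ValueError("need measures for the same, non-empty set of persons")
    return statistics.fmean(on_a) - statistics.fmean(on_b)


def relocate(locations_on_b: Sequence[float], shift: float) -> list[float]:
    """Express instrument B's item (or person) locations on instrument A's metric."""
    return [loc + shift for loc in locations_on_b]


# Hypothetical measures of the same five respondents from two separate calibrations
on_a = [-1.2, -0.4, 0.1, 0.8, 1.5]
on_b = [-1.9, -1.0, -0.6, 0.2, 0.8]
shift = common_person_shift(on_a, on_b)
print(round(shift, 2))
print([round(x, 2) for x in relocate([-0.5, 0.0, 0.7], shift)])
```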

9.4.8 Addressing Traceability: Establishing a Crosswalk

In the following, the establishment of a crosswalk between the newly developed ABOUT–Dependence instrument and the most widely used legacy instrument, the FTND, is demonstrated. The same can be done for any other existing instrument assessing nicotine dependence.

The crosswalk was based on a co-calibration of responses to the ABOUT–Dependence instrument and to the FTND. The sample was confined to exclusive users of cigarettes, for whom ABOUT–Dependence and FTND measurements were theoretically comparable. For the FTND, a subtest was defined, while the three subtests of the ABOUT–Dependence instrument were retained. In order to preserve the previously established metric, subtest parameter values for the ABOUT–Dependence instrument were anchored to the values previously estimated from the whole study sample. Therefore, only the subtest parameters of the FTND were estimated, linking it to the metric established by the ABOUT–Dependence instrument. Next, conversions of raw scores to linear measures were retrieved for the ABOUT–Dependence instrument on the one hand and the FTND on the other. Each raw score on the FTND was then matched with a score on the ABOUT–Dependence instrument such that the associated linear measures, which are on the same metric, were as close as possible.
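The matching step can be sketched as a nearest-neighbour search over two raw-score-to-measure tables expressed on the common metric. The tables below are hypothetical (they are not Tables 9.6 or 9.7). Ties would be resolved in favour of the score listed first, here the lower one, since the tables are in ascending order.

```python
from typing import Mapping


def crosswalk(source: Mapping[int, float], target: Mapping[int, float]) -> dict[int, int]:
    """For each source raw score, pick the target raw score whose linear measure is nearest."""
    return {
        raw: min(target, key=lambda t: abs(target[t] - measure))
        for raw, measure in source.items()
    }


# Hypothetical raw score -> linear measure (logits) tables on a common metric
short_form = {0: -3.0, 1: -1.0, 2: 0.0, 3: 1.2}
long_form = {0: -3.5, 1: -2.2, 2: -1.1, 3: -0.4, 4: 0.1, 5: 0.9, 6: 1.8}
print(crosswalk(short_form, long_form))  # {0: 0, 1: 2, 2: 4, 3: 5}
print(crosswalk(long_form, short_form))  # {0: 0, 1: 0, 2: 1, 3: 2, 4: 2, 5: 3, 6: 3}
```

Because the longer instrument has more raw scores, the reverse mapping is many-to-one, just as several ABOUT–Dependence scores map onto the same FTND score.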

As an illustration, a raw score of 1 on the FTND implied a linear measure on the common metric of −0.84 (see Table 9.6). The closest match on the ABOUT–Dependence instrument was −0.81, which corresponded to a raw score of 7. Therefore, a score of 7 on the ABOUT–Dependence instrument was equivalent to a score of 1 on the FTND. The same was true for all raw scores between 5 and 9 on the ABOUT–Dependence instrument, as their associated linear measures were closest to −0.84, the measure associated with an FTND raw score of 1. Since all ABOUT–Dependence raw scores were also transformed into a linear 0-to-100 metric, FTND scores can also be converted to that metric. This is highly recommended, as raw scores are non-linear whereas measures in the 0-to-100 metric are linear. It is particularly relevant when establishing further crosswalks to other legacy instruments along the lines of the crosswalk to the FTND; then the use of the 0-to-100 metric is crucial, as it takes differential item functioning into account. Figure 9.2 shows a graphical representation of the crosswalk. Table 9.7 shows the crosswalk between the FTND and the ABOUT–Dependence instrument.

9.4.9 Comparison of Predicted and Observed Scores on the Two Instruments

The crosswalk translates raw scores on one instrument into raw scores on the other. Consequently, the score on each instrument can be predicted from the score on the other. Table 9.8 shows the FTND raw score predicted from the ABOUT–Dependence Index raw score via the crosswalk, compared with the actually observed FTND raw scores. The means of the observed scores correspond reasonably well with the predicted FTND scores. A regression of the mean observed score on the predicted score reveals a slight regression-to-the-mean effect (unstandardized regression coefficient β1 = 0.79), which can be attributed to measurement error in the ABOUT–Dependence Index, as measurement error in the FTND is drastically reduced by taking the mean of the observed scores. On average, the crosswalk works well, though (Pearson correlation r = 0.93 between the predicted score and the mean observed score). At the individual level, the relationship is weaker (r = 0.69 between the predicted FTND raw score and the individually observed FTND score), which is due to measurement uncertainty in the FTND. These findings need to be interpreted with caution, though, as the available sample sizes for predicted scores of 0 and above 6 are small.
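The regression-to-the-mean check can be sketched as an ordinary least-squares slope of the mean observed score on the predicted score, together with the Pearson correlation. The data below are hypothetical means constructed to shrink towards the centre with a slope of 0.8; whether the study weighted score groups by their size is not stated, so this sketch is unweighted. A slope below 1 combined with a high correlation is the pattern described above.

```python
import math
import statistics
from typing import Sequence


def slope_and_correlation(predicted: Sequence[float], observed: Sequence[float]) -> tuple[float, float]:
    """Unweighted OLS slope of observed on predicted, and their Pearson correlation."""
    mean_x = statistics.fmean(predicted)
    mean_y = statistics.fmean(observed)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(predicted, observed))
    sxx = sum((x - mean_x) ** 2 for x in predicted)
    syy = sum((y - mean_y) ** 2 for y in observed)
    return sxy / sxx, sxy / math.sqrt(sxx * syy)


predicted = [0, 1, 2, 3, 4, 5, 6]
observed_means = [3 + 0.8 * (p - 3) for p in predicted]  # hypothetical shrunken means
slope, r = slope_and_correlation(predicted, observed_means)
print(round(slope, 2), round(r, 2))  # 0.8 1.0
```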

Conversely, Table 9.9 compares the ABOUT–Dependence Index raw score predicted from the FTND raw score with the actually observed ABOUT–Dependence Index raw score. While the means of the observed raw scores generally increase with the predicted score (except at the upper end), the regression-to-the-mean effect is now much stronger (unstandardized regression coefficient β1 = 0.52), which is mainly a consequence of the measurement error in the FTND. This regression to the mean compromises the prediction of the ABOUT–Dependence Index from the FTND raw score, even though the predicted score and the mean observed score correlate at r = 0.98. At the individual level, the relationship between the predicted ABOUT–Dependence Index raw score and the individually observed ABOUT–Dependence Index score (r = 0.72) is similar to the relationship observed for predicted and observed FTND scores. The regression-to-the-mean effect remains strong, though (unstandardized regression coefficient β1 = 0.49).
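Why the slope is flatter when predicting from the FTND can be illustrated with the classical errors-in-variables result: regressing an outcome on a predictor measured with error shrinks the slope by the factor var(T) / (var(T) + var(E)). The sketch below is a simplified analogue, assuming a latent variance of 1 and two hypothetical error standard deviations (0.5 for a longer, more precise instrument and 1.0 for a shorter one); these values are not the study's SEMs.

```python
import numpy as np


def attenuated_slope(error_sd: float, n: int = 100_000, seed: int = 0) -> float:
    """Slope of an error-free outcome regressed on a predictor measured with error."""
    rng = np.random.default_rng(seed)
    latent = rng.normal(0.0, 1.0, n)                    # true measures, variance 1
    predictor = latent + rng.normal(0.0, error_sd, n)   # predictor with measurement error
    outcome = latent                                    # outcome kept error-free for clarity
    slope, _ = np.polyfit(predictor, outcome, deg=1)
    return slope


for label, sd in [("smaller error (more items)", 0.5), ("larger error (fewer items)", 1.0)]:
    expected = 1.0 / (1.0 + sd**2)   # var(T) / (var(T) + var(E)) with var(T) = 1
    print(f"{label}: simulated slope {attenuated_slope(sd):.2f}, expected {expected:.2f}")
```

The larger the error in the predicting instrument, the stronger the regression to the mean, which matches the direction of the difference between Tables 9.8 and 9.9.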

Every sum score on the FTND can be converted to a corresponding sum score on the ABOUT–Dependence instrument by looking up the score in bold in columns 1 and 5 and reading the ABOUT–Dependence score in the same row in columns 4 and 8, respectively. Vice versa, every sum score on the ABOUT–Dependence instrument in columns 4 and 8 can be converted to an equivalent score on the FTND in columns 1 and 5, respectively (the corresponding score may appear in parentheses). Each sum score on either instrument can also be converted to a measure in the transformed 0-to-100 metric in columns 2/3 and 6/7, respectively. In these columns, values in parentheses state the range of uncertainty, that is, a 68% confidence interval for the true measure. For a 95% interval, these values need to be multiplied by 1.96 (or approximately 2). For extreme scores (0 and 100), uncertainty is based on extrapolated estimates of the standard error of measurement.
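Turning a tabulated SEM into an interval is simple arithmetic: the measure plus or minus one SEM gives the 68% range, and plus or minus 1.96 SEM gives the 95% range. The sketch below assumes a hypothetical table entry (a measure of 50 with an SEM of 5 on the 0-to-100 metric); the function name `uncertainty_interval` is illustrative only.

```python
def uncertainty_interval(measure: float, sem: float, z: float = 1.0) -> tuple[float, float]:
    """Return measure +/- z * SEM on the 0-to-100 metric.

    z = 1.0 gives the 68% interval stated in the crosswalk table;
    z = 1.96 (or roughly 2) gives a 95% interval.
    """
    half_width = z * sem
    return (measure - half_width, measure + half_width)


# Hypothetical table entry: measure 50 with SEM 5 (illustrative values only).
print(uncertainty_interval(50.0, 5.0))            # (45.0, 55.0)
lo, hi = uncertainty_interval(50.0, 5.0, z=1.96)
print(f"{lo:.1f} to {hi:.1f}")                    # 40.2 to 59.8
```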

9.4.10 Measurement Uncertainty of Self-Reported Dependence

Crosswalks enable the expression of measurements based on different instruments measuring the same variable in a common metric. That said, the original uncertainty in the measurements is retained. The higher number of items in the ABOUT–Dependence instrument compared to the FTND implies that its measurements are associated with lower uncertainty, that is, smaller standard errors of measurement (SEM; [5]). Adding and subtracting one SEM around the estimated measure yields a 68% interval as the range of uncertainty. The difference in uncertainty is also reflected in the fact that, with the exception of a score of 48, multiple raw score values on the ABOUT–Dependence instrument map onto the same score on the FTND. Table 9.7 lists the range of uncertainty for measurements based on the FTND versus the ABOUT–Dependence for each measure on the 0-to-100 metric (the SEM is stated in parentheses; adding it to and subtracting it from the measurement value yields a 68% confidence interval). Figure 9.3 shows the SEM of measures based on the ABOUT–Dependence versus the FTND for cigarettes. Uncertainty is smallest near the center of the scale (the exact location depends on all item threshold locations) and increases toward the extremes, resulting in a U-shaped curve. The lowest uncertainty for the ABOUT–Dependence instrument is at about 60 on the 0-to-100 metric; for the FTND, it is reached at about 70 on the common metric. Generally speaking, the ABOUT–Dependence instrument provides measurements with approximately half the uncertainty of the FTND. What is more, the lower number of items confines the FTND to the range between 27 and 80.
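The U-shaped SEM curve and the advantage of more items both follow from item information. The sketch below assumes the partial-credit parameterization of the polytomous Rasch model (an assumption; the chapter's own model specification is given elsewhere), in which an item's information at θ equals the variance of its score, and SEM = 1 / sqrt(test information). All thresholds are hypothetical and expressed in logits; since the 0-to-100 metric is a linear transformation, the shape of the curve carries over.

```python
import math

# Hypothetical item thresholds in logits (NOT the FTND or ABOUT-Dependence calibrations).
short_instrument = [[-1.0], [0.0], [1.0], [-1.5, 0.0, 1.5], [-0.5, 0.5], [0.5]]
long_instrument = [[-2.0, -1.0, 0.0], [-1.0, 0.0, 1.0], [0.0, 1.0, 2.0]] * 4


def category_probabilities(theta: float, thresholds: list[float]) -> list[float]:
    """Partial-credit category probabilities for one item at location theta."""
    # Category k has log-numerator sum_{j<=k} (theta - tau_j); category 0 has 0.
    log_num = [0.0]
    for tau in thresholds:
        log_num.append(log_num[-1] + theta - tau)
    top = max(log_num)                        # subtract the max for numerical stability
    expo = [math.exp(z - top) for z in log_num]
    total = sum(expo)
    return [e / total for e in expo]


def item_information(theta: float, thresholds: list[float]) -> float:
    """Information of a Rasch-family item = variance of the item score at theta."""
    probs = category_probabilities(theta, thresholds)
    mean = sum(k * p for k, p in enumerate(probs))
    return sum((k - mean) ** 2 * p for k, p in enumerate(probs))


def sem(theta: float, items: list[list[float]]) -> float:
    """Standard error of measurement = 1 / sqrt(test information)."""
    return 1.0 / math.sqrt(sum(item_information(theta, t) for t in items))


for theta in (-4, -2, 0, 2, 4):
    print(f"theta {theta:+d}: SEM short {sem(theta, short_instrument):.2f}, "
          f"SEM long {sem(theta, long_instrument):.2f}")
```

With these placeholder thresholds the SEM is lowest near the center and grows toward both extremes, and the longer instrument has roughly half the SEM of the shorter one at the center.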

9.5 Discussion

The Rasch model for measurement allows for equating different instruments that measure the same measurand. Equating not only provides crosswalks that translate raw scores on one instrument to raw scores on another (a very useful procedure for clinical applications, for example), but even more importantly it establishes a common linear metric of the measurand. The metric becomes essentially independent of the instruments, which are consolidated to form a network of instruments. In other words, a crosswalk as such is a rather pragmatic tool, while the common metric represents a theoretical advance. A metrologically-situated approach to equating therefore goes well beyond ensuring comparability. A common framework for multiple measurement instruments may also facilitate the development of a more powerful conceptual theory of the measurand transcending a particular instrument.

Notwithstanding the theoretical and practical challenges measurement standards in metrology pose to the social sciences, adopting the principle of metrological traceability certainly has the potential to propel social measurement to a higher level. In the long run, revealing measurement mechanisms that theoretically explain why instruments work the way they do will be the pinnacle of social measurement. Given the intricacies involved, in the short run, capitalizing on equating different instruments that are supposed to measure the same measurand will help the social sciences realize part of the benefits metrological traceability has to offer.

Beyond equating, RMT also provides more meaningful standard errors of measurement, which allow for tackling the second fundamental principle of metrology, the range of uncertainty in measurement. Since the range of uncertainty is expressed in the same metric as the measurements, which is common to all instruments connected through equating, uncertainty can be compared across instruments. This allows for a more informed assessment of the measurement quality of each instrument.

Caution is required, though, when it comes to the implementation of a common metric for a measurand in the social sciences. It is crucial to establish the metric based on an instrument that allows for measurement meeting the requirements set out by the Rasch model. New instrument development is the best avenue towards achieving this goal, although a re-analysis of an existing scale with a sound qualitative underpinning can be a viable alternative. Apart from the scientific advantages in terms of metrological traceability, crosswalks also have the potential to facilitate the dissemination of new instruments. By linking past research, and continued use of legacy instruments, to current studies using the new instrument, a crosswalk provides a smooth transition and is thereby likely to raise acceptance and use of the new scale. Conversely, the integration of legacy instruments into newly established networks including the common metric may also mask limitations of existing instruments. Any deficiencies in these instruments should therefore be disclosed.

The measurement of dependence on TNPs through self-report instruments illustrates the limitations in social measurement and how they can be overcome using RMT. Until recently, measurement of nicotine dependence has been very fragmented, with a number of different instruments providing measurements confined to specific TNPs. The increasingly common practice of using multiple products concurrently poses new problems for the measurement of dependence. The development of a new instrument, the ABOUT–Dependence, helps overcome these scientifically challenging problems by providing comparable measurements of nicotine dependence in case of exclusive use of different TNPs or concurrent use of multiple TNPs. The FTND, on the other hand, was specifically designed for cigarette use and represents a gold standard in the field of tobacco research when it comes to measuring nicotine dependence. Thus, these two instruments are prime candidates for a crosswalk linking them to one another. Further instruments can then be linked to the common metric, contributing to a metrological system of nicotine dependence measurement.

The example illustrates how a crosswalk can be established. A possible limitation lies in the relatively small sample size available for linking the FTND to the common metric. While the common metric itself was established based on the entire sample in the quantitative study (n = 2434), the FTND could only be linked based on 250 exclusive users of cigarettes. A more serious limitation was revealed by comparing predicted raw scores based on the crosswalk with actually observed raw scores. For individual measures, the range of uncertainty in the FTND is relatively high, implying non-trivial discrepancies between predicted and actual ABOUT–Dependence scores. This finding is hardly surprising. While the crosswalk establishes a link between instruments, the range of uncertainty in measurements is retained even though the measures are converted to a more elaborate metric. Since ABOUT–Dependence measurements carry less uncertainty, the conversion of ABOUT–Dependence scores to FTND scores is less problematic. But it is also less useful, as it entails a loss of information. In practice, crosswalks between instruments with a similar range of uncertainty are certainly more expedient.

The social sciences are encouraged to modify their research agenda by including the establishment of a common metric across different instruments by means of equating. This is an important step towards taking metrological traceability into account. That said, addressing traceability by linking instruments should be considered a transitory technology permitting comparable measurement in the social sciences. In the long run, revealing measurement mechanisms and transforming conceptual theories into quantitative theories is key.

9.6 Conclusion

Measurement in the social sciences is, with respect to fundamental principles of metrology, lagging behind physical measurement. First, the widely used CTT-based measurement models essentially presume measurement and are mostly concerned with group-level parameters of purported measurements and their statistical behavior. Second, measurement in the social sciences is based on conceptual theories that are mostly qualitative in nature, with some theories at least allowing for a hypothesized ordering of items. In particular, in educational proficiency testing the order of item difficulties is, as a rule, anticipated. Nevertheless, these theories do not reveal a measurement mechanism or fundamental reference that could be linked quantitatively to the empirical properties of an instrument. Rather, the empirical findings inform the quantitative aspects of the conceptual theory. Consequently, third, the social sciences witness a fragmentation of measurement instruments that are supposed to measure the same concept but do not allow for comparable measurements. Traditional approaches for aligning scores from different instruments are cumbersome and require large representative samples. Thus, the state of affairs is highly unsatisfactory from the perspective of metrological traceability.

The status quo of social measurement, therefore, implies two fundamental challenges: first, the measurement theory is to be advanced to provide justifiable and comparable measurement; and second, more elaborate conceptual theories are to be developed. With respect to the measurement theory, recent progress in psychometric modelling has helped narrow the gap between physical and social measurement. Rasch measurement theory provides a framework for invariant measurement, which is a prerequisite for meaningful measurement at the individual level and straightforward comparability. In contrast to these universally applicable psychometric innovations, advances in the formation of more sophisticated conceptual theories are specific to particular applications. What is more, today’s psychometrics results from decades of innovative research, while advances in the conceptual theories required by theory-based traceability are, by comparison, a much more recent phenomenon.

Equating has a long tradition in measurement based on the Rasch model. In the past, though, it has primarily served pragmatic purposes, for example by linking multiple forms of a test to a common metric in education. In health research, crosswalks help convert raw scores on one instrument into raw scores on another. A metrologically-situated approach to equating means that the method of equating is used to link multiple instruments to one another while also establishing a common metric that transcends instruments. Measurements based on different instruments become traceable to one another, and the range of uncertainty of a specific measurement is exposed in a comparable way, too. This approach is an important step towards metrological traceability in social measurement.

Notes

- 1.

Originally, face validity, which has not always been defined uniformly, mostly meant that respondents recognize the concept the items are supposed to measure [44]. As such, face validity is not necessarily desirable [16], for example in projective measurement. Today, face validity is typically assessed from the researcher’s viewpoint, and is widely seen as an indication of but not a substitute for content validity.

- 2.

References

N.D. Adroher, A. Tennant, Supporting construct validity of the evaluation of daily activity questionnaire using linear logistic test models. Qual. Life Res. 28(6), 1627–1639 (2019)

D. Andrich, A rating formulation for ordered response categories. Psychometrika 43(4), 561–573 (1978)