Abstract

An historic shift in focus on the quality and person-centeredness of health care has occurred in the last two decades. Accounts of results produced from reinvigorated attention to the measurement, management, and improvement of the outcomes of health care show that much has been learned, and much remains to be done. This article proposes that causes of the failure to replicate in health care the benefits of “lean” methods lie in persistent inattention to measurement fundamentals. These fundamentals must extend beyond mathematical and technical issues to the social, economic, and political processes involved in constituting trustworthy performance measurement systems. Successful “lean” implementations will follow only when duly diligent investments in these fundamentals are undertaken. Absent those investments, average people will not be able to leverage brilliant processes to produce exceptional outcomes, and we will remain stuck with broken processes in which even brilliant people can produce only flawed results. The methodological shift in policy and practice prescribed by the authors of the chapters in this book moves away from prioritizing the objectivity of data in centrally planned and executed statistical modeling, and toward scientific models that prioritize the objectivity of substantive and invariant unit quantities. The chapters in this book describe scientific modeling’s bottom-up, emergent and evolving standards for mass customized comparability. Though the technical aspects of the scientific modeling perspective are well established in health care outcomes measurement, operationalization of the social, economic, and political aspects required for creating new degrees of trust in health care institutions remains at a nascent stage of development. Potentials for extending everyday thinking in new directions offer hope for achieving previously unattained levels of efficacy in health care improvement efforts.




12.1 Introduction: The Role of Measurement in the Shift to Quality in Health Care

Over 20 years ago, the Institute of Medicine (IOM), now known as the National Academy of Medicine (NAM), released two landmark reports, To Err is Human [171] and Crossing the Quality Chasm [172]. Berwick and Cassel [26] reflect on the legacy of these reports, tracing the developments that followed upon the evidence they presented on the seriousness of quality problems in U.S. health care. Even though Berwick and Cassel do not include measurement theory and practice in their account, their observations nonetheless speak directly to the problems addressed by improved quantitative methods. The upshot is that persistent quality improvement challenges in health care may be transformed into entrepreneurial opportunities in the context of person-centered outcome metrology.

While noting that progress in some areas has been significant, Berwick and Cassel make several observations that set the stage for crystallizing a new framework for quality improvement, one providing a needed transformation of the system redesigns originally provoked by the IOM/NAM reports. The relevant lessons learned over the last 20 years, according to Berwick and Cassel, include:

- Wholesale, systemic improvement in quality of care is difficult to bring to scale.
- Improvements tend to be local and not generalized.
- Quality improvement increasingly takes a back seat to financial pressures.
- A driving economic precept of other industries – namely, controlling costs by improving quality – seems unworkable in health care.
- Value-based pay-for-performance schemes have proliferated but have scarcely dented the dominant fee-for-service model.
- Accountability for outcomes has perversely led to serious, negative impacts on clinician morale, and has not led to the desired progress in quality and safety.
- A balance between the critical need for accountability, on the one hand, and supports for a culture of trust focused on growth and learning, on the other, seems out of reach.
- New payment models that would set more constructive priorities have proven politically unpalatable in light of the way cost controls seem to automatically implicate some form of rationing.

One theme in particular repeats itself in a subtle and unnoticed way throughout Berwick and Cassel’s account. That theme concerns the unquestioned assumption that quality improvement, accountability, system redesigns, health care economics, and measurement are all inherently and definitively approached via centrally planned data analytics and policy implementations. Conceptual tools and methods are always applied from the outside in and the top down. No other alternative is ever mentioned or considered.

This unexamined assumption is nearly all-pervasive within the paradigm informing quality improvement efforts in person-centered outcome measurement [282, 300]. Questions have been raised as to the shortcomings of this paradigm, and alternatives, often based in Actor-Network Theory (ANT) and related perspectives, have been proposed [23, 40, 70, 85, 185, 252, 264, 276]. Though measurement and metrological infrastructures are of central importance in Actor-Network Theory [33, 188, 259, 340], only a few accounts referencing it [101,102,103, 114, 128] focus on the opportunities and problems addressed in advanced measurement theory and practice. Conversely, efforts aimed at establishing metrological systems for person-centered outcome measurements rarely recognize or leverage relevant concepts from Actor-Network Theory [17, 18, 51, 92, 273], though those concepts are key to understanding how representations propagate across instruments and across boundaries. Might opportunities for producing practical results multiply rapidly if advanced measurement and metrology were blended with the ANT-oriented approaches to lean thinking and quality improvement that have been recently initiated in health care [85, 264]?

Actor-Network Theory and related perspectives in science and technology studies show that, in science, new understandings emerge progressively as data patterns cohere into measured constructs, are explained by predictive theories, are then packaged in instruments calibrated and traceable to unit standards, and are finally distributed to end users. Networks, or, better, multilevel complex ecosystems, of persons equipped with such technologies are enabled to coordinate their decisions and behaviors without having to communicate or negotiate the details of what they see. This is the effect referred to in economics as the “invisible hand”; it becomes possible only in the wake of efforts focused on identifying persistently repeatable patterns in the world that can then be represented in standardized symbol systems. This virtual harmonization of decisions and behaviors is the purpose of measurement.

This chapter proceeds by providing some background on the differences between quantitative methods defined primarily in statistical terms and those defined in relation to metrological potentials. As will be shown, these differences are paradigmatically opposed in their fundamental orientations and in the kinds of information they produce. After introducing the metrological paradigm via this contrast with existing practice, some aspects of its potential for revitalizing the measurement and management of quality improvement efforts in health care are elaborated.

12.2 Modern Statistical Approaches vs. Unmodern Metrological Approaches

As the early psychometrician Thurstone [321, p. 10] put it in 1937, mathematics is not just an analytic tool; it is the language in which we think. Thurstone sought to make mathematics the language of measurement and psychological science, not just in the sense of expressing analytic results but as substantiating the meaning of the relationships represented in numbers. Broadly put, Thurstone was trying to counter the Cartesian and positivist subject-object dualism of the modern Western worldview. In that dualism, subjective perceptions are pitted against an objective world assumed to exist independent of any and all human considerations. This is the worldview informing the assumption that mathematics provides analytic tools for application to problems. Understanding how mathematics constitutes the language in which we think entails a quite different perspective.

Alternatives to the modern worldview include postmodern and unmodern perspectives [189, 191, 192, 196]. Postmodern, deconstructive logic notes the historical evolution and theory-laden dependencies of the modern worldview’s presentation of transcendent universals [198, 199]. The modern and postmodern quickly become locked in futile and unproductive conflict, however, as their implicit assumptions set up irreconcilable differences. As Latour [191, p. 17] says, “postmodernism is a disappointed form of modernism” that still proceeds with a certain logic but abandons hope of ever arriving at useful generalizations. The modern and the postmodern each offer their distinctive though opposed contributions: laws and theories are indeed powerfully predictive at the same time they are unrealistic formalisms detached from local situations; what counts as data changes as theory changes; and of course, theories are tested by but never fully determined by data. The facts of these empirically observable associations are of no use, however, in resolving the debate.

But the situation changes when the role of standards in language and the history of science are included in the account. Even the philosopher most closely associated with postmodern deconstructions, Derrida, understood this, saying, “When I take liberties, it’s always by measuring the distance from the standards I know” [81, p. 62]. He had previously recognized the need to be able, like Lévi-Strauss in his anthropology, “to preserve as an instrument something whose truth value he [Lévi-Strauss] criticizes” [80, p. 284], such as the standards of language. And just as postmodernism has to accept a role for linguistic standards in deconstruction, so also must modernist forms of strong objectivity accept that, though Ohm’s law may well be universal, it cannot be proven without a power source, cable, and an ohmmeter, wattmeter, and ammeter [188, p. 250].

Even more fundamentally, language itself is a human construction associating arbitrary sounds and shapes with ideas and things; if even this linguistic technology were taken away, one would have no means at all of formulating ideas about, thinking about, or communicating the supposedly self-evident and objectively independent natural world. That nothing is lost in integrating the modern metaphysics of a universally transcendent nature with postmodern relativism in an unmodern semiotics is recognized by Ricoeur [294]. He similarly points to the objectivity of text as a basis for explanatory theory that is not derived from a sphere of events assumed to be natural (existing completely independent of all human conception or interests), but is compatible with that sphere. A semiotic science taking language as a model then does not require any shift from a sphere of natural facts to a sphere of signs: “it is within the same sphere of signs that the process of objectification takes place and gives rise to explanatory procedures” [294, p. 210].

Latour also notes that the unmodern perspective loses nothing in its pragmatic idealism that was claimed by modernism, while also offering a new path forward for research. As he says [192, p. 119],

To speak in popular terms about a subject that has been dealt with largely in learned discourse, we might compare scientific facts to frozen fish: the cold chain that keeps them fresh must not be interrupted, however briefly. The universal in networks produces the same effects as the absolute universal, but it no longer has the same fantastic causes. It is possible to verify gravitation ‘everywhere’, but at the price of the relative extension of the networks for measuring and interpreting.

It is also refreshing to have a frank acknowledgment of how difficult and expensive it is to bring new things into language and keep them there [142, 188, p. 251; 247, 248, 278], as opposed to the way modernist metaphysics renders invisible the processes by which the facts of nature are made to seem obviously, “naturally,” freely, and spontaneously self-evident and available [107, 299].

Dewey [83, 84] and Whitehead [331, 332] anticipate important aspects of the unmodern alternative articulated largely by Latour and others in the domain of science and technology studies and Actor-Network Theory. The unmodern perspective brings the instrument to bear in a semiotics of theories, instruments, and data based in the semantic triangle of ideas, words, and things. This extension does nothing but accept the historical development of written language as a model of what Weitzel [329] referred to as “a perfect standardization process” in his study of the economics of standards in information networks. As most readers are likely unfamiliar with both unmodern ideas and semiotics, it will be worthwhile to linger a bit on this topic, and provide some background.

In the history of science, research brings new things into language via what Wise [103, 340] describes as a two-stage process. New constructs initially act as agents compelling agreement among observers as to their independent existence as something repeatedly reproducible. In the second stage, the agent of agreement is transformed into a product of agreement made recognizable and communicable in the standardized terms of consensus processes. These processes culminate in metrological networks of instruments calibrated to fit-for-purpose tolerances.

Our modern Western cultural point of view usually conceives of society as making use of symbols and technologies, but closer examinations of the historical processes of cultural change [190, 191, 193,194,195, 330] show that symbolization exerts more influence over society than vice versa [20, 48, 176]. Features of the world, after all, do not become socially significant and collectively actionable until they are symbolized in shared representations. Communications and metrological networks are, then, specialized instances of the broader political economy of societies structured by means of shared symbol systems: “social reality is fundamentally symbolic” [294, p. 219]. In Alder’s [5] terms, “Measures are more than a creation of society, they create society.”

Because we are born into a world of pre-existing languages, an economy of thought [16, 133, 134] facilitates communication by lifting the burden of initiation [137, p. 104] and absorbing interlocutors into a flowing play of signifiers. That is, language and its extensions into science via metrology are labor-saving devices in the sense that they relieve us of the need to create our own symbol systems and then to translate between them. In this sense, as Gadamer [137, p. 463] says, it is truer to say that language speaks us than to say that we speak language.

Wittgenstein [341, p. 107] concurs, adding, “When I think in language, there aren’t ‘meanings’ going through my mind in addition to the verbal expressions: the language is itself the vehicle of thought.” Attending to the unity of thing and thought in language constitutes the Hegelian sense of authentic method as meta-odos: how thought follows along after (meta) things on the paths (odos) they take of their own accord [137, pp. 459–461; 143, 160, p. 63; 256,257,258]. Understood in its truth, method embodies a performative logic of things as they unfold in the relational back and forth of dialogue.

As has been established at least since the work of Kuhn [182, 183] and Toulmin [323, 324], methods always entail presuppositions that cannot be explicitly formulated and tested. The sense of method that focuses on following rules must necessarily always fall short in its efforts at explanatory power and transparency [137]. Going with the flow of the mutual implication of subject and object, however, enables a tracking or tracing of the inner development of the topic of the dialogue as it proceeds at a collectively projected higher order complexity. Persistently repeated patterns in question-and-answer dialogues facilitated by tests, assessments, surveys, etc. are the focus of measurement investigations [104,105,106, 110, 120].

An example of how measurement scaling methodically anticipates, models, investigates, maps, documents, and represents the movement of things themselves experienced in thought is given in Fisher’s [106] interpretation of Wright and Stone’s study of the Knox Cube Test [317, 356]. This and related tests, such as the Corsi Block Test, have been taken up as candidates for metrological standardization in recent efforts in Europe [50, 229, 270, 286, 287]. This work may play an important role in creating and disseminating on broad scales a powerful new class of phenomenologically rich methods and instruments.

In the same way that we think and communicate only in signs [38, 39, 61, 71, 79, p. 50; 83, p. 210; 268, 269, p. 30; 294, pp. 210, 219; 332, p. 107; 341, p. 107], so, also, is measurement the medium through which mathematics functions as the language of science [255]. Instruments are the media giving expression to science’s mathematical language. In Latour’s [188, pp. 249–251] words,

Every time you hear about a successful application of a science, look for the progressive extension of a network.… The predictable character of technoscience is entirely dependent on its ability to spread networks further. …when everything works according to plan it means that you do not move an inch out of well-kept and carefully sealed networks. … Metrology is the name of this gigantic enterprise to make of the outside a world inside which facts and machines can survive. … Scientists build their enlightened networks by giving the outside the same paper form as that of their instruments inside. [They can thereby] travel very far without ever leaving home.

Dewey [83] more broadly described the situation, saying:

Our Babel is not one of tongues but of the signs and symbols without which shared experience is impossible. … A fact of community life which is not spread abroad so as to be a common possession is a contradiction in terms. ...the genuine problem is that of adjusting groups and individuals to one another. Capacities are limited by the objects and tools at hand. They are still more dependent upon the prevailing habits of attention and interest which are set by tradition and institutional customs. Meanings run in channels formed by instrumentalities of which, in the end, language, the vehicle of thought as well as of communication, is the most important. A mechanic can discourse of ohms and amperes as Sir Isaac Newton could not in his day. Many a man who has tinkered with radios can judge of things which Faraday did not dream of.

A more intelligent state of social affairs, one more informed with knowledge, more directed by intelligence, would not improve original endowments one whit, but it would raise the level upon which the intelligence of all operates. The height of this level is much more important for judgment of public concerns than are differences in intelligence quotients. As Santayana has said: ‘Could a better system prevail in our lives a better order would establish itself in our thinking.’

Taking up the same theme, Whitehead [332] noted that the then-recent changes in the science of physics came about because of new instruments that transformed the imaginations and thoughts of those trained in using them. Scientists had not somehow become individually more imaginative. He [331, p. 61] previously remarked on the way civilization advances by distributing access to operations that can be successfully executed by persons ignorant of the principles and methods involved. Much the same point is made in developmental psychology’s focus on the fact that “cultural progress is the result of developmental level of support” [63; 327].

We stand poised to transform the developmental levels of support built into the cognitive scaffolding embedded in today’s social environments. Our prospects for success depend extensively on our capacities for building trust, something that is quite alien to numbers situated in isolated contexts disconnected from substantive diagnostic, theoretical, or meaningful values [262, 280, p. 144].

Building trust by connecting numbers with widely distributed, quality-assured, reliable, dependable, repeatable, and meaningful structural invariances will inevitably make use of Rasch’s probabilistic models for measurement, which are eminently suited to satisfying the need for metrological networks of assessment- and survey-based instruments [102, 108, 109, 111, 112, 214, 216, 271, 272]. Wright [352], a primary advocate and developer of Rasch’s mathematics, not only noted that “science is impossible without an evolving network of stable measures,” but also made fundamental contributions to theory; historical accounts; modeling; estimation; reliability, precision, and data quality coefficients; instrument equating; software; item bank development and adaptive administration; report formatting; the creation of new professional societies and journals; and applications across multiple fields [116, 337]. Over the last 40 years and more, research and practice in education, psychology, the social and environmental sciences, and health care have benefited greatly from the advances in measurement developed by Wright, his students, and colleagues [4, 8, 9, 12, 13, 29, 89, 90, 126, 200, 210, 225, 295, 335].
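For readers unfamiliar with Rasch’s models, the simplest (dichotomous) form can be sketched in a few lines. The probability of a positive response depends only on the difference between a person measure and an item calibration, both in log-odds units (logits). The symbols theta and delta and the function names below are illustrative choices, not taken from any particular software package; the point of the sketch is the structural invariance mentioned above: the comparison between two persons comes out the same whichever item is used.

```python
import math


def rasch_probability(theta: float, delta: float) -> float:
    """Probability of a positive response under the dichotomous Rasch model.

    theta: person measure in logits; delta: item calibration in logits.
    """
    return 1.0 / (1.0 + math.exp(-(theta - delta)))


def log_odds(p: float) -> float:
    """Log-odds (logit) of a probability strictly between 0 and 1."""
    return math.log(p / (1.0 - p))


# Invariant comparison: the log-odds difference between two persons is the same
# on every item, because the item calibration cancels out of the difference.
theta_a, theta_b = 1.5, 0.5
for delta in (-2.0, 0.0, 2.0):
    diff = log_odds(rasch_probability(theta_a, delta)) - log_odds(rasch_probability(theta_b, delta))
    print(f"item at {delta:+.1f} logits: log-odds difference = {diff:.3f}")
```

Each line reports the same one-logit difference whatever the item’s difficulty. This separation of person and item parameters is what Rasch called specific objectivity, and it is the property that makes instrument-independent comparisons possible in principle.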

Though much has been accomplished in the way of consolidating theoretical conceptions and descriptions of how sociocognitive infrastructures are embedded in cultural environments [32, 35, 124, 177], practical applications of these ideas have scarcely begun. Focusing on the cultivation of trust and dependable measurement standards may go a long way toward reversing many of the communications problems in the world today. Scientifically defensible theories of measured constructs create an important kind of trust as explanatory models persistently predict instrument performance. Experimental results of instrument calibrations across samples over time and space similarly support the verifiable trust needed for legal contracts and financial accounting. Finally, distributed networks of trust may come to be embodied in end users who experience the reliable repetition of consistent results, who are able to see themselves and others in their data, and the data in themselves and others.

12.3 Creating Contexts in Health Care for Success in Lean Thinking

With that background in mind, how might an unmodern, metrological point of view lead to transformed, trustworthy quality improvement systems in health care? Answers are suggested with particular pointedness by repeated conceptual disconnections between quality, costs, and reduced waste in health care’s lean applications.

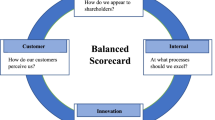

“Lean thinking” [279, 343] focuses on systematically removing processes that do not contribute to outcome quality. Lean processes are those from which ineffectual and costly, and so wasteful, factors have been eliminated, often via experimental comparisons. Manufacturing industries’ quality improvement and cost management successes with lean thinking have long been of interest in health care [27, 69]. The Toyota Production System is widely taken as a preeminent example of success in lean thinking. That success led Fujio Cho, a past Chairman of Toyota Motors, to remark, “We get brilliant results from average people managing brilliant processes. Our competitors get average results from brilliant people working around broken processes” (as quoted in [301, p. 84]). Implementations of lean thinking in health care, however, have tended to be piecemeal and isolated within departments, with little in the way of interdisciplinary boundary crossing, as a recent literature review has shown [3]. Because of these problems, health care organizations, like those in education [87], have a propensity to learn the same lessons over and over again, a kind of “organizational amnesia” [261].

The goals for research capable of crossing boundaries include supplying evidence of more coherent knowledge, and taking a more integrated, authentic view of life [236]. A productive way of framing these goals pairs low and high levels of conceptual learning (“know why”) with low and high levels of operational learning (“know how”) to categorize types of situations in which organizations are able and unable to learn across boundaries [187]. Though this scheme has been productively applied in surgical contexts [132, 275], health care generally seems to be vacillating between firefighting, where conceptual and operational learning are both low; unvalidated theories, where conceptual learning is high and operational is low; and artisan skills, where conceptual learning is low and operational is high.

Berwick and Cassel’s reflections on quality improvement, and Akmal and colleagues’ [3] account of lean thinking, document the nearly complete failure in health care to achieve operationally validated theories, where both “know how” and “know why” are maximized. Akmal et al. [3] offer a relevant insight, attributing the blinkered perspective on quality in health care in part to “tool-myopic” thinking that undermines the generalizability of lean processes. Going past their insight, we can see that this nearsightedness comes from viewing measured outcomes only through the lens of ordinal scores. When the meaning of numbers is tied to specific items, measurement cannot fulfill its role as the external scaffolding needed to facilitate socially distributed remembering and embedded cognitive supports, to adopt the language of Sutton and colleagues [318].

This myopia affecting quality improvement in health care is, then, ostensibly caused by the design limitations of quality-of-care instruments and measurement systems [130, 161, 356]. The theory of action following from ordinal metrics cannot tell people what to do in general; it can inform only the specific operations included in the score. As the economist Joseph Stiglitz put it, “What you measure affects what you do. If you don’t measure the right thing, you don’t do the right thing” (quoted by Goodman [146] in the New York Times; also see [131]). Stiglitz was speaking to the concrete content of what is measured, but including the right content is insufficient to the task of measurement. The content must be organized and configured in particular ways if it is to be meaningfully measured and appropriately actionable. Stiglitz’s observation ought then to be restated as, “The way you measure affects what you do. If you don’t measure the right way, you don’t do the right thing.”

What this means can be seen in the fallacy of misplaced concreteness [332, pp. 52–58], which is taken for granted as an unavoidable complication in most survey and assessment applications in health care. Though the intention is to obtain information applicable in general across departmental, organizational, and other boundaries, restricting the conceptualization and operationalization of measurement to numeric counts focuses attention counterproductively on the specific things counted. The maxim that we manage what we measure becomes unintentionally ironic in this context, as managers wind up focusing on bean counting instead of attending to their mission. The consequences of managing the numbers rather than the mission are evident in Berwick and Cassel’s list of lessons learned from quality improvement efforts in health care over the last 20 years.

Mainstream measurement methods in health care assume that assigning scores, counting events, summing ratings, or computing percentages suffices as measurement. Online searches of the contents of three recent textbooks on quantitative methods in health care [42, 263, 267], for instance, show that no mention is made of key words (such as invariance, statistical sufficiency, metrology, traceability, consensus standards, Rasch models, etc.) associated with measured quantities, as opposed to numeric statistics. As is usual throughout psychology and the social sciences, quantitative methods in health care, more often than not, merely assume that numbers represent unit amounts of something real in the world, and never formulate or test the hypothesis that such quantities exist [10, 233, 314, 349, 352]. For instance, a January 2022 search of Google Scholar shows that only 2 of 74 peer-reviewed articles citing Nolan and Berwick [253] on “all-or-none measurement” even mention invariance or test the quantitative hypothesis [82, 175].
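The gap between counting and measuring can be made concrete. Under the dichotomous Rasch model the raw score is a sufficient statistic for the person measure, yet raw scores and logit measures are related nonlinearly: equal one-point steps in the raw score correspond to unequal steps in the measure. The sketch below assumes a hypothetical ten-item form whose item calibrations are already known and evenly spread from −2 to +2 logits, and converts each non-extreme raw score into a maximum-likelihood measure by Newton-Raphson iteration. The form, its calibrations, and the function names are invented for illustration.

```python
import math


def rasch_p(theta, delta):
    """Dichotomous Rasch probability of a positive response."""
    return 1.0 / (1.0 + math.exp(-(theta - delta)))


def score_to_measure(raw_score, deltas, tol=1e-8, max_iter=100):
    """Maximum-likelihood person measure (logits) for a raw score on a fixed form.

    Extreme scores (zero or all items) have no finite ML estimate.
    """
    n = len(deltas)
    if not 0 < raw_score < n:
        raise ValueError("extreme scores have no finite ML estimate")
    theta = math.log(raw_score / (n - raw_score))  # starting value
    for _ in range(max_iter):
        probs = [rasch_p(theta, d) for d in deltas]
        expected = sum(probs)                     # expected raw score at theta
        info = sum(p * (1.0 - p) for p in probs)  # test information at theta
        step = (raw_score - expected) / info      # Newton-Raphson step
        theta += step
        if abs(step) < tol:
            break
    return theta


# Hypothetical calibrations for a ten-item form, spread evenly from -2 to +2 logits.
deltas = [-2.0 + 4.0 * i / 9 for i in range(10)]
table = {r: score_to_measure(r, deltas) for r in range(1, 10)}
for r, m in table.items():
    print(r, round(m, 2))
```

In the printed table the logit distance between adjacent raw scores widens toward both ends of the form. Treating summed ratings as if each point were an equal unit therefore distorts differences, most severely for the respondents at the extremes.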

Concrete models of outcome quality implicated when measurement is misconceived as merely numeric are not the only option, however. Patterns of learning, healing, development, and growth demonstrated as invariantly structured across samples and instruments have been available and in use in health care for decades. A Google Scholar search of “Rasch model” and “health outcomes” in January of 2022 gave over 3000 results. Even a selection of early examples shows a wide range of applications across diagnostic, clinical, treatment, and licensure/certification contexts [22, 24, 29, 30, 49, 54, 68, 97, 154, 163, 178, 209, 304, 354].

Most applications of Rasch’s models for measurement continue to operationalize them statistically as centrally planned exercises in data gathering and analysis. Fit to demanding measurement models may be highly prized in some quarters, but confidence in generalizing the results seems to be lacking. This remains the case despite instances in which the invariance of constructs has been demonstrated repeatedly over samples, over periods of years involving multiple instruments, or across multiple applications of the same instrument [52, 100, 102, 127, 148, 296, 305, 328, 334]. Exceptions to this pattern focus on developing and deploying adaptively administered item and scale banks [19, 285, 319, 326, 353], and self-scoring forms interpretable in measurement terms at the point of use [28, 34, 72, 165, 179, 201, 204, 274, 325, 342, 344, 351].
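Adaptive administration from a calibrated item bank can be sketched briefly. Under the dichotomous Rasch model an item’s Fisher information at measure θ is p(1 − p), which peaks where the item’s difficulty equals θ. A common selection rule, assumed here rather than taken from any of the cited programs, is to administer next the unused item with the greatest information at the current estimate. The item bank, its labels, and the function names are hypothetical.

```python
import math


def rasch_p(theta, delta):
    """Dichotomous Rasch probability of a positive response."""
    return 1.0 / (1.0 + math.exp(-(theta - delta)))


def item_information(theta, delta):
    """Fisher information of a dichotomous Rasch item at measure theta."""
    p = rasch_p(theta, delta)
    return p * (1.0 - p)


def select_next_item(theta_hat, bank, administered):
    """Return the id of the unused item most informative at theta_hat, or None."""
    candidates = [item_id for item_id in bank if item_id not in administered]
    if not candidates:
        return None
    return max(candidates, key=lambda item_id: item_information(theta_hat, bank[item_id]))


# Hypothetical item bank: item id -> calibrated difficulty in logits.
bank = {"A": -2.0, "B": -1.0, "C": 0.0, "D": 0.5, "E": 1.5}
print(select_next_item(0.4, bank, administered=set()))
print(select_next_item(0.4, bank, administered={"D"}))
```

In a full adaptive cycle the measure estimate is typically updated after each response, and administration stops once the standard error, the reciprocal square root of the accumulated information, falls below a chosen target. Because each respondent sees a different set of items, the results are comparable only because all items share one calibrated scale.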

Another exception to the usual data-focused approach to measurement is the Low Vision Research Network [41, 53], which is based in an extended instrument equating and item bank development program [91, 145, 217,218,219,220,221,222,223,224]. Similar kinds of networks termed STEM (science, technology, engineering, and mathematics) ecosystems coordinating education and workforce development needs have mathematically modeled stakeholder empowerment to integrate measurement and management dialogues among formal and informal educators, technology companies, city and state governments, funding agencies, and philanthropists [237,238,239,240]. Finally, the European NeuroMet consortium [50, 229, 230, 270, 286, 287] is developing metrological standards for rehabilitative treatments of neurodegenerative conditions affecting cognitive performance. The instruments being studied in this project include variations on the Knox Cube Test, which was taken as a primary example of a simple exercise in scaling and theory development by Wright and Stone [317, 356].
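Instrument equating of the kind underpinning such networks can be illustrated with one common approach, mean-shift linking through anchor items. The sketch assumes two separate Rasch calibrations whose shared items differ only by a constant shift of origin. The item labels and logit values are invented for illustration. Operational programs would also examine each anchor item’s displacement and drop unstable anchors before accepting the link.

```python
from statistics import mean


def linking_constant(calib_from, calib_to, anchors):
    """Shift that moves calib_from onto the scale of calib_to, using anchor items."""
    return mean(calib_to[i] - calib_from[i] for i in anchors)


def equate(calib_from, calib_to):
    """Link calib_from to calib_to through their common items."""
    shared = sorted(set(calib_from) & set(calib_to))
    if not shared:
        raise ValueError("no common items to link through")
    shift = linking_constant(calib_from, calib_to, shared)
    linked = {item: d + shift for item, d in calib_from.items()}
    # Residual displacement of each anchor after linking; large values flag unstable anchors.
    displacement = {i: linked[i] - calib_to[i] for i in shared}
    return shift, linked, displacement


# Hypothetical calibrations (logits) from two instruments sharing items q2, q3, q4.
form_x = {"q1": -1.2, "q2": -0.4, "q3": 0.3, "q4": 1.1}
form_y = {"q2": 0.1, "q3": 0.8, "q4": 1.6, "q5": 2.0}
shift, linked, displacement = equate(form_x, form_y)
print(round(shift, 3), {k: round(v, 2) for k, v in linked.items()})
```

After linking, item q1, which appears only on the first instrument, is expressed on the second instrument’s scale. This is the sense in which an item bank grows by chaining calibrations together.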

This latter domain of attention span and short-term memory is of additional interest for its intensive focus on theory development [230, 311]. Rasch’s models not only facilitate empirical experiments testing whether concrete numeric counts falsify hypotheses of abstract measured quantities, but also structure and inform theoretical experiments testing whether the abstract scale is explained at a higher-order formal complexity affording explanatory and predictive power [77, 89, 94, 284, 311,312,313,315, 334, 335].
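The theoretical experiments mentioned here can be illustrated with a construct specification equation: a regression of empirically calibrated item difficulties on item features that a theory says should cause difficulty. For a Knox Cube-style tapping task, one plausible candidate feature is the length of the tapping sequence. The single feature, the calibrations, and the resulting coefficients below are hypothetical, and a fuller theory would likely use several features. A high proportion of explained variance would indicate that the theory, not just the data, accounts for the scale.

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return my - b * mx, b


def r_squared(x, y, a, b):
    """Share of variance in empirical calibrations explained by the theory-based predictor."""
    my = mean(y)
    ss_res = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - my) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


# Hypothetical data: tapping-sequence length and empirical item calibration (logits).
taps = [2, 3, 3, 4, 4, 5, 5, 6, 6, 7]
calibrations = [-3.9, -2.8, -2.6, -1.3, -1.1, 0.2, 0.5, 1.6, 1.9, 3.1]
a, b = fit_line(taps, calibrations)
print(f"predicted difficulty = {a:.2f} + {b:.2f} * taps, R^2 = {r_squared(taps, calibrations, a, b):.3f}")
```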

Automatic item generation [15, 88, 144, 166, 181, 277, 307, 313,314,315] becomes a possibility when theory advances to the point that empirically estimated item locations correspond usefully with theoretical predictions. The high cost of composing new test and assessment items is dramatically reduced by automation [181, 313]. The economy of thought structured by the model of language attains otherwise inaccessible degrees of market efficiency when single-use items formulated on the fly by a computer produce the response frequencies predicted by theory. Routine reproduction of these results in multiple domains will be key to altering the prevailing habits of attention and the overall social level of intelligence in the manner described by Dewey [83]. Instances of the stable reproduction of scales supported by theory and evidence, such as that described by Williamson [334], will be key to building out verified and justified investments of trust in numbers.
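Automatic item generation depends on a correspondence between theoretically predicted and empirically estimated item locations. That correspondence can be illustrated by simulation. The sketch below is hypothetical throughout. It assumes a made-up construct specification in which each additional "tap" in a Knox-Cube-style sequence adds one logit of difficulty; this is not the published theory for that test. Items are generated from that rule, and responses are simulated from normally distributed abilities under the dichotomous Rasch model. The theory's predictions are then compared with crude empirical difficulties: centered log-odds of failure, a rough approximation rather than a full Rasch calibration.

```python
import math
import random

def predicted_difficulty(taps):
    """Hypothetical specification equation: difficulty in logits from item length."""
    return -4.0 + 1.0 * taps

def simulate_counts(difficulties, n_persons, seed=1):
    """Number of persons succeeding on each item under the dichotomous Rasch model."""
    rng = random.Random(seed)
    counts = [0] * len(difficulties)
    for _ in range(n_persons):
        theta = rng.gauss(0.0, 1.5)  # hypothetical ability distribution
        for i, b in enumerate(difficulties):
            if rng.random() < 1.0 / (1.0 + math.exp(-(theta - b))):
                counts[i] += 1
    return counts

def crude_difficulties(counts, n_persons):
    """Centred log-odds of failure, with a 0.5 continuity correction. This is a rough
    stand-in for Rasch calibration and compresses the scale when abilities vary."""
    raw = [math.log((n_persons - c + 0.5) / (c + 0.5)) for c in counts]
    mean = sum(raw) / len(raw)
    return [r - mean for r in raw]

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

n_persons = 2000
taps = [2, 3, 4, 5, 6, 7]  # items "generated" from the specification
theory = [predicted_difficulty(t) for t in taps]
empirical = crude_difficulties(simulate_counts(theory, n_persons), n_persons)
print(f"theory-vs-data correlation: {pearson(theory, empirical):.3f}")
```

A high correlation here only shows that the simulation is internally consistent. With real data, the question is whether items written by the rule actually land where the rule predicts.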

Measurement methodologies in use today in health care range, then, from ordinal item-dependent scores to interval analysis-dependent scales, and from there to metrologically traceable quantities. The larger modern cultural context accepts the objectivity of data as a record of events assumed to suffice as a criterion for reproducibility. It seems that neither repeated conceptual critiques of these assumptions [59, 152, 153, 186, 228, 233, 302, 303, 349], nor readily available operational alternatives [7, 12, 95, 207, 246, 288, 347, 352] have had much impact on most researchers’ methodological choices. Is it possible to imagine a compelling motivation that would inspire wide adoption of more meaningful, rigorous, and useful measurement methods? The logic and examples developed and produced over the last 60 years have failed to shift the paradigm in the way that might have been expected, as has been recognized for some decades [58, 246]. Might there be other ways of making the superiority and advantages of some forms of information so attractive that few would miss the opportunity to use them?

12.4 Tightening the Focus on Metrological Potentials in Health Care

A plausible reason why conceptually and operationally superior measurement methods have not been more widely adopted is that the existing systems of incentives and rewards favor the status quo. It is often said that the inequities and biases of today’s institutions are systemic, that the institutions themselves seem designed to disempower individuals not born into advantageous circumstances [6, 245]. Mainstream methods of measurement and management prioritize concrete responses to the particular questions asked in local circumstances, doing so in ways that systematically undercut possibilities for creating the collective forms of information needed for reconfiguring our institutions. It may be that we will be unable to transform the systems of incentives and rewards dominating those institutions until we cultivate new ecologies of cross-disciplinary relationships that displace the existing ones by offering more satisfying substantive and economic outcomes. The root question is how to extend the economy of thought facilitated by language into new sciences and associated economic relationships.

That is, a new science of transdisciplinary research and practice is needed if we are to succeed in addressing the complex problems we face. The complexity of articulating how collective forms of knowledge are measured and managed, however, may cause even those focused squarely on the problem to nonetheless revert to established habits of mind and conceptual frameworks. The end result is often, then, a mere repetition of modern metaphysics in yet another take on concrete, individual-level solutions, as has been the case in some recent efforts [44, 316].

A number of fields, however, have taken steps toward practical understandings of collective complexities, with a particularly compelling convergence of perspectives:

- Science and technology studies offer the concepts of the boundary object [37, 308, 310] and the trading zone [140], in which meanings are recognized as simultaneously concrete and abstract, and as co-produced in tandem with standardized technologies ranging from alphabets and phonemes to clocks, thermometers, and computers [155, 174, 189].

- Developmental psychology, similarly, offers a theory of hierarchical complexity [60, 64, 73, 96] that shows how thinking is general and specific at the same time. Developmental transitions across 15 levels of complexity, spanning the entire range of variation in the human life cycle, are well documented.

- Cognitive psychology has also, in recent years, come to be concerned with the ways in which thinking does not occur solely in the brain, neurologically, but depends materially on the sophistication of external supports like alphabets, phonemes, grammars, and other technologies embedded in the environment [168, 169, 318].

- Studies in the history of science similarly expand on the role of models as material artifacts mediating innovative advances [249,250,251]. Here again, formal conceptual ideals are represented in arbitrary standardized abstractions useful in negotiating local meanings in unique concrete circumstances.

- Semiotics operationalizes the pragmatic consequences of knowing that all thought takes place in signs, with language as its vehicle, or medium [38, 39, 71, 260, 265, 266, 268, 269, 298]. The semiotic triangle’s thing-word-idea assemblages integrate concrete, abstract, and formal levels of complexity in practical, portable ways readily extended [115, 117] into the data-instrument-theory combinations documented in science and technology studies [1, 157, 170].

The overarching shared theme evident across these fields points at the need for distributed networks of instruments capable of operationalizing – simultaneously – formal explanatory power, abstract communications systems, and concrete local meaningfulness [115, 117].

Figure 12.1, adapted from Star and Griesemer [309], schematically illustrates the concrete data-level alliances of participants in knowledge infrastructures, their relations to abstract consensus standards’ obligatory passage points, and the encompassing umbrella of formal, theoretical boundary objects. Measurement research overtly addresses each of these levels of complexity. Systems integrating all three levels may become themselves integrated in meta-systematic systems of systems, or in paradigmatic supersystems [60, 115]. Associating substantive and reproducible patterns of variation consistently revealed in measurement research with consensus standards, and building these standards into applications across fields, may provide the direction needed for designing knowledge infrastructures capable of compelling adoption of improved methods in health care quality improvement. That is, translation networks’ alliances, obligatory passage points, and boundary objects define contexts in which systems elevate the overall level of intelligence and imagination, enabling everyday people to produce brilliant results. Lean thinking is, then, the apotheosis of the more broadly defined translation networks actualizing the economy of thought facilitated by language.

Levels of complexity in knowledge infrastructures (Ref. [309, p. 390]; annotated)

The history of economics points to the necessary roles played by measurement across legal, governance, communications, scientific, and financial institutions [2, 5, 14, 21, 25, 78, 254, 283, 320, 333]. The transcendental ideals of modern thinking assume that the objective world’s real and independent existence suffices to inform the assignment of numbers in measurement. The pragmatic unmodern view instead recognizes the essential, difficult-to-craft, and highly expensive roles played by instruments and standards, and that recognition suggests a need for new directions.

Legal, financial, technical, and social networks and standards make nature seem given and obvious by weaving, as Callon [47] puts it, a “socionature”: an environment in which social associations mediated by mutually supportive shared symbol systems make things appear to be part of an objective reality independent of human concerns. Reality actually is, of course, independent of the concerns and interests of individual people, but it exists as a shared and accessible reality only when human interests collectively project higher order complexities and coordinate them in systems of trusted interdependent symbolic representations. Measurement research needs to feed into and become embedded in the legal, financial, social, and metrological infrastructures as part of the extension of everyday thinking and language into new domains.

Figure 12.2 is adapted from Bowker’s [36, p. 392] Fig. 29.1. His original version specifies only the vertical Global/Local and horizontal Technical/Social dimensions, and all text apart from those labels sits inside one of the four encircled quadrants. The annotations of the quadrants outside the circle have been added, as have the expansions of the Technical to include the Scientific, and of the Social to include Communications. Finally, a third, orthogonal dimension was added to further expand the Social to include Legal and Property Rights, and the financial domain of Markets and Accounting.

Co-produced technical and social infrastructural dimensions. (Adapted from Ref. [36, p. 392])

Figure 12.3 rotates Fig. 12.2 to reveal the Global and Local dimensions of the Legal/Property Rights and Markets/Accounting domains. The complex resonances of relationships coordinated and aligned in loosely coupled ways across these domains and levels of complexity suggest that different actors playing different roles at different levels are not going to agree entirely on what the object of interest is, or how it functions. Galison [140, 141] found the divergence of opinions about microphysical phenomena across experimentalists, instrument makers, and theoreticians so striking that he felt forced to assert that the disunity of science must play a key role in its success. Similarly, in contrast with the assumption that paradigms define contexts of general agreement in science, Woolley and Fuchs [345, p. 1365] “suggest that a spectrum of convergent, divergent, and reflective modes of thought may instead be a more appropriate indicator of collective intelligence and thus the healthy functioning of a scientific field.”

Co-produced legal and financial infrastructural dimensions. (Adapted from Ref. [36, p. 392])

In this context, when diverse alliances implement measurement standards to inform common product definitions and trustworthy expectations of market stability across technical, legal, financial, and communications sectors, it becomes apparent that certain kinds of instruments can make markets [121, 234]. Markets are not, contrary to popular opinion, made merely by exchange activity. Instead, “skilled actors produce institutional arrangements, the rules, roles and relationships that make market exchange possible. The institutions define the market, rather than the reverse” [234, p. 710].

In short, the economy is a metrological project [67, 235]. It consists of “a series of competing…rival attempts to establish metrological regimes, based upon new technologies of organization, measurement, calculation, and representation” [235, p. 1120]. Today’s ordinal, scale-dependent technologies create a babble of incommensurable numeric comparisons. As a result, they quantify health outcomes in ways that organize, measure, calculate, and represent quality improvement opportunities inefficiently and ineffectively. The plethora of incommensurable scales makes it impossible for the instruments ever to disappear into their use as relatively transparent media uniting thing and thought. Well-functioning instruments draw attention to themselves only when they malfunction. Ordinal scales, by contrast, lack theoretical explanations and instrument standards, and so they unavoidably foreground themselves as objects of interest.

Diagnostics and theory are overlooked in the development and use of these metrics [93], and the metrics themselves are opaque black boxes incapable of providing the needed comparability. For both reasons, they have created a crisis of reproducibility [156, 173] and cannot serve as a basis for trusted relationships.

Advanced measurement, however, opens onto new technologies that appear likely to support broader, more productive, and historically proven approaches to creating competing metrological projects. Though clarified quantitative criteria for scaling interval measurement standards set the stage for improved communication and comparability, the way forward will not be without its challenges. The development of electrical standards, for instance, provides a telling account of the uneven and fraught processes bedeviling the creation of commercially viable metrological traceability for resistance and current metrics [167].

That said, there are pointed motivations for engaging with metrological opportunities in health care that were not of concern in markets focused exclusively on manufactured capital. The need to restore trust in our institutions must certainly be included among the highest priorities in this regard. Human, social, and natural capital markets are currently permeated by systemic biases and inequity, problems made to seem ineradicable by the unexamined assumptions of modern thinking and vested interests in maintaining the status quo. Continuing to assume that objective reality is completely independent of human conceptions (i.e., that the assignment of numbers to observations suffices as measurement, despite the resulting communications chaos) promotes and prolongs the domination of disempowering institutions, making it seem as though trying to alter or transform existing relations of power and authority is a futile quest.

Nothing, however, could be further from the truth than this complacent or hopeless attitude. Where ordinal measurements tied to item-dependent categorical scores cannot provide the comparability needed for creating new institutional arrangements in health care markets, metrologically traceable interval measurements possibly could [112, 117, 119, 121]. But, contrary to modernist assumptions as to individual minds being the seat of creativity, it ultimately will not likely be necessary to persuade and educate individuals as to the need for new methods.
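The difference between item-dependent ordinal scores and interval measures can be made concrete. Under the dichotomous Rasch model, the raw score is a sufficient statistic. The maximum-likelihood measure for a raw score r on items of known difficulty is the ability at which the expected score equals r. The sketch below assumes a hypothetical, symmetric 10-item form and builds a raw-score-to-logit table from it. The table shows that a one-point gain near the extremes of the score range corresponds to a larger difference in logits than a one-point gain at the center.

```python
import math

def measure_for_raw_score(r, difficulties, iters=50):
    """Logit measure at which the expected raw score equals r (maximum likelihood
    under the dichotomous Rasch model); defined only for 0 < r < len(difficulties)."""
    n = len(difficulties)
    theta = math.log(r / (n - r))  # starting value from the raw-score log-odds
    for _ in range(iters):
        ps = [1.0 / (1.0 + math.exp(-(theta - b))) for b in difficulties]
        expected = sum(ps)
        information = sum(p * (1.0 - p) for p in ps)
        theta += (r - expected) / information  # Newton step toward expected == r
    return theta

# Hypothetical 10-item form, calibrated symmetrically around 0 logits.
items = [-2.0, -1.5, -1.0, -0.5, 0.0, 0.0, 0.5, 1.0, 1.5, 2.0]
table = {r: measure_for_raw_score(r, items) for r in range(1, len(items))}
for r, m in table.items():
    print(f"raw score {r}: {m:+.2f} logits")
```

Scores of 0 and 10 have no finite maximum-likelihood measure. In practice, such scores are handled by conventions such as extrapolation, which this sketch omits.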

That is, as cross-sector associations informed by advanced measurement results proliferate, it will become increasingly counterproductive to remain disconnected from the networks through which trusted, replicable cost-benefit relationships are collectively organized, measured, calculated, and represented. Where contemporary metrological regimes based in ordinal, concrete representations compete in centrally planned command economies, new regimes integrating concrete, abstract, and formal representations will distribute information and decision-making power to end users throughout coordinated networks and efficient markets. When that happens, it would be wise to bet that empowered individuals – clinicians, educators, managers, patients, etc. – will choose to make use of reliable, quality assured, and comparable precision measurements when making their treatment decisions. In this scenario, researchers and practitioners will ignore new metrological developments at their peril.

12.5 Discussion

Apt warnings as to the hubris of mounting such an effort aimed at improving the human condition are given in Scott’s [297] historical account of “high modern” schemes intended to serve the greater good. Scott [297] suggests the integrity of these efforts depends on capacities for:

- enhancing the lives of those who are affected by them;

- being deeply shaped by the values of those participating in them; and

- permitting unique local creative improvisations that may not conform to conceptual ideals.

Even systems designed with the most benevolent of intentions can fail catastrophically in one or more of these three areas, with disastrous results. Scott [297] suggests taking language as a model of ways of effectively achieving these capacities for trustworthy relationships. This effectiveness follows, Scott says, from the way language serves as a medium through which broad principles are continually applied to novel circumstances. It is, as he says, “a structure of meaning and continuity that is never still and ever open to the improvisations of its speakers.” Scott is implicitly suggesting that the pragmatic idealism of language ought to serve as a model for how to integrate multiple levels of complexity, where formal idealizations, abstract standards, and concrete local events are in play simultaneously.

Well-designed outcome measurement systems [130, 335, 356] might meet all three of Scott’s integrity test criteria to the extent they:

- provide end users with information they need to understand where they are now relative to where they have been, where they want to go, and what comes next in their journey, as in formative assessment [31, 54, 113];

- are calibrated on data, provided by end users themselves, exhibiting patterns of structural invariance informing the interpretation of the measurements [288, 347]; and

- can be managed in their specific idiosyncrasies to take special strengths and weaknesses into account irrespective of the pattern expected by the model [55,56,57, 130, 147, 164, 197, 202, 203, 227, 357].
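The idiosyncrasies referred to in the last point are routinely identified with residual-based fit statistics. The sketch below computes the conventional information-weighted (infit) and unweighted (outfit) mean-square statistics for one person, given hypothetical item difficulties and an ability estimate. Values near 1 indicate responses about as variable as the model expects. Values well above 1 flag unexpected responses that merit a qualitative look, and values well below 1 indicate a more deterministic pattern than the model expects. The items, ability, and response patterns are all hypothetical.

```python
import math

def person_fit(responses, theta, difficulties):
    """Return (infit, outfit) mean squares for one person's 0/1 responses."""
    ps = [1.0 / (1.0 + math.exp(-(theta - b))) for b in difficulties]
    variances = [p * (1.0 - p) for p in ps]
    squared_residuals = [(x - p) ** 2 for x, p in zip(responses, ps)]
    # Outfit: unweighted mean of squared standardized residuals (outlier-sensitive).
    outfit = sum(r / v for r, v in zip(squared_residuals, variances)) / len(ps)
    # Infit: squared residuals weighted by their model variances (information).
    infit = sum(squared_residuals) / sum(variances)
    return infit, outfit

items = [-2.0, -1.5, -1.0, -0.5, 0.5, 1.0, 1.5, 2.0]  # hypothetical, easiest first
expected_pattern = [1, 1, 1, 1, 0, 0, 0, 0]  # succeeds on the easier half
reversed_pattern = [0, 0, 0, 0, 1, 1, 1, 1]  # same raw score, fails the easy items
theta = 0.0  # maximum-likelihood measure for a score of 4 on these symmetric items
for label, pattern in [("expected", expected_pattern), ("reversed", reversed_pattern)]:
    infit, outfit = person_fit(pattern, theta, items)
    print(f"{label}: infit {infit:.2f}, outfit {outfit:.2f}")
```

Both patterns yield the same raw score and therefore the same measure. Only the fit statistics reveal that the second person's strengths lie where the model least expects them.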

These pragmatically ideal terms are also implicated in the correspondences across fields listed above, which also focus, in effect, on taking language as a model. Galison [139, pp. 46, 49], for instance, similarly finds in his ethnographic study of microphysics’ communities of theoreticians, instrument makers, and experimentalists that the seeming paradox of locally convergent and globally divergent meanings can be reconciled by seeing that “the resulting pidgin or creole is neither absolutely dependent on nor absolutely independent of global meanings.”

Star and Griesemer [309] (also see Star [308], Star and Ruhleder [310]) implicate this linguistic suspension between ideas and things when they explain that:

Boundary objects are objects which are both plastic enough to adapt to local needs and the constraints of several parties employing them, yet robust enough to maintain a common identity across sites. They are weakly structured in common use, and become strongly structured in individual site use. These objects may be abstract or concrete. They have different meanings in different social worlds but their structure is common enough to more than one world to make them recognizable, a means of translation. The creation and management of boundary objects is a key process in developing and maintaining coherence across intersecting social worlds.

In a study of how universalities are constructed in medical work, Berg and Timmermans [23] came to see that:

In order for a statistical logistics to enhance precise decision making, it has to incorporate imprecision; in order to be universal, it has to carefully select its locales. The parasite cannot be killed off slowly by gradually increasing the scope of the Order. Rather, an Order can thrive only when it nourishes its parasite--so that it can be nourished by it… . Paradoxically, then, the increased stability and reach of this network was not due to more (precise) instructions: the protocol’s logistics could thrive only by parasitically drawing upon its own disorder.

Berg and Timmermans provide here an apt description of the model-data relation in the context of probabilistic measurement. The models must be probabilistic because of the need to incorporate imprecision and uncertainty. When collectively projected patterns of invariance are identified and calibrated for use in distributed metrological systems, the locales in which the instruments are used must be carefully selected. Though the structural invariance modeled may eventually be replicated over millions of cases and thousands of items, imprecision and uncertainty are never completely overcome. Ongoing data collection will nourish the articulation of the scale to the extent that new persons and items teach new lessons, and the scale’s logistics will continue to thrive and its network will be increasingly stable and extended only to the extent that the stochastic invariance patterns persist.

Probabilistic models of measurement incorporate imprecision and uncertainty as the basis for estimating quantity, and counterintuitively provide a firmer basis for quantification than non-probabilistic models. As was noted by Duncan [86], “It is curious that the stochastic model of Rasch, which might be said to involve weaker assumptions than Guttman uses [in his deterministic models], actually leads to a stronger measurement model.” Measurements made via probabilistic modeling are, furthermore, evaluated for their usefulness in terms that provide the precision and information quality needed to support a decision process that takes language as a model by explicitly positing, testing, substantiating, and deploying simultaneously and systematically formal theoretical, abstract standard, and concrete data levels of complexity.
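Duncan’s observation can be illustrated by comparing the two response functions. In Guttman’s deterministic model, success is certain when ability exceeds difficulty and impossible otherwise. A single unexpected response therefore makes an entire pattern impossible. The Rasch model instead assigns every pattern a nonzero probability, which allows imprecision to be estimated rather than treated as a contradiction. The items, ability, and response pattern below are hypothetical, and the treatment of ties in `guttman_p` is an arbitrary convention.

```python
import math

def guttman_p(theta, b):
    """Deterministic response function: success certain if ability >= difficulty."""
    return 1.0 if theta >= b else 0.0

def rasch_p(theta, b):
    """Probabilistic (logistic) response function of the dichotomous Rasch model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

def pattern_likelihood(model, responses, theta, difficulties):
    """Probability of an entire 0/1 response pattern under the given model."""
    likelihood = 1.0
    for x, b in zip(responses, difficulties):
        p = model(theta, b)
        likelihood *= p if x == 1 else 1.0 - p
    return likelihood

items = [-1.0, 0.0, 1.0]
pattern = [1, 0, 1]  # one "inconsistent" response: hardest item passed, middle missed
theta = 0.5
print("Guttman:", pattern_likelihood(guttman_p, pattern, theta, items))
print("Rasch:  ", round(pattern_likelihood(rasch_p, pattern, theta, items), 3))
```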

This semiotic explication operationalizes language meta-systematically, reflectively acting on it as an object of intentionally framed decisions, instead of allowing unexamined metaphysical assumptions to shift uncontrollably from one hidden agenda to another. In Postman’s [281] terms,

If we define ideology as a set of assumptions of which we are barely conscious but which nonetheless directs our efforts to give shape and coherence to the world, then our most powerful ideological instrument is the technology of language itself.

The semiotics of language structures a usually unquestioned metaphysical ideology that, as Burtt [43, p. 229] recognized, is “passed on to others far more readily than your other notions inasmuch as it will be propagated by insinuation rather than by direct argument.” Modernist and postmodernist emphases on transcendental uniformity, local relativity, empiricism, operationalism, idealism, instrumentalism, etc. each selectively attend to or ignore one or another of the semiotic domains of language. Taking language altogether as a semiotically integrated model of how things are represented, maintained, and transformed sets up new paradigmatic alternatives [38, 39, 71, 255, 260, 265, 266, 298].

Rasch, though neither a philosopher, a semiotician, an historian of science, nor a developmental psychologist, was well aware that his models for measurement function at formal, abstract, and concrete levels of complexity. He [288, pp. 34–35] recognized, for instance, that his models are as unrealistic in their form as the idealizations asserted in Newton’s laws, or in geometry. Just as no one has ever observed the inertial path of an object left entirely to itself unaffected by any forces, so, too, is it impossible to draw or observe the mathematical relationships illustrated in geometric figures. This is readily seen in the fact that a right isosceles triangle with legs of length 1 has a hypotenuse whose length is the square root of 2, an undrawable irrational number. Similarly, circles with a diameter of 1 have a circumference of pi, another undrawable irrational number.

Butterfield [45, pp. 16–17, 25–26, 96–98] remarks that no amount of photographically recorded observations made during experiments on actual objects in motion would ever accumulate into the kind of geometric ideal envisaged by Galileo. Instead, he says, that vision requires a different kind of thinking-cap, an imaginative transposition that projects an unrealistic but solvable mathematical relationship that might possibly be made useful. As Burtt [43, p. 39] recognized, what Galileo, Copernicus, Newton, and other early physicists accomplished followed from imagining answers to the question as to “what motions should we attribute to the earth in order to obtain the simplest and most harmonious geometry of the heavens that will accord with the facts?”

Rasch, in effect, imagined that the same question could be usefully posed in the domain of human abilities [118, 122]. It is just as unrealistic to model Pythagorean triangles as it is for measurements of human performance to be functions of nothing but the differences between abilities and the difficulties of the challenges encountered. Rasch [288, pp. 34–35; 292] accordingly emphasized that models are not meant to be true, but must be useful. Rasch geometrizes psychology and the social sciences by conceptualizing the relations of infinite populations of persons exhibiting abilities, functionality, or health in the same frame of reference alongside the universes of all possible challenges to those capacities. He puts on the “different kind of thinking-cap” described by Butterfield and Burtt as beginning from the projection of geometric forms, instead of from the assumption that such forms will result from accumulated observations.
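The geometric idealization can be written out in the conventional notation for the dichotomous model. This notation is assumed here and is not taken from this chapter: β_n is the measure of person n and δ_i is the calibration of item i. The log-odds of success depend on nothing but their difference. Consequently, the comparison of two persons does not involve the item at all, and the comparison of two items does not involve the person:

```latex
\begin{aligned}
P(X_{ni} = 1 \mid \beta_n, \delta_i) &= \frac{\exp(\beta_n - \delta_i)}{1 + \exp(\beta_n - \delta_i)},\\[4pt]
\ln \frac{P(X_{ni} = 1)}{P(X_{ni} = 0)} &= \beta_n - \delta_i,\\[4pt]
(\beta_n - \delta_i) - (\beta_m - \delta_i) &= \beta_n - \beta_m
  \quad\text{for any item } i,\\[4pt]
(\beta_n - \delta_i) - (\beta_n - \delta_j) &= \delta_j - \delta_i
  \quad\text{for any person } n.
\end{aligned}
```

Like the right triangle, this relation is never observed exactly in data. It is posited as a frame of reference against which the data are evaluated.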

Rasch’s approach stands in stark contrast to the dominant paradigm in health care quality improvement and its unquestioned modernist supposition that empirical observations do in fact accumulate into patterns of lawful behavior. This assumption persists despite the more than six decades that have passed since Kuhn ([184], p. 219; original emphasis, and originally published in 1961) noted that

The road from scientific law to scientific measurement can rarely be traveled in the reverse direction. To discover quantitative regularity one must normally know what regularity one is seeking and one's instruments must be designed accordingly; even then nature may not yield consistent or generalizable results without a struggle.

Rasch’s models make the laws of measurement readily accessible, and offer a new paradigm and methods that fit squarely in the historical tradition of scientific laws that open broadly generalized imaginative domains. In principle, it is best to begin measurement research from a theoretical construct model that defines the regularity sought, and informs item writing and the selection of the sample measured [125, 322, 335, 336, 338, 339]. On the other hand, empirical scaling results estimated from data sets constructed on the basis of intuitive suppositions can often teach useful lessons [129, 304].

In addition to recognizing the value of theory, Rasch also understood anomalies as indicators of new directions for qualitatively focused investigations, giving the same example of the discovery of Neptune from aberrations in the orbit of Uranus [288, p. 10] as Kuhn mentioned in his 1961 article on the function of measurement in science [184, p. 205]. Rasch’s awareness of the simultaneous roles of formal ideals and concrete data in thinking was further complemented by his [291] projection that instrument equating methods will eventually lead to the deployment of abstract metrological standards and “an instrumentarium with which many kinds of problems in the social sciences can be formulated and handled.”
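The instrument equating Rasch anticipated can be sketched in its simplest common-item form: a single shift that expresses one instrument’s calibrations in another’s frame of reference. The sketch below is illustrative and makes assumptions not stated in this chapter. Two hypothetical instruments, A and B, share three items, and each has been calibrated separately under the Rasch model. The link is the mean difference of the shared items’ calibrations. The per-item displacements show whether the shared items hold their relative positions, which is the invariance any metrological standard would depend on.

```python
def link_constant(common_a, common_b):
    """Mean difference between the same items' calibrations on A and on B (logits)."""
    diffs = [a - b for a, b in zip(common_a, common_b)]
    return sum(diffs) / len(diffs)

def to_frame_a(difficulties_b, shift):
    """Express instrument B's calibrations in instrument A's frame of reference."""
    return [b + shift for b in difficulties_b]

def displacements(common_a, common_b, shift):
    """Residual disagreement of each shared item after linking; large values flag
    items whose relative position did not hold across instruments."""
    return [a - (b + shift) for a, b in zip(common_a, common_b)]

# Hypothetical separate calibrations of the three shared items.
common_a = [-1.0, 0.2, 1.1]
common_b = [-1.6, -0.4, 0.5]
shift = link_constant(common_a, common_b)
unique_b = [-2.3, 0.9, 1.8]  # hypothetical items that appear only on B
print(f"shift {shift:.2f}; B-only items on A's scale: "
      f"{[round(d, 2) for d in to_frame_a(unique_b, shift)]}")
```

Operational equating programs add checks on the standard errors of the shared items and on their number and spread. The mean shift shown here is only the core idea.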

Separating and balancing these theoretical, instrumental, and experimental purposes requires closer attention to the parts and wholes of research. Efforts in this vein, explicating the inner workings of quantitative methods, were begun in the 1920s by Thurstone [321], continued in the 1940s to 1980s by Loevinger [205, 206], Guttman [150,151,152], Rasch [288,289,290, 293], Wright [346,347,348, 350,351,352, 354,355,356], Luce and Tukey [208], Luce [207], and their colleagues and students [7,8,9, 11, 29, 90, 95, 126, 220, 246, 295, 335].

This body of work explicitly pursues the questions raised by Shiffman and Shawar [300] concerning how the global health metrics system might work more effectively to advance human wellbeing. Writing in The Lancet, Shiffman and Shawar echo the challenge posed by Power [282, p. 778] as to the need for social scientists to “open up the black box of performance measurement systems, to de-naturalize them and to recover the social and political work that has gone into their construction as instruments of control.” Power, and Shiffman and Shawar, offer highly sophisticated perspectives on social theory, history, and philosophy in their critique of health care performance measurement systems, citing many of the same writers as those mentioned or implicated in this chapter, such as Bowker and Star, Foucault, Hacking, Heilbron, Kuhn, Miller and O’Leary, Popper, Porter, Scott, and Wise.

Instead of assuming this work of recovering the social and political construction of measurement systems has never been done, Power, and Shiffman and Shawar, might have invested more effort in seeking out and understanding efforts that have been underway for decades. One such work published in Lancet Neurology [162] provides an excellent explication of the technical issues, and cites a number of papers concerning the controversies involved (among others, see [11, 98, 231, 232, 351, 352, 354]). One of the papers [98] cited in Hobart et al.’s Lancet Neurology article explicitly concerns Power’s [282] themes of the de-naturalization of measurement systems and the recovery of the social and political work invested in their construction. Further searches of the measurement literature would reveal other contributions developing that theme and citing many of the same sources as Shiffman and Shawar, and Power [99, 123, 124, 211,212,213, 215, 226, 241,242,243,244, 306].

Though there surely remain many issues in need of much more extensive elaboration and conclusive resolution, this body of work suggests that, contrary to Power’s and Shiffman and Shawar’s broad categorizations, performance measurement systems in health care are not merely instruments of control, but offer the possibility of extending everyday language in creative and empowering ways. Meanings are not, after all, mere ancillary implications attached to words and numbers; they are fulfilled and operationalized only in use. The question is not one of how best to adapt to inevitably reductionist homogenizations of differences but is instead a matter of learning how to navigate the complex emergent flows of meaningful and co-evolving relationships. Numbers, like all words, have a long history of being used to oppress, manipulate, control, minimize, and render invisible situations, events, or people who fall outside the boundaries of rigidly defined norms. But numbers and words also have many instances in which they serve as media connecting things and ideas in fluid and adaptable ways. Because it incorporates multilevel complexities, taking language as a model for creating new vehicles of thought offers opportunities for opening imaginations to new possibilities for negotiating local meanings and agreements on the fly in the moment of use.

Habituating end users to new technologies and complex processes need not be inherently difficult, though arriving at intuitively accessible designs will likely be very challenging. Simplifications have, after all, made routine use of extremely technical mechanical and computational devices a fact of everyday life. Motivations for producing them followed from the same economies of thought extended into larger domains as those proposed here for quality improvements in person-centered health care. Might it be possible to imagine, orchestrate, and choreograph resonant correspondences of property rights, financial standards, regulatory approvals, contract law, communications, and technical requirements to fulfill hopes and dreams for creating trustworthy, brilliant systems everyday people can use to produce exceptional results?

12.6 Conclusion

Society expresses its standards for fair dealing in the uses it makes of measurements. Measurement is at once highly technical, mathematically challenging, and a normal routine of life performed dozens of times a day. It resides in the background as something taken for granted and usually understood only to the superficial extent needed to meet basic needs in the kitchen, the workshop, the construction site, and in scheduling meetings or making travel plans.

Health care, like many other areas of contemporary life, lacks systems of signs and symbols for representing meaningful amounts of change recorded categorically as present or absent, as failing or good, or as ratings on a numeric scale. Existing categorical representations allow the same signs and symbols to mean different things at different times and places, even when the same measured construct could plausibly be the object of interest. Because they cannot be clearly communicated, the products of the health care industry (health, functionality, abilities, and quality of care) necessarily lack common definitions. This makes it impossible to compare prices or to take systematic steps toward progressive improvements in outcomes. Yet improved approaches to thinking about and doing measurement have long been available. So has documentary evidence of the multiple roles measurements play in creating coherent social ecologies and economies.

The challenges are immense, as is evident in the multiple demands for operationalizing the technical issues involved in measurement modeling, instrument design and calibration, metrological traceability, the distribution of standardized forms of information throughout networks of actors, and the coordination across multidisciplinary alliances and obligatory passage points of simultaneously formal, abstract, and concrete boundary objects. But shifting the paradigm away from the unexamined and taken-for-granted modern metaphysics of centrally planned statistical solutions applied from the top down, toward emergent, distributed, and reproducible measurement solutions sets the stage for envisioning alternative outcomes for the problems encountered in the shift to quality over the last 20 years [26]. Using advanced measurement in an unmodern paradigm of actor networks might make it possible to create new approaches to sustainable change [180] that organize new visions, plans, resources, incentives, and skills capable of facilitating a new array of outcomes:

- Wholesale, systemic improvements in quality of care fulfilling their potential for being brought to scale.
- Improvements that are generalized across boundaries because theory and practice inform each other.
- Quality improvements ease financial pressures.
- Controlling costs by improving quality in “lean” processes works in health care as it does in other industries.
- Value-based pay-for-performance schemes completely replace the fee-for-service model.
- Accountability for outcomes cultivated from replicable, trusted relationships inspires positive impacts on clinician morale, and leads to clear progress in quality and safety.
- A balance between the critical need for accountability, on the one hand, and supports for a culture of trust focused on growth and learning, on the other, is brought within reach, and is grasped.
- New payment models setting more constructive priorities are political imperatives because of the way cost controls and higher profits go hand-in-hand with quality improvements.

The premise of this chapter, and this book, is that positive, constructive, and productive paths forward in health care quality improvement are indeed possible. This book develops the idea that measurement systems are not primarily mechanisms of control and domination but serve as vehicles of thought, as the media through which creative innovations find expression. Science extends everyday language such that conceptual frameworks contextualize abstract standards for representing patterns projected by individuals, which in turn contextualize observations that never conform exactly to the model.

The pragmatic idealism of integrated formalisms, abstractions, and concrete data is as old as the birth of philosophy in Plato’s distinction between name and concept, figure and meaning [136, p. 100]. Plato redefined the elements of geometry to make this distinction obvious, saying a point is an indivisible line, a line is length without breadth, etc. [46, p. 25]. This redefinition made irrational and incommensurable line segments equivalent to rational and commensurable ones. It thereby removed the existential threat that incommensurability had posed to the Pythagorean worldview’s sense of the universe as mathematical. Though Plato is often mistakenly associated with an overriding emphasis on ideal forms, his idealism is quintessentially pragmatic. His philosophy accepts the necessary and mutually complementary roles of both abstract representations and concrete phenomena that never fit the model.

The modern metaphysics in health care quality improvement is Pythagorean in the sense of mistaking numbers and numeric relationships for existence and reality [136, p. 35]. This confusion makes it impossible to think of and act on real situations collectively, as communities of practice, because shared languages are lacking. Mistaking numbers for quantities is akin to not seeing the forest for the trees, or confusing the map with the territory [117]. In contrast, taking an unmodern perspective that advances a metrological agenda operationalizes the ontological distinction between ideas and what becomes [98, 104,105,106, 110]. Creating useful models at abstract and formal levels of complexity distinguishes forests from the actual trees, and draws maps with features that are not in one-to-one correspondence with the concrete world. These distinctions are essential if we are to create contexts for manifesting “the world of ideas from which science is derived and which alone makes science possible” [136, p. 35].

Whitehead saw that getting from things to ideas systematically at a societal level of organization requires capacities for apprehending higher order levels of complexity and packaging them in easy-to-use technologies. As he [331, p. 61] said, “Civilization advances by extending the number of important operations which we can perform without thinking about them.” The model of hierarchical complexity [60, 65, 73, 75, 76] spells out how the simplified packaging of complex operations happens; examples from the history of governance and science are instructive as to how today’s transitions might be negotiated [61,62,63, 66].

The theory of hierarchical complexity specifies how hidden assumptions informing operations at one level of complexity become objects of operations at the next level. A child able to say “Nice cat,” for instance, may not know the alphabet or what a word is. When the child is able to say, “I know how to spell ‘cat’: C-A-T,” a transition from the concrete to the abstract level of complexity has occurred. The child, previously unaware that language was being used, is now overtly conscious of it. To Whitehead’s point, however, the child had no input at all into determining the shape of the letters, their organization into a word, their pronunciation, etc. The child need not invest any effort in inventing communicative operations, because the economy of thought facilitated by language has already done that work. That work ensures the child can inhabit a space in a community possessing shared standards and take ownership of a trusted medium of expression with proven functionality.

As a society, we are similarly transitioning from concrete articulations focused on numeric counts to higher order quantitative abstractions, formalisms, systems, metasystems, and paradigms [74, 115, 117]. For the last 100 years or so, dating from Thurstone’s [321] work in the 1920s, measurement operations involving abstract and formal expressions of quantity have been impossible to perform without thinking about them. Technical skill has been essential to organizing concrete observations recorded as ordinal numbers and using them as a basis for estimating abstract quantities. Those lacking that skill, and unable to visualize, plan, resource, or incentivize access to it, have been unable to perform the operations of quantification.
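The step from ordinal counts to abstract quantities can be illustrated with a short calculation. What follows is a minimal sketch, assuming the dichotomous Rasch model and a hypothetical five-item instrument whose item difficulties (in logits) are simply fixed by hand. The function names `rasch_probability` and `measure_for_raw_score` are illustrative only and do not come from any package discussed in this book. Given the assumed calibrations, the sketch finds the maximum-likelihood person measure for each non-extreme raw score by damped Newton-Raphson iteration.

```python
import math


def rasch_probability(beta: float, delta: float) -> float:
    """Probability of a positive response under the dichotomous Rasch model.

    beta is the person measure and delta the item difficulty, both in logits.
    """
    return 1.0 / (1.0 + math.exp(-(beta - delta)))


def measure_for_raw_score(raw_score: int, difficulties: list,
                          tol: float = 1e-10, max_iter: int = 100) -> float:
    """Maximum-likelihood person measure (logits) for a raw score.

    Solves sum_i P(beta, delta_i) = raw_score for beta by Newton-Raphson,
    treating the (hypothetical) item difficulties as known. Extreme scores
    (0 or the maximum) have no finite estimate, so they are rejected.
    """
    n_items = len(difficulties)
    if not 0 < raw_score < n_items:
        raise ValueError("extreme raw scores have no finite ML estimate")
    # Start from the log-odds of the proportion correct, centred on the mean difficulty.
    beta = math.log(raw_score / (n_items - raw_score)) + sum(difficulties) / n_items
    for _ in range(max_iter):
        probs = [rasch_probability(beta, d) for d in difficulties]
        expected_score = sum(probs)
        # Derivative of the expected score with respect to beta (test information).
        information = sum(p * (1.0 - p) for p in probs)
        step = (raw_score - expected_score) / information
        step = max(-1.0, min(1.0, step))  # damp large steps for stability
        beta += step
        if abs(step) < tol:
            break
    return beta


if __name__ == "__main__":
    # Hypothetical calibrations for a five-item instrument (logits).
    item_difficulties = [-2.0, -1.0, 0.0, 1.0, 2.0]
    previous = None
    for score in range(1, len(item_difficulties)):
        beta = measure_for_raw_score(score, item_difficulties)
        gap = "" if previous is None else f"  (step from previous: {beta - previous:.2f} logits)"
        print(f"raw score {score}: {beta:+.2f} logits{gap}")
        previous = beta
```

Under these assumed calibrations, moving from a raw score of 1 to 2 spans more logits than moving from 2 to 3, so equal increments in counts do not represent equal increments in the measured quantity. A real application would estimate item difficulties from data and check model fit rather than fixing them by hand.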

The emergence of metrological theory and methods marks the beginning of the consolidation and integration of a new level of hierarchical complexity in society’s semiotic sociocognitive infrastructures. Metrology advances civilization by extending the number of measurement operations that can be performed by people lacking technical skill in those areas. The singularly important point, elaborated in different ways by the chapters in this book, concerns how numeric patterns cohere in abstract forms that enable the expression of the collectively projected information that must be fed back via measurement to management. Quality improvement methods and lean thinking cannot fulfill their potential in health care unless the measurements used consistently stand for objectively reproducible quantities. Probabilistic models for measurement provide the analytic means by which such patterns can be identified and put to work in metrological networks of quality assured instrumentation. The chapters in this book articulate in detail the mathematics, the organization, and the interpretation of ways in which researchers and practitioners engage in that work to cultivate trust.
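The probabilistic models referred to above are commonly introduced through the dichotomous Rasch model. The worked derivation below is a minimal illustration, assuming the standard dichotomous form with person measure $\beta_n$ and item difficulty $\delta_i$ (notation chosen here for illustration). It shows why the difference between two person measures does not depend on which item is used to compare them, which is one sense in which a calibrated measure can stand for a reproducible quantity.

```latex
\[
P(X_{ni} = 1 \mid \beta_n, \delta_i) = \frac{\exp(\beta_n - \delta_i)}{1 + \exp(\beta_n - \delta_i)}
\]
\[
\ln \frac{P(X_{ni} = 1)}{P(X_{ni} = 0)} = \beta_n - \delta_i
\]
\[
\ln \frac{P(X_{ni} = 1)}{P(X_{ni} = 0)} - \ln \frac{P(X_{mi} = 1)}{P(X_{mi} = 0)}
  = (\beta_n - \delta_i) - (\beta_m - \delta_i) = \beta_n - \beta_m
\]
```

Because $\delta_i$ cancels, the comparison of persons $n$ and $m$ is the same on every item that fits the model. The symmetric argument, holding the person fixed and comparing items $i$ and $j$, gives $\delta_j - \delta_i$, free of the person used. In practice this invariance holds only to the extent that the data fit the model, which is why fit assessment accompanies calibration.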

The consequences of making theories and data metrologically contestable must lead toward a radical departure from the current situation of incommensurable perspectives left isolated in their own domains, subject only to the competing interests of others who might command more persuasive rhetoric, greater personal charm, or larger funds. Marshaling predictive theory, explanatory models, instrument designs, consensus processes, quality assurance results, fit for purpose tolerances, traceability to standards, regulatory compliance, contractual obligations, etc. in trust markets with much higher stakes will require vastly different capacities for envisioning, planning, staffing, resourcing, and incentivizing sustainable change (taking up the frame of reference described by [180]). The needed shift in thinking does not mitigate the fact that, as was suggested some years ago [149], given the longstanding availability of the conceptual and practical resources needed for achieving fundamental measurement, continuing to accept today’s dysfunctional and counterproductive ordinal quality metrics constitutes a fraudulent malpractice and major liability compromising the integrity of health care institutions.

The goal of mediating conceptual ideals and real things in thoughtful, mindful uses of words (including number words) is to create opportunities for theoretical defensibility, communications standards, and residual anomalies to complement and augment each other, with each arising in safe and productive times and places as objects of debate and actionable considerations. When communities of allied participants accomplish these multiple purposes via somewhat divergent and somewhat convergent ideas and methods, modern metaphysics’ fixation on transcendent universals is overcome. This overcoming is not a matter of metaphysical assumptions being negated, defeated, or abandoned, since, in overcoming modern metaphysics, we must inevitably take it up and use it [80, pp. 280–281; 81, p. 62; 135, p. 240; 138, pp. 164, 184–185; 158, p. 19; 159, pp. 84–110]. This usage occurs in contexts that formally, systematically, metasystematically, and paradigmatically integrate semiotic levels of complexity. Simultaneously convergent and divergent, willed and unwilled, general and specific, global and local boundary objects already implicitly enacted across domains must become explicitly articulated in metrological research and practice. Beyond mere hope, semiotic models of language as the vehicle of thought offer actionable, pragmatic programs for sustainable change in health care’s person-centered quality improvement efforts.

References

J.R. Ackermann, Data, Instruments, and Theory: A Dialectical Approach to Understanding Science (Princeton University Press, 1985)

Z.J. Acs, S. Estrin, T. Mickiewicz, L. Szerb, Entrepreneurship, institutional economics, and economic growth: An ecosystem perspective. Small Bus. Econ. 51(2), 501–514 (2018)

A. Akmal, R. Greatbanks, J. Foote, Lean thinking in healthcare: Findings from a systematic literature network and bibliometric analysis. Health Policy 124(6), 615–627 (2020)

S. Alagumalai, D.D. Curtis, N. Hungi, Applied Rasch Measurement: A Book of Exemplars (Springer-Kluwer, 2005)

K. Alder, The Measure of All Things: The Seven-Year Odyssey and Hidden Error that Transformed the World (The Free Press, 2002)

R. Alsop, N. Heinsohn, Measuring Empowerment in Practice: Structuring Analysis and Framing Indicators. World Bank Policy Research Working Paper 3510 (The World Bank, 2005), p. 123

E.B. Andersen, Sufficient statistics and latent trait models. Psychometrika 42(1), 69–81 (1977)

D. Andrich, A rating formulation for ordered response categories. Psychometrika 43(4), 561–573 (1978)

D. Andrich, Rasch Models for Measurement. Sage University Paper Series on Quantitative Applications in the Social Sciences, Series No. 07-068 (Sage, 1988)

D. Andrich, Distinctions between assumptions and requirements in measurement in the social sciences, in Mathematical and Theoretical Systems: Proceedings of the 24th International Congress of Psychology of the International Union of Psychological Science, ed. by J. A. Keats, R. Taft, R. A. Heath, S. H. Lovibond, vol. 4, (Elsevier Science Publishers, 1989), pp. 7–16

D. Andrich, Controversy and the Rasch model: A characteristic of incompatible paradigms? Med. Care 42(1), I-7–I-16 (2004)

D. Andrich, Sufficiency and conditional estimation of person parameters in the polytomous Rasch model. Psychometrika 75(2), 292–308 (2010)

D. Andrich, I. Marais, A Course in Rasch Measurement Theory: Measuring in the Educational, Social, and Health Sciences (Springer, 2019)

W.J. Ashworth, Metrology and the state: Science, revenue, and commerce. Science 306(5700), 1314–1317 (2004)

Y. Attali, Automatic item generation unleashed: An evaluation of a large-scale deployment of item models, in International Conference on Artificial Intelligence in Education, (Springer, 2018), pp. 17–29

E. Banks, The philosophical roots of Ernst Mach’s economy of thought. Synthese 139(1), 23–53 (2004)

S.P. Barbic, S.J. Cano, S. Mathias, The problem of patient-centred outcome measurement in psychiatry: Why metrology hasn’t mattered and why it should. J. Phys. Conf. Ser. 1044, 012069 (2018)

S. Barbic, S.J. Cano, K. Tee, S. Mathias, Patient-centered outcome measurement in psychiatry: How metrology can optimize health services and outcomes, in TMQ – Techniques, Methodologies and Quality, 10, Special Issue on Health Metrology, (2019), pp. 10–19