Abstract

Sharing tactile sensations with others can improve communication and augment cooperative tasks. Increasing the number of persons who share tactile sensations increases this effectiveness, but the body areas where the tactile stimuli are presented should be investigated so that the stimuli are perceived intuitively. This study investigated the effect of spatial correspondence between tactile and visual stimuli on identifying tactile stimuli. In the experiment, participants simultaneously viewed two videos of other agents’ hands, each rubbing one of three textures, and felt the corresponding vibrotactile stimuli at two locations. The videos were presented at different locations on the screen (Scene 1: left-right; Scenes 2 and 3: top-bottom), and the vibrotactile stimuli were presented either at the wrists of the left and right hands (Scene 1) or at the upper arm and the wrist of the right arm, which either rested on the table (Scene 2) or hung down along the body (Scene 3). For each scene, visual and tactile stimuli were either spatially aligned (left and right videos with tactile stimuli at the left and right wrists, and top and bottom videos with the top and bottom locations on the right arm) or not. The results showed shorter response times for left-right spatial correspondence and for far (top) and close (bottom) visual stimuli corresponding to distal (wrist) and proximal (upper arm) locations on the body. This implies that the body schematic is an important factor for the spatial compatibility of visual and tactile stimuli.

This work was supported by JST Moonshot R&D, JPMJMS2013 and JSPS 21H05071.

1 Introduction

Tactile interfaces, such as tactile sensors and displays, have advanced, making it possible to detect and transmit the tactile sensations that other people perceive. Tactile sensations are necessary for object identification and manipulation. Sharing tactile sensations with others can improve communication and augment cooperative tasks, thus expanding the range of our object perception. Because of COVID-19, remote audio-visual communication has spread rapidly. Tactile sharing can enrich this communication by allowing people to recognize what their partners are touching or how they move their fingers [1]. It may also allow experiences to be shared simultaneously with multiple persons. Tactile sharing can further be employed for human-human/robot collaboration, where reciprocal awareness induces smooth collaboration [2]. Casalino et al. [3] demonstrated that vibrotactile stimulation given to an operator when a robot recognized his/her action improved the collaborative task.

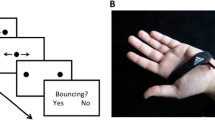

Fig. 1. Identification task of two tactile stimuli corresponding to two videos on a monitor, with vibrotactile displays on the arm. Three scenes with different postures and tactile presentation positions were used, and two spatial conditions between tactile and visual stimuli, (a) spatial correspondence and (b) spatial non-correspondence, were employed for each scene.

For communication and cooperation, it is preferable that tactile stimulation from other people is presented to an area where it does not interfere with the various operations of one’s hands or fingertips. Wearable tactile displays are suitable for perceiving tactile stimulation in areas other than the fingers and hands [4]. An increase in the number of persons sharing tactile sensations improves communication and cooperation. However, perceiving the tactile sensations of multiple persons simultaneously disperses attention across the stimuli [5] and increases the cognitive load. Wang et al. [6] showed that, when multiple tactile stimuli were presented to both arms simultaneously, the correct response rate for estimating the stimulus positions decreased as the number of stimuli increased. Hence, an intuitive presentation of visuo-tactile stimuli is important.

This study investigated spatial compatibility between visual stimuli and their corresponding tactile stimuli, as shown in Fig. 1. A previous study demonstrated spatial compatibility in the cognitive processes of perception and action [7]. In the compatible condition, where the visual cue and the action spatially correspond (for example, responding to a visual stimulus presented on the right side with a button on the right side), the response is faster and more accurate than in the incompatible condition. When the tactile sensations of others are presented at left and right positions on the body, left-right correspondence with the visual stimuli is expected to facilitate recognition. However, it is unclear how visual stimuli at upper and lower positions correspond to the body areas where the tactile stimuli are presented. Video conferencing systems arrange the videos of individuals in a grid (up, down, left, and right), and in human-human/robot collaboration, the locations of operators, including robots, can be arranged. In this study, we consider remote communication with multiple persons through a monitor and, as basic research, investigate the recognition of the tactile sensations of two other persons. The three scenes shown in Fig. 1 were used as common arm postures during video conferencing and as possible locations of tactile stimulation. Wearable tactile displays were attached to the arm and presented vibrotactile stimuli corresponding to each person on the monitor. The videos of the two persons were placed on the left and right, or on the top and bottom, of the screen.

2 Method

In the experiment, videos of two persons’ hands rubbing samples were presented, and participants identified each sample by perceiving tactile stimuli derived from rubbing the samples.

2.1 Experimental Setup

The skin vibrations caused when a fingertip touched an object were shared. Previous studies have shown that skin vibrations represent various texture features well [8, 9], such as roughness. Figure 2 shows the assembled skin vibration transmission system. The system consists of a wearable tactile sensor, a wearable tactile display, and I/O modules (amplifier (AP05, Fostex), USB audio interface (Sound Blaster Play! 3, Creative), and laptop computer (PC)). The skin-propagated vibration caused by touching an object with a fingertip was measured using a ring-type acceleration sensor (2302B, Showa Sokki Corporation) attached to the intermediate phalanx of the right index finger. The sensor signals were input to the PC at a sampling frequency of 48 kHz. A wearable vibrator using the Vibro-Transducer Vp2 (Acouve Laboratory, Inc.) was employed to present skin vibrations. The vibrator is covered with a plastic case and has a strap to attach it to the arm. In this experiment, we recorded skin vibrations for different materials beforehand and presented them to the participants. The signal from the sensor can also be sent to the vibrator in real time [10]. We also used a wide-angle web camera (Buffalo BSW200MBK) to capture the rubbing motion while collecting tactile information. The videos were synchronized with the sensor signals based on the moment at which the finger contacted the object and were presented to the participants along with the tactile stimuli.
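
The text does not state how the moment of finger contact was located in the sensor signal. As a rough illustration only, the sketch below assumes a simple amplitude threshold on the recorded acceleration samples. The function names and the threshold value are hypothetical; only the 48 kHz sampling frequency comes from the setup described above.

```python
SAMPLING_RATE_HZ = 48_000  # sampling frequency stated in the text


def contact_onset_index(signal, threshold):
    """Return the index of the first sample whose magnitude exceeds `threshold`.

    Returns None when no sample exceeds the threshold.
    The thresholding rule is an assumption, not the authors' method.
    """
    for index, value in enumerate(signal):
        if abs(value) > threshold:
            return index
    return None


def contact_onset_time_s(signal, threshold, fs=SAMPLING_RATE_HZ):
    """Convert the onset index to seconds from the start of the recording."""
    index = contact_onset_index(signal, threshold)
    return None if index is None else index / fs


# Synthetic recording: 0.1 s of silence followed by a short burst.
recording = [0.0] * 4800 + [0.5, -0.4, 0.3]
onset_s = contact_onset_time_s(recording, threshold=0.1)
print(onset_s)
```

The resulting onset time could then serve as the offset that aligns the video frame of first contact with the vibration recording.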

2.2 Conditions

Three natural postures of a person sitting in a chair and communicating were adopted (Fig. 1). For each scene, two conditions of spatial correspondence and non-correspondence were compared.

In Scene 1, the videos of two persons rubbing a sample were placed on the left and right panels of a monitor. Two tactile displays were attached to the left and right wrists of each participant. The tactile stimuli of the persons in the videos on the left and right panels were presented to the left and right wrists, respectively (spatial correspondence), or vice versa (spatial non-correspondence). In Scenes 2 and 3, the videos of two persons were placed on the top and bottom panels. In Scene 2, the participants were asked to rest their arms on the desk, while in Scene 3, they were asked to let their arms hang down. Skin-propagated vibrations on the arm fall below the perceptual detection threshold at distances of more than 80 mm [11]. Therefore, two tactile displays were attached to the right wrist and the right upper arm of each participant, which avoided mechanical interference between them. For both Scenes 2 and 3, the tactile stimuli of the persons on the top and bottom panels were presented to the right upper arm and the right wrist, respectively (spatial correspondence), or vice versa (spatial non-correspondence). In addition, for Scenes 1 and 2, participants were asked to place both arms on the desk, approximately 300 mm apart. During the experiment, the participant wore a black band and a white band beside the tactile displays, and correspondingly, each person in the video wore a black or white band. The band colors of the two persons in the videos were randomly exchanged among trials.
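
The mapping between video panels and tactile presentation sites described above can be summarized as a small lookup. This is only an illustrative encoding: the names `SCENES` and `tactile_site` are hypothetical and do not come from the experimental software.

```python
# Panel positions and body sites per scene, listed in corresponding order
# (first panel <-> first site under the spatial correspondence condition).
SCENES = {
    1: {"panels": ("left", "right"), "sites": ("left wrist", "right wrist")},
    2: {"panels": ("top", "bottom"), "sites": ("right upper arm", "right wrist")},
    3: {"panels": ("top", "bottom"), "sites": ("right upper arm", "right wrist")},
}


def tactile_site(scene, panel, correspondence):
    """Return the body site that receives the vibration of the person shown in `panel`."""
    panels = SCENES[scene]["panels"]
    sites = SCENES[scene]["sites"]
    index = panels.index(panel)
    if not correspondence:
        index = 1 - index  # the two sites are swapped under non-correspondence
    return sites[index]


print(tactile_site(1, "left", correspondence=True))   # left wrist
print(tactile_site(2, "top", correspondence=False))   # right wrist
```

Scenes 2 and 3 share the same mapping; they differ only in arm posture (resting on the desk versus hanging down), which this lookup does not encode.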

2.3 Stimuli

Three different materials were prepared for the samples: wire mesh, glass beads, and wood, as shown in Fig. 3. A preliminary test showed that they were easy to identify from the skin vibrations recorded for each sample. Figure 3 also shows the skin vibration and its power spectral density when each sample was rubbed with a fingertip. The duration of one rubbing stroke was approximately 1 s for all the samples. The samples in the videos were blacked out so that they were visually indistinguishable.

2.4 Procedure and Analysis

Before the test, the intensities of the vibrotactile stimulation of the two tactile displays were adjusted so that each participant rated them as subjectively equal. The participants then practiced for about 2 min, with vibrotactile stimuli presented simultaneously, until they had sufficiently memorized all three samples. In the test, the participants were asked to recognize from the tactile stimuli which sample was explored in each video and to say “Yes” to signal the completion of each trial after identifying the two samples. The identified samples and the time required were recorded as the response.

For each scene, 9 (combinations of samples) \(\times \) 2 (presentation conditions) \(\times \) 2 (number of trials) = 36 trials were conducted for each participant. A different group of eight volunteers participated in the experiment for each scene: 22–24 years old, 6 males and 2 females, 7 right handed and 1 left handed for Scene 1; 22–24 years old, 7 males and 1 female, all right handed for Scene 2; 22–24 years old, 7 males and 1 female, 7 right handed and 1 left handed for Scene 3. Informed consent was obtained from all of them. The experimental evaluation protocol followed the Declaration of Helsinki and was approved by the ethics committee of the Nagoya Institute of Technology. The participants wore headphones playing white noise so that they could not hear the sound of the vibrator.
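
The trial count can be checked by enumerating the design. The sketch below assumes that the 9 sample combinations are the 3 × 3 ordered pairs of samples shown in the two videos, and that the trial order was shuffled; neither detail is stated explicitly in the text, and the function name `build_trials` is hypothetical.

```python
import itertools
import random

SAMPLES = ("wire mesh", "glass beads", "wood")
CONDITIONS = ("correspondence", "non-correspondence")
REPETITIONS = 2


def build_trials(seed=None):
    """Enumerate every trial for one scene and one participant."""
    # 3 x 3 = 9 ordered pairs (assumed meaning of "combination of samples").
    pairs = list(itertools.product(SAMPLES, repeat=2))
    trials = [
        {"sample_a": a, "sample_b": b, "condition": condition, "repetition": rep}
        for (a, b), condition, rep in itertools.product(
            pairs, CONDITIONS, range(REPETITIONS)
        )
    ]
    random.Random(seed).shuffle(trials)  # randomized order is an assumption
    return trials


trials = build_trials(seed=0)
print(len(trials))  # 36
```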

The rate of correct responses and the mean response time were calculated for each participant and comparisons between each condition (spatial correspondence and spatial non-correspondence) were conducted for each scene. A Shapiro-Wilk test was conducted to confirm the assumption of normal distribution, and paired t-tests were conducted. When the normal distribution was denied, nonparametric Wilcoxon signed-rank tests were conducted. The significance level was set to \(\alpha =0.05\).
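
For reference, the paired t statistic used in this comparison can be computed as in the following sketch. The response times are made-up values for illustration only, not data from the experiment, and the function name `paired_t` is hypothetical. The normality check and the p-value are omitted here; in practice they would come from a statistics package (for example, `scipy.stats.shapiro`, `scipy.stats.ttest_rel`, and `scipy.stats.wilcoxon`).

```python
import math
import statistics


def paired_t(x, y):
    """Return the paired t statistic and its degrees of freedom."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    mean_diff = statistics.fmean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    return mean_diff / (sd_diff / math.sqrt(n)), n - 1


# Made-up per-participant mean response times in seconds (eight participants).
rt_correspondence = [4.0, 5.0, 6.0, 5.0, 4.0, 6.0, 5.0, 5.0]
rt_non_correspondence = [5.0, 5.0, 8.0, 6.0, 4.0, 8.0, 6.0, 6.0]

t_value, dof = paired_t(rt_correspondence, rt_non_correspondence)
print(f"t_{dof} = {t_value:.3f}")
```

With eight participants per scene, the statistic has 7 degrees of freedom, which matches the subscript in the reported \(t_{7}\) values.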

3 Results

The left panel of Fig. 4 shows the mean rate of correct responses across all participants and its standard deviation. The right panel of Fig. 4 shows the mean response time and its standard deviation. The results for the two conditions are presented for each scene.

In Scene 1, the paired t-test between the two conditions showed no significant difference in the correct response rate (\(t_{7}=0.158\), \(p=0.88\)). However, the Wilcoxon signed-rank test showed a significant difference in the response time (\(V_{7}=6373\), \(p=0.014\)). This indicates that the response time in the spatial correspondence condition was significantly shorter than that in the spatial non-correspondence condition. In Scene 2, the paired t-test showed no significant difference in the correct response rate between the spatial correspondence and the spatial non-correspondence conditions (\(t_{7}=0.517\), \(p=0.62\)). However, the Wilcoxon signed-rank test showed that the response time was significantly shorter in the spatial non-correspondence condition than in the spatial correspondence condition (\(V_{7}=6540\), \(p=0.0085\)). In Scene 3, similar to the results in Scene 2, the paired t-test showed no significant difference in the correct response rate (\(t_{7}=0.728\), \(p=0.49\)). The Wilcoxon signed-rank test showed that the response time in the spatial non-correspondence condition was significantly shorter than that in the spatial correspondence condition (\(V_{7}=3707\), \(p=0.0025\)).

Fig. 4. Experimental results for each scene with two conditions. Left and right panels present the correct response rate and the response time, respectively. (a) and (b) denote the spatial correspondence and the spatial non-correspondence conditions, respectively. ** and * indicate \(p<0.01\) and \(p<0.05\), respectively.

4 Discussion

In the case where the videos were placed on the left and right panels, the results showed that the response time was improved under the spatial correspondence condition, where the spatial relationship of the tactile stimulation (right and left wrist) corresponded with the positions of the images on the monitor (right and left panels). This is consistent with the left-right spatial stimulus-response compatibility effect [7].

In contrast to the case of the left and right images using the left and right hands, the response time in the spatial non-correspondence condition was significantly shorter in the case where the videos were placed on the top and bottom panels. This implies that the spatial correspondence between the tactile stimulation on the body and the visual information does not always induce intuitive perception. For both Scenes 2 and 3, the tactile stimuli corresponding to the video on the top and bottom panels were presented on the wrist and upper arm, respectively, yielding a significantly faster response. Regarding the spatial compatibility between the vertical and horizontal planes for the stimulus-response task, Vu et al. [12] demonstrated that the far and close locations on the horizontal plane corresponded to the vertical top and bottom. When the videos are presented at the top and bottom, participants might interpret the position of the videos on the screen along the vertical plane as depth, with the bottom video being perceived closer. These positions in depth are then mapped to the proximal and distal positions on the arm, independent of its pose. From the aspect of the body schematic, the wrist is distal and the upper arm is proximal.

In Scene 2, the participants rested the right arm on the desk, so the wrist and the upper arm were in the far and close positions on the horizontal plane, respectively. Thus, following the same spatial compatibility between the vertical and horizontal planes, the wrist and the upper arm were expected to correspond to the top and bottom positions in the video, respectively. The results supported this inference. The results of Scene 3, which showed the same trend as those of Scene 2, also indicate that the body schematic affects spatial compatibility. In Scene 3, the right arm wearing the tactile displays hung down, so the upper arm and the wrist were located above and below in the vertical plane, respectively. Therefore, both the visual and the tactile stimuli were located on the vertical plane, and the results showed that correspondence with the body schematic had a significant effect (the wrist is distal and the upper arm is proximal). The spatial condition of tactile stimulation in Scene 1 also includes correspondence with the body schematic. Thus, the present results indicate that the body schematic is an important factor for the correspondence between the spatial location of others and the tactile presentation position on the body.

In this study, we examined the spatial compatibility between the visual stimuli of other persons and the tactile stimuli on the body for three representative scenes in video communication. The findings are useful for arranging the locations of other people in remote communication and in human-human/robot collaboration. Other viewpoints, such as face-to-face and third-person perspectives, will be investigated. In addition, the size of the video as a perspective cue and various combinations of body presentation positions and arm postures are possible factors [13], and the arm can move during the work. The influence of the body schematic will be investigated under various conditions.

For all scenes, the correct response rates were not significantly different between the conditions. A possible reason is that the vibrations of the three types of samples used in this study were easily identifiable. If we use samples that are difficult to discriminate or fix the time allowed for discrimination, there may be a difference in the correct response rate between conditions. Different materials and a larger number of materials will be tested in future work.

5 Conclusions

This study investigated the spatial compatibility between two visual stimuli and the two corresponding tactile stimuli presented on the arm, with the aim of inducing accurate and fast perception. Although the combinations tested were limited, the experimental results suggested an influence of the body schematic. The findings might be applicable to remote communication and human-human/robot collaboration. In the future, we would like to increase the number of tactile stimuli to be identified and apply this method to interactive communication between multiple persons and to cooperative work using shared tactile perception with other persons and robots.

References

1. Katagiri, T., Tanaka, Y., Sugiura, S., Minamizawa, K., Watanabe, J., Prattichizzo, D.: Operation identification by shared tactile perception based on skin vibration. In: 2020 IEEE International Conference on Robot and Human Interactive Communication (ROMAN), pp. 885–890 (2020)

2. Drury, J.L., Scholtz, J., Yanco, H.A.: Awareness in human-robot interactions. In: 2003 IEEE International Conference on Systems, Man and Cybernetics, Conference Theme-System Security and Assurance, vol. 1, pp. 912–918 (2003)

3. Casalino, A., Messeri, C., Pozzi, M., Zanchettin, A.M., Rocco, P., Prattichizzo, D.: Operator awareness in human-robot collaboration through wearable vibrotactile feedback. IEEE Robot. Autom. Lett. 3(4), 4289–4296 (2018)

4. Pezent, E., Israr, A., Samad, M., Robinson, S., Agarwal, P., Benko, H., Colonnese, N.: Tasbi: Multisensory squeeze and vibrotactile wrist haptics for augmented and virtual reality. In: 2019 IEEE World Haptics Conference (WHC), pp. 1–6 (2019)

5. Connell, L., Lynott, D.: When does perception facilitate or interfere with conceptual processing? The effect of attentional modulation. Front. Psychol. 3, 474 (2012)

6. Wang, D., Member, S., Peng, C., Afzal, N., Li, W., Wu, D., Zhang, Y.: Localization performance of multiple vibrotactile cues on both arms. IEEE Trans. Haptics 11(1), 97–106 (2018)

7. Nishimura, A., Yokosawa, K.: Effects of visual cue and response assignment on spatial stimulus coding in stimulus-response compatibility. Q. J. Exper. Psychol. 65(1), 55–72 (2012)

8. Bensmaia, S., Hollins, M.: Pacinian representations of fine surface texture. Percept. Psychophys. 67(5), 842–854 (2005)

9. Wiertlewski, M., Lozada, J., Pissaloux, E., Hayward, V.: Causality inversion in the reproduction of roughness. In: Kappers, A.M.L., van Erp, J.B.F., Bergmann Tiest, W.M., van der Helm, F.C.T. (eds.) EuroHaptics 2010. LNCS, vol. 6192, pp. 17–24. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-14075-4_3

10. Fukuda, T., Tanaka, Y.: Skin vibration-based tactile tele-sharing. In: Kajimoto, H., Lee, D., Kim, S.-Y., Konyo, M., Kyung, K.-U. (eds.) AsiaHaptics 2018. LNEE, vol. 535, pp. 82–84. Springer, Singapore (2019). https://doi.org/10.1007/978-981-13-3194-7_17

11. Shah, A.V., Casadio, M., Scheidt, A.R., Mrotek, A.L.: Vibration propagation on the skin of the arm. Appl. Sci. 9(20), 4329 (2019)

12. Vu, K.P.L., Proctor, R.W., Pick, D.F.: Vertical versus horizontal spatial compatibility: Right-left prevalence with bimanual responses. Psychol. Res. 64(1), 25–40 (2000)

13. Nicoletti, R., Umiltà, C.: Right-left prevalence in spatial compatibility. Percept. Psychophys. 35, 333–343 (1984)

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this paper

Cite this paper

Uemura, K., Yukawa, H., Kitamichi, K., Shibasaki, M., Minamizawa, K., Tanaka, Y. (2022). Spatial Compatibility of Visual and Tactile Stimulation in Shared Haptic Perception. In: Seifi, H., et al. Haptics: Science, Technology, Applications. EuroHaptics 2022. Lecture Notes in Computer Science, vol 13235. Springer, Cham. https://doi.org/10.1007/978-3-031-06249-0_10

DOI: https://doi.org/10.1007/978-3-031-06249-0_10

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-06248-3

Online ISBN: 978-3-031-06249-0

eBook Packages: Computer Science, Computer Science (R0)