Abstract

The energy industry in Norway has a long tradition of using information technology to enable integrated operations, namely, remote collaboration between personnel at offshore installations and experts at onshore office environments. Currently, the industry is undergoing a digital transformation in which remote operations of unmanned offshore assets are the emerging standard. To ensure trustworthy and reliable operations, offshore remote sensing capabilities must be established through not only technical means but also a broader transformation involving new competence, work processes, and governance principles. In this chapter, we reconstruct this transformation and ask: What are the emerging capabilities that develop around the remote operation digital infrastructure? We unpack how the new digital infrastructure is a continuation of the practices and systems that have been established over time. We use historical reconstruction with vignettes from the development of a new generation of remotely operated offshore installations in oil and gas and wind facilities to describe the ongoing digital transformation as a process of infrastructuring in which the infrastructure gets increasingly entangled with internal and external systems, stakeholders, and agendas. In doing so, we shed light on how the established local and situated solutions evolve and are compensated for through the technical and organizational principles of the emerging information infrastructure.

1 Introduction

Offshore operations in the energy industry are undergoing a digital transformation. When most major oil companies and globally operating service companies addressed their future way of doing business in the mid-2000s, they described it as oil exploration and operation enabled by information and communications technology. So-called integrated operations (IO) then relied on instrumented and automated oil and gas fields that integrated people and technology to remotely monitor, model, and control all processes in a safe and environmentally friendly way to maximize their value [1]. In Norway, the Norwegian Oil and Gas Association (Norsk olje og gass) defined IO as a bundling of a company’s resources to configure sustainable capabilities: integration of people across geographical, organizational, and disciplinary boundaries, integration of processes in terms of business integration and vendor collaboration, and, finally, integration in relation to technology: data, sensors, protocols, fiber optics, standardization, and others [2].

IO encompassed processes, methods, improved information and communications technology (ICT), and high-bandwidth fiber-optic networks that allowed real-time data sharing between remote locations. The effect was that experts from different disciplines could collaborate more closely, which facilitated more rapid response and decision-making [1]. In Norway, IO and the knowledge associated with this development were created in the borderland between universities, companies, national legislative/governing bodies, and various global actors. The collaboration technologies that came with IO challenged traditional geographical, disciplinary, and organizational boundaries in the oil and gas industry.

The industry thus moved over many years from isolated islands of operations to more open ecosystems. The Norwegian Oil and Gas Association [2] stressed the opening of existing boundaries when it argued that IO would be implemented over two generations with increasing integration across geography, disciplines, and organizational boundaries. The first-generation (G1) processes would integrate processes and people onshore and offshore using ICT solutions and facilities that improve onshore’s ability to support offshore operationally. The second-generation (G2) processes would help operators utilize vendors’ core competencies and services more efficiently [2]. As oil companies are now entering G2, we observe that by utilizing digital services and vendor products, operators are increasingly able to update reservoir models, update their drilling targets and well trajectories as wells are drilled, manage well completions remotely, and optimize production from reservoir to export.

In this chapter, we describe the transition from traditional, bounded operation to first G1 (IO) and then G2 (remote operations) as an ongoing process of infrastructuring [3]. We ask: What are the emerging technical and organizational capabilities that develop around the remote operation digital infrastructure? We identify and discuss the increasing degree of entanglement of the infrastructuring process over time (cf. [4, 5]). In doing so, we unpack how the new digital infrastructure is a continuation of the established practices and systems that came with IO. The transition toward trustworthy and reliable remote operations depends on establishing remote sensing capabilities that encompass not only new technologies but a broader digital transformation involving new competence, work processes, and governance principles.

This chapter is structured as follows. We start by defining integrated and remote operations as an infrastructuring process. We then describe this development, from the late 1970s up to the present, as three distinct phases and present what happened over these decades to challenge the notion of digitalization as a transformation process. To cover this period with distinct phases, we must paint with a broad brush. We have focused on operation and maintenance, and we are not able to portray all the features that characterize this development in other interesting oil and gas domains like drilling, production, and reservoir management. Still, we hope the reader will appreciate our attempt to draw some long lines related to the infrastructuring of ICT in the oil and gas business in Norway.

2 From Integrated to Remote Operations as Infrastructuring

The movement from integrated to remote operations is still ongoing. We thus look at this phenomenon as an example of the evolution of emergent infrastructures over time [4]. An information infrastructure perspective on IO and remote operations treats both as open-ended sociotechnical systems ([6]: 576, emphasis in original):

As a working definition, [information infrastructures] are characterised by openness to number and types of users (no fixed notion of ‘user’), interconnections of numerous modules/systems (i.e. multiplicity of purposes, agendas, strategies), dynamically evolving portfolios of (an ecosystem of) systems and shaped by an installed base of existing systems and practices (thus restricting the scope of design, as traditionally conceived). [Information infrastructures] are also typically stretched across space and time: they are shaped and used across many different locales and endure over long periods (decades rather than years).

Collaborative practices are achieved through collections of—rather than singular—artifacts from this perspective. Infrastructuring, the active process of developing information infrastructures, allows us to better capture the efforts required to integrate human and material components, the continuous work required to maintain it [3], as well as the elements of continuity in such complex systems [7]. As an analytical tool, infrastructuring overcomes the blurred boundaries between phases of design, implementation, use, and maintenance in infrastructure evolution [8] and thus highlights the ongoing, provisional, and contingent work that goes into working infrastructures of IO and remote operations. Sardo et al. [5] demonstrate how infrastructuring work during normal periods aimed at maintaining stability in the oil and gas branch is a source of valuable innovation and change at the intersection of different interlocked infrastructures. Governing stability and change in such settings is thus an endeavor orchestrated by actor constellations—or action nodes—that control the infrastructural interlinks and keep the industry stable while innovating it (ibid). In the context of IO, Parmiggiani et al. [4] illustrate this process in the case of real-time subsea environmental monitoring during offshore operations. The authors show how this infrastructuring process unfolded through increasing degrees of entanglement of the emergent infrastructure with different stakeholders, agendas, and other infrastructures. One fundamental instance of such an entanglement is represented by onshore support centers which enable companies to move work tasks from offshore platforms to land and which were central in the transformation from bounded to integrated operations, as we will show later [9, 10]. 
Hepsø and Monteiro [9] conceptualize these as centers of calculation (see [11]), namely, venues in which knowledge production builds upon the accumulation of resources through circulatory movements to other places over time. To enable such control centers, several artifacts and practices have become entangled: fiber-optic networks to shore, proper standards for communication and sharing of data, collaboration tools, and new work practices and competence. All these elements were necessary to enable real-time data and information to move from the local setting on an oil installation to a central location where experts could work with the data and initiate the needed action. This sociotechnical bundling and development of capabilities made it possible for local, bounded, and distinct readings and data to be transferred to any place in a larger ecosystem.

Another example is the aforementioned case of real-time subsea environmental monitoring during offshore operations. This is a compelling illustration of the entanglement of remote sensing capabilities for trustworthy and reliable operations. Monteiro and Parmiggiani [12] discuss how the objects of interest in remote work are digital representations that are unhinged from their physical counterpart (see also [13]). Technologies such as the Internet of Things (IoT) have been the vehicle of this decoupling, as they can synthesize human sensing abilities to an increasingly effective degree [12]. Such remote sensing is not simply a matter of new technologies but depends on establishing sociotechnical capabilities to make sense of the digital representations as well as gradually tying these representations to organizational and political concerns (ibid).

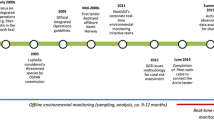

We are influenced by this phased development and describe a similar journey from integrated to remote operations through three phases: bounded, integrated, and remote operations. These phases bear similarities to the three generations developed by the Norwegian Oil and Gas Association [2], but they are not identical, since at the time (2005) the generations were considered scenarios. We present the technical, organizational, competence, and governance capabilities that were developed in this process. Understanding how these infrastructuring capabilities developed over time is crucial both to understanding the transition from integrated to remote operations and to seeing that remote operations are not a replacement for, but a continuation of, IO.

3 Three Phases of Development

3.1 Generation 0: Bounded Operations (1980–2000)

In the early part of this period, the oil industry in Norway is under development, and it matures substantially. It is important to develop competence and prove to be a good and reliable producer of oil and gas. Having been heavily dependent on US competence in the early days, the industry develops a unique Norwegian style in petroleum and work environment legislation, working culture, petroleum engineering, and design. We call this the phase of bounded operations.

The Norwegian Oil and Gas Association ([2]: 9–12) described this phase as follows: “The day to day control and optimisation process normally is managed by one or two operators located in the central control room (CCR) offshore. From this room the operators optimise wells and process trains in accordance with the production & injection plan, monitor critical systems and equipment and handle alarms, emergencies and shutdowns. In some cases, they manage dozens of wells and facilities that daily produce several hundred thousand barrels of oil equivalents. The decisions they make to optimise production are most often based on their own judgment and knowledge of the operation at hand. The CCR operators are supported by field operators that they guide through VHF and UHF radios. The field operators manually measure readings of critical instruments and valves, regulate manual controls, carry out first line, preventive maintenance, prepare and start up equipment after shutdowns, manage work orders, plan maintenance work and participate in the safety team. Support from onshore functions is limited and normally only available 5 days a week from 8 a.m. to 4 p.m., meaning that decisions of key importance to production as well as safety are made without support from the engineers that have developed the plans which the operators are implementing.”

The ICT infrastructure is thus bounded, with poor integration of IT systems, high cost for data storage, and poor capabilities for data transfer between onshore and offshore (low-bandwidth satellite). The main collaboration tools are e-mail, telex/fax, and telephone, with hardly any real-time collaboration. IT is an expense, and IT expenditure must be kept as low as possible since it is difficult to document the business value. Most installations are fully manned (in the hundreds) with the functions needed both to plan and to execute the work offshore, and the level of instrumentation and sensors in the facility is low or non-existent. Human operators in the field compensate for this lack of instrumentation and readings in order to maintain an overview of the process and safety conditions in the offshore facility. The operators conduct a “check-and-report” task in the plant, where they are on regular rounds using their senses to look for aberrancies, in the form of leakages, strange sounds, etc. If there is a situation out in the facility, the control room sends out an operator to verify it. The crew knows the facility inside out and tends to spend its time on the same installation, often on the same shift with colleagues they know and trust, over many years. The offshore world is stable and divided into siloed disciplines.

The Norwegian Oil and Gas Association writes: “…most operative decisions are made offshore, in isolation or with limited support from experts onshore. Plans are relatively rigid and primarily changed at fixed intervals. The organisational structure is traditional, meaning that personnel onshore and offshore belong to several different units with different goals and Key Performance Indicators (KPIs). Plans are made, and problems solved in a fragmented manner. Basic as well as advanced education aims to develop disciplinary specialists, not professionals with a good understanding of value chains and work processes. IT systems are specialised, and it is difficult and time-consuming to gather the data necessary to optimise processes” ([2]: 09).

There is no real-time support organization onshore that can provide immediate help offshore for diagnosis and troubleshooting, and offshore and onshore seem very remote from each other. In the late 1980s and early 1990s, the first concepts for condition-based monitoring of equipment are implemented; vibration analysis is one example, and sand management is another [14, 15]. Reliability analyses like bathtub curves and mean time between failures (MTBF) exist as concepts and theories. The understanding of plant degradation mechanisms improves, as do the reliability and availability of this kind of equipment. These methods and models exist, but only in disciplinary islands; even when they are incorporated in software, it is difficult for such models to travel across the offshore-onshore boundary.

3.2 Generation 1: Integrated Operations (2000–2015)

After the turn of the millennium, the Norwegian Oil and Gas Association, the Norwegian Petroleum Directorate [16], and the Petroleum Safety Authority Norway (PSA) increasingly saw IO as an opportunity for Norwegian society [17, 18, 19, 20], a potential to brand new integrated technologies and work processes in a sophisticated Norwegian style based on the Norwegian tradition of democratic industry collaboration. The initial growth period was over, the industry was maturing, and the focus was on continuing the growth in a situation where the expected production output of the business would drop in the years to come (due to fewer expected new discoveries). In these years, IO became the “Zeitgeist” of the industry, that is, the new management and regulatory mindset.

It is hard to give an exact year for the start of the era of IO in Norway. Around the end of the twentieth century, many new elements aligned. First came the fiber-optic infrastructure between onshore and offshore, the increasing integration between telecom and information technology that led to new types of applications like videoconferencing and software collaboration tools, and enterprise resource planning systems like SAP. Also important was the ability to store and then access large amounts of historical data and information at low cost. At the same time, the first onshore support centers were set up. There was also a concurrent development in sensor technology that made it easier to obtain readings of petroleum-related phenomena in the reservoir, the well, or the process facility. Finally, standardization of ICT tools was linked to new industry data formats and standards, like the XML standards for drilling data (WITSML), production data (PRODML), and process data (OPC). Norwegian petroleum authorities took a role in the development of standards for daily and monthly reporting systems between the authorities and the oil companies: drilling reports, production reports, resource reporting, and environmental reporting.

The Internet was now becoming a more mature platform for new services. Methods that had existed as standalone tools or concepts could now be incorporated into a real-time infrastructure. For example, concepts for condition-based equipment monitoring were implemented and integrated with real-time data. Similar implementations were now possible to improve sand detection and management [14]. Methods and models could now be verified and improved with real-time data. This new situation led to an increase in new models and software incorporating phenomena that had not been possible to represent in the past. A compelling example of this was the integration of real-time subsea environmental monitoring modules with offshore facilities starting in the mid-2000s. These new modules made it possible to gradually shift from ex-post mitigation of environmental damage to preventive approaches that use real-time data to halt possible future emissions [21].

As these cases illustrate, the models developed as part of these new approaches were not just integrated with real-time data and moved out of their local and bounded settings; they also had to become accessible in onshore control centers. Integrating these new capabilities into the existing operations and maintenance work processes took time, because appropriate work processes and data governance mechanisms had to be developed. As a result, the work processes and operational model of the business changed substantially in this period. The Norwegian Oil and Gas Association described this in the following way: “The primary control of the process will still be with the operators in the CCR offshore. The engineers in the onshore support centre will have access to real-time information about the operations offshore and the competence and tools necessary to monitor and control the process, simulate the process and advice the CCR of how to get most out of the plant. The combined problem-solving capability will be improved since specialists will be able to give proactive advice regarding optimal operation and can support the offshore operators actively when problems arise. The operators in the CCR will still be supported by field operators. They will be equipped with first generation wireless mobile computers and video and audio equipment that allow them to access information online concerning the controls and equipment they are dealing with and discuss problems and solutions with onshore experts in real time” ([2]: 16).

The operators still conduct a “check-and-report” task in the plant, where they are on regular rounds using their senses to look for aberrancies, in the form of leakages, strange sounds, etc. However, the CCR now has additional tools, like CCTV and more instrumented systems with sensors that can track aberrancies and operational perturbations. This means that the situation can more often be confirmed without sending out an operator. Onshore collaboration centers start to monitor important pieces of rotating equipment like pumps and gas turbines. The engineers working with rotating machinery are moved onshore.

The first onshore operation centers came into being around the turn of the millennium. These centers of calculation [11] were an important precondition for understanding the unbounded space that opened with the development of IO and later with remote operations. The technological capabilities were also realized in so-called collaboration rooms that facilitated cooperation through videoconferencing, sharing of large data sets, and remote control and monitoring [10, 22]. With IO, parts of the company buildings onshore were redeveloped into such collaboration facilities. The office facilities of older offshore installations were modified to house video collaboration spaces, and all new installations were developed with offshore collaboration facilities (see [23, 24]). These new spaces and the coming of real-time data opened up bounded offshore sites. As we will show later, this process has expanded with remote operations, where boundaries are even more obscure and where all control functions can ultimately be operated from anywhere, given the proper barriers and cybersecurity mitigation. Functions and people that had been offshore were now moved onshore, with more centrally organized planning of activities and execution of scheduled activities offshore. A mechanical or automation engineer who in the past followed up one asset now followed up several assets, with a growing number of new data streams and tools available for operational support.

The first years were dominated by strong technology optimism [25, 26]. In an official Norwegian Report to the Parliament (Storting), it is explicitly stated that most initiatives related to IO have addressed technology development and technology implementation [17]. An increased focus on issues related to safety, new work processes, and integration of information in the whole oil and gas value chain is heralded: “[Integrated operations] means that established functions and work tasks can change and be moved between those that do the different tasks and where they are executed. These changes must be done in a reasonable way with employee involvement” ([17]: 35 translated from Norwegian). Around 2005, there is a considerable shift in relation to the impact of technology associated with IO. Instead of focusing on personnel flexibility, involvement in the change process is stressed.

The active and positive participation of all involved parties was from now on increasingly seen as instrumental to succeeding with IO [16, 20]. The change processes associated with the passage to new operational concepts were addressed through an increased focus on human and organizational factors, change management, employee participation, and measures to improve HSE and organizational culture [20].

In conjunction with this came a maturation of the management mindset around IO and an increasing awareness of its value potential [19]. Future strategic possibilities were described [20], as were the need for improved skills [27] and the consequences of implementing IO [28]. Those that participated in this infrastructuring discourse were not only the oil companies that originally had articulated the claims of IO but also unions; the oil industry association; the authorities/regulator; universities and research institutions like SINTEF and IFE; contractor companies like Aker Kværner and FMC; and software and sensor development companies. IO thus became an arena where a multitude of actors met, often with different agendas and objectives. The number of workshops and conferences that attracted industrial companies and research and government institutions increased rapidly in the same period.

In this period, petroleum engineering and design also underwent substantial changes. A change in installation type had preceded this from the mid-1990s onward. Giant concrete installations were superseded by new floating/anchored concepts, and subsea installations became the new standard, partly due to the move into deeper waters on the Norwegian continental shelf. Since most oil companies started to move traditional functions onshore, the need for office space and offshore accommodation was reduced. The manning levels of these new installations moved from the hundreds to below 50. Offshore installations were now designed and built with IO in mind. One example is Statoil’s (now Equinor) Kristin platform, where the disciplinary coffee areas had been integrated into one area, where the whole offshore office environment was an open space area, and where the platform management sat in a collaboration room with a live video feed to the onshore collaboration center/room [23, 24]. Other examples were the Ormen Lange and Snøhvit gas fields, which were built as remotely operated subsea systems with a 180–200 km pipeline to the beach, where the gas production was monitored from the onshore control room. However, except for Snøhvit, Ormen Lange, and a few other simple installations, IO lost remote operation along the way. The coming of the Åsgard subsea booster station in 2015 was a hallmark in remote operations. The decision was taken to control the booster station from the local control room at Åsgard. The booster station, with its subsea gas turbines, was the size of a soccer field and by then the most complicated subsea factory ever built.

3.3 Generation 2: Remote Operations (2016–)

It took many years to mature the remote operation mindset. We have argued earlier that there was an overoptimistic belief in IO at the turn of the millennium. Remote control was heralded with great technological enthusiasm but was later dropped from the everyday understanding of IO, which centered on collaboration across boundaries rather than on remote operations. This notion of IO took precedence. Around 2010, more people who had previously worked with IO started to work with autonomy questions in oil and gas: “Autonomy becomes relevant when human risk is too high, or humans are unfit or not cost-effective decision makers. Such situations are typically characterised by the need to collect and assess data and make decisions in fractions of a second or dealing with latency imposed by distance. In these situations, autonomous technology enables humans to set overall goals and delegate operational decision-making and execution of decisions to autonomous systems” ([29]: 9).

It took time before autonomy and artificial intelligence began to gain momentum, and we come back to this. When Rosendahl and Hepsø [1] co-edited the book on Integrated Operations in 2012–2013, remote control had not proven to be as important as heralded. There were many reasons for this: mistrust in the reliability of the remote operations technology, lack of good operational models and concepts for remote operations, fear of loss of safety, loss of jobs, and others. Still, the sociotechnical complexity of the operational and technical aspects of remote operations was the most important reason. Facilities had to be built differently, with fewer maintenance hours so that maintenance campaigns were possible, moving to either an unmanned or a periodically manned model [30]. There were challenges with the existing project development/engineering methods; they were not configured to build larger unmanned, remotely operated installations, since the mindset was tuned to existing conceptions and practices. Over time, this changed. One of these changes was the recognition that remote operation had to leverage some important lessons from IO, which had a strong focus on new ways of working enabled by new ICT [30]. Edwards et al. describe the road to low-manning, remote operation as a configuration of the complexity of the installation systems, the instrumentation needed for remote control, and a low number of maintenance hours. Together, these form a path to an operational model based on remote operations.

Over time, the focus changed from the technical concept of remote control, which included the technical capabilities that need to be in place to make remote control possible, to remote operations, which is a sociotechnical configuration. Here the operational concept is the key, and the technical, organizational, and competence capabilities are included in the concept. The implementation of IO on the Norwegian continental shelf was relatively successful, although severe challenges were faced regarding the development of new work practices and the management of change [1].

Still, much of the implementation of onshore-offshore collaboration became natural with better collaboration tools, videoconferencing, and integrated information infrastructures making IO invisible, a key feature of infrastructures. IO is now taken for granted.

The new understanding of remote operations developing in this phase is linked to the coming of digitalization, which becomes the new “Zeitgeist” and a key feature of the management mindset. Emerging paradigms are now the Internet of Things (IoT) and Industry 4.0 approaches to automation and manufacturing. As Gartner defined it [31], “digitalization is the use of digital technologies to change a business model and provide new revenue and value-producing opportunities; it is the process of moving to a digital business”, as opposed to digitization, namely, the conversion of analogue information into a digital format.

The convergence of ICT tools and infrastructure that started with IO picked up speed. One visible development is the change from bounded, proprietary, and expensive videoconferencing rooms and solutions to integrated and standard desktop video on each employee’s PC. Coupled to this was the coming of social media used internally on company intranets. Cheap storage and transfer of data became commonplace in the era of IO, but now oil companies and vendors start developing digital platforms with APIs to ease the communication and sharing of data across boundaries. This is addressed as a big data challenge. Traditional oil and gas vendors and software companies develop their own digital platforms and services to gain new market shares. Digital twins and analytics services are built on top of existing services; for example, a condition-based monitoring service is built on top of the equipment delivery. The emerging big data domain with machine learning and artificial intelligence makes these new services possible. Cloud-based infrastructures are important for easier access to and sharing of data, and internal/external cloud services begin to take over both for administrative systems and for more business-critical software tools. This emerging cloud infrastructure with storage and communication solutions, VPN, and remote access to systems proved critical during the COVID-19 pandemic. From March 2020, most companies in Norway started working remotely, keeping only business-critical operations and oil and gas installations running as usual, while all support was conducted from the homes of the employees. It surprised many how well this virtual cloud-based operational model with MS Teams worked; it kept most of the businesses going.

As of 2021, a larger ecosystem exists around a remotely operated asset, and it can consist of different types of centralized or unbounded centers. The IOGP recommended practice for remote operation [32] describes the following sociotechnical configurations in this infrastructure. The first is the remote collaborative center, which is the collaboration center we recognize from IO. Such centers can sometimes be distributed over several locations (i.e., multiple interconnected collaborative centers). Typically, they have fewer access controls than a control room; however, this depends on operational or security risks. Over time, these collaborative centers have taken over remote monitoring and diagnostics of production, operations, and equipment conditions, using data generated at the production site and exported outside the control room. The second configuration, remote at vendor premises, also came with IO and refers to any remote location that belongs to a vendor (or subcontractor) and lies within their private premises. Contracts define the physical access and security restrictions at the vendor premises. Connection across boundaries to the operators usually involves communication links via public networks. This sociotechnical configuration normally performs monitoring but can also conduct remote operation of equipment, given the right access and cyber-physical safety. Remote access from anywhere is the final configuration defined by IOGP and refers to any external location, in a private or public area (e.g., a home, hotel, or airport), where people sit distributed outside company or vendor premises and can access control functions.

In this new situation, the control room can exist in various sociotechnical realizations based on instrumentation level, installation reliability, maintenance load, manning, and operational principles. It can also operate several installations from the same location regardless of geography. IOGP argues that this location can be far away from the actual production site but is within the premises managed by the company. The primary purpose is to remotely control and operate the production site(s), but it may also include dedicated remote engineering or maintenance rooms. Since these connections allow interaction with safety-critical equipment, physical access controls are typically strictly enforced. Remote control refers to remote actions such as control commands (adjusting plant or equipment operational parameters, set point changes, alarm acknowledgement, manual start/stop commands, etc.) and operations monitoring on detailed graphical displays (e.g., process conditions, equipment status, alarms, errors). Safety functions can also be performed from the remote control room (such as executing manual shutdowns, operating critical action panels, etc.) [32]. Remote control requires read and write access to the system to enable operator interaction with the process and equipment on the production site. The preventive controls and recovery preparedness principles and measures differ depending on whether the site is manned or unmanned.
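The distinction between monitoring (read access) and remote control (read and write access) across the configurations above can be illustrated with a minimal sketch. The names `Access`, `Location`, `Session`, and `BASELINE`, and the baseline rights assigned to each location, are assumptions made for illustration; they are not taken from the IOGP recommended practice, and actual rights depend on contracts and risk assessments.

```python
from dataclasses import dataclass
from enum import Enum, Flag, auto


class Access(Flag):
    """Access rights a remote location can hold toward the production site."""
    NONE = 0
    READ = auto()   # view exported data, displays, alarms
    WRITE = auto()  # send control commands, change set points


class Location(Enum):
    """The remote configurations named in the text."""
    REMOTE_CONTROL_ROOM = "remote control room"
    COLLABORATIVE_CENTER = "remote collaborative center"
    VENDOR_PREMISES = "remote at vendor premises"
    ANYWHERE = "remote access from anywhere"


# Illustrative baseline only (an assumption, not an IOGP rule):
# only the remote control room holds write access by default.
BASELINE = {
    Location.REMOTE_CONTROL_ROOM: Access.READ | Access.WRITE,
    Location.COLLABORATIVE_CENTER: Access.READ,
    Location.VENDOR_PREMISES: Access.READ,
    Location.ANYWHERE: Access.READ,
}


@dataclass(frozen=True)
class Session:
    location: Location
    write_granted: bool = False  # hypothetical explicit grant, e.g., by contract

    def access(self) -> Access:
        rights = BASELINE[self.location]
        if self.write_granted:
            rights |= Access.WRITE
        return rights

    def can_monitor(self) -> bool:
        return Access.READ in self.access()

    def can_control(self) -> bool:
        # Remote control needs both read and write access.
        needed = Access.READ | Access.WRITE
        return (self.access() & needed) == needed
```

In this sketch, a session at vendor premises can monitor by default and can control only when `write_granted` is set, which mirrors the statement that vendors normally perform monitoring but can conduct remote operation given the right access.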

The main control room is located outside the production site boundary and in a safe zone. A remotely operated but manned installation can have a local offshore control room, but during normal operations, the command and control of the installation are conducted from an onshore control room. Examples of this on the Norwegian continental shelf are the Martin Linge (Equinor) and Ivar Aasen (AkerBP) installations. Such an installation typically has a lean organization close to the emergency preparedness role requirements, and the crew are always on the installation in shift rotation. Compared to traditional oil and gas platforms described earlier, the biggest difference is that the onshore control room is always in control.

The concept of “check-and-report” is changing. If the installation is manned, the crew still conduct traditional “check-and-report” tasks in the plant. However, when the installations are manned only part of the time, for example, during maintenance campaigns in 2 out of every 6 weeks, the control room depends on CCTV or on the instrumentation of the offshore systems and equipment to follow up aberrancies and situations offshore. Moving sensor platforms in the shape of drones and robots are now being introduced. On the subsea systems, resident subsea drones are deployed in fit-for-purpose garages with charging stations and used for inspection and check-and-report tasks. Crawling and flying drones are being tested on the topside installations to perform the same type of tasks. The remote sensing capabilities (CCTV coverage, remote actuation of equipment, and sensor systems) are more advanced since the installation is operated most of the time without any crew. The visit intervals depend on the maintenance load and instrumentation level of the installation and are often scheduled as maintenance campaigns. Ad hoc visits by helicopter can happen as a last resort. Maintenance campaigns typically range from manning for 2 out of 6 weeks to as little as one or two short scheduled campaigns a year. A new installation type, subsea-on-a-stick, has emerged [33]. The idea of this design is to keep the simplicity and high reliability of subsea systems and make them more accessible on an installation above water.

Competence requirements also change. Even though situated offshore competence is important, it is increasingly difficult to develop this competence on installations where there are no humans on board, or where they are on board only for shorter periods of time. At the same time, the ability to read and diagnose offshore aberrancies and abstract this into digital knowledge becomes more important. More of the input in a remote operation situation is gathered indirectly through sensor readings, calculated values, models, and simulations. Using models for prediction and analytics had started with IO, where predictive and analytical capabilities were implemented and integrated with real-time data because of the new ICT infrastructure that developed. Methods and models could be verified and improved with real-time data, and models and software could travel across boundaries. Competence for understanding, working with, and governing data grew in this period. Now, machine learning and AI are increasingly used to improve the predictive analytics of models. Models were integrated with real-time data and moved out of their local and bounded settings already with IO. Now, however, new and larger centralized centers can streamline ICT tool development, work processes, data governance, and competence development to scale up the operation and maintenance services. This development coincides with a more centralized organization model where support and competence centers provide services to leanly organized local assets. The work processes and data curation/governance are better integrated into the existing operations and maintenance work processes, thanks to better integrated ICT tools and a more mature digital cultural practice.

3.4 Way Forward (2021–): The Boundaries of Infrastructuring

In 2021, is everything becoming boundaryless with digitalization, and have all boundaries gone? Not quite. The final bounded frontier is the industrial automation and control systems (IACS), the main control and automation systems of the control room. IACS include the hardware and software that can affect or influence the safe, secure, and reliable operation of an oil and gas facility. This bounded area is called the operational technology (OT) domain. OT has existed as a digitally bounded island since the 1970s. Most new facilities include connections to enterprise networks to enable data export for plant monitoring and for other administrative systems, such as collaboration systems and portals, that are more open to the external world. Still, these domains are strictly separated. The latter is the administrative domain defined as IT, and the colloquial understanding of digitalization has until recently been mostly connected to the “IT world.” Typically, the separation between OT and IT is implemented using firewalls that create a zone and conduit model to achieve appropriate network segmentation and restrict any direct connections between the OT and IT systems. An intermediate network, or demilitarized zone (DMZ), between the OT and IT networks is typically used to prevent direct connections between the enterprise network and control system networks. This makes it possible for office network-based systems and users to view data from control systems in a secure manner. The DMZ acts as a protection gateway between the safe zone and the enterprise network.
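The zone and conduit idea can be made concrete with a small sketch that checks a list of allowed connections for direct OT-to-IT links. The zone names, the conduit list, and the helper functions `direct_ot_it_conduits` and `can_reach` are hypothetical and chosen only for illustration; real segmentation is site-specific and enforced in firewall rules.

```python
from collections import deque

# Hypothetical zones, each assigned to a domain (assumption for illustration).
ZONE_DOMAIN = {
    "control_system": "OT",
    "dmz": "DMZ",
    "enterprise": "IT",
}

# Each conduit is an allowed (source zone, destination zone) connection.
CONDUITS = [
    ("control_system", "dmz"),  # OT exports data to the DMZ
    ("enterprise", "dmz"),      # office users read data from the DMZ
]


def direct_ot_it_conduits(conduits, zone_domain):
    """Return conduits that connect an OT zone straight to an IT zone."""
    return [
        (src, dst)
        for src, dst in conduits
        if {zone_domain[src], zone_domain[dst]} == {"OT", "IT"}
    ]


def can_reach(conduits, start, goal):
    """Breadth-first search along conduit direction from start to goal."""
    queue, seen = deque([start]), {start}
    while queue:
        zone = queue.popleft()
        if zone == goal:
            return True
        for src, dst in conduits:
            if src == zone and dst not in seen:
                seen.add(dst)
                queue.append(dst)
    return False
```

With the conduits listed here, `direct_ot_it_conduits(CONDUITS, ZONE_DOMAIN)` returns an empty list, and `can_reach(CONDUITS, "enterprise", "control_system")` is false: office systems can view exported data in the DMZ without any path into the control system network.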

With the increased reliance on digital technologies, it is important to ensure that the design of the systems addresses the risks from safety hazards as well as cybersecurity threats. The dominant enterprise reference architecture for OT is the Purdue Enterprise Reference Architecture (commonly known as the Purdue model) for control systems and network segregation. It shows the interconnections and interdependencies of all the main components of a typical OT architecture. When this architecture was developed in the 1990s, the big concern was keeping computing and networks deterministic so that they would not fault. Network segmentation was a means of keeping traffic in a control network at deterministic levels. Purdue set the standard for why and how control system networks need to be segmented and what expectations each layer has for responsiveness. Once the Purdue model became the industry standard, many companies started using these network models to facilitate new I/O for safety systems, and it has over time become a standard for addressing ICT security as well.

Purdue provides a model for enterprise control, which end users, integrators, and vendors can share in integrating applications at key layers in the enterprise. Still, as we have shown in this chapter, the industry has moved from a stable bounded order informed by the Purdue model to the situation described below, where the network architecture is opening, providing new possibilities and configurations but also new risks. This movement parallels the one from bounded operations to integrated operations and on to a more open-ended future with remote operations, which introduces new possibilities but also new challenges.

The first trend is the movement from confined, bounded applications using horizontal integration to increased vertical integration between OT and IT through more and more hybrid types of integration. While the traditional OT systems were built from scratch using vendor-specific proprietary standards and required almost esoteric, specialized proprietary OT competence, the new systems comply to a higher degree with international standards, like OPC UA, and are gaining more off-the-shelf qualities. The development of the applications becomes standardized and uses standards often associated with administrative IT systems, like Windows servers and TCP/IP. Until now, OT has been characterized by good connectivity within its boundaries and poor connectivity to other systems. With the emerging Internet of Things technologies, we are entering a situation where every piece of equipment and machinery can be connected to every other. In the old bounded days, risks could be decomposed and made controllable either by technology and boundary management or by competence (few people knew the OT systems well enough to hack them). When OT systems use standard protocols and technologies that have known weaknesses, new risks emerge in a potentially large ecosystem. New types of connectivity also create new vulnerabilities (USB sticks, phishing, laptops, and smartphones).

4 Discussion: Balancing Entanglement and Modularization

In our presentation of the infrastructuring process that characterizes the movement from integrated to remote operations and onward, we have stressed that it is due to many concurrent capabilities that developed in parallel and became bundled. It would be easy to focus solely on telecommunication and ICT that developed over this period. However, we have demonstrated that the development was more the consequence of a mobilization of many organizational and technical capabilities that were configured in innovative ways over time by entangling different infrastructures, stakeholders, and interests.

We have shown that this process was a distributed and collective one. First, a diverse set of stakeholders influenced the infrastructuring process, but none were in complete control (cf. [5]). In addition, the “Zeitgeist,” that is, the management mindset, and the operational models also shifted over time. The oil industry became increasingly centralized along the way, and new organizational institutions and control/collaboration centers developed [9]. The nature of work and the human operator’s ability to assess physical phenomena around “check-and-report” also evolved, thanks to the improvement of remote sensing capabilities. The change in competence was substantial, and so was the change in the methods used to address risk and understand the emerging situation. Over the years, we also saw a substantial development of petroleum engineering and a change in design.

In Table 1, we outline the capabilities that concurred in each phase of this infrastructuring process. It is important to observe that while each phase succeeds in solving some problems that affected the previous one, it also unearths new, previously unexplored questions (cf. [21]).

One analytical implication of our study is a reframing of the role of control centers. Much literature has given prominent importance to control room-based work and technology configurations to perform remote monitoring (see, e.g., [34]). We show, however, that so-called control rooms (such as operation centers) are not bounded locales but centers of calculation [11]. This conceptual shift is important because it emphasizes that control rooms are only the visible part of a broader sociotechnical process where knowledge circulates, transforms, is aligned, and accumulates over time.

More generally, our study runs counter to the rhetoric of modularization as a key enabler of digital innovation. The literature on digital transformation often heralds the importance of the modularization of digital services and the associated capabilities [35, 36]. The story of innovation that we have told in this chapter challenges this view. Although we have seen that technological optimism tends to resurface in the energy sector, the transition toward remote operations is much more than a story about exploiting the properties of modularization allowed by digital technologies. Rather, it is one where heterogeneous factors have become bundled and aligned with different actors and agendas over time. Infrastructuring is a useful lens to understand the process of aligning the new capacities with the existing tools, work practices, and the corporate and societal institutional arrangements, a process that started with IO and continued with remote operations. This evolution is not characterized by clear-cut boundaries or modules. Although a degree of modularization is obviously present, the gradual yet increasing entanglement of the infrastructure with internal and external systems, stakeholders, and agendas played a key role. Paying attention to how modularization is balanced by entanglement over time is important on the analytical level to better understand the success of some innovation processes. This observation aligns with the literature in information systems stating that digitalization is hardly a complete transformation but a process that builds on the existing sociotechnical configurations. For example, the experience with IO was a crucial precondition for the rather quick uptake of digitalization in remote operations. Our story thus illustrates that the installed base of the infrastructure [6] matters significantly and that its role in directing subsequent innovation should not be underestimated in theories of digitalization.

Finally, our analysis challenges views of firm-centric innovation in favor of a data economy-based innovation. The infrastructuring process that we have described encompasses constellations of stakeholders (such as energy companies, service organizations, regulatory agencies, and other interest groups) that operate at the interlinks of the infrastructures that get entangled with one another (see also [5]). As a result, it involves the energy sector as a whole, as opposed to one or a few innovative firms. This trend seems to become more apparent as we enter a phase in which sector-wide IT architecture (e.g., Purdue) and data collection (e.g., IoT) standards dominate. A compelling example of this transition from firm- to data economy-centric innovation is the development of oil and gas software, which used to be dominated by large companies like Schlumberger. It is interesting to see how this company has developed along the same three phases we describe. Schlumberger started in a bounded space where the business model depended on selling domain-specific software to oil and gas clients. All proprietary software development was done in-house. From 2007 to 2008 onward, they developed their Ocean platform, where users that had Petrel licenses could develop apps that worked inside the Schlumberger software portfolio. Opening the application programming interfaces (APIs) of Petrel made it possible to build on their existing platform, develop a proprietary customer ecosystem, and keep the existing revenue model while introducing a new one with the Ocean App Store (Note 1). Schlumberger opened the ecosystem to a certain extent for collaboration in software development with major customers and sub-vendors. This opening of boundaries is similar to what we saw with IO. However, recently, their revenue and software development models have been changing. Schlumberger now supports the development of a vendor-neutral environment for the development of an open data platform and ecosystem. The OSDU initiative (Note 2) was initiated by their major competitor Halliburton Landmark. This is an open ecosystem where neither Landmark nor Schlumberger has architectural control of the software development. Five years ago, nobody could have foreseen that Schlumberger would join this open ecosystem.

5 Conclusions

The main contribution of this chapter was to (1) understand the emergence of capabilities for remote operations as a historical process and (2) show how, in this process, capabilities become bundled as the infrastructure entangles with other infrastructures and interest groups.

A corollary of our analysis relates to the importance of historical reconstructions: we believe that studies of digital transformation should rely on a longitudinal perspective on the factors that led to the current innovations in the industry. To do this, methods such as researching document archives or retrieving digital traces should not be underestimated.

To conclude, we are aware that our analysis was limited to a Scandinavian context. Other aspects might emerge from the study of digital transformation in the energy industry in other parts of the world, such as the United States or South-East Asia. However, we believe that our main contribution is still applicable, although with different observations in relation to, for example, the role of regulatory agencies.

Notes

- 1.

- 2. The Open Group Open Subsurface Data Universe (OSDU) Forum delivers an open-source, standards-based, technology-agnostic data platform for the energy industry that stimulates innovation, industrializes data management, and reduces time to market for new solutions. See also https://osduforum.org/about-us/who-we-are/osdu-mission-vision/

References

Rosendahl, T., & Hepsø, V. (2012). Integrated operations in the oil and gas industry: Sustainability and capability development. IGI Global.

Norsk olje og gass. (2005). Digital infrastructure offshore. Accessed September 21, 2009, from http://www.olf.no/io/digitalinfrastruktur/?29220.pdf

Karasti, H., Pipek, V., & Bowker, G. C. (2018). An afterword to ‘Infrastructuring and Collaborative Design’. Computer Supported Cooperative Work (CSCW), 27(2), 267–289.

Parmiggiani, E., Monteiro, E., & Hepsø, V. (2015). The digital coral: Infrastructuring environmental monitoring. Computer Supported Cooperative Work (CSCW), 2, 423–460.

Sardo, S., Parmiggiani, E., & Hoholm, T. (2021). Not in transition: Inter-infrastructural governance and the politics of repair in the Norwegian oil and gas offshore industry. Energy Research & Social Science, 75, 102047.

Monteiro, E., Pollock, N., Hanseth, O., & Williams, R. (2013). From artefacts to infrastructures. Computer Supported Cooperative Work Journal, 22(4–6), 575–607.

Pipek, V., & Wulf, V. (2009). Infrastructuring: Toward an integrated perspective on the design and use of information technology. Journal of the Association for Information Systems, 10(5), 447–473. Article 6.

Karasti, H., Baker, K. S., & Millerand, F. (2010). Infrastructure time: Long-term matters in collaborative development. Computer Supported Cooperative Work (CSCW) Journal, 19(3–4), 377–415.

Hepsø, V., & Monteiro, E. (2021). From integrated operations to remote operations: Sociotechnical challenge for the oil and gas business. Presented at IEA 2021.

Rolland, K. H., Hepsø, V., & Monteiro, E. (2006). Conceptualizing common information spaces across heterogeneous contexts: Mutable mobiles and side-effects of integration. In P. J. Hinds & D. Martin (Eds.), CSCW’06. Proceedings of the 2006 20th anniversary conference on Computer supported cooperative work (pp. 493–500). ACM.

Latour, B. (1987). Science in action. Harvard University Press.

Monteiro, E., & Parmiggiani, E. (2019). Synthetic knowing: The politics of internet of things. MIS Quarterly, 43, 167–184.

Lusch, R., & Nambisan, S. (2015). Service innovation: A service-dominant logic perspective. Management Information Systems Quarterly, 39(1), 155–171.

Østerlie, T., Almklov, P. G., & Hepsø, V. (2012). Dual materiality and knowing in petroleum production. Information and Organization, 22(2), 85–105.

Østerlie, T., & Monteiro, E. (2020). Digital Sand: The becoming of digital representations. Information and Organization, 30, 1, 01–15.

NPD. (2006). Integrated operations forum. Accessed September 21, 2009, from www.npd.no/English/Emner/E-drift/E-driftforum/coverpage.htm

NOU. (2005–2006). NOU Official Norwegian Report to the Storting no. 12. Health, safety and environment in the petroleum activities (2005–2006). Accessed April 27, 2021, from https://www.regjeringen.no/contentassets/da47b0ff07c14288821c68c9c7e19d82/no/pdfs/stm200520060012000dddpdfs.pdf

Norsk olje og gass. (2005). Integrated work processes: Future work processes on the Norwegian continental shelf. Accessed September 21, 2009, from http://www.olf.no/getfile.php/zKonvertert/www.olf.no/Rapporter/Dokumenter/051101%20Integrerte%20arbeidsprosesser,%20rapport.pdf

Norsk olje og gass. (2006a). Verdipotensialet for Integrerte Operasjoner på Norsk Sokkel (The value potential for integrated operations on the NCS). Accessed September 21, 2009, from http://www.olf.no/?31293.pdf

Norsk olje og gass. (2006b). HMS og Integrerte Operasjoner: Forbedringsmuligheter og nødvendige tiltak Norsk olje og gass-report 2006 [HSE and Integrated operations: Opportunities for improvement and necessary measures - Norwegian Oil and gas-report 2006]. Accessed April 27, 2021, from https://www.yumpu.com/no/document/view/19796540/io-og-hmspdf

Parmiggiani, E. (2015). Integration by infrastructuring: The case of subsea environmental monitoring in oil and gas offshore operations (PhD Thesis). NTNU.

Hepsø, V. (2009). ‘Common’ information spaces in Knowledge Intensive Work: Representation and Negotiation of meaning in computer supported collaboration rooms. In D. Jemielniak, L. Kozminski, & J. Kociatkiewicz (Eds.), Handbook of research on knowledge-intensive organizations. Idea Group.

Næsje, P., Skarholt, K., Hepsø, V., & Bye, A. S. (2009). Empowering operations and maintenance: Safe operations with the ‘one directed team’ organizational model at the Kristin asset Safety. In S. Martorell et al. (Eds.), Reliability and risk analysis: Theory, methods and applications (pp. 1407–1414). Taylor & Francis Group.

Skarholt, K., Næsje, P., Hepsø, V., & Bye, A. S. (2009). Integrated operations and leadership – how virtual cooperation influences leadership practice. In S. Martorell et al. (Eds.), Safety, reliability and risk analysis: Theory, methods and applications (pp. 821–828). Taylor & Francis Group.

Edwards, T., Mydland, Ø., & Henriquez, A. (2010, March 23–25). Insights and lessons learned from the application of iE. Presented at the SPE Intelligent Energy Conference and Exhibition held in Utrecht, The Netherlands. SPE-Number-MS128669.

Hepsø, V. (2006). When are we going to address organizational robustness and collaboration as something else than a residual factor? Society Petroleum Engineers (SPE) paper no 100712.

NPD. (2006). Mapping the needs for competence for the extensive use of integrated operations in fields on the Norwegian shelf. Norwegian Petroleum Directorate (NPD). Accessed September 21, 2009, from http://www.npd.no/NR/rdonlyres/3F964DEA-7A44-4E15-B170-E50FF2D039F1/10943/XS6416kompetanserapportengendelig030406.doc

NPD. (2005). Utredning om konsekvenser av omfattende innføring av e-drift (integrerte operasjoner) på norsk sokkel for arbeidstakerne i petroleumsnæringen og muligheter for utvikling av nye norske arbeidsplasser, OD (2006) (Study on the consequences of the comprehensive introduction of e-operation (integrated operations) on the Norwegian continental shelf for employees in the petroleum industry and opportunities for the development of new workplaces in Norway). Accessed September 21, 2009, from http://www.npd.no/NR/rdonlyres/4B68AADC-4653-456E-BAA9-EEB6B37CCCD3/10938/SluttrapporteDriftogkonsekvenserrevidert121205.pdf

NFA (Norwegian Society of Automatic Control). (2013). Autonomous systems: Opportunities and challenges for the oil & gas industry. Report. Accessed April 27, 2021, from https://nfea.no/wp-content/uploads/2018/02/Autonomirapport-NFA.pdf

Edwards, A. R., & Gordon, B. (2015). Using unmanned principles and Integrated Operations to enable operational efficiency and reduce Capex and OPEX costs. SPE-Number-MS176813.

Gartner. (n.d.). Definition of digitalization. Accessed May 28, 2021, from https://www.gartner.com/en/information-technology/glossary/digitalization

IOGP (International Association of Oil and Gas Producers). (2018). Selection of system and security architectures for remote control, engineering, maintenance, and monitoring. Report 627, October. https://www.iogp.org/bookstore/product/iogp-report-627-selection-of-system-and-security-architectures-for-remote-control-engineering-maintenance-and-monitoring/

NPD. (2016). Unmanned well-head platforms UWHP. Summary report. Accessed April 27, 2021, from https://www.npd.no/globalassets/1-npd/publikasjoner/rapporter/unmanned-wellhead-platforms.pdf

Mentler, T., Rasim, T., Müßiggang, M., & Herczeg, M. (2018). Ensuring usability of future smart energy control room systems. Energy Informatics, 1(1), 26.

Henfridsson, O., Nandhakumar, J., Scarbrough, H., & Panourgias, N. (2018). Recombination in the open-ended value landscape of digital innovation. Information and Organization, 28(2), 89–100.

Yoo, Y., Henfridsson, O., & Lyytinen, K. (2010). The new organizing logic of digital innovation: An agenda for information systems research. Information Systems Research, 21, 5.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this chapter

Cite this chapter

Hepsø, V., Parmiggiani, E. (2022). From Integrated to Remote Operations: Digital Transformation in the Energy Industry as Infrastructuring. In: Mikalef, P., Parmiggiani, E. (eds) Digital Transformation in Norwegian Enterprises . Springer, Cham. https://doi.org/10.1007/978-3-031-05276-7_3

DOI: https://doi.org/10.1007/978-3-031-05276-7_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-05275-0

Online ISBN: 978-3-031-05276-7

eBook Packages: Computer Science, Computer Science (R0)