Abstract

The algorithms implemented through artificial intelligence (AI) and big data projects are used in life-and-death situations. Despite research that addresses varying aspects of moral decision-making based upon algorithms, the definition of project success is less clear. Nevertheless, researchers place the burden of responsibility for ethical decisions on the developers of AI systems. This study used a systematic literature review to identify five categories of AI project success factors in 17 groups related to moral decision-making with algorithms. It translates AI ethical principles into practical project deliverables and actions that underpin the success of AI projects. It considers success over time by investigating the development, usage, and consequences of moral decision-making by algorithmic systems. Moreover, the review reveals and defines AI success factors within the project management literature. Project managers and sponsors can use the results during project planning and execution.

This work was not supported by any organization.

Keywords

- Algorithms

- Artificial intelligence

- Big data

- Critical success factors

- Moral decision-making

- Project management

1 Introduction

Algorithmic decision-making is replacing or augmenting human decision-making across many industries and functions [1, 2]. The decisions range from trivial to life and death. For example, predicting the customer reaction to a social media advertisement [3] is insignificant compared to diagnosing Parkinson’s disease [4], breast cancer [5], or COVID-19 [6]. An “algorithm is a defined, repeatable process and outcome based on data, processes, and assumptions” [7]. Algorithms can take many forms and have been used in decision-making for centuries. Today, data-driven algorithms are usually the result of artificial intelligence (AI) or big data projects. Scholars anticipate that AI will significantly impact society, generating productivity and efficiency gains and changing the way people work [8]. Understanding success factors in AI projects is critical given the considerable impact on individuals, society, and the environment.

Sponsoring organizations invest in AI projects with the expectation that they will deliver measurable, meaningful benefits such as revenue or productivity gains [9]. These benefits are usually realized long after the projects are completed and the algorithms are used. However, the time and cost limits of the task or the goal orientation of projects create the risk that the interests of significant stakeholders may not be considered. Thus, weighing short-term project objectives against long-term social and environmental consequences raises essential questions about the definition of project success. While the literature acknowledges the importance of client consultation and client acceptance as critical success factors, the public interest is not considered in the most referenced project management success model [10].

Mitchell, Agle, and Wood [11] argue that managers should serve legitimate stakeholders’ legal and moral interests. However, the technology view of moral decision-making with AI does not consider non-technical stakeholders, or the ethical principles are so broad they are not practical for developers [12,13,14]. Manders-Huits [15] contends that the notion of consequences and the level of autonomy of action are preconditions or considerations for moral responsibility, arguing that the burden of responsibility for moral decisions is on the system designers’ shoulders. Martin [16] makes a similar case, stating that “developers are those most capable of enacting change in the design and are sometimes the only individuals in a position to change the algorithm.” However, Wachnik [17] warns that a high level of information asymmetry creates a moral hazard when the supplier (i.e., developers) and the client are from differing organizations. Moral hazards are defined as the ineffectiveness or the abuse of trust created by opportunistic behavior [17]. Thus, while research exists to address varying aspects of ethics and morality in AI projects, a practical definition of project success is needed that addresses all legitimate stakeholders.

This research uses a systematic review of the literature to answer a novel question regarding success factors in AI projects: what are the project success factors for moral decision-making with algorithms? It closes the gap on a lack of literature that translates AI ethical principles into practice [14] and introduces AI success factors into the project management literature.

The paper is structured as follows. Section two provides a literature review. Section three includes the theoretical framework and a description of the research methodology, and section four outlines the findings. Section five discusses the results, and section six provides conclusions, including the study’s contribution, implications, limitations, and considerations for future research.

2 Literature Review

2.1 Project Success Factors

Projects are temporary endeavors with an end date planned from the beginning. Thus, the project objectives and success criteria should be agreed upon with stakeholders before starting a project and be reflected in the project objectives, business case, and investments [18, 19]. Otherwise, the long-term needs of internal and external stakeholders may conflict with the project’s temporality and end date [19].

Project success refers to delivering the expected output, achieving the intended objective, and meeting stakeholder requirements. Project efficiency is a subset of project success and is defined as project management success in relation to time, costs, and quality—the iron triangle [18, 20]. Within a project, success criteria and success factors are the dimensions of success [18, 20]: criteria measure success while factors identify the circumstances, conditions, and events for reaching the objectives. Consequently, the efficiency of a project can be measured once the outputs are produced. In contrast, project benefits and organizational performance impacts can be measured after the project outputs are put into use—fully or incrementally.

The project management critical success factor model drawn from Pinto and Slevin [21] is the most referenced [18]. It outlines ten success factors that the project team controls and four factors that influence project success but are not under their control. Davis [10] identifies three gaps with the Pinto and Slevin [21] model: project efficiency, accountability and stakeholder involvement, and benefits to stakeholder groups. The project success model from Turner and Zolin [20] examines how stakeholders perceive success after the project is completed. It was the first model to look at success outside the typical project life cycle and simultaneously consider multiple stakeholders [10]. It defines the project results at multiple timescales: project outputs at the end of the project, outcomes months after the end, and impacts years after the end. The success model from Shenhar, Dvir, Levy, and Maltz [9] identifies five dimensions of success: project efficiency, impact on the customer, business success, impact on the team, and preparation for the future. It also acknowledges the importance and relevance of shifts in the measures over time, with efficiency being important during the project but less so once it is complete.

Since each project management success model argues that success depends on the project objectives and type [9], success models from information technology projects were further investigated. The investigation included models for projects to digitally transform a specific area of a company (digitalization projects) [22]; software engineering projects [23, 24]; projects to implement and customize standardized information systems—enterprise resource planning [13, 25, 26]; information systems, big data analytics, and business intelligence implementation projects [27,28,29]; and artificial intelligence and robots projects [30].

Though phrased differently depending on the subject material, customer consultation and acceptance were success factors in all models. System usage was considered a prerequisite to realizing organizational performance benefits or societal impacts. Using the framework from Pinto and Slevin [21], Miller [27] concludes that ethical knowledge is a specialized skill needed by project personnel. The author also questions the role of moral decision-making in extreme situations and identifies ethical concerns as a risk to manage. In conclusion, although stakeholder involvement and stakeholder benefits were concepts in some models [10, 20], the ethical and moral interests related to the public interest were not considered success factors in the reviewed models.

2.2 AI Projects

Algorithm Methods and Techniques.

The methods and techniques used for data-driven algorithm development encompass multiple disciplines or branches within computer science and statistics. They include machine learning and deep learning techniques to learn from data and define algorithms [27, 31, 32]. Machine learning uses supervised and unsupervised methods to discover and model the patterns and relationships in data, allowing it to make predictions. Deep learning uses machine-learning approaches to automatically learn and extract features from complex unverified data without human involvement [27, 31].

Machine-learning methods such as regression, discriminant analysis, and time series analysis are mature methods with many decades of usage [33]. Other approaches have emerged in the last few decades, such as natural language processing (NLP) and artificial neural networks (ANN). NLP covers automated methods to process and manipulate human language [32, 33]. Conceptually inspired by the human brain’s biological neurons and synapses, ANN models are trained on past data and use pattern recognition to make predictions [32, 33]. Other methods or combinations of methods exist for dealing with imprecise or vague data or approximating reasoning, e.g., artificial neural networks with fuzzy logic [33]. Each method requires data for learning. Most methods require high-performance computing systems and architectures [27]. The resulting algorithms are incorporated into computer systems (hereafter referred to as AI systems); technologies such as big data, predictive analytics, business intelligence, data mining, advanced analytics, and digitization are used in the system development.

Project Lifecycle.

The algorithmic decision-making life cycle can have three stages: development, usage, and consequence. The development stage produces an AI system in four steps [16, 27, 34]. The first step is the planning and design of the system. In the second step, the source data are collected from multiple sources; they are made fit for purpose, including augmenting data with tags, identifiers, or metadata; and stored in data repositories. For the third step, subsets of source data are transformed to train the models (subsequently referred to as training data). The models and algorithms are developed through extensive data and analytical methods. Here high-performance computing is needed to support the computational load and data volumes [27]. In the fourth step, the algorithms are verified and validated. A user interface (UI) is developed to produce autonomous decisions or provide input for human decision-making. This step may include other technical aspects, such as system deployment. However, though relevant, these topics are not the main focus of this study.

The usage stage includes the operation and monitoring of the system [16, 27, 34]. The algorithms are used by inputting parameters or data to invoke them; the algorithms then output the decisions. The algorithm or AI system may be a standalone system or integrated into other systems, robots, automobiles, or digital technology platforms such as a social media platform. The algorithms may produce the output with or without the user’s knowledge of their existence. They are monitored over time for their effectiveness and retrained or otherwise adjusted. In the consequences stage, the decision is finalized, and the consequences begin to impact people, organizations, and groups (i.e. stakeholders).

2.3 Morality and Ethics in AI

Jones [35] defines a moral issue as one where a person’s actions, when freely performed, have consequences (harms or benefits) for others. The moral issue must involve a choice on the part of the actor or the decision-maker. Jones also states that many decisions have a moral component; a moral agent is a person who makes a moral decision even when that person may not recognize that a moral issue is at stake. An ethical decision is legally and morally acceptable to the larger community; an unethical decision violates legal or moral codes. Much of the research reviewed treats the terms moral and ethical as equivalent and uses them interchangeably depending on the context.

The thesis from Anscombe [36] holds that the concepts “right” and “wrong” should be discarded and replaced with a definition of morality in terms of “intrinsically unjust” versus “unjust given the circumstances.” The author argues that the boundary between the two concepts is “according to what’s reasonable.” Anscombe [36] further theorizes that determining the expected consequences plays a part in determining what is just. These arguments place the responsibility for morality on the decision-maker. However, they do not define who is accountable when the decisions are delegated from humans to systems. Shaw, Stöckel, Orr, Lidbetter, and Cohen [37] argue that machines are artificial agents that should not be held to a higher moral standard than humans; they define four meta-moral qualities that machines should possess to be considered proper moral agents (robustness, consistency, universality, and simplicity).

Manders-Huits [15] contends that the notion of consequences and level of autonomy of action are preconditions or considerations for moral responsibility. First, the notion of consequences in information technology (IT) places the burden of responsibility for moral decisions on the shoulders of those who design complex IT systems. However, the definition of designers is unclear—including technicians, finance providers, and instructors—and the relationship between the responsibility of designers compared to end-users in final decision-making is also opaque. Martin [16] also places the responsibility for moral decision-making in the hands of the system developer and their companies. Second, the abundance of information that individuals have and understand enhances their ability to act autonomously. However, the actions or decisions integrated into IT applications are limited based on “implying an adequate understanding of all relevant propositions or statements that correctly describe the nature of the action and the foreseeable consequences of the action” [15]. Moreover, it is not likely that modelers can predict all potential uses of their models [38].

Wachnik [17] identifies several incidents of moral hazard in IT projects when the supplier and the client are from differing organizations. The incidents are classified by the ineffectiveness or the abuse of trust created by a high level of information asymmetry in a supplier-agent relationship or by the opportunistic behavior of the supplier. The study reveals unwanted supplier behaviors that introduce a risk of project failure, increase the transaction cost for the client, and result in a loss of mutual trust.

Much research has focused on defining values, principles, frameworks, and guidelines for ethical AI development and deployment [14, 39]. However, Mittelstadt [14] maintains that principles alone have a limited impact on AI design and governance. Conducting an analysis of 21 AI ethics guidelines, Hopkins and Booth [40] similarly find that such regulations are ineffective and do not change the behavior of professionals throughout the technology community. One challenge is the difficulty in translating concepts, theories, and values into practice. Specifically, the translation process is likely to “encounter incommensurable moral norms and frameworks which present true moral dilemmas that principles cannot resolve” [14]. Furthermore, there are no proven methods to translate principles into practice. For example, Mittelstadt [14] warns that the solution to AI ethics should not be oversimplified to AI technical design or expertise alone.

Jobin, Ienca, and Vayena [39] conducted a content analysis of 84 AI ethical guidelines and identified five ethical principles that have converged globally (transparency, justice, fairness, non-maleficence, and privacy). Building on their research [39], Ryan and Stahl [12] provide a detailed explanation of the normative implications of AI ethics guidelines for developers and organizational users. The paper defines AI ethical principles and describes what users and developers should do to realize their moral responsibilities. However, it explicitly excludes other stakeholders. The study distinguishes 11 AI ethical categories: beneficence, dignity, freedom and autonomy, justice and fairness, non-maleficence, privacy, responsibility, solidarity, sustainability, transparency, and trust.

3 Research Methodology

3.1 Theoretical Framework

To answer the research question, this review seeks the deliverables, acts, or situations—success factors—necessary to avoid harm or ensure the benefits of an algorithm developed in projects. Thus, the project success model from Turner and Zolin [20] is relevant for identifying success factors, and the ethical principles from Ryan and Stahl [12] are useful to quantify and contextualize the success factors.

The model from Turner and Zolin [20] attempts to forecast project success beyond the initial project outputs, recognizing that project outcomes, impacts, and stakeholder interests change over time. It was chosen for four key reasons. First, the model focuses on projects, which are bound by time, team, tasks, and activity. These boundaries limit environmental considerations and give context to the investigation. This approach is relevant as personal experience, organizational norms, industry norms, and cultural norms affect stakeholders’ perceived alternatives, consequences, and importance. Second, decisions made during the project will have an impact many months or years in the future. However, project participants may not be aware of the magnitude of the consequences of their decisions initially, particularly in terms of harms or benefits for victims or beneficiaries. Thus, it is essential to consider the multiple time dimensions available in the model. Third, stakeholders influence the project’s planning and outputs and are impacted by the results. Thus, multiple stakeholder perspectives are useful to consider the influence of decision-making and the resulting impact on the stakeholders. Finally, the model outlines the multiple types of success indicators that should be included in the investigation.

Consequently, the algorithm development, usage, and consequence stages and the AI components were aligned with the timescales of the model from Turner and Zolin [20]. Algorithm development aligns with the output, algorithm usage with the outcome, and decision-making consequences with the impact. Table 1 identifies the alignment of AI components according to the timescales.
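
The alignment described above can be sketched as a small lookup table. This is an illustrative sketch only: the names `Timescale`, `STAGE_TO_RESULT`, and `when_measurable` are hypothetical, and the timing labels paraphrase the Turner and Zolin [20] timescales summarized in Section 2.1 rather than reproduce Table 1.

```python
from enum import Enum


class Timescale(Enum):
    """Project result types, labeled with when each can be observed."""
    OUTPUT = "end of the project"
    OUTCOME = "months after the end"
    IMPACT = "years after the end"


# Assumed encoding of the alignment stated in the text:
# development -> output, usage -> outcome, consequences -> impact.
STAGE_TO_RESULT = {
    "development": Timescale.OUTPUT,
    "usage": Timescale.OUTCOME,
    "consequences": Timescale.IMPACT,
}


def when_measurable(stage):
    """Return the timescale label for a life-cycle stage."""
    return STAGE_TO_RESULT[stage].value
```

A lookup of this kind makes explicit that success factors tied to the consequences stage cannot be assessed at project close.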

The study from Ryan and Stahl [12] categorizes and subcategorizes ethical principles and concepts. It was chosen for the thematic mapping of success factors to ethical principles for three reasons. First, it includes a comprehensive set of categories by building on the 84 AI ethical guidelines reviewed by Jobin, Ienca, and Vayena [39]. Second, the subcategories contain semantically similar concepts that were not merged into a single concept. This method provides rich descriptions that permit the qualitative mapping of individual success factors to the most relevant ethical concept. Third, Ryan and Stahl [12] argue that the meanings within the corpus of AI ethics guidelines should include activities that are morally appropriate for developers and users. This argument aligns with this study’s goal to understand the activities necessary for project success.

3.2 Systematic Review Procedure

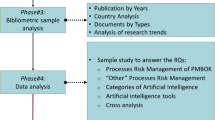

A single researcher explored the research question through a systematic review of the literature, defined as “a review of a clearly formulated question that uses systematic and explicit methods to identify, select, and critically appraise relevant research, and to collect and analyze data from the studies that are included in the review” [41]. The purpose of the systematic review was to synthesize existing knowledge in a structured and rigorous manner. The procedure included 1) identifying the bibliographic databases from which to collect the literature, 2) defining the search process, including the keywords and the search string, 3) defining the inclusion and exclusion criteria, 4) removing duplicates and screening the articles, 5) extracting data based on a full-text review of the articles, and 6) synthesizing the data using a coherent coding method. The details are described in the following sections, and Fig. 1 includes a flow of information through the systematic review.

Bibliographic Databases.

The first literature search began in October 2020 to collect peer-reviewed articles from the ProQuest, Emerald, ScienceDirect, and IEEE Xplore bibliographic databases. The focal keywords were “algorithm” and “stakeholder.” This search revealed key themes in how success was viewed in algorithmic projects. Keywords such as ethics, fairness, accountability, transparency, and explainability were frequently referenced in the articles. The analysis also identified the “ACM Conference on Fairness, Accountability, and Transparency (ACM FAccT)” as an important source for cross-disciplinary research. Thus, subsequent bibliographic searches were undertaken in March, July, and December 2021, adding the ACM Digital Library to the previous bibliographic databases. Articles from the initial search and FAccT conference were retained for analysis. Table 2 shows the article distribution by database.

Search String.

The final search emphasized “accountability” instead of “stakeholder” because stakeholder in combination with algorithm was not a frequent keyword. Accountability focuses on the relationship between project actors and those to whom the actors should be accountable [42]. Other frequent keywords were also included in the search string to make the results meaningful. Since not all databases allowed wildcards, variations of the search string were used, and adjustments were made in the syntax for each search engine. There were adjustments for typographical errors and refinements between database searches. The wildcard version of the last used search is as follows:

All = accountab* AND

Title = (“machine learning” OR “artificial intelligence” OR AI OR “big data” OR algorithm*) AND

Title = (fair* OR ethic* OR moral* OR success OR transparency OR expla* OR accountab*)
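
The logic of the search string can be sketched as a local filter over article records. This is a minimal sketch under stated assumptions, not the syntax of any bibliographic database: the function names are hypothetical, `*` is treated as "any word ending", matching is case-insensitive, and the `All` field is approximated by the title plus whatever full text is supplied.

```python
import re

# Terms copied from the wildcard version of the search string.
ALL_TERMS = ["accountab*"]
TOPIC_TERMS = ["machine learning", "artificial intelligence", "AI",
               "big data", "algorithm*"]
THEME_TERMS = ["fair*", "ethic*", "moral*", "success", "transparency",
               "expla*", "accountab*"]


def term_to_regex(term):
    """Turn a search term into a case-insensitive whole-word pattern."""
    if term.endswith("*"):
        body = re.escape(term[:-1]) + r"\w*"  # wildcard: any word ending
    else:
        body = re.escape(term)
    return re.compile(r"\b" + body + r"\b", re.IGNORECASE)


def any_match(terms, text):
    """True if at least one term occurs in the text (the OR groups)."""
    return any(term_to_regex(t).search(text) for t in terms)


def matches_search(title, full_text):
    """Apply the three AND-ed clauses: All, Title (topic), Title (theme)."""
    return (any_match(ALL_TERMS, title + " " + full_text)
            and any_match(TOPIC_TERMS, title)
            and any_match(THEME_TERMS, title))
```

For example, a record titled "Algorithmic fairness in hiring" passes only if some form of "accountab*" also appears somewhere in the record, which reflects the emphasis on accountability described above.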

Inclusion and Exclusion Criteria.

Peer-reviewed journal articles and conference papers in the English language were retained from the search results; book reviews were excluded. There were no filters for the dates. Duplicate entries and entries with no available document were removed. Next, literature was excluded or retained in an iterative process based on a review of the title, the abstract, and the full article text.

First, the titles of the articles were reviewed, and articles were retained that considered the process or considerations for the development, use, or outcomes of algorithms. Articles were excluded if they discussed the structure or content of an algorithm or a specific use case, or if they were wrongly identified (e.g., magazine articles and panel descriptions). Next, the abstracts were reviewed to determine if the article could yield information on the success factors. Finally, the full text of the included articles was reviewed and coded to answer the research question. Articles from the initial search and FAccT conference that did not appear in subsequent searches were retained for analysis. In total, the full text of 144 articles was analyzed; a subset of 55 articles introduced relevant and novel details and are included in the summary tables. The majority of the analyzed articles (64%) were published after 2019, and many (40%) were conference papers. Figure 1 shows the preferred reporting items for systematic reviews and meta-analyses (PRISMA) process flow.

Coding Strategy and Data Analysis.

Each of the 144 articles was reviewed in detail to code its success factors. The coding was conducted in NVivo 12 (Windows) software. We extracted terms and explanations to determine what was known about how different stakeholders viewed success; we then used the literature to clarify the definitions, provide examples, determine the main elements of success, and develop context. We compiled a resulting list of success factors that must be delivered: acts or situations that contributed to a positive outcome with algorithm decision-making projects. Then, where possible, each success factor was mapped to one or more ethical principles, as described in Ryan and Stahl [12]. The success factors were qualitatively grouped based on their common characteristics or responsibility patterns. Subsequently, the relationship between the groups of success factors was theorized, and a relationship model was defined. The results are summarized in the Research Findings section.

3.3 Validity and Reliability

This approach of defining elements is an acceptable method for placing boundaries around the meaning of a term [41]. First, external validity was ensured by using a theoretical model as a guiding framework for the thematic analysis. Second, validity was maintained by using the literature as primary and validation sources. The success factors were mapped at a detailed level to the original sources in the literature. The results were cross-validated with previous AI ethics literature reviews [12] to ensure completeness. The checklist and phase flow from the PRISMA statement were used to guide the study and report the results [41].

4 Research Findings

The literature review identified 82 success factors that were qualitatively consolidated in an iterative process into five broad categories and 17 groups. The meaning of each category is provided in Table 3. Table 4 identifies the success factors and references them according to success category and group. The ethical principles are shown next to each success factor based on mapping them to the 11 ethical categories from Ryan and Stahl [12]. Figure 2 visualizes the intersection between the success factors and the ethical principles, each column being one of the 11 ethical categories. The colors represent the percentage and the numbers the count of success factors that address the principle. The results describe the practical requirements for success with AI development and usage based on the moral issues and ethical principles found in the literature.
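
The count and percentage per ethical principle described for Figure 2 can be illustrated with a simple tally. This is a sketch only: the function name `principle_coverage` is hypothetical, the factor names and their assignments below are placeholders rather than values from Table 4, and the percentage is assumed here to be the share of all success factors that address a principle.

```python
from collections import Counter


def principle_coverage(factor_to_principles):
    """Count the factors mapped to each principle and their share of all factors."""
    total = len(factor_to_principles)
    counts = Counter(
        principle
        for principles in factor_to_principles.values()
        for principle in set(principles)  # count each factor once per principle
    )
    return {p: (n, round(100 * n / total, 1)) for p, n in counts.items()}


# Placeholder mapping: factor names and assignments are invented for illustration.
example = {
    "factor_1": ["transparency", "trust"],
    "factor_2": ["responsibility"],
    "factor_3": ["transparency"],
}
coverage = principle_coverage(example)
```

Because one success factor may map to several principles, the percentages across principles need not sum to 100.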

4.1 Project Governance

Project Management.

The scope definition document, or problem statement, defines the aims and rationale for the algorithmic system [45]. The requirement for the system, the moral issues, and all aspects of the project are impacted by the algorithm’s context (e.g., country, industry sector, functional topic, and use case). Trust is context-dependent since systems can work in one context but not another; thus, the scope should act as a contract that reveals the algorithm’s goal and the behavior that can be anticipated [46]. Furthermore, a clearly defined scope protects against spurious claims and the misapplication or misuse of the system.

Next, AI ethical principles argue that AI systems should be developed to do good or benefit someone or society as a whole (beneficence); they should avoid harming others (non-maleficence) [12, 47]. Bondi, Xu, Acosta-Navas, and Killian [50] propose that the communities impacted by the algorithms should be active participants in AI projects. The community members should have some say in granting the project a moral standing based on whether it benefits the community or makes the community more vulnerable. While these arguments were made in relation to “AI for social good” projects, community engagement is relevant for all types of AI projects. Finally, rules should be established to manage conflict of interest situations within the team or when the values of the system conflict with the interests or values of the users [43, 44].

A responsibility assignment matrix defines roles and responsibilities within a project, distinguishing between persons or organizations with responsibility and accountability [48]. Accountability ensures a task is satisfactorily done, and responsibility denotes an obligation to perform a task satisfactorily, with transparency in reporting outcomes, corrective actions, or interactive controls [47,48,49]. Both responsibility and accountability assume a degree of subject matter understanding and knowledge and should include moral, legal, and organizational responsibilities [12, 47]. The project organization should promote a diverse working environment, involving various stakeholders and people from numerous backgrounds and disciplines and promoting the exchange and cooperation across regions and among organizations [12, 54]. Furthermore, the project team needs members with specialized skills and knowledge to process data and accomplish the design and development of the algorithms; the quality of the team’s skills and expertise impacts the usability of the algorithms and the governance policies [30]. Standards and policy guidelines should be used to build consensus and provide understanding on the relevant issues, such as algorithmic transparency, accountability, fairness, and ethical use [14, 38, 51, 53].
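
A responsibility assignment matrix of the kind described above can be sketched as a small data structure with a completeness check. This is a hedged illustration: the class and function names, the task names, and the role names are all hypothetical, and the rule that each task has exactly one accountable party is a common project management convention assumed here, not a requirement stated in the reviewed literature.

```python
from dataclasses import dataclass, field


@dataclass
class Assignment:
    """One row of the matrix: who performs a task and who answers for it."""
    task: str
    responsible: list = field(default_factory=list)  # obliged to perform the task
    accountable: list = field(default_factory=list)  # ensures it is satisfactorily done


def find_gaps(matrix):
    """Return human-readable problems found in the matrix."""
    problems = []
    for row in matrix:
        if not row.responsible:
            problems.append(f"{row.task}: no responsible party")
        if len(row.accountable) != 1:  # assumed convention: exactly one
            problems.append(
                f"{row.task}: expected exactly one accountable party, "
                f"found {len(row.accountable)}"
            )
    return problems


# Hypothetical tasks and roles, for illustration only.
matrix = [
    Assignment("validate model", ["data scientist"], ["project manager"]),
    Assignment("approve data use", [], ["legal counsel", "sponsor"]),
]
problems = find_gaps(matrix)
```

Running the check during planning surfaces tasks where accountability is missing or diffused before the system is deployed.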

Systematic record-keeping is the mechanism for retaining logs and other documents with contextual information about the process, decisions, and decision-making from project inception through system operations [12, 45, 51, 56]; the various types of records are listed as individual success factors. The disclosure records are logs that are themselves about disclosures or the processes for disclosure, what was actually released, how information was compiled, how it was delivered, in what format, to whom, and when [45, 51, 52]. Bonsón, Lavorato, Lamboglia, and Mancini [8] found that some European publicly-listed companies are disclosing their AI ethical approaches and facts about their AI projects in their annual and sustainability reports. The procurement records are contractual arrangements, tender documents, design specifications, quality assurance measures, and other documents that detail the suppliers and relevant due-diligence [45].

The risk assessment records identify the potential implications and risks of the system, including legality and compliance, discrimination and equality, impacts on basic rights, ethical issues, and sustainability concerns [45]. However, model risk assessment should be an active process of identifying and avoiding or accepting risks, changing the likelihood of a risk occurring or of its consequences, sharing risk responsibility, or retaining the risk as an informed decision [55]. Retraining and fine-tuning models, adding new components to original solutions (i.e., wrappers), and building functionally equivalent models (i.e., copies) are three methods suggested by Unceta, Nin, and Pujol [55] for mitigating AI risks.

Ethics Practices.

Ethics policies should include ethical principles with guidelines and rules for implementing them and for verifying and remedying any violations; the ethics policies should be shareable externally with the public or public authorities [2, 12, 14, 51]. Ethics training should cover the practical aspects of addressing ethical principles [2, 12, 51, 53]. An independent official such as an ombudsman, or a whistleblower process, should be available to hear or investigate ethical or moral concerns or complaints from team members [12, 42, 53]. Finally, professional membership in an association or standards organization (e.g., ACM, IEEE) that provides standards, guidelines, and practices on ethical design, development, and usage activities should be encouraged and supported [14].

Investigations.

Algorithm auditing is a method that reveals how algorithms work. Testing algorithms based on issues that should not arise and making inferences from the algorithms’ data is a technique for auditing complex algorithms [1, 2, 7, 45, 53, 58, 59]. Audit finding records document the audit, the basis or other reasons it was undertaken, how it was conducted, and any findings [45]. Audit response records document remediations and subsequent actions or remedial responses based on audit findings [2, 45].

Algorithmic impact assessments investigate aspects of the system to uncover the impacts of the systems and propose steps to address any deficiencies or harm [42, 53, 57]. Certification ensures that people or institutions comply with regulations and safeguards and punishes institutions for breaches; it offers independent oversight by an external organization [38, 53, 59].

4.2 Product Quality

Source Data Qualities.

Data accessibility refers to data access and usage in the algorithm creation process. Several regulations and laws constrain how data may be accessed, processed, and used in analytical processes. Thus, a legal agreement to use the data should be in place, and the confidentiality of personal data should be preserved [1, 7, 51, 52, 60, 61]. Data transparency reveals the source of the data collected, including the context or purpose of the data collection, application, or sensors (or users who collected the data), and the location(s) where the data are stored [6, 38, 51, 62,63,64,65,66]. The reviewability framework [45] recommends maintaining data collection records that include details on their lifecycle: purpose, creators, funders, composition, content, collection process, usage, distribution, limitations, maintenance, and data protection and privacy concerns [2, 45, 51, 62, 63]. Datasheets for datasets by Gebru, et al. [62] provide detailed guidance on document content.

Training Data Qualities.

Data quality and relevance refer to possessing data that are fit for purpose. The quality challenges relating to training data include the diversity of the data collected and used, how well it represents the future population, and the representativeness of the sample [45, 62, 63]. Individuals are entitled to physical and psychological safety when interacting with and processing data, i.e., interaction safety [7, 12, 38, 51, 54, 61, 74]. Kang, Chiu, Lin, Su, and Huang [70] recommend that, in addition to labelling data points, human experts annotate representations and rules to optimize models for human interpretation. Equitable representation applies to data and people. For data, it means having enough data to represent the whole population for whom the algorithm is being developed while also considering the needs of minority groups such as disabled people, minors (under 13 years old), and ethnic minorities. For people, it means, for example, including representatives from minority groups or their advocates in the project governance structures or teams that design and develop algorithms [38, 66,67,68,69]. Model training records should document the training workflow, model approaches, predictors, variables, and other factors; datasheets for datasets by Gebru, et al. [62] and model cards by Mitchell, et al. [71] provide a framework for the documentation.

Model and Algorithm Qualities.

Algorithm transparency refers to using straightforward language to provide clear, easily accessible descriptive information (including trade secrets) about the algorithms and data and explanations for why specific recommendations or decisions are relevant. The need for end-users to understand and explain the decisions produced influences the algorithm, data, and user interface transparency requirements [1, 6, 38, 51, 52, 58, 66, 71, 75]. For example, transparency may be needed for compatibility between human and machine reasoning, for the degree of technical literacy needed to comprehend the model, or to understand the model’s relevance or processes [45]. Here it is worth noting that the method and technique used to create the model influence its explainability and transparency [76]. Models can be interpretable by design, provide explanations of their internals, or provide post-hoc explanations of their outputs. Mariotti, Alonso, and Confalonieri [76] provide a framework for analyzing a model’s transparency. Chazette, Brunotte, and Speith [75] provide a knowledge framework that maps the relationship between a model’s non-functional requirements and their influence on explainability.

Equitable treatment means eliminating discrimination and differential treatment, whereby similarly situated people are given similar treatment. In this context, discrimination should not be equated to prejudice based on race. It is based on forming groups using “statistical discrimination” and refers to anti-discrimination and human rights protections [1, 2, 12, 66, 67, 74].

Model qualities include consistency, accuracy, interpretability, and suitability; there are no legal standards for acceptable error rates or ethical designs. Consistency means receiving the same results given the same inputs, as non-deterministic effects can occur based on architectures with opaque encodings or imperfect computing environments [7]. Accuracy is how effectively the model provides the desired output with the fewest mistakes (e.g., false positives, error rates) [6, 7, 38, 66, 68, 69]. Overconfidence is a common modeling problem that occurs when the model’s average confidence level exceeds its average accuracy [40]. Interpretability refers to how the model is designed to provide reliable and easy-to-understand explanations of its prediction [6, 12, 56]. Auditability refers to how the algorithm is transparent or obscured from an external view to allow other parties to monitor or critique it [2, 63, 66].
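Two of the model qualities above lend themselves to a simple numerical check. The sketch below computes the overconfidence gap as average confidence minus average accuracy, following the definition given above, and tests consistency by calling a prediction function repeatedly on the same input. The function names, the sample values, and the stand-in prediction function are hypothetical and serve only as illustration.

```python
def overconfidence_gap(confidences, correct):
    """Average predicted confidence minus average accuracy.

    A positive value indicates an overconfident model.
    """
    if not confidences or len(confidences) != len(correct):
        raise ValueError("inputs must be non-empty and of equal length")
    avg_confidence = sum(confidences) / len(confidences)
    accuracy = sum(1 for c in correct if c) / len(correct)
    return avg_confidence - accuracy


def is_consistent(predict, inputs, runs=3):
    """Return True if repeated calls with the same inputs give the same result."""
    first = predict(inputs)
    return all(predict(inputs) == first for _ in range(runs - 1))


# Hypothetical confidences and correctness flags for four predictions.
confidences = [0.9, 0.8, 0.95, 0.85]
correct = [True, False, True, False]
gap = overconfidence_gap(confidences, correct)
print(f"overconfidence gap: {gap:.3f}")


# A deterministic stand-in for a model's prediction function.
def rounded_mean(values):
    return round(sum(values) / len(values), 2)


print(is_consistent(rounded_mean, [0.2, 0.4, 0.9]))
```

In this example the average confidence is 0.875 and the accuracy is 0.5, so the gap is positive and the hypothetical model would count as overconfident.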

Model validation is the execution of mechanisms to measure or validate the models for adherence to defined principles and standards, effectiveness, performance in typical and adverse situations, and sensitivity. Validation should include bias testing, i.e., an explicit attempt to identify any unfair bias, avoid human subjectivity that introduces individual and societal biases, and reverse any biases detected. Models can be biased based on a lack of representations in the training data or how the model makes decisions, e.g., the selected input variables. The model outcomes should be traceable to input characteristics [2, 38, 40, 59, 72, 73].
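One possible form of bias testing is to compare how often the model produces a positive outcome for each group. The sketch below computes per-group selection rates and the largest gap between them. This particular comparison, the group labels, and the sample predictions are assumptions chosen for illustration; the reviewed literature does not prescribe a specific metric, and other fairness measures may be more appropriate in a given context.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Return the share of positive predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, prediction in zip(groups, predictions):
        totals[group] += 1
        positives[group] += int(prediction)
    return {group: positives[group] / totals[group] for group in totals}


def max_rate_gap(rates):
    """Largest difference in selection rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical group labels and binary model outputs (1 = positive decision).
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
rate_gap = max_rate_gap(rates)
print(rates, rate_gap)
```

A large gap does not by itself establish unfair bias, but it indicates where the traceability of outcomes to input characteristics should be examined more closely.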

The reviewability framework suggests maintaining model validation records that contain details on the model and how it was validated, including dates, version, intended use, factors, metrics, evaluation data, training data, quantitative analyses, ethical considerations, caveats and recommendations, or any other restrictions [45, 71]. Model cards by Mitchell, et al. [71] provide detailed guidance on the content.

User Interface (UI) Qualities.

Expertise is embodied in a model in a generalized form that may not be applicable in individual situations. Thus, human intervention is the ability to override default decisions [1, 6, 47]. Equitable accessibility ensures usability for all potential users, including people with disabilities; it considers the ergonomic design of the user interface [12, 38, 78]. Front-end transparency designs should meet transparency requirements and not unduly influence, manipulate, confuse, or trick users [52, 60, 64, 66, 77]. Furthermore, dynamic settings or parameters should consider the context to avoid individual and societal biases such as those created by socio-demographic variables [47]. App-Synopsis by Albrecht [77] provides detailed guidance on the recommended description of algorithmic applications.

System Configuration.

The system and architecture quality may impact the algorithm’s outcomes, introduce biases, or result in indeterminate behavior. Default choices (e.g., where thresholds are set and defaults specified) may introduce bias in the decision-making. Specifically, the selected defaults may be based on the personal values of the developer. The availability, robustness, cost, and safety capabilities of the software, hardware, and storage are essential to algorithm development and use [30]. Decisions on methods and the parallelism of processes may cause system behavior that does not always produce the same results when given the same inputs. Obfuscated encodings may make it difficult to process the results or audit the system. The degree of automation may limit the user’s choices [7, 56]. Security safeguards allow technology, processes, and people to resist accidental, unlawful, or malicious actions that compromise data availability, authenticity, integrity, and confidentiality [2, 52, 53, 79].

The reviewability framework suggests that systems should provide a technical logging process, including mechanisms to capture the details of inputs, outputs, and data processing and computation [45]. The framework also recommends records relevant to technical deployment and operations, including installation procedures, hardware, software, network, storage provisions or architectural plans, system integration, security plans, logging mechanisms, technical audit procedures, technical support processes, and maintenance procedures [45]. Hopkins and Booth [40] highlight the need for model and data versioning and metadata to evaluate data, direct model changes over time, document changes, align the product to the end documentation, and act as institutional knowledge.
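A technical logging process of the kind described above could, for instance, write one structured record per decision. The following sketch is a minimal illustration; the field names, version strings, and sample values are hypothetical and not prescribed by the reviewability framework.

```python
import json
from datetime import datetime, timezone


def make_log_record(model_version, data_version, inputs, output):
    """Build one log record that ties a decision to its inputs and to the model and data versions."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "data_version": data_version,
        "inputs": inputs,
        "output": output,
    }


# Hypothetical decision to be logged.
record = make_log_record(
    model_version="model-1.2.0",
    data_version="dataset-2021-03",
    inputs={"age": 54, "score": 0.71},
    output={"decision": "refer", "confidence": 0.83},
)

# One JSON document per line keeps the log easy to append to and to audit.
line = json.dumps(record, sort_keys=True)
print(line)
```

Recording the model and data versions with every decision is what allows a later audit to reconstruct which model produced a given outcome.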

Data and Privacy Protections.

Data governance includes the practices for allocating authority and control over data, including the way it is collected, managed, and used; it should consider the data development lifecycle of the original source and training data [63, 80]. Data retention policy specifies the time and obligations for keeping data; personal data should be retained for the least amount of time possible [12, 52].
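A data retention policy can be supported by a routine check for records held longer than allowed. The sketch below is a minimal illustration; the 90-day period, the record structure, and the dates are hypothetical, and the appropriate retention period depends on the applicable legal and organizational obligations.

```python
from datetime import date, timedelta


def records_past_retention(records, retention_days, today):
    """Return the ids of records collected earlier than the retention cutoff."""
    cutoff = today - timedelta(days=retention_days)
    return [r["id"] for r in records if r["collected_on"] < cutoff]


# Hypothetical personal-data records with their collection dates.
records = [
    {"id": "r1", "collected_on": date(2021, 1, 10)},
    {"id": "r2", "collected_on": date(2021, 6, 1)},
]

overdue = records_past_retention(records, retention_days=90, today=date(2021, 7, 1))
print(overdue)
```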

Privacy safeguards include processes, strategies, guidelines, and measures to protect and safeguard data privacy and implement remedies for privacy breaches [1, 6, 7, 38, 51, 53, 60]. Informed consent is the right of individuals to be informed of the collection, use, and repurposing of their personal data [6, 7, 12, 38, 52, 53]. The legal and regulatory rules covering consent vary by region and usage purposes. Personal data control means giving people control of their data [1, 6, 60], while confidentiality concerns protecting and keeping data and proprietary information confidential [7, 12, 38, 56, 65, 74]. Data encryption, data anonymization, and privacy notices are examples of privacy measures [1, 6, 7, 38, 51, 53, 60]. Data anonymization involves applying rules and processes that randomize data so an individual is not identifiable and cannot be found through combining data sources. Data protection principles do not apply to anonymous information [38, 51, 53, 60]. Data encryption is an engineering approach to secure data with electronic keys.
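Whether a dataset still allows individuals to be singled out by combining attributes can be estimated with a simple group-size check. The sketch below uses a k-anonymity style test, which is a technique substituted here for illustration and not one named in the reviewed literature: it reports the size of the smallest group of records sharing the same combination of quasi-identifiers. The column names and records are hypothetical.

```python
from collections import Counter


def smallest_group(records, quasi_identifiers):
    """Size of the smallest group of records sharing the same quasi-identifier values.

    A value of 1 means at least one record is unique on these attributes
    and might be re-identified by linking with another data source.
    """
    keys = [tuple(r[q] for q in quasi_identifiers) for r in records]
    return min(Counter(keys).values())


# Hypothetical records after direct identifiers have been removed.
records = [
    {"age_band": "30-39", "region": "north", "diagnosis": "x"},
    {"age_band": "30-39", "region": "north", "diagnosis": "y"},
    {"age_band": "40-49", "region": "south", "diagnosis": "x"},
]

k = smallest_group(records, ["age_band", "region"])
print(k)
```

Here the third record is unique on age band and region, so further generalization or suppression would be needed before the data could reasonably be treated as anonymous.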

4.3 Usage Qualities

System Transparency and Understandability.

Stakeholder-centric communication considers the explainability of the algorithm to the intended audience. Scoleze Ferrer Paulo, Galvão Graziela Darla, and de Carvalho Marly [82] note that there are different audiences for model and algorithm explanations: executives and managers who are accountable for regulatory compliance, third parties that check for compliance with laws and regulations, expert users with domain-specific knowledge, people affected by the algorithms who need to understand how they will be impacted, and model designers and product owners who research and develop the models. Thus, explanations should be comprehensible and transmit essential, understandable information rather than legalistic terms and conditions, even for complex algorithms [2, 6, 12, 52, 65, 81, 82]. Interpretable models refer to having a model design that is reliable, understandable, and facilitates the explanation of predictions by expert users [6, 12]. Choices allow users to decide what to do with the model results, maintaining a human-in-the-loop for a degree of human control [6, 12, 38, 47, 53, 56, 83].

Expertise is embodied in a generalized form that may not be applicable in individual situations, so specialized skills and knowledge may be required to choose among alternatives. Consequently, professional expertise, staff training and supervision, and on-the-job coaching may be necessary to ensure appropriate use and decision quality [56, 84]. Similarly, onboarding procedures are required to orient users on the system usage, their responsibilities, and system adjustments needed to address confidence levels, adjust thresholds, or override decisions [40]. Interaction safety refers to ensuring physical and psychological safety for the people interacting with AI systems [61]. Problem reporting is a mechanism that allows users to discuss and report concerns such as bugs or algorithmic biases [58].

Usage Controls.

The complaint process means having mechanisms to identify, investigate, and resolve improper activity or receive and mediate complaints [64]. Quality controls detect improper usage or under-performance. Improper usage occurs when the system is used in a situation for which it was not originally intended [38, 47]. Monitoring is a continual process of surveying the system’s performance, environment, and staff for problem identification and learning [84]. Staff monitoring identifies absent or inadequate content areas, identifies systematic errors, anticipates and prevents bias, and identifies learning opportunities. System monitoring verifies how the system behaves in unexpected situations and environments. Model values or choices become obsolete and must be reviewed or refreshed through an algorithm renewal process [38, 42, 53].

The reviewability framework recommends retaining usage, consequence, and process deployment records. Usage records contain model inputs and the outputs of parameters, operational records at the technical (systems log) level, and usage instructions [45, 51]. Consequence records document the quality assurance processes for a decision and log any actions taken to affect the decision, including failures or near misses [45, 59]. Logging and recording decision-making information are appropriate means of providing traceability. Process deployment records document relevant operational and business processes, including workflows, operating procedures, manuals, staff training and procedures, decision matrices, operational support, and maintenance processes [45].

Decision Quality.

Awareness is educating the public about the existence and the degree of automation, the underlying mechanisms, and the consequences [2, 6, 53]. Access and redress are ways to investigate and correct erroneous decisions, including the ability to contest automated decisions by, e.g., expressing a point-of-view or requesting human intervention in the decision [1, 2, 6, 12, 53, 65, 85]. Decision accountability is knowing who is accountable for the actions when decisions are taken by the automated systems in which the algorithms are embedded [2, 12, 53, 66, 85]. Privacy and confidentiality are the activities to protect and maintain confidential information of an identified or identifiable natural person [7, 12, 38, 56, 65, 74].

4.4 Benefits and Protections

Financial Benefits.

Intellectual property rights consist of the ownership of the design of the models, including the indicators. Innovation levels have to be balanced against the liability and litigation risks for novel concepts [38, 53]. Financial gains include increased revenues from a sale or licensing models that produce revenue through license or service fees [38, 74]; cost reductions from making faster, less expensive, or better decisions [65]; or improved efficiency from reducing or eliminating tasks [65]. Furthermore, proven successful models, concepts, algorithms, or businesses can attract investment funds [38]. Investment funds are needed to finance project resources and activities [30].

Financial Protections.

Intellectual property protection is achieved by hiding the algorithm’s design choices, partly or entirely, and establishing clear ownership of AI artifacts (e.g., data, models) [38, 53, 58]. Data and algorithm transparency and auditing requirements should be considered in deciding what to reveal [58]. Further, model development has environmental impacts and energy costs. The environmental impacts occur as training models may be energy-intensive, using as much computing energy as a trans-American flight as measured by carbon emissions [12, 69]. The energy costs from computing power and electricity consumption (for on-premise or cloud-based services) are relevant for training models [12, 69]; for an incremental increase in accuracy, the cost of training a single model may be extreme (e.g., 0.1 increase in accuracy for 150,000 USD) [69]. Cost efficiency occurs when acquiring and using information is less expensive than the costs involved if the data were absent [74]. Project efficiency evaluates the project management’s success in meeting stakeholder requirements for quality, schedule, and budget [18, 20].

Legal Protections.

Legal safeguards include protection against legal claims or regulatory issues that arise from algorithmic decisions [2, 52]—limiting liability or risk of litigation for users and balancing risks from adaptations and customizations with fear of penalties or liability in situations of malfunction, error, or harm [12, 38]. Regulatory and legal compliance involves meeting the legal and regulatory obligations for collecting, storing, using, processing, profiling, and releasing data and complying with other laws, regulations, or ordinances [7, 30, 45, 51, 53, 57, 59, 65].

4.5 Societal Impacts

Individual Protection.

Civil rights and liberties protection secures natural persons’ fundamental rights and freedoms, including the right to data protection and privacy and to have opinions and decisions made independently of an automated system [12, 60]. To ensure such rights, the product and usage qualities enumerated in their respective success groups must be implemented (e.g., equitable treatment, accessibility, choices, privacy, and confidentiality) [12, 47, 60]. Finally, AI systems may introduce new job structures and patterns, eliminate specific types of jobs, or change the way of working. Thus, programs may be required to address employee and employment-related issues [12, 53].

Sustainability.

Environmental sustainability is supported by limiting environmental impacts and reducing energy consumption in model creation [12, 69].

5 Discussion

This study framed the question of project success from the perspective of moral decision-making with algorithms. It listed success factors for each component of an AI system based on the development, usage, and consequences stages of an AI project lifecycle. The research revealed that the project team’s actions influence who judges the reasonability of a given decision [15]. Thus, the project team has some responsibility for the moral decisions produced by algorithmic systems. Based on the definition from Jones [35], project team members are moral agents because they make decisions that may affect others (whether harmful or beneficial), even if they do not recognize that a moral issue is at stake. Hence, the systems they develop are artificial agents that should abide by the moral laws of society. The analysis produced success groups that differentiate activities, clarifying the requirements for technicians, finance providers, instructors, and end-users in the final decision-making process. This separation provides some clarity to avoid any role confusion among IT designers, as described by Manders-Huits [15].

Figure 3 visualizes the relationship between the AI success categories and the project governance success groups. The findings differ between the information technology (IT) [13, 22,23,24,25,26,27,28,29,30] and project management success models [9, 10, 20, 21] in several important ways. First, the success groups include the ethical practices and investigation success categories, which were not explicitly considered in the investigated models. For the IT models, there are three additional differences. First, there is a direct bi-directional relationship between benefits and protections and product qualities. That is, there are consequences in developing AI systems for stakeholders with or without system usage. Second, it stresses that usages have non-financial performance impacts that may harm or benefit society. Third, the benefits and protection category introduces new factors such as intellectual property rights and protections.

For the project management models, there are four additional differences. First, the factors consider stakeholders beyond the customer and wider interests than those typically considered by customer consultation and customer acceptance. This finding is consistent with the observation from Davis [10] that accountability and stakeholder impact are project success factors. Consequently, external stakeholder engagement and interest occur throughout the project management success group (i.e., inclusion in a diverse working environment) through the investigation and societal impacts categories. Second, in addition to the technical task success category identified by Pinto and Slevin [21], usage qualities are an equally important success category. Third, the success group for legal and financial protections is explicit and specific in content. These qualities differentiate it from the “preparing for the future” success factor foreseen by Shenhar, Dvir, Levy, and Maltz [9] and the generic public success measures described by Turner and Zolin [20]. Fourth, the success categories consider a time dimension similar to Shenhar, Dvir, Levy, and Maltz [9] and Turner and Zolin [20] but with an AI focus. Consequently, a project team could forecast success expectations months or years after completion. The success categories, groups, and factors are discussed in the following sections.

5.1 Project Governance

The intersectionality of success groups and ethical principles in Table 4 highlights project managers’ and sponsors’ challenges in balancing the public’s interest with the organization’s interest. Some of the most crucial success groups have little or no overlap with ethical principles, while others are highly relevant across several specific principles. The project scope document is a factor in determining the product relationship to benefit society as a whole (beneficence). The ethical practices drive how the project managers, team members, and end-users evaluate what is in the best interest of the public and the organization.

The project team may face challenges in dealing with tensions between expectations and reality and with moral hazards. There are likely to be tensions between the expectations of users and managers and what is feasible given the available data, compute power, and expertise of the team [40]. In addition, when some or all project team members, project sponsors, or system users are from differing organizations, people may be prone to behaviors that create a moral hazard [17]. For example, a supplier may demonstrate opportunist behavior such as creating a high transparency gap by hiding information, engaging specialists with low competence and little experience, intentionally completing tasks poorly to create a competence gap for the client, or underpricing the development work to win the operational work. Thus, the responsibility assignment matrix is a vital tool to use when assigning tasks and roles to mitigate the risk of morally hazardous behaviors [17].

Third parties such as investigative reporters in the media, internal or external auditors, public crowds, or regulators also may attempt to expose algorithmic harms [53]. Furthermore, the user organization may be the target of investigations or investigate or audit the algorithms. Thus, product and usage quality may be challenged as part of an investigation.

5.2 Product Quality

Product quality success factors are under the control of the project team but influenced by many stakeholders. Thus, each development aspect needs to consider technical product qualities, usability features, information requirements, and legal and regulatory requirements. In this regard, several conflicting success factors have to be balanced. For example:

- End-users may want a high degree of flexibility for human intervention, including making alternative choices. Similarly, the person impacted by the decision outcome will want to have erroneous (or biased) decisions reviewed and corrected. Conversely, the user’s organization would want to limit legal liabilities, leading to fewer choices. The more open the system, the harder it is to differentiate between a system error and user error and assign accountability.

- The need for the end-users to understand and explain the decisions produced by the algorithms suggests a high degree of transparency for the algorithm, data, and front-end user interface. Conversely, preserving intellectual property rights is a factor for a lesser degree of transparency. Furthermore, external bad actors may try to manipulate the algorithm if too much information is understood about how it works, which would be a security concern [82].

- Unbiased models can produce high error rates (or be inaccurate), and biased models can be accurate. Thus, there is a trade-off between utility and fairness due to bias or inaccuracies.

- There is an additional trade-off between the degree of automation and human autonomy. Too much automation can give the perception that (or lead to a reality where) people are under constant surveillance, suggesting that the system knows too much and is what Watson and Conner [65] call “creepy.” Meanwhile, the system can offer flexibility, accuracy, or benefits not available through human autonomy.

- Developing large-scale language models produces carbon emissions and has a financial cost. However, the assumption (which is challenged) is that large models increase accuracy. Thus, there is a trade-off between accuracy, environmental impacts, and financial costs.

5.3 Usage Qualities

Shin and Park [66] have found empirically that end-users understand, perceive, and process algorithm fairness, accountability, and transparency differently. Furthermore, the interaction between trust and algorithmic features influences user satisfaction. Thus, the end user’s confidence in and understanding of the system are some of the essential success factors that impact decision quality. Product quality determines the procedures implemented by the end-users and their organization. For example, modelers may defer responsibility to the users for addressing model overconfidence or adjusting thresholds. Such cases require onboarding procedures to calibrate user trust in and understanding of the system [40].

The user’s organization and the platform providers must follow regulations and laws relevant to the industry, data processing, and data profiling. Furthermore, in the European Union, the requirements of the artificial intelligence regulation proposed in April 2021 should be considered [86]. Thus, robust operational rules, policies, contracts, quality controls, and privacy and security safeguards are success factors.

5.4 Benefits and Protections

From a business and governance perspective, there are several success factors: delivering the product, securing intellectual property rights and protections, limiting liability, and ensuring legal safeguards and regulatory compliance. Similar to product quality, there are multiple conflicting success factors. For example:

- The trade-off between accuracy, environmental impacts, and financial costs, as already discussed.

- The need for financial gains from algorithmic systems and the need to benefit society (beneficence) may result in conflicting objectives.

- Project efficiency concerning quality, time, budget, and regulatory and legal compliance.

- The need for algorithm, data, and front-end user interface transparency and the protection of intellectual property rights through trade secrets.

- The need for legal safeguards, comparing the need for system flexibility to allow for choices at the point of decision versus restricting human intervention.

5.5 Societal Impacts

First, people impacted by algorithmic decisions want fairness, meaning moral or “just” treatment from algorithmic decision-making. However, fairness or the perception of fairness has several subjective components that fall out of the scope of any development project, including pre-established attitudes and emotional reactions to algorithmic outcomes [1, 67]. Therefore, product and usage qualities should incorporate the enumerated success factors to protect individual rights and avoid potential harms [12, 53].

Next, since the development of algorithms has an environmental impact, sustainable development is a success factor. Ziemba [87] proposes that sustainable development should include four dimensions; they each appear relevant to algorithmic development: ecological, socio-cultural, economic, and political. Ecological sustainability is the conservation and proper use of renewable resources (air, water, land), and an economic approach adopts sustainable practices for innovation, financial benefits, and reputation and brand value. Socio-cultural sustainability contributes to the community and its stability and security. Finally, political sustainability upholds democratic values and effectively advocates for all enumerated rights.

6 Conclusions

The importance of algorithms in society and individuals’ lives is becoming increasingly apparent. Therefore, it is crucial to discuss the success factors for AI projects, which are dramatically more expansive than those of a typical information systems project. This research identified five categories of AI project success factors in 17 groups related to moral decision-making, the relationship among the success factor categories, and the relationship between the success factors and AI ethical principles.

The review summarized the concerns for fair, moral algorithm development and usage in decision-making. It revealed that the project manager and project team need to consider many factors when defining the project scope and executing it, arguing that people who develop and operate AI systems are moral agents. Consequently, these actors should build AI systems and procedures to avoid harm and ensure benefits.

Projects are constrained by time and budget, limiting the availability of people and other resources. Nevertheless, the algorithms that result from AI projects can have a significant, long-term impact. Thus, it is necessary and relevant that a broad view of success be considered in planning and executing these projects and that the societal impacts should be addressed as an explicit critical success factor. In particular, designers and project managers should evaluate how well the public interest and concerns are addressed by the product and usage quality.

6.1 Contributions

The findings from this study provide guidelines on success factors that may only be usable indirectly or over time to judge a project’s success. The study makes four contributions. First, it helps close the gap in the literature on translating AI ethical principles into practice [14]. Second, it provides a descriptive view of the ethical and additional related project deliverables, acts, or situations necessary for AI project success from the perspective of moral decision-making. Third, it considers success across time by investigating the post-project activities (usage and investigations) and impacts. Finally, since AI projects are rarely discussed in the project management literature, it contributes a broader review of AI success factors.

6.2 Practical Implications

Projects, and especially AI projects, are context-sensitive. Because the factors presented here are generic, it is vital to adjust and validate them in specific contexts. For example, developing an algorithm for a healthcare situation involves different considerations than developing one for marketing. However, success factors provide insights into the activities and deliverables that should be considered during planning to ensure fair, ethical decisions. First, the project manager and sponsor should ensure that project scopes consider moral decision-making with algorithms. This approach will dramatically affect the team composition, the deliverables produced as part of the project, and the project budget. Moreover, the benefits to society and the environment could be highlighted and potentially measured.

Next, the project manager and sponsor should consider the success factors described herein to recognize moral issues that require decisions during the development process and to mitigate project risks. Project managers and sponsors may be limited in influencing future usage and operational processes. Nevertheless, they should try to exert their influence on the ethical practices of system users and user organizations. As an agent of the sponsoring organization with a reputation to manage and business objectives to reach, the project manager should consider these success factors to ensure adherence to ethical, privacy, security, and societal norms and to avoid regulatory fines and legal issues.

6.3 Theoretical Implications

The research expands the existing project management literature on project success factors specific to the AI domain. This contribution is consistent with the direction identified by Ika [18] “that one should turn to context-specific and even symbolic and rhetoric project success and CSFs [critical success factors].” In addition, the study qualitatively confirmed the finding from Davis [10] that accountability and stakeholder impacts are project critical success factors.

6.4 Limitations and Future Research

This research was based on the latest available literature at a single point in time. However, AI is a fast-moving topic, judging from the number of recent articles. Thus, other methods such as a Delphi study with field experts could extend and update the study and validate the findings. Since a single researcher conducted the analysis, the results may be biased by the researcher’s perspective.

As an opportunity for additional research, the success factors could be used to investigate project accountability or stakeholder management. The study could also be expanded to identify measurable success criteria for some success factors. AI literature regarding ways to measure bias, inequality, and accuracy should be left to specialists; however, it would be interesting to understand how to evaluate the trade-offs needed to complete a project and still meet all stakeholder requirements while retaining an honest approach. Furthermore, following the model from Ziemba [87], the factors and their relationships should be empirically investigated in future research.

References

Helberger, N., Araujo, T., de Vreese, C.H.: Who is the fairest of them all? Public attitudes and expectations regarding automated decision-making. Comput. Law Secur. Rev. 39, 1–16 (2020). https://dx.doi.org/10.1016/j.clsr.2020.105456

Garfinkel, S., Matthews, J., Shapiro, S.S., Smith, J.M.: Toward algorithmic transparency and accountability. Commun. ACM 60(9), 5 (2017). https://dx.doi.org/10.1145/3125780

Boonjing, V., Pimchangthong, D.: Data mining for positive customer reaction to advertising in social media. In: Ziemba, E. (ed.) AITM/ISM-2017. LNBIP, vol. 311, pp. 83–95. Springer, Cham (2018). https://dx.doi.org/10.1007/978-3-319-77721-4_5

Yadav, G., Kumar, Y., Sahoo, G.: Predication of Parkinson's disease using data mining methods: a comparative analysis of tree, statistical and support vector machine classifiers. In: Proceedings International Conference Computing Communication Systems, pp. 1–8. IEEE (2012). https://doi.org/10.1109/NCCCS.2012.6413034

Abdelaal, M.M.A., Sena, H.A., Farouq, M.W., Salem, A.-B.M.: Using data mining for assessing diagnosis of breast cancer. In: Proceedings International Multiconference Computing Science Information Technology, pp. 11–17. IEEE (2010). https://dx.doi.org/10.1109/IMCSIT.2010.5679647

Hamon, R., Junklewitz, H., Malgieri, G., De Hert, P., Beslay, L., Sanchez, I.: Impossible explanations? Beyond explainable AI in the GDPR from a COVID-19 use case scenario. In: FAccT 2021: Proceedings 2021 ACM Conference Fairness Accountability and Transparency, pp. 549–559. ACM (2021). https://dx.doi.org/10.1145/3442188.3445917

Sherer, J.A.: When is a chair not a chair?: Big data algorithms, disparate impact, and considerations of modular programming. Comput. Internet Lawyer 34(8), 6–10 (2017)

Bonsón, E., Lavorato, D., Lamboglia, R., Mancini, D.: Artificial intelligence activities and ethical approaches in leading listed companies in the European Union. Int. J. Account. Inf. Syst. 43, 100535 (2021). https://doi.org/10.1016/j.accinf.2021.100535

Shenhar, A.J., Dvir, D., Levy, O., Maltz, A.C.: Project success: a multidimensional strategic concept. Long Range Plan. 34(6), 699–725 (2001). https://doi.org/10.1016/S0024-6301(01)00097-8

Davis, K.: An empirical investigation into different stakeholder groups perception of project success. Int. J. Project Manage. 35(4), 604–617 (2017). https://dx.doi.org/10.1016/j.ijproman.2017.02.004

Mitchell, R.K., Agle, B.R., Wood, D.J.: Toward a theory of stakeholder identification and salience: defining the principle of who and what really counts. Acad. Manage. Rev. 22(4), 853–886 (1997). https://dx.doi.org/10.5465/amr.1997.9711022105

Ryan, M., Stahl, B.C.: Artificial intelligence ethics guidelines for developers and users: clarifying their content and normative implications. J. Inf. Commun. Ethics Soc. 19(1), 61–86 (2021). https://dx.doi.org/10.1108/JICES-12-2019-0138

Leyh, C.: Critical success factors for ERP projects in small and medium-sized enterprises - the perspective of selected German SMEs. In: Proceedings 2014 Federated Conference Computing Science Information Systems FedCSIS 2014, pp. 1181–1190. ACSIS (2014). https://dx.doi.org/10.15439/2014F243

Mittelstadt, B.: Principles alone cannot guarantee ethical AI. Nat. Mach. Intell. 1(11), 501–507 (2019). https://doi.org/10.1038/s42256-019-0114-4

Manders-Huits, N.: Moral responsibility and IT for human enhancement. In: SAC 2006: Proceedings 2006 ACM Symposium Application Computing, pp. 267–271. ACM (2006). https://dx.doi.org/10.1145/1141277.1141340

Martin, K.: Ethical implications and accountability of algorithms. J. Bus. Ethics 160(4), 835–850 (2018). https://dx.doi.org/10.1007/s10551-018-3921-3

Wachnik, B.: Moral hazard in IT project completion. An analysis of supplier and client behavior in Polish and German enterprises. In: Ziemba, E. (ed.) Information Technology for Management. LNBIP, vol. 243, pp. 77–90. Springer, Cham (2016). https://dx.doi.org/10.1007/978-3-319-30528-8_5

Ika, L.A.: Project success as a topic in project management journals. Proj. Manag. J. 40(4), 6–19 (2009). https://dx.doi.org/10.1002/pmj.20137

Weninger, C.: Project initiation and sustainability principles: what global project management standards can learn from development projects when analyzing investments. In: PMI Research Education Conference. Project Management Institute, Newtown Square, PA (2012)

Turner, R.J., Zolin, R.: Forecasting success on large projects: developing reliable scales to predict multiple perspectives by multiple stakeholders over multiple time frames. Proj. Manag. J. 43(5), 87–99 (2012). https://dx.doi.org/10.1002/pmj.21289

Pinto, J.K., Slevin, D.P.: Critical success factors across the project life cycle. Proj. Manag. J. 19(3), 67–75 (1988)

Leyh, C., Köppel, K., Neuschl, S., Pentrack, M.: Critical success factors for digitalization projects. In: Proceedings 16th Conference Computing Science Intelligent System FedCSIS 2021, pp. 427–436. ACSIS (2021). https://dx.doi.org/10.15439/2021F122

Włodarski, R., Poniszewska-Marańda, A.: Measuring dimensions of software engineering projects’ success in an academic context. In: Proceedings 2017 Federated Conference Computing Science Information System FedCSIS 2017, pp. 1207–1210. ACSIS (2017). https://dx.doi.org/10.15439/2017F295

Ralph, P., Kelly, P.: The dimensions of software engineering success. In: Proceedings - 2017 IEEE/ACM 39th International Conference Software Engineering, pp. 24–35. ACM (2014). https://doi.org/10.1145/2568225.2568261

Chatzoglou, P., Chatzoudes, D., Fragidis, L., Symeonidis, S.: Examining the critical success factors for ERP implementation: an explanatory study conducted in SMEs. In: Ziemba, E. (ed.) AITM/ISM-2016. LNBIP, vol. 277, pp. 179–201. Springer, Cham (2017). https://dx.doi.org/10.1007/978-3-319-53076-5_10

Leyh, C., Gebhardt, A., Berton, P.: Implementing ERP systems in higher education institutes critical success factors revisited. In: Proceedings 2017 Federated Conference Computing Science Information System FedCSIS 2017, pp. 913–917. ACSIS (2017). https://dx.doi.org/10.15439/2017F364

Miller, G.J.: A conceptual framework for interdisciplinary decision support project success. In: 2019 IEEE Technology Engineering Management Conference TEMSCON 2019, pp. 1–8. IEEE (2019). https://dx.doi.org/10.1109/TEMSCON.2019.8813650

Miller, G.J.: Quantitative comparison of big data analytics and business intelligence project success factors. In: Ziemba, E. (ed.) AITM/ISM-2018. LNBIP, vol. 346, pp. 53–72. Springer, Cham (2019). https://dx.doi.org/10.1007/978-3-030-15154-6_4

Petter, S., McLean, E.R.: A meta-analytic assessment of the DeLone and McLean IS success model: an examination of IS success at the individual level. Inform. Manage. 46(3), 159–166 (2009). https://dx.doi.org/10.1016/j.im.2008.12.006

Umar Bashir, M., Sharma, S., Kar, A.K., Manmohan Prasad, G.: Critical success factors for integrating artificial intelligence and robotics. Digit. Policy Regul. Gov. 22(4), 307–331 (2020). https://doi.org/10.1108/DPRG-03-2020-0032

Iqbal, R., Doctor, F., More, B., Mahmud, S., Yousuf, U.: Big data analytics and computational intelligence for cyber-physical systems: Recent trends and state of the art applications. Future Gener. Comput. Syst. 105, 766–778 (2017). https://doi.org/10.1016/j.future.2017.10.021

Aggarwal, J., Kumar, S.: A survey on artificial intelligence. Int. J. Res. Eng. Sci. Manage. 1(12), 244–245 (2018). https://dx.doi.org/10.31224/osf.io/47a85

Homayounfar, P., Owoc, M.L.: Data mining research trends in computerized patient records. In: Proceedings 2011 Federated Conference Computing Science Information System FedCSIS 2011, pp. 133–139. IEEE (2011)

OECD: Artificial intelligence in society. OECD Publishing, Paris (2019)

Jones, T.M.: Ethical decision making by individuals in organizations: an issue-contingent model. Acad. Manage. Rev. 16(2), 366–395 (1991)

Anscombe, G.E.M.: Modern moral philosophy. In: Hudson, W.D. (ed.) The Is-Ought Question. CP, pp. 175–195. Palgrave Macmillan UK, London (1969). https://doi.org/10.1007/978-1-349-15336-7_19

Shaw, N.P., Stöckel, A., Orr, R.W., Lidbetter, T.F., Cohen, R.: Towards provably moral AI agents in bottom-up learning frameworks. In: AIES 2018: Proceedings 2018 AAAI/ACM Conference AI, Ethics Society, pp. 271–277. ACM (2018). https://dx.doi.org/10.1145/3278721.3278728

Cohen, I.G., Amarasingham, R., Shah, A., Xie, B., Lo, B.: The legal and ethical concerns that arise from using complex predictive analytics in health care. Health Affair 33(7), 1139–1147 (2014). https://dx.doi.org/10.1377/hlthaff.2014.0048

Jobin, A., Ienca, M., Vayena, E.: The global landscape of AI ethics guidelines. Nat. Mach. Intell. 1(9), 389–399 (2019). https://doi.org/10.1038/s42256-019-0088-2

Hopkins, A., Booth, S.: Machine learning practices outside big tech: How resource constraints challenge responsible development. In: AIES 2021: Proceedings 2021 AAAI/ACM Conference AI, Ethics Society, pp. 134–145. ACM (2021). https://dx.doi.org/10.1145/3461702.3462527

Moher, D., Liberati, A., Tetzlaff, J., Altman, D.G.: Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Int. J. Surg. 8(5), 336–341 (2010). https://dx.doi.org/10.1016/j.ijsu.2010.02.007

Wieringa, M.: What to account for when accounting for algorithms: a systematic literature review on algorithmic accountability. In: FAT* 2020 - Proceedings 2020 Conference Fairness Accountability Transparency, pp. 1–18. ACM (2020). https://dx.doi.org/10.1145/3351095.3372833

Aguirre, A., Dempsey, G., Surden, H., Reiner, P.B.: AI loyalty: a new paradigm for aligning stakeholder interests. IEEE Trans. Technol. Soc. 1(3), 128–137 (2020). https://dx.doi.org/10.1109/TTS.2020.3013490

Brady, A.P., Neri, E.: Artificial intelligence in radiology—ethical considerations. Diagnostics 10(4), 231 (2020). https://dx.doi.org/10.3390/diagnostics10040231

Cobbe, J., Lee, M.S.A., Singh, J.: Reviewable automated decision-making: a framework for accountable algorithmic systems. In: FAccT 2021: Proceedings 2021 ACM Conference Fairness Accountability Transparency, pp. 598–609. ACM (2021). https://dx.doi.org/10.1145/3442188.3445921

Jacovi, A., Marasović, A., Miller, T., Goldberg, Y.: Formalizing trust in artificial intelligence: prerequisites, causes and goals of human trust in AI. In: FAccT 2021: Proceedings 2021 ACM Conference Fairness Accountability Transparency, pp. 624–635. ACM (2021). https://dx.doi.org/10.1145/3442188.3445923

Loi, M., Heitz, C., Christen, M.: A comparative assessment and synthesis of twenty ethics codes on AI and big data. In: 7th Swiss Conference Data Science, pp. 41–46. IEEE (2020). https://dx.doi.org/10.1109/SDS49233.2020.00015

McGrath, S.K., Whitty, S.J.: Accountability and responsibility defined. Int. J. Manag. Proj. Bus. 11(3), 687–707 (2018). https://dx.doi.org/10.1108/IJMPB-06-2017-0058