Abstract

How can we engage the public in issues relating to data when these matters are often complex, opaque and difficult to understand? Answers to this question are urgently needed, given mounting concern about the potential negative consequences of data power and a growing desire for data justice. The citizen jury offers one solution. Citizen juries bring diverse citizens together to debate a complex issue of social importance and make a policy recommendation. In this chapter, we reflect on a citizen jury experiment in which participants discussed their criteria for the design of ethical, just and trustworthy data-driven systems. We argue that the synthesis of participants’ opinions resulting from the deliberative approach is a unique strength of the citizen jury as a method for researching public perceptions of data power. However, we also argue that experts, brought into citizen juries to share their expertise and inform deliberation, shape both the process and the conclusions that citizen jurors draw. In the social sciences, it is widely acknowledged that methods shape empirical research findings, yet this is rarely discussed in data studies, in research into public perceptions of datafication or in relation to citizen juries. We call for greater critical thinking about methods in the field of critical data studies and, in so doing, contribute to the advancement of the field.

Introduction: Citizen Juries as Research Methods

Understanding public perceptions of data-driven systems is an essential component of ensuring that data and related practices work “for people and society”, to quote the strapline of the UK’s Ada Lovelace Institute. In other words, engaging with publics about issues relating to data plays an important role in working towards data justice. And yet, data-related matters are complex and not easy to understand. The citizen jury offers a solution to the challenge of asking members of the public for their views about complex data practices. Citizen juries are policy-making aids in which diverse citizens are brought together to debate a complex issue of social importance and make a policy recommendation. They are increasingly used in research contexts (e.g. by Roberts et al., 2020) because, it is argued, they value citizens’ experiences and give citizens the opportunity to contribute their informed opinions about issues that materially impact their lives. In citizen juries, citizens are seen to have valuable experiential knowledge that they contribute to “a dynamic process of critical scrutiny of expert authority” (Moore, 2016).

A further strength of the citizen jury is that it gives access to collective views, which are formed and expressed through the citizen jury process. This is not possible with methods that produce individual accounts, such as interviews; the citizen jury format, by contrast, allows for the expression of community values, some writers claim (e.g. Geleta et al., 2018). This is achieved through the dialogic, deliberative process at the heart of citizen juries, through which, advocates argue, “participants can come to appreciate the concerns of others” (Evans & Kotchetkova, 2009, p. 628). Thus, citizen juries move beyond the expression of multiple opinions: they synthesise opinions through a deliberative process.

Central to this deliberative process are expert witnesses, who are brought in to present evidence and so facilitate an informed discussion. In the literature on citizen juries, expert witness selection is acknowledged as important. Roberts et al. (2020), who ran citizen juries about wind farms, argue that “the basis of witness recruitment for evidence-giving […] should be the level and relevance of expertise, and inclusion of a diversity of relevant perspectives” (Roberts et al., 2020, p. 9). They note that who experts are, their institutional affiliation and how clearly they can communicate and answer questions about complex subjects within a short and accessible presentation all matter. Evans and Kotchetkova (2009) argue that having the wrong experts can skew deliberations. Roberts et al. (2020) concur, noting that experts, the expertise they present and the manner in which they present it can sometimes have “too much influence” on how issues are framed and therefore how they are considered by participants.

Citizen juries are of growing interest to researchers and other stakeholders interested in understanding public perceptions of data-driven systems and what might make them trustworthy. In the UK, citizen juries have been used to research public opinion on matters such as ethical AI or fair data-sharing (e.g. by the Information Commissioner’s Office (ICO, 2019), the Royal Society for the Encouragement of Arts, Manufactures and Commerce (RSA, 2018) and the Ada Lovelace Institute (2020), working with Understanding Patient Data and the Wellcome Trust). Like the literature discussed above, reports on these citizen juries go some way towards acknowledging the role that experts play in shaping discussion and deliberation. For example, reporting on research into explanations of AI decisions, the ICO (2019) notes that emphasising the accuracy of AI decision systems without acknowledging their limitations may have led jurors to assume that AI decisions were accurate and to give inadequate consideration to the potential utility of explanations. The RSA conclude their report on their Forum on Ethical AI by noting that citizen jury discussions tend to be “framed from the top down, not reflecting the most pertinent questions to participants” (2018, p. 48).

These reflections notwithstanding, there is broad enthusiasm about the potential of citizen juries for capturing public perceptions of the ambivalences of data power, as witnessed in their growing use and in claims about what they enable. In this chapter, we argue that this enthusiasm needs to be somewhat tempered. We propose that citizen juries can be usefully conceived through the lens of two sub-fields of sociology: the sociology of knowledge and expertise, and the social life of methods (SLOM). In the former, knowledge and expertise are seen as far from neutral, despite assumptions to the contrary. As Harding bluntly put it, they emerge from science which is shaped by “the institutionalised, normalised politics of male supremacy, class exploitation, racism and imperialism” (Harding, 1992, p. 568). In SLOM, methods are understood to be “shaped by the social world in which they are located” and to “help to shape that social world” (Law et al., 2011, p. 2). Methods constitute the things they claim to represent: “they have effects; they make differences; they enact realities; and they can help to bring into being what they also discover” (Law & Urry, 2004, pp. 392–3). The citizen jury as a research method is no exception.

We build on these schools of thought to argue that citizen juries, like all methods, shape their own outcomes, not least because the expertise which informs deliberation is itself socially shaped. This is not to write off citizen juries, but rather to recognise their limitations alongside their strengths. In this chapter, we tell the story of a citizen jury that we held in the summer of 2019 to explore the usefulness of this approach for eliciting public views on data-driven systems and data management models. We argue that the synthesis of participants’ opinions which results from the deliberative approach is a strength that is unique to the citizen jury as a method for researching public perceptions of data power. At the same time, we propose that the expertise which informed deliberation was shaped by the experts called in to provide it and by broader social structures, and that this expertise in turn shaped the way that deliberation proceeded and the conclusions that citizen jurors drew. We also argue that the citizen jury facilitator played a role in shaping its process.

Our chapter opens up two new perspectives on critical data studies. First, we propose the citizen jury as a mechanism to foster informed public participation in discussions about data power. Citizen juries can also contribute to data justice, because they enable civic engagement in data-related decision-making. Second, we call for more critical attention to methods and to the role that critical data studies researchers themselves play in framing and shaping their research. We conclude that there is a need for more reflection and greater transparency about researcher positionality in critical data studies and the ways in which it shapes how we understand data power, data justice and related matters.

The chapter proceeds with a brief discussion of the literature on public perceptions of datafication, in which we situate our research and which highlights the gap that our research aimed to fill. This is followed by a discussion of our citizen jury process, the conclusions that participants drew and a reflection on the citizen jury as a method.

Public Perceptions of Datafication

Interest in how the public perceives datafication has grown in recent years amongst academic researchers, policy-makers and practitioners keen to understand citizens’ views of the new role of data in society (see Kennedy et al., 2020a for an extensive review of research in this area). Understanding public perceptions is seen as increasingly pressing, both to address datafication’s trust problem (Royal Statistical Society, 2014) and to advance data justice. A major theme in recent research into public perceptions, therefore, is whether people trust data practices, by which we mean the systematic collection, analysis and sharing of data and the outcomes of these processes. Often this is examined by surveying whom people trust with their data (e.g. Dodds, 2018; ICO/Harris Interactive, 2019; Robinson & Dolk, 2015). Research into why people trust or distrust different institutions (such as Ipsos MORI, 2018) finds that feeling a lack of control over personal data sometimes leads to distrust. Where people do not trust organisations, this is often because they are concerned that private-sector organisations will sell or share data without consent, or that public-sector organisations will not keep data secure. Some research concludes that the public need to be informed about data practices in order to trust them and that “appropriate safeguards, accountability and transparency” are a way of building trust in data practices (Hopkins Van Mil, 2015, p. 1).

In contrast to the findings of surveys, qualitative research challenges simplistic understandings of trust and distrust as clearly distinct from each other. Such research draws attention to the multiple, interrelated, context-dependent layers of trust and distrust that people feel in their interactions with data practices. For example, in an article about focus group research that we carried out with BBC audiences, we, the authors of this chapter, highlight the complex range of factors that come together to engender or undermine trust in data practices (Steedman et al., 2020). These relate to whether people trust the institution that is gathering data in general, whether they trust it specifically to manage their data securely, degrees of trust in the broader data ecosystem and even whether they trust themselves to manage their own data carefully and thoughtfully. As a result, trust, scepticism and distrust sometimes co-exist. We argue that distrust is often appropriate, if organisational data practices are not deemed trustworthy, as in the case of scandals about data breaches (see also Pink et al., 2018 for another qualitative exploration of trust and data).

Qualitative research also calls into question the assumed relationship between trust and understanding, which is implied by the belief, found in some survey research, that clear information will result in greater trust (e.g. a report by Doteveryone (2018) proposes that without understanding, “it is likely that distrust of technologies may grow”). Pink et al. (2018) show that trust has affective dimensions which will not necessarily be addressed by clear and legible information. Exploring this relationship between trust, understanding and feelings about data practices is necessary to understand public perceptions and, in turn, to move towards greater data justice, so we did just that in our citizen jury.

We focused on two areas: (1) trust in data-driven practices and (2) trust in data management models, the latter because they form an important part of the infrastructural arrangements within which data practices take place. There is increasing experimentation with alternative approaches to data management as a result of the individual rights of access to, and portability of, personal data that are enshrined in the GDPR, and yet little attention has been paid to what the public thinks about these models and whether they are deemed just and trustworthy. We addressed this gap in a survey of the UK public undertaken in May 2019 (Hartman et al., 2020), which found that approaches that give people control over data about them, that include oversight from regulatory bodies or that enable people to opt out of data gathering were preferred. We carried out our citizen jury to explore the thinking behind these preferences (the why behind the what), whether these characteristics remain important after informed deliberation, and the role of feelings and understandings in the formation of preferences. We discuss the citizen jury in more detail in the next section.

Our Citizen Jury Process

Citizen juries often last for several days and bring in diverse experts to present evidence from different perspectives. In citizen juries, experts are understood to have specialist knowledge of the domain and issues under consideration, although we acknowledge that citizens are experts on their own lives, bringing valuable experiential knowledge to the deliberation (see Moore, 2016). Including experts in citizen juries is costly, requiring significant human and financial resources. We adapted the citizen jury model to fit with our limited resources and experimental aims, whilst also ensuring that we incorporated the key element of informed deliberation. Our citizen jury lasted for one day and, more importantly for our argument here, the experts who spoke to the participants were two of the authors of this chapter, Helen, who presented on the benefits and risks of data-driven systems, and Rhianne, who presented on the advantages and disadvantages of different data management models.

Helen and Rhianne are experts in the topics that they spoke about. Helen has been researching and teaching about the social implications of digital and data-driven systems for over 20 years and has played a key role in establishing the field of critical data studies in which this edited collection is situated. Rhianne is research lead in Human Data Interaction at BBC R&D and has been immersed in debates, developments and practices relating to data-driven technology for over 10 years. Roberts et al. (2020) argue that the task of being a citizen jury expert is difficult and that experts sometimes need to be trained in how to present their material effectively. This was not the case for us: as organisers of the citizen jury and familiar with the format, we knew what was required. Roberts et al. go on to distinguish between what they call “neutral experts”, who “explain the wider context and cover the range of issues that are relevant to the topic, rather as a teacher might” (2020, p. 17), and “advocate experts”, who present detailed information from their own stance on the topic under consideration. As our citizen jury lasted for one day and included only two experts, we needed neutral, not advocate, experts, so Helen and Rhianne drew on their knowledge of relevant debates to present a balance of perspectives. Given our extensive teaching experience, we had the skills, experience and breadth of knowledge required for this task.

However, as we note above, expertise is never neutral. It is shaped both by social structures and by the individual experts providing it: in our case, two white, (now if not always) middle-class professional women. As part of an ongoing debate about the relationship between expertise and political context, Jasanoff (2003) argues that expertise is neither neutral nor innocent. So who Helen and Rhianne are, our institutional affiliations, our communication styles and how clearly we communicate and answer questions (to paraphrase the characteristics of experts that Roberts et al. (2020) claim matter) played a role in shaping the deliberation that took place and the conclusions that jurors reached. But because methods shape research findings, as the SLOM literature proposes (Law et al., 2011), this would have been the case with any experts, regardless of their degree of involvement in the research. In one sense, then, it was not a problem that two of us were the presenting experts, because the deliberative process would have been shaped by any other experts we might have selected.

The role of the facilitator in citizen juries is also important, yet it is rarely acknowledged. Smith (2009) draws attention to the impact that different facilitation styles can have on the deliberative process, yet he notes that the values, principles and philosophy that underpin facilitator practice are seldom considered in the literature. In our case, Robin, the second author of this chapter, was the facilitator. Facilitators, like the methods they deploy, are “shaped by the social world” and “help to shape that social world” (Law et al., 2011, p. 2). Like methods and experts, they have effects. Robin, a white, middle-class, early career researcher interested in diversity in the media industries, was present for the whole of the citizen jury, whereas Helen and Rhianne only attended the expert sessions in which they presented. Thus Robin played a role in “bringing into being” the data that emerged from the citizen jury (Law & Urry, 2004). We say more about our roles as experts and facilitator below.

Twelve people participated in our citizen jury. They came from a range of socio-economic backgrounds and ethnicities, were of diverse ages and a mix of genders, and some of them had disabilities or health conditions. We selected these particular demographic characteristics because previous research has shown them to be important in shaping views on datafication (Kennedy et al., 2020b). Jurors worked in a range of industries, including the service industry, healthcare, financial services and travel, and some were retirees and students. We used a market research company to recruit them, as this recruitment method has been shown to be effective for recruiting diverse participants for citizen juries (Street et al., 2014). Their task was to come up with criteria for trusted interactions with data-driven systems and for building trustworthy models for managing data. The day was divided into three sessions: in the morning, participants discussed criteria for trusted interactions with data-driven systems, and in the afternoon, they discussed criteria for trusted ways of managing data. Each of these two sessions included discussion amongst jurors, a presentation by an expert followed by a question-and-answer slot, and the drafting and re-drafting of criteria. In the third session, at the end of the day, we asked participants to bring their two sets of criteria together to answer the question: what are the most important criteria for the design of ethical, just and trusted data-driven systems? Table 1 provides further detail on how we structured the citizen jury. The jury was recorded and transcribed for analysis, and each participant was given a £70 voucher to thank them for their contributions.

To address our interest in the role of feelings in trust in data practices, we asked participants to use what we called “feelings notes” to track how they felt at key moments and to trace whether their feelings changed over the course of the day. This involved writing their feelings on Post-it Notes at structured moments during the citizen jury. Each participant was assigned a number so that the feelings of individuals could be traced throughout the day. We felt that it was important to attend to emotions because the citizen jury approach has been criticised for sidelining feelings and emphasising expertise and rational discussion, much like a legal jury (e.g. by Escobar, 2011). In our previous research (Kennedy et al., 2020b), we found that thoughts and feelings about data practices are connected and that understanding how people feel is important in comprehending their views about more just data futures. Moreover, Barnes (2008) argues that bringing emotion into deliberation makes the process more inclusive of diverse groups. Our participants, whom we call jurors hereafter, started the day with a wide range of feelings: anxiety about or support for data practices, doubts about their own understanding and contradictory combinations of these emotions. We map their feelings throughout the day in the discussion of proceedings that follows. These feelings notes were collated in a table and analysed in conjunction with the transcripts to add a further layer of contextual analysis regarding participants’ feelings throughout the day.

Session 1: What Are Your Criteria for Trusted Interactions with Data-Driven Systems?

We began the citizen jury with explanations of key terms that would surface during the day, such as “data-driven”, “artificial intelligence” and “automated decision-making”. We gave participants a handout explaining these terms that they could consult as needed throughout the day. We then proceeded to ground our discussion of data-driven technologies in concrete contexts, discussing four types of data-driven systems, giving examples in practice and explaining how they work. These were personalisation, voice assistants, data scoring and facial recognition technology. Jurors made lists of the benefits and risks of each type of data-driven system and then responded to these questions that Robin posed to them: “to what extent do those benefits/risks lead you to trust or not trust the data-driven system?” and “what would make it trustworthy for you?”

In regard to the trustworthiness of data-driven systems, most jurors agreed that it depends on context. Some jurors accepted personalisation in some contexts, whereas others did not trust it in any context. Jurors tended to be suspicious of voice assistant devices like Alexa used in the home. Such devices made Alexander, for example, feel “uneasy” because “[o]bviously with Alexa, to activate it you have to say ‘Alexa’, so it’s obviously always listening for that”. Some jurors were concerned about how voice assistant technology could evolve. For example, Allyssa said, “I think I trust it right now, but in the future I’m not sure, depending on how much they develop”. This was also a concern in relation to data scoring. Some jurors trusted data scoring because they perceived it to be less biased than human decision-making. However, Lizzy noted that data scoring systems seem trustworthy “at the moment” but “in the future it scares me what it could become”. Jurors also noted a relationship between trust in a data-driven system and regulation: voice assistants would be more trustworthy if there were a strong regulatory framework, some jurors noted.

After this discussion, jurors were asked to draw up criteria for trusted interactions with data-driven systems, which they could modify later in the day after they had heard from an expert witness. Every criterion that a jury member suggested was added to a list. The open format aimed to encourage the free sharing of ideas. The interaction between the jurors and the facilitator was critical to this process. Jurors stated criteria and the facilitator clarified what they meant and formulated the phrasing to be used in the notes that were taken. In this process, Robin—as facilitator—tried not to add interpretation or meaning, but nonetheless, she played a role in shaping how criteria were recorded. We present the criteria that jurors came up with at this and subsequent points throughout the day in Table 2.

At the end of this initial discussion, jurors felt more concerned about, and less trusting of, data-driven services than they had felt beforehand. Jurors who began the day expressing positive feelings nuanced these feelings, with comments such as “feel comfortable in this moment in time but a little nervous for the future”. Jurors who began the day with strong negative feelings noted that these feelings had strengthened, and no juror reported feeling more positive after the first discussion. Deliberating about data-driven services led jurors to feel more negatively towards them.

After a break, we held our first expert session. Acknowledging, like Harding and others, that neutral expertise is not possible, we aimed for balance in these sessions. In the first session, Helen outlined five benefits and five risks of data-driven systems that other experts have identified, and later in the day, Rhianne summarised the advantages and disadvantages that have been noted in relation to different data management models. (In the interests of transparency, we have shared the slides and notes that we used; see Footnote 1.)

The benefits of data-driven systems that other experts have identified and that Helen discussed were enhanced human capability, enhanced understanding, enhanced communication, removal of error and human bias and wide-ranging economic benefits. The risks were concerns relating to ownership and control; less privacy and more surveillance; error and inaccuracies; bias, inequality, discrimination; and technological dependency (i.e. the belief that data-driven systems are accurate because they appear objective and scientific and subsequent deferral to them). The presentation was followed by a question and answer session, most of which was devoted to discussing the final risk Helen presented. Helen’s decision to talk about this risk shaped jurors’ discussion and eventually their criteria, as we describe below.

After this session, jurors revisited their criteria for trusted interactions with data-driven systems. They added six new criteria, shown in the middle row of the first column of Table 2, four of which were directly related to the final risk that Helen identified, such as “data should inform decision-making but not make decisions”, and “data systems should always have human oversight”. This demonstrates how the expert presentation and related discussion shaped the deliberative process. In the first draft of criteria, transparency, personal control, explanations, regulation and sanctions were identified as important. After the expert talk, jurors started thinking about the process of data-driven decision-making. They concluded that decisions should not be based on data alone and that human oversight of data-driven systems is needed.

Jurors then reviewed their full list of 17 criteria, tweaking and rephrasing them in the process. Once the list was complete, each juror was asked to rank the criteria from most to least important. After the jury, we compared individual rankings and produced a top five list, shown in the top row of the first column in Table 2. Interestingly, none of the criteria added after the expert talk were among the overall top five at the end of the morning session. This suggests that, although the expert talk had some influence on jurors’ views, their own initial views remained important to them as the jury progressed.
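The chapter does not say how the individual rankings were combined into a top five list. Purely as an illustration of what such an aggregation step can look like, the Python sketch below assumes a Borda count, one common way of combining ranked preferences: in a ranking of n items, the top item earns n − 1 points, the next n − 2, and so on, and items are ordered by total points. The function names (`borda_scores`, `top_k`) and the four criteria labelled "A" to "D" are hypothetical, and the rankings are invented toy data, not the jurors’ responses.

```python
from collections import defaultdict


def borda_scores(rankings):
    """Sum Borda points across complete rankings (assumption: every
    ranker orders the same set of items, most to least important)."""
    items = set(rankings[0])
    scores = defaultdict(int)
    for ranking in rankings:
        if set(ranking) != items or len(ranking) != len(items):
            raise ValueError("each ranking must order the same items exactly once")
        n = len(ranking)
        for position, item in enumerate(ranking):
            # Position 0 (most important) earns n - 1 points; last earns 0.
            scores[item] += n - 1 - position
    return dict(scores)


def top_k(rankings, k):
    """Return the k items with the highest Borda totals.
    Ties are broken alphabetically so the output is deterministic."""
    scores = borda_scores(rankings)
    return sorted(scores, key=lambda item: (-scores[item], item))[:k]


# Hypothetical rankings from three jurors over four criteria labelled A-D.
rankings = [
    ["A", "B", "C", "D"],
    ["B", "A", "D", "C"],
    ["A", "C", "B", "D"],
]

print(borda_scores(rankings))  # {'A': 8, 'B': 6, 'C': 3, 'D': 1}
print(top_k(rankings, 2))      # ['A', 'B']
```

A Borda count rewards items that are consistently ranked fairly high, not only those that are most often ranked first, so it is one plausible reading of "comparing individual rankings"; other rules, such as mean rank or counting first-place votes, could produce a different top five from the same responses.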

At the end of the morning session, jurors produced further feelings notes. Most jurors expressed ambivalent feelings about data-driven systems. One felt that “they are useful if used ethically and securely” and another that they “can do some good but only in the right hands and with the right controls in place”. Jurors felt that having control over data-driven systems was important—either personal control or having “the right controls in place”. These feelings appear to reflect the nuance that was presented in the expert talk: data-driven systems offer some benefits, but they also pose some risks. The feelings expressed might also be described as deliberative—they reflect the thoughtful weighing of options that had taken place.

Session 2: What Are Your Criteria for a Trusted Way of Managing Data?

In the second session of the day, jurors discussed criteria for trusted ways of managing data. They completed feelings notes about data management models before the expert talk on this topic, most of which acknowledged a lack of knowledge. This is something we had expected, and it informed our decision to hold the expert presentation of data management models before asking jurors to discuss them.

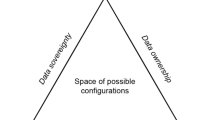

In this session, Rhianne gave an expert talk on five approaches to data management. In debates about data management, approaches which are subject to discussion and experimentation include personal data stores and data trusts, along with more community-based or commons-based approaches such as data collectives or cooperatives (Lehtiniemi & Ruckenstein, 2019; O’Hara, 2019). We explored each of these, as well as both the commonplace, existing approach whereby digital services are responsible for managing data and an option to opt out. These approaches are not mutually exclusive, but we separated them out so jurors could deliberate distinguishing features and potential benefits and drawbacks. For each example, arguments for and against were presented. We have discussed these and other models extensively elsewhere (Hartman et al., 2020). In the interest of brevity, we sum them up here as follows:

1. Existing “terms of service” approach: digital services control people’s data in exchange for providing them with a service.
2. Personal Data Store (PDS): a secure place where individuals can store and control data about them and decide who gets access to it.
3. Delegating responsibility to oversee data about you: this could be to an independent person, organisation or public body.
4. Data collectives: data is seen as a collective asset/public good which is managed collectively.
5. Opting out of online data collection, storage and use.

The question and answer session following this expert talk focused on the practicalities of how the models would work, for example, if people wanted to change from one model to another, the costs of the different models, and the types of data that would be covered under them. In their subsequent discussion of the models, these questions continued to be significant, and jurors identified potential benefits and risks for all models. In regard to the existing terms of service model, some jurors felt that “If you’re thorough, and if it is clear, then […] you should know exactly what you’re signing up to” (Matthew), whereas others noted that people tend not to read terms and conditions in detail and that companies are aware of this and use it to their advantage.

Jurors asked lots of questions about other, less familiar models like the PDS. Some of them liked the idea of having all of their data in one place, and they felt that the control over their own data that this model enabled made it more trustworthy than other models. In contrast, some were concerned by the idea of all of their data being in one place, as this might make it less secure. Jurors were also concerned about how new models could be introduced: if the PDS model was adopted, would Facebook still control some historical personal data, they wondered.

For some jurors, the delegated responsibility model was trustworthy because it meant that data and related decision-making were in the hands of experts. Others were concerned that such an approach might be costly and impractical to introduce. One participant, Matthew, felt that delegated responsibility was less desirable than the PDS model for some types of data, but not all. He liked the idea of personal control over some of his data, but in the case of medical or health data, the delegated responsibility model felt more trustworthy than the PDS. Other jurors agreed with this view.

Jurors liked the democratic potential of the data collective model—Gillian described it as a “democratic way of working” and David felt it was more trustworthy than other models because “collective interest is involved”. Others were concerned about whether it would be effective and wondered why introducing a DIY approach was preferable when the delegation model puts data-related decision-making in the hands of experts. Some jurors considered the idea of merging a collective model and a delegation model, so that both professionals and citizens are involved.

Finally, some jurors loved the idea of opting out of data collection: it sounded easy and enabled people to make their own decisions about whether their data is collected. Others were concerned that opting out might not erase historical data that had already been gathered about people, and they felt that this model was less trustworthy because it was unclear what would happen to past and present data. Some jurors argued that data collection has benefits, for example for health or disease prevention, as in the COVID-19 pandemic, and for this reason they were not in favour of opting out.

After some discussion, jurors ranked the models individually using the Mentimeter platform (an online tool for quizzes and ranking exercises), which enabled them to see how their rankings compared with those of other jurors. When these individual rankings were combined, opting out was the preferred option, followed by the PDS and then the existing terms of service model. Delegating responsibility was the fourth preference, and the data collective approach was the least preferred of the five options. This is only partly consistent with the findings of our survey on the same topic (Hartman et al., 2020), in which opting out and personal control (which the PDS offers) were seen as desirable, but in which the terms of service model was by far the least preferred approach. We suggest that these differences arise from the deliberative character of the citizen jury, and that they confirm our argument that our methods shaped our findings. Jurors' deliberation focused on the practical difficulties of adopting new data management models, and this made them doubt whether such models could realistically be introduced. We suggest that this conclusion accounts for the relatively high ranking of the terms of service model. Furthermore, focusing only on combined rankings erases the nuance that was evident in jurors' deliberation, for example about the possibility of combining models. This nuance was better captured in jurors' feelings notes about this exercise, in which they expressed caution about the models, which were seen to have "potential" but were yet to be "figured out".

After hearing from and questioning the expert witness and deliberating, jurors came up with nine criteria in response to the question "What are your criteria for a trusted way of managing data?", which they then ranked in order from most to least important. As in the first session, every suggested criterion was added to the list using the facilitator's suggested phrasing. Jurors ranked the criteria using Mentimeter, and we identified the top five criteria after the jury was complete. These are shown in the top row of the middle column of Table 2.

Section 3: What Are the Most Important Criteria for the Design of Ethical, Just and Trusted Data-Driven Systems?

In the final session of the day, participants were asked to come up with their top five criteria in response to the overarching question: what are your most important criteria for the design of ethical, just and trusted data-driven systems? Unlike in the previous sessions, in which we identified the top criteria as part of our analysis, participants were tasked with collectively agreeing on the top five criteria and their ranking. Whereas the first two sessions aimed to record the full spectrum of individual views on criteria, here we wanted the jurors to synthesise their views and produce a collective recommendation. We anticipated that reaching a consensus might be difficult, but in fact jurors rapidly agreed on the five most important criteria. Keen to ensure that the views of their co-jurors were reflected in the final list, they sometimes combined multiple criteria and tweaked phrasing. The resulting criteria are shown in the final column of Table 2.

Table 2 shows that the final criteria that jurors produced were very similar to those produced in the penultimate exercise of the day, which in turn built on criteria developed earlier. This suggests that synthesis was an ongoing process that took place throughout the citizen jury. Some criteria, such as transparency and control, were important from the beginning of the day. Others became more important as experts presented evidence, such as the need for human oversight of data-driven decisions, or as jurors deliberated, such as the need for regulation and sanctions. The final list of criteria, addressing the question "What are the most important criteria for the design of ethical, just and trusted data-driven systems?", combines criteria that had previously been listed separately (transparency and accountability; regulation and sanctions), which suggests that jurors began to see relationships between criteria as they deliberated on them. Concluding feelings notes suggested that jurors could imagine a trustworthy data future based on the criteria they had produced. One wrote: "If most important criteria was implemented I would be confident in saying they were just and trusted data driven systems."

Reflections on Findings and on the Citizen Jury as Method for Researching Public Trust in Datafication

With this chapter, we add the citizen jury to critical data studies' methodological toolkit. We argue that, with its aim of fostering informed public participation and civic engagement in data-related decision-making, it can facilitate data justice. In the citizen jury we describe here, the criteria identified for the design of ethical, just and trustworthy data-driven systems echo some of the findings of previous research into public perceptions of data practices, including our own survey (Hartman et al., 2020). Like our jurors, participants in our survey preferred approaches that give people control over data about them, that enable people to opt out of data gathering or that include oversight from regulatory bodies. Other research has found that, like our jurors, people want transparency about, and accountability in relation to, data practices (e.g. Cabinet Office, Citizens Advice, 2016; see Kennedy et al., 2020 for more examples). Jurors' final criterion, that there should always be human fail-safes, has not been identified as a preference in previous research, perhaps because it has not been considered as an option within the research process. Although the RSA (2018) found a desire for oversight of data-driven decision-making, the human dimensions of such oversight have not previously been prioritised.

Following Barnes (2008), and informed by the findings of our own prior research (e.g. Kennedy et al., 2020b), we tracked feelings throughout our jury in order to acknowledge their importance and to include diverse groups. On the whole, jurors' thoughts and feelings changed over the course of the day as they engaged in deliberation. In the final feelings notes, as the quote at the end of the previous section shows, five jurors explicitly referenced the criteria they had drafted. Their valuing of their own criteria indicates an understanding of the ambivalence of data power: at one and the same time potentially beneficial and potentially risky, and in need of careful governance. It also suggests that some jurors felt that one way to ensure that data-driven systems are ethical, just and trustworthy is to account for the views of citizens. The shifts that we saw in jurors' thoughts and feelings also highlight the democratic possibilities that citizen juries afford, suggesting that views can change when people are brought together and learn from one another, as well as from facilitators and experts.

The finding that human fail-safes matter provides evidence for our argument in this chapter that experts are not neutral and that methods shape findings. Helen decided to include "technological dependency" (the belief that data-driven systems are accurate because they appear objective and scientific, which in turn causes people to defer to them) as the final risk discussed in her expert presentation. She did so because other expert commentators have identified it as a problem (e.g. Eubanks, 2017). Nonetheless, this decision shaped the subsequent question and answer session, which was dominated by discussion of this issue. This, in turn, shaped the deliberation that followed, in which jurors started thinking about the process of data-driven decision-making. Prior to this expert presentation, jurors were principally concerned with what data-driven systems do. Afterwards, they became concerned with how data-driven systems do things.

The facilitator also shapes the outcomes of citizen jury research. As facilitator, Robin played a key role in generating the criteria, distilling and translating participants' ideas into words that could be added to the criteria lists, often after a participant had made a long statement or thought out loud. And yet, as Smith (2009) notes, facilitator style, values and philosophy are rarely acknowledged as contributing factors in the citizen jury literature. Participants also shape findings, and their role is likewise little discussed in the literature. The RSA's report on its Forum on Ethical AI is an exception, as it notes that juror selection is important in shaping how a citizen jury proceeds: like all research, the results of a citizen jury depend on who is in the room. Street et al.'s (2014) systematic review of citizen jury studies is another exception, as it recognises that both juror recruitment and moderation are important.

Finally, the jurors themselves influenced findings. For example, one juror worked in financial services and was very familiar with credit scoring, which shaped the discussion about kinds of data scoring, as other jurors listened attentively to what she had to say. While no juror was an expert on data-driven systems or data management models, their experiential and professional knowledge influenced their views and, subsequently, the course of their deliberations. We cannot say whether the demographic profile of participants influenced outcomes, because our sample is small and, as is usual in qualitative research, we do not consider participants to be representative of the demographic groups to which they belong. Other research has addressed this question of difference and inequality (e.g. Kennedy et al., 2020a), and there is more research to be done in this regard.

Implicit in Street et al.’s comment on juror recruitment and moderation is a suggestion that it is possible to do both of these things in ways that minimise juror and facilitator effects. We do not agree. We recognise the value of citizen juries both in centring citizens’ experiential knowledge and in the deliberation and synthesising of collective views that they enable. But we also believe that all methods have effects, that they “bring into being what they also discover”, as Law and Urry (2004, p. 393) put it. We have been researching public views and feelings about datafication for a number of years (see Hartman et al., 2020; Kennedy, 2016; Steedman et al., 2020) and we have used a variety of methods to do so, including interviews, focus groups, surveys, digital methods and now a citizen jury. Some of us have also carried out an extensive review of research into public understanding and perceptions of data practices (Kennedy et al., 2020a). In this review, we note that research methods, questions asked, how findings are interpreted and presented, the disciplinary background and political orientation of researchers all play a role in shaping research findings and the claims that are made. We argue that “[t]he wording of a survey question, the effect of interviewer presence, the framing of an issue and the impact of others in a focus group setting can all affect responses to research questions” (Ibid., p. 44).

The review discussed above and our own empirical research, including the citizen jury that we discuss in this chapter, lead us to conclude that all empirical research findings are shaped by their methods. Yet, as we state in our review, these well-known issues in social research are not widely acknowledged in research into public understanding and perceptions of data practices. This is not to argue that such research should be abandoned; rather, we suggest that the field might benefit from more reflection on, and greater transparency about, positionality and approach. As Law et al. put it, it is important to think critically about methods, "about what it is that methods are doing, and the status of the data that they're making" (Law et al., 2011, p. 7). Neither the citizen jury nor research into public perceptions of datafication should be exempt from this kind of critical thinking. The contribution to critical data studies that we aim to make in this chapter is a call for more critical attention to methods and to the role that researchers play in framing and shaping our research and the ways in which we understand data justice and civic engagement in datafied societies.

The review of research cited above (Kennedy et al., 2020a) also found that context influences perceptions of data practices. At the time of writing, exploring the effects of context on perceptions necessarily means researching whether and how the COVID-19 pandemic informs them. Because this chapter focuses on research carried out before the pandemic, this important issue has not been discussed here. However, it is a central part of our current research projects, the results of which are forthcoming.

References

Ada Lovelace Institute, Understanding Patient Data & the Wellcome Trust. (2020). The foundations of fairness for NHS health data sharing. https://www.adalovelaceinstitute.org/the-foundations-of-fairness-for-nhs-health-data-sharing/

Barnes, M. (2008). Passionate participation: Emotional experiences and expressions in deliberative forums. Critical Social Policy, 28(4), 461–481. https://doi.org/10.1177/0261018308095280

Citizens Advice—Illuminas. (2016). Consumer expectations for personal data management in the digital world. https://www.citizensadvice.org.uk/Global/CitizensAdvice/Consumer%20publications/Personal%20data%20consumer%20expectations%20research.docx.pdf

Dodds, L. (2018, July 5). Who do we trust with personal data? https://theodi.org/article/who-do-we-trust-with-personal-data-odi-commissioned-survey-reveals-most-and-least-trusted-sectors-across-europe/

Doteveryone. (2018). People, power and technology: The 2018 digital attitudes report. Doteveryone. https://doteveryone.org.uk/report/digital-attitudes/

Escobar, O. (2011). Public dialogue and deliberation. A communication perspective for public engagement practitioners. Edinburgh Beltane – UK Beacons for Public Engagement. https://oliversdialogue.wordpress.com/2013/08/01/public-dialogue-and-deliberation-a-communication-perspective-for-public-engagement-practitioners/

Eubanks, V. (2017). Automating inequality: How high-tech tools profile, police, and punish the poor. St. Martin’s Press.

Evans, R., & Kotchetkova, I. (2009). Qualitative research and deliberative methods: Promise or peril? Qualitative Research, 9(5), 625–643. https://doi.org/10.1177/1468794109343630

Geleta, S., Janmaat, J., Loomis, J., & Davies, S. (2018). Valuing environmental public goods: Deliberative citizen juries as a non-rational persuasion method. Journal of Sustainable Development, 11(3), 135. https://doi.org/10.5539/jsd.v11n3p135

Harding, S. (1992). After the neutrality ideal: Science, politics, and "strong objectivity". Social Research, 59(3), 567–587.

Hartman, T., Kennedy, H., Steedman, R., & Jones, R. (2020). Public perceptions of good data management: Findings from a UK-based survey. Big Data & Society, 7(1). https://doi.org/10.1177/2053951720935616

Hopkins Van Mil. (2015). Big data: Public views on the use of private sector data for social research. A findings report for the Economic and Social Research Council. https://esrc.ukri.org/files/public-engagement/public-dialogues/public-dialogues-on-the-re-use-of-private-sector-data-for-social-research-report/

ICO – Harris Interactive. (2019). Information rights strategic plan: Trust and confidence. https://ico.org.uk/media/about-the-ico/documents/2615515/ico-trust-and-confidence-report-20190626.pdf

ICO / Information Commissioner’s Office. (2019). When it comes to explaining AI decisions, context matters. https://ico.org.uk/about-the-ico/news-and-events/news-and-blogs/2019/06/when-it-comes-to-explaining-ai-decisions-context-matters/

Ipsos Mori. (2018). The state of the state 2017–2018: Austerity, government spending, social care and data. https://www.ipsos.com/sites/default/files/ct/publication/documents/2017-10/thestate-of-the-state-2017-2018.pdf

Jasanoff, S. (2003). Breaking the waves in science studies: Comment on H.M. Collins and Robert Evans, ‘The Third Wave of Science Studies’. Social Studies of Science, 33(3), 389–400. https://doi.org/10.1177/03063127030333004

Kennedy, H. (2016). Post, mine, repeat: Social media data mining becomes ordinary. Palgrave Macmillan. https://doi.org/10.1057/978-1-137-35398-6

Kennedy, H., Oman, S., Taylor, M., Bates, J., & Steedman, R. (2020a). Public understanding and perceptions of data practices: A review of existing research. https://livingwithdata.org/project/wp-content/uploads/2020/05/living-with-data-2020-review-of-existing-research.pdf

Kennedy, H., Steedman, R., & Jones, R. (2020b). Approaching public perceptions of datafication through the lens of inequality: A case study in public service media. Information, Communication & Society. https://doi.org/10.1080/1369118X.2020.1736122

Law, J., Ruppert, E., & Savage, M. (2011). The double social life of methods. CRESC Working Paper, 95. http://www.open.ac.uk/researchprojects/iccm/library/164

Law, J., & Urry, J. (2004). Enacting the social. Economy and Society, 33(3), 390–410. https://doi.org/10.1080/0308514042000225716

Lehtiniemi, T., & Ruckenstein, M. (2019). The social imaginaries of data activism. Big Data & Society, 6(1), 1–12. https://doi.org/10.1177/2053951718821146

Moore, A. (2016). Deliberative elitism? Distributed deliberation and the organization of epistemic inequality. Critical Policy Studies, 10(2), 191–208. https://doi.org/10.1080/19460171.2016.1165126

O’Hara, K. (2019). Data trusts: Ethics, architecture and governance for trustworthy data stewardship. University of Southampton. https://doi.org/10.5258/SOTON/WSI-WP001

Pink, S., Lanzeni, D., & Horst, H. (2018). Data anxieties: Finding trust in everyday digital mess. Big Data & Society, 5(1), 1–14. https://doi.org/10.1177/2053951718756685

Roberts, J. J., Lightbody, R., Low, R., & Elstub, S. (2020). Experts and evidence in deliberation: Scrutinising the role of witnesses and evidence in mini-publics, a case study. Policy Sciences, 53(1), 3–32. https://doi.org/10.1007/s11077-019-09367-x

Robinson, G., & Dolk, H. (2015). Public attitudes to data sharing in Northern Ireland. ARK Research Update, No. 108. https://research.hscni.net/sites/default/files/0032-RESEARCH%20UPDATE%20108%20final.pdf

Royal Statistical Society. (2014). Royal Statistical Society research on trust in data and attitudes toward data use / data sharing. https://www.statslife.org.uk/images/pdf/rss-data-trust-data-sharing-attitudes-research-note.pdf

RSA / Royal Society for the Encouragement of Arts, Manufacture and Commerce. (2018). Artificial intelligence: Real public engagement. https://www.thersa.org/discover/publications-and-articles/reports/artificial-intelligence-real-public-engagement

Smith, G. (2009). Democratic innovations: Designing institutions for citizen participation. Cambridge University Press.

Steedman, R., Kennedy, H., & Jones, R. (2020). Complex ecologies of trust in data practices and data-driven systems. Information, Communication & Society, 23(6), 817–832. https://doi.org/10.1080/1369118X.2020.1748090

Street, J., Duszynski, K., Krawczyk, S., & Braunack-Mayer, A. (2014). The use of citizens’ juries in health policy decision-making: A systematic review. Social Science & Medicine, 109, 1–9. https://doi.org/10.1016/j.socscimed.2014.03.005

Acknowledgements

This work was supported by grant reference number R/161466 from the Engineering and Physical Sciences Research Council’s Human Data Interaction Network+.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this chapter

Cite this chapter

Kennedy, H., Steedman, R., Jones, R. (2022). Researching Public Trust in Datafication: Reflections on the Deliberative Citizen Jury as Method. In: Hepp, A., Jarke, J., Kramp, L. (eds) New Perspectives in Critical Data Studies. Transforming Communications – Studies in Cross-Media Research. Palgrave Macmillan, Cham. https://doi.org/10.1007/978-3-030-96180-0_17

DOI: https://doi.org/10.1007/978-3-030-96180-0_17

Publisher Name: Palgrave Macmillan, Cham

Print ISBN: 978-3-030-96179-4

Online ISBN: 978-3-030-96180-0

eBook Packages: Literature, Cultural and Media Studies; Literature, Cultural and Media Studies (R0)