Abstract

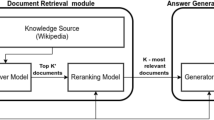

Open-domain Question Answering (OpenQA) is an active research topic in Natural Language Processing (NLP). In the past few years, advances in Deep Learning (DL) and Pre-trained Language Models (PLMs) have driven rapid progress in OpenQA. OpenQA aims to find relevant documents in a large-scale text corpus and then extract or generate the correct answer from them. In this paper, we review the development and current research status of OpenQA based on the “Retriever-Reader” architecture. First, we briefly present the relevant theory and the traditional architecture. We then analyze the functions and categories of the Retriever and the Reader, together with existing systems built on them. The Retriever can be regarded as an Information Retrieval (IR) system, and the Reader can be viewed as a Machine Reading Comprehension (MRC) system. The fourth section briefly introduces recent end-to-end training methods and their main types. Finally, we summarize the paper and discuss future development trends.
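
To make the two-stage idea concrete, the following is a minimal sketch of a Retriever-Reader pipeline. It is not the method of any system surveyed here: the function names (`retrieve`, `read`, `answer`), the TF-IDF-style scoring, and the sentence-overlap reader are all illustrative assumptions standing in for a real IR component and a real MRC model.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(question, corpus, k=2):
    """Toy sparse Retriever (assumption): rank documents by a TF-IDF-style score."""
    n_docs = len(corpus)
    doc_tokens = [tokenize(doc) for doc in corpus]
    # Document frequency: in how many documents each term appears.
    df = Counter(term for tokens in doc_tokens for term in set(tokens))
    q_terms = set(tokenize(question))
    scored = []
    for idx, tokens in enumerate(doc_tokens):
        tf = Counter(tokens)
        score = sum(tf[t] * math.log(1 + n_docs / df[t]) for t in q_terms if t in tf)
        scored.append((score, idx))
    # Highest score first; ties are broken by original document order.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [corpus[idx] for score, idx in scored[:k] if score > 0]


def read(question, passages):
    """Toy extractive Reader (assumption): pick the sentence sharing the most question terms."""
    q_terms = set(tokenize(question))
    best, best_overlap = None, 0
    for passage in passages:
        for sentence in re.split(r"(?<=[.!?])\s+", passage):
            overlap = len(q_terms & set(tokenize(sentence)))
            if overlap > best_overlap:
                best, best_overlap = sentence, overlap
    return best


def answer(question, corpus, k=2):
    """Retriever-Reader pipeline: retrieve the top-k documents, then read them."""
    return read(question, retrieve(question, corpus, k))
```

In a practical system the Retriever would typically be a sparse or dense index over millions of passages and the Reader a neural extractive or generative model; the sketch only shows how the two stages are composed.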

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Zheng, D., Yang, J., Yong, B. (2022). Open Domain Question Answering Based on Retriever-Reader Architecture. In: Hassanien, A.E., Xu, Y., Zhao, Z., Mohammed, S., Fan, Z. (eds) Business Intelligence and Information Technology. BIIT 2021. Lecture Notes on Data Engineering and Communications Technologies, vol 107. Springer, Cham. https://doi.org/10.1007/978-3-030-92632-8_68

DOI: https://doi.org/10.1007/978-3-030-92632-8_68

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-92631-1

Online ISBN: 978-3-030-92632-8

eBook Packages: Intelligent Technologies and Robotics (R0)